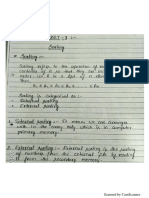

Hashing

John Erol Evangelista

Definition of Terms

Key. A data item that identifies a record.

Hashing. Distributing keys among a one-dimensional array called a hash table.

Hash Function. Maps a key to its hash address.

Hash Address. The address of the key in a hash table.

Definition of Terms

Radix. Base of a number system.

Collision. Two (or more) keys being hashed to the same cell of a hash table.

Probe Sequence. Sequence of slots that are examined when using open addressing. It is a permutation of ⟨0, 1, ..., m-1⟩.

Clustering. A run of consecutively occupied slots in a probe sequence.

Hashing

Hashing is based on the idea of distributing keys among a one-dimensional array H[0, ..., m-1] called a hash table.

We start the search at a position in the table that depends on the search key. If the key is in the table, we expect to find it within a few comparisons of that position.

Hash Function

A hash function assigns an integer from 0 to m-1 to a key. This integer is called the hash address.

It maps any key k from some universe of keys U to a position, or address, i in a hash table T.

Hash Functions


11.2 Hash tables

The downside of direct addressing is obvious: if the universe $U$ is large, storing a table $T$ of size $|U|$ may be impractical, or even impossible, given the memory available on a typical computer. Furthermore, the set $K$ of keys actually stored may be so small relative to $U$ that most of the space allocated for $T$ would be wasted.

When the set $K$ of keys stored in a dictionary is much smaller than the universe $U$ of all possible keys, a hash table requires much less storage than a direct-address table. Specifically, we can reduce the storage requirement to $\Theta(|K|)$ while we maintain the benefit that searching for an element in the hash table still requires only $O(1)$ time. The catch is that this bound is for the average-case time, whereas for direct addressing it holds for the worst-case time.

With direct addressing, an element with key $k$ is stored in slot $k$. With hashing, this element is stored in slot $h(k)$; that is, we use a hash function $h$ to compute the slot from the key $k$. Here, $h$ maps the universe $U$ of keys into the slots of a hash table $T[0 \ldots m-1]$:

$$h : U \to \{0, 1, \ldots, m-1\} ,$$

where the size $m$ of the hash table is typically much less than $|U|$. We say that an element with key $k$ hashes to slot $h(k)$; we also say that $h(k)$ is the hash value of key $k$. Figure 11.2 illustrates the basic idea. The hash function reduces the range of array indices and hence the size of the array. Instead of a size of $|U|$, the array can have size $m$.

Figure 11.2 Using a hash function $h$ to map keys to hash-table slots. Because keys $k_2$ and $k_5$ map to the same slot, they collide.

Hashing

We have three tasks that we must

attend to when we use a hash table.

Convert non-numeric keys to

numbers

Choose a good hash function

Choose a collision resolution policy.

Converting keys to numbers

In applying h(K) we assume K is a

positive integer.

In the case where keys are strings, we must first convert each key to an equivalent integer such that distinct keys are represented by distinct integers.

Various techniques

Key conversion

Replace the letters by their two-digit positions in the alphabet (A=01, B=02, ...).

e.g. KEYS = 11052519

The first digit of every pair can only be 0, 1, or 2, so the digits are not evenly distributed, which makes this a poor encoding.

Key Conversion

Express the key as a radix-26 integer.

e.g. KEYS = 11 x 26^3 + 05 x 26^2 + 25 x 26 + 19 = 197385

Key Conversion

Express the key as a radix-256 integer, with each letter represented by its ASCII code (A = 01000001_2, B = 01000010_2, ...).

e.g. KEYS = 01001011 01000101 01011001 01010011_2 = 75 x 256^3 + 69 x 256^2 + 89 x 256 + 83 = 1262836051

For long keys, use XOR.
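The three key-conversion techniques above can be sketched in a few lines of Python. This is only an illustration, assuming keys made of uppercase letters A to Z; the function names are made up for this example and do not come from the slides.

```python
def letters_to_digits(key: str) -> int:
    """Concatenate the two-digit alphabet positions (A=01, ..., Z=26)."""
    return int("".join(f"{ord(c) - ord('A') + 1:02d}" for c in key))


def radix26(key: str) -> int:
    """Read the key as a radix-26 number whose digits are alphabet positions."""
    value = 0
    for c in key:
        value = value * 26 + (ord(c) - ord("A") + 1)
    return value


def radix256(key: str) -> int:
    """Read the key as a radix-256 number whose digits are ASCII codes."""
    value = 0
    for c in key:
        value = value * 256 + ord(c)
    return value


print(letters_to_digits("KEYS"))  # 11052519
print(radix26("KEYS"))            # 197385
print(radix256("KEYS"))           # 1262836051
```

The printed values match the worked KEYS examples on the slides.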

Choosing a Hash Function

A good hash function h(k) must distribute keys uniformly and randomly over the table addresses 0, 1, 2, ..., m-1.

It must satisfy two important requisites:

It can be calculated fast.

It minimizes collisions.

Choosing a Hash Function

Folding: split the key into groups of digits and add the groups.

e.g. KEYS = 1262836051, so the hash address is 12 + 62 + 83 + 60 + 51 = 268

Midsquare method: multiply the key by itself and take the middle p digits of the product as the hash address.
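A minimal sketch of folding and the midsquare method follows. It works on decimal digits, as the slide example does. The function names, the two-digit group size, and the midsquare key 1234 are assumptions chosen for illustration. The slides do not say how to pick the "middle" when the digit count is uneven, so this sketch rounds toward the left.

```python
def fold(key: int, group: int = 2) -> int:
    """Split the decimal digits into groups from the left and add the groups."""
    digits = str(key)
    return sum(int(digits[i:i + group]) for i in range(0, len(digits), group))


def mid_square(key: int, p: int) -> int:
    """Square the key and return the middle p decimal digits of the product."""
    digits = str(key * key)
    start = max(0, (len(digits) - p) // 2)  # leans left when the split is uneven
    return int(digits[start:start + p])


print(fold(1262836051))     # 12 + 62 + 83 + 60 + 51 = 268
print(mid_square(1234, 3))  # 1234^2 = 1522756, middle three digits are 227
```

In practice the result of either function would still be reduced to a valid table address, for example with a final mod m.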

Division Method

Let m be the table size; then the hash address h(K) is simply the remainder upon dividing K by m. It follows that

0 ≤ h(K) ≤ m-1

h(K) = K mod m

A proper choice of table size m will reduce collisions.
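The division method is a one-liner, and a tiny experiment shows why the choice of m matters. The sample keys and the table sizes 16 and 13 below are assumptions chosen only to make the effect visible.

```python
def division_hash(key: int, m: int) -> int:
    """Division method: the hash address is the remainder of key divided by m."""
    return key % m


keys = [16, 32, 48, 64]  # hypothetical keys that differ only in their high bits

# With m = 16 (a power of 2) only the low 4 bits matter, so every key collides.
print([division_hash(k, 16) for k in keys])  # [0, 0, 0, 0]

# With the prime m = 13 the same keys spread over different addresses.
print([division_hash(k, 13) for k in keys])  # [3, 6, 9, 12]
```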

Choosing m

m should not be even.

m should not be a power of the radix of the computer.

m should not be of the form 2^i ± j if the keys are interpreted in radix 2^r.

m should be a prime number that is not too close to an exact power of 2.

Multiplication Method

The multiplication method for creating hash functions operates in two steps. First we multiply the key k by a constant A in the range 0 < A < 1 and extract the fractional part of kA. Then we multiply this value by m and take the floor of the result.


Figure 11.4 The multiplication method of hashing. The $w$-bit representation of the key $k$ is multiplied by the $w$-bit value $s = A \cdot 2^w$. The $p$ highest-order bits of the lower $w$-bit half of the product form the desired hash value $h(k)$.

In short, the hash function is

$$h(k) = \lfloor m \, (kA \bmod 1) \rfloor ,$$

where "$kA \bmod 1$" means the fractional part of $kA$, that is, $kA - \lfloor kA \rfloor$.

An advantage of the multiplication method is that the value of $m$ is not critical. We typically choose it to be a power of 2 ($m = 2^p$ for some integer $p$), since we can then easily implement the function on most computers as follows. Suppose that the word size of the machine is $w$ bits and that $k$ fits into a single word. We restrict $A$ to be a fraction of the form $s/2^w$, where $s$ is an integer in the range $0 < s < 2^w$. Referring to Figure 11.4, we first multiply $k$ by the $w$-bit integer $s = A \cdot 2^w$. The result is a $2w$-bit value $r_1 2^w + r_0$, where $r_1$ is the high-order word of the product and $r_0$ is the low-order word of the product. The desired $p$-bit hash value consists of the $p$ most significant bits of $r_0$.

Although this method works with any value of the constant $A$, it works better with some values than with others. The optimal choice depends on the characteristics of the data being hashed. Knuth [211] suggests that

$$A \approx (\sqrt{5} - 1)/2 = 0.6180339887\ldots \qquad (11.2)$$

is likely to work reasonably well.

As an example, suppose we have $k = 123456$, $p = 14$, $m = 2^{14} = 16384$, and $w = 32$. Adapting Knuth's suggestion, we choose $A$ to be the fraction of the form $s/2^{32}$ that is closest to $(\sqrt{5} - 1)/2$, so that $A = 2654435769/2^{32}$. Then $k \cdot s = 327706022297664 = (76300 \cdot 2^{32}) + 17612864$, and so $r_1 = 76300$ and $r_0 = 17612864$. The 14 most significant bits of $r_0$ yield the value $h(k) = 67$.
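The worked example above can be checked with integer arithmetic alone. The sketch below assumes the same word size and constant as the text ($w = 32$, $s = 2654435769$); the function and constant names are made up for this illustration.

```python
W = 32          # word size in bits, as in the example
S = 2654435769  # s = A * 2**W, with A close to (sqrt(5) - 1) / 2


def multiplication_hash(k: int, p: int, w: int = W, s: int = S) -> int:
    """Return the p most significant bits of the low-order word of k * s."""
    r0 = (k * s) & ((1 << w) - 1)  # low-order w bits of the 2w-bit product
    return r0 >> (w - p)           # keep the top p bits of r0


k = 123456
print((k * S) >> W)               # r1 = 76300
print((k * S) & ((1 << W) - 1))   # r0 = 17612864
print(multiplication_hash(k, 14)) # h(k) = 67
```

Python integers are unbounded, so the mask stands in for the natural wrap-around of a fixed-width machine word.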

Multiplication method

Advantage: the value of m is not critical. It is often chosen to be a power of 2, which makes the method easy to implement on a computer.

Multiplication method

Assume that all keys are integers, m = 2^r, and our computer has w-bit words.

Define:

h(k) = (A·k mod 2^w) rsh (w - r)

where rsh is the bitwise right-shift operator and A is an odd integer in the range 2^(w-1) < A < 2^w.

October 3, 2005 Copyright 2001-5 by Erik D. Demaine and Charles E. Leiserson L7.14

Multiplication method

Assume that all keys are integers, m = 2^r, and our computer has w-bit words. Define

h(k) = (A·k mod 2^w) rsh (w - r),

where rsh is the "bitwise right-shift" operator and A is an odd integer in the range 2^(w-1) < A < 2^w.

Don't pick A too close to 2^(w-1) or 2^w.

Multiplication modulo 2^w is fast compared to division.

The rsh operator is fast.

Collisions

A collision occurs when two (or more) keys are hashed to the same cell of a hash table.

Worst case: all keys are hashed to the same hash address.

Chaining

Keys are stored in linked lists attached

to cells of a hash table.

Chaining


FIGURE 7.4 Collision of two keys in hashing: h(K_i) = h(K_j)

where C is a constant larger than every ord(c_i).

In general, a hash function needs to satisfy two somewhat conflicting requirements:

- A hash function needs to distribute keys among the cells of the hash table as evenly as possible. (Because of this requirement, the value of m is usually chosen to be prime. This requirement also makes it desirable, for most applications, to have a hash function dependent on all bits of a key, not just some of them.)

- A hash function has to be easy to compute.

Obviously, if we choose a hash table's size m to be smaller than the number of keys n, we will get collisions, a phenomenon of two (or more) keys being hashed into the same cell of the hash table (Figure 7.4). But collisions should be expected even if m is considerably larger than n (see Problem 5). In fact, in the worst case, all the keys could be hashed to the same cell of the hash table. Fortunately, with an appropriately chosen size of the hash table and a good hash function, this situation will happen very rarely. Still, every hashing scheme must have a collision resolution mechanism. This mechanism is different in the two principal versions of hashing: open hashing (also called separate chaining) and closed hashing (also called open addressing).

Open Hashing (Separate Chaining)

In open hashing, keys are stored in linked lists attached to cells of a hash table. Each list contains all the keys hashed to its cell. Consider, as an example, the following list of words:

A, FOOL, AND, HIS, MONEY, ARE, SOON, PARTED.

As a hash function, we will use the simple function for strings mentioned above, that is, we will add the positions of a word's letters in the alphabet and compute the sum's remainder after division by 13.

We start with the empty table. The first key is the word A; its hash value is h(A) = 1 mod 13 = 1. The second key, the word FOOL, is installed in the ninth cell (since (6 + 15 + 15 + 12) mod 13 = 9), and so on. The final result of this process is given in Figure 7.5; note a collision of the keys ARE and SOON (because h(ARE) = (1 + 18 + 5) mod 13 = 11 and h(SOON) = (19 + 15 + 15 + 14) mod 13 = 11).

Chaining

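keys:            A   FOOL   AND   HIS   MONEY   ARE   SOON   PARTED
hash addresses:  1   9      6     10    7       11    11     12

cell 1: A
cell 6: AND
cell 7: MONEY
cell 9: FOOL
cell 10: HIS
cell 11: ARE -> SOON
cell 12: PARTED
(cells 0, 2, 3, 4, 5, and 8 are empty)

FIGURE 7.5 Example of a hash table construction with separate chaining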

How do we search in a dictionary implemented as such a table of linked lists? We do this by simply applying to a search key the same procedure that was used for creating the table. To illustrate, if we want to search for the key KID in the hash table of Figure 7.5, we first compute the value of the same hash function for the key: h(KID) = 11. Since the list attached to cell 11 is not empty, its linked list may contain the search key. But because of possible collisions, we cannot tell whether this is the case until we traverse this linked list. After comparing the string KID first with the string ARE and then with the string SOON, we end up with an unsuccessful search.
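The construction and search just described can be sketched as follows. This is an illustration under stated assumptions: Python lists stand in for the linked lists, keys are uppercase words, and all function names are hypothetical.

```python
def letter_sum_hash(word: str, m: int = 13) -> int:
    """Add the alphabet positions of the letters and reduce modulo m."""
    return sum(ord(c) - ord("A") + 1 for c in word) % m


def build_chained_table(words, m: int = 13):
    """Separate chaining: each cell holds the list of keys hashed to it."""
    table = [[] for _ in range(m)]
    for word in words:
        table[letter_sum_hash(word, m)].append(word)  # insert at the end of the chain
    return table


def chained_search(table, key):
    """Return (found, keys compared along the chain)."""
    compared = []
    for item in table[letter_sum_hash(key, len(table))]:
        compared.append(item)
        if item == key:
            return True, compared
    return False, compared


WORDS = ["A", "FOOL", "AND", "HIS", "MONEY", "ARE", "SOON", "PARTED"]
table = build_chained_table(WORDS)
print(table[11])                     # ['ARE', 'SOON']
print(chained_search(table, "KID"))  # (False, ['ARE', 'SOON'])
```

The unsuccessful search for KID compares against ARE and then SOON, matching the walk-through above.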

In general, the efficiency of searching depends on the lengths of the linked lists, which, in turn, depend on the dictionary and table sizes, as well as the quality of the hash function. If the hash function distributes n keys among m cells of the hash table about evenly, each list will be about n/m keys long. The ratio $\alpha = n/m$, called the load factor of the hash table, plays a crucial role in the efficiency of hashing. In particular, the average number of pointers (chain links) inspected in successful searches, S, and unsuccessful searches, U, turn out to be

$$S \approx 1 + \frac{\alpha}{2} \quad \text{and} \quad U = \alpha, \qquad (7.4)$$

respectively (under the standard assumptions of searching for a randomly selected element and a hash function distributing keys uniformly among the table's cells). These results are quite natural. Indeed, they are almost identical to searching sequentially in a linked list; what we have gained by hashing is a reduction in average list size by a factor of m, the size of the hash table.

Normally, we want the load factor to be not far from 1. Having it too small

would imply a lot of empty lists and hence inefficient use of space; having it too

large would mean longer linked lists and hence longer search times. But if the load

factor is around 1, we have an amazingly efficient scheme that makes it possible to

search for a given key for, on average, the price of one or two comparisons! True,

in addition to comparisons, we need to spend time on computing the value of the

Analysis

Efficiency depends on the lengths of the linked lists.

If a hash function distributes n keys among m cells of the hash table about evenly, each list will be about n/m keys long. This ratio, α = n/m, is the load factor of the hash table.

The average numbers of pointers inspected in a successful search, S, and an unsuccessful search, U, are:

S ≈ 1 + α/2 and U = α


Insertion and Deletion

Insertion: normally done at the end of the list.

Deletion: search for the key to be deleted, then remove it from its list.

Open Addressing

All keys are stored in the hash table

itself without the use of linked lists.

Advantage: Avoid pointers.

We compute the sequence of slots to be

examined.

Hash function is dependent on the key

and the probe number


no elements are stored outside the table, unlike in chaining. Thus, in open addressing, the hash table can "fill up" so that no further insertions can be made; one consequence is that the load factor $\alpha$ can never exceed 1.

Of course, we could store the linked lists for chaining inside the hash table, in the otherwise unused hash-table slots (see Exercise 11.2-4), but the advantage of open addressing is that it avoids pointers altogether. Instead of following pointers, we compute the sequence of slots to be examined. The extra memory freed by not storing pointers provides the hash table with a larger number of slots for the same amount of memory, potentially yielding fewer collisions and faster retrieval.

To perform insertion using open addressing, we successively examine, or probe, the hash table until we find an empty slot in which to put the key. Instead of being fixed in the order $0, 1, \ldots, m-1$ (which requires $\Theta(n)$ search time), the sequence of positions probed depends upon the key being inserted. To determine which slots to probe, we extend the hash function to include the probe number (starting from 0) as a second input. Thus, the hash function becomes

$$h : U \times \{0, 1, \ldots, m-1\} \to \{0, 1, \ldots, m-1\} .$$

With open addressing, we require that for every key $k$, the probe sequence

$$\langle h(k, 0), h(k, 1), \ldots, h(k, m-1) \rangle$$

be a permutation of $\langle 0, 1, \ldots, m-1 \rangle$, so that every hash-table position is eventually considered as a slot for a new key as the table fills up. In the following pseudocode, we assume that the elements in the hash table $T$ are keys with no satellite information; the key $k$ is identical to the element containing key $k$. Each slot contains either a key or NIL (if the slot is empty). The HASH-INSERT procedure takes as input a hash table $T$ and a key $k$. It either returns the slot number where it stores key $k$ or flags an error because the hash table is already full.

HASH-INSERT(T, k)
    i = 0
    repeat
        j = h(k, i)
        if T[j] == NIL
            T[j] = k
            return j
        else i = i + 1
    until i == m
    error "hash table overflow"
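A runnable counterpart of HASH-INSERT might look like the sketch below. The probe function is passed in as a parameter, and the linear-probing function `linear_h`, the table size 5, and the sample keys are assumptions chosen for the demonstration. The companion `hash_search` is an illustrative addition that follows the same probe sequence and stops at the first empty slot.

```python
from typing import Callable, List, Optional

ProbeFn = Callable[[int, int], int]  # h(k, i) -> slot index


def hash_insert(table: List[Optional[int]], k: int, h: ProbeFn) -> int:
    """Probe h(k, 0), h(k, 1), ... and store k in the first empty slot."""
    for i in range(len(table)):
        j = h(k, i)
        if table[j] is None:
            table[j] = k
            return j
    raise OverflowError("hash table overflow")


def hash_search(table: List[Optional[int]], k: int, h: ProbeFn) -> Optional[int]:
    """Follow the same probe sequence; an empty slot means k is absent."""
    for i in range(len(table)):
        j = h(k, i)
        if table[j] is None:
            return None
        if table[j] == k:
            return j
    return None


M = 5  # hypothetical table size


def linear_h(k: int, i: int) -> int:
    """Assumed probe function for the demo: linear probing from k mod M."""
    return (k + i) % M


table: List[Optional[int]] = [None] * M
slots = [hash_insert(table, k, linear_h) for k in (7, 12, 17)]
print(slots)                            # [2, 3, 4]
print(hash_search(table, 12, linear_h)) # 3
print(hash_search(table, 22, linear_h)) # None
```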

The algorithm for searching for key k probes the same sequence of slots that the

insertion algorithm examined when key k was inserted. Therefore, the search can

Open Addressing

The probe sequence h(k,0), h(k,1), ..., h(k,m-1) should be a permutation of {0, 1, ..., m-1}.

Linear Probing

If a collision occurs, linear probing checks the cell next to the cell where the collision occurred. If that cell is empty, the new key is placed there; otherwise the next cell is checked, and so on.

The table is treated as a circular array.


Linear probing

Given an ordinary hash function $h' : U \to \{0, 1, \ldots, m-1\}$, which we refer to as an auxiliary hash function, the method of linear probing uses the hash function

$$h(k, i) = (h'(k) + i) \bmod m$$

for $i = 0, 1, \ldots, m-1$. Given key $k$, we first probe $T[h'(k)]$, i.e., the slot given by the auxiliary hash function. We next probe slot $T[h'(k) + 1]$, and so on up to slot $T[m-1]$. Then we wrap around to slots $T[0], T[1], \ldots$ until we finally probe slot $T[h'(k) - 1]$. Because the initial probe determines the entire probe sequence, there are only $m$ distinct probe sequences.

Linear probing is easy to implement, but it suffers from a problem known as primary clustering. Long runs of occupied slots build up, increasing the average search time. Clusters arise because an empty slot preceded by $i$ full slots gets filled next with probability $(i + 1)/m$. Long runs of occupied slots tend to get longer, and the average search time increases.

Quadratic probing

Quadratic probing uses a hash function of the form

$$h(k, i) = (h'(k) + c_1 i + c_2 i^2) \bmod m , \qquad (11.5)$$

where $h'$ is an auxiliary hash function, $c_1$ and $c_2$ are positive auxiliary constants, and $i = 0, 1, \ldots, m-1$. The initial position probed is $T[h'(k)]$; later positions probed are offset by amounts that depend in a quadratic manner on the probe number $i$. This method works much better than linear probing, but to make full use of the hash table, the values of $c_1$, $c_2$, and $m$ are constrained. Problem 11-3 shows one way to select these parameters. Also, if two keys have the same initial probe position, then their probe sequences are the same, since $h(k_1, 0) = h(k_2, 0)$ implies $h(k_1, i) = h(k_2, i)$. This property leads to a milder form of clustering, called secondary clustering. As in linear probing, the initial probe determines the entire sequence, and so only $m$ distinct probe sequences are used.
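To see why the constants are constrained, the sketch below compares two parameter choices. Both are assumptions picked for illustration rather than values taken from the text: $c_1 = c_2 = 1/2$ with $m$ a power of 2 (the triangular-number offsets $i(i+1)/2$), and $c_1 = c_2 = 1$ with $m = 13$.

```python
def quadratic_probe(start: int, i: int, m: int, c1: int, c2: int) -> int:
    """h(k, i) = (h'(k) + c1*i + c2*i^2) mod m, with start = h'(k)."""
    return (start + c1 * i + c2 * i * i) % m


def triangular_probe(start: int, i: int, m: int) -> int:
    """Special case c1 = c2 = 1/2: the offset is the i-th triangular number."""
    return (start + i * (i + 1) // 2) % m


# With m = 16 the triangular offsets visit every slot exactly once.
seq16 = [triangular_probe(3, i, 16) for i in range(16)]
print(sorted(seq16) == list(range(16)))  # True

# With m = 13 and c1 = c2 = 1 the sequence revisits slots and misses others.
seq13 = [quadratic_probe(0, i, 13, 1, 1) for i in range(13)]
print(len(set(seq13)))                   # 7
```

A sequence that skips slots can fail to find an empty cell even when the table is not full, which is the practical reason for constraining the parameters.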

Double hashing

Double hashing offers one of the best methods available for open addressing because the permutations produced have many of the characteristics of randomly chosen permutations. Double hashing uses a hash function of the form

$$h(k, i) = (h_1(k) + i \, h_2(k)) \bmod m ,$$

where both $h_1$ and $h_2$ are auxiliary hash functions. The initial probe goes to position $T[h_1(k)]$; successive probe positions are offset from previous positions by the

Linear Probing


hash function for a search key, but it is a constant-time operation, independent from n and m. Note that we are getting this remarkable efficiency not only as a result of the method's ingenuity but also at the expense of extra space.

The two other dictionary operations, insertion and deletion, are almost identical to searching. Insertions are normally done at the end of a list (but see Problem 6 for a possible modification of this rule). Deletion is performed by searching for a key to be deleted and then removing it from its list. Hence, the efficiency of these operations is identical to that of searching, and they are all Θ(1) in the average case if the number of keys n is about equal to the hash table's size m.

Closed Hashing (Open Addressing)

In closed hashing, all keys are stored in the hash table itself without the use of linked lists. (Of course, this implies that the table size m must be at least as large as the number of keys n.) Different strategies can be employed for collision resolution. The simplest one, called linear probing, checks the cell following the one where the collision occurs. If that cell is empty, the new key is installed there; if the next cell is already occupied, the availability of that cell's immediate successor is checked, and so on. Note that if the end of the hash table is reached, the search is wrapped to the beginning of the table; that is, it is treated as a circular array. This method is illustrated in Figure 7.6 by applying it to the same list of words we used above to illustrate separate chaining (we also use the same hash function).

To search for a given key K, we start by computing h(K) where h is the hash function used in the table's construction. If the cell h(K) is empty, the search is unsuccessful. If the cell is not empty, we must compare K with the cell's occupant:

keys:            A   FOOL   AND   HIS   MONEY   ARE   SOON   PARTED
hash addresses:  1   9      6     10    7       11    11     12

Final state of the table (each row of the original figure shows the table after one more insertion):

cell 0: PARTED
cell 1: A
cell 6: AND
cell 7: MONEY
cell 9: FOOL
cell 10: HIS
cell 11: ARE
cell 12: SOON
(cells 2, 3, 4, 5, and 8 are empty)

FIGURE 7.6 Example of a hash table construction with linear probing

Linear Probing

Insertion and search are straightforward.

Deletion can be tricky, but lazy deletion makes the problem easier.

Given the load factor α, the number of times the algorithm must access the table for successful and unsuccessful searches is, respectively:

S ≈ ½ (1 + 1/(1 - α)) and U ≈ ½ (1 + 1/(1 - α)^2)


if they are equal, we have found a matching key; if they are not, we compare K with a key in the next cell and continue in this manner until we encounter either a matching key (a successful search) or an empty cell (unsuccessful search). For example, if we search for the word LIT in the table of Figure 7.6, we will get h(LIT) = (12 + 9 + 20) mod 13 = 2 and, since cell 2 is empty, we can stop immediately. However, if we search for KID with h(KID) = (11 + 9 + 4) mod 13 = 11, we will have to compare KID with ARE, SOON, PARTED, and A before we can declare the search unsuccessful.

While the search and insertion operations are straightforward for this version

of hashing, deletion is not. For example, if we simply delete the key ARE from the

last state of the hash table in Figure 7.6, we will be unable to find the key SOON

afterward. Indeed, after computing h(SOON) = 11, the algorithm would find this

location empty and report the unsuccessful search result. A simple solution is to

use "lazy deletion," that is, to mark previously occupied locations by a special

symbol to distinguish them from locations that have not been occupied.
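The linear-probing operations, including lazy deletion, can be sketched as below. The `DELETED` marker object and all function names are assumptions made for this illustration. As a simplification, `insert` reuses the first empty or deleted cell without checking whether the key is already present.

```python
EMPTY = None
DELETED = object()  # special marker for cells that were occupied and then deleted


def h(word: str, m: int = 13) -> int:
    """Sum of the letters' alphabet positions, modulo the table size."""
    return sum(ord(c) - ord("A") + 1 for c in word) % m


def insert(table, key):
    """Linear probing: take the first empty or lazily deleted cell."""
    m = len(table)
    for i in range(m):
        j = (h(key, m) + i) % m  # wrap around like a circular array
        if table[j] is EMPTY or table[j] is DELETED:
            table[j] = key
            return j
    raise OverflowError("hash table is full")


def search(table, key):
    """Stop at a never-occupied cell; step over deleted markers."""
    m = len(table)
    for i in range(m):
        j = (h(key, m) + i) % m
        if table[j] is EMPTY:
            return None
        if table[j] is not DELETED and table[j] == key:
            return j
    return None


def lazy_delete(table, key):
    """Mark the key's cell as deleted instead of emptying it."""
    j = search(table, key)
    if j is not None:
        table[j] = DELETED
    return j


table = [EMPTY] * 13
for word in ["A", "FOOL", "AND", "HIS", "MONEY", "ARE", "SOON", "PARTED"]:
    insert(table, word)

print(table[12], table[0])    # SOON PARTED
lazy_delete(table, "ARE")
print(search(table, "SOON"))  # 12, still reachable past the deleted cell 11
```

Because the deleted cell is marked rather than emptied, the probe for SOON does not stop early at cell 11.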

The mathematical analysis of linear probing is a much more difficult problem than that of separate chaining (see footnote 3). The simplified versions of these results state that the average number of times the algorithm must access a hash table with the load factor $\alpha$ in successful and unsuccessful searches is, respectively,

$$S \approx \frac{1}{2}\left(1 + \frac{1}{1 - \alpha}\right) \quad \text{and} \quad U \approx \frac{1}{2}\left(1 + \frac{1}{(1 - \alpha)^2}\right) \qquad (7.5)$$

(and the accuracy of these approximations increases with larger sizes of the hash table). These numbers are surprisingly small even for densely populated tables, i.e., for large percentage values of $\alpha$:

α      ½(1 + 1/(1-α))    ½(1 + 1/(1-α)^2)
50%    1.5               2.5
75%    2.5               8.5
90%    5.5               50.5
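The table values follow directly from formula (7.5), as this short check shows. The function name is made up for the illustration.

```python
def linear_probing_costs(alpha: float):
    """Approximate table accesses for successful (S) and unsuccessful (U) searches."""
    s = 0.5 * (1 + 1 / (1 - alpha))
    u = 0.5 * (1 + 1 / (1 - alpha) ** 2)
    return s, u


for alpha in (0.50, 0.75, 0.90):
    s, u = linear_probing_costs(alpha)
    print(f"{alpha:.0%}  S = {s:.1f}  U = {u:.1f}")
```

Rounded to one decimal place, the loop reproduces the three rows of the table.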

Still, as the hash table gets closer to being full, the performance of linear probing deteriorates because of a phenomenon called clustering. A cluster in linear probing is a sequence of contiguously occupied cells (with a possible wrapping). For example, the final state of the hash table in Figure 7.6 has two clusters. Clusters are bad news in hashing because they make the dictionary operations less efficient. Also note that as clusters become larger, the probability that a new element

3. This problem was solved in 1962 by a young graduate student in mathematics named Donald E. Knuth. Knuth went on to become one of the most important computer scientists of our time. His multivolume treatise The Art of Computer Programming [KnuI, KnuII, KnuIII] remains the most comprehensive and influential book on algorithmics ever published.

Linear Probing

Linear probing can suffer from primary

clustering. A primary cluster in linear

probing is a sequence of contiguously

occupied cells.

Because initial probe determines the

entire probe sequence, there are only m

distinct probe sequences.

Quadratic Probing

Quadratic probing has the form:

h(k, i) = (h'(k) + c1·i + c2·i^2) mod m

It can suffer from secondary clustering.

There are only m distinct probe sequences.


Double hashing

One of the best methods for open addressing, because the permutations produced have many of the characteristics of randomly chosen permutations.

Double hashing has the form:

h(k, i) = (h1(k) + i·h2(k)) mod m


Double Hashing

The value of h2(K) must be relatively

prime to the hash-table size m for the

entire hash table to be searched.

One way is to make m a power of 2 and

design h2(K) to produce only odd

numbers.
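The relatively-prime requirement can be illustrated by counting how many slots a fixed step reaches. This is a small sketch under assumed values: the table size 16 and the step values 3 and 4 stand in for hypothetical outputs of h2(K), and `slots_reached` is an illustrative helper name.

```python
from math import gcd


def slots_reached(m, start, step):
    """Return the set of slots visited by (start + i * step) mod m for i < m."""
    return {(start + i * step) % m for i in range(m)}


m = 16  # a power of 2
odd_step, even_step = 3, 4  # hypothetical h2(K) values

# An odd step shares no factor with a power of 2, so every slot is visited.
print(gcd(odd_step, m), len(slots_reached(m, 0, odd_step)))
# An even step shares a factor with m, so only part of the table is visited.
print(gcd(even_step, m), len(slots_reached(m, 0, even_step)))
```

Every odd step is relatively prime to a power of 2, which is why restricting h2(K) to odd numbers is enough in that setting.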

Double Hashing

[Figure 11.5: hash table with slots 0 to 12; occupied slots are 1: 79, 4: 69, 5: 98, 7: 72, 9: 14, 11: 50.]

Figure 11.5 Insertion by double hashing. Here we have a hash table of size 13 with $h_1(k) = k \bmod 13$ and $h_2(k) = 1 + (k \bmod 11)$. Since $14 \equiv 1 \pmod{13}$ and $14 \equiv 3 \pmod{11}$, we insert the key 14 into empty slot 9, after examining slots 1 and 5 and finding them to be occupied.

amount $h_2(k)$, modulo $m$. Thus, unlike the case of linear or quadratic probing, the probe sequence here depends in two ways upon the key $k$, since the initial probe position, the offset, or both, may vary. Figure 11.5 gives an example of insertion by double hashing.
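The insertion in Figure 11.5 can be replayed in a few lines. This is a sketch only: the hash functions are the ones given in the caption, but the order in which the earlier keys are inserted is an assumption (the figure does not state it), and the `insert` helper is illustrative.

```python
def h1(k, m=13):
    return k % m


def h2(k):
    return 1 + (k % 11)


def insert(table, key):
    """Insert `key` by double hashing and return the list of slots probed."""
    m = len(table)
    probed = []
    for i in range(m):
        slot = (h1(key, m) + i * h2(key)) % m
        probed.append(slot)
        if table[slot] is None:
            table[slot] = key
            return probed
    raise RuntimeError("hash table overflow")


table = [None] * 13
# Assumed insertion order for the keys already in the table.
for key in (79, 69, 72, 98, 50):
    insert(table, key)

probes_for_14 = insert(table, 14)
print(probes_for_14)
print(table)
```

Under this assumed order, key 14 probes slots 1 and 5, finds both occupied, and lands in slot 9, as the caption describes.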

The value $h_2(k)$ must be relatively prime to the hash-table size $m$ for the entire hash table to be searched. (See Exercise 11.4-4.) A convenient way to ensure this condition is to let $m$ be a power of 2 and to design $h_2$ so that it always produces an odd number. Another way is to let $m$ be prime and to design $h_2$ so that it always returns a positive integer less than $m$. For example, we could choose $m$ prime and let

$$h_1(k) = k \bmod m,$$
$$h_2(k) = 1 + (k \bmod m'),$$

where $m'$ is chosen to be slightly less than $m$ (say, $m - 1$). For example, if $k = 123456$, $m = 701$, and $m' = 700$, we have $h_1(k) = 80$ and $h_2(k) = 257$, so that we first probe position 80, and then we examine every 257th slot (modulo $m$) until we find the key or have examined every slot.
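The arithmetic in this example is easy to reproduce. The sketch below uses the values from the text; the function name `double_hash_probes` is an illustrative choice, not part of the source.

```python
def double_hash_probes(k, m, m_prime):
    """Return h1(k), h2(k) and the full probe sequence for key k."""
    h1 = k % m
    h2 = 1 + (k % m_prime)
    probes = [(h1 + i * h2) % m for i in range(m)]
    return h1, h2, probes


h1, h2, probes = double_hash_probes(123456, 701, 700)
print(h1, h2)       # initial position and step
print(probes[:4])   # first few slots examined
print(len(set(probes)) == 701)  # True when every slot is eventually examined
```

Because 701 is prime and the step is a positive integer below 701, the sequence visits each slot exactly once before repeating.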

When $m$ is prime or a power of 2, double hashing improves over linear or quadratic probing in that $\Theta(m^2)$ probe sequences are used, rather than $\Theta(m)$, since each possible $(h_1(k), h_2(k))$ pair yields a distinct probe sequence. As a result, for

Theorem

Theorem: Given an open-addressed hash table with load factor $\alpha = n/m < 1$, the expected number of probes in an unsuccessful search is at most $1/(1 - \alpha)$.

Proof

At least one probe is necessary.

There is an $n/m$ probability that the first probe hits an occupied slot and that another probe is necessary.

There is an $(n-1)/(m-1)$ probability for the second probe, etc.

October 3, 2005 Copyright 2001-5 by Erik D. Demaine and Charles E. Leiserson L7.24

Proof.

At least one probe is always necessary.

With probability $n/m$, the first probe hits an occupied slot, and a second probe is necessary.

With probability $(n-1)/(m-1)$, the second probe hits an occupied slot, and a third probe is necessary.

With probability $(n-2)/(m-2)$, the third probe hits an occupied slot, etc.

Observe that $\frac{n-i}{m-i} < \frac{n}{m} = \alpha$ for $i = 1, 2, \ldots, n$.

Proof


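Therefore, the expected number of probes is

$$
\begin{aligned}
&1 + \frac{n}{m}\left(1 + \frac{n-1}{m-1}\left(1 + \frac{n-2}{m-2}\left(\cdots\left(1 + \frac{1}{m-n+1}\right)\cdots\right)\right)\right) \\
&\quad\le 1 + \alpha\,(1 + \alpha\,(1 + \alpha\,(\cdots(1 + \alpha)\cdots))) \\
&\quad\le 1 + \alpha + \alpha^2 + \alpha^3 + \cdots \\
&\quad= \sum_{i=0}^{\infty} \alpha^i \\
&\quad= \frac{1}{1 - \alpha}.
\end{aligned}
$$

The textbook has a more rigorous proof and an analysis of successful searches.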
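The bound can be compared with the nested expression from the proof by evaluating both exactly. This is a minimal sketch, assuming the same model as the proof (the $i$-th extra probe hits an occupied slot with probability $(n-i)/(m-i)$); the table size 100 and the function name `expected_probes` are hypothetical.

```python
from fractions import Fraction


def expected_probes(n, m):
    """Evaluate 1 + n/m (1 + (n-1)/(m-1) (1 + ...)) exactly, innermost first."""
    acc = Fraction(1)
    for i in range(n - 1, -1, -1):
        acc = 1 + Fraction(n - i, m - i) * acc
    return acc


m = 100  # hypothetical table size
for n in (10, 50, 90, 99):
    alpha = Fraction(n, m)
    exact = expected_probes(n, m)
    bound = 1 / (1 - alpha)
    print(f"alpha={float(alpha):.2f} exact={float(exact):.3f} bound={float(bound):.3f}")
```

The exact value stays below $1/(1 - \alpha)$ for every load factor tried, and the gap widens as the table fills up.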


Source

Cormen, T., Leiserson, C., Introduction to Algorithms. 3rd Ed.

Quiwa, E., Data Structures.

Leiserson, C., Introduction to Algorithms. Lecture 7 slides.
