BST Handout E07


DWR/AGS

Digital Data Comms

ROYAL SCHOOL OF ARTILLERY

BASIC SCIENCE & TECHNOLOGY SECTION

Digital Data Communication

INTRODUCTION

Computer-based systems are widespread in weapons systems, and many weapons systems now have several computer systems embedded in them. The two systems - weapon and computer - are inextricably linked and, probably, neither would function without the other. The computer functions are often shared between a number of distinct IT sub-systems as this gives resilience. These sub-systems must communicate not only with each other but also with the main controlling system and with the human operator(s). Further data communications might be required between one weapons system and another. This handout describes the various techniques used to communicate data between computers and other computers or computer-based equipment. Many of the techniques to be described are also used in PCs, facsimile machines and teleprinters.

BINARY DATA

Digital systems store data using binary numbers. The prefix bi-, as in bicycle, means two of something and in computers it means two possible states of a signal. These two states are designated Zero and One, and some of the ways commonly used to represent them are described in the following:

Voltage: Zero Volts represents the Zero state and Five Volts represents the One state. This might be used when storing data in a memory chip.

Magnetism: a South Pole represents the Zero state and a North Pole represents the One state. This might be used when storing data on a floppy disk.

Frequency: a low frequency represents the Zero state and a high frequency represents the One state. This might be used to send data or fax signals along a telephone line.

Light: a change from high brightness to low brightness represents the Zero state and a change from low brightness to high brightness represents the One state. This might be used to send data along a fibre-optic cable.

Phase: a phase change of +90° represents a One and a phase change of -90° represents a Zero. This might be used when sending data along a telephone line.

The binary representation is used because it is relatively easy and very fast for an electronic circuit to distinguish between just two states. Any binary digit is either one or zero, with no halfway.

Binary numbers usually have more than one digit, just like any other number system, and each digit is stored and transmitted separately from the others.

Place Value: the dials on the odometer (mileometer) of a car might read 07352 and we know that the 7 digit, because of its position, means seven thousand whereas the 3 digit means three hundred. This illustrates what we mean by place value and, in decimal numbers, the place values are as follows:

  10^5    10^4    10^3    10^2    10^1    10^0
  100k    10k     1k      100     10      Units

The place values are based on powers of ten and the least significant digit (the units) is ten to the power Zero.

Binary Numbers: these follow the same pattern as decimal numbers except that they are based on powers of two, rather than ten. The place values are:

  2^7    2^6    2^5    2^4    2^3    2^2    2^1    2^0
  128    64     32     16     8      4      2      Units
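The binary place-value scheme can be sketched in a few lines of Python. This is only an illustration: the function names are invented for the example, and the bit strings are written most significant digit first, as in the handout.

```python
def place_values(bits: str) -> list[int]:
    """Return the place value of every One digit, most significant first."""
    n = len(bits)
    return [2 ** (n - 1 - i) for i, b in enumerate(bits) if b == "1"]


def binary_to_decimal(bits: str) -> int:
    """Add up the place values of the One digits."""
    return sum(place_values(bits))


print(place_values("01000110"))       # [64, 4, 2]
print(binary_to_decimal("01000110"))  # 70
print(binary_to_decimal("00101111"))  # 47
```

Zero digits contribute nothing, so only the place values under a One are added.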

Thus, the binary number 01000110 represents 64 + 4 + 2 or the decimal number 70. The decimal number 47 can be expressed in binary as 00101111 or 32 + 8 + 4 + 2 + 1, remembering to use a zero digit in the place values that are not used. Digit Grouping: a single-digit number is limited in what it can store and most numbers require several digits, depending on their purpose. The Trip Meter of a car generally has three digits for miles (and a further one for tenths of a mile), so it can display up to 999.9 miles (effectively, this is one-thousand miles). The odometer of a car often has six digits and can display up to onemillion miles. People generally group denary digits in threes but binary numbers are grouped as required by the data processing system in use. The most common grouping is the Byte. Byte: a group of eight binary digits is called a byte and this is the standard unit of computer data storage. A byte can store numbers between 00000000 (Zero) and 11111111 (255) so numbers outside that range will need more than a single byte to store them. A byte has the capacity to store 26 capital letters, 26 lower-case letters, punctuation, numbers and many special characters (e.g. , , , ). A byte can be thought of as the amount of data generated by one key-press. Hexa-decimal: representing decimal digits directly in bytes (binary-coded decimal - BCD) is not very efficient because a byte can have values from zero to 255

7 Jul 05

E07-1

E07 Digital Data Comms.QXD

Digital Data Comms

DWR/AGS

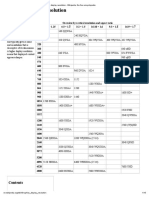

Decimal 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

Hex 0 1 2 3 4 5 6 7 8 9 A B C D E F

Binary 0000 0001 0010 0 0 11 0100 0101 0 11 0 0 111 1000 1001 1010 1 0 11 11 0 0 11 0 1 111 0 1111

01011001 0101 1001 0101 1001 001 011 001

= = =

89 59 59

denary (64+16+8+1) hex (516) +9 BCD (510) +9 Octal (164) +(38) +1

= 131

Figure 2: Types of Binary Number contains eight octals as well as three bytes. IFF codes use Octal encoding.

REPRESENTING TEXT IN BINARY

essages containing text and numbers are represented in digital systems as a series of bytes where each byte corresponds to a letter, digit, punctuation mark, etc. There are several different coding methods but the ASCII (pronounced ass-key) Code is very common and will be used here. Each datum to be coded has a number in the range that one byte can store (i.e. Zero - 255). For example, the letter A has code number 65 (six tens and five units) in decimal, 01000001 (one sixty-four and one unit) in binary or 41 (four sixteens and one unit) in hexadecimal whilst the letter a has the code 01100001b or 97d or 61h where the subscripts b, d and h are used to identify binary, denary and hex numbers, respectively. Often, the number in a byte will represent something other than a letter, for example,t he brightness of a pixel in an image.

Figure 1: Conversion between Decimal and Hex whereas a decimal digit uses only zero to nine. If a byte is divided into two sets of four-digit numbers (called nybbles) then the situation is slightly better, because each half can hold numbers up to fifteen - but there is still some inefficiency if decimal digits are used as these do not exceed nine. Hexa-decimal numbers are based on sixteen and have values from zero to fifteen - requiring four bits, that can be fitted, without waste, exactly two to each byte. The hexa-decimal notation (or hex) requires digits from zero to fifteen, and uses the same zero to nine as decimal digits, but also letters A to F to represent digits ten to fifteen. This is illustrated in Figure One. The place values for hex are based on sixteen, in the sam way that the place values for decimal numbers are based on ten, as follows: 163 | 4096 162 | 256 161 | 16 160 | Units

UNICODE

Thus, the hex number AB represents a ten in the sixteens place and an eleven in the units place. That corresponds to decimal 160 + 11 = 171. The decimal number 124 can be broken down into seven sixteens (112) and twelve ones to give the hex number 7C. (The letters in hex numbers may be typed in either lowercase or upper-case.) Words: groups of binary digits may also be called Words and they might contain any number of bits. In Windows, a Word is sixteen bits (2 Bytes) and a DWord (double-word) is 32 bits (4 Bytes). A word is a group of bits that are treated as a single unit but the particular system in use. Octal: when bits are treated in groups of three then the numbers that can be stored in each digit range from zero to seven (eight values), and place values are based on powers of eight. This grouping is called Octal and implies that the bits are processed in threes. In this system, a Word is often 24 bits as this conveniently

o enable computer font files (e.g. Times New Roman) to contain letters and symbols from many languages (e.g. Cyrilic, Greek, Chinese) then the allocation of one byte to store the code for a symbol is insufficient, as this only permits 255 different symbols. Unicode, which uses two bytes to store the code for each of the symbols. Unicode allows up to 65 535 different codes and each font file can store every character from every human language - and still have spare capacity. In Unicode, each keypress generates two bytes of data - compared to one byte when using ASCII.
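The character codes and number notations described above can be checked with a short Python sketch. The helper names are invented for the example, and UTF-16 is assumed here as the two-byte Unicode encoding; the handout does not name a specific encoding.

```python
def describe(ch: str) -> str:
    """Show one character's code in denary, binary and hex."""
    code = ord(ch)
    return f"{ch!r}: {code}d = {code:08b}b = {code:02X}h"


print(describe("A"))  # 'A': 65d = 01000001b = 41h
print(describe("a"))  # 'a': 97d = 01100001b = 61h

# One byte per character in ASCII, two bytes in two-byte Unicode
# (UTF-16 without a byte-order mark is an assumption of this sketch).
print(len("A".encode("ascii")))      # 1
print(len("A".encode("utf-16-be")))  # 2


def readings(bits: str) -> dict[str, str]:
    """Read one eight-bit pattern as denary, hex, BCD and octal."""
    value = int(bits, 2)
    high, low = value >> 4, value & 0x0F  # the two nybbles
    return {
        "denary": str(value),
        "hex": format(value, "X"),
        "bcd": str(high * 10 + low),  # only meaningful if both nybbles are 0-9
        "octal": format(value, "o"),
    }


print(readings("01011001"))
# {'denary': '89', 'hex': '59', 'bcd': '59', 'octal': '131'}
```

The same eight bits give four different written numbers, which is why the notation in use always has to be stated.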

E07 Digital Data Comms.QXD

E07-2

7 Jul 05


TRANSMITTING DATA IN BINARY - PARALLEL

To send a digital representation of the letter A from one place to another, parallel transmission can be used. This allocates one wire, or one track on a printed circuit board, to each binary digit and sets it to 0 V or 5 V depending on the binary digit. Thus, eight parallel wires are required to carry the data and, to avoid interference between the signals in the parallel wires (cross-talk), eight earth wires are often used, placed one between each data wire. This is illustrated in Figure Three. In addition to the sixteen wires that might be used to carry the data there are additional wires used for signalling, for example:

a signal that the receiving end is ready for the data to be sent.
a signal that the transmitting end is ready to send.
a signal that the data have been sent.
a signal that the data have been received.

The cable used to transfer data between a PC and a printer has 36 conductors, including one to signal when the printer has run out of paper. Ribbon cables, used inside computers and other electronic equipment to convey digital data from one place to another, might have fifty or sixty parallel wires. Some parallel connections allow for a number of devices to be connected. These are often called a bus, from the Latin omnibus, which translates as "for all". A bus requires additional wires to identify which device is to handle the data and whether it is to send data or receive it. The wires that carry the data form the data bus; those that identify the location of the data form the address bus; and those that carry the signalling data are the control bus.

Parallel transfer is a short-range system as the expense of the cables and connectors increases rapidly over long distances and for complex systems. There are additional problems when attempting to use it at high speeds or over long distances, because minor differences between the wires can cause some signals to arrive later than others. However, for short distances, parallel transmission offers medium to high speed as many bits are sent simultaneously (as many as 64 bits, or 8 bytes, at a time). Parallel transfer is used in PCs to transfer data between motherboard and hard disk (ribbon cable) and from CPU to memory (parallel tracks on the motherboard), for example.

Types of parallel systems that you might encounter are: IDE, E-IDE, ISA, E-ISA, VESA, SCSI, PCI, AGP, Centronics, IEEE-488 and Euro-Bus. Unfortunately, most of them can only be directly connected to another bus of the same type and conversion from one to another can be difficult. All parallel systems share the same basic properties as outlined above.

TRANSMITTING DATA IN BINARY - SERIAL

For long transmission distances, or where there is insufficient space for a large cable, it becomes impractical to send each bit along its own dedicated wire as in parallel transmission, and an alternative, called serial transmission, is used. In the serial system, the bits are sent one-by-one along a single pair of wires and re-assembled at the far end. For serial transmission, each Byte is placed in a shift register. This is a special type of memory in which the bits move one position along in response to a shift signal applied to one of its control lines. (Often, the bits circulate so that they are all back in their correct places after eight shifts.) The sequence of events might be as follows, and is illustrated by Figure Four:

The byte to be transmitted is moved into the shift register.
The bit on the end of the shift register is sent along the wire.
The Byte is shifted one place, and the (new) bit on the end is sent along the wire.
This process is repeated until all eight bits have been sent.
Meanwhile, at the far end of the line, the receiver accepts each bit in turn and shifts it into its register.

Thus, after eight operations of the shift register, a Byte has been sent. Obviously, some means is required of synchronising the shift registers at each end and that will be covered later. The waveform of the signal that was sent is illustrated at the bottom of Figure Four. This is a rectangular wave where consecutive signals (e.g. 111) are merged into one long pulse and there is no return to zero between such pulses. This is called a non-return to zero (NRZ) waveform.

Figure 3: Parallel Transmission of One Byte (the letter A, 01000001, with one wire per bit)

Figure 4: Serial Transmission of One Byte (01110100, shifted out of the transmit register and into the receive register)
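The shift-register sequence can be modelled in a few lines of Python. This is a sketch only: the function names are invented, and sending the most significant bit first is an assumption made to match the way the bytes are written here (real serial ports often send the least significant bit first).

```python
def send_serial(byte: int) -> list[int]:
    """Shift a byte out of a transmit register, one bit per shift signal."""
    register = byte & 0xFF
    line = []
    for _ in range(8):
        line.append((register >> 7) & 1)   # the bit on the end goes onto the wire
        register = (register << 1) & 0xFF  # every remaining bit moves one place
    return line


def receive_serial(line: list[int]) -> int:
    """Shift each arriving bit into the receive register."""
    register = 0
    for bit in line:
        register = ((register << 1) | bit) & 0xFF
    return register


bits = send_serial(0b01110100)
print(bits)                                # [0, 1, 1, 1, 0, 1, 0, 0]
print(receive_serial(bits) == 0b01110100)  # True
```

After eight shifts the receive register holds the same byte that left the transmit register, provided both ends shift in step.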

PROPERTIES OF SERIAL TRANSMISSION

The advantage of serial communications is that only one pair of wires or one communications link is required to carry the information. It seems that, under identical conditions, the transmission of a Byte by serial means would take at least eight times longer than if a parallel link were used to transmit the same byte. However, a serial link can be operated at a much higher speed than a parallel link because all the bits travel down the same piece of wire and, therefore, arrive at the other end in the correct sequence. With a parallel link, there might be sixteen different wires (running side-by-side) and the bits can travel at different speeds in adjacent wires. This limits the speed at which parallel links can be operated, because the system has to allow time for all bits from one set to arrive before sending the next set. Consequently, few parallel links operate faster than about 100 MHz whereas serial links of 10 GHz are available.

When information is to be transmitted in both directions then this normally requires a minimum of three conductors:

an earth or common wire.
a wire to carry data out of the data transmitter.
a wire to carry data into the data receiver.

Depending on the methods used to transmit the data, it is possible to use the same wire to send and receive data - and to do this simultaneously - so just two wires can be used. PC modem communications to the Internet, which use ordinary telephone lines, transmit and receive data using just two wires.

Simplex: communications links that are capable of conveying data in only one direction obviously need two channels to conduct a two-way conversation - one for each direction. These are called Simplex channels.

Half-Duplex: where the channel is bi-directional, but can only be used in one direction at a time, then it is called Half-Duplex. Many radio nets use this technique and the person transmitting says "Over" when he has finished so that the other person knows that he can begin to transmit.

Duplex: when a channel can be used in both directions at once (e.g. telephone cable) then it is called Duplex or Full-Duplex. (Mobile phones use two simplex channels - on different radio frequencies - to simulate a duplex system.)

Control: additional wires can be employed for handshaking - to assist in controlling the flow of data and for signalling tasks (this is called hardware flow control). For example, a Modem that answers an incoming call uses a dedicated line to signal to the computer that it has detected an incoming call and various other lines to keep the computer informed about the status of the call. Alternatively, some of the 256 codes that can be accommodated in one byte can be allocated to commands (e.g. stop transmitting) so the data lines can be used to carry control signals (this is called software flow control and the codes are called X-ON and X-OFF).

Examples of serial transmission that you might encounter in computing are:

IEEE-1394 (FireWire): used for high-speed data, such as digital video.
USB (Universal Serial Bus): used to connect external devices (e.g. scanner, modem) to a computer.
SATA (Serial ATA): used to connect a computer motherboard to a hard disk, inside the computer.
RS-232, RS332, RS-422: the serial port on a computer (obsolete, as USB replaces it).
PCI-Express: the latest replacement for PCI and AGP connections on computer motherboards.


12 Nov 04


PROPERTIES OF COMMUNICATIONS LINES

Communications links, be they radio, telephone or dedicated cable, all have their practical limitations. You are no doubt aware of the differences between the sound of a piece of music played on a Hi-Fi and the same music heard on the telephone. This is mainly due to differences in bandwidth between the two systems. The Hi-Fi has a bandwidth ranging from about 20 Hz to 20 kHz whereas the telephone only allows 300 Hz to 3,400 Hz. Since audible sounds range from 20 Hz to 20 kHz, the telephone gives only an impression of the sound - sufficient for voice communications but inadequate for music.

Bandwidth. The bandwidth of many communications lines does not extend to Zero Hz (dc) - this means that dc signals (steady values that change relatively slowly, if at all) cannot be communicated down such links in their original form. The bandwidth of a signal always has an upper limit (for telephone signals it is about 3.4 kHz, for television signals it is about 5.5 MHz) and the expense of setting up and running the communications link increases as the bandwidth increases. If the bandwidth of the computer's signals exceeds the bandwidth available in the cable, radio link or telephone system then the signals will be unlikely to arrive at the far end in a usable form.

Capacitance. Many communications links contain capacitors and, when transmitting ordinary voice signals (with equal positive and negative parts of the signal), the average charge in these capacitors is zero so they do not cause problems. However, you should be able to see from Figure Three that the example of a computer data signal is never negative - always zero or positive - and this causes the capacitors to gain charge during the transmission of the data signal. A long series of binary Ones will tend to charge the capacitors towards 5 V whilst a long string of Zeroes would tend to discharge them. On average they might reach half-way.

Distortion. The shape of the wave that arrives at the far end of a communications link should be the same as the shape of the original wave. Even if there is sufficient bandwidth, the shape of the wave can be altered. This is because capacitance and inductance in the system reduce the amplitude of some frequencies by a greater amount than they reduce the amplitude of others. This distortion can cause problems when data are transmitted.

Noise and Interference. This is an irregular signal that arises from a variety of sources in the communications channel. In telephony, it can be heard as a hissing or rushing sound; a noisy television picture is indistinct with a granular appearance. Impulsive interference, produced by electrical machinery (even light switches), could exceed 2.5 V in our computer data link and cause a signal that had been sent as a Zero to be received as a One and vice-versa. This is illustrated in Figure Five.

Dispersion. When white light passes through a prism then the individual colours appear as a rainbow. This splitting arises because the different frequencies of light (which we see as different colours) travel at different speeds in the glass. This effect is called dispersion. The different frequencies of electrical signals travel along a cable at different speeds and, as in the beam of white light example, they spread out as a result. In a comms link, a single pulse (e.g. a binary One), which contains a mixture of harmonic frequencies, gets separated into its various harmonics as it proceeds along the cable. The problem of dispersion worsens as the length of the cable increases until some of the slower-moving frequencies from one pulse fall behind and get mixed up with some of the faster-moving frequencies from the following pulse - the pulses tend to merge together and the computer cannot recognise the data.

Attenuation. As the signal travels along the cable or radio link, its power reduces as some is absorbed by resistance and other losses in the line. A short cable (a few metres) often has negligible loss (attenuation) but the losses mount as the length is increased - and they get worse at higher frequencies (i.e. at higher data rates). Amplifiers (called line drivers) can be used to boost the signal before it enters the cable but they do not cure dispersion and bandwidth problems. Repeater amplifiers can be used at intervals along a cable but they will also amplify any noise and distortion present so they have limited value. Digital lines can use re-generators - these must be located sufficiently close together along the line so that the data signal is still detectable. The re-generator reads the data and uses switching circuits to re-form the pulses into the correct shape before forwarding them to the next part of the line.

Reflections. Electrical signals travelling along wires will reflect, either wholly or partly, whenever they encounter a change in the electrical properties of the line. An open-ended cable or a socket without a cable plugged into it are typical examples of discontinuities that can cause reflections.
It can become impossible to receive any data at all when reflections arise as, for each pulse that was transmitted, there might be several duplicates produced by reflections. Correct termination of cables is essential - co-axial cables typically have an electrical impedance between 50 Ω and 75 Ω so a 50 Ω or 75 Ω resistor is normally connected at the end of every bus, cable, etc., to prevent reflections. [Some very fast systems, in modern PCs, have more complex means of eliminating reflections at the end of cables, called Active Terminations. Without these, the bus fails to transfer data reliably.]

Figure 5: Noise and Distortion Added to a Signal

The combined effect of the above problems is illustrated in Figure Six, which shows how a byte of serial data might get distorted whilst passing along a comms link. The effect of interference and distortion is usually to flip one or more bits in the signal. In plain text, a letter b could become an r, but the operator would probably notice this and correct a spelling error. If the signal were from a cash machine to your bank to inform it that you had taken out 25 then you would not be impressed if some electrical interference changed the sum involved to 65! In a weapons system, data corrupted during transmission is most undesirable too. Errors can be detected - so that a re-transmission can be made - or detected and corrected - so that no re-transmission is needed. This will be covered later.

Figure 6: Data Changed by Noise & Interference (one bit of the received byte changes to Zero)
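The effect of a single flipped bit can be shown with a small Python sketch. The function name is invented for the example, and it is assumed that the characters travel as ASCII codes, with bit 0 as the least significant bit.

```python
def flip_bit(byte: int, position: int) -> int:
    """Invert one bit of a byte, as a burst of interference might."""
    return byte ^ (1 << position)


# 'b' is 01100010; flipping bit 4 gives 01110010, which is 'r'.
print(chr(flip_bit(ord("b"), 4)))  # r

# If the amount is sent as text, '2' is 00110010;
# flipping bit 2 gives 00110110, which is '6'.
print(chr(flip_bit(ord("2"), 2)))  # 6
```

In both cases the received byte is a perfectly valid character, so the receiver has no way of spotting the error from that byte alone.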

REDUCING INTERFERENCE

Fibre-optic cable is immune to interference because interference is electrical and fibre-optics uses light signals, not electrical signals. At some point, the light signals will probably be converted back into electrical ones but this should take place in a shielded enclosure to minimise interference.

Where copper cables are used then a method called differential signalling can be used. When an ordinary signal passes along a wire as, for example, a positive voltage, this is a single-ended system: the common, or earth, wire remains at zero volts and only the live wire has the signal on it. A differential transmission system uses two wires (a pair) - neither is earthed - and antiphase signals are sent along the pair to the other end, which only responds to differences between the wires. This can also be called a balanced system of transmission. For example, a 5 V signal would be sent as +2.5 V on one wire of the pair and -2.5 V on the other. At the far end, the difference between the wires is taken as the signal. If any interference enters the system then it will affect both wires equally so that, for example, 1 V of interference would add to each wire and make one +3.5 V and the other -1.5 V. Nevertheless, the difference remains at 5 V so the interference is rejected by the receiver. The signal is said to operate in differential mode (opposite signals on each wire) whilst the interference is common mode (same signal on each wire). This is illustrated in Figure Seven, which shows the same signals as Figure Six but with differential signalling. The interference that corrupted the bit in Figure Six now has no effect on the same bit in Figure Seven.

Under normal conditions and using similar signals and cables, an unbalanced system, such as RS-232, can operate up to about 10 m whilst a balanced system, such as RS-422, can operate up to about 1 km. The extra range is due to the resistance to interference provided by the balanced system. The unbalanced system needs three wires whilst the balanced system needs four wires - well worth the expense for the benefits achieved.

Figure 7: Differential Signalling to Reduce the Effects of Interference
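The arithmetic of common-mode rejection can be checked with a short Python sketch using the voltages from the example above. The function and variable names are invented for the illustration, and the interference is assumed to couple equally onto both wires.

```python
def differential_receive(wire_a: float, wire_b: float) -> float:
    """A differential receiver responds only to the difference between the wires."""
    return wire_a - wire_b


signal = 5.0        # volts, sent as +2.5 V and -2.5 V
interference = 1.0  # volts, added equally to both wires (common mode)

wire_a = +signal / 2 + interference  # +3.5 V
wire_b = -signal / 2 + interference  # -1.5 V

print(differential_receive(wire_a, wire_b))  # 5.0

# For contrast, a single-ended receiver sees the interference in full.
print(signal + interference)  # 6.0
```

Whatever voltage is added to both wires cancels in the subtraction, which is why only unequal pick-up on the two wires can disturb the signal.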

BANDWIDTH

The digital signals described above contain harmonics that cover the frequency range from zero (dc) to several times the pulse repetition rate. Most transmission paths (e.g. radio, telephone, satellite) do not permit the passage of dc and they are not very effective at carrying the sharp edges of rectangular waves either. In other words, you cannot send digital data signals down a transmission link using the waveforms illustrated above because their bandwidth does not match that of the link. One solution to the mismatch is to use a carrier signal that is compatible with the transmission link and to change the carrier (modulate it) using the computer signals. One simple example of this is frequency modulation. In its simplest form, a binary digit of Zero causes a low frequency (e.g. 1 200 Hz) to be transmitted whilst a binary digit of One causes a high frequency (e.g. 1 400 Hz) to be transmitted. This is also called frequency-shift keying (FSK) and was used to transmit teleprinter data.
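Simple FSK amounts to a lookup from each bit to a tone, which can be sketched in Python as follows. The constant and function names are invented for the example, and the two frequencies are the illustrative values quoted above.

```python
LOW_HZ = 1200   # tone sent for a binary Zero
HIGH_HZ = 1400  # tone sent for a binary One


def fsk_frequencies(bits: str) -> list[int]:
    """Return the tone transmitted during each bit-period."""
    return [HIGH_HZ if b == "1" else LOW_HZ for b in bits]


print(fsk_frequencies("00111001"))
# [1200, 1200, 1400, 1400, 1400, 1200, 1200, 1400]
```

Both tones lie inside the 300 Hz to 3,400 Hz telephone band, so the data can pass through a link that would block the original rectangular wave.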

A sample waveform is shown in Figure Eight. In the Figure, the byte 00111001 is shown being transmitted using simple FSK. The digital data may also be used to modulate the amplitude or phase of the carrier signal, as shown in Figure Eight.

Figure 8: Types of Modulation. (Upper) Frequency Modulation (FSK); (Middle) Amplitude Modulation (ASK); (Lower) Phase Modulation (PSK)

ASYNCHRONOUS TRANSMISSION

Most data are not sent continuously - rather they are sent as and when necessary, with breaks in between. (A teleprinter is an example of this, where a character is transmitted each time the operator presses a key.) The time between one character and the next is variable and unknown and this is called asynchronous transmission. Much serial traffic passes in this way, including that sent via a PC's Modem to the Internet, etc. It relies on using special indicators to signal the start and stop of the binary data being transmitted.

Recognising the start of the data. The digital signals from each byte contain Ones and Zeroes. If a signal of 00010000 were to be sent then how would the receiver know that the signal had begun? The arrival of the One digit in the middle is a clear indication that data are present but the receiver has already missed the start as it contained several Zeroes - and there is no way of going back. Clearly, some method is required of signalling that data are about to be sent in order to allow the receiver time to prepare to accept them.

Recognising the end of the data. If the cable became disconnected in the middle of sending some data then the receiver might interpret the missing data as all Zeroes (since the voltage would fall to zero). A signal is needed at the end of the data so that the receiver can be sure that the correct number of bits was received. Some slower systems, e.g. mechanical teleprinters, require time to get ready for the next character and this time influences the duration of the stop signal.

The Ready Signal. In between transmissions, the receiver needs to be aware that the transmitter is powered up and liable to send at any moment. A Zero signal on the line would not suffice as a disconnected line would give this. A signal that says "idle but ready" is required. In many systems this is a steady One signal. If ever you have dialled a fax machine then you will have heard the high-pitched whine that it produces - this is the carrier signal - it contains no data yet, but you know that it is there! Some communications links (those with dedicated wires) send a steady 5 V or 12 V, instead of an audible tone, to indicate that the transmitting device is powered up and ready.

The message (data). The data to be sent fit in between the start and stop signals. The data can be of any length but many are quite short for reasons that will be covered later.

Frame: the entire signal is called a frame. The start signal is a change from the ready state (binary One) to Zero. This lasts for one bit. The next eight bits are the data and the final bit, always a binary One, marks the end of the frame of data. The stop bit must be a One because this re-sets the line for the next start bit. The line may remain idle (set to One) for an indefinite time until the next byte is ready for transmission.

Baud Rate: the rate at which the pulses are sent down the line is called the Baud Rate. A signalling rate of 2 400 Baud is possible on a telephone line. This corresponds to the number of changes of signal - per second - that can be sent down the transmission link.

Figure 9: A Frame, with Start & Stop Bits (start bit 0, data bits 00111001, stop bit 1)
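An asynchronous frame of one start bit, eight data bits and one stop bit can be assembled with a short Python sketch. The function names are invented for the example, and the data bits are placed most significant bit first, which is an assumption; many real serial links send the least significant bit first.

```python
def build_frame(byte: int) -> list[int]:
    """Wrap eight data bits in a start bit (Zero) and a stop bit (One)."""
    data = [(byte >> i) & 1 for i in range(7, -1, -1)]  # MSB first (assumption)
    return [0] + data + [1]


def frame_time_ms(baud: int, bits_per_frame: int = 10) -> float:
    """Time taken to send one frame, at one bit per signalling period."""
    return bits_per_frame / baud * 1000


frame = build_frame(0b00111001)
print(frame)                            # [0, 0, 0, 1, 1, 1, 0, 0, 1, 1]
print(round(frame_time_ms(2400), 3))    # 4.167
```

Ten bits are sent for every eight bits of data, so one-fifth of the line time is spent on start and stop bits.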


DECODING STRATEGY

The receiver requires timing information in order to decode the incoming signal. The standard strategy is as follows:

The receiver monitors the incoming carrier, waiting for it to change from One to Zero - the start bit. The receiver knows the parameters of the transmission because the operator has set them (e.g. 1 200 Baud, 8 data bits, one stop-bit) or because the receiver has previously performed test communications with the transmitting device to determine them.
Upon receipt of the start bit, the receiver waits for 1.5 pulse-periods and then tests the signal on the line. At 1 200 Baud, this will be 1.5 / 1200 s or 1.25 ms. This should be right in the middle of the first data bit. This bit, zero or one, is shifted into its receiving register.
The receiver continues to test the signal on the line at intervals of one pulse-period, 1 / 1200 s or 833 µs, until eight bits (one byte) have been received.
The receiver then tests for the stop bit by waiting one further pulse-period before testing the line for a One.
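The sampling instants in the steps above can be worked out with a short Python sketch. The function name is invented for the example; times are measured in microseconds from the leading edge of the start bit.

```python
def sample_times_us(baud: int, data_bits: int = 8) -> list[float]:
    """Instants at which the receiver tests the line: data bits, then the stop bit."""
    bit_period = 1_000_000 / baud
    # The first test is 1.5 periods after the start edge (the middle of the
    # first data bit); each later test follows one period after the last.
    return [round((1.5 + i) * bit_period, 1) for i in range(data_bits + 1)]


times = sample_times_us(1200)
print(times[0])    # 1250.0  (first data bit, 1.25 ms)
print(times[-1])   # 7916.7  (stop bit)
print(len(times))  # 9       (eight data bits plus the stop bit)
```

Every test falls in the middle of its bit-period only if the receiver's clock runs at the same rate as the transmitter's.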

Errors. If the stop bit is not received when expected then a Framing Error is generated. This usually occurs when the receiver is set to the wrong Baud rate and it ends up looking for the stop bit at the wrong time. It can also occur if the cable is shorted or disconnected during transmission.

Timing Accuracy. The receiver examines the incoming signal in the middle of each (expected) bit-period. The message lasts for ten bit-periods and this is the time during which the receiver must remain synchronised to the bit stream. A timing error of two percent will not affect the accuracy of reception because, even after ten bit-periods have elapsed, this would only amount to twenty percent of a bit-period. That is not enough to miss the stop bit, as this is tested at its expected 50% point (midpoint), whilst the error would move the test to the 70% point or 30% point - still well within the safe time. In this example, a timing error of 5% would be the greatest consistent error that could be tolerated. A random error greater than this might not interfere with communications as it might consist of some gains and some losses of time which could partly cancel each other.

SYNCHRONOUS TRANSMISSION

In weapons systems, the data do not usually travel along a telephone line although the same considerations apply for most cables and radio links. Where the computer system has its own dedicated cables, not shared with telephony signals, then a different system can be used. Computers can easily generate data at a rate of Mega-bits per second whilst ordinary communications channels are several hundred times slower.

Bigger Packets: when a dedicated cable is used then the bandwidth can be much greater so the data can be transmitted at a much higher rate. This also enables the use of much bigger frames (packets) of data - containing 128 or 256 bits, for example. Fewer start and stop bits are needed so more data can be transmitted.

Eliminating DC. To eliminate any stray dc signals, the connection between the cable and the computer system can be made using a transformer (which does not pass dc) or opto-couplers (which pass the data using light signals) and capacitor filters (to remove dc). This means that the signalling method chosen must produce signals with an average value of Zero Volts - i.e. with no dc content.

Reducing Spurious Detection. In Manchester Encoding, a binary One is represented by a signal that is positive for half of the bit-period and then changes to

Start 0 0 1 1 1 0 0 1 0 1

Direction of travel

etc....

Pos Neg

This bit is a Zero because it goes from neg to pos half way along. This bit is a One because it goes from pos to neg half way along.

Figure 10: Illustrating One Type of Manchester Encoding for Synchronous Transmission

E07 Digital Data Comms.QXD

E07-8

12 Nov 04

DWR/AGS

Digital Data Comms

negative half-way through. A binary Zero is negative for the first half of the bit-period and then changes to positive half way through. The average value of each bit is zero because each is encoded with a 50% positive and 50% negative signal. If the change does not occur close to the middle of the bit-period then an error is flagged. Long Strings of Identical Bits. If a data message contains lots of Ones or lots of Zeroes then it becomes difficult to identify where one ends and the next one starts. (This is a problem with the non-return-to-zero (NRZ) signal - if the next bit has the same value as the current bit then the signal transmitted remains constant. This is shown in Figure Ten where three successive Ones appear in the middle of the signal.) A string of thirty successive Ones might be decoded as thirty-one or twenty-nine because the receiver loses synchronisation. Embedded Timing: In Manchester Encoding, there is a change from positive to negative (or vice-versa) in every bit - the receiver can easily identify each bit because the timing information is included in the data. Longs strings of ones or zeroes cannot merge together in this system. Start Bit. This signal must identify clearly the start of a message and, therefore, it is usually formed differently from the message that follows it. In FSB2, the start-bit (also called a sync-bit) spans two or three bit-periods (depending on the type of message that follows it) and it only changes once during that time. This marks the beginning of a transmission in a distinct way as the initial pulse is two or three times longer than any other in the system. Since the start bit is used to synchronise the receiver to the transmitter then it is often called the sync signal. Typical Manchester Encoded signals are shown in Figure Ten. Stop Bit: A useful check that the entire packet was received - however, this can also be accomplished by counting the number of changes that were identified in the packet. 
Padding: when the amount of data to be sent is less than a complete packet then the packet can be padded with zeroes (or ones) to ensure that it is the correct length.
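The Manchester rules described above (One = positive then negative, Zero = negative then positive, error if there is no mid-bit change) can be sketched as follows. This is a minimal illustration, not the FSB2 format: the function names are hypothetical, each bit is modelled as just two half-bit levels of +1 and -1, and the start (sync) signal is left out.

```python
def manchester_encode(bits):
    """Each bit becomes two half-bit levels: One = (+1, -1), Zero = (-1, +1)."""
    levels = []
    for bit in bits:
        levels.extend((+1, -1) if bit else (-1, +1))
    return levels


def manchester_decode(levels):
    """Recover the bits; flag an error if a bit-period has no mid-bit change."""
    if len(levels) % 2:
        raise ValueError("incomplete bit-period")
    bits = []
    for first, second in zip(levels[0::2], levels[1::2]):
        if (first, second) == (+1, -1):
            bits.append(1)
        elif (first, second) == (-1, +1):
            bits.append(0)
        else:
            raise ValueError("no change in the middle of the bit-period")
    return bits


data = [0, 0, 1, 1, 1, 0, 0, 1, 0, 1]
signal = manchester_encode(data)
print(sum(signal))                       # 0: equal positive and negative halves, no dc content
print(manchester_decode(signal) == data)
```

Because every bit contains a transition, a run such as thirty successive Ones still decodes to exactly thirty bits in this model.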

Figure 11: A Complete Data Frame, with Start Bit, Stop Bit and Parity Bit

Figure 11A: Error correction principle (block diagram: Data Source, then Parity or Encoding, then Comms Channel or storage device, where Noise / Interference + Errors enter, then Parity check or Decoder (detect / correct), then Data Sink)

THE IDEAL TRANSMISSION

The main ideals of message transmission are to send the information as fast as possible down a channel that has a given bandwidth, using the least amount of power, with no errors in the received message. In any comms system there will be noise and interference which degrade the signal and will cause errors. There are two main ways to reduce the frequency of bit errors. One method is to increase the signal to noise ratio, which may cost in the form of higher power delivery, or the receiver electronics could be made more sophisticated and again this will cost money. The second method is to add additional parity bits in order to perform an error check on the data bits. This second method obviously costs too because, if the rate of transmission is not altered, the data will take longer to send. If the data rate is to be maintained at the speed before adding the parity bits, then the bandwidth must be increased accordingly.

ERROR DETECTION - PARITY

You have already seen, in Figure Four, how a noise or interference signal can change a bit and, therefore, corrupt the signal. When noise and interference levels are fairly low then this is a rare event and the vast majority of bits arrive at the destination unchanged. However, errors can occur during transmission and it is often necessary to be able to detect them. When an error is detected then the receiver can signal for a re-transmission. This is sometimes called Automatic Repeat Request (ARQ) and it requires a duplex channel. When many errors are detected then the receiver might signal that a lower Baud rate should be used - this narrows the bandwidth and reduces the amount of noise and interference. Errors can be detected using a simple system called parity where the transmitter counts the number of Ones in a frame of data and ensures that this number is always even. This works as follows:

The frame is extended by one bit - the parity bit. If a frame with an odd number of Ones is about to be transmitted then a One is placed into the parity bit. There will now be an even number of Ones. If the frame already has an even number of Ones then the parity bit is set to Zero. The start and stop bits do not count as they are not part of the data.

Thus, the byte 00111001 would be sent as:

Start 0    Data Bits 00111001    Parity 0    Stop 1

The nine bits, data plus parity, contain four Ones - an even number - and this is illustrated in Figure Eleven. If a bit is changed during transmission then the receiver will decode nine bits that have either three or five Ones. These are odd numbers so a parity error will be recognised. The transmission must be repeated until a correct frame is received because simple parity does not identify which bit is wrong. The byte 00100110, which has an odd number of Ones, needs a parity bit of One and would be sent as:

Start 0    Data Bits 00100110    Parity 1    Stop 1

We now require a frame of duration eleven bits in order to transmit eight bits of data - an efficiency of 8 / 11 or 73%. Some systems use odd parity, where the number of Ones is made up to an odd number. Parity fails when two bits have been changed - but that is very rare and it would represent a situation where practically every frame would have been corrupted. In that case, communication would be practically impossible at the selected Baud rate as re-transmitted frames would be clogging up the system.

Checksum: parity works well when there are few errors and short frames. For longer frames, with many more bits, there is a significant chance that two or more bits might be altered during transmission - and parity might fail to detect this. Any long stream of data (e.g. a computer file saved on a disk or a long packet sent using synchronous transmission) has a checksum figure that is calculated by the originator of the data. This might be a 32-bit number calculated by multiplying the bytes of the message by a series of numbers and adding them all together. The resulting checksum is added to the message. When the data are received then the receiver re-calculates the checksum and compares it with the original. Any changes to the data will alter the checksum and it is most unlikely that several changes could cancel out and restore the original checksum. A widely used and more robust relative of this method is the cyclic redundancy check (CRC). Checksums are also used by some computer programs to determine whether a file has been tampered with, for example by a virus, as it is very difficult to change a file whilst keeping the same checksum - especially if the location of the checksum in the file is not known or when the checksum is stored elsewhere.

CHECKSUM COMPUTATION

The following example uses decimal numbers for simplicity; normally it would be done with hexadecimal numbers and many more digits. The message to be sent is a stream of eight digits and we want to ensure that no changes occur during transmission. The digits are: 03249856. Compute a checksum by multiplying the first digit by 9, the next by 8, the next by 7, etc. and add together all the results to get 166. Next, divide this by 25 and find the remainder (16). Add one to this to get 17 and find the 17th letter of the alphabet, Q - this is the checksum and it is placed at the end of the digits to be sent. Thus, the message is sent as: 03249856Q. Upon receipt of the message, the receiver repeats the calculation and verifies that it comes to Q - the checksum that is included in the message. Any changes to the data will produce the wrong checksum. In practice, more complicated methods may be used to calculate the checksum, but the principle is generally the same as that described here.
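The even-parity frame and the letter checksum worked through in this section can be reproduced with a short sketch. The function names are hypothetical, bits are held as text strings in the order the handout prints them, and the checksum follows only the simplified decimal example (weights 9, 8, 7, ... and a remainder on division by 25), not any real-world checksum standard.

```python
import string


def even_parity_bit(data_bits):
    """Parity bit that makes the total number of Ones even."""
    return data_bits.count("1") % 2


def build_frame(data_bits):
    """Start bit (0) + data bits + even-parity bit + stop bit (1)."""
    return "0" + data_bits + str(even_parity_bit(data_bits)) + "1"


def parity_ok(data_bits, parity_bit):
    """True if data plus parity contain an even number of Ones."""
    return (data_bits.count("1") + parity_bit) % 2 == 0


def letter_checksum(digits):
    """Weighted sum (weights 9, 8, 7, ...), remainder mod 25, then a letter."""
    total = sum(int(d) * w for d, w in zip(digits, range(9, 0, -1)))
    # Remainder 16 means "add one to get 17", i.e. the 17th letter (index 16).
    return string.ascii_uppercase[total % 25]


print(build_frame("00111001"))       # 00011100101
print(build_frame("00100110"))       # 00010011011
print(parity_ok("00111011", 0))      # False: one bit changed in transit
print(letter_checksum("03249856"))   # Q
```

An eleven-bit frame for eight data bits gives the 8 / 11 efficiency quoted above.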

FORWARD ERROR CORRECTION (FEC)

The key to error correction is redundancy. Indeed, the simplest error-correcting code is simply to repeat everything several times. If, for example, you anticipate no more than one error to occur in transmission, then repeating each bit three times and using "majority vote" at the receiving end will guarantee that the message is heard correctly (e.g., 111 000 011 111 will be correctly heard as 1011). In general, n errors can be compensated for by repeating things 2n + 1 times. Simple parity, as described above, checks the validity of a message but it cannot determine which bit or bits are in error and it cannot correct the problem. Simple parity is an error detection method. More advanced methods can not only detect an error but also correct it immediately. This is achieved by increasing the number of bits in the message and, therefore, reducing the efficiency of transmission. There are three common methods used for error correction: block parity, Hamming and Reed-Solomon.

Block Parity. Consider a set of eight Bytes with simple parity that are to be transmitted. The set of eight is considered to be a block of data and can be thought of as eight rows of data. As each Byte has its own parity then each row has nine bits. If a ninth row were added and its bits used as parity bits for the columns of the block then we will have both horizontal (row) parity and vertical (column) parity. When an error occurs then two parity bits will identify it as the bit at the intersection of the row and column with the error. This is illustrated in Figure 12. The block of data has one error and the row parity is wrong in row 5 whilst the column parity is wrong in column 4 (numbering columns from the right, starting at Zero). To correct the error, bit 4 of Byte 5 needs to be changed.

Efficiency. The block parity method works well when there are few errors but it is inefficient unless there are eight bytes ready to be sent. If fewer bytes are to be sent then the unused ones are filled with Zeroes and this represents an inefficient use of the system. When all eight bytes are in use then there are 64 data bits and 17 parity bits - an efficiency of 64/81 or 79%. This falls to just 10% if only one byte contains real data.
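Both ideas above, majority voting and block parity, can be sketched briefly. The names are hypothetical; the block is held as nine strings of nine characters (eight data bits followed by the row parity bit, with the column-parity row last), using the received block from the block-parity figure as test data. The sketch assumes even parity and at most one bit in error.

```python
def majority_decode(received, repeat=3):
    """Decode a repetition code by majority vote over each group of bits."""
    groups = [received[i:i + repeat] for i in range(0, len(received), repeat)]
    return "".join("1" if g.count("1") > repeat // 2 else "0" for g in groups)


def is_odd(bits):
    """True if the string contains an odd number of Ones (parity failure)."""
    return bits.count("1") % 2 == 1


def correct_block(rows):
    """Locate and flip a single wrong bit using row and column parity."""
    bad_rows = [r for r, row in enumerate(rows) if is_odd(row)]
    bad_cols = [c for c in range(len(rows[0]))
                if is_odd("".join(row[c] for row in rows))]
    if not bad_rows and not bad_cols:
        return list(rows)                       # nothing to correct
    if len(bad_rows) == 1 and len(bad_cols) == 1:
        r, c = bad_rows[0], bad_cols[0]
        flipped = "1" if rows[r][c] == "0" else "0"
        fixed = list(rows)
        fixed[r] = rows[r][:c] + flipped + rows[r][c + 1:]
        return fixed
    raise ValueError("more than one error: block parity cannot correct this")


received_block = [
    "011001100", "110110101", "100000001", "011111111",
    "000100000",                    # Row5 arrives with one bit changed
    "111111110", "101101110", "001000111",
    "001010000",                    # column-parity row
]
print(majority_decode("111000011111"))      # 1011
print(correct_block(received_block)[4])     # 000000000
```

Counting columns from the left, the failing column is index 3, which is bit 4 when counting from the right starting at Zero as the text does.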

TRANSMITTED DATA BLOCK
         Data Byte   Parity
Row1     01100110    0
Row2     11011010    1
Row3     10000000    1
Row4     01111111    1
Row5     00000000    0
Row6     11111111    0
Row7     10110111    0
Row8     00100011    1
Parity   00101000    0

RECEIVED DATA BLOCK
         Data Byte   Parity
Row1     01100110    0
Row2     11011010    1
Row3     10000000    1
Row4     01111111    1
Row5     00010000    0   x
Row6     11111111    0
Row7     10110111    0
Row8     00100011    1
Parity   00101000    0
            x

Figure 12: Block Parity Check to Correct an Error

BIT ERROR RATE PROBABILITY

When a coin is tossed, there are two possible outcomes (Head or Tail) and these outcomes are equally likely. The probability in layman's terms is 50-50. Mathematically speaking, the probability of getting a head is 1/2 = 0.5 or 50%. All three methods of quoting the result are valid. In Data Comms, the mathematical probability of an error occurring cannot be determined, but we can make an estimate by counting the number of errors that occur over a given time interval. The estimate is determined like this:

$$\text{Bit Error Rate Probability (BERP)} = \frac{\text{Number of error bits}}{\text{Total number of bits sent}}$$


The longer the period of time over which this experiment is performed, the more accurate the estimate will be. The resultant BERP will lie between 0 and 1. Stated below are benchmark values in comms. Of course, the qualitative nature of these benchmarks depends on the criticality of the data.

BERP = 0.1          Very poor (one bit in ten is an error)
BERP = 0.001        Average (one bit in 1000 is an error)
BERP = 0.000 001    Good (one bit in a million is an error)

In scientific notation the above are written $10^{-1}$, $10^{-3}$ and $10^{-6}$. The computer industry standard allows for an error rate of $10^{-13}$, that is less than one uncorrectable error in $10^{12}$ (one million million) bits. The audio industry is a little more forgiving. DAT assumes a data error rate of $10^{-5}$ (at 48 kHz) which works out at about two errors every second. Optical media has a high error rate of 1 bit out of every 100 000, but magnetic disk has a much lower error rate of one bit out of every thousand million.
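The BERP estimate is simply a ratio of counts, as this small sketch shows. The function name is hypothetical and the sent and received streams are assumed to be equal-length strings of '0' and '1' characters.

```python
def bit_error_rate(sent, received):
    """Estimate the BERP as (number of error bits) / (total bits sent)."""
    if not sent or len(sent) != len(received):
        raise ValueError("streams must be non-empty and of equal length")
    errors = sum(1 for s, r in zip(sent, received) if s != r)
    return errors / len(sent)


sent = "0" * 1000
received = "1" + "0" * 999        # one bit corrupted in a thousand
print(bit_error_rate(sent, received))   # 0.001, the "Average" benchmark
```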

SIGNAL TO NOISE RATIO

A good Comms system has a high signal to noise ratio, which is usually expressed in dB. Here are some examples of what this means. For each dB value quoted, the factor is the number of times more powerful the signal is than the noise.

SN Ratio (dB)   Factor
3               2x
6               4x
10              10x
20              100x
40              10 000x
60              1 000 000x

A television engineer will monitor this dB level using a meter for every channel that your aerial is capable of receiving. A reading of over 60 dB is desirable for a clear picture from analogue systems (i.e. normal terrestrial television channels from a transmitter like Mendip that serves the South West). A reading of 30 dB will give a snowy picture. In digital data comms, the value does not need to be anywhere near this high. We will discover below that Signal to Noise Ratios as low as 10 dB can give good results.

COMPARISON OF DIFFERENT COMMS SYSTEMS

We will discover that for any Comms system, the BERP will decrease (improvement) when the Signal to Noise Ratio is increased (improvement). The BERP can often be improved further by the use of a Hamming Code (see below), but not at every Signal to Noise Ratio: in the figures given below, the Hamming Code does slightly worse than the uncoded system at 3 dB, equals it at 7.5 dB and beats it at 10 dB. Hence there is a turning point at which the two systems give equal BERP. The choice of system will then be decided on other matters such as cost of manufacture, running costs and ease of use.

A BASELINE SYSTEM

Let's examine a Binary Phase Shift Keying system for three levels of Signal to Noise Ratio. This system has no form of parity bit error checker, so it will act as a baseline system, to which other systems can be compared.

SN Ratio (dB)   BERP
3               0.03 (3 errors in 100)
7.5             0.0006 (6 errors in 10 000)
10              0.000006 (6 in a million)

Figure 18: Baseline PSK BERP plotted against S-N Ratio

HAMMING CODING

Now what happens to the BERP when we add parity bits? This will be answered later. Let's examine a specific arrangement: the (7,4) Hamming code. In this code, data is taken 4 bits at a time and 3 parity bits are appended to make a "word" of 7 bits, hence the designation (7,4). Denoting the data bits D0, D1, D2, D3, each of the parity bits P0, P1, P2 is formed from the output of a three-input exclusive-OR (EOR) gate fed with a chosen three of the data bits. The rules for the choice of combination are shown in figure 14 and the truth table for an EOR gate is shown in figure 15. For example, P0 is formed by setting the inputs of an EOR gate to the bit values represented by D0, D1, D3. This is written symbolically in Electronics as in the top line of figure 14.

DDDD PPP   DDDD PPP   DDDD PPP   DDDD PPP
3210 210   3210 210   3210 210   3210 210
-----------------------------------------
0000 000   0100 110   1000 111   1100 001
0001 011   0101 101   1001 100   1101 010
0010 101   0110 011   1010 010   1110 100
0011 110   0111 000   1011 001   1111 111

Figure 13: Sixteen blocks of Data/Parity

P0 = D0 ⊕ D1 ⊕ D3      D0 = 0      P0 = 0 ⊕ 0 ⊕ 1 = 1
P1 = D0 ⊕ D2 ⊕ D3      D1 = 0      P1 = 0 ⊕ 1 ⊕ 1 = 0
P2 = D1 ⊕ D2 ⊕ D3      D2 = 1      P2 = 0 ⊕ 1 ⊕ 1 = 0
                       D3 = 1

Figure 14: Hamming Code Formula

Dec   Inputs   Output
0     0 0 0    0
1     0 0 1    1
2     0 1 0    1
3     0 1 1    0
4     1 0 0    1
5     1 0 1    0
6     1 1 0    0
7     1 1 1    1

Figure 15: EOR Gate Truth Table

The corresponding values of D0, D1, D3 are respectively 0, 0, 1 for a transmitted binary word (a string of 4 bits in this example) shown in italics on the upper right of figure 13. The parity bit P0 is output from the EOR truth table (second line of numbers in figure 15). We see that this value is 1, so P0 = 1. The other two parity bits P1 and P2 are calculated in a similar way using the EOR rule given in figure 14. All seven bits are then sent down the Comms channel and when they are received, the parity bits are calculated from the same set of rules as before. If no errors have occurred, the same parity bits will be obtained. Figure 16 shows the case when one error occurs. The figure shows a word received as 1 1 0 1 when it should be 1 1 0 0. Don't forget here that figure 13 shows the least significant bit is called D0 and the most significant bit is called D3. We can see from figure 16 that two of the parity bits do not agree with the parity bits that have been sent. Analysis of the make-up of the rules for parity bit calculation reveals that D0 is the only data bit that appears in both P0 and P1 but not in P2 (D3 also appears in both, but an error in D3 would upset P2 as well). Hence D0 is the culprit, and its value will be inverted to D0 = 0.

P0 = D0 ⊕ D1 ⊕ D3      D0 = 1 (error)      P0 = 1 ⊕ 0 ⊕ 1 = 0 (wrong)
P1 = D0 ⊕ D2 ⊕ D3      D1 = 0              P1 = 1 ⊕ 1 ⊕ 1 = 1 (wrong)
P2 = D1 ⊕ D2 ⊕ D3      D2 = 1              P2 = 0 ⊕ 1 ⊕ 1 = 0 (correct)
                       D3 = 1
The common factor in the wrong Parity bits is D0.

Figure 16: Pinpointing the error

Here is a summary:

Transmitted data = 1100 001
Received data    = 1101 001

From the first four bits received (i.e. the data bits), the parity bits should be 010, but the received parity bits are in disagreement, showing 001. Two errors pinpoint that the least significant bit D0 of the data block is wrong.

The Bit Error Rate Probabilities for this data comms system are shown below for three levels of S-N Ratio.

SN Ratio (dB)   BERP
3               0.07 (7 errors in 100)
7.5             0.0006 (6 errors in 10 000)
10              0.000002 (2 in a million)

We observe that the Hamming code provides an improvement over the baseline system when the S-N Ratio is greater than 7.5 dB.
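The (7,4) parity rules and the error-pinpointing argument above can be checked with a short sketch. The function names and the lookup table are illustrative assumptions; words are written as text in the same order as the sixteen-block table (D3 D2 D1 D0 followed by P2 P1 P0), and only a single bit error per word is handled.

```python
BIT_NAMES = ["D3", "D2", "D1", "D0", "P2", "P1", "P0"]

# Which single bit is wrong for each pattern of (P2, P1, P0) disagreements.
MISMATCH_TO_BIT = {
    (0, 1, 1): "D0", (1, 0, 1): "D1", (1, 1, 0): "D2", (1, 1, 1): "D3",
    (1, 0, 0): "P2", (0, 1, 0): "P1", (0, 0, 1): "P0",
}


def hamming_parity(d3, d2, d1, d0):
    """Return (P2, P1, P0) from the three exclusive-OR rules."""
    p0 = d0 ^ d1 ^ d3
    p1 = d0 ^ d2 ^ d3
    p2 = d1 ^ d2 ^ d3
    return p2, p1, p0


def encode(data):
    """'1100' -> '1100001' (four data bits followed by three parity bits)."""
    parity = hamming_parity(*(int(c) for c in data))
    return data + "".join(str(p) for p in parity)


def correct(word):
    """Return (corrected word, name of the bit that was flipped or None)."""
    bits = [int(c) for c in word]
    expected = hamming_parity(*bits[:4])
    mismatch = tuple(e ^ r for e, r in zip(expected, bits[4:]))
    if mismatch == (0, 0, 0):
        return word, None
    name = MISMATCH_TO_BIT[mismatch]
    bits[BIT_NAMES.index(name)] ^= 1
    return "".join(str(b) for b in bits), name


print(encode("1100"))       # 1100001
print(correct("1101001"))   # ('1100001', 'D0')
```

The same lookup also covers an error in one of the parity bits themselves, which shows up as a single disagreement.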

CODING GAIN

The fundamental concept of error control coding is the addition of redundancy to a signal at the transmitter, and the exploitation of that redundancy at the receiver to detect and/or correct errors. The inclusion of redundancy in the transmitted signal results in a coded signal consisting of more bits than the original uncoded signal. The trade-off for this overhead is the ability to detect, and possibly correct, errors at the receiver. The performance improvement that occurs when using error control coding is often measured in terms of coding gain. Suppose an uncoded communications system achieves a given bit error rate (BER) at an SNR of 30 dB. Imagine that an error control coding scheme with a coding gain of 3 dB was added to the system. This coded system would be able to achieve the same BER at the even lower SNR of 27 dB. Alternatively, if the system was still operated at an SNR of 30 dB, the BER achieved by the coded system would be the same BER that the uncoded system achieved at an SNR of 33 dB. The power of the coding gain is that it allows a communications system either to maintain a desired BER at a lower SNR than was possible without coding, or to achieve a lower (better) BER than an uncoded system could attain at a given SNR. As an example, let's compare the Hamming (7, 4) system previously discussed with a Hamming (32, 6) system used by the Mariner 69 deep space probe to Mars. (32, 6) means that the data blocks are 6 bits long followed by 26 parity bits. For the (7, 4) system a S-N Ratio of 10.2 dB is required to maintain a BERP of $10^{-6}$. The (32, 6) system only requires a 6.7 dB S-N Ratio to maintain the same BERP. Hence the Coding Gain of the Mariner system over the (7, 4) system is 10.2 - 6.7 = 3.5 dB. This system was used because the transmitter power available on the space probe was limited and there was a vast distance between the finite-sized antenna on the spacecraft and the receiver on Earth.

Random Bit Error:   Hello thos is a test transmistion
Burst Error:        Hello xgey ts s tejd transmission

Figure 17: Error classification

TYPES OF DATA ERROR

There are two main types of data error: Random Bit Errors and Burst Errors. Our last discussion was an example of a Random Bit Error. It is an error that has no relation to any other error that may occur in the future. Errors of this type occur singly and are easily corrected. Burst errors occur when a sequence of bits is corrupted - e.g. by a long circumferential scratch on a CD. That is the reason why one should always wipe a CD with a soft cloth along the radius rather than around the perimeter. Burst errors can also be caused by noise spikes, connector problems, transmission problems in the connecting cables, defects, dust, etc. A burst error usually results in the loss of both data and redundant data (i.e. the parity bits). Correction is difficult, and the system already discussed would not cope well with burst errors. Burst error is a serious phenomenon - so much so that systems are measured by how they react to burst errors. Look at figure 17: the burst error has caused the main meaning of the message to be lost. The maximum number of contiguous error bits that can be corrected is a measure of the quality of a system. Also, a system must be able to correct random and burst errors simultaneously. The problem with BERP as a measure of quality is that it counts large and small burst errors equally without taking account of the distribution of the errors. Reed-Solomon encoding is a technique, discussed later, which can deal with burst errors up to a specified length.

Letter   % freq   Morse Code   Number of Morse Symbols   Huffman Code    Number of Huffman Symbols
A        6.22     .-           2                         1011            4
B        1.32     -...         4                         10100           5
C        3.11     -.-.         4                         10101           5
D        2.97     -..          3                         1011            4
E        28.00    .            1                         1               1
F        1.68     ..-.         4                         110001          6
G        1.65     --.          3                         110000          6
H        3.63     ....         4                         11001           5
I        6.14     ..           2                         1001            4
J        0.06     .---         4                         1010111011      10
K        0.31     -.-          3                         1010110         7
L        3.07     .-..         4                         10100           5
M        2.48     --           2                         11              2
N        5.73     -.           2                         100             3
O        6.06     ---          3                         1000            4
P        1.87     .--.         4                         0               1
Q        0.10     --.-         4                         101011100       9
R        5.87     .-.          3                         111             3
S        5.81     ...          3                         110             3
T        7.68     -            1                         1101            4
U        2.27     ..-          3                         10              2
V        0.70     ...-         4                         101010          6
W        1.13     .--          3                         11              2
X        0.25     -..-         4                         10101111        8
Y        1.07     -.--         4                         10              2
Z        0.06     --..         4                         101011101011    10
Total    99.24                 Average 3.15                              Average 4.65

Figure 19: Huffman: assigning symbol length to character

CONVOLUTIONAL CODING

Returning to the subject of channel coding: the schemes outlined so far are examples of "Block Coding", because data is transmitted and processed in discrete blocks. But there is another method called "Convolutional Coding". In this the parity bits and data bits are interwoven (often alternately); the parity bits are formed from the exclusive-OR of selected preceding data bits on a continuous basis.

Figure 20: Huffman

Symbol Length of Character (S)

$$S = -\log_2(p)$$

where p is the probability of that character appearing.

Example 1 (letter e): 28% = 0.28, so p = 0.28 and S = $-\log_2(0.28) \approx 1.8$ (Figure 19 uses 1 symbol).
Example 2 (letter z): 0.06% = 0.0006, so p = 0.0006 and S = $-\log_2(0.0006) \approx 10.7$ (Figure 19 uses 10 symbols).

Figure 21: Symbol Length of character
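The symbol-length formula of Figure 21 can be evaluated directly. The sketch below uses hypothetical function names and Python's standard `math.log2`; the `entropy` helper is an added illustration of the average symbol length for a whole set of probabilities, not something taken from the figure.

```python
import math


def symbol_length(probability):
    """Ideal code length in bits for a symbol: S = -log2(p)."""
    return -math.log2(probability)


def entropy(probabilities):
    """Average ideal length, weighting each symbol by its probability."""
    return sum(p * symbol_length(p) for p in probabilities if p > 0)


print(round(symbol_length(0.28), 2))     # letter e, p = 0.28
print(round(symbol_length(0.0006), 2))   # letter z, p = 0.0006
print(entropy([0.5, 0.5]))               # two equally likely symbols need 1 bit each
```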


INTERLEAVING

Bursts of noise are not uncommon on radio links, and coding schemes do not cope well with error bursts. So in many applications the data is interleaved just prior to transmission. For example, a 100 byte message could be sent in the byte order:

1,11,21,31,41,51,61,71,81,91
2,12,22,32,42,52,62,72,82,92
3,13,23,33,43,53,63,73,83,93
4,14,24,34,44,54,64,74,84,94
5,15,25,35,45,55,65,75,85,95
6,16,26,36,46,56,66,76,86,96
7,17,27,37,47,57,67,77,87,97
8,18,28,38,48,58,68,78,88,98
9,19,29,39,49,59,69,79,89,99
10,20,30,40,50,60,70,80,90,100

The bytes would be re-ordered upon reception. So a burst of channel errors is spread out in the re-ordered block, making the decoder's job less arduous.
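The byte ordering above can be generated, and undone, with a short sketch. The function names and the `depth` parameter are hypothetical; the message is modelled as a list of byte numbers 1 to 100 so that the output can be compared with the listing.

```python
def interleave(data, depth=10):
    """Send items 0, depth, 2*depth, ... first, then 1, depth+1, ... and so on."""
    return [data[i] for start in range(depth)
            for i in range(start, len(data), depth)]


def deinterleave(received, depth=10):
    """Put the received items back into their original positions."""
    order = [i for start in range(depth)
             for i in range(start, len(received), depth)]
    original = [None] * len(received)
    for position, item in zip(order, received):
        original[position] = item
    return original


message = list(range(1, 101))          # byte numbers 1..100
sent = interleave(message)
print(sent[:10])                       # [1, 11, 21, 31, 41, 51, 61, 71, 81, 91]
print(sent[20:25])                     # a five-byte burst here hits bytes ten apart
print(deinterleave(sent) == message)
```

A burst that corrupts five consecutive transmitted bytes therefore damages five widely separated bytes of the original message, one per group of ten.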

SOURCE CODING

A code associates a code word with each data symbol, and the code string is a concatenation of code words (placing code words side by side). The design of the code requires an estimate of the symbol probabilities, since compression is achieved by assigning shorter code words to more frequent events. The use of a code designed with an incorrect estimate of the probabilities can generate a code string that is larger than the original data. Huffman's code-construction algorithm is optimal in that no other association of code words to symbols for the given symbol frequencies can compress better. An entropy encoding is a coding scheme that assigns codes to symbols so as to match code lengths with the probabilities of the symbols. Typically, entropy encoders are used to compress data by replacing symbols represented by equal-length codes with symbols represented by codes whose lengths are proportional to the negative logarithm of the probability. Therefore, the most common symbols use the shortest codes. According to Shannon's theorem, the optimal code length for a symbol is $-\log_b P$, where b is the number of symbols used to make output codes and P is the probability of the input symbol.

Probably the best known coding method based on probability statistics is Huffman coding. Huffman (see figure 20) published a paper in 1952 describing a method of creating a code table for a set of symbols given their probabilities. The Huffman code table was guaranteed to produce the lowest possible output bit count for the input stream of symbols when each symbol is given its own code word of a whole number of bits. Huffman called these "minimum redundancy codes", but the scheme is now universally called Huffman coding. Other coding systems of this kind, such as Shannon-Fano codes, were shown by Huffman to be non-optimal. Huffman coding assigns an output code to each symbol, with the output codes being as short as 1 bit, or considerably longer than the input symbols, strictly depending on their probabilities. The optimal number of bits to be used for each symbol is calculated as shown in Figure 21. The % column indicates the average probability (expressed in percent) of the letter occurring in English. The entropy of this source is 4.14 bits. Shannon proved that the most efficient length of symbol must be within one bit of this figure. The average Morse codeword length is 3.15 bits. Adding one more symbol for the letter separator and converting to bits yields an average codeword length of 5.56 bits. The average Huffman codeword length is 4.65 bits, so the Huffman code is very efficient.

REED SOLOMON

Reed-Solomon codes are block-based error correcting codes with a wide range of applications in digital communications and storage. Reed-Solomon codes are used to correct errors in many systems including:

Storage devices (including tape, Compact Disk, DVD, barcodes, etc)
Wireless or mobile communications (including cellular telephones, microwave links, etc)
Satellite communications
Digital television / DVB
High-speed modems such as ADSL, xDSL, etc.

The Reed-Solomon encoder takes a block of digital data and adds extra "redundant" bits. Errors occur during transmission or storage for a number of reasons (for example noise or interference, scratches on a CD, etc). The Reed-Solomon decoder processes each block and attempts to correct errors and recover the original data. The number and type of errors that can be corrected depends on the characteristics of the Reed-Solomon code. A Reed-Solomon code is specified as RS(n,k) with s-bit symbols. This means that the encoder takes k data symbols of s bits each and adds parity symbols to make an n symbol codeword. There are n-k parity symbols of s bits each. A Reed-Solomon decoder can correct up to (n-k)/2 symbols that contain errors in a codeword. Figure 22 shows a typical Reed-Solomon codeword (this is known as a Systematic code because the data is left unchanged and the parity symbols are appended). The letter t in Figure 22 represents the maximum number of errors that can be corrected.

Example. A popular Reed-Solomon code is RS(255,223) with 8-bit symbols. Each codeword contains 255 code word bytes, of which 223 bytes are data and 32 bytes are parity. For this code: n = 255, k = 223, s = 8 (because 8 bits = 1 byte), 2t = 32, t = 16.

Figure 22: Reed-Solomon Codeword


The decoder can correct any 16 symbol errors in the code word: i.e. errors in up to 16 bytes anywhere in the codeword can be automatically corrected. Maximum Codeword Length. Given a symbol size s, the maximum codeword length (n) for a Reed-Solomon code is $n = 2^s - 1$. For example, the maximum length of a code with 8-bit symbols (s = 8) is 255 bytes.
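The bookkeeping for an RS(n,k) code with s-bit symbols can be sketched as below. This only computes the parameters quoted in the text (parity symbols, correctable symbols, maximum codeword length); it does not encode or decode anything, and the function name and the returned dictionary keys are hypothetical.

```python
def rs_parameters(n, k, s):
    """Summarise an RS(n, k) code with s-bit symbols."""
    max_length = 2 ** s - 1
    if n > max_length:
        raise ValueError("codeword longer than 2^s - 1 symbols")
    parity_symbols = n - k
    return {
        "parity_symbols": parity_symbols,             # 2t
        "correctable_symbols": parity_symbols // 2,   # t
        "max_codeword_length": max_length,
        "efficiency": k / n,
    }


params = rs_parameters(255, 223, 8)
print(params["parity_symbols"], params["correctable_symbols"])   # 32 16
print(params["max_codeword_length"])                             # 255
```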

SHANONS LAW: CAPACITY OF A CHANNEL

his law relates the maximum data capacity of a transmission system, C , to its bandwidth, W, and its signal-to-noise ratio S/N. The law is: C = 3.32 W Log ( 1 + S/N )

Where Log is the logarithm to the base ten of the contents of the bracket (use the Log button on the calculator. For example, a channel with a bandwidth of 3 kHz and S/N ratio of 30 db (30 dB = 103 = 1,000), the maximum theoretical capacity is: C = = 3.32 3000 Log ( 1001 ) 29.9 kbits per second

Real systems can never exceed this limit, and practical systems only approach it (due to cost). A high-quality line is one with a wide bandwidth and low noise. Figures 23 and 24 show two examples of how to calculate the capacity of a communication channel.

Telephone Example
S/N = 20 dB = factor 100
Bandwidth = 4 kHz
$C = 3.32\,W \log_{10}(1 + S/N)$
$C = 3.32 \times 4 \times \log_{10}(1 + 100)$
$C \approx 27$ kbit/s

Figure 23: Shannon's Law - Capacity of a telephone channel

Spread Spectrum Example
S/N = -14.5 dB = factor 0.035 (very low)
Bandwidth = 1000 kHz (much greater)
$C = 3.32\,W \log_{10}(1 + S/N)$
$C = 3.32 \times 1000 \times \log_{10}(1 + 0.035)$
$C \approx 50$ kbit/s

Figure 24: Shannon's Law - Capacity using spread spectrum

DATA TRANSMISSION RATE

The bandwidth of the comms link determines the maximum rate at which data may be sent. The frequency at which the signal on the line changes is called the Baud rate (typical telephone lines can work at rates up to about 2 400 Baud). Some advanced techniques can send more than one bit per signal so that the bit rate can be as much as 56 kbits per second along a telephone line (V.90 modem), even though the Baud rate is much lower. When a short copper cable or a medium/long fibre-optic cable is used then the signalling rate can easily exceed several million Baud. The time taken to send one bit might vary between several milliseconds and less than a microsecond. Modern 10 Gigabit Ethernet can send 10 000 million bits per second.

Di-bits: to understand how we can send data faster than the Baud rate, the following simple example is included. The data stream is one that we have used before: 00101110. At 1 000 Baud, each bit takes 1 ms (1 / 1000 s) to send, so the whole byte takes 8 ms (not including the start, stop and parity bits). If we break the message into four pairs of bits then it becomes:

00 10 11 10

Instead of defining the line signals as 0 V = Zero and 5 V = One, we change this so that there are four levels and each one corresponds to a pair of bits (a di-bit) as follows:

0 V = 00, 1.7 V = 01, 3.4 V = 10, 5 V = 11

The before and after signals are shown in Figure 25. You will see that the signal sent down the line continues to change one thousand times each second (1 000 Baud) but each change sends two bits. The byte now takes 4 ms - half the previous time - to send.

Figure 25: Using Di-Bits to Double the Data Capacity of a Transmission System (the four di-bits 00, 10, 11, 10 each occupy one 1 ms signalling interval)

You can probably see that the new signal will be more susceptible to noise and interference as a result of the change to di-bits. This is because, although the changes on the line still occur at the Baud rate, they are now smaller changes than before and, therefore, more likely to be affected by noise and interference.

In practice, these techniques are used in conjunction with frequency-shift keying in computer modems. The amplitude, phase and frequency of the carrier are changed so that there can be, for example, as many as sixteen combinations. Each of the sixteen combinations represents four bits, so this allows the bit rate to be four times greater than the Baud rate when line conditions are good. An ideal telephone line can transmit data at 56 kbits per second, but few real telephone lines achieve more than 49 kbits per second in practice. When line conditions are poor then the bit rate must be reduced until the number of errors is small. Modern modems adjust their bit rate during transmission to accommodate changes in levels of noise and interference during the data transfer.
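The di-bit scheme can be sketched in a few lines of Python. The voltage table is the one given in the handout; the function names are illustrative assumptions. The sketch also shows the general rule that a signal with a given number of distinct states carries log2(states) bits per change, so four levels carry two bits and sixteen combinations carry four.

```python
import math

# Level assignment from the handout: one line voltage per pair of bits
DIBIT_LEVELS = {"00": 0.0, "01": 1.7, "10": 3.4, "11": 5.0}


def dibit_voltages(bits: str) -> list:
    """Split a bit string into pairs and map each pair to its line voltage."""
    if len(bits) % 2:
        raise ValueError("need an even number of bits")
    return [DIBIT_LEVELS[bits[i:i + 2]] for i in range(0, len(bits), 2)]


def bits_per_symbol(states: int) -> int:
    """Bits carried by each line change when there are `states` distinct signals."""
    if states < 2 or states & (states - 1):
        raise ValueError("states must be a power of two")
    return states.bit_length() - 1


def transmit_time_ms(n_bits: int, baud: int, bits_each: int) -> float:
    """Time to send n_bits when each signalling interval carries bits_each bits."""
    symbols = math.ceil(n_bits / bits_each)
    return 1000.0 * symbols / baud


if __name__ == "__main__":
    print(dibit_voltages("00101110"))
    print(transmit_time_ms(8, 1000, 1), "ms with single bits")
    print(transmit_time_ms(8, 1000, bits_per_symbol(4)), "ms with di-bits")
```

At 1 000 Baud the byte takes 8 ms with one bit per change and 4 ms with di-bits, matching the example in the text.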

ENCRYPTION

Suppose an 8-bit message needs to be sent securely down a comms channel. The message is 11010110. Consider a bit stream 01011011 as an encrypter. One method of encryption is to use these two data streams as inputs to an Exclusive-OR (EOR) gate. The output of the EOR gate is the encrypted message. Working from the least significant bit, with the message bit placed first, the result will be:

0 EOR 1 = 1 (LSB)
1 EOR 1 = 0
1 EOR 0 = 1
0 EOR 1 = 1
1 EOR 1 = 0
0 EOR 0 = 0
1 EOR 1 = 0
1 EOR 0 = 1 (MSB)

The encrypted message is therefore 10001101 and this is sent down the channel to a decoder which knows the encrypter data stream 01011011. If these two are input to an EOR gate, the original message is revealed as follows:

1 EOR 1 = 0 (LSB)
0 EOR 1 = 1
1 EOR 0 = 1
1 EOR 1 = 0
0 EOR 1 = 1
0 EOR 0 = 0
0 EOR 1 = 1
1 EOR 0 = 1 (MSB)

The original data 11010110 has been decrypted.

CODE DIVISION MULTIPLEXING (CDM)

Code division multiplexing (CDM) allows signals from a series of independent sources to be transmitted at the same time over the same frequency band. This is accomplished by using orthogonal codes (see later) to spread each signal over a large, common frequency band. At the receiver, the appropriate orthogonal code is then used again to recover the particular signal intended for a particular user. The key principle of CDM is Spread Spectrum. Spread Spectrum is a means of communication with the following features:

1. Each information-bearing signal is transmitted with a bandwidth in excess of the minimum bandwidth necessary to send the information.
2. The bandwidth is increased by using a spreading code that is independent of the information.
3. The receiver has advance knowledge of the spreading code and uses this knowledge to recover the information from the received, spread-out signal.

Let's begin by considering a technique for spreading the spectrum of an information signal. Suppose we have a series of information bits (e.g. 110110) that we want to transmit at a particular speed (e.g. $r_b$ = 1000 bits/sec). We know that the bandwidth required to transmit this signal will be proportional to the transmission speed (if PSK is used and we need 90% in-band power, the signal will require a bandwidth of $2r_b$ = 2 kHz). Now consider the circuit shown in Figure 26, which exclusive-ORs the information bits from the source with a second sequence of bits known as a spreading code. The spreading code is being clocked at a rate three times as fast as the source is outputting information. If the source information and the spreading code are synchronized, the sequence at the output of the exclusive-OR gate also has a rate of $3r_b$. Let's use $r_{ss}$ to symbolize the rate of the spreading code, which we call the chipping rate.

Figure 26: Spreading using a chipping code

The circuit in Figure 26 (which we can call a spreader) converts the information sequence, which is being output by the source at a rate of $r_b$, into a longer, faster sequence of bits being output at a rate of $r_{ss}$. This longer, faster sequence of bits will require more bandwidth to transmit (again, if PSK is used and we need 90% in-band power, the new sequence will require a bandwidth of $2r_{ss}$ = 6 kHz). The technique of exclusive-ORing an information sequence with a faster spreading code is known as direct sequence spread spectrum. The general spreading and despreading processes in the frequency domain are shown in Figures 27 and 28.

Figure 27: Spreading and De-spreading

Spreading increases the bandwidth by a factor of $r_{ss}/r_b$, and despreading reduces the bandwidth back to that of the original signal. This factor, $r_{ss}/r_b$, is known as the processing gain and is symbolized $G_p$. We've now shown that spreading and despreading are mathematically valid operations, but we have yet to show why they are useful. In fact, as mentioned earlier, these operations seem counterintuitive because they increase the bandwidth of the transmitted signal, something communication systems engineers instinctively avoid. In order to see practical applications for spreading and despreading, we must investigate the properties of the spreading code.

In principle, any sequence of 1s and 0s can be used as a spreading code, but practical spreading codes must look like a sequence of random, independent, equiprobable bits. These codes are called pseudo-random, or pseudo-noise (PN), rather than truly random because the transmitter and receiver must generate the same sequence (otherwise despreading will not work). The bits in a PN code are essentially uncorrelated. Thus, when a PN spreading code is applied to additive white Gaussian noise, the power spectral density of the noise remains flat and unchanged.

Figure 28 shows the spreading, transmitting, and despreading processes for a general signal corrupted in the channel by additive white Gaussian noise. A PN spreading code is used. Note that the spreading process flattens (as well as spreads) the spectrum of the information signal, and that the spread transmitted signal is essentially buried in the noise by the time it arrives at the receiver. The despreading process narrows and heightens the spectrum of the received signal, yet leaves the spectrum of the received noise essentially unchanged. The receiver can now extract the despread signal from the noise using a bandpass filter.

One advantage of spread spectrum is that the transmitted signal is buried in the noise and is virtually undetectable by other receivers that do not know the spreading code. This provides security. Also, since a spread spectrum signal contains low energy spread over a large bandwidth, the signal can be transmitted on a channel containing other communications without causing significant interference to the other communications (i.e. the spread spectrum signal will simply look like a very small amount of additional noise).

The pseudo-random code must satisfy a number of properties. One particular property is that in any block of spreading code, the number of zeroes and ones must not differ by more than one. The second property places a restriction on runs of identical bits, and the third property is to do with correlation, which is beyond the scope of this handout. If these conditions are met, the codes are orthogonal codes.
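The exclusive-OR operation appears twice in this part of the handout: once for encryption and once in the direct sequence spreader. The Python sketch below illustrates both under stated assumptions. The message, encrypter stream, information bits and the factor of three chips per bit come from the text; the 7-chip code 1001011 is a hypothetical example chosen only because it satisfies the balance property, and the majority vote in the despreader is one simple way of recovering each bit, not necessarily the method used in a real receiver.

```python
from itertools import cycle, islice


def xor_bits(a: str, b: str) -> str:
    """Exclusive-OR two equal-length bit strings, position by position."""
    if len(a) != len(b):
        raise ValueError("bit strings must be the same length")
    return "".join(str(int(x) ^ int(y)) for x, y in zip(a, b))


def code_stream(code: str, length: int) -> str:
    """Repeat the spreading code until it is `length` chips long."""
    return "".join(islice(cycle(code), length))


def spread(info_bits: str, code: str, chips_per_bit: int) -> str:
    """Hold each information bit for chips_per_bit chips, then XOR with the code."""
    held = "".join(bit * chips_per_bit for bit in info_bits)
    return xor_bits(held, code_stream(code, len(held)))


def despread(chips: str, code: str, chips_per_bit: int) -> str:
    """XOR with the same code, then majority-vote over each bit period."""
    held = xor_bits(chips, code_stream(code, len(chips)))
    groups = [held[i:i + chips_per_bit] for i in range(0, len(held), chips_per_bit)]
    return "".join("1" if 2 * g.count("1") > len(g) else "0" for g in groups)


def is_balanced(code: str) -> bool:
    """Balance property: the counts of ones and zeroes differ by at most one."""
    return abs(code.count("1") - code.count("0")) <= 1


# Encryption example: values taken from the handout
MESSAGE = "11010110"
ENCRYPTER = "01011011"
encrypted = xor_bits(MESSAGE, ENCRYPTER)
decrypted = xor_bits(encrypted, ENCRYPTER)

# Spreading example: information bits from the handout, hypothetical code
INFO_BITS = "110110"
PN_CODE = "1001011"   # assumption: illustrative 7-chip code, not from the handout
CHIPS_PER_BIT = 3     # chipping rate is three times the bit rate
chips = spread(INFO_BITS, PN_CODE, CHIPS_PER_BIT)
recovered = despread(chips, PN_CODE, CHIPS_PER_BIT)

if __name__ == "__main__":
    print("encrypted:", encrypted, "decrypted:", decrypted)
    print("chips sent:", chips, "recovered:", recovered)
    print("code balanced:", is_balanced(PN_CODE))
```

Applying the same code twice restores the original bits in both cases, which is why the receiver must know the code in advance. The spread sequence is three times as long as the information sequence, corresponding to a processing gain of 3.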

Figure 28 Code Division Multiplexing


SELF ASSESSMENT QUESTIONS

Q1. When a byte of data is carried by a parallel bus then:
a. No handshaking is required.
b. Each bit has its own conductor.
c. A shift register is required to queue the bits.
d. Frequency-shift Keying is used.

Q2. A communications channel that is used to carry signals in both directions simultaneously is called:
a. Full-Simplex.
b. Simplex.
c. Half-Duplex.
d. Duplex.

Q3. Which one of the following is NOT a feature of a parallel data bus?
a. Requires start and stop bits.
b. Gives high-speed data transfer.
c. Transfers several bits simultaneously.
d. Requires handshaking signals.

Q4. When serial transmission is required:
a. A telephone link cannot be employed.
b. A modem is necessary.
c. A shift-register is required.
d. A cable with at least 50 conductors is normally used.

Q5. The raw data from a computer cannot be transmitted directly over a long distance, e.g. 5 km, because:
a. There is no dc component in the waveform.
b. There will be too many reflections from the other end.
c. The signal will be attenuated and distorted in transit.
d. There are no parity bits.

Q6. Frequency Shift Keying is used because:
a. It is needed for error detection and/or correction.
b. It produces a non-return to zero (NRZ) signal waveform.
c. Fewer conductors are required in the comms cable.
d. It changes the frequencies of the data waveform to lie within the bandwidth of the datalink.

Q7. The use of differential transmission on a balanced line reduces problems caused by:
a. Poor bandwidth.
b. Dispersion.
c. Reflections.
d. Interference.

Q8. Which one of the following binary numbers has the highest bandwidth?
a. 10101010
b. 11001100
c. 00110011
d. 11111111

Q9. The following binary data, 8 data bits and one parity bit, have been transmitted with even parity. Which one was corrupted during transit?
a. 100100110
b. 111111111
c. 000000000
d. 010101010

Q10. Why does Manchester encoding allow long messages to be sent asynchronously?
a. Differential transmission, on balanced wires, is used.
b. Error detection is used.
c. The message carries timing signals.
d. Ones and Zeroes are encoded in different ways.

Q11. A byte is transmitted with a bit-period of 10 ms (100 Baud) using one start bit, 8 data bits, even parity and one stop bit. How long after the arrival of the start bit does the receiver examine the parity bit?
a. 100 ms
b. 95 ms
c. 15 ms
d. 10 ms

Q12. In frequency shift keying, which of the following statements would be incorrect?
a. A One is sent as a loud tone and a Zero is sent as a quiet tone.
b. Ones and Zeroes are encoded using different frequencies.
c. A One is sent as a high-pitched tone and a Zero is sent as a low-pitched tone.
d. For use on a telephone circuit, the tones must be audible.

Q13. One method that could be used to correct most errors that arise during transmission of data is:
a. One parity bit for each byte.
b. Manchester encoding.
c. Differential transmission on balanced wires.
d. Block parity.


Q14. When communicating over a poor quality datalink, the system is likely to:
a. increase the Baud rate.
b. reduce the bit-rate.
c. encode more bits into each pulse.
d. stop sending the parity bits.

Q21. A channel with a bandwidth of 10 kHz and a signal-to-noise ratio of 50 dB ($10^5$) has a maximum data-carrying capacity of:
a. 50 kbits per sec.
b. 133 kbits per sec.
c. 10 kbits per sec.
d. 500 kbits per sec.

Q15. The hex number 6B is equivalent to the decimal number:
a. 71
b. 611
c. 107
d. 17

Q22. A Bit Error Rate Probability of 0.000001 implies that one error will occur in the following number of bits:
a. 100
b. 1000
c. 10000
d. 1000000

Q23. A Comms channel uses Hamming Code (11,8). This will mean that the number of data bits and parity bits are respectively:
a. 11 and 8
b. 11 and 3
c. 8 and 3
d. 3 and 8

Q16. The decimal number 25 is equivalent to the hex number:
a. 15
b. 19
c. FA
d. A1

Q17. The binary number 10000011 is equivalent to the decimal number:
a. 255
b. 128
c. 131
d. 111

Q24. If the Signal to Noise ratio in a comms channel is one, then if 500 bits are sent, the likely number of erroneous bits will be:
a. 500
b. none
c. one
d. 250

Q18. When a byte is sent asynchronously, with parity, then the number of bits per frame is:
a. 8
b. 9
c. 10
d. 11

Q19. One difference between synchronous and asynchronous transmission of data is that synchronous transmission has:
a. fewer errors.
b. smaller frames.
c. greater throughput.
d. frames containing a single byte.