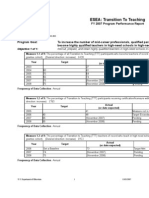

ESEA: State Grants for Innovative Programs

FY 2007 Program Performance Report

Strategic Goal 2

Formula

ESEA, Title V, Part A

Document Year 2007 Appropriation: $99,000

CFDA 84.298: Innovative Education Program Strategies

Program Goal: To support state and local programs that are a continuing source o

Objective 1 of 2: To encourage states to use flexibility authorities in ways that will increase stud

Measure 1.1 of 4: The percentage of districts targeting Title V funds to Department-designated strategic priorities t

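| Year | Target | Actual (or date expected) | Status |
|------|--------|---------------------------|--------|
| 2003 | Set a Baseline | 65 | Target Met |
| 2004 | 68 | 69 | Target Exceeded |
| 2005 | 69 | 69 | Target Met |
| 2006 | 70 | 70 | Target Met |
| 2007 | 71 | (August 2008) | Pending |
| 2008 | 72 | (August 2009) | Pending |
| 2009 | 73 | (August 2010) | Pending |
| 2010 | 74 | (August 2011) | Pending |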

Source. U.S. Department of Education, Consolidated State Performance Report.

Frequency of Data Collection. Annual

Data Quality. The data were more precise for School Year 2005-06. In 2004-05, the Consolidated State Performance Report (CSPR) asked for the number of LEAs that used 20% or more of Title V, Part A funds under each of the 4 strategic priorities separately, and how many of these LEAs met AYP; therefore, a district would be counted multiple times for 2004-05 if it used 20% or more of its program funds for more than one of the 4 strategic priorities. Duplicate counts were eliminated for 2005-06, when the CSPR asked for the number of LEAs that used 85% or more of program funds for the 4 priorities.

Explanation. The standard was raised for 2005-06, when the CSPR asked for the number of LEAs that used 85% or more of program funds for the 4 priorities combined (instead of the number of LEAs that used 20% or more of program funds under each of the 4 strategic priorities separately, as in 2004-05), and how many of these LEAs met AYP. Strategic priorities include those activities that (1) support student achievement and enhance reading and math, (2) improve the quality of teachers, (3) ensure that schools are safe and drug free, and (4) promote access for all students. Activities authorized under Section 5131 of the ESEA that are included in the four strategic priorities are 1-5, 7-9, 12, 14-17, 19-20, 22, and 25-27. Authorized activities that are not included in the four strategic priorities are 6, 10-11, 13, 18, 21, and 23-24.
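The effect of the change in counting rules can be illustrated with a short sketch. The LEA names, spending shares, and function names below are hypothetical and are not drawn from the CSPR; the sketch only contrasts a per-priority tally at a 20% threshold with a single combined tally at an 85% threshold.

```python
# Hypothetical illustration of the two counting rules; no figures here come from the CSPR.
PRIORITIES = ("achievement", "teachers", "safe_schools", "access")


def count_per_priority(leas, threshold_pct=20):
    """2004-05 style: an LEA is tallied once for EACH priority at or above the threshold,
    so one LEA can appear several times in the total."""
    return sum(
        1
        for shares in leas.values()
        for priority in PRIORITIES
        if shares.get(priority, 0) >= threshold_pct
    )


def count_combined(leas, threshold_pct=85):
    """2005-06 style: an LEA is tallied at most once, if its combined share
    across the four priorities is at or above the threshold."""
    return sum(
        1
        for shares in leas.values()
        if sum(shares.get(priority, 0) for priority in PRIORITIES) >= threshold_pct
    )


# Percent of each LEA's program funds by category (hypothetical values).
leas = {
    "LEA A": {"achievement": 50, "teachers": 40, "other": 10},
    "LEA B": {"achievement": 30, "other": 70},
    "LEA C": {"achievement": 25, "teachers": 25, "safe_schools": 25, "access": 25},
}

per_priority_total = count_per_priority(leas)  # LEA A twice, LEA B once, LEA C four times
combined_total = count_combined(leas)          # only LEA A and LEA C qualify, once each
print(per_priority_total, combined_total)
```

Under these assumed shares, three LEAs produce seven tallies under the per-priority rule but only two under the combined rule, which is why the two years' counts are not directly comparable.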

Measure 1.2 of 4: The percentage of districts not targeting Title V funds that achieve AYP. (Desired direction: incr

| Year | Target | Actual (or date expected) | Status |
|------|--------|---------------------------|--------|
| 2003 | Set a Baseline | 55 | Target Met |
| 2004 | 58 | 49 | Did Not Meet Target |
| 2005 | 59 | 54 | Made Progress F |
| 2006 | 60 | 78 | Target Exceeded |
| 2007 | 61 | (August 2008) | Pending |
| 2008 | 62 | (August 2009) | Pending |
| 2009 | 63 | (August 2010) | Pending |
| 2010 | 64 | (August 2011) | Pending |

U.S. Department of Education 1 11/02/2007

Source. U.S. Department of Education, Consolidated State Performance Report.

Frequency of Data Collection. Annual

Data Quality. Data were reported by the States.

Explanation. For 2005-06, the standard was raised when the CSPR asked for the number of LEAs that did not use 85% or more of Title V, Part A funds for the 4 strategic priorities (instead of the number of LEAs that did not use 20% or more of program funds for any of the 4 strategic priorities, as in 2004-05), and how many of these LEAs met AYP. Strategic priorities include those activities that (1) support student achievement and enhance reading and math, (2) improve the quality of teachers, (3) ensure that schools are safe and drug free, and (4) promote access for all students. Activities authorized under Section 5131 of the ESEA that are included in the four strategic priorities are 1-5, 7-9, 12, 14-17, 19-20, 22, and 25-27. Authorized activities that are not included in the four strategic priorities are 6, 10-11, 13, 18, 21, and 23-24.

Measure 1.3 of 4: The percentage of combined funds that districts use for the four Department-designated strateg

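| Year | Target | Actual (or date expected) | Status |
|------|--------|---------------------------|--------|
| 2005 | Set a Baseline | 91 | Target Met |
| 2006 | 92 | Not Collected | Not Collected |
| 2007 | 93 | (August 2008) | Pending |
| 2008 | 94 | (August 2009) | Pending |
| 2009 | 95 | (August 2010) | Pending |
| 2010 | 96 | (August 2011) | Pending |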

Source. U.S. Department of Education, Consolidated State Performance Report.

Frequency of Data Collection. Annual

Explanation. To improve the precision of the data, the Consolidated State Performance Report (CSPR) asked States to report actual program dollar amounts that LEAs spent on the four strategic priorities for 2005-06, instead of the percentage as in 2004-05. However, the percentage for 2005-06 could not be calculated, because figures for the denominator (total amount of program funds spent by LEAs) were not reliable. To reduce burden on States and LEAs, the Department did not ask for this total on the CSPR for 2005-06, but instead calculated the total Title V, Part A funds spent by the LEAs as 85 percent of the State allocation plus or minus the amount transferred under the State Transferability authority of section 6123(a). Unfortunately, using this calculation as the denominator yielded percentages exceeding 100% for 18 States, suggesting that carry-over may be an important factor not addressed in this calculation. For this reason, the Department has requested OMB's approval to ask for the total Title V, Part A funds spent by the LEAs in the CSPR for 2006-07 and future years.
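The denominator problem described above can be sketched with a small calculation. The dollar amounts and function names are hypothetical, and the sketch assumes that the transfer amount is added to or subtracted from 85 percent of the allocation (rather than from the allocation before the 85 percent is taken); the report does not specify the order.

```python
# Hypothetical sketch of the estimated denominator; all dollar figures are invented.

def estimated_lea_spending(state_allocation, net_transfer=0.0, lea_share=0.85):
    """Estimated total LEA spending: 85 percent of the State allocation,
    plus (positive) or minus (negative) the net amount transferred.
    Assumption: the transfer is applied after taking the 85 percent share."""
    return lea_share * state_allocation + net_transfer


def percent_on_priorities(priority_dollars, state_allocation, net_transfer=0.0):
    """Reported priority spending as a percentage of the estimated denominator."""
    return 100.0 * priority_dollars / estimated_lea_spending(state_allocation, net_transfer)


# A State allocated $1,000,000 with no transfers has an estimated denominator of $850,000.
# If its LEAs report $900,000 spent on the four priorities (for example, because some
# prior-year carry-over was also spent), the computed percentage exceeds 100.
pct = percent_on_priorities(priority_dollars=900_000, state_allocation=1_000_000)
print(round(pct, 1))
```

Because the estimate is built only from the current-year allocation, any spending of carried-over funds inflates the numerator without a matching increase in the denominator.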

Measure 1.4 of 4: The percentage of participating LEAs that complete a credible needs assessment. (Desired dir

| Year | Target | Actual (or date expected) | Status |
|------|--------|---------------------------|--------|
| 2005 | Set a Baseline | 100 | Target Met |
| 2006 | 100 | 100 | Target Met |
| 2007 | 100 | (August 2008) | Pending |
| 2008 | 100 | (August 2009) | Pending |
| 2009 | 100 | (August 2010) | Pending |
| 2010 | 100 | (August 2011) | Pending |

Source. U.S. Department of Education, Consolidated State Performance Report.

Frequency of Data Collection. Annual

Data Quality. States review LEAs' needs assessments when monitoring. The Department asks States to submit examples of needs assessments from their LEAs. To improve the precision of the data for 2005-06, the CSPR asked for the actual number of LEAs that completed needs assessments that the State determined to be credible, compared to 2004-05 when the CSPR asked for the percentage of LEAs. The response rate also improved: 4 states did not collect these data for 2005-06, compared to 7 states the previous year.

Explanation. The median across States is 100. Thirty-seven States reported that 100% of their LEAs completed credible needs assessments. Of the rest, 4 States reported that 90-99% of their LEAs completed credible needs assessments, 2 States reported 62%, and the remaining 5 States reported 81%, 74%, 45%, 32%, and 4%.
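A brief check shows why the median is 100 regardless of the exact values in the 90-99% band. The report gives only the band for those 4 States, so the sketch below substitutes a placeholder value (an assumption) at each end of the band; the helper name is hypothetical.

```python
from statistics import median


def reported_percentages(band_value):
    """State-level percentages as described in the Explanation.
    band_value stands in for the 4 States reported only as '90-99%' (an assumption)."""
    return [100] * 37 + [band_value] * 4 + [62] * 2 + [81, 74, 45, 32, 4]


low = reported_percentages(90)   # band at its lower bound
high = reported_percentages(99)  # band at its upper bound

# 48 States reported; 37 of them are at 100, so both middle values of the
# sorted list are 100 whatever the band value is.
print(len(low), median(low), median(high))
```

With 37 of 48 reporting States at 100%, more than half of the distribution sits at the maximum, so the median does not reflect the handful of States with much lower rates.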

Objective 2 of 2: Improve the operational efficiency of the program

Measure 2.1 of 2:

The number of days it takes the Department to send a monitoring report to States after monitoring visits (both on-si

(Desired direction: decrease) 1883

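| Year | Target | Actual (or date expected) | Status |
|------|--------|---------------------------|--------|
| 2006 | Set a Baseline | Not Collected | Not Collected |
| 2007 | Set a Baseline | 56 | Target Met |
| 2008 | 51 | (September 2008) | Pending |
| 2009 | 48 | (September 2009) | Pending |
| 2010 | 45 | (September 2010) | Pending |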

Source. U.S. Department of Education, Office of Elementary and Secondary Education, State Grants for Innovative Programs, program office records.

Frequency of Data Collection. Annual

Target Context. In FY 2006, the program office developed and began a series of innovative, virtual site visits using videoconferencing to gather the comprehensive information needed for multiple programs at a significantly lower cost and greater efficiency than traditional on-site visits. Because FY 2006 was a developmental year for virtual site visits and follow-up activities, the new site visit protocol and monitoring report template were completed in FY 2007, and FY 2007 data are used to establish the baseline.

Explanation. By August 31, 2007, ED had completed reports for 3 of the 10 site visits conducted in FY 2007. In FY 2007, ED also completed reports for 4 of the 7 site visits conducted in FY 2006. It took ED an average of 56 days to send the 3 reports from FY 2007 site visits, compared to an average of 258 days to send the 4 reports from FY 2006 site visits. None of the monitoring reports was sent to States within 45 days after the site visit. Challenges included: developing a new site visit protocol and coordinating specific input from 5 programs to plan each site visit, working with a new technology delivery system and trouble-shooting videoconferencing technology problems, creating a template and integrating information from the 5 programs into each comprehensive monitoring report, following up with the State to obtain additional information before finalizing each monitoring report, and preparing monitoring reports using the new template for those site visits conducted in FY 2006 at the same time that we were moving forward with the new FY 2007 site visits. Because of advances achieved in FY 2007, the Department anticipates continued improvement in reducing the time it takes to send the monitoring reports to States until we reach the goal of 45 days.

Measure 2.2 of 2:

The number of days it takes States to respond satisfactorily to findings in the monitoring reports.

(Desired direction: decrease) 1882

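| Year | Target | Actual (or date expected) | Status |
|------|--------|---------------------------|--------|
| 2006 | Set a Baseline | Not Collected | Not Collected |
| 2007 | Set a Baseline | 28 | Target Met |
| 2008 | 30 | (September 2008) | Pending |
| 2009 | 30 | (September 2009) | Pending |
| 2010 | 30 | (September 2010) | Pending |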

Source. U.S. Department of Education, Office of Elementary and Secondary Education, State Grants for Innovative Programs, program office records.

Frequency of Data Collection. Annual

Target Context. In FY 2006, the program office developed and began a series of innovative, virtual site visits using videoconferencing to gather the comprehensive information needed for multiple programs at a significantly lower cost and greater efficiency than traditional on-site visits. Because FY 2006 was a developmental year for virtual site visits and follow-up activities, the new site visit protocol and monitoring report template were completed in FY 2007, and FY 2007 data are used to establish the baseline.

Explanation. By August 31, 2007, States had responded to 5 of the 7 monitoring reports that ED sent in FY 2007. It took States an average of 28 days to respond. Four States met the 30-day target, and one State responded in 32 days.
