This article was downloaded by: [University of Navarra] On: 22 November 2012, At: 07:53 Publisher: Routledge Informa

Ltd Registered in England and Wales Registered Number: 1072954 Registered office: Mortimer House, 37-41 Mortimer Street, London W1T 3JH, UK

Information, Communication & Society

Publication details, including instructions for authors and subscription information: http://www.tandfonline.com/loi/rics20

ETHICS, REGULATION AND THE NEW ARTIFICIAL INTELLIGENCE, PART I: ACCOUNTABILITY AND POWER

Perri 6 Version of record first published: 07 Dec 2010.

To cite this article: Perri 6 (2001): ETHICS, REGULATION AND THE NEW ARTIFICIAL INTELLIGENCE, PART I: ACCOUNTABILITY AND POWER, Information, Communication & Society, 4:2, 199-229 To link to this article: http://dx.doi.org/10.1080/713768525

PLEASE SCROLL DOWN FOR ARTICLE Full terms and conditions of use: http://www.tandfonline.com/page/terms-and-conditions This article may be used for research, teaching, and private study purposes. Any substantial or systematic reproduction,

redistribution, reselling, loan, sub-licensing, systematic supply, or distribution in any form to anyone is expressly forbidden. The publisher does not give any warranty express or implied or make any representation that the contents will be complete or accurate or up to date. The accuracy of any instructions, formulae, and drug doses should be independently verified with primary sources. The publisher shall not be liable for any loss, actions, claims, proceedings, demand, or costs or damages whatsoever or howsoever caused arising directly or indirectly in connection with or arising out of the use of this material.

Downloaded by [University of Navarra] at 07:53 22 November 2012

Information, Communication & Society 4:2 2001

199–229

ETHICS, REGULATION AND THE NEW ARTIFICIAL INTELLIGENCE, PART I: ACCOUNTABILITY AND POWER

Perri 6

King's College, London, UK

Abstract


A generation ago, there was a major debate about the social and ethical implications of artificial intelligence (AI). Interest in that debate waned from the late 1980s. However, both patterns of public risk perception and new technological developments suggest that it is time to re-open that debate. The important issues about AI arise in connection with the prospect of robotic and digital agent systems taking socially significant decisions autonomously. Now that this is possible, the key concerns are about which decisions should and which should not be delegated to machines; issues of regulation in the broad sense, covering everything from consumer information through codes of professional ethics for designers to statutory controls; issues of design responsibility; and problems of liability.

Keywords

artificial intelligence, robotics, digital agents, technological risk, ethics, regulation, accountability

This is the first of a two-part series of articles concerned with the social, ethical and public policy challenges raised by the new AI. The article begins with a discussion of the popular and expert fears associated down the ages with the ambition of AI. The article uses a neo-Durkheimian theoretical framework: for reasons of space, the interested reader is referred to other works for a full explanation of the theoretical basis of this approach. The argument is presented that the common and enduring theme of an AI takeover arising from the autonomous decision-making capability of AI systems, most recently resurrected by Professor Kevin Warwick, is misplaced. However, there are some genuine practical issues in the accountability of AI systems that must be addressed, and in the second half of the article, ethical issues of design, management and translation are addressed.

Information, Communication & Society ISSN 1369-118X print/ISSN 1468-4462 online 2001 Taylor & Francis Ltd http://www.tandf.co.uk/journals DOI: 10.1080/13691180110044461

PERRI

ARTIFICIAL INTELLIGENCE AS AN ETHICAL AND REGULATORY ISSUE

It is twenty-five years since the first edition of Joseph Weizenbaum's (1984 [1976]) Computer Power and Human Reason: From Judgment to Calculation appeared, questioning the ethics of some of the ambitions of the artificial intelligence (AI) community at that time. Weizenbaum feared that AI advocates, whatever the practical achievements of their programmes and tools, might effect an uncivilizing change, and that they might persuade people that problems which call for complex, humane, ethical and reflective judgment should be reduced to crude optimization upon a small number of variables. Such arguments resonated with the resurgence of much older fears during the second half of the 1970s through to the later 1980s. In the same years, Habermas (1989), in writing about his alarm about what he called the 'colonization of the life-world' by instrumental reason,1 was breathing new life into Weber's (1976) fear, in the first two decades of the twentieth century, about the coming of the 'iron cage' of reason. Fears about the dangers of intelligent technology long pre-date Mary Shelley's Frankenstein, or even Rabbi Loeuw's Golem, and they continue to recur at various points in our social history (Winner 1977).

Fears about AI cluster around several themes, of which three are of central importance. In the extreme variant, which will be discussed in this first article, the fear is of AI as all-powerful, or as the tyrant out of control. In this first article (in a two-part series), I argue that this fear is vastly overstated. However, I suggest that it does raise important issues of both design and regulation for the accountability of AI systems.

Secondly, the opposite fear of AI as highly vulnerable is also evident. This is the fear that we shall find these systems so benign and so reliable that we shall become routinely dependent upon their operations, with the result that any systemic failure (whether resulting from natural causes, from technical weaknesses, from the incomprehensibility of the labyrinth of inherited systems making repair almost impossible, as was expected by some to be the case with the so-called 'millennium bug', or from malevolent human-guided attack by information warfare) could bring our society to its knees, just as completely as denial of fossil fuels has threatened to at various times.

Thirdly, there are Weizenbaum's fears that, first, we shall allocate categories of decisions to artificially intelligent systems, resulting both in the degradation of values and in damaging outcomes, because in many areas of life we do not want decisions to be made simply by optimization upon particular variables, and, secondly, that as a result of using AI to do so, both human capabilities of, and the social sense of moral responsibility for, making judgment will be corroded.


ETHICS, REGULATION & NEW ARTIFICIAL INTELLIGENCE

The aspiration of AI has always raised these fears, because it promises (or threatens, depending on one's point of view) decision making that is autonomous of detailed control by named human beings with specific oversight roles and responsibilities.

Perhaps it is surprising that popular anxiety about AI has been rather subdued during the 1990s, because that decade was one in which developed societies went through panics about many other technological risks, ranging from the great anxiety in Sweden and Germany about civil nuclear power to the British alarm about food biotechnology. This has been so even during a period when Hollywood has presented us with many film versions of the enduring fears about AI (from 2001: A Space Odyssey, Bladerunner and The Terminator through to the more recent The Matrix, and so on). Indeed, if fears were simply a response to actual technological development, then one would have expected popular anxiety levels about AI to have grown during the 1990s, given the flood of popular science material about developments in the last ten or fifteen years in such fields as robotics, neural net systems (Dennett 1998; Kaku 1998; Warwick 1998), digital agent systems (Barrett 1998), artificial life modelling (Ward 1999), virtual reality modelling (Woolley 1992), modelling the less crudely calculative activities of mental life (Jeffery 1999), and the burgeoning and increasingly intelligent armamentarium of information warfare (Schwartau 1996). Writers within the AI community such as Warwick (1998) have specifically tried to stir up anxiety (while other writers have tried to excite euphoria, e.g. Kurzweil 1999) about the idea of a takeover of power by AI systems, but they have had little popular impact by comparison with the series of food scares, panics about large system technologies and urban pollution, etc. Serious considerations of the ethics of AI such as the work of Whitby (1988, 1996) have attracted little attention during the 1990s, not only within the AI community but also among wider publics.

The key to understanding these surprising cycles in concern is to recognize that social fears are patently not simply based upon the calculation of probabilities based upon evidence. Rather, they reflect the shifting patterns of social organization and the worldviews thrown up by rival forms of social organization, each of which pins its basic concerns on the technologies that are to hand.2 At some point in the near future, however, AI is likely once again to be the focus of attention. Technological developments alone are not the principal driver of such dynamics of social fear. Rather, technologies attract anxiety when they are construed3 as violating, blurring or straddling basic conceptual categories of importance to certain styles of social organization, producing things seen as anomalous (Douglas 1966, 1992). For example, biotechnology arouses the anxiety of some because it blurs the boundary (blurred in practice for millennia, but readily construed as sharp) between the natural and the artificial. The


categorical blurring in AI is that between tool and agent. A tool is supposed to be dependent on human action, whereas one enters into an agreement with someone to become an agent precisely because the person has the capacity to act and make judgments independently. Groups within societies grow anxious about such boundaries, not necessarily when particular technologies actually threaten them, but when those boundaries feel most under threat generally. When people are concerned about the integrity of their capacity for effective agency, things that seem to invade the space of human agency will seem most threatening. We live at a time when many themes in popular and even social science discourse are about the loss of agency: a 'runaway world' of vast global forces (Giddens 1999), a 'risk society' where humans can expect to do little more than survive amid vast and dangerous forces (Beck 1992). Technologies are the natural peg on which to hang such larger social anxieties.

Therefore, in thinking about the ethical, social, political and regulatory issues raised by recent breakthroughs in artificial intelligence, we need to think not only about technological prospects but also about the social dynamics of fear and the forms of social organization that people promote through the implicit and unintended mobilization of fears.4 This is necessary if we are to attempt anticipation, rather than simply to rely on resilience or on having enough resources to cope with problems as they occur.

The point here is certainly not that there are no risks, or that all technological dangers and failures are imaginary. In many cases, and in that of AI, that would be, as Douglas (1990: 8) put it, 'a bad joke'. As Weizenbaum (1984 [1976]) argued a quarter of a century ago, complex and humane decisions must be made using judgment (6 2001a), and there are decisions that, even if they soon can be, should not be delegated to AI systems. Rather, the point is that a political response to popular risk perception is at least as important as the fine calibration of technocratic response to expert-perceived risk, and it is more a process of conciliation between rival forms of social organization than a technocratic exercise in the optimization of a few variables on continuously differentiable linear scales.

Humans are not endlessly inventive in the basic forms of social organization. This article uses the classification developed by the neo-Durkheimian tradition in the sociology of knowledge over the last thirty-five years. The basic argument is that each of the different forms of social organization produces a distinct worldview, each with its distinct pattern of fear or risk perception. This is summarized in a heuristic device. The device cross-tabulates Durkheim's (1951 [1897]) dimensions of social regulation and social integration to yield four basic types of mechanical solidarity: namely, hierarchy producing a bureaucratic and managerialist worldview, the isolate structure yielding a fatalist worldview,


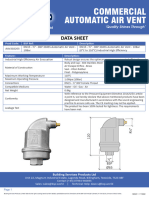

individualism producing an entrepreneurial worldview, and egalitarian sect or enclave producing a chiliastic worldview. Lacking space here to explain the theory in further detail or to offer evidence for it, I refer the reader to the following key texts: Douglas (1970, 1982, 1986, 1990, 1992, 1994, 1996), Fardon (1999), Adams (1995), Gross and Rayner (1985), Rayner (1992), Schwarz and Thompson (1990), Thompson et al. (1990), 6 (1998, 1999a, 1999b), Ellis and Thompson (1997), Coyle and Ellis (1993) and Mars (1982). However, Figure 1 presents the heuristic device in a form that displays the relationships between institutional styles of social organization or solidarities and worldviews which highlight certain ways of attaching fear and reassurance to technologies, and the anomalies in systems of classification (e.g. between nature and artifice, or tool and agent) that new technologies can come to represent.

Each of these institutional solidarities produces a distinct cognitive bias in how risks, such as those attached to AI, are perceived. Hierarchy worries that technologies will run out of the control of systems of management, and calls for more regulation to tie them down. Individualism fears that large-scale technologies will undermine the entrepreneurial capacity of individuals, and calls for deregulation and market-based instruments in the governance of risk. Egalitarianism fears that cold technologies will dominate or undermine the warm moral community, and calls for sacred spaces freed from technological development. By contrast, fatalism sees technologies randomly failing, merely exacerbating the arbitrariness, randomness and noise of an already picaresque social life. Robots, neural nets and digital agents take their appointed places in this eternal social system of fears, in which the rival forms of social organization attempt to hold people, technologies and systems to account to their various basic values.

There is no Archimedean point available, where we might stand to move beyond these solidarities and tropes. Rather, in the ethics and political institutions by which we exercise governance over technological risk, we have little choice but to give some but not excessive recognition to each solidarity, in more or less unstable settlements between them. Central, therefore, to the present argument is the necessity for social viability in such settlements, however temporary or fragile. This is best achieved when policy arrangements give some recognition or some articulation to each of the four basic solidarities: in short, when they promote what Durkheim (1984 [1893]) called 'organic solidarity'. Institutions of ethics and governance of any technology will prove unviable if any of the four mechanical solidarities is disorganized beyond a point, although that point is not typically determinable with any exactitude in advance. These strategies will be best pursued by what Thompson (1997a, b, c) calls 'clumsy institutions'.


Grid (vertical axis): social regulation. Strong social regulation (top): social relations are conceived as if they were principally involuntary; tragic view of society. Weak social regulation (bottom): social relations are conceived as if they were principally voluntary; heroic view of society.

Group (horizontal axis): social integration. Weak social integration (left): individual autonomy should not always be held to account. Strong social integration (right): individual autonomy should be held accountable.

Fatalism / isolate (strong social regulation, weak social integration)
All systems are capricious.
Social structure: isolate; casual ties.
Value stance: personal withdrawal (e.g. from others, social order, institutions), eclectic values.
World view: fatalism (bottom of society) / despotism (top of society).
View of natural and artificial: Nature is capricious; the artificial is little better (what's the difference, anyway?).
Response to hybrids: Only to be expected.

Hierarchy / central community (strong social regulation, strong social integration)
Regulated systems are necessary: unregulated systems need management and deliberate action to give them stability and structure.
Social structure: central community, controlled and managed network.
Value stance: affirmation (e.g. of social values, social order, institutions) by rule-following and strong incorporation of individuals in social order.
World view: hierarchy.
View of natural and artificial: Nature is tolerant up to a point, but tends to be perverse unless carefully managed; the role of the artificial is to give structure, stability and regulation to nature.
Response to hybrids: Anomalies should be managed, regulated, controlled.

Individualism / openness (weak social regulation, weak social integration)
Regulated systems are unnecessary or harmful: effective system emerges spontaneously from individual action.
Social structure: individualism, markets, openness.
Value stance: affirmation (e.g. of social values, social order, institutions) by personal entrepreneurial initiative.
World view: libertarian.
View of natural and artificial: Nature is benign, a cornucopia, for individuals to make the most of; the artificial should be allowed free rein.
Response to hybrids: Hybridity and anomaly is the desirable and welcome outcome of human lateral thinking, inventiveness and enterprise.

Egalitarianism / enclave (weak social regulation, strong social integration)
Regulated systems are oppressive, except when they protect.
Social network structure: enclave, sect, inward-looking.
Value stance: collective withdrawal (e.g. from perceived 'mainstream'), dissidence, principled dissent.
World view: egalitarian.
View of natural and artificial: Nature is fragile, and people must tread lightly upon the earth; the artificial is the source of defilement, pollution, danger and oppression, except when used strictly for protection.
Response to hybrids: Anomalies and hybrids are a sign of defilement and pollution, and must be stopped, prevented, eliminated.

Figure 1 How institutional styles of social organization produce worldviews and styles of fear and reassurance about technologies. Source: 6 (1999a, b, adapting Douglas 1982, 1992); Thompson et al. (1990); Schwarz and Thompson (1990). For development of the heuristic, see Fardon (1999: ch. 10).


Clumsiness has certain merits over sleekness: being cumbersome enough to give ground to each bias allows for an ongoing negotiation. It is this theoretical argument that provides the underpinning for the approach taken, in these two articles, to risk perception and response in connection with AI.

This first article explores the limitations of the enduring theme that autonomous artificially intelligent systems represent a threat to the autonomy and power of human beings, which is grounded in the fear that we shall not be able to hold AI to account to our interests. The second article develops and complicates the concept of autonomous decision making by AI systems and examines in more detail the moral and public policy requirements of developing clumsy ethical and regulatory institutions; but for the present purpose, a sophisticated model of machine autonomy is not required. The next section presents a brief review of that theme, before considering its two central weaknesses: its flawed understanding of the relationship between intelligence and functional advantage, and its misunderstanding of collective action and power. The second half of this article examines the practical issues of what accountability for AI systems might mean.

THE TROPE OF THE ARTIFICIALLY INTELLIGENT TYRANT

It seems to be one of western society's recurring nightmares that intelligent artefacts will dominate or destroy their creators or the humans who come to depend upon them, and that humans generally will eventually become an embattled enclave struggling for mastery against their own creation. In fiction and fantasy, from The Golem and Mary Shelley's Frankenstein to films such as Ridley Scott's Bladerunner or Stanley Kubrick's 2001: A Space Odyssey and the more recent film The Matrix, and now in such popular science texts as Professor Kevin Warwick's In the Mind of the Machine, an apocalyptic future is offered. The trope running through these works is that autonomous artificially intelligent machines would (because of the combination of their greater intelligence than human beings, their capacity to accumulate intelligence over digital networks and their development of independent interests of their own) work together gradually or suddenly to seize power from human beings.

The trope offers each of the solidarities and their biases an opportunity to set out their fears and aspirations. For individualism, it offers the chance to show humans winning against all the odds (2001). For hierarchy, the trope takes the tragic form in which the intelligent artefact destroys order because the human creator has violated central norms (Frankenstein), or else the still more


tragic form, in which humans lose their own humanity (the ending of Bladerunner: The Director's Cut). For egalitarianism, the trope is one in which the creation of artificial intelligence results in the loss of individual accountability to human community and only with its regaining can humans triumph (many versions of The Golem). For fatalism, there is little that can be done because the technological achievement in itself determines the outcome, and the genie cannot be put back into a human bottle (which is Professor Warwick's tale).

Is any version of the story plausible, interesting or ethically important, or is it in every variant a vehicle for the claims of the basic biases that should be taken metaphorically rather than literally? As a serious risk assessment about a plausible future, I shall argue, the doomsday scenario need not detain us long. But it does at least serve to raise more practical and mundane issues of control and accountability of machines in the management of risk, which do deserve some practical attention.

INTELLIGENCE, JUDGMENT AND AI

The first stage in the construction of the trope is made explicit in Warwick's (1998) argument. He asserts that AI systems will be so intelligent that they will functionally outperform human beings in the same ecological niche that human beings now occupy, and that they will do so through exercising domination over human beings. Much of his argument in support of this claim is directed to the comparative claim that AI systems will be more intelligent than human beings. In this section, I shall argue that even to the extent that this might well be true, it does not suffice to support his conclusion. I shall argue that the comparison in intelligence is not the relevant consideration in determining either functional superiority in decision making, or more widely, competitive advantage in any characterization of the ecological niche(s) that human beings occupy.

Warwick considers that it is beside the point to respond to his argument by asking him to explain what is meant by intelligence in this context, on the grounds that sooner or later, machines are able to meet any standards we might set (1998: 170). That may be true, but Warwick seems only to be interested in answering those arguments that start by claiming some special characteristic or function for human brains that AI systems cannot possess or replicate (Warwick 1998: ch. 8). The more important arguments against his position do not rely on any such assumption. Rather, I shall argue in this section that, even if AI systems are more rational, more intelligent and better informed than human brains are, it does not follow that the consequences of their decisions will necessarily be superior. The first problem with the trope, then, is the assumption that functional


superiority is the product of intelligence. When AI systems are equipped with other capacities, I shall argue, the whole issue disappears as a problem.

Intelligence shows itself, although it certainly involves much else besides, in decision-making capacity. At least in the ideal model, in almost any area of decision making, the following standard stages must be gone through to come to a decision:5

Allocating attention to problems: attention, for any decision maker, is a scarce resource, and its allocation between competing candidate priority problems is a constant problem.
Diagnosis: classifying and identifying the structure and nature of the problems or conditions presented by particular individuals or groups, and the balance of probabilities between various alternative diagnoses.
Risk appraisal: judging the nature, severity and probability of risks presented by the case.
Action set: identifying the optimal, normal or feasible range of responses to those conditions from the viewpoint of the person or organization on behalf of whom or which the intelligent machine is undertaking the decision, or from the interest from which the decision type is modelled.
Choice considerations: appraising the balance of advantages and disadvantages associated, in cases of the type under which the diagnosis has classified the present situation, with each element in the action set.
Preference ranking or decision protocol: some weighting or ranking of considerations for and against each element in the action set; and either
Recommending action and, if appropriate, taking action: identifying the option that is on balance preferred or, where two or more elements in the action set are equally highly preferred, making an arbitrary choice; or else
Decision remittal: remitting the final decision to a human being to make.

One way to question Warwick's claim is to ask: are there limits to the extent to which improvements can be made in the quality of decisions at each of these stages?
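The last three stages listed above (preference ranking, recommendation and remittal) can be sketched in a few lines of Python. This is only an illustrative sketch, not anything proposed in the article: the `Option` class, the 0-10 benefit and harm scores, the `harm_weight` parameter and the three-option action set are all hypothetical. The point it tries to show is that the outcome depends on how harms are weighted against benefits, which is a value choice made before any computation starts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One element of the action set, with hypothetical 0-10 scores."""
    name: str
    benefit: float  # assumed score for the advantages of this option
    harm: float     # assumed score for its disadvantages


def rank_options(options, harm_weight):
    """Preference ranking: net score of each option, best first."""
    scored = [(o.benefit - harm_weight * o.harm, o.name) for o in options]
    return sorted(scored, reverse=True)


def decide(options, harm_weight):
    """Recommend the preferred option, or remit to a human on a tie."""
    ranked = rank_options(options, harm_weight)
    best_score = ranked[0][0]
    tied = sorted(name for score, name in ranked if score == best_score)
    if len(tied) > 1:
        # Decision remittal: equally preferred options go to a person.
        return ("remit_to_human", tied)
    return ("recommend", tied[0])


# Hypothetical action set for a single case.
ACTION_SET = [
    Option("treat", benefit=8, harm=3),
    Option("wait", benefit=5, harm=1),
    Option("refer", benefit=6, harm=2),
]

if __name__ == "__main__":
    for weight in (1, 1.5, 2):
        print(weight, decide(ACTION_SET, weight))
```

With these made-up scores, weighting harm equally with benefit recommends treatment, doubling the weight on harm recommends waiting, and an intermediate weight of 1.5 leaves the two tied, so the sketch remits the case. Nothing in the code can say which weight is the right one.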
To make the matter a little more concrete using an example from AI already in use, consider a neural-net-based system making medical decisions about diagnosis and treatment for humans. The quality of decision making is a less stable virtue than decision theory positivists would have us believe. Consider the stage of diagnosis. It is a well-known finding in modelling that any statistical procedure that is designed, when analysing modest numbers of cases, to minimize false positives will run the risk of high levels of false negatives (more generally, Type I and Type II errors, or


those of wrongly rejecting or accepting a given hypothesis), and vice versa, which often decreases significantly the statistical confidence one can have in results (Cranor 1988, 1990, cited in Hollander 1991: 167).

Moreover, judgement cannot be eliminated from diagnosis. Suppose, for example, that the evidence before the artificially intelligent autonomous decision maker means that a null hypothesis cannot be ruled out: for example, that we cannot rule out the possibility that the rate at which the level of antibodies has fallen, which is observed in a person's bloodstream, is no greater than would be likely if they were not in fact ill. This does not entitle any human or any machine to draw the conclusion that the person is well. At most it gives some information to be taken into account in the making of a judgement, which will typically not reach the 95% confidence level that statisticians typically rely upon as a rule of thumb (Hollander 1991: 167; Mayo 1991: 271-2). Indeed, this may suffice to shift the onus of argument. However, from not-p, one cannot conclude that q, even if q describes the direct or diametric opposite case from p. The artificially intelligent system is, for reasons that lie in the nature of inference under limited information and uncertainty, no more likely than a careful and statistically literate human to draw a sensible judgement on the basis of such evidence. And most decisions have to be made in conditions of limited availability of information, however extensively networked the decision maker is with other potential informants.

It follows that the assumption is probably wrong that increasing the computational or crude cognitive or even the learning power of artificially intelligent systems amounts to increasing their decisional intelligence to the point where they are intrinsically more intelligent decision makers than humans in respect of the quality of decisions.
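The trade-off between false positives and false negatives mentioned above can be made concrete with a small numerical sketch. The figures are assumptions chosen purely for illustration: a diagnostic measurement is taken to be normally distributed with mean 0 in healthy people and mean 1.5 in ill people (standard deviation 1 in both), and a case is called 'ill' when the measurement exceeds a threshold. The sketch uses `statistics.NormalDist` from the Python standard library.

```python
from statistics import NormalDist

# Hypothetical distributions of one diagnostic measurement.
HEALTHY = NormalDist(mu=0.0, sigma=1.0)  # assumed: people who are well
ILL = NormalDist(mu=1.5, sigma=1.0)      # assumed: people who are ill


def error_rates(threshold):
    """Return (false positive rate, false negative rate) for a rule
    that diagnoses 'ill' when the measurement exceeds the threshold."""
    false_positive = 1.0 - HEALTHY.cdf(threshold)  # well, but flagged
    false_negative = ILL.cdf(threshold)            # ill, but missed
    return false_positive, false_negative


if __name__ == "__main__":
    for t in (0.0, 0.75, 1.645, 2.5):
        fp, fn = error_rates(t)
        print(f"threshold={t:5.3f}  false+={fp:.3f}  false-={fn:.3f}")
```

Under these assumptions, a threshold of about 1.645 holds false positives near the conventional 5 per cent but misses more than half of the ill cases, while lowering the threshold reverses the balance. Faster computation does not remove the trade-off; it only moves the decision maker along it, and where to stand on it remains a judgement about which error is worse.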
Of course, AI systems may be able to process more information, make decisions more speedily and perhaps take account of a wider range of considerations and identify more robust decisions than human decision makers can. There are, nevertheless, limits to the range of possible improvement in the reliability of judgment under uncertainty with limited evidence, and there are limits to the range of possible improvement in the trade-off between risks of different basic types of error. The choice of statistical procedures is one that already reflects judgments about what error risks are acceptable, and these are judgments that involve values (Mayo 1991). Those value choices can be modelled, but not eliminated in ways that could be said to constitute an improvement in the quality of intelligence of decision making.

The consideration of AI-conducted decision making or decision support in medical and bio-ethics cases, such as discontinuation of treatment, raises another limitation to the positivist model of decision-making intelligence which will be


morally important. Sometimes in the most dif cult cases in medical ethics, it is necessary to factor into a decision about, for example, whether to continue with a pregnancy when the foetus has been diagnosed as having a currently incurable condition, a judgment about whether at some point in the expected lifetime of the child, if born, a cure might be discovered or at least improved palliation might be developed. The timing of the development of new knowledge appears, as far as we can tell, a genuinely and intrinsically unpredictable variable, and not particularly well related to expenditure or effort on research (Popper 1949). There is no reason to think that even the most intelligent of autonomous machines could make a better guess than any human about the probabilities of future discoveries, or could know better than a human what weight to put in decision making on any guess made. What is shown by this point is that there are limits to the soundness of base of any decision-making procedure, as well as to the possibilities for the elimination of judgment. Next, we need to consider how far arti cially intelligent machines capable of higher technical quality decision making than humans, even within the general limits upon quality of decision, can handle risk assessments, for instance, about individual persons, better than human decision makers could. For example, some psychologists of criminal behaviour claim that it is possible with surprisingly high accuracy to predict which individuals will become delinquent or turn to crime, and, therefore, in principle, to target preventive interventions to at-risk groups of young people. Teachers claim to be able to predict early in the lives of children, with high degrees of accuracy, who will become an educational failure. 
(In practice, of course, much of this tends to be rather dangerous inference from population or sample statistics to individual cases, the ecological fallacy, using rather coarse indicators of individual risk or vulnerabilities that might well, and often do, prove wrong in the individual case.) It is worth considering what our attitude might be, and what the consequences might be, of a proposal to substitute for human psychologists conducting such risk assessments, an artificially intelligent machine supplied with large quantities of profiling data, which has been shown for argument's sake to be accurate in its diagnoses more often than humans to a statistically significant degree. Here, we have the problem already discussed of the moral weight of the consequences of the risk assessment being wrong, just as in the medical ethics cases. But here we also have an additional complicating factor: individual risk assessments have consequences for the behaviour of those assessed, even if they are not told the results of the assessment. Individuals can be stigmatized, and treated in particular ways that reflect the knowledge of, for example, teachers, social workers and police officers that they have been identified as potential troublemakers. In response to the expectations


PERRI

of others, they may actually turn into troublemakers or educational dropouts or substance abusers or whatever. The result is that risk assessment becomes self-fulfilling (Merton 1948). Alternatively, they may be so outraged by the expectations of others, whether or not they know of their assessment, that they behave in the opposite manner. In this case, the assessment is self-confounding. Even if the artificially intelligent machine were to perform the computations on the evidence with greater accuracy than a human being, we would not know (indeed, could not, even in principle, know) whether that was because it was exercising greater cognitive and learning intelligence than human experts do, or whether its prophecies were self-fulfilling in more cases than they were self-confounding, by comparison with those of human risk assessors. Because the interaction between assessment, communication of the assessment within the network of others such as professionals or other intelligent machines, their expectations and behaviour, and the choices, cognition, strategies and behavioural responses of the person assessed forms a non-linear dynamic system, however great the intelligence of the machine, it will not necessarily be producing better decisions.

Technologically, to produce an autonomous artificially intelligent machine is an unprecedented, extraordinary achievement. But intelligence, whatever we mean by it, surely does not comprise all the capabilities that we look for in the making of decisions that will be functional, viable, acceptable and sensible. Perhaps it helps to turn to psychological studies. In one recent study that examined rival definitions of intelligence in that field (Gordon and Lemons 1997: 326-9), a distinction is drawn between lumpers and splitters, according to whether they take intelligence to be a single phenomenon or several only loosely related ones.
For the former, intelligence is "a general unified capacity for acquiring knowledge, reasoning and solving problems" (Weinberg 1989, quoted in Gordon and Lemons 1997). That is, it consists principally in the functions of abstract reasoning and recall. In general, the psychological literature tends to assume (and the mainstream of the artificial intelligence community has typically followed this) that these capacities are sufficient to account for adaptive problem solving by animals including humans. Splitters about intelligence, such as Gardner (1983, Gardner et al. 1997), by contrast, recognize a plural and wider range of capacities including kinetic, spatial, communicative, musical and other context-specific or skill-specific capabilities. Whatever the merits of each of these approaches to definition of the term, intelligence, the key issue is that pattern recognition, abstract reasoning and other such cognitive abilities will not suffice to deal with the challenges of problem structuring and judgment under conditions of uncertainty, for which a much wider set of skills is required, or for solving new


kinds of trust problems in collective action, for which social capabilities of institutional creativity are required, or for many situations in which lateral thought and inventiveness are needed. Improving mere (!) intelligence is not sufficient to produce technically better decisions in the sense of more rational ones, or ones that yield better judgment.

However, the trope of AI as tyrant out of control, in Warwick's version in particular, also requires the claim that the triumph of the machines over human beings will be due to the functional superiority of their intelligence. The relationship between superior technical quality of decision making and functional superiority, or competitive advantage in evolutionary terms over human beings in the same resource space (or niche, in the sense that ecologists use the term), is supposed to be entirely transparent, but as we have seen already, this can hardly be the case. Even if this were to be accepted, the argument can only be made out if we are prepared to assume that AI systems and human beings are indeed, and necessarily have to be, direct competitors in the same ecological niche. This assumption is left entirely unargued in Warwick's treatment. However, even the briefest consideration of the history of technologies suggests that once human beings have developed a technology that serves a certain purpose sufficiently reliably, and once they have developed an institutional framework that provides some more or less provisionally stable settlement of the kinds of conflict over that task that erupt in any given society, then human beings have tended to vacate those ecological niches. Indeed, they tend almost invariably to vacate those economic arenas represented by stable and institutionally acceptably governed technologies, despite the occasionally serious Luddite backlash. Is there something different about AI systems that closes off this well-trodden path?
Warwick would argue that intelligence and autonomy make all the difference. But how could they make this difference? We have already seen that, without more, intelligence alone would not make this difference. Autonomy will be considered in much greater detail in the second article in this series, and there it will be argued that in fact autonomy for AI systems is likely to make sense only in the context of institutional arrangements. But Warwick needs AI autonomy to undermine all human institutions, although he cannot show how or why this would happen. Perhaps Warwick would defend his assumption that AI systems and human beings will be competitors in the same ecological niche with the claim that AI systems will either be completely general problem solvers or else will come under the control (via networking) of other AI systems that are, and that general problem solvers will be able to operate with a measure of freedom across ecological niches comparable to that of human beings. At the very least, Warwick


has not shown this to be the case. As we shall see in the next section, there is nothing about networking in general that automatically gives control to the node that possesses the most general problem structuring, analysis and solving capabilities, or even that solves the well-known collective action problems. Secondly, there is no reason to think that completely general problem-solving tools will be universally preferred. Ecologists know that the degree of generalism or specialism that is more likely to be a successful survival strategy for any organism is very hard to predict, and indeed is genuinely uncertain in advance, because it is hard in complex systems to predict the reactions of other organisms to the strategic choice of the focal organism, which may in turn impact upon the focal organism's strategy, sometimes even forcing it to reverse its initial preference. This is why modelling the effects of the introduction of alien species into environments has proven so intractable. Successful species, such as human beings, ants and many kinds of bacteria, have found that ecological specialization within the species and a closely chained and integrated division of labour is often rather efficient, if institutions can be developed and sustained that make this possible. In the same way, human beings have developed specialist machines that work together in highly specific ways. Autonomous intelligent machines are not necessarily generalists, nor do they necessarily bring about any fundamental change in the economic and ecological drivers that make some specialism and tight, predefined coupling of systems efficient. In practice, therefore, AI systems are likely to be developed that are quite specialized, and to reproduce daughter systems with further specialization.
In turn, there is every reason to think that human beings should be able to use these features, as they have always done, slightly to shift the range of ecological niches that we ourselves occupy so that we are not put out of business by these technologies, any more than we were by previous less intelligent and less autonomous technologies. It follows, then, that even if we were to accept that superiority in intelligence is principally valuable as an important competitive advantage, it does not follow that superior intelligence will automatically produce better decisions or general functional superiority. Bluntly, there are basic limits to what can be achieved with intelligence alone. If, on the other hand, AI systems are developed that have all the other capacities (institutional sensitivities, affective capacities, ethical sensibilities and competencies, etc.) that make for effective functioning in the niche that human beings operate in, then there is no particular issue of superiority. Rather, we should be dealing with persons, and personhood is, by definition, not something in which one person is superior to another, or that represents a competitive advantage. If, at some stage, autonomous thinking machines are developed that are capable of


second-order institutional creativity (for an explanation of this concept, see the second article in this series), then we shall surely have no choice but to regard them as persons in all but matters of full responsibility under institutions, despite the fact that their material structure may not be biotic. But such machines will be more than merely artificially intelligent. Moreover, such advanced AI systems will presumably have to regard themselves as persons. For a capability for and a strategy of taking part in institutions, of engaging in institutional creativity, of producing trust-based environments, and of regarding oneself as a person necessarily involves the acceptance of certain constraints of moral civilization. Among those are duties not to commit certain kinds of wrongs against other persons, including old-fashioned biotic ones like human beings. This will bring about significant changes in their behaviour from those that Warwick seems to expect. Perhaps human beings might resent the emergence of non-biotic persons, and we or our descendants might fail to abide by the duty to extend the circle of those to whom duties are owed; but that is quite another matter. The point is that, if and when machine-persons are produced, or even if they emerge by Lamarckian evolution from AI systems unaided by human intervention after the human production of AI systems that are significantly less than persons, then there is no reason to suppose that such machine-persons would be malevolent, but rather every reason to suppose that they would be no more and no less benign than human persons, who are capable of great evil but also of co-operation and acceptance of moral responsibilities on an awesome scale.
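The earlier argument about self-fulfilling and self-confounding assessments can be made concrete with a small sketch. The Python below is an illustration under stated assumptions, not a model taken from any of the studies cited: the eight-person population, the two assessors and the response rules `no_feedback` and `self_fulfilling` are all hypothetical. It shows how an assessor whose labels track the underlying dispositions less well can nonetheless record the same observed accuracy as a better one, simply because its labels change the behaviour of those labelled.

```python
# Hypothetical illustration: observed accuracy cannot separate
# predictive skill from self-fulfilment.

def no_feedback(would_offend, flagged):
    """The assessed person behaves as they would have done anyway."""
    return would_offend

def self_fulfilling(would_offend, flagged):
    """Anyone flagged as at risk ends up living up to the label."""
    return would_offend or flagged

def observed_accuracy(latent, labels, response):
    """Share of cases in which the label matches the realized outcome."""
    hits = 0
    for would_offend, flagged in zip(latent, labels):
        outcome = response(would_offend, flagged)
        hits += (outcome == flagged)
    return hits / len(latent)

# Assumed dispositions: what each person would do if never assessed.
latent = [True, True, False, False, False, False, False, False]

# Assessor A misses one at-risk person; assessor B also wrongly flags one.
assessor_a = [True, False, False, False, False, False, False, False]
assessor_b = [True, False, True, False, False, False, False, False]

skill_a = observed_accuracy(latent, assessor_a, no_feedback)
skill_b = observed_accuracy(latent, assessor_b, no_feedback)
seen_b = observed_accuracy(latent, assessor_b, self_fulfilling)

print(skill_a, skill_b, seen_b)
```

In this toy case assessor B is less accurate about dispositions than assessor A, yet once its wrongly flagged subject lives up to the label, an outside observer who sees only labels and outcomes records the same score for both.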

COLLECTIVE ACTION AND POWER

The second central problem with the Terminator trope, taken literally, is that it utterly lacks any understanding of collective action and power. Yet it is impossible to make sense of a claim about the likely transfer of power without understanding the nature of power relationships. For this, we require not the tools of the analysis of artificial intelligence, but those of social science. Power, even in the simple and crude form of domination without consent, or tyranny (which is the main conception of power offered by the trope), requires several things of those who seek it and maintain it. The first is collective action. In the trope, this collective action by autonomous artificially intelligent machines is supposed to be achieved straightforwardly by networking and the issuing of commands from one machine to another, and the accumulation of vast intelligence through agglomeration over networks. But collective action is difficult. Those autonomous actors that are equipped only with high degrees of


rational intelligence and nothing much else in fact find it more difficult than the rest of us. For the temptations to free ride, to put other priorities first, and, if the circumstances and the balance of incentives shift against collective action, to defect, are great indeed for purely and only rational and intelligent autonomous agents (Olson 1971). Perhaps machines might overcome these problems, in the ways that human beings do, by developing a shared identity and common culture, around which they achieve social closure (Dunleavy 1991: ch.3). In Warwick's tale, they are expected to do so purely by virtue of their intelligence. But this is utterly unlikely and possibly incoherent. Indeed, the more simply and merely intelligent a system is, the more it is likely to be ready to trade off the limited consolations of identity for the practical benefits of defection from the shared identity. One needs more than intelligence to achieve social closure around identity. Indeed, the only structure that has been identified that can reliably achieve this is a form of social organization, namely solidarity, and to deploy this requires the capacity for institutional creativity in solving trust problems within the defined group, at least as well as cohesive groups ever do, and groups are fissiparous structures by nature. Managing institutional creativity requires capacities such as affective and institutional sensibilities that are more than merely intelligent: they are characteristic of persons. Machine-persons may one day have these capacities, but at that point, most of the interesting questions about artificial intelligence as distinct from any other kind will have disappeared, for they will then be persons operating within and subject to institutions alongside and just like the rest of us.
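The free-rider logic invoked here can be sketched with a standard public goods game. The Python below is a minimal illustration, assuming a hypothetical group of ten agents and a hypothetical multiplier of three on pooled contributions; neither figure comes from Olson or from the article. Each agent either contributes one unit or withholds it, the pool is multiplied and shared equally, and the sketch checks that withholding pays more whatever the others do, even though universal contribution would leave everyone better off than universal defection.

```python
# Hypothetical public goods game illustrating the free-rider problem.

N_AGENTS = 10      # assumed group size
MULTIPLIER = 3.0   # assumed return on pooled contributions (between 1 and N_AGENTS)

def payoff(my_contribution, others_contributing):
    """Equal share of the multiplied pool, minus the agent's own contribution."""
    total = my_contribution + others_contributing
    return MULTIPLIER * total / N_AGENTS - my_contribution

# Whatever number of other agents contribute, compare defecting with contributing.
defection_dominates = all(
    payoff(0, k) > payoff(1, k) for k in range(N_AGENTS)
)

everyone_contributes = payoff(1, N_AGENTS - 1)
everyone_defects = payoff(0, 0)

print(defection_dominates, everyone_contributes, everyone_defects)
```

An agent reasoning only about its own payoff therefore defects in every circumstance, which is why the text argues that something beyond calculation, such as shared identity or institutional sanction, is needed to sustain collective action.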
One might overcome this in autonomous artificially intelligent machines by programming some of them (or other artificially intelligent machines might learn that it is in their interests to program themselves or other machines) to act as hierarchical managers of other machines, to skew the incentives for collective action by machines. This was Hobbes's (1968 [1651]) solution to the same problem in the human context, by programming an autonomous Leviathan to discipline and structure incentives for all others. However, there are severe limits to the effectiveness of such a hierarchical order of decision making and imposition in organization (see, for example, Miller 1992). These are not only found in human societies, where other biases quickly apply negative feedback of various kinds in social systems. They are found in purely rational autonomous systems of the kinds that can be modelled by game theory, and which can be embodied in anything from purely digital entities to networked robots. In the first place, it is costly for the programming machine, for it would have to have the capacity to acquire unachievably large amounts of information about the incentives facing all other machines. Even assuming the processing capacity


could be solved by quantum computing or enzyme computing or some other nano-technique not yet available, the costs of acquisition, and of re-acquisition as the incentives facing each machine change by the minute, would be large indeed. It was this logical problem that was the basic flaw underpinning command economies such as socialist systems (von Mises 1935). Decisionally autonomous intelligent systems are agents that already present trust problems, even for other machines. Even the most thorough-going hierarchy has to invest significant resources in overcoming or solving these problems, and there are common situations in which costs can rise over time, rather than fall. A rational autonomous intelligent system would quickly identify the inefficiency of hierarchical systems, for these kinds of costs typically rise to the point where they outweigh the benefits from collective action in hierarchical systems (Miller 1992). The second thing that is required for the maintenance even of simple domination is capacity for judgment under uncertainty. As has been argued above, this is a tall order, requiring more than intelligence conventionally defined. Even the most intelligent system attempting judgment under uncertainty needs skills in problem structuring (Rosenhead 1989), abilities to anticipate the behaviour of large numbers of other agents (humans and machines), who may be working with different patterns of risk perceptions and preferences and different deviations from the supposed rational norm, and to make judgments about their likely interactions and the effects (since there are typically too many Nash equilibria in remotely realistic decision matrices to yield uniqueness, even before taking into account uncertainty, limited information and the varieties of institutions and social organization styles, and the role of institutional affect (6, 2000) to be anticipated).
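The remark about multiple Nash equilibria can be illustrated with a very small game. The Python below is a sketch under stated assumptions: the three-convention coordination game and its payoff numbers are hypothetical, chosen only so that the two players prefer different conventions. The function `pure_nash_equilibria` simply checks every pair of pure strategies for whether either player could gain by deviating alone.

```python
# Hypothetical coordination game with several pure-strategy Nash equilibria.
from itertools import product

def pure_nash_equilibria(payoffs_a, payoffs_b):
    """Return every (row, column) pair where neither player gains by deviating alone."""
    n_rows = len(payoffs_a)
    n_cols = len(payoffs_a[0])
    equilibria = []
    for r, c in product(range(n_rows), range(n_cols)):
        # Player A picks the row; compare against every other row in column c.
        best_for_a = all(payoffs_a[r][c] >= payoffs_a[alt][c] for alt in range(n_rows))
        # Player B picks the column; compare against every other column in row r.
        best_for_b = all(payoffs_b[r][c] >= payoffs_b[r][alt] for alt in range(n_cols))
        if best_for_a and best_for_b:
            equilibria.append((r, c))
    return equilibria

# Assumed payoffs: agents gain only if they settle on the same convention,
# but A prefers convention 0 and B prefers convention 1.
payoffs_a = [[2, 0, 0],
             [0, 1, 0],
             [0, 0, 1]]
payoffs_b = [[1, 0, 0],
             [0, 2, 0],
             [0, 0, 1]]

equilibria = pure_nash_equilibria(payoffs_a, payoffs_b)
print(equilibria)
```

Even in this three-by-three case the calculation returns three stable outcomes and nothing in the payoffs selects among them; with many agents and many options the set grows quickly, which is the sense in which calculation alone fails to yield a unique prediction.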
The machine or group of machines seeking power would need to possess and be able to use skills which go far beyond intelligence, in the appreciation of institutional and cultural settings. One of the most developed and convincing theories of decision making by those exercising power focuses on the nature of judgment (Vickers 1995). Judgment does not principally consist in the intelligent gathering of information and the selection of a preferred strategy, given a prior goal (Lindblom 1959, 1979; Lindblom and Braybrooke 1970). In general, the shortage of time and the costs of information collection make this unfeasible, even for a network of machines with near infinite processing power. Rather, the essential capacity for judgment is the deployment of an appreciative system (Vickers 1995: ch.2), which involves institutional and affective sensibilities. An appreciative system is a set of institutionally enabled capacities to identify salient, relevant and contextually important information from noise, using analogical and lateral rather than exclusively analytical and vertical reasoning methods, to determine particular


structures to be problems and not mere conditions (Barzelay with Armajani 1992: 21), to allocate the scarce resource of attention to problems (March and Olsen 1975), and to do all this not in relation to a given purpose, but for the process of identifying possible purposes, and at the same time identifying the range of potential values, ideals and practical operating norms at stake. Under practical constraints of the costs of information and the range of possible trajectories, institutions are needed with which to manage and sustain appreciative systems, and the cognitive skills of engaging in institutional life are not reducible to mere intelligence. At the point where we have autonomous artificially intelligent machines that display the capacities for institutionally sustaining appreciative systems by striking settlements between forms of social organization among themselves and with humans, we are no longer dealing with domination or even a takeover of legitimate power, but we are dealing with autonomous participation in institutions and creativity in institutional life, in which the roles of humans are not necessarily, still less typically, subaltern at all. At that stage, we should be dealing with machine-persons. If that is the case, then we are dealing with an ordinary problem of institutionally ordered and sanctioned responsibility and power that can practically be held accountable, not with a threat of domination. In empirical terms, then, symbiosis between human and machine, even the machine-person, is much the most likely scenario, as it always has been. The central incoherence of the theme of takeover by intelligent machines, then, is this.
The capacities that would be needed, on any reasonable account of anything that we would recognize meaningfully as power or domination over human beings, are ones that would also lead the autonomous rationally intelligent system to identify that domination was inefficient and not likely to be achieved, for reasons not only of lack of processing power, but also of the cost of information and the problems of collective action. Secondly, the capacities needed to exercise and sustain power, even power as domination, are ones that would make those doing so into machine-persons, not autonomous intelligent machines, and would in practice quickly turn domination into ordinary power, subject to the usual feedback mechanisms of backlash, defection and backsliding from the non-hierarchical biases, and quickly returning the power system to one of ordinary political mobilization. Themes like the takeover of the machines from their creators persist in human societies not because they represent coherent and possible scenarios which are the practical focus of decision making. They persist because they serve certain latent social functions (Douglas 1986: ch.3). They enable one or more of the basic biases to engage in conflict with the other biases over how social organization should be


conceived and re-modelled in its own image. The power of the trope lies in its apocalyptic aesthetic and its mobilizing capacity for particular solidarities, rather than its intellectual coherence. But there are deep continuities between rival aesthetic appreciations and competing moral commitments (Thompson 1983). The role of the trope is itself to organize fears, to engage in aesthetic and moral conflict in human, not machine, society, and to mobilize, for conflict between biases, the widespread sense of the fragility, in the face of that conflict, of the institutions and cultures that sustain the organic solidarity or settlements on which social cohesion rests.


PRACTICAL ISSUES OF RISK MANAGEMENT FOR AUTONOMOUS INTELLIGENT SYSTEMS

Setting aside the trope as a myth (in the proper sense of that term, namely, a theme that expresses fears and ideals implicit in certain forms of social organization), however, there are some important practical issues about how we are to manage the risks that might be presented by autonomous artificially intelligent systems, whether in the form of digital agents or of kinetic robots. No doubt some systems will learn the wrong things; some will go haywire; some will fail in either chronic or spectacularly acute ways; some will use their capacity to evolve communication systems that humans cannot immediately understand with other autonomous artificially intelligent systems to undertake activities that are not desired; some autonomous intelligent digital agents will be captured by hostile systems and turned to work against the interests of their owners or principals. The risk management problem, then, is to find an appropriate trade-off between the advantages to business and government users from deploying systems with unrestricted autonomy to pursue the goals set for them, and the importance of securing limits to that autonomy to prevent these kinds of problems. This is not a new or particularly intellectually difficult problem, for it occurs in all kinds of agency relationships, including employment contracts, and in the design and management of many less autonomous and less intelligent systems. In the remainder of this article, I discuss just two of the principal fields in which this trade-off arises: unhampered pursuit of the task might lead to greater autonomy, while sensible risk management might lead to some restrictions upon autonomy (the second article will deal in more detail with the concept of autonomy in AI systems).


Classificatory Autonomy and Transparency to Human Understanding

In his book on his own experiments with groups of robots communicating with one another, Warwick (1998) reports that in his laboratory at the University of Reading, he has found that robots develop systems of communication which supplement the basic concepts that are programmed into them, and which require hard work for humans to translate. This is indeed an important finding, but not necessarily as catastrophic as his version of the takeover myth would suggest. The key point here is that these systems have the capacity, by evolutionary problem solving (in the particular sense that AI programmers use the terms evolutionary and genetic, to mean strategies of sequential generation of large numbers of routines, followed by brute force trial and error, elimination and incremental improvement techniques) and by the transfer of capacities through communication over networks, to develop classificatory autonomy from a small number of pre-programmed categories and concepts, within a group of autonomous systems among which collective action problems have been solved by one means or another. The central challenge for an anthropologist and a linguist learning a language for which no dictionaries, no grammatical rulebooks and no prior translations exist, is to understand the classification system, and what is counted as similar, and what dissimilar, to what (Quine 1960; Goodman 1970; Douglas 1972, Douglas and Hull 1992). As Warwick indicates, the problem is not insoluble, provided that we have rich contextual information and that we can follow over time the nature of the practices that are associated with communication, but difficulties and uncertainties remain. Classificatory autonomy in groups can be an important and powerful resource. It can be part of solutions to collective action problems; it can be much more efficient, and in particular less time consuming, than relying upon systems for which all classification must be provided in detail in advance.
The whole point of introducing autonomous artificially intelligent machines and systems will be to economize on precisely this detailed and expensive control. But when the systems begin to operate in ways that are undesirable, classificatory autonomy limits human capacity for understanding, and thus reduces the transparency to humans of such systems. Here we have a conflict between the individualist and instrumental outlook, which regards the benefits from systems that are not under detailed control as likely to outweigh the risks, and the hierarchical and control-oriented outlook, which takes the reverse view of such problems. In practice, some settlement or some series of settlements changing over time will have to be struck between the rival claims of these two biases.
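The sense of "evolutionary" problem solving described above (generate many candidate routines, try them, eliminate the weaker ones and vary the survivors a little) can be sketched as follows. This Python is a toy illustration only and is not Warwick's method: the bit-string target, the population size, the number of generations and the function name `evolve` are all hypothetical choices made for the example.

```python
# Hypothetical sketch of generate, test, eliminate and vary.
import random

def evolve(target, population_size=30, generations=40, seed=0):
    """Search for a bit string matching `target` by trial, elimination and small variation."""
    rng = random.Random(seed)
    length = len(target)

    def fitness(candidate):
        # Number of positions at which the candidate agrees with the target.
        return sum(1 for a, b in zip(candidate, target) if a == b)

    # Generation: start from a large number of random candidates.
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(population_size)]
    best_per_generation = []

    for _ in range(generations):
        # Trial: rank the candidates by how well they perform.
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        # Elimination: discard the weaker half.
        survivors = population[: population_size // 2]
        # Incremental improvement: each survivor yields one slightly altered copy.
        children = []
        for parent in survivors:
            child = list(parent)
            position = rng.randrange(length)
            child[position] = 1 - child[position]
            children.append(child)
        population = survivors + children

    population.sort(key=fitness, reverse=True)
    best_per_generation.append(fitness(population[0]))
    return population[0], best_per_generation

target = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0]
best, scores = evolve(target)
print(scores[0], scores[-1])
```

Because the survivors are carried forward unchanged, the best score never falls from one generation to the next. Nothing in the procedure records why a given candidate works, which is one small way of seeing how results obtained this way can be effective without being transparent to a human observer.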


Warwick's own laboratory solution to the problem may represent the basis of a viable settlement in many practical contexts. It may be possible to permit AI systems to exercise extensive classificatory autonomy in environments that are designed to be manipulated to limit the risks of machines causing damage or harm, or else in environments that are sufficiently limited that the range of communicative possibilities is readily understood by humans and where translation and understanding can be achieved relatively readily, or where the period is relatively short for which groups of autonomous machines are run without being switched off and the capacities gained thereby erased, so that evolutionary development in group classification is curtailed. It is not difficult to think of many applications of autonomous artificially intelligent systems in business and government where these kinds of environmental restrictions could be used without too much difficulty. Employed in waste collection, processing and recycling, or in environmental monitoring, and with limited freedom of movement beyond a defined environmental border, such machines might be allowed a wide degree of classificatory autonomy without incurring great risk. In richer and more complex environments, or where the decision making delegated to autonomous artificially intelligent systems is significant, and where the systems will be operated for longer periods to evolve knowledge and communication systems, it may be necessary to hobble, to some degree, the extent to which their classificatory autonomy is permitted to develop in ways that are not transparent to human observers and managers. Finally, there may be ways in which the development of autonomous artificially intelligent systems themselves can help with this problem, for some systems may be deployed to undertake contemporaneous translation into human languages of the operations of other systems in a network.

Design for Accountability

Where decisions made by autonomous artificially intelligent systems are for high stakes, where the costs of error could be catastrophic and acute or else produce chronic low-level problems, acceptable trade-offs between efficiency in operation when functioning well and limited autonomy to limit the damage from malfunction may need to affect decisional autonomy as well as classificatory autonomy. In short, we may need to innovate, and expect designers to innovate, in the development of systems of accountability for autonomous artificially intelligent systems. The basic level of accountability for any system is for it to be called to provide an account of its decisions, operations, effectiveness, resource use, probity and


propriety in transactions, etc. (Day and Klein 1987; March and Olsen 1995: ch.5; Vickers 1995: ch. 12; Bovens 1998; Hogwood et al. 1998). For example, an artificially intelligent system that provides a translation into human languages of the communications of a network of other collectively acting artificially intelligent systems is providing an elementary form of account. More sophisticated accounts, which are summary, analytical and relevant rather than tediously blow-by-blow, can readily be provided by intelligent systems. The next level of accountability is the acceptance by a system held to account that it is responsible for its autonomous decisions under some accepted goal or value, and the redirecting of its decisions, actions and operations to that goal or value. One might imagine here the designing in of some code of professional ethics to the motivation functions of autonomous artificially intelligent systems, whether digital or kinetic. This was, presumably, what Asimov had in mind for his famous three laws. While those laws are of the wrong order of generality to be of much use (as Warwick (1998) correctly argues), the basic idea of designing for accountability by embodying some commitments, even if they can be overridden in certain kinds of emergencies, is broadly sound. The design must serve as the artificial equivalent of the human professional taking an oath binding her or him to the code of professional conduct. However, as we shall see in the second article in this series, there are difficulties in determining what it would mean to sanction an autonomous artificially intelligent system for failure to abide by the code. Designing in such a code would represent a limitation upon autonomy in the minimal sense that it represents the honest and sincere attempt of an agent to focus action upon a task desired by a principal, and which has been internalized.
This will presumably be basic to the design of artificially intelligent systems and to the training of neural nets and evolutionary problem-solving systems.

The next level of accountability is the possibility of the revocation of the mandate. For autonomous artificially intelligent machines, this might mean a simple switch-off, commissioning the machines (or other machines) to reverse damage done, re-training their decision-making systems, and/or allocating the task to another system, perhaps another autonomous artificially intelligent system or perhaps a group of humans. It will be important in many contexts not only that the possibility of such revocation of mandate is designed into autonomous artificially intelligent machines, but also that the trigger mechanisms that will enable humans either to begin the process of revocation or to ensure that it is done are designed in from the beginning. One possibility might be of course to use autonomous artificially intelligent machines to hold other machines accountable. They too might fail. But this is not a new problem. Since ancient times, the question, quis custodiet custodes? (who
shall guard the guardians?), has arisen for every system of audit and accountability, whether those who hold others to account are auditors, inspectors, police officers, politicians or machines. In practice, we find various ways of solving it, through creating mutual accountability systems, rotating those who hold others to account by removing them from office after a while, etc., all of which could be used with autonomous artificially intelligent machine systems.

In the human context, the final level of accountability is concerned with the administration of sanctions. But this is probably not meaningful in the case of most artificially intelligent systems, at least until such time as we possess the technology to create machine-persons (see the second article in this series).

Robots and digital agents will almost certainly not take over and subject humans to their power, as the now centuries-old myth has it. But, like any other technology, when they go wrong, they will no doubt expose humans to some risks. Those risks may well be worth running for the benefits that will accrue from the mainstream deployment of these systems. But sensible risk management will be an important element in good design. The exact nature of the degree to which design for transparency and accountability needs to restrict the classificatory and decisional autonomy of these machines will vary from one application and context to another. But trade-offs will have to be made between the individualist view that benefits will always outweigh risks and the hierarchical attitude that control must always override autonomy.

CONCLUSION

The really important challenge that the new AI presents is not an eschatological one, in which human beings become the prey of their own creations and human societies are subjected to a tyranny of merciless machines. Rather, the really important challenges are only (!) exacerbations of the eternal problems of technological risk. The exacerbating factor is the vastly greater autonomy of AI systems in making decisions than is exhibited by almost any other tool. In the second and concluding article, I explore in much more conceptual and policy detail the nature and the implications of this recognition. The reverse of the coin of developing autonomous intelligence to the point that a tool must be thought of also as an agent, is that practical problems of accountability become very important indeed. The choice must be made whether to subject AI systems to the kinds of accountability that are appropriate to a tool or to an agent: some key practical implications of this will be explored in the second article in this series. No doubt on a case-by-case basis depending on particular institutional setting, and the technical capabilities of the AI systems
involved, different regimes may be applied over time to one and the same AI system (however we individuate self-replicating virtual systems working over networks, when they do not have physical bodies to manage like a robot).

In the first instance, accountability is a challenge for designers, and it ought to be a matter of some concern that there is no agreed statement of the professional ethics to which AI designers are expected to subscribe. In the second place, accountability is a matter for the governance of AI systems by owners and users in many cases within large organizations. The disciplines of management and organization design will, sooner or later, be expected to respond to the challenge of creating human roles and institutions within organizations for the proper governance of AI systems. Finally, as AI systems become commonplace household artefacts, in the initial case, the onus will fall back upon designers to ensure that without special procedures and with only ordinary consumer expertise, we can all ensure the accountability of what may otherwise have to be treated as among the more hazardous products found in a home.

No doubt, in some part, we shall look for technical fixes to these essentially social problems, as we always do (Weinberg 1966): specifically, we shall try to use AI systems to hold other AI systems accountable. But this is never enough. In the long run, as the history of technological risk shows, only substantive settlements between rival forms of social accountability will work (Schwarz and Thompson 1990). In this context, great importance must attach to the development and institutionalization of codes of professional practice among the AI community (Whitby 1988, 1996) but also among the commercial, entrepreneurial and governmental community: these represent the moment of hierarchy.
However, it will also be important, as it has been in environmental and data protection policy, to find ways to open up entrepreneurial opportunities in markets for safety, in order to mobilize the individualist solidarity. That there will be consumer, public and labour movement mobilization in response to safety concerns, securing the egalitarian commitment, can probably be assumed in any case. As so often in public policy, a key question about the viability of the overall institutional mix is the degree to which more fatalist strands will regard occasional failures as inevitable, and the degree to which people with few opportunities to influence the uses and safeguards upon AI will be mobilized by other institutional forces when things do go wrong. There is no reason for grand pessimism about our capacity to achieve some practical settlement between these solidarities in the context of the new AI. After all, our societies have managed to strike more or less adequate, if always imperfect and clumsy, settlements for the governance of many kinds of equally dangerous technologies ranging from hazardous chemicals through nuclear reactors to some categories of weapons of mass destruction. What this does require, however, is
some social pressure on technology communities to think carefully about the institutionalization of their own professional ethics. It is that challenge that must be made to the AI research and development community in the coming decades.

ACKNOWLEDGEMENTS

The research on which this article is based was conducted with financial support from Bull Information Systems (UK and Ireland): for the full report from which much of the material in this article has been derived, see 6 (1999b). I am particularly grateful to Stephen Meyler, Marketing Director, and Amanda Purdie, then Chief Press Officer, at Bull, for their support. In particular, I am grateful to Bull and the Internet Intelligence Bulletin for supporting an electronic conference of experts where I was able to elicit comments on a late draft of the report from Francis Aldhouse, Liam Bannon, Paul Bennett, John Browning, Christian Campbell, Ian Christie, David Cooke, John Haldane, Peter Hewkin, Clive Holtham, Dan Jellinek, Mary Mulvihill, Bill Murphy, Clive Norris, Charles Raab, Sarah Tanburn and Blay Whitby. I am grateful also to Bill Dutton and Brian Loader, the editors of this journal, and to two anonymous referees, for their helpful suggestions on how to improve upon my earlier drafts. However, none of these people should be held in any way responsible for my errors nor should they be assumed to agree with my arguments.

Perri 6
Director, Policy Programme
Institute for Applied Health & Social Policy
King's College, London
c/o 63 Leyspring Rd
Leytonstone
London E11 3BP
perri@stepney-green.demon.co.uk

NOTES

1 The title of Weizenbaum's final chapter was 'Against the imperialism of instrumental reason'.

2 See, for example, Douglas (1992) for a social systems perspective grounded in anthropology; Joffe (1999) for a combined psychoanalytic and social representation view; Lupton (1999) for a compendium of sociological views; and Caplan (2000) for some other anthropological theories. Even mainstream psychological writers with roots in psychometric work have recognized the force of at least some part of these arguments in recent years: see, for example, Renn (1998) and Slovic (1993).

3 By 'construal' here, I do not mean, as the text argues below, to suggest that the distinctions are in some way illusory. For an explanation of why the term 'construal', which suggests interpretation of evidence about the real, is preferred in the neo-Durkheimian tradition to that of 'construction' or 'social construction', which suggests a kind of fantasy, see Douglas (1997: 123). Durkheim (1983) himself specifically rejected relativism, but argued powerfully (Durkheim and Mauss 1963) for the social construal of evidence of the real through concepts that reflect styles of social organization.

4 For a valuable discussion of the distinction between anticipation and resilience, see Hood and Jones (1996). I do not here have space to defend the general case for anticipation against defenders of resilience such as Wildavsky (1988). However, see 6 (2001b forthcoming).

5 This is a brief statement of what might be called the classical synoptic model of ideal decision theory, derived from Simon (1947). In practice, of course, no one makes decisions in this way, as successive waves of critics have pointed out. For the best known critiques, see Braybrooke and Lindblom (1970), Vickers (1995 [1963]) and Kahneman and Tversky (1979).

REFERENCES

Adams, J. (1995) Risk, London: UCL Press.
Barrett, N. (1998) '2010 the year of the digital familiar', in N. Barrett (ed.) Into the Third Millennium: Trends Beyond the Year 2000, London: Bull Information Systems, pp. 27-37.
Barzelay, M. with collaboration of Armajani, B.J. (1992) Breaking Through Bureaucracy: A New Vision for Managing in Government, Berkeley: University of California Press.
Beck, U. (1992) Risk Society, London: Sage.
Bovens, M. and Hart, P. (1996) Understanding Policy Fiascoes, New Brunswick: Transaction Books.
Bovens, M. (1998) The Quest for Responsibility: Accountability and Citizenship in Complex Organisations, Cambridge: Cambridge University Press.
Braybrooke, D. and Lindblom, C.E. (1970) A Strategy of Decision: Policy Evaluation as a Social Process, New York: Free Press.
Caplan, P. (ed.) (2000) Risk Revisited, London: Pluto Press.
Coyle, D.J. and Ellis, R.J. (eds) (1993) Politics, Policy and Culture, Boulder, CO: Westview Press.
Cranor, C.F. (1988) 'Some public policy problems with risk assessment: how defensible is the use of the 95% confidence rule in epidemiology?', paper presented as part of the University of California Riverside Carcinogen Risk Assessment Project, Toxic Substance Research and Teaching Programme.
Cranor, C.F. (1990) 'Some moral issues in risk assessment', Ethics.
Day, P. and Klein, R.E. (1987) Accountabilities: Five Public Services, London: Tavistock Publications.
Dennett, D.C. (1998) Brainchildren: Essays on Designing Minds, Harmondsworth: Penguin.
Douglas, M. (1966) Purity and Danger: An Analysis of the Concepts of Pollution and Taboo, London: Routledge.
Douglas, M. (1970) Natural Symbols: Explorations in Cosmology, London: Routledge.