6 - 2 - Analysis of Variance (26-20)

Uploaded by Guru Saraf

>> So finally, we are ready to start testing our research questions statistically. Well, it took us a while to get here.

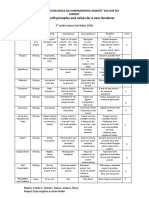

Our earlier steps should never be skipped. That is, no matter how sophisticated you may become as a quantitative researcher, you will always need to examine your code book, manage your data, and examine descriptive statistics for the variables of interest.

In my description of hypothesis testing, where we looked at the association between depression and smoking, we were working with a categorical explanatory variable, the presence or absence of depression, and a quantitative response variable, the number of cigarettes smoked per month. When you are testing hypotheses with a categorical explanatory variable and a quantitative response variable, the tool that you should use is analysis of variance, sometimes called ANOVA. Now that you understand in what kind of situations ANOVA is used, we're ready to learn how it works, or more specifically, what the idea is behind comparing means. The test that you will be learning is called the ANOVA F-test.

So let's use another categorical to quantitative research question. Is academic frustration related to major? In this example, a college dean believes that students with different majors may experience different levels of academic frustration. Random samples of size 35 of business, English, mathematics, and psychology majors are asked to rate their level of academic frustration on a scale of 1, lowest, to 20, highest. This figure highlights that we will be examining the relationship between major, our explanatory variable, or x, and frustration level, our response variable, or y, to compare the mean frustration levels among the four majors defined by x.

The null hypothesis claims that there is no relationship between the explanatory and response variables, x and y. Since the relationship is examined by comparing the means of y in the populations defined by the values of x, no relationship would mean that all the means are equal.
Therefore, the null hypothesis of the F-test is that population mean 1 equals population mean 2 equals population mean 3 equals population mean 4, or H0: μ1 = μ2 = μ3 = μ4. Here, we have just one alternative hypothesis, which claims that there is a relationship between x and y. In terms of the means, it simply says the opposite, that not all of the means are equal, and we simply write Ha: not all of the population means are equal. Note that there are many ways for the population means not to be equal. We'll talk about that later.

For now, let's think about how we would go about testing whether the population means are equal. We could calculate the mean frustration level for each major and see how far apart these sample means are, or in other words, measure the variation between the sample means. If we find that the four sample means are not all close together, we'll say that we have evidence against the null hypothesis, and otherwise, if they are close together, we'll say that we do not have evidence against the null hypothesis. This seems quite simple, but is this enough? Let's see.

It turns out that the sample mean frustration score of the 35 business majors is 7.3. The sample mean frustration score for the 35 English majors is 11.8. The sample mean frustration score for the 35 math majors is 13.2. The sample mean frustration score for the 35 psychology majors is 14.0.

Here are two possible scenarios for this example. In both cases, we have side-by-side box plots for four groups of frustration levels. A box plot is a convenient way of graphically depicting groups of numerical data, including the smallest observation of the group, the median, and the largest observation. You can see that the two scenarios have the same variation, or differences, among their means. Scenario 1 and Scenario 2 both show data for four groups with the sample means 7.3, 11.8, 13.2, and 14.0 indicated with red marks. The important difference between the two scenarios is that the first represents data with a large amount of variation within each of the four groups.
The second represents data with a small amount of variation within each of the four groups.

Scenario 1, because of the large amount of spread within the groups, shows box plots with plenty of overlap. One could imagine the data arising from four random samples taken from four populations all having the same mean of about 11 or 12. The first group of values may have been a bit on the low side and the other three a bit on the high side, but such differences could conceivably have come about by chance. This would be the case if the null hypothesis claiming equal population means were true.

Scenario 2, because of the small amount of spread within the groups, shows box plots with very little overlap. It would be very hard to believe that we are sampling from four groups that have equal population means. This would be the case if the null hypothesis claiming equal population means were false.

Thus, in the language of hypothesis tests, we would say that if the data were configured as they are in Scenario 1, we would not reject the null hypothesis that population mean frustration levels were equal for the four majors. If the data were configured as they are in Scenario 2, we would reject the null hypothesis and conclude that mean frustration levels differ depending on major.

The question we need to answer with the ANOVA F-test is: are the differences among the sample means due to true differences among the population means, or merely due to sampling variability? In order to answer this question using our data, we obviously need to look at the variation among the sample means, but that's not enough. We also need to look at the variation among the sample means relative to the variation within the groups. So F is the variation among sample means divided by the variation within groups.
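
The ratio just described can be sketched in symbols. The lecture gives the idea only in words, so the formula below is the standard one-way ANOVA F statistic rather than something shown on the slides, and the notation is assumed here: k groups, n_i observations in group i, N observations in total, group sample means ȳ_i, and overall sample mean ȳ.

$$
F = \frac{\text{variation among sample means}}{\text{variation within groups}}
  = \frac{\sum_{i=1}^{k} n_i \,(\bar{y}_i - \bar{y})^2 \,/\, (k-1)}
         {\sum_{i=1}^{k} \sum_{j=1}^{n_i} (y_{ij} - \bar{y}_i)^2 \,/\, (N-k)}
$$

For the frustration example, k = 4 and N = 4 × 35 = 140, so the numerator is divided by 3 and the denominator by 136.
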
In other words, we need to look at the quantity variation among sample means divided by variation within groups, which measures to what extent the differences among the sampled groups' means dominate over the usual variation within sampled groups, which reflects differences in individuals that are typical in random samples. When the variation within groups is large, like in Scenario 1, the differences or variation among the sample means can become negligible by comparison, and the data provide very little evidence against the null hypothesis. When the variation within groups is small, like in Scenario 2, the variation among the sample means dominates over it and the data provide stronger evidence against the null hypothesis. Looking at the ratio of variations is the idea behind comparing means, thus the name analysis of variance.

The results of the analysis of variance testing the relationship between major and frustration score are shown here. The F statistic circled in red is 46.6. Since we know this is the variability among sample means divided by the variability within groups, this large number suggests that the variability among sample means is much greater than that within sample groups. The p value of the ANOVA F-test is the probability of getting an F statistic as large as we got or even larger had the null hypothesis been true, that is, had the population means been equal. In other words, it tells us how surprising it is to find data like those observed, assuming that there is no difference among the population means. This p value is practically zero, telling us that it would be next to impossible to get data like those observed had the mean frustration level of the four majors been the same, as the null hypothesis claims. A p value of 0.0001 means that, if the null hypothesis were true, we would see an F statistic this large only about 1 time in 10,000. So we can confidently conclude that the frustration level means of the four majors are not all the same, or in other words, there is a significant association between frustration level and major. So we reject the null hypothesis in favor of the alternate hypothesis.

Now that you have a good feel for analysis of variance, let's run the statistical test using SAS.
To do this, I'm going back to an example that I covered when describing the process of hypothesis testing. Specifically, is major depression associated with smoking quantity among current young adult smokers? Or in hypothesis testing terms, is the mean number of cigarettes smoked per month equal or not equal for those individuals with and without major depression? My explanatory variable here is categorical with two levels, that is, the presence or absence of major depression. My response variable, smoking quantity, measured by the number of cigarettes smoked per month, ranges from 1 to 2940.

Returning to the SAS program I have been building to examine our NESARC data, I will just quickly review the program pieces once again. First, I have my LIBNAME statement, which calls in or points to the course data set and tells us where to find the data. Next I start my data step with the DATA command, and then read in the specific data set, in this case, the NESARC data. I had also added labels to help me more easily read the output, set appropriate missing data, and then created secondary variables measuring usual smoking frequency per month, number of cigarettes smoked per month, and also packs of cigarettes smoked per month as both a quantitative and a categorical variable. Just before the end of my data step, I subset my data to observations including young adult smokers who had smoked in the past year, and then I concluded my data step by sorting the data by my unique identifier.

As you know, all procedures that follow the data step are written to request specific output or results. So this is where we'll need to add the syntax for analysis of variance. To conduct an analysis of variance, we're going to use the ANOVA procedure, PROC ANOVA. So let me walk you through this syntax. We start with PROC ANOVA; and we follow this with the CLASS statement. The CLASS statement identifies the categorical explanatory variable. The name of my categorical explanatory variable is MAJORDEPLIFE, so I include this after the word CLASS and, as always, end the command with a semicolon. Next we write a MODEL statement, naming the quantitative response variable.
We include the equals sign and then follow it with the categorical explanatory variable. So in this case, I'm going to say MODEL NUMCIGMO_EST, the name of my quantitative response variable, equals MAJORDEPLIFE, the name of my categorical explanatory variable, ending the statement with a semicolon. Finally, the MEANS statement tells SAS for which groups you would like to compare the mean number of cigarettes smoked per month. So again we include the categorical explanatory variable and end the statement with a semicolon.

So now we're ready to run the program and take a look at the output. PROC ANOVA first displays a table that includes the following: the name of the variable in the CLASS statement, the number of different values or levels of the class variable, the values of the class variable, the number of observations in the data set, and the number of observations excluded from the analysis because of missing data, if any. So here we see that our categorical explanatory variable, MAJORDEPLIFE, has two levels and that the values are 0 and 1. Of the 1706 observations, 1697 were included in the analysis.

PROC ANOVA then displays an analysis of variance table for the response variable, also known as the dependent variable, from the MODEL statement. Our calculated F statistic, called the F value in this output, is 3.55. The significance probability, or p value, associated with this F statistic is labeled Pr > F. And as you can see, the p value is .0597, just over our p = .05 cut point. If I look at the means table, I can see that young adult smokers without major depression, as indicated by a value of 0, smoke an average of 312.8 cigarettes per month, and that those with major depression, indicated by a value of 1, smoke on average 341.4 cigarettes per month.

Because my p value is greater than 0.05, nearly 0.06, I'm going to fail to reject the null hypothesis and say that these data do not show an association between the presence or absence of major depression and the number of cigarettes smoked per month among young adult smokers. If there were truly no association, I would still see a difference this large about 6 out of 100 times.
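
For reference, the statements walked through above can be written out roughly as follows. The variable names MAJORDEPLIFE and NUMCIGMO_EST are the ones named in the lecture. The sketch assumes it runs directly after the data step described earlier, so that PROC ANOVA picks up the most recently created data set; the closing RUN statement and the comments are added here for readability.

```sas
/* One-way ANOVA: cigarettes smoked per month by major depression status. */
/* Assumes the preceding data step has already created both variables.    */
PROC ANOVA;
  CLASS MAJORDEPLIFE;                 /* categorical explanatory variable      */
  MODEL NUMCIGMO_EST = MAJORDEPLIFE;  /* quantitative response = explanatory   */
  MEANS MAJORDEPLIFE;                 /* mean of the response within each group */
RUN;
```

The CLASS statement has to come before the MODEL statement, which is why the lecture introduces the statements in this order.
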
And again, by normal scientific standards, this is not adequate certainty to reject the null hypothesis and say there's an association. Instead, we fail to reject the null hypothesis and say that we have not found evidence of an association.

Had the p value been less than .05, I would know that there was a significant association. And to interpret that significant association, I would look at the means table. If p had been less than .05, I could see that individuals with major depression smoke more than individuals without. And again, with a significant p value, I could have said that young adult smokers with major depression smoke significantly more cigarettes per month than young adult smokers without major depression.

So I've shown you the ropes in terms of interpreting a significant or non-significant ANOVA when my categorical explanatory variable has two levels, as it did here with depression. For this interpretation, all I need to know is the p value and the means for each of the two groups. But what if my categorical explanatory variable has more than two groups?

In this example, I'm going to examine the association between ethnicity and smoking quantity. My explanatory variable, ethnicity, actually has five levels or groups: 1 is White; 2 is Black; 3 is American Indian or Alaska Native; 4 is Asian, Native Hawaiian, or Pacific Islander; and 5 is Hispanic or Latino. So by running an analysis of variance, I'm asking whether the number of cigarettes smoked differs for different ethnic groups. Since I'm only changing the categorical explanatory variable, that's the only part of the syntax that we'll need to change. So rather than including MAJORDEPLIFE in my CLASS statement, I'm going to include ETHRACE2A. I'm going to include it here again in my MODEL statement, and then here again in my MEANS statement.

This time the F statistic is 24.4, with an associated p value of less than .0001. Well, this tells me that I can safely reject the null hypothesis and say that there is an association between ethnicity and smoking quantity. All I know at this point is that not all of the means are equal.
I could eyeball each mean in the means table and make a guess as to which pairs are significantly different from one another. For example, the ethnic group with the lowest mean number of cigarettes smoked per month among young adult smokers is ethnic group 5, Hispanic or Latino. And the group with the highest mean number of cigarettes smoked per month is ethnic group 1, White. But because there are multiple levels of my categorical explanatory variable, the F test and p value do not provide any insight into why the null hypothesis can be rejected. They do not tell us in what way the different population means are not equal. Note that there are many ways for population means not to be all equal. Having each of them not equal to the others is just one of them. Another way could be that only two of the population means are not equal to one another.

In the case where the explanatory variable represents more than two groups, a significant ANOVA does not tell us which groups are different from the others. To determine which groups are different from the others, we would need to perform a post hoc test. A post hoc test is generally termed post hoc paired comparisons. These post hoc paired comparisons, post hoc meaning after the fact, or after data collection, must be conducted in a particular way in order to prevent excessive type 1 error. Type 1 error, as you'll recall, occurs when you reject the null hypothesis even though it is true.

Why can't we just perform multiple ANOVAs? That is, why can't we just subset our observations and take two groups at a time, so we compare White versus Black, White versus American Indian or Alaska Native, etcetera, until all the paired comparisons have been made? As you know, we accept significance and reject the null hypothesis at p less than or equal to 0.05, a 5% chance that we are wrong and have committed a type 1 error. There's actually a 5% chance of making a type 1 error for each analysis of variance that we conduct on this question.
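
To make the arithmetic behind that concern concrete, here is a minimal sketch. It assumes the tests are independent and that each one uses the .05 cut point, which is the usual textbook simplification; under that assumption the chance of at least one type 1 error across c tests is 1 - (1 - .05)^c. The data set name fwer and the variable names are made up for illustration and are not part of the course program.

```sas
/* Family-wise type 1 error rate for 1 to 10 tests, each at alpha = .05. */
/* Assumes independent tests; all names here are illustrative only.      */
data fwer;
  alpha = 0.05;
  do num_tests = 1 to 10;
    /* chance of at least one false rejection across num_tests tests */
    familywise_error = 1 - (1 - alpha)**num_tests;
    output;
  end;
run;

proc print data=fwer noobs;
  var num_tests familywise_error;
  format familywise_error 6.3;
run;
```

Five ethnic groups give 5 × 4 / 2 = 10 possible pairs, so the last row of this table is the one that applies to comparing every pair of groups, and it comes out at roughly .40.
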
Therefore, performing multiple tests means that our overall chance of committing a type 1 error could be far greater than 5%. Here is how it works out. Using the formula displayed under this table, you can see that while one test has a type 1 error rate of .05, by the time we've conducted 10 tests on this question, our chance of rejecting the null hypothesis when the null hypothesis is true is up to 40%. This inflated type 1 error rate is called the family-wise error rate, that is, the error rate for the group of pair comparisons.

Post hoc tests are designed to evaluate the difference between pairs of means while protecting against the inflation of type 1 errors. And there are a lot of post hoc tests to choose from when it comes to analysis of variance. There's the Sidak, the Holm t test, Fisher's least significant difference test, Tukey's honestly significant difference test, the Scheffe test, the Newman-Keuls test, Dunnett's multiple comparison test, the Duncan multiple range test, and the Bonferroni procedure. It's enough to make your head swim. While there are certainly differences in how conservative each test is in terms of protecting against type 1 error, in many cases it is far less important which post hoc test you conduct and far more important that you do conduct one.

In order to conduct post hoc paired comparisons in the context of my ANOVA examining the association between ethnicity and number of cigarettes smoked per month among young adult smokers, I'm going to use the Duncan test. [inaudible]. To do this, all I need to do is add a slash and the word DUNCAN at the end of my MEANS statement, and then save and run the program.

The top of our results looks the same as in our original test. The F value, or F statistic, is 24.4 and is significant at the p < .0001 level. However, if we scroll down, we see a new table displaying the results of the paired comparisons conducted by the Duncan multiple range test. Here is how the table should be interpreted. Basically, means with the same capital letter next to them are not significantly different. So you can see that ethnic groups 1 and 3 are not significantly different, because they both have A's.
Groups 2, 4, and 5 are not significantly different, because each has a C. Groups 3, 2, and 4, again, are not significantly different from one another, each with a B next to the name. Okay. So where are the significant differences? Group 1, which indicates White ethnicity, smokes significantly more cigarettes per month than ethnic groups 2, 4, and 5, that is, Black, Asian, and Hispanic or Latino. Group 3, American Indian or Alaska Native, smokes significantly more per month than group 5, Hispanic or Latino. Notice that some of the means have more than one letter next to them, so you need to be careful and follow the rule that means that share even one letter are not significantly different from one another.

So we have really covered a lot. Let me very quickly summarize analysis of variance. First, an analysis of variance is used when we have a categorical explanatory variable and a quantitative response variable. The null hypothesis is that there is no relationship between the explanatory and response variables, in other words, that all means are equal. The alternate hypothesis is that not all means are equal. The F statistic is calculated by comparing the variation among sample means to the variation within groups. If the variation among sample means wins out, the p value will be less than or equal to .05, and we have a significant finding. This would allow us to reject the null hypothesis and say that the explanatory and response variables are associated.

The model SAS syntax for conducting an analysis of variance is the following: PROC ANOVA, semicolon; then CLASS and the categorical explanatory variable, semicolon; then MODEL, the quantitative response variable, equals, the categorical explanatory variable, semicolon; and then, finally, the MEANS statement, which includes the categorical explanatory variable. If your explanatory variable has more than two levels or groups, you'll also need to conduct a post hoc test. To conduct a Duncan multiple range test, you include a slash, followed by the word DUNCAN, and a semicolon.
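
Applied to the ethnicity example from earlier in the lecture, that template might look like the sketch below. ETHRACE2A is assumed here to be the name of the NESARC ethnicity variable, since the transcript garbles the spoken name; NUMCIGMO_EST is the response variable named in the lecture. As before, the sketch assumes it runs after the data step that creates these variables, and the RUN statement is added for completeness.

```sas
/* One-way ANOVA with Duncan post hoc paired comparisons.             */
/* ETHRACE2A is an assumed variable name; see the note above.         */
PROC ANOVA;
  CLASS ETHRACE2A;                 /* categorical explanatory variable, 5 levels */
  MODEL NUMCIGMO_EST = ETHRACE2A;  /* quantitative response = explanatory        */
  MEANS ETHRACE2A / DUNCAN;        /* group means plus Duncan multiple range test */
RUN;
```

The only change from the two-group run is the variable name in the three statements and the slash followed by DUNCAN on the MEANS statement.
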
Now you are ready to test a categorical to quantitative relationship.

If your own research question does not include these types of variables, you might want to test the procedure with variables from your data set that do require an ANOVA. For example, you could look at mean age differences by any categorical variable in the NESARC. Or, treating the grade level variable in AddHealth as quantitative, you could look at mean differences, again, by any categorical variable that you choose. For both the Gapminder and the Mars craters data, there are many quantitative variables, so you might choose to categorize one of them for inclusion in an ANOVA. I promise, whatever types of variables you have, you will be able to test the association with the right tool. More to come.
