CEQUITY Analytics Variable Reduction

Uploaded by Cequity Solutions

Enhancing Analytical Modeling for Large Data Sets with Variable Reduction

Doesn’t it become tricky to resolve accurate relationships between variables when there are thousands of them? More so when each can be used to create segmentation models…

More often than not, some variables are highly correlated with one another. Including these highly correlated variables in the modeling process increases the time the statistician spends finding a segmentation model that meets business needs. To speed up the modeling process, the predictor variables should be grouped into clusters of similar variables. A few variables can then be selected from each cluster; this way the analyst can quickly reduce the number of variables and speed up the modeling process.

T oday, technology helps store huge data at no or token

additional cost, as compared to earlier days. In today’s

business we keep information in different tables in suitable

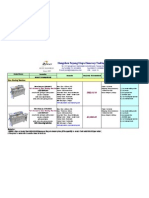

structure. For instance, it can have account data, transaction Factor analysis technique

data, customer demographic data, payment data, inbound-

outbound call data, campaign data, account history data etc. in a

financial business. For our analytical purpose we collate all the

Cluster of variable technique

information to create customers’ single view that may contain

huge number of variables. The challenge is to identify which few Method of Correlation*

of them we will use for our modeling purpose. In high

dimensional data sets, identifying irrelevant variables is more

(*for Predictive Analysis)

difficult than identifying redundant variables. It is suggested that

first the redundant variables be removed and then the irrelevant

ones looked for. There are several ways of identifying irrelevant

variables. The technique of identifying less important variables

can differ on the basis of what specific analysis need to be done Factor Analysis Technique

using the final data. We will discuss what different steps should

be followed to reduce the variables.

Let us consider we have N number of predictors. Do a factor

analysis for M factors where M is significantly less than N. For

Step One. Reduce variables on the basis of a specific factor we will get load value for each and every

missing percentage variable. The load factor will be high corresponding to those

variables which have a high influence on the specific factor.

The variable with high missing information has very less The set of variables which are highly correlated with each

contribution / predictive power in statistical model. Sometimes other will get high absolute load value. Select one variable for

the missing value needs to be imputed on the judgmental basis. the model with high load value out of those which have high

E.g. in case of amount purchase in last month missing can load value corresponding to this factor. Select the 2nd

indicate no purchase happened by the customer for last one variable for the model looking at the load value corresponding

month. So missing field need to be replaced by zero. But there to 2nd factor in the similar way. Continue this till the kth factor

are many cases where missing is actual missing. In this situation to identify the variable corresponding to that factor. Number

it is not suggested to impute the value in case the % missing is of k (<M) should be selected on the basis of cumulative

very high. We should remove the variables for which a high percentage of variation explained till k factors out of the total

proportion of missing observations are there. variation explained by M factors. You can take a cut-off within

90% to 95%.

Step Two. Variable reduction on the basis of

percentage of equal value

There might be fields with equal value for all the observation.

For this specific situation variable standard deviation will be

zero. We can remove these set of variables as it cannot have

any contribution on the model. There may be variables also for

which almost all (say > 98%) the records are with equal value.

We should not use these variables as they cannot contribute

much in the model. Calculating percentile with minimum and

maximum value of the variable will help identify such variables.

Step Three. Variable reduction among the

correlated variables

It is not desirable to use set of correlated predictor variables

either in cluster analysis or any types of regression analysis,

and forecasting technique. When we do subjective

segmentation using cluster technique we can identify

correlated variables by:
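As a rough illustration, Steps One and Two and the factor analysis technique described above can be sketched in plain Python. Everything named here is an assumption made for the example rather than part of the original method: the function names, the synthetic columns, the encoding of missing values as `None`, and the 50% missing threshold (the text only says "very high"). Loadings are approximated by principal-component extraction from the correlation matrix, computed with simple power iteration, as a stand-in for a full factor analysis fit; columns that survive the screening are assumed to be complete.

```python
import math
import random


def drop_sparse(data, max_missing=0.5):
    """Step One: drop columns whose share of missing (None) values exceeds max_missing."""
    return {k: c for k, c in data.items()
            if sum(v is None for v in c) / len(c) <= max_missing}


def drop_near_constant(data, max_equal=0.98):
    """Step Two: drop columns where one value covers more than max_equal of the rows."""
    return {k: c for k, c in data.items()
            if max(c.count(v) for v in set(c)) / len(c) <= max_equal}


def pearson(x, y):
    """Pearson correlation of two equally long, complete numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def top_eigenpairs(matrix, m, iters=500):
    """Largest m eigenpairs of a symmetric correlation matrix (power iteration + deflation)."""
    a = [row[:] for row in matrix]
    n = len(a)
    pairs = []
    for _ in range(m):
        v = [1.0 + 0.01 * i for i in range(n)]  # arbitrary non-zero start vector
        for _ in range(iters):
            w = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break
            v = [x / norm for x in w]
        av = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
        lam = sum(x * y for x, y in zip(v, av)) / sum(x * x for x in v)
        pairs.append((lam, v))
        for i in range(n):  # deflate: remove the component just found
            for j in range(n):
                a[i][j] -= lam * v[i] * v[j]
    return pairs


def select_by_factors(data, m, cutoff=0.90):
    """Pick one high-loading variable per factor until `cutoff` of the M-factor variation is reached."""
    names = list(data)
    corr = [[pearson(data[a], data[b]) for b in names] for a in names]
    pairs = top_eigenpairs(corr, m)
    total = sum(lam for lam, _ in pairs)
    selected, explained = [], 0.0
    for lam, vec in pairs:
        loadings = [abs(x) * math.sqrt(max(lam, 0.0)) for x in vec]
        # Highest absolute loading among the variables not picked yet.
        best = max((i for i in range(len(names)) if names[i] not in selected),
                   key=lambda i: loadings[i])
        selected.append(names[best])
        explained += lam
        if explained / total >= cutoff:
            break
    return selected


# Synthetic example data (hypothetical column names).
rng = random.Random(0)
t = [rng.gauss(0, 1) for _ in range(300)]
u = [rng.gauss(0, 1) for _ in range(300)]
noisy = lambda base: [b + rng.gauss(0, 0.1) for b in base]
data = {
    "spend_a": noisy(t), "spend_b": noisy(t), "spend_c": noisy(t),
    "calls_a": noisy(u), "calls_b": noisy(u),
    "mostly_missing": [None] * 290 + [1.0] * 10,
    "constant": [7.0] * 300,
}
screened = drop_near_constant(drop_sparse(data))
chosen = select_by_factors(screened, m=3)
print(sorted(screened), chosen)
```

On this synthetic data the two screening steps drop `mostly_missing` and `constant`, and the selection should return one `spend_*` and one `calls_*` variable, since two factors already explain more than 90% of the variation captured by the three factors.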

Cluster of Variable Technique

This technique splits the variables into two groups at each step on the basis of their correlation with each other. Variables within the same group have a higher correlation with each other than with variables in the other group (the ‘between-group’ correlation). We can impose a condition on whether a specific subgroup needs to be split further, based on a cut-off for the second-highest eigenvalue of that subgroup. The cut-off for the eigenvalue is typically chosen between 0.5 and 0.8. Once the splitting has converged, you can select one or two variables from each final (child) group for modeling.
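The splitting procedure above can be sketched as follows. This is a simplified illustration under stated assumptions, not the exact procedure the text has in mind: the splitting rule (rotate the first two principal components of the group towards simple structure by maximising the sum of fourth powers of the loadings, then assign each variable to the component it loads on more strongly) is one plausible choice, and the function names, the 0.7 cut-off and the synthetic data are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equally long numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def top_eigenpairs(matrix, m, iters=500):
    """Largest m eigenpairs of a symmetric correlation matrix (power iteration + deflation)."""
    a = [row[:] for row in matrix]
    n = len(a)
    pairs = []
    for _ in range(m):
        v = [1.0 + 0.01 * i for i in range(n)]  # arbitrary non-zero start vector
        for _ in range(iters):
            w = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break
            v = [x / norm for x in w]
        av = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
        lam = sum(x * y for x, y in zip(v, av)) / sum(x * x for x in v)
        pairs.append((lam, v))
        for i in range(n):  # deflate: remove the component just found
            for j in range(n):
                a[i][j] -= lam * v[i] * v[j]
    return pairs


def split(loadings):
    """Rotate two loading columns (1-degree grid) and assign each variable to the stronger one."""
    best_q, best_groups = -1.0, None
    for step in range(90):
        c, s = math.cos(math.radians(step)), math.sin(math.radians(step))
        rot = [(c * a + s * b, -s * a + c * b) for a, b in loadings]
        q = sum(a ** 4 + b ** 4 for a, b in rot)  # larger means simpler structure
        if q > best_q:
            best_q = q
            best_groups = [0 if abs(a) >= abs(b) else 1 for a, b in rot]
    return best_groups


def cluster_variables(data, max_second_eig=0.7):
    """Keep splitting groups in two while a group's second eigenvalue exceeds the cut-off."""
    done, todo = [], [list(data)]
    while todo:
        names = todo.pop()
        if len(names) < 2:
            done.append(names)
            continue
        corr = [[pearson(data[a], data[b]) for b in names] for a in names]
        (l1, v1), (l2, v2) = top_eigenpairs(corr, 2)
        if l2 <= max_second_eig:
            done.append(names)  # group is homogeneous enough: stop splitting
            continue
        loadings = [(v1[i] * math.sqrt(l1), v2[i] * math.sqrt(l2))
                    for i in range(len(names))]
        groups = split(loadings)
        left = [n for n, g in zip(names, groups) if g == 0]
        right = [n for n, g in zip(names, groups) if g == 1]
        if not left or not right:
            done.append(names)
            continue
        todo += [left, right]
    return done


# Synthetic example data (hypothetical column names).
rng = random.Random(0)
t = [rng.gauss(0, 1) for _ in range(300)]
u = [rng.gauss(0, 1) for _ in range(300)]
noisy = lambda base: [b + rng.gauss(0, 0.1) for b in base]
data = {"spend_a": noisy(t), "spend_b": noisy(t), "spend_c": noisy(t),
        "calls_a": noisy(u), "calls_b": noisy(u)}
clusters = cluster_variables(data)
print(clusters)
```

The analyst would then pick one or two representatives from each returned group; how to pick them is left open here, as it is in the text.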

● ● ●

Data mining methods simplify the extraction of key insights from a huge database. They offer the possibility of starting the analysis from any given point in it. However, without proper methods and techniques we may never be able to do so. Variable reduction techniques greatly help both in handling huge data and in reducing model development time. And in the bargain, all of this is accomplished without sacrificing the quality of the model. Identifying the right technique becomes all the easier with a better understanding of the data.

With techniques like these we, at Cequity, are able to combine data & technology, and build actionable analytical marketing services to accelerate ROI-driven, real-time customer-engaged marketing. Touch base with us to learn more…

● ● ●

Method of Correlation

This technique is very useful in prediction problems, where we have one response variable and a set of predictors. We can also first use either of the two methods above to reduce the number of predictors in a first stage and then use this method for further reduction. Suppose the response variable is Y and the predictors are X1, X2, … , Xn. Calculate the correlation matrix for all predictors together with Y. We then impose a condition on the correlation value: whenever the correlation between two predictors is higher than some specific value, say r, we keep only one of the two. That is, if r_ij, the correlation between Xi and Xj, is greater than r, we keep Xi if r_yi > r_yj, where r_yi is the correlation between Y and Xi, and keep Xj otherwise. In practice r is generally chosen in the range 0.75 to 0.9.

Note: If you feel that you still have too many variables for the model and need to reduce them before the actual modeling, you can do so on the basis of the VIF (variance inflation factor) of each predictor, obtained when performing a regression of Y on the predictors. Remove the variables with a VIF higher than 2.5, removing them one at a time.
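The method of correlation and the VIF-based pruning from the note can be sketched as below. This is a minimal illustration under assumptions: the function names, the synthetic variables and the choice r = 0.8 are hypothetical, correlations are compared in absolute value (the text compares them as written), and the VIF of each predictor is taken as the corresponding diagonal entry of the inverse of the predictors' correlation matrix, which equals 1 / (1 − R²) from regressing that predictor on the others.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equally long numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_filter(y, predictors, r=0.8):
    """For each predictor pair correlated above r, keep the one more correlated with y."""
    names = list(predictors)
    r_y = {n: abs(pearson(y, predictors[n])) for n in names}
    dropped = set()
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if a in dropped or b in dropped:
                continue
            if abs(pearson(predictors[a], predictors[b])) > r:
                dropped.add(b if r_y[a] >= r_y[b] else a)
    return [n for n in names if n not in dropped]


def invert(matrix):
    """Gauss-Jordan inverse with partial pivoting (fails on exactly collinear predictors)."""
    n = len(matrix)
    aug = [row[:] + [float(i == j) for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(aug[k][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for k in range(n):
            if k != col:
                f = aug[k][col]
                aug[k] = [a - f * b for a, b in zip(aug[k], aug[col])]
    return [row[n:] for row in aug]


def vif_prune(predictors, names, max_vif=2.5):
    """Drop the predictor with the highest VIF, one at a time, until all are <= max_vif."""
    names = list(names)
    while len(names) > 1:
        corr = [[pearson(predictors[a], predictors[b]) for b in names] for a in names]
        inv = invert(corr)
        vifs = [inv[i][i] for i in range(len(names))]
        worst = max(range(len(names)), key=lambda i: vifs[i])
        if vifs[worst] <= max_vif:
            break
        del names[worst]
    return names


# Synthetic example data (hypothetical variable names).
rng = random.Random(0)
t = [rng.gauss(0, 1) for _ in range(500)]
u = [rng.gauss(0, 1) for _ in range(500)]
y = [2 * a + b + rng.gauss(0, 0.5) for a, b in zip(t, u)]
predictors = {
    "spend_3m": [a + rng.gauss(0, 0.1) for a in t],
    "spend_6m": [a + rng.gauss(0, 0.5) for a in t],           # noisier copy of spend_3m
    "calls": [b + rng.gauss(0, 0.1) for b in u],
    "activity_score": [a + b + rng.gauss(0, 0.3) for a, b in zip(t, u)],
}
kept = correlation_filter(y, predictors, r=0.8)
final = vif_prune(predictors, kept)
print(kept, final)
```

Here `spend_6m` is removed by the pairwise rule because it is highly correlated with `spend_3m` and less correlated with `y`. `activity_score` passes the pairwise rule but is close to the sum of two other predictors, so it shows up only through its VIF and is removed in the second stage.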

Cequity Solutions Pvt. Ltd.

Reach us at 105-106, 1st Floor, Anand Estate, 189-A, Sane Guruji Marg, Mahalaxmi, Mumbai-400 011, India

Phone: +91 22-43453800 Fax: +91 22-43453840

For more case studies, white papers and presentations log on to www.cequitysolutions.com

Or Write to info@cequitysolutions.com

For the latest thinking in Analytical Marketing, check out our blog at blog.cequitysolutions.com
