ON KNOWLEDGE REPRESENTATION USING
SEMANTIC NETWORKS AND SANSKRIT

S.N. Srihari, W.J. Rapaport, D. Kumar

87-03 February 1987

Department of Computer Science

State University of New York at Buffalo

226 Bell Hall

Buffalo, New York 14260

This work was supported in part by the National Science Foundation under grant no. IST-8504713, and in part by the Air Force Systems Command, Rome Air Development Center, Griffiss Air Force Base, NY 13441 and the Air Force Office of Scientific Research, Bolling AFB, DC 20332 under contract no.

ON KNOWLEDGE REPRESENTATION USING SEMANTIC NETWORKS
AND SANSKRIT¹

Sargur N. Srihari, William J. Rapaport, and Deepak Kumar

Department of Computer Science

State University of New York at Buffalo

Buffalo, NY 14260

U.S.A.

ABSTRACT

The similarity between the semantic network method of knowledge representation in artificial intelligence and shastric Sanskrit was recently pointed out by Briggs. As a step towards further research in this field, we give here an overview of semantic networks and natural-language understanding based on semantic networks. It is shown that linguistic case frames are necessary for semantic network processing and that Sanskrit provides such case frames. Finally, a Sanskrit-based semantic network representation is proposed as an interlingua for machine translation.

¹ This material is based in part upon work supported by the National Science Foundation under Grant No. IST-8504713 (Rapaport) and in part by work supported by the Air Force Systems Command, Rome Air Development Center, Griffiss Air Force Base, NY 13441-5700, and the Air Force Office of Scientific Research, Bolling AFB, DC 20332 under contract No. F30602-85-C-0008 (Srihari).

1. INTRODUCTION

Computational linguistics is a subfield of artificial intelligence (AI) concerned with the development of methodologies and algorithms for processing natural language by computer. Methodologies for computational linguistics are largely based on linguistic theories, both traditional and modern. Recently, there has been a proposal to utilize a traditional method, viz., the shastric Sanskrit method of analysis (Briggs, 1985), as a knowledge representation formalism for natural-language processing. The proposal is based on the perceived similarity between a commonly used method of knowledge representation in AI, viz., semantic networks, and the shastric Sanskrit method, which is remarkably unambiguous.

The influence of Sanskrit on traditional Western linguistics is acknowledged to be significant (Gelb, 1985). While linguistic traditions such as the Mesopotamian, Chinese, and Arabic are largely enmeshed with their particularities, Sanskrit has had at least three major influences. First, the unraveling of Indo-European languages in comparative linguistics is attributed to the discovery of Sanskrit by Western linguists. Second, Sanskrit provides a phonetic analysis method which is vastly superior to the Western phonetic tradition, and its discovery led to the systematic study of Western phonetics. Third, and most important to the present paper, the rules of analysis (e.g., the sutras of Panini) for compound nouns, etc., are very similar to contemporary theories such as those based on semantic networks.

The purpose of this paper is threefold: (i) to describe propositional semantic networks as used in AI, as well as a software system for semantic network processing known as SNePS (Shapiro, 1979), (ii) to describe several case structures that have been proposed for natural-language processing and which are necessary for natural-language understanding based on semantic networks, and (iii) to introduce a proposal for natural-language translation based on shastric Sanskrit and semantic networks as an interlingua.

2. KNOWLEDGE REPRESENTATION USING SEMANTIC NETWORKS

2.1. Semantic Networks

A semantic network is a method of knowledge representation that has associated with it procedures for representing information, for retrieving information from it, and for performing inference with it. There are at least two sorts of semantic networks in the AI literature (see Findler 1979 for a survey). The most common is what is known as an "inheritance hierarchy," of which the best known is probably KL-ONE (cf. Brachman & Schmolze 1985). In an inheritance semantic network, nodes represent concepts, and arcs represent relations between them. For instance, a typical inheritance semantic network might represent the propositions that Socrates is human and that humans are mortal as in Figure 1(a). The interpreters for such systems allow properties to be "inherited," so that the fact that Socrates is mortal does not also have to be stored at the Socrates-node. What is essential, however, is that the representation of a proposition (e.g., that Socrates is human) consists only of separate representations of the individuals (Socrates and the property of being human) linked by a relation arc (the "ISA" arc). That is, propositions are not themselves objects.

[Figure 1 here]

In a propositional semantic network, all information, including propositions, is represented by nodes. The benefit of representing propositions by nodes is that propositions about propositions can be represented with no limit. Thus, for example, the information represented in the inheritance network of Figure 1(a) could (though it need not) be represented as in Figure 1(b); the crucial difference is that the propositional network contains nodes (m3, m5) representing the propositions that Socrates is human and that humans are mortal, thus enabling representations of beliefs and rules about those propositions.
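The contrast between the two kinds of network can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not SNePS itself: the dictionary layout, the function name `inherits`, the node `m6`, and the `BELIEVER`/`BELIEF` arc labels are hypothetical, and concepts are written directly as strings rather than as separate nodes.

```python
# Inheritance hierarchy: propositions exist only as ISA arcs between nodes.
isa = {"Socrates": "human", "human": "mortal"}

def inherits(node, concept):
    """Follow ISA arcs upward from node; True if concept is reached."""
    while node in isa:
        node = isa[node]
        if node == concept:
            return True
    return False

# Propositional network: each proposition is itself a node with labeled arcs.
# m3: Socrates is human; m5: humans are mortal.
nodes = {
    "m3": {"MEMBER": "Socrates", "CLASS": "human"},
    "m5": {"SUBCLASS": "human", "SUPERCLASS": "mortal"},
}

# Because m3 is a node, another proposition can point at it.
# Node m6 and its arc labels are hypothetical, chosen for the example.
nodes["m6"] = {"BELIEVER": "Plato", "BELIEF": "m3"}

print(inherits("Socrates", "mortal"))  # True
print(nodes[nodes["m6"]["BELIEF"]])
```

In the first structure there is nothing for a belief to point at, since "Socrates is human" is only an arc; in the second, `m6` can refer to `m3` like any other node.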

2.2. SNePS

SNePS, the Semantic Network Processing System, is a knowledge-representation and reasoning software system based on propositional semantic networks. It has been used to model a cognitive agent's understanding of natural language, in particular, English (Shapiro 1979; Maida & Shapiro 1982; Shapiro & Rapaport 1986, 1987; Rapaport 1986). SNePS is implemented in the LISP programming language and currently runs in Unix- and LISP-machine environments.

Arcs merely form the underlying syntactic structure of SNePS. This is embodied in the restriction that one cannot add an arc between two existing nodes. That would be tantamount to telling SNePS a proposition that is not represented as a node. Another restriction is the Uniqueness Principle: There is a one-to-one correspondence between nodes and represented concepts. This principle guarantees that nodes will be shared whenever possible and that nodes represent intensional objects (Shapiro & Rapaport 1987).
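Both restrictions can be made concrete with a small sketch. The class below is a hypothetical toy, not the SNePS implementation: the names `Network`, `base_node`, and `build`, and the `b`/`m` numbering scheme, are assumptions for illustration. Arcs can only come into existence together with a new molecular node, and asking twice for the same concept or the same arc set returns the same node.

```python
class Network:
    """Toy network: arcs are created only when a new molecular node is built."""

    def __init__(self):
        self.base = {}        # concept name -> base node id
        self.molecular = {}   # frozen arc set -> molecular node id
        self.arcs = {}        # molecular node id -> {label: target node id}

    def base_node(self, concept):
        """Uniqueness Principle: one node per concept, reused on every request."""
        if concept not in self.base:
            self.base[concept] = f"b{len(self.base) + 1}"
        return self.base[concept]

    def build(self, **arcs):
        """Create (or find) the molecular node that has exactly these arcs."""
        key = frozenset(arcs.items())
        if key not in self.molecular:
            node = f"m{len(self.molecular) + 1}"
            self.molecular[key] = node
            self.arcs[node] = dict(arcs)
        return self.molecular[key]


net = Network()
socrates = net.base_node("Socrates")
human = net.base_node("human")
p1 = net.build(MEMBER=socrates, CLASS=human)
p2 = net.build(CLASS=human, MEMBER=socrates)   # same proposition, same node
print(p1 == p2, net.base_node("Socrates") == socrates)
```

There is deliberately no method for adding an arc between two nodes that already exist; the only way to relate them is to build a further node.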

SNePS nodes that only have arcs pointing to them are considered to be unstructured or atomic. They include: (1) sensory nodes, which (when SNePS is being used to model a cognitive agent) represent interfaces with the external world (in the examples that follow, they represent utterances); (2) base nodes, which represent individual concepts and properties; and (3) variable nodes, which represent arbitrary individuals (Fine 1983) or arbitrary propositions.

Molecular nodes, which have arcs emanating from them, include: (1) structured individual nodes, which represent structured individual concepts or properties (i.e., concepts and properties represented in such a way that their internal structure is exhibited); for an example, see Section 3, below; and (2) structured proposition nodes, which represent propositions; those with no incoming arcs represent beliefs of the system. (Note that structured proposition nodes can also be considered to be structured individuals.) Proposition nodes are either atomic (representing atomic propositions) or are rule nodes. Rule nodes represent deduction rules and are used for node-based deductive inference (Shapiro 1978; Shapiro & McKay 1980; McKay & Shapiro 1981; Shapiro, Martins, & McKay 1982). For each of the three categories of molecular nodes (structured individuals, atomic propositions, and rules), there are constant nodes of that category and pattern nodes of that category representing arbitrary entities of that category.

There are a few built-in arc labels, used mostly for rule nodes. Paths of arcs can be defined, allowing for path-based inference, including property inheritance within generalization hierarchies (Shapiro 1978, Srihari 1981). All other arc labels are defined by the user, typically at the beginning of an interaction with SNePS. In fact, since most arcs are user-defined, users are obligated to provide a formal syntax and semantics for their SNePS networks. We provide some examples, below.

Syntax and Semantics of SNePS

In this section, we give the syntax and semantics of the nodes and arcs used in the interaction. (A fuller presentation, together with the rest of the conversation, is in Shapiro & Rapaport 1986, 1987.)

(Def. 1) A node dominates another node if there is a path of directed arcs from the first node to the second node.

(Def. 2) A pattern node is a node that dominates a variable node.

(Def. 3) An individual node is either a base node, a variable node, or a structured constant or pattern individual node.

(Def. 4) A proposition node is either a structured proposition node or an atomic variable node representing an arbitrary proposition.
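Definitions 1 and 2 amount to graph reachability, which the following sketch spells out. The network encoding (a dictionary from node to labeled outgoing arcs), the node names, and the function names are assumptions made for the example; the sketch also assumes that a path must contain at least one arc, so that a node does not dominate itself unless it lies on a cycle.

```python
def dominates(arcs, start, goal):
    """Def. 1: True if a path of one or more directed arcs leads from start to goal."""
    stack = list(arcs.get(start, {}).values())
    seen = set()
    while stack:
        node = stack.pop()
        if node == goal:
            return True
        if node not in seen:
            seen.add(node)
            stack.extend(arcs.get(node, {}).values())
    return False

def is_pattern(arcs, node, variables):
    """Def. 2: a pattern node dominates at least one variable node."""
    return any(dominates(arcs, node, v) for v in variables)

# Hypothetical network: m1 says "v1 is a member of class b1"; m2 is about m1.
arcs = {
    "m1": {"MEMBER": "v1", "CLASS": "b1"},
    "m2": {"OBJECT": "m1", "PROPERTY": "b2"},
}
variables = {"v1"}

print(dominates(arcs, "m2", "v1"))        # True: m2 -> m1 -> v1
print(is_pattern(arcs, "b1", variables))  # False: b1 has no outgoing arcs
```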

(Syn.1) If w is a(n English) word and i is an identifier not previously used, then

i --LEX--> w

is a network, w is a sensory node, and i is a structured individual node.

(Sem.1) i is the object of thought corresponding to the utterance of w.

(Syn.2) If either t1 and t2 are identifiers not previously used, or t1 is an identifier not previously used and t2 is a temporal node, then

t1 --BEFORE--> t2

is a network and t1 and t2 are temporal nodes, i.e., individual nodes representing times.

(Sem.2) t1 and t2 are objects of thought corresponding to two times, the former occurring before the latter.

(Syn.3) If i and j are individual nodes and m is an identifier not previously used, then

j <--PROPERTY-- m --OBJECT--> i

is a network and m is a structured proposition node.

(Sem.3) m is the object of thought corresponding to the proposition that i has the property j.

(Syn.4) If i and j are individual nodes and m is an identifier not previously used, then

j <--PROPER-NAME-- m --OBJECT--> i

is a network and m is a structured proposition node.

(Sem.4) m is the object of thought corresponding to the proposition that i's proper name is j. (j is the object of thought that is i's proper name; its expression in English is represented by a node at the head of a LEX-arc emanating from j.)

(Syn.5) If i and j are individual nodes and m is an identifier not previously used, then

j <--CLASS-- m --MEMBER--> i

is a network and m is a structured proposition node.

(Sem.5) m is the object of thought corresponding to the proposition that i is a (member of class) j.

(Syn.6) If i and j are individual nodes and m is an identifier not previously used, then

j <--SUPERCLASS-- m --SUBCLASS--> i

is a network and m is a structured proposition node.

(Sem.6) m is the object of thought corresponding to the proposition that (the class of) i's are (a subclass of the class of) j's.

(Syn.7) If i1, i2, i3 are individual nodes, t1, t2 are temporal nodes, and m is an identifier not previously used, then

[Figure 2 here]

is a network and m is a structured proposition node.

(Sem.7) m is the object of thought corresponding to the proposition that agent i1 performs act i2 with respect to i3 starting at time t1 and ending at time t2, where t1 is before t2.
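The case frames of (Syn.3) to (Syn.6) can be read as a small schema: each frame fixes the set of arc labels that a new proposition node must have. The sketch below encodes that reading and adds a simple form of path-based inference over MEMBER/CLASS and SUBCLASS/SUPERCLASS arcs. The function names `build` and `classes_of`, the frame names, and the sample nodes are hypothetical; keyword arguments use an underscore where the arc label has a hyphen.

```python
# Arc labels of the case frames defined in (Syn.3) to (Syn.6).
CASE_FRAMES = {
    "property":    {"OBJECT", "PROPERTY"},
    "proper-name": {"OBJECT", "PROPER-NAME"},
    "member":      {"MEMBER", "CLASS"},
    "subclass":    {"SUBCLASS", "SUPERCLASS"},
}

network = {}  # proposition node id -> {arc label: target node}

def build(frame, **arcs):
    """Add a new structured proposition node using one of the case frames."""
    labeled = {name.replace("_", "-"): target for name, target in arcs.items()}
    if set(labeled) != CASE_FRAMES[frame]:
        raise ValueError(f"{frame} frame needs arcs {sorted(CASE_FRAMES[frame])}")
    node = f"m{len(network) + 1}"
    network[node] = labeled
    return node

def classes_of(individual):
    """Classes of an individual, following MEMBER/CLASS then SUBCLASS/SUPERCLASS."""
    found = [p["CLASS"] for p in network.values() if p.get("MEMBER") == individual]
    for cls in found:  # the list grows while it is scanned, giving the closure
        for p in network.values():
            if p.get("SUBCLASS") == cls and p["SUPERCLASS"] not in found:
                found.append(p["SUPERCLASS"])
    return found

build("member", MEMBER="b1", CLASS="dog")
build("subclass", SUBCLASS="dog", SUPERCLASS="animal")
build("property", OBJECT="b1", PROPERTY="yellow")
print(classes_of("b1"))  # ['dog', 'animal']
```

The membership of `b1` in the class of animals is never stored; it is read off a path of arcs, which is the kind of inheritance that path-based inference provides.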

3. NATURAL-LANGUAGE UNDERSTANDING USING SEMANTIC NETWORKS

Semantic networks can be used for natural-language understanding as follows. The user inputs an English sentence to an augmented-transition-network (ATN) grammar (Woods 1970, Shapiro 1982). The parsing component of the grammar updates a previously existing knowledge base containing semantic networks (or builds a new knowledge base, if there was none before) to represent the system's understanding of the input sentence. Note that this is semantic analysis, not syntactic parsing. The newly built node representing the proposition (or a previously existing node, if the input sentence repeated information already stored in the knowledge base) is then passed to the generation component of the ATN grammar, which generates an English sentence expressing the proposition in the context of the knowledge base. It should be noted that there is a single ATN parsing-generating grammar; the generation of an English output sentence from a node is actually a process of "parsing" the node into English. If the input sentence expresses a question, information-retrieval and inferencing packages are used to find or deduce an answer to the question. The node representing the answer is then passed to the generation grammar and expressed in English.

Here is a sample conversation with the SNePS system, together with the networks that are built as a result. User input is on lines with the :-prompt; the system's output is on the lines that follow. Comments are enclosed in brackets.

: Young Lucy petted a yellow dog
I understand that young Lucy petted a yellow dog

[The system is told something, which it now "believes." Its entire belief structure consists of nodes b1, m1-m13, and the corresponding sensory nodes (Figure 3). The node labeled "now" represents the current time, so the petting is clearly represented as being in the past. The system's response is "I understand that" concatenated with its English description of the proposition just entered.]

: What is yellow
a dog is yellow

[This response shows that the system actually has some beliefs; it did not just parrot back the above sentence. The knowledge base is not updated, however.]

: Dogs are animals
I understand that dogs are animals

[The system is told a small section of a class hierarchy; this information does update the knowledge base.]

[Figure 3 here]

There are three points to note about the use of SNePS for natural-language understanding. First, the system can "understand" an English sentence and express its understanding; this is illustrated by the first part of the conversation above. Second, the system can answer questions about what it understands; this is illustrated by the second part. Third, the system can incorporate new information into its knowledge base; this is illustrated by the third part.

Case Frames

Implicit in such a language understanding system are so-called case frames. We give a brief summary here; for a more thorough treatment see Winograd (1983).

Case-based deep structure analysis of English was suggested by Fillmore (1968). The surface structure of English relies only on the order of constituents and prepositions in a clause to indicate role. Examples are:

Your dog just bit my mother.

My mother just bit your dog.

In Russian, Sanskrit, etc., explicit markings are used to represent relationships between participants. Examples in Russian, which uses six cases (nominative, genitive, dative, accusative, instrumental, and prepositional), are:

Professor uchenika tseloval (the professor kissed the student).

Professora uchenik tseloval (the student kissed the professor).

The extremely limited surface case system of English led Fillmore to suggest cases for English deep structure as follows: Agentive (animate instigator of action), Instrumental (inanimate force or object involved), Dative (animate being affected by action), Factitive (object resulting from action), Locative (location or orientation), and Objective (everything else). For example, consider the sentence:

John opened the door with the key.

Its case analysis yields: Agentive = John, Objective = the door, Instrumental = the key.
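A case analysis of this kind maps naturally onto a record with one slot per case. The following sketch shows the analysis of the example sentence as such a record; the `CaseFrame` class and its `fill` method are hypothetical names introduced for illustration, and no parser is implied: the roles are assigned by hand.

```python
from dataclasses import dataclass, field

# Fillmore's deep-structure cases as listed in the text.
CASES = ("Agentive", "Instrumental", "Dative", "Factitive", "Locative", "Objective")

@dataclass
class CaseFrame:
    verb: str
    roles: dict = field(default_factory=dict)

    def fill(self, case, filler):
        """Assign a filler to a case, rejecting labels outside Fillmore's list."""
        if case not in CASES:
            raise ValueError(f"unknown case: {case}")
        self.roles[case] = filler
        return self

opened = (CaseFrame("open")
          .fill("Agentive", "John")
          .fill("Objective", "the door")
          .fill("Instrumental", "the key"))

# Once roles are explicit, the order of constituents no longer carries meaning.
for case in CASES:
    if case in opened.roles:
        print(f"{case} = {opened.roles[case]}")
```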

Schank (1975) developed a representation for meaning (conceptual dependency) based on language-independent conceptual relationships between objects and actions: case roles filled by objects (actor, object, attribuant, recipient), case roles filled by conceptualizations (instrument, attribute, ...), and case roles filled by other conceptual categories (time, location, state). For example:

John handed Mary a book.

has the analysis: Actor = John, Donor = John, Recipient = Mary, Object = book, Instrument = an action of physical motion with actor = John and object = hand.

4. SANSKRIT CASE FRAMES AND SEMANTIC NETWORKS

In the previous section we noted that natural-language understanding based on semantic networks involves determining what case frames will be used. The current set of case frames used in SNePS is not intended to be a complete set. Thus, we propose here that shastric Sanskrit case frames, implemented as SNePS networks, make an ideal knowledge-representation "language."

There are two distinct advantages to the use of classical Sanskrit analysis techniques. First, and of greatest importance, it is not an ad hoc method. As Briggs (1985) has observed, Sanskrit grammarians have developed a thorough system of semantic analysis. Why should researchers in knowledge representation and natural-language understanding reinvent the wheel? (cf. Rapaport 1986). Thus, we propose the use of case frames based on Sanskrit grammatical analysis in place of (or, in some cases, in addition to) the case frames used in current SNePS natural-language research.

Second, and implicit in the first advantage, Sanskrit grammatical analyses are easily implementable in SNePS. This should not be surprising. The Sanskrit analyses are case-based analyses, similar, for example, to those of Fillmore (1968). Propositional semantic networks such as SNePS are based on such analyses and, thus, are highly suitable symbolisms for implementing them.

As an example, consider the analysis of the following English translation of a Sanskrit sentence (from Briggs 1985):

Out of friendship, Maitra cooks rice for Devadatta in a pot over a fire.

Briggs offers the following set of "triples," that is, a linear representation of a semantic network for this sentence (Briggs 1985: 37, 38):

cause, event, friendship

friendship, object1, Devadatta

friendship, object2, Maitra

cause, result, cook

cook, agent, Maitra

cook, recipient, Devadatta

cook, instrument, fire

cook, object, rice

cook, on-loc, pot

But what is the syntax and semantics of this knowledge-representation scheme? It appears to be rather ad hoc. Of course, Briggs only introduces it in order to compare it with the Sanskrit grammatical analysis, so let us concentrate on that, instead. Again using triples, this is:

cook, agent, Maitra

cook, object, rice

cook, instrument, fire

cook, recipient, Devadatta

cook, because-of, friendship

friendship, Maitra, Devadatta

cook, locality, pot

Notice that all but the penultimate triple begin with cook. The triple beginning with friendship can be thought of as a structured individual: the friendship between Maitra and Devadatta. Implemented in SNePS, this becomes the network shown in Figure 4. Node m11 represents the structured individual consisting of the relation of friendship holding between Maitra and Devadatta. Node m13 represents the proposition that an agent (named Maitra) performs an act (cooking) directed to an object (rice), using an instrument (fire), for a recipient (named Devadatta), at a locality (a pot), out of a cause (the friendship between the agent and the recipient).
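The step from the Sanskrit-based triples to a single case frame with an embedded structured individual can be sketched as follows. This is an illustration only, not the SNePS network of Figure 4: the function `to_frames` and the `between` key used for the two parties to the friendship are hypothetical, and proper names are stored directly rather than through PROPER-NAME and LEX arcs.

```python
# The Sanskrit-based triples from the text: (head, relation, filler).
triples = [
    ("cook", "agent", "Maitra"),
    ("cook", "object", "rice"),
    ("cook", "instrument", "fire"),
    ("cook", "recipient", "Devadatta"),
    ("cook", "because-of", "friendship"),
    ("friendship", "Maitra", "Devadatta"),
    ("cook", "locality", "pot"),
]

def to_frames(triples):
    """Group triples by head; the friendship triple becomes a structured individual."""
    frames = {}
    for head, second, third in triples:
        frame = frames.setdefault(head, {})
        if head == "friendship":
            # This triple lists the two parties rather than a role and a filler.
            frame["between"] = (second, third)
        else:
            frame[second] = third
    # Replace the bare word "friendship" by the structured individual itself.
    for frame in frames.values():
        for role, filler in frame.items():
            if isinstance(filler, str) and filler in frames:
                frame[role] = frames[filler]
    return frames

cook = to_frames(triples)["cook"]
print(cook["because-of"]["between"])  # ('Maitra', 'Devadatta')
print(sorted(cook))
```

The resulting `cook` frame has one slot per case, and its cause slot holds a structure rather than a word, which mirrors the roles of nodes m13 and m11 described in the text.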

Such an analysis can, presumably, be algorithmically derived from a Sanskrit sentence and can be algorithmically transformed back into a Sanskrit sentence. Since an English sentence, for instance, can also presumably be analyzed in this way (at the very least, sentences of Indo-European languages should be easily analyzable in this fashion), we have the basis for an interlingual machine-translation system grounded in a well-established semantic theory.

[Figure 4 here]

5. INTERLINGUAL MACHINE TRANSLATION

The possibility of translating natural-language texts using an intermediate common language was suggested by Warren Weaver (1949). Translation using a common language (an "interlingua") is a two-stage process: from source language to an interlingua, and from the interlingua to the target language (Figure 5). This approach is characteristic of a system in which representation of the "meaning" of the source-language input is intended to be independent of any language, and in which this same representation is used to synthesize the target-language output. In an alternative approach (the "transfer" approach), the results of source text analysis are converted into a corresponding representation for target text, which is then used for output. Figure 6 shows how the interlingua (indirect) approach compares to other (direct and transfer) approaches to machine translation (MT). The interlingua approach to translation was heavily influenced by formal linguistic theories (Hutchins 1982). This calls for an interlingua to be formal, language-independent, and "adequate" for knowledge representation.
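The two-stage shape of interlingual translation can be shown with a deliberately tiny sketch. Everything in it is a toy assumption: the "languages" differ only in word order (subject-verb-object versus verb-final), the analyzer handles only three-word sentences, and the function names are hypothetical. The point is structural: the generator sees only the case frame, never the source text.

```python
def analyze_svo(text):
    """Stage 1 (analysis): a three-word 'agent verb object' sentence to a frame."""
    agent, act, obj = text.split()
    return {"agent": agent, "act": act, "object": obj}

def generate_svo(frame):
    """Stage 2 (generation): realize the frame in agent-verb-object order."""
    return f'{frame["agent"]} {frame["act"]} {frame["object"]}'

def generate_sov(frame):
    """Stage 2 (generation): realize the frame with verb-final word order."""
    return f'{frame["agent"]} {frame["object"]} {frame["act"]}'

GENERATORS = {"svo": generate_svo, "sov": generate_sov}

def translate(text, target):
    frame = analyze_svo(text)          # source language -> interlingua
    return GENERATORS[target](frame)   # interlingua -> target language

print(translate("Maitra cooks rice", "sov"))  # Maitra rice cooks
```

Adding a further target in this arrangement means adding one generator to `GENERATORS`; the analyzer is left untouched.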

[Figures 5 and 6 here]

Various proposals for interlinguas have included the use of formalized natural language, artificial "international" languages like Esperanto, and various symbolic representations. Most prior work on interlinguas has centered on the representation of the lexical content of text. Bennet et al. (1986) point out that a large portion of syntactic structures, even when reduced to "canonical form," remain too language-specific to act as an interlingua representation. Thus, major disadvantages of an interlingua-based system result from the practical difficulty of actually defining a language-free interlingua representation.

Besides, none of the existing MT systems use a significant amount of semantic information (Slocum 1985). Thus, the success of an interlingua depends on the nature of the interlingua as well as the analysis rendered on the source text to obtain its interlingual representation. This made the interlingua approach too ambitious, and researchers have inclined more towards a transfer approach.

It has been argued that analyses of natural-language sentences in semantic networks and in Sanskrit grammar are remarkably similar (Briggs 1985). Thus we propose an implementation of Sanskrit in a semantic network to be used as an interlingua for MT. As an interlingua, Sanskrit fulfills the basic requirements of being formal, language-independent, and a powerful medium for representing meaning.

REFERENCES

[1] Bennet, P. A.; Johnson, R. L.; McNaught, John; Pugh, Jeanette; Sager, J. C.; and Somers, Harold L. (1986), Multilingual Aspects of Information Technology, (Hampshire, Eng.: Gower).

[2] Brachman, Ronald J., & Levesque, Hector J. (1985), Readings in Knowledge Representation, (Los Altos, CA: Morgan Kaufmann).

[3] Brachman, Ronald J., & Schmolze, James G. (1985), "An Overview of the KL-ONE Knowledge Representation System," Cognitive Science, 9: 171-216.

[4] Briggs, Rick (1985), "Knowledge Representation in Sanskrit and Artificial Intelligence," AI Magazine, 6.1 (Spring 1985): 32-39.

[5] Fillmore, Charles (1968), "The Case for Case," in E. Bach & R. T. Harms (eds.), Universals in Linguistic Theory, (Chicago: Holt, Rinehart and Winston): 1-90.

[6] Fine, K. (1983), "A Defence of Arbitrary Objects," Proc. Aristotelian Soc., Supp. Vol. 58: 55-77.

[7] Findler, Nicholas V. (1979), Associative Networks: The Representation and Use of Knowledge by Computers, (New York: Academic Press).

[8] Gelb, Ignace J. (1985), Linguistics, Encyclopedia Britannica Macropedia, Fifteenth Edition.

[9] Hutchins, John W. (1982), "The Evolution of Machine Translation Systems," in V. Lawson (ed.), Practical Experiences of Machine Translation, (Amsterdam: North-Holland): 21-38.

[10] Maida, Anthony S., & Shapiro, Stuart C. (1982), "Intensional Concepts in Propositional Semantic Networks," Cognitive Science, 6: 291-330; reprinted in Brachman & Levesque 1985: 169-89.

[11] McKay, D. P., & Shapiro, Stuart C. (1981), "Using Active Connection Graphs for Reasoning with Recursive Rules," Proc. IJCAI-81, 368-74.

[12] Rapaport, William J. (1986), "Logical Foundations for Belief Representation," Cognitive Science, 10: 371-422.

[13] Schank, Roger C. (1975), Conceptual Information Processing, (Amsterdam: North Holland).

[14] Shapiro, Stuart C. (1978), "Path-Based and Node-Based Inference in Semantic Networks," in D. Waltz (ed.), Tinlap-2: Theoretical Issues in Natural Language Processing, (New York: ACM): 219-25.

[15] Shapiro, Stuart C. (1979), "The SNePS Semantic Network Processing System," in Findler 1979: 179-203.

[16] Shapiro, Stuart C. (1982), "Generalized Augmented Transition Network Grammars For Generation From Semantic Networks," American Journal of Computational Linguistics, 8: 12-25.

[17] Shapiro, Stuart C.; Martins, J.; & McKay, D. P. (1982), "Bi-Directional Inference," Proc. 4th Annual Conf. Cognitive Science Soc., (U. Michigan): 90-93.

[18] Shapiro, Stuart C., & McKay, D. P. (1980), "Inference with Recursive Rules," Proc. AAAI-80, 151-53.

[19] Shapiro, Stuart C., & Rapaport, William J. (1987), "SNePS Considered as a Fully Intensional Propositional Semantic Network," in G. McCalla & N. Cercone (eds.), The Knowledge Frontier, (New York: Springer-Verlag): 262-315.

[20] Shapiro, Stuart C., & Rapaport, William J. (1986), "SNePS Considered as a Fully Intensional Propositional Semantic Network," Proc. National Conference on Artificial Intelligence (AAAI-86; Philadelphia), Vol. 1 (Los Altos, CA: Morgan Kaufmann): 278-83.

[21] Slocum, Jonathan (1985), "A Survey of Machine Translation: Its History, Current Status, and Future Prospects," Computational Linguistics, 11: 1-17.

[22] Srihari, Rohini K. (1981), "Combining Path-Based and Node-Based Inference in SNePS," Tech. Rep. 183, (SUNY Buffalo Dept. of Computer Science).

[23] Weaver, Warren (1949), "Translation," in W. N. Locke & A. D. Booth (eds.), Machine Translation of Languages, (Cambridge, MA: MIT Press, 1955): 15-23.

[24] Winograd, Terry (1983), Language as a Cognitive Process, (Reading, MA: Addison-Wesley).

[25] Woods, William (1970), "Transition Network Grammars for Natural Language Analysis," Communications of the ACM, 13: 591-606.

SRIHARI. RAPAPORT. & KUMAR 8

SUPERCLASS SUBCLASS

LEX

I

I

I

I

I

I

I

I

I

I

I

I

I

ISA

ISA

SOCRATES

Figur e 1(a). An "ISA" inheritance-hierarchy semantic network

I

I

Figure 1(b). A SNePS propositional semantic network (m3 and mS represent the

propositions that Socrates is human and that humans are mortal, respectively)

BEFORE

ACT

Figure 2. _ l t

-

-

I

_ ,_ _

,

..

now

PROPERTY

LEX

ACT

BEFORE

Figure 3. SNePS network for "Young Lucy petted a yellow dog."

INSTRUMENT

LEX

Figure 4. SNePS network for "Out of friendship, Maitra cooks rice for Devadatta in a pot over a fire."

I

I

~

~ Source Analysis

Generation Target

Text

Text

Figure 5. The Interlingua Approaches

Source Text Target Text

Direct

Transfer

Interlingua

Figure 6. Various Approaches to MT

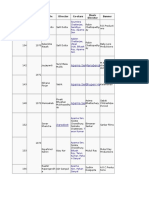

TECHNICAL REPORTS

Following are recent technical reports published by the Department of Computer Science,

University at Buffalo. Reports may be ordered from:

Library Committee

Department of Computer Science

University at Buffalo (SUNY)"

226 Bell Hall

Buffalo, NY 14260

USA

Prices are given as (USA and Canada lather Countries). Payment should be enclosed with

your order (unless we have a free exchange agreement with your institution, in which case

the reports are free). Please make checks payable to "Department of Computer Science".

If your institution is interested in an exchange agreement, please write to the above

address for information.

86-01 A. Rastogi, S.N. Srihari: Recognizing Textual Blocks in Document Images Using the

Hough Transform ($1.001$1.50)

86-02 P. Swaminathan, S.N. Srihari: Document Image Binarizatlon: Second Derivative

Versus Adaptive Thresholding ($1.001$1.50)

86-03 WJ. Rapaport: Philosophy of Artificial 1ntelligence: A Course Outline ($1.001$1.50)

86-04 J.W. de Bakker, J.-J.Ch. Meyer, E.-R. Olderog, J.1. Zucker: Transition Systems, Metric

Spaces and Ready Sets in the Semantics of Uniform Concurrency ($1.001$1.50)

86-05 S.L. Hardt, WJ. Rapaport: Recent and Current AI Research in the Dept. of Com

puter Science, SUNY-Buffalo ($1.001$1.50)

86-06 PJ. Eberlein: On the Schur Decomposition of a Matrix for Parallel Computation

($1.001$1.50)

86-07 R. Bharadhwaj: Proto-Maya: A Distributed System and its Implementation

($2.001$3.00 )

86-08 1. Geller, JJ. Hull: Open House 1986: Current Research by Graduate Students of

the Computer Science Dept; SUNY-Buffalo ($1.001$1.50)

86-09 K. Ebcioglu: An Expert System for Harmonization of Chorales in the Style of J S,

Bach ($3.001$4.00)

86-10 S.c. Shapiro: Symmetric Relations, Iruenslonal Lndioiduals, and Variable Binding

($1.001$1.50)

86-11 Automatic Address Block Location: S.N. Srihari, JJ. Hull, P.W. Palumbo. C. Wang:

($1.001$1.50) Analysis of Images and Statistical Data

86-12 The DUNE S.L. Hardt, D.H. MacFadden, M. Johnson, T. Thomas, S. Wroblewski:

($1.001$1.50) Shell Manual: Version 1

86-13 The Buffalo Font Catalogue, Version 4 ($1.001$1.50) G.L. Sicherman, J.Y. Arrasjid:

86-14 Representing De Re and De Dicto Belief Reports in J.M. Wiebe, WJ. Rapaport:

($1.001$1.50) Discourse and Narrative

86-15 W.J. Rapaport, S.c. Shapiro, J.M. Wiebe: Quasi-Indicators, Knowledge Reports, and

Discourse ($1.001$1.50)

86-16 PJ. Eberlein: Comments on Some ParaUel Jacobi Orderings ($1.00/$1.50)

86-17 PJ. Eberlein : On One-Sided Jacobi Methods for ParaUel Computation ($1.00/$1.50)

86-18 R. Miller, Q.F. Stout: Mesh Computer Algorithms for Computational Geometry

($1.00/$1.50)

86-19 EJ.M. Morgado: Semantic Networks as Abstract Data Types ($3.00/$4.00)

86-20 G.A. Bruder, J.F. Duchan, WJ . Rapaport, E.M. Segal, S.c. Shapiro, D.A. Zubin: Deictic

Centers in Narrative: An Iruerdisciplinary Cognitive-Science Project ($1.00/$1.50)

86-21 Y.H. Jang: Semantics of Recursive Procedures with Parameters and Aliasing

($1.00/$1.50)

86-22 D.S. Martin: Modified Kleene Recursion Theorem Control Structures ($1.00/$1.50)

86-23 P.J. Eberlein: On using the Jacobi Method on the Hypercube ($1.00/$1.50)

86-24 W.J. Rapaport: Syntactic Semantics: Foundations of Computational Natural-Language Understanding ($1.00/$1.50)

87-01 S. Chakravarty, H.B. Hunt III: On Computing Test Vectors for Multiple Faults in Gate Level Combinational Circuits ($1.00/$1.50)

87-02 J. Case: Turing Machines ($1.00/$1.50)

87-03 S.N. Srihari, W.J. Rapaport, D. Kumar: On Knowledge Representation using Semantic Networks and Sanskrit ($1.00/$1.50)
