
International Journal of Electronics and Computer Science Engineering

Available Online at www.ijecse.org

ISSN 2277-1956

Gesture Technology: A Review

Aarti Malik 1, Ruchika 2

1 Department of Electronics and Communication Engineering, I.E.T, Bhaddal, INDIA

2 Department of Electronics and Communication Engineering, I.E.T, Bhaddal, INDIA

1 malik.aarti185@gmail.com, 2 ruchi_khrn@yahoo.co.in

Abstract- Body language comprises two main parts, postures and gestures: a posture is a static position or pose, whereas a gesture is a dynamic hand or body sign. Traditional input devices have limitations, especially for users who are uncomfortable with them. A gesture-based interaction system offers a way around this problem. The main goal of gesture recognition is to create a system that can recognize specific human gestures and use them to convey information or to control devices. This paper gives an introduction to gestures, reviews the types of gestures, and identifies trends in technology, application and usability.

Keywords- Gesture based interaction system, User interfaces, Hand gestures, Applications

I. INTRODUCTION

For decades the keyboard and mouse have been the main input devices, but with the popularity of ubiquitous and ambient devices such as digital TVs and game consoles, options such as grasping virtual objects, hand, head or body gestures, and eye fixation tracking are becoming a necessity. Gestures have always been used in human interaction (waving goodbye is a gesture), but with the advent of human-computer interaction systems their utility has increased. They provide an easy means of interacting with the surrounding environment, especially for people with disabilities who cannot carry out everyday tasks in the traditional way.

A gesture falls into one of two distinct categories: dynamic or static. A dynamic gesture changes over a period of time, whereas a static gesture is observed at a single instant. A waving hand meaning goodbye is an example of a dynamic gesture, and the stop sign is an example of a static gesture. To understand a full message, it is necessary to interpret all the static and dynamic gestures over a period of time. This complex process is called gesture recognition: the process of recognizing and interpreting a continuous stream of sequential gestures from a given set of input data, such as movements of the hands, arms, face, or sometimes head. Elderly and disabled people can also use a gesture-based system to control a PC without any mechanical aid. Gesture-controlled systems draw on different technologies such as cameras, graphics and computer vision.

II. TYPES OF GESTURES

According to Cadoz [1], gestures have three complementary and interdependent functions:
1. The epistemic function, which corresponds to perception. This includes:
- The haptic sense, which combines tactile (touch) and kinesthetic sensations (awareness of the position of the body and limbs), and gives information about size, shape and orientation.
- The proprioceptive sense, which provides information on weight and movement through joint sensors.
2. The ergotic function, which corresponds to actions applied to objects.
3. The semiotic function, which concerns communication. Examples include sign language and gestures accompanying speech.

Gestures are often language- and culture-specific. They can broadly be of the following types [2]:
1. Hand and arm gestures: recognition of hand poses, sign languages, and entertainment applications (allowing children to play and interact in virtual environments).

ISSN 2277-1956/V1N4-2324-2327

2. Head and face gestures: examples include a) nodding or shaking the head, b) direction of eye gaze, c) raising the eyebrows, d) opening and closing the mouth, e) winking, f) flaring the nostrils, and g) looks of surprise, happiness, disgust, fear, sadness, and many others.
3. Body gestures: involvement of full body motion, as in a) tracking the movements of two people having a conversation, b) analyzing the movements of a dancer against the music being played and its rhythm, c) recognizing human gaits for medical rehabilitation and athletic training.

III. GESTURE ACQUISITION TECHNIQUES

A. Hidden Markov Models

This method uses the dynamic aspects of gestures [3]. Its main goal is the recognition of two classes of gestures: deictic and symbolic.
1. Gestures are extracted from a sequence of video images by tracking the skin-colour blobs corresponding to the hand in a body-face space centered on the face of the user.
2. Images are filtered using a fast lookup indexing table of skin-colour pixels in YUV colour space.
3. After filtering, skin-colour pixels are gathered into blobs. Blobs are statistical objects based on the location (x, y) and the colourimetry (Y, U, V) of the skin-colour pixels, and are used to determine homogeneous areas.
4. A skin-colour pixel belongs to the blob that has the same location and colourimetry components.

Deictic gestures are pointing movements towards the left (right) of the body-face space, and symbolic gestures are intended to execute commands (grasp, click, rotate) on the left (right) of the shoulder.
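The filtering and blob-gathering steps above can be illustrated with a minimal Python sketch. It is not the implementation from [3]: the BT.601-style conversion weights, the `U_RANGE` and `V_RANGE` thresholds and the function names are all assumptions made for illustration, and plain 4-connected grouping stands in for the statistical blob model described in the text.

```python
from collections import deque

# Hypothetical chrominance thresholds for skin; real systems learn these from data.
U_RANGE = (90, 125)
V_RANGE = (140, 185)

# Lookup table indexed by (U, V): True where the chrominance counts as skin.
SKIN_LUT = [[U_RANGE[0] <= u <= U_RANGE[1] and V_RANGE[0] <= v <= V_RANGE[1]
             for v in range(256)] for u in range(256)]


def rgb_to_yuv(r, g, b):
    """Convert 8-bit RGB to YUV (BT.601-style weights, U and V offset by 128)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = -0.14713 * r - 0.28886 * g + 0.436 * b + 128
    v = 0.615 * r - 0.51499 * g - 0.10001 * b + 128
    clamp = lambda value: max(0, min(255, int(round(value))))
    return clamp(y), clamp(u), clamp(v)


def skin_mask(image):
    """Filter an image (rows of (r, g, b) tuples) with the lookup table."""
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            _, u, v = rgb_to_yuv(r, g, b)
            mask_row.append(SKIN_LUT[u][v])
        mask.append(mask_row)
    return mask


def find_blobs(mask):
    """Gather skin pixels into blobs using 4-connected breadth-first search."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            seen[y0][x0] = True
            queue = deque([(x0, y0)])
            pixels = []
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            blobs.append(pixels)
    return blobs


if __name__ == "__main__":
    SKIN, BACKGROUND = (200, 150, 120), (30, 60, 200)  # illustrative colours
    image = [
        [SKIN, SKIN, BACKGROUND, BACKGROUND, BACKGROUND],
        [SKIN, SKIN, BACKGROUND, BACKGROUND, SKIN],
        [BACKGROUND, BACKGROUND, BACKGROUND, BACKGROUND, SKIN],
    ]
    print([len(blob) for blob in find_blobs(skin_mask(image))])  # prints [4, 2]
```

Precomputing the table means each pixel costs one colour conversion and one lookup at run time, which is presumably what makes the lookup approach fast.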

B. YUV Color Space and CAMSHIFT Algorithm [4]

This method deals with the recognition of hand gestures and proceeds in five steps:
1. A digital camera records a video stream of hand gestures.
2. Every frame is processed, and skin-colour based segmentation is performed in YUV colour space. The YUV colour system is used to separate intensity from chrominance: Y denotes intensity, while U and V specify the chrominance components.
3. The hand is then separated using the CAMSHIFT algorithm [5]. Since the hand is the largest connected region, it can be segmented from the rest of the body.
4. The position of the hand centroid is calculated in each frame, by first computing the zeroth and first moments and then deriving the centroid from them.
5. The centroid points are joined to form a trajectory. This trajectory shows the path of the hand movement, which completes the hand tracking procedure.
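Steps 4 and 5 can be sketched in a few lines of Python. The sketch assumes the hand has already been segmented into a binary mask per frame (the CAMSHIFT step itself is not shown), and the function names are hypothetical. With zeroth moment M00 (the pixel count) and first moments M10 and M01 (the sums of x and y over hand pixels), the centroid is (M10 / M00, M01 / M00).

```python
def centroid(mask):
    """Return the (x, y) centroid of a binary mask, or None if the mask is empty."""
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                m00 += 1   # zeroth moment: number of hand pixels
                m10 += x   # first moment along x
                m01 += y   # first moment along y
    if m00 == 0:
        return None
    return (m10 / m00, m01 / m00)


def trajectory(masks):
    """Join the per-frame centroids into a trajectory, skipping empty frames."""
    points = (centroid(mask) for mask in masks)
    return [point for point in points if point is not None]


if __name__ == "__main__":
    # Three illustrative frames in which a 2x2 "hand" moves one pixel to the right.
    frames = [
        [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0]],
        [[0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]],
        [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 0, 0]],
    ]
    print(trajectory(frames))  # prints [(0.5, 0.5), (1.5, 0.5), (2.5, 0.5)]
```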

C. Using a Time-of-Flight Camera

This approach uses the x- and y-projections of the image, plus optional depth features, for gesture classification. The system uses a 3-D time-of-flight (TOF) sensor [6] [7], which has the major advantage of simplifying hand segmentation. The gestures used in the system show good separation potential along the two image axes. The algorithm can be divided into five steps:
1. Segmentation of the hand and arm via distance values: the hand and arm are segmented by an iterative seed fill algorithm.
2. Determination of the bounding box: the segmented region is projected onto the x- and y-axes to determine the bounding box of the object.
3. Extraction of the hand.
4. Projection of the hand region onto the x- and y-axes.
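The segmentation, projection and bounding-box steps listed above can be sketched as follows. This is a minimal illustration, not the method of [6]: the depth map, the seed position, the `tolerance` parameter and the rule for growing the region (a neighbour joins if its depth is close to that of the current pixel) are assumptions.

```python
from collections import deque


def seed_fill(depth, seed, tolerance):
    """Grow a region from seed=(x, y) over neighbours with similar depth values."""
    height, width = len(depth), len(depth[0])
    mask = [[False] * width for _ in range(height)]
    sx, sy = seed
    mask[sy][sx] = True
    queue = deque([(sx, sy)])
    while queue:  # iterative (queue-based) fill, no recursion
        x, y = queue.popleft()
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if (0 <= nx < width and 0 <= ny < height and not mask[ny][nx]
                    and abs(depth[ny][nx] - depth[y][x]) <= tolerance):
                mask[ny][nx] = True
                queue.append((nx, ny))
    return mask


def projections(mask):
    """Count segmented pixels per column (x-projection) and per row (y-projection)."""
    x_proj = [sum(1 for row in mask if row[x]) for x in range(len(mask[0]))]
    y_proj = [sum(1 for value in row if value) for row in mask]
    return x_proj, y_proj


def bounding_box(x_proj, y_proj):
    """Return (x_min, y_min, x_max, y_max) from the non-zero projection bins."""
    xs = [i for i, count in enumerate(x_proj) if count > 0]
    ys = [i for i, count in enumerate(y_proj) if count > 0]
    if not xs:
        return None
    return (xs[0], ys[0], xs[-1], ys[-1])


if __name__ == "__main__":
    # Illustrative depth map in metres: a near object (0.6 to 0.7) on a far wall (2.0).
    depth = [
        [2.0, 2.0, 2.0, 2.0, 2.0],
        [2.0, 0.6, 0.6, 2.0, 2.0],
        [2.0, 0.6, 0.7, 2.0, 2.0],
        [2.0, 2.0, 0.7, 2.0, 2.0],
    ]
    mask = seed_fill(depth, seed=(1, 1), tolerance=0.15)
    x_proj, y_proj = projections(mask)
    print(x_proj, y_proj)                # prints [0, 2, 3, 0, 0] [0, 2, 2, 1]
    print(bounding_box(x_proj, y_proj))  # prints (1, 1, 2, 3)
```

Because the near object differs sharply in distance from the background, a simple depth tolerance is enough to separate it here, which illustrates why a TOF sensor simplifies segmentation.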


IJECSE,Volume1,Number 4 Aarti & Ruchika

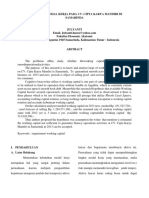

IV. MILESTONES IN GESTURE TECHNOLOGY

| Research Study, Year | Users-Gesture | Technology | Applications | Result / Conclusion |
|---|---|---|---|---|
| Visual Touchpad [8], 2004 | General-Hand | Quadrangle panel with a rigid backing, with PCs and two cameras. | Interaction with PCs using a touchpad. | Vision-based input device that allows for fluid two-handed gestural interactions. |
| Visualization method [9], 2006 | Elderly-Hand | Visualization method architecture using the accelerometer data. | Gesture visualization method; animation of the hand movement performed during gesture control. | Visualization provides information about the gesture performed. |
| Intelligent Smart Home Control Using Body Gestures [10], 2006 | General | Smart home with 3 CCD cameras; marker attached to the human body. | Control of smart home environments such as lights and curtains. | Recognition rate is 95.42% for continuously changing gestures. |
| Head gesture recognition [11], 2007 | Elderly & disabled | Wheelchair with laptop and webcam. | Hands-free control system of an intelligent wheelchair. | Head gesture control system. |
| Select-and-Point [12], 2008 | General | Composed of three parts: a presence server, a controlling peer and a controlled peer, using a camera and software tools. | Enables control of applications such as MS Office, web browsers and multimedia programs on multiple devices. | Implementing an intelligent meeting room. |
| User-Defined Gestures for Surface Computing [13], 2009 | General-Hand | User-defined gesture set, implications for surface technology, and a taxonomy of surface gestures. | Gesture classifications have been developed for human discursive gestures and multimodal gestures with speech. | Results include a gesture taxonomy, the user-defined gesture set, performance measures, subjective responses, and qualitative observations. |
| Designing for gestures: design patterns, (multi-touch) screens, 2011 | | Desktop operating systems, mobile operating systems, 3rd party software, small software products, and common hardware products. | An industrial design perspective on pointing devices as an input channel. | Discusses the evolution of interface design from a hardware-driven to a software-driven approach. |

V. CONCLUSION

In this paper, we gave an introduction to gestures and described the types of gestures and different methods for acquiring them. A primary goal of virtual environments is to provide natural, efficient, and flexible interaction between human and computer, and gestures as an input modality can help meet these requirements. Because human gestures are natural, they are an efficient and powerful medium, and this paper has reviewed that medium.


VI. REFERENCES

[1] Cadoz, C., Le Geste Canal de Communication Homme-Machine, la Communication Instrumentale, Technique et Science Informatiques, 13, 1, 1994, pp. 31-61.

[2] S. Mitra and T. Acharya, Gesture recognition: A survey, IEEE Transactions on Systems, Man, and Cybernetics - Part C, vol. 37, no. 3, pp. 311-324, 2007.

[3] Chih-Ming Fu et al., Hand gesture recognition using a real-time tracking method and hidden Markov models, ScienceDirect, Image and Vision Computing, Volume 21, Issue 8, 1 August 2003, pp. 745-758.

[4] Harshith C., Karthik R. Shastry, Manoj Ravindran, M.V.V.N.S. Srikanth, Naveen Lakshmikhanth, Survey on various gesture recognition techniques for interfacing machines based on ambient intelligence, (IJCSES) Vol. 1, No. 2, November 2010.

[5] Vadakkepat, P. et al., Multimodal Approach to Human-Face Detection and Tracking, IEEE Transactions on Industrial Electronics, Volume 55, Issue 3, March 2008, pp. 1385-1393.

[6] E. Kollorz, J. Hornegger and A. Barke, Gesture recognition with a time-of-flight camera, Dynamic 3D Imaging, International Journal of Intelligent Systems Technologies and Applications, Volume 5, Number 3-4, 2008, pp. 334-343.

[7] Bohme, M., Haker, M., Martinetz, T., and Barth, E. (2007), A facial feature tracker for human-computer interaction based on 3D TOF cameras, Dynamic 3D Imaging (Workshop in conjunction with DAGM 2007).

[8] Malik, S. and Laszlo, J. (2004), Visual Touchpad: A Two-handed Gestural Input Device. In Proceedings of the ACM International Conference on Multimodal Interfaces, p. 289.

[9] Sanna K., Juha K., Jani M. and Johan M. (2006), Visualization of Hand Gestures for Pervasive Computing Environments, in Proceedings of the Working Conference on Advanced Visual Interfaces, ACM, Italy, pp. 480-483.

[10] Kim, D., Kim, D. (2006), An Intelligent Smart Home Control Using Body Gestures. In Proceedings of the International Conference on Hybrid Information Technology (ICHIT'06), IEEE, Korea.

[11] Jia, P. and Huosheng H. Hu (2007), Head gesture recognition for hands-free control of an intelligent wheelchair. Industrial Robot: An International Journal, Emerald, pp. 60-68.

[12] Hyunglae Lee, Heeseok Jeong, JoongHo Lee, Ki-Won Yeom, HyunJin Shin, Ji-Hyung Park, "Select-and-Point: A Novel Interface for Multi-Device Connection and Control based on Simple Hand Gestures", CHI 2008, April 5-10, 2008, Florence, Italy, ACM 978-1-60558-012-8/08/04.

[13] Jacob O. Wobbrock, Meredith Ringel Morris, Andrew D. Wilson, User-Defined Gestures for Surface Computing.
