Book Andrei N

Table of Contents

Chapter 1 Author: Andrei Nistreanu ....................................................................................................3

Chapter 2 The tricks ............................................................................................................................3

Chapter 3 List of articles:.....................................................................................................................5

Chapter 4 Group theory.......................................................................................................................8

4.1 Basis ........................................................................................................................................8

4.2 Rotations................................................................................................................................11

4.3 Reflections.............................................................................................................................13

4.4 Group representations............................................................................................................20

4.5 Unitary Matrix ......................................................................................................................22

4.6 Schur's Lemma......................................................................................................................23

4.7 Great orthogonality theorem..................................................................................................23

4.8 Great orthogonality theorem (application)............................................................................24

4.9 D3 representations and irreducible representations...............................................................25

4.10 Basis Functions....................................................................................................................28

4.11 Projection Operators (D3)....................................................................................................29

4.12 Symmetry adapted linear combinations(C3v).....................................................................36

4.13 Bonding in polyatomics - constructing molecular orbitals from SALCs............................39

4.14 Product representations........................................................................................................40

4.15 Clebsch-Gordan coefficients for simply reducible groups (CGC)......................................42

4.16 Full matrix representations..................................................................................................49

4.17 Selection rules.....................................................................................................................52

4.18 (SO3)Linear combinations of spherical harmonics of point groups....................................53

Chapter 5 Tensors...............................................................................................................................58

5.1 Direct products, vectors.........................................................................................................58

5.2 Tensor continuation Polarizability Directions cosines..........................................................66

Chapter 6 Fine structure.....................................................................................................................74

6.1 Angular Momentum...............................................................................................................74

6.2 Atom with Many Electrons....................................................................................................81

6.3 The band structure of blue and yellow diamonds..................................................................88

6.4 optical transition ...................................................................................................................90

6.5 angular momentum deduction...............................................................................................91

Chapter 7 Quantum mechanics and postulates...................................................................................99

7.1 The most important thing in Quantum Mechanics ...............................................................99

7.2 Matrix representation of operators:.......................................................................................99

7.3 Hermitian Operators..............................................................................................................99

Chapter 8 Clebsch-Gordan...............................................................................................................100

8.1 Addition of angular Momenta: Clebsch-Gordan coefficients I..........................................100

8.2 Clebsch-Gordan coefficients (other source)II.....................................................................102

8.3 Examples..............................................................................................................................105

Chapter 9 Binomial relationship.......................................................................................................108

9.1 Permutations........................................................................................................................108

9.2 Combinations......................................................................................................................109

9.3 Binomial formula ...............................................................................................................109

9.4 A relationship among binomial coefficients (Wigner [55] pag 194)....................................111

Chapter 10 Explicit formulas for GC or Wigner coefficients...........................................................112

10.1 Equivalence between Fock states or coherent states and spinors......................................112

10.2 Functions of operators ......................................................................................................113

10.3 Generating Function of the States .....................................................................................114

10.4 Evaluation of the Elements of the Wigner Rotation Matrix..............................................115

10.5 RWF lecture.......................................................................................................................117

10.5.1The Beta Function..........................................................................................................117

10.6 Explicit expression for Wigner (CG) coefficients I...........................................................119

10.7 Explicit expression for Clebsch-Gordan coefficients (CGC) (Wigner's formula)II..........121

10.8 Explicit expression for Clebsch-Gordan coefficients (CGC) (Wigner's formula)III ........125

10.9 Van der Waerden symmetric form of CGC .......................................................................127

10.10 Wigner 3j symbols or Racah formula .............................................................................131

Chapter 11 The formula of Lagrange and Sylvester for function F of matrix .................................134

11.1 Sylvester formula for Rabi oscillations full deduction......................................................134

11.2 Eigenvalues and Eigenvectors...........................................................................................135

11.3 Properties of determinants.................................................................................................136

11.4 The Method of Cofactors...................................................................................................138

11.5 Cramer's rule proof............................................................................................................138

11.6 Vandermonde determinant ................................................................................................140

11.7 Vandermonde determinant and Cramer's rule result Lagrange's interpolation formula.. . .141

11.8 Cayley-Hamilton Theorem................................................................................................145

11.8.1 The Theorem.................................................................................................................145

11.8.2 The Simple But Invalid Proof........................................................................................146

11.8.3 An Analytic Proof..........................................................................................................146

11.8.4 Proof for Commutative Rings........................................................................................148

11.8.5 Appendix A. Every Complex Matrix is Similar to an Upper Triangular Matrix...........148

11.8.6 Appendix B. A Complex Polynomial Is Identically Zero Only If All Coefficients Are Zero...149

11.8.7 From Wikipedia, the free encyclopedia.........................................................................149

11.9 SYLVESTER'S MATRIX THEOREM..............................................................................150

11.10 Final formula....................................................................................................................153

Chapter 12 Time evolution two level atom Rabi frequency.............................................................154

12.1 Two level atom..................................................................................................................154


Chapter 1 Author: Andrei Nistreanu

I must use Heading 4, because otherwise the formula numbers become long (e.g. 10.10.12).

Lenef

Chapter 2 The tricks

At each bibliography entry you must create a "back" button: at the citing page insert the text, then use Insert > Bookmark and give it a number.

JsMath rfs10 hhand lhand H L

Ctrl+B: bold, J

Ctrl+Shift+B: subscript, $J_2$

Ctrl+Shift+P: superscript, $J^2$

See the .flv videos.

Use alignl for equations.

After clicking the hyperlink, do not press Ctrl-Z; instead open Edit and look for Undo or Redo New Paragraph.

Type fn, press F3, and a numbered equation appears:

$E = mc^2$ (2.1)

Wikipedia in order to remove hyper-links: select all right click remove hyper-link

Images (JPG from the internet): click on the image, then double-click Graphics in Format > Styles and Formatting.

Quotation marks as text: (b'$^{+}$) and (b')

Add a caption to the figures, then right-click > Wrap > Optimal Page Wrap.

Spelling and grammar: go to Tools > Options > Language Settings and enable the spell check.

Left-aligned multi-line equations (use "" = with alignl on the continuation lines):

3(x+4) - 2(x-1) = 3x + 12 - (2x - 2)

= 3x + 12 - 2x + 2

= x + 14

x + y = 2

x = 2 - y

http://wiki.services.openoffice.org/wiki/Documentation/OOoAuthors_User_Manual/Writer_Guide/Creating_a_table_of_contents

1. First configure the headings: if you want numbering by chapter, you first have to configure chapter numbering for the document. Your document uses no heading styles and is not configured to number the headings. Such numbering can be configured under Tools > Outline Numbering; normally you would use the "Heading 1" style for the top-level heading.

2. So for chapters use Heading 2 with "show sublevels" set to 1.

3. For sections use Heading 3 with "show sublevels" set to 2 (insert the heading and select Heading 3 in the top-left corner).

4. For equations choose level 2 or level 3. With level 2 the equation number has two parts: chapter number, dot, equation number. With level 3 it has three parts, i.e. the section number is included as well.

5. Table of contents: Insert > Indexes and Tables.

6. Right-click on the table field: Edit Index/Table.

7. If you want hyperlinks on the Heading 3 entries, choose level 3, then choose Hyperlink, so that a click on the page number or on the entry follows the link.

OOo 3.2.1 on Windows shows equations in black by default. Double-click each equation and change it individually if you want to go from gray to black, or write the document on Windows.

Right-click > Page opens the page setup (margins).

Open the Navigator (press F5).

1. Click the + sign next to Indexes.

2. Right-click on the desired index and choose Index > Edit.

3. Delete table of contents

In order to change the cross-reference in a Hyperlink:

-make a cross reference

-Insert that cross-reference > Hyperlink

-Click the target in document button to the right of the Target text field, simply

type the name into the target text field box.

-Click apply

It is better to use Ctrl-B, Ctrl-U and blue text, because the displayed information is not visible in the PDF anyway.

But a hyperlink is better still, because afterwards you can go back with the Navigator: press F5 and choose the hyperlink. But what if you have a thousand hyperlinks?

To add a special character in Math, first look up its name in LaTeX, e.g. \dagger or \otimes, then go to Insert > Object > Formula (or press F2) and choose Catalog > Special > Edit Symbols.

Step 4. Add the symbol.

(a) In the Edit symbols dialog, delete the entry under Old symbol

(b) In the symbol field, type the command that you want to use to refer to the symbol. For example, say you want to use the binary order symbol ≻. If you type succ then you will be able to access the symbol using %succ in the equation editor.

(c) Select the font that you want to use under the font dropdown menu. The MIT math fonts are named

Math1 through Math5. You will need to browse through the catalog to find your symbol.

(d) Select the symbol that you want to add and click add.

OOo 3.2.1 on Ubuntu shows equations in gray: double-click and change to gray.

[Sample equation, repeated six times in the original; most of its symbols were lost in extraction.]

latex

http://es.wikipedia.org/wiki/LaTeX

0=a_{11} + a_{12} gives $0 = a_{11} + a_{12}$ (correct)

x^{a+b}=x^ax^b gives $x^{a+b} = x^a x^b$; put a space between a and x

x'+x'' = \dot x + \ddot x gives $x' + x'' = \dot x + \ddot x$; x takes no backslash

E &=& mc^2 gives $E = mc^2$

&=~

You can do it with LaTeX using Eqe:

\definecolor{grey}{rgb}{0.5,0.5,0.5}

\textcolor{grey}{What you want in grey}

or for example:

\definecolor{grey}{rgb}{0.5,0.5,0.5}

\textcolor{grey}{$\left(\begin {array}{c}7289\\2\end {array}\right) = 65\times10^9$}

Chapter 3 List of articles:

[1] A. Lenef, S. Rand , Electronic structure of the N-V center in diamond: Theory, Phys. Rev. B 53

13441(1996)(Ref. Pag:34, 44, 48)

[2] N. Manson, R. McMurtrie, Issues concerning the nitrogen-vacancy center in diamond, J. Lumin. 127, 98-103 (2007)

[3] J. Loubser and J. Van Wyk, Electron spin resonance in the study of diamond, Rep. Prog. Phys. 41, 1201-48 (1978)

[4] M. Doherty, N. Manson, P. Delaney and L. Hollenberg, The negatively charged N-V center in diamond: the electronic solution, arXiv:1008.5224 (2010)

[5] J. Maze, A. Gali, E. Togan, Y. Chu, A. Trifonov, E. Kaxiras, and M. Lukin, Properties of nitrogen-vacancy centers in diamond: the group theoretical approach, New J. Phys. 13, 025025 (2011)

[6] Jacobs P. , Group theory with applications in Chemical Physics, (Cambridge 2005)(Ref.

Pag:13,13)

[7] B. Naydenov, F. Dolde, L. Hall, C. Shin, H. Fedder, L. Hollenberg, F. Jelezko, and J. Wrachtrup, Dynamical decoupling of a single-electron spin at room temperature, Phys. Rev. B 83, 081201(R) (2011)

[8] A. Nizovtsev, S. Kilin, V. Pushkarchuk, A. Pushkarchuk, and S. Kuten , Quantum informatics.

Quantum information processors, Optics and Spectroscopy 108,2 230(2010)

[9] A. Abragam and B. Bleaney, Electron Paramagnetic Resonance of Transition Ions, Vol. I

(Moscow 1972, Oxford 1970)

[10] A. Lesk, Introduction to Symmetry and Group Theory for Chemists, Dordrecht (2004)

[11] C. Vallance, Lecture, Molecular Symmetry, Group Theory & Applications (2006)(Ref.

Pag:34,36)

[12] R. Powell, Symmetry, Group Theory, and the Physical Properties of Crystals, Springer (2010)

[13] M. Dresselhaus, Application of Group Theory to the Physics of Solids, Spring 2002

[14] , Curs de matematica superioara, Chisinau 1971

[15] H. Eyring and G. Kimball, Quantum Chemistry, Singapore, New-York, London (1944)

[16] K. Riley, M. Hobson, Mathematical Methods for Physics and Engineering: A Comprehensive

Guide, Second Edition, Cambridge 2002

[17] D. Long, The Raman Effect A Unified Treatment of the Theory of Raman Scattering by

Molecules , (2002)

[18] L. Barron, Molecular Light Scattering and Optical Activity(2004) (Ref. Pag:134, 134)

[19] Eric C. Le Ru, Pablo G. Etchegoin, Principles of Surface Enhanced Raman Spectroscopy,

(2009)(bond- polarizability model Wolkenstein)

[20] D. Griffiths, Introduction to electrodynamics, New Jersey (1999)

[21] M. , , Leningrad 1960

[22] . . . . , . . 1 2, . .: 1949

[23] H. Heinbockel, Introduction to tensor calculus,(1996)

[24] G. Arfken, H. Weber, Mathematical Methods For Physicists, International Student

Edition(2005)

[25] B. Berne and R. Pecora, Dynamic Light Scattering, New York 1990

[26] Liang, Scandalo, J. Chem. Phys. 125, 194524 (2006)

[27] R. Feynman, The Feynman Lectures on Physics, V I,II, III, California (1964)

[28] C.J. Foot, Atomic Physics , Oxford 2005(Ref. Pag:57)

[29] C. Gerry, Introductory Quantum Optics, Cambridge 2005

[30] E. Kartheuser , Elements de MECANIQUE QUANTIQUE , Liege 1998?!

[31] M. Kuno, Quantum mechanics, Notre Dame, USA 2008(Ref. Pag:32)

[32] M. Kuno, Quantum spectroscopy, Notre Dame,USA 2006

[33] I. Savelyev, Fundamental of theoretical Physics,Moscow 1982

[34] I. Savelyev, Physics: A general course vol. I,III,III, Moscow 1980

[35] .. . [ 5, 1]

[36] . . , M 1991

[37] S. Blundell, Magnetism in condensed matter,Oxford 2001

[38] P. Atkins, Physical Chemistry

[39] J. Sakurai, Modern quantum mechanics, 1993

[40] A. Matveev , , Moscow 1989(Ref. Pag:32)

[41] . , , 2001

[42] A. Messiah, Quantum mechanics

[43] G. Arfken, H. Weber, Mathematical Methods For Physicists, International Student

Edition(2005)

[44] C. Clark, Angular Momentum, 2006 august (5 pag)

[45] A. Restrepo, Group theory in Quantum Mechanics, (gt3) (2009)

[46] . , , 1976

[47] http://en.wikipedia.org/wiki/Lowering_operator

[48] W. Nolting, Quantum theory of magnetism, Springer-Verlag Berlin Heidelberg 2009

[49] I. Irodov, Problems in General Physics, English translation, Mir Publishers, 1981 Moscow

[50] Abhay Kumar Singh, Solutions to Irodov's Problems in General Physics Vol. I and 2, New

Delhi (2005)

[51] G. Herzberg, Atomic Spectra and Atomic Structure, New-York (1944)

[52] M. Mueller, Fundamentals of Quantum Chemistry, New-York (2002)

[53] E. U. Condon, G. H. Shortley, The Theory of Atomic Spectra Cambridge (1959)

[54] D. Perkins, Introduction to High Energy Physics, Cambridge (2000)

[55] E. Wigner, Group Theory And Its Application To The Quantum Mechanics Of Atomic

Spectra, New-York (1959) (Ref. Pag: 117)

[56] K. Schulten, Notes on Quantum Mechanics, Universities of Illinois (2000)

[57] M Caola, Lat. Am. J. Phys. Educ. Vol. 4, No. 1, Jan 2010 p84 (Ref. Pag: )

[58] Calais J-L., I. Jour. Of Quant. Chem. Vol.II, 715-727(1968)(Ref. Pag: )

[59] L. C. Biedenharn, J.D. Louck, Angular momentum in quantum physics, London (1981) (Ref.

Pag: 125, 130)

[60] E.B. Manoukian, Quantum Theory:A Wide Spectrum , Springer (2006)(Ref. Pag: 117 )

[61] Smirnov V., Cours de Mathématique Supérieure, tome III deuxième partie, édition MIR Moscou 1972

[62] Robuk V., Nuc. Ins. and Meth. in Phys. Res. A 534, p 319-323 (2004)

[63] Claude F., Atomes et lumière: Interactions Matière rayonnement, Université Pierre et Marie Curie (2006)

[64] Orszag M., Quantum Optics, Springer 2008

[65] Scully M., Zubairy M., Quantum optics, Cambridge 2001

[66] Brink, Satchler, Angular momentum (1968)

[67] Rieger P., Electron Spin Resonance: Analysis and Interpretation, Royal Society of Chemistry (2007)

[68] Jones H. Groups, Representations, and Physics (IPP, 1998) (Ref. Pag: 23)

[69] Weissbluth M., Atoms and molecules, Academic Press Inc.,U.S.(1978)(Ref. Pag:13, 25, 32, 35,

40, 57, 55)

[70] Ludwig W., Falter C., Symmetries in Physics: Group Theory Applied to Physical Problems,

Springer Series In Solid-State Sciences 64(1988)(Ref. Pag:14, 42, 46, 48, 53, 55)

[71] Inui T., Tanabe I.,Onodera I.,Group Theory and its Applications in Physics,Springer Series In

Solid-State Sciences 78(1990)(Ref. Pag:12, 34, 52, 53)

[72] Harris D., Symmetry and Spectroscopy: An Introduction to Vibrational and Electronic

Spectroscopy, Dover (1989)(Ref. Pag:32)

[73] Tinkham M., Group Theory and Quantum Mechanics, Dover(1992) (Ref. Pag:32, 132, 134)

[74] Cornwell J., Group Theory in Physics, Volume 1 (Techniques of Physics) , Academic Press

London (1984) (Ref. Pag:43)

[75] Cornwell J., Symmetry Properties of the Clebsch-Gordan Coefficients, phys. stat. sol. (b)

37,225(1970) (Ref. Pag:42, 44)

[76] VAN DEN Broek P.,Cornwell J., :Clebsch-Gordan Coefficients of Symmetry Groups, phys.

stat. sol. (b) 90,211(1978) (Ref. Pag:44)

[77] Sugano S., Tanabe Y., Kamimura H., Multiplets of Transition-Metal Ions in Crystals, Academic Press, New-York 1970 (Ref. Pag:49, 48)

[78] Cornwell J., Group theory in physics, Academic Press London (1997) (Ref. Pag:51)

[79] Fitts D.D., Principles of Quantum Mechanics, as Applied to Chemistry and Chemical Physics, Cambridge (2002) (Ref. Pag:57)

[80] Racah G., (I) Phys. Rev. 61, 186-197 (1942) (Ref. Pag:128)

[81] Racah G., (II) Phys. Rev. 62, 438-462 (1942) (Ref. Pag:128)

[82] Judd B., Operator Techniques in Atomic Spectroscopy, Princeton University Press(1998)(Ref.

Pag:128 )

[83] Griffith J., The Theory of Transition-Metal Ions Cambridge (1971)(Ref. Pag:127 )

[84] Edmonds A., Angular momentum in quantum mechanics. [Rev. printing, 1968]Princeton

(1957) (Ref. Pag:127)

[85] Sobelman I., Atomic Spectra and Radiative Transitions(1992) (Ref. Pag:133)

Wolkenstein, M. 1941 C.R. Acad. Sci., U.R.S.S. 32, 185.

Eliashevich, M. & Wolkenstein, M. 1945 J. Phys., Moscow, 9, 101, 326

Chapter 4 Group theory

4.1 Basis

pag 11 [10]

Definition of a group

A group consists of a set (of symmetry operations, numbers, etc.) together with a rule by which any two elements of the set may be combined (called, generically, multiplication), with the following four properties:

1 Closure: the result of combining any two elements (the product of any two elements) is another element in the set.

2 Group multiplication satisfies the associative law: a (b c) = (a b) c for all elements a, b and c of the group.

3 There exists a unit element, or identity, denoted E, such that E a = a for any element a of the group.

4 For every element a of the group, the group contains another element called the inverse, $a^{-1}$, such that $a a^{-1} = E$. Note that as EE = E, the inverse of E is E itself.

Examples of groups

Elements of the group | Rule of combination

1. Covering operations of an object | Successive application

2. All real numbers | Addition

3. All complex numbers | Addition

4. All real numbers except 0 | Multiplication

5. All complex numbers except (0, 0) | Multiplication

6. All integers | Addition

7. Even integers | Addition

8. The n complex numbers of the form $\cos(2\pi k/n) + i \sin(2\pi k/n)$, k = 0, ..., n - 1 | Multiplication

9. All permutations of an ordered set of objects | Successive application

A permutation is a specification of a way to reorder a set. For example, the group of permutations of two objects contains two elements: the identity (first → first, second → second) and the exchange (first → second, second → first). This group is isomorphic to the group formed by 1 and -1 under multiplication.

10. All possible rotations in three-dimensional space | Successive application
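Example 8 of the list can be checked numerically. The sketch below is only an illustration: the helper names (`roots_of_unity`, `index_of`, `is_group_under_multiplication`) and the choice n = 6 are assumptions made here, not part of the source. Associativity is not tested because it is inherited from ordinary complex multiplication.

```python
import cmath

def roots_of_unity(n):
    """The n complex numbers cos(2*pi*k/n) + i*sin(2*pi*k/n), k = 0..n-1."""
    return [cmath.exp(2j * cmath.pi * k / n) for k in range(n)]

def index_of(z, elements, tol=1e-9):
    """Index of the element equal to z within a tolerance, or -1 if absent."""
    for k, w in enumerate(elements):
        if abs(z - w) < tol:
            return k
    return -1

def is_group_under_multiplication(elements):
    """Check closure, identity and inverses for a finite set of complex numbers."""
    closed = all(index_of(a * b, elements) >= 0 for a in elements for b in elements)
    has_identity = index_of(1, elements) >= 0
    has_inverses = all(index_of(1 / a, elements) >= 0 for a in elements)
    return closed and has_identity and has_inverses

n = 6  # illustrative choice
roots = roots_of_unity(n)
print(is_group_under_multiplication(roots))      # True
# The product of root k and root m is root (k + m) mod n: here (2 + 5) mod 6 = 1
print(index_of(roots[2] * roots[5], roots))      # 1
```

Dropping one root from the list breaks closure, so the same check then fails.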

Pag 15 [10]

Def. A point group is the symmetry group of an object of finite extent, such as an atom or molecule.

Pag 3. [11]

A symmetry operation is an action that leaves an object looking the same after it has been carried out.

For example, if we take a molecule of water and rotate it by 180° about an axis passing through the

central O atom (between the two H atoms) it will look the same as before. It will also look the same if

we reflect it through either of two mirror planes, as shown in the figure below.

1. E - the identity. The identity operation consists of doing nothing, and the corresponding symmetry

element is the entire molecule. Every molecule has at least this element.

2. $C_n$ - an n-fold axis of rotation. Rotation by 360°/n leaves the molecule unchanged. The H2O molecule above has a $C_2$ axis. Some molecules have more than one $C_n$ axis, in which case the one with the highest value of n is called the principal axis. Note that by convention rotations are counter-clockwise about the axis.

3. $\sigma$ - a plane of symmetry. Reflection in the plane leaves the molecule looking the same. In a molecule that also has an axis of symmetry, a mirror plane that includes the axis is called a vertical mirror plane and is labelled $\sigma_v$, while one perpendicular to the axis is called a horizontal mirror plane and is labelled $\sigma_h$. A vertical mirror plane that bisects the angle between two $C_2$ axes is called a dihedral mirror plane, $\sigma_d$.

4. i - a centre of symmetry. Inversion through the centre of symmetry leaves the molecule unchanged. Inversion consists of passing each point through the centre of inversion and out to the same distance on the other side of the molecule. An example of a molecule with a centre of inversion is shown below.

5. $S_n$ - an n-fold improper rotation axis (also called a rotary-reflection axis). The rotary reflection operation consists of rotating through an angle 360°/n about the axis, followed by reflecting in a plane perpendicular to the axis. Note that $S_1$ is the same as reflection and $S_2$ is the same as inversion. The molecule shown above has two $S_2$ axes.

$C_{nv}$ - contains the identity, an n-fold axis of rotation, and n vertical mirror planes $\sigma_v$.

$C_{nh}$ - contains the identity, an n-fold axis of rotation, and a horizontal reflection plane $\sigma_h$ (note that in $C_{2h}$ this combination of symmetry elements automatically implies a centre of inversion).

$D_n$ - contains the identity, an n-fold axis of rotation, and n 2-fold rotations about axes perpendicular to the principal axis.

$D_{nh}$ - contains the same symmetry elements as $D_n$ with the addition of a horizontal mirror plane.

Now we will investigate what happens when we apply two symmetry operations in sequence. As an example, consider the NH3 molecule, which belongs to the $C_{3v}$ point group. Consider what happens if we apply a $C_3$ rotation followed by a $\sigma_v$ reflection. We write this combined operation $\sigma_v C_3$ (when written, symmetry operations operate on the thing directly to their right, just as operators do in quantum mechanics; we therefore have to work backwards from right to left from the notation to get the correct order in which the operators are applied).

The combined operation $\sigma_v C_3$ is equivalent to $\sigma_v''$, which is also a symmetry operation of the $C_{3v}$ point group. Now let's see what happens if we apply the operators in the reverse order, i.e. $C_3 \sigma_v$ ($\sigma_v$ followed by $C_3$).

There are two important points that are illustrated by this example:

1. The order in which two operations are applied is important. For two symmetry operations A and B,

AB is not necessarily the same as BA, i.e. symmetry operations do not in general commute. In some

groups the symmetry elements do commute; such groups are said to be Abelian.

2. If two operations from the same point group are applied in sequence, the result will be equivalent to

another operation from the point group. Symmetry operations that are related to each other by other

symmetry operations of the group are said to belong to the same class. In NH3, the three mirror planes $\sigma_v$, $\sigma_v'$ and $\sigma_v''$ belong to the same class (related to each other through a $C_3$ rotation), as do the rotations $C_3^+$ and $C_3^-$ (anticlockwise and clockwise rotations about the principal axis, related to each other by a vertical mirror plane).

or

Pag 26 [12]

Group multiplication table

    | E A B C D F
  --+------------
  E | E A B C D F
  A | A E D F B C
  B | B F E D C A
  C | C D F E A B
  D | D C A B F E
  F | F B C A E D

Consider a group consisting of six elements represented by the letters A, B, C, D, E, and F that obey the multiplication table shown above. Each entry in the table is the product of the element labelling its row and the element labelling its column, with the row element written on the left; for example, row A and column B give AB = D. Following this convention, the table shows that the identity element is a member of the group, the product of any two elements is an element of the group, and each group element has an element in the group that is its inverse. Each element appears only once in any given row or column. The associative law holds, but the commutative law does not hold for all products (AB = D while BA = F), so the group is not Abelian. The order of the group is 6.
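The statements about the table can be checked by brute force. The following is a minimal sketch, assuming the reading "entry = (row element)(column element)"; the function names are chosen here for illustration only.

```python
from itertools import product

ELEMENTS = "EABCDF"
# Rows of the multiplication table; ROWS[x][j] is the product x * ELEMENTS[j]
ROWS = {
    "E": "EABCDF",
    "A": "AEDFBC",
    "B": "BFEDCA",
    "C": "CDFEAB",
    "D": "DCABFE",
    "F": "FBCAED",
}

def mul(x, y):
    """Product xy, read from row x and column y of the table."""
    return ROWS[x][ELEMENTS.index(y)]

def find_identity():
    """Element e with ex = xe = x for every x, or None."""
    for e in ELEMENTS:
        if all(mul(e, x) == x and mul(x, e) == x for x in ELEMENTS):
            return e
    return None

def inverse(x):
    """Element y with xy = yx = identity, or None."""
    e = find_identity()
    for y in ELEMENTS:
        if mul(x, y) == e and mul(y, x) == e:
            return y
    return None

def is_associative():
    return all(mul(mul(a, b), c) == mul(a, mul(b, c))
               for a, b, c in product(ELEMENTS, repeat=3))

def is_abelian():
    return all(mul(a, b) == mul(b, a) for a, b in product(ELEMENTS, repeat=2))

print(find_identity())                      # E
print({x: inverse(x) for x in ELEMENTS})    # D and F are mutual inverses
print(is_associative(), is_abelian())       # True False
```

Closure needs no separate test here, because every table entry is by construction one of the six letters.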

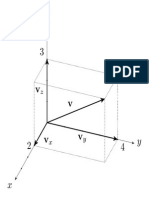

4.2 Rotations


pag 22 [14]

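Rotation of Cartesian axes by an angle $\alpha$ about the z-axis. In polar coordinates ($\rho$, $\varphi$) in the xy plane:

\[ \rho = \rho', \qquad \varphi = \varphi' + \alpha \]

\[ x = \rho\cos\varphi, \qquad y = \rho\sin\varphi \]

\[ x' = \rho\cos\varphi', \qquad y' = \rho\sin\varphi' \]

\[ x = \rho\cos(\varphi' + \alpha) = \rho(\cos\varphi'\cos\alpha - \sin\varphi'\sin\alpha) = x'\cos\alpha - y'\sin\alpha \]

\[ y = \rho\sin(\varphi' + \alpha) = \rho(\sin\varphi'\cos\alpha + \cos\varphi'\sin\alpha) = y'\cos\alpha + x'\sin\alpha \]

finally we get

\[ x = x'\cos\alpha - y'\sin\alpha, \qquad y = x'\sin\alpha + y'\cos\alpha, \qquad z = z' \tag{4.2.1} \]

Another method (pag 780 [16]) is to sum the projections (the triangle rule: x' is the sum of the projections of x and y onto the new axis), so we have, see Fig 1,

\[ x' = x\cos\alpha + y\sin\alpha \]

and the same for the other coordinate

\[ y' = -x\sin\alpha + y\cos\alpha \]

The transformation matrix is:

\[ R = \begin{pmatrix} \cos\alpha & \sin\alpha & 0 \\ -\sin\alpha & \cos\alpha & 0 \\ 0 & 0 & 1 \end{pmatrix} \tag{4.2.2} \]

and from (4.2.1) we have the inverse:

\[ R^{-1} = \begin{pmatrix} \cos\alpha & -\sin\alpha & 0 \\ \sin\alpha & \cos\alpha & 0 \\ 0 & 0 & 1 \end{pmatrix} \tag{4.2.3} \]

Thus we can write (4.2.1) in matrix form, with $r = (x, y, z)$:

\[ r = R^{-1} r', \qquad r' = R\,r \quad \text{(see pag 51 [71])} \tag{4.2.4} \]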

Fig 1: Rotation of the x and y axes by the angle $\alpha$; x' is the sum of the projections $x\cos\alpha$ and $y\sin\alpha$.
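The relation between (4.2.2), (4.2.3) and (4.2.4) can be checked numerically. This is a sketch under stated assumptions: the rotation angle is written `alpha`, and the helper names and test values are chosen here for illustration; none of them come from the source.

```python
import math

def rotation_z(alpha):
    """R of eq. (4.2.2): maps components r to components r' in the rotated axes."""
    c, s = math.cos(alpha), math.sin(alpha)
    return [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]

def inverse_rotation_z(alpha):
    """R^-1 of eq. (4.2.3): maps r' back to r."""
    c, s = math.cos(alpha), math.sin(alpha)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def matvec(a, v):
    """Product of a 3x3 matrix with a 3-component vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]

def close(a, b, tol=1e-12):
    """Element-wise comparison of two 3x3 matrices."""
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(3) for j in range(3))

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

alpha = 0.7                      # arbitrary test angle in radians
R = rotation_z(alpha)
R_inv = inverse_rotation_z(alpha)

print(close(matmul(R, R_inv), IDENTITY))    # R R^-1 is the unit matrix
print(close(R_inv, rotation_z(-alpha)))     # the inverse is the rotation by -alpha

r = [1.0, 2.0, 3.0]              # arbitrary test point
r_prime = matvec(R, r)           # r' = R r
r_back = matvec(R_inv, r_prime)  # r = R^-1 r'
print(all(abs(u - v) < 1e-12 for u, v in zip(r, r_back)))
```

The second check also shows that the inverse equals the transpose of R, as expected for an orthogonal matrix.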

pag 2 [13] also see [69] pag 61-63:

Figure 1.1: The symmetry operations on an equilateral triangle are the rotations by $\pm 2\pi/3$ about the origin O and the rotations by $\pi$ about the axes O1, O2, and O3.

As a simple example of a group, consider the permutation group

for three elements, P(3). Below are listed the 3!=6 possible

permutations that can be carried out; the top row denotes the initial

arrangement of the three numbers and the bottom row denotes the

final arrangement.

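This is for group D3:

\[ E = \begin{pmatrix} 1 & 2 & 3 \\ 1 & 2 & 3 \end{pmatrix} \quad A = \begin{pmatrix} 1 & 2 & 3 \\ 2 & 1 & 3 \end{pmatrix} \quad B = \begin{pmatrix} 1 & 2 & 3 \\ 1 & 3 & 2 \end{pmatrix} \]

\[ C = \begin{pmatrix} 1 & 2 & 3 \\ 3 & 2 & 1 \end{pmatrix} \quad D = \begin{pmatrix} 1 & 2 & 3 \\ 3 & 1 & 2 \end{pmatrix} \quad F = \begin{pmatrix} 1 & 2 & 3 \\ 2 & 3 & 1 \end{pmatrix} \]

(AB)C = DC = B

A(BC) = AD = B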
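The two products above can be reproduced by composing the permutations directly. The sketch assumes one particular convention, chosen because it gives AB = D as stated: each permutation rearranges positions, and in a product xy the right-hand factor y acts first. The names `apply` and `times` are illustrative.

```python
# Bottom rows of the two-line symbols; the top row is always (1, 2, 3)
PERMS = {
    "E": (1, 2, 3),
    "A": (2, 1, 3),
    "B": (1, 3, 2),
    "C": (3, 2, 1),
    "D": (3, 1, 2),
    "F": (2, 3, 1),
}
NAMES = {bottom: name for name, bottom in PERMS.items()}

def apply(p, arrangement):
    """Rearrange: position i of the result holds what sat in position p[i]."""
    return tuple(arrangement[j - 1] for j in p)

def times(x, y):
    """Product xy under the assumed convention: y acts first, then x."""
    return NAMES[apply(PERMS[x], apply(PERMS[y], (1, 2, 3)))]

print(times("A", "B"))                  # D
print(times(times("A", "B"), "C"))      # (AB)C = DC = B
print(times("A", "times" and times("B", "C")))  # A(BC) = AD = B
```

With this convention the 36 products of `times` coincide with the group multiplication table given earlier in the chapter.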

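Matrix form (see also page 14 below):

\[ E = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} \quad A = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix} \quad B = \begin{pmatrix} -1/2 & \sqrt{3}/2 \\ \sqrt{3}/2 & 1/2 \end{pmatrix} \]

\[ C = \begin{pmatrix} -1/2 & -\sqrt{3}/2 \\ -\sqrt{3}/2 & 1/2 \end{pmatrix} \quad D = \begin{pmatrix} -1/2 & \sqrt{3}/2 \\ -\sqrt{3}/2 & -1/2 \end{pmatrix} \quad F = \begin{pmatrix} -1/2 & -\sqrt{3}/2 \\ \sqrt{3}/2 & -1/2 \end{pmatrix} \]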

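For $C_{3v}$ we have, see [6] pag 70-71:

\[ C_{3v} = \{ E,\ C_3^+,\ C_3^-,\ \sigma_d,\ \sigma_e,\ \sigma_f \} \]

We have two rotations, using $\alpha = 2\pi/3$ for $C_3^+$ and $\alpha = -2\pi/3$ for $C_3^-$ in eq. (4.2.3):

\[ C_3^+ = \begin{pmatrix} -1/2 & -\sqrt{3}/2 \\ \sqrt{3}/2 & -1/2 \end{pmatrix} \quad \text{and} \quad C_3^- = \begin{pmatrix} -1/2 & \sqrt{3}/2 \\ -\sqrt{3}/2 & -1/2 \end{pmatrix} \]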

4.3 Reflections

at page 59 [6] for reflection in plane we have:

from theFig. 2 we put

](P'O c )=x

](YOP')=y we have we need to find the angle:

13

Fig. 2 Reflection of a point P(x y) in a mirror

plane whose normal m makes an angle with

y,so that the angle between and the zx plane is

0 . OP makes an angle o with x . P'(x'

y') is the reflection of P in x=0o , and OP'

makes an angle 20o with x .

x+x+o=2x+o (4.3.1)

We make the system of eq.:

2x+y+o=n/ 2

x+y+0=n/ 2

(4.3.2)

The solution is

x=0o (4.3.3)

Substitution of (4.3.3)in(4.3.1) yields

20o (4.3.4)

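For a point P at unit distance from the origin, $x=\cos\alpha$ and $y=\sin\alpha$, so that

\[ x' = \cos(2\theta-\alpha) = x\cos 2\theta + y\sin 2\theta \quad\text{and}\quad y' = \sin(2\theta-\alpha) = x\sin 2\theta - y\cos 2\theta \]

thus we get the transformation:

\[ \begin{pmatrix} x' \\ y' \\ z' \end{pmatrix} = \begin{pmatrix} \cos 2\theta & \sin 2\theta & 0 \\ \sin 2\theta & -\cos 2\theta & 0 \\ 0 & 0 & 1 \end{pmatrix} \begin{pmatrix} x \\ y \\ z \end{pmatrix} \tag{4.3.5} \]

with the representation:

\[ \Gamma(\sigma(\theta\, y)) = \begin{pmatrix} \cos 2\theta & \sin 2\theta & 0 \\ \sin 2\theta & -\cos 2\theta & 0 \\ 0 & 0 & 1 \end{pmatrix} \tag{4.3.6} \]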

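Thus we have three reflections: for $\sigma_d$, $\theta = 0$; for $\sigma_e$, $\theta = -\pi/3$; and for $\sigma_f$, $\theta = \pi/3$. Substituting in the 2 × 2 block of eq. (4.3.6):

\[ \sigma_d = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}, \qquad \sigma_e = \begin{pmatrix} -1/2 & -\sqrt{3}/2 \\ -\sqrt{3}/2 & 1/2 \end{pmatrix}, \qquad \sigma_f = \begin{pmatrix} -1/2 & \sqrt{3}/2 \\ \sqrt{3}/2 & 1/2 \end{pmatrix} \]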
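As a numerical cross-check of the rotation and reflection matrices of $C_{3v}$, the sketch below builds the six 2 × 2 matrices and verifies that they close under multiplication. It assumes a counter-clockwise rotation convention and mirror angles $\theta = 0, -\pi/3, \pi/3$; the function names (`rotation`, `reflection`, `name_of`) are illustrative and not taken from any of the cited books.

```python
import math


def rotation(phi):
    """2x2 rotation block, assuming the counter-clockwise convention."""
    c, s = math.cos(phi), math.sin(phi)
    return [[c, -s], [s, c]]


def reflection(theta):
    """2x2 reflection block: x' = x cos2t + y sin2t, y' = x sin2t - y cos2t."""
    c, s = math.cos(2 * theta), math.sin(2 * theta)
    return [[c, s], [s, -c]]


def matmul(a, b):
    """Ordinary 2x2 matrix product ab."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def close(a, b, tol=1e-12):
    """Element-wise comparison within a small tolerance."""
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))


group = {
    "E": rotation(0.0),
    "C3+": rotation(2 * math.pi / 3),
    "C3-": rotation(-2 * math.pi / 3),
    "sigma_d": reflection(0.0),
    "sigma_e": reflection(-math.pi / 3),   # assumed sign of theta
    "sigma_f": reflection(math.pi / 3),    # assumed sign of theta
}


def name_of(m):
    """Return the label of the group element equal to m, or None."""
    for name, g in group.items():
        if close(m, g):
            return name
    return None


# Multiplication table: table[(a, b)] is the label of the matrix product a*b.
table = {(a, b): name_of(matmul(group[a], group[b]))
         for a in group for b in group}
```

Every entry of `table` is one of the six labels, which is the closure property; swapping the assumed signs of $\theta$ for $\sigma_e$ and $\sigma_f$ only exchanges the two labels.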

for representation of

S

3

we have

e=

(

1 0

0 1

)

a=

(

1/ 2 .3/ 2

.3/ 2 1/ 2

)

b=

(

1/ 2 .3/ 2

.3/ 2 1/ 2

)

c=

(

1 0

0 1

)

d=

(

1/ 2 .3/ 2

.3/ 2 1/ 2

)

f =

(

1/ 2 .3/ 2

.3/ 2 1/ 2

)

Comparing with the matrices E to F given above for $D_3$: d = D, f = F and c = A, while a = B and b = C once the signs on the principal diagonal are changed.

In conclusion we see that $D_3 \cong C_{3v}$, and both are isomorphic to $S_3$; see [70], p. 409.

pag 27 [10]

Linear transformations and matrices

The most important class of geometrical manipulations is the set of linear transformations, for which the effect on a point is given by the linear equations:

\[ \begin{aligned} x_f &= a_{11}x_i + a_{12}y_i + a_{13}z_i \\ y_f &= a_{21}x_i + a_{22}y_i + a_{23}z_i \\ z_f &= a_{31}x_i + a_{32}y_i + a_{33}z_i \end{aligned} \]

in which $(x_i, y_i, z_i)$ and $(x_f, y_f, z_f)$ are vectors representing the initial and final points, and the $a_{ij}$ are real numbers characterizing the transformation. All the covering operations we have dealt with are of this type.

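Matrix notation is a shorthand way of writing such systems of linear equations that avoids tedious copying of the symbols $x_i$, $y_i$ and $z_i$. For example, the application of the identity matrix,

\[ \begin{pmatrix} x_f \\ y_f \\ z_f \end{pmatrix} = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix} \begin{pmatrix} x_i \\ y_i \\ z_i \end{pmatrix} \]

is an abbreviation of the system of equations:

\[ \begin{aligned} x_f &= 1x_i + 0y_i + 0z_i \\ y_f &= 0x_i + 1y_i + 0z_i \\ z_f &= 0x_i + 0y_i + 1z_i \end{aligned} \]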

In general, the array of numbers

\[ \begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{pmatrix} \]

is called a matrix. The numbers $a_{ij}$ are its elements: the first subscript specifies the row and the second subscript specifies the column.

Each of the symmetry operations we have defined geometrically can be represented by a matrix. The

elements of the matrices depend on the choice of coordinate system.

The operation $\sigma_v$, the mirror reflection in the y-z plane, has the effect in this coordinate system of reversing the sign of the x-coordinate of any point it operates on. This effect is specified by the equations

\[ x_f = -x_i, \qquad y_f = y_i, \qquad z_f = z_i \]

or by the matrix equation:

\[ \begin{pmatrix} x_f \\ y_f \\ z_f \end{pmatrix} = \begin{pmatrix} -1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix} \begin{pmatrix} x_i \\ y_i \\ z_i \end{pmatrix} \]

The identity operation is expressed by the identity matrix:

\[ I = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix} \]

Successive transformations; matrix multiplication

Because the successive application of two linear transformations is itself a linear transformation, it

must also correspond to a matrix. The matrix corresponding to a compound transformation can be

computed directly from the matrices corresponding to the individual transformations. Let us derive the

formulas; for simplicity we shall work in two dimensions. Given two linear transformations:

\[ \begin{aligned} x_f &= a_{11}x_i + a_{12}y_i \\ y_f &= a_{21}x_i + a_{22}y_i \end{aligned} \]

and

\[ \begin{aligned} x'_f &= b_{11}x'_i + b_{12}y'_i \\ y'_f &= b_{21}x'_i + b_{22}y'_i \end{aligned} \]

use the final point of the first transformation as the initial point of the second. That is, let

\[ \begin{aligned} x'_i &= x_f = a_{11}x_i + a_{12}y_i \\ y'_i &= y_f = a_{21}x_i + a_{22}y_i \end{aligned} \]

then

\[ \begin{aligned} x'_f &= b_{11}(a_{11}x_i + a_{12}y_i) + b_{12}(a_{21}x_i + a_{22}y_i) \\ y'_f &= b_{21}(a_{11}x_i + a_{12}y_i) + b_{22}(a_{21}x_i + a_{22}y_i) \end{aligned} \]

or

\[ \begin{aligned} x'_f &= (b_{11}a_{11} + b_{12}a_{21})\,x_i + (b_{11}a_{12} + b_{12}a_{22})\,y_i \\ y'_f &= (b_{21}a_{11} + b_{22}a_{21})\,x_i + (b_{21}a_{12} + b_{22}a_{22})\,y_i \end{aligned} \]

The last set of equations is in the standard form for a linear transformation. Its matrix form is:

\[ \begin{pmatrix} x'_f \\ y'_f \end{pmatrix} = \begin{pmatrix} b_{11}a_{11} + b_{12}a_{21} & b_{11}a_{12} + b_{12}a_{22} \\ b_{21}a_{11} + b_{22}a_{21} & b_{21}a_{12} + b_{22}a_{22} \end{pmatrix} \begin{pmatrix} x_i \\ y_i \end{pmatrix} \]

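In more than two dimensions, the calculation is similar. The general result is that if the matrix C represents the transformation with matrix A followed by the transformation with matrix B, where

\[ C=\begin{pmatrix} c_{11} & \cdots & c_{1n} \\ \vdots & \ddots & \vdots \\ c_{n1} & \cdots & c_{nn} \end{pmatrix},\quad A=\begin{pmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{n1} & \cdots & a_{nn} \end{pmatrix},\quad B=\begin{pmatrix} b_{11} & \cdots & b_{1n} \\ \vdots & \ddots & \vdots \\ b_{n1} & \cdots & b_{nn} \end{pmatrix} \]

we say that C is the product of B and A: $C=BA$. The elements of C are given by the formula:

\[ c_{ik} = \sum_{j=1}^{n} b_{ij}\,a_{jk} \]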

If the rows of B and the columns of A are considered as vectors, then the element $c_{ik}$ is the dot product of the i-th row of B with the k-th column of A:

\[ c_{ik} = \begin{pmatrix} b_{i1} & b_{i2} & \cdots & b_{in} \end{pmatrix} \begin{pmatrix} a_{1k} \\ a_{2k} \\ \vdots \\ a_{nk} \end{pmatrix} \]
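The statement that the compound transformation corresponds to the product $C = BA$ can be checked on a small example. This is a minimal sketch with arbitrarily chosen integer matrices; the names `apply` and `matmul` and the numbers are illustrative only.

```python
def apply(m, p):
    """Apply the linear transformation with matrix m to the point p."""
    return [sum(m[i][j] * p[j] for j in range(len(p))) for i in range(len(m))]


def matmul(b, a):
    """Matrix product with elements c_ik = sum_j b_ij * a_jk."""
    n = len(a)
    return [[sum(b[i][j] * a[j][k] for j in range(n)) for k in range(len(a[0]))]
            for i in range(len(b))]


A = [[1, 2], [3, 4]]      # first transformation (arbitrary example)
B = [[0, 1], [-1, 5]]     # second transformation (arbitrary example)
point = [7, -2]

# Apply A, then B, one step at a time ...
step_by_step = apply(B, apply(A, point))
# ... and compare with the single matrix C = BA.
combined = apply(matmul(B, A), point)

# The order matters: AB is in general a different matrix.
BA = matmul(B, A)
AB = matmul(A, B)
```

Both routes give the same final point, while `AB` and `BA` differ, which is why the first transformation applied stands on the right of the product.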

The effect on a matrix of a change in coordinate system

The elements of the matrix that corresponds to a geometrical operation such as a rotation depend on the

coordinate system in which it is expressed. Consider a mirror reflection, in two dimensions, expressed

in three different coordinate systems, as shown in Figure 5.2. The mirror itself is in each case vertical, independent of the orientation of the coordinate system.

Figure 5.2. Expression of a reflection in a mirror plane in three different coordinate systems. Note that the points $(x_i, y_i)$ and $(x_f, y_f)$ don't change position. Only the coordinate axes change.

The relationships between the matrices representing the reflection in different coordinate systems are

expressible in terms of the matrix S that defines the relationships between the coordinate systems

themselves. Suppose $(x, y)$ and $(x', y')$ are two pairs of normalized vectors oriented along the axes of two Cartesian coordinate systems related by a linear transformation:

\[ \begin{pmatrix} x' \\ y' \end{pmatrix} = S \begin{pmatrix} x \\ y \end{pmatrix} \]

If the matrix A represents the mirror reflection in the $(x, y)$ coordinate system, then the matrix that represents the reflection in the $(x', y')$ coordinate system is the triple matrix product $S^{-1}AS$, where $S^{-1}$ is the inverse of S. Such a change in representation induced by a change in coordinate system is called a similarity transformation.

Traces and determinants

Linear transformations that correspond to nonorthogonal matrices distort lengths or angles. The trace

and determinant of a matrix provide partial measures of the distortions introduced. The trace of a

matrix is defined as the sum of the diagonal elements.

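\[ \operatorname{Tr} A = \sum_{i=1}^{n} a_{ii} \]

The determinant of a 2 × 2 matrix is

\[ \det\begin{pmatrix} a & b \\ c & d \end{pmatrix} = ad - bc \]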

Analogous but more complicated formulas define the determinants of square matrices of higher

dimensions. (A square matrix is a matrix which has the same number of rows as columns.) It is not

possible to define the determinant of a non-square matrix.

What conclusions do these examples suggest? First, note that only the first six examples are orthogonal transformations. The determinant of each of these matrices is +1 or −1. Every matrix that represents an orthogonal transformation must have determinant ±1.

The importance of the trace and determinant lies in their independence of the coordinate system in

which the matrix is expressed. Recalling that a change in coordinate system leads to a change in the

matrix representation of a transformation by a similarity transformation, the independence of trace and

determinant on coordinate system is expressed by the equations:

\[ \operatorname{Tr}(S^{-1}AS) = \operatorname{Tr} A \]

\[ \det(S^{-1}AS) = \det A \]

where S is an orthogonal matrix. Thus the trace and determinant provide numerical characteristics of a

transformation independent of any coordinate system.
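The invariance of trace and determinant can be illustrated numerically. The sketch below takes the mirror reflection $x \to -x$ as A and a rotation by an arbitrarily chosen angle as S; the angle 0.7 rad and all helper names are assumptions made for the example.

```python
import math


def matmul(a, b):
    """2x2 matrix product ab."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def trace(m):
    return m[0][0] + m[1][1]


def det(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def inverse(m):
    """Inverse of a 2x2 matrix with non-zero determinant."""
    d = det(m)
    return [[m[1][1] / d, -m[0][1] / d], [-m[1][0] / d, m[0][0] / d]]


A = [[-1.0, 0.0], [0.0, 1.0]]                 # mirror reflection x -> -x
phi = 0.7                                     # arbitrary rotation angle (radians)
S = [[math.cos(phi), -math.sin(phi)],
     [math.sin(phi), math.cos(phi)]]          # orthogonal change of axes

A_new = matmul(matmul(inverse(S), A), S)      # similarity transformation
```

The elements of `A_new` differ from those of `A`, but its trace is still 0 and its determinant still −1.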

Pag 52 [10]

4.4 Group representations

A representation of a symmetry group is a set of square matrices, all of the same dimension,

corresponding to the elements of the group, such that multiplication of the matrices is consistent with

the multiplication table of the group. That is, the product of matrices corresponding to two elements of

the group corresponds to that element of the group equal to the product of the two group elements in

the group itself. Representations can be of any dimension; 1 × 1 arrays are of course just ordinary numbers.

If each group element corresponds to a different matrix, the representation is said to be faithful. A faithful representation is a matrix group that is isomorphic to the group being represented. If the same matrix corresponds to more than one group element, the representation is called unfaithful. Unfaithful

representations of any group are available by assigning the number 1 to every element, or by assigning

the identity matrix of some dimension to every element. (But the number 1 is a faithful representation

of the group C1.) The collection of matrices occurring in an unfaithful representation of a group, if

taken each only once, forms a group isomorphic to a subgroup of the original group. Thus to any

unfaithful representation of a group there corresponds a faithful representation of a subgroup.

Examples of group representations:


4.5 Unitary Matrix

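The following complex matrix is unitary; that is, its row vectors form an orthonormal set in $\mathbb{C}^3$:

\[ A = \begin{pmatrix} \dfrac{1}{2} & \dfrac{1+i}{2} & -\dfrac{1}{2} \\[2mm] -\dfrac{i}{\sqrt{3}} & \dfrac{i}{\sqrt{3}} & \dfrac{1}{\sqrt{3}} \\[2mm] \dfrac{5i}{2\sqrt{15}} & \dfrac{3+i}{2\sqrt{15}} & \dfrac{4+3i}{2\sqrt{15}} \end{pmatrix} \tag{4.5.1} \]

Let $r_1$, $r_2$, $r_3$ be defined as follows:

\[ r_1 = \left( \frac{1}{2},\ \frac{1+i}{2},\ -\frac{1}{2} \right), \quad r_2 = \left( -\frac{i}{\sqrt{3}},\ \frac{i}{\sqrt{3}},\ \frac{1}{\sqrt{3}} \right), \quad r_3 = \left( \frac{5i}{2\sqrt{15}},\ \frac{3+i}{2\sqrt{15}},\ \frac{4+3i}{2\sqrt{15}} \right) \tag{4.5.2} \]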

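The length of $r_1$ is (the second factor in each term is complex conjugated)

\[ \| r_1 \| = (r_1 \cdot r_1)^{1/2} = \left[ \left(\frac{1}{2}\right)\left(\frac{1}{2}\right) + \left(\frac{1+i}{2}\right)\left(\frac{1-i}{2}\right) + \left(-\frac{1}{2}\right)\left(-\frac{1}{2}\right) \right]^{1/2} = \left[ \frac{1}{4} + \frac{2}{4} + \frac{1}{4} \right]^{1/2} = 1 \tag{4.5.3} \]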

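For the vectors $r_2$ and $r_3$ the result is the same.

The inner product $r_1 \cdot r_2$ is given by:

\[ \begin{aligned} r_1 \cdot r_2 &= \left(\frac{1}{2}\right)\overline{\left(-\frac{i}{\sqrt{3}}\right)} + \left(\frac{1+i}{2}\right)\overline{\left(\frac{i}{\sqrt{3}}\right)} + \left(-\frac{1}{2}\right)\overline{\left(\frac{1}{\sqrt{3}}\right)} \\ &= \left(\frac{1}{2}\right)\left(\frac{i}{\sqrt{3}}\right) + \left(\frac{1+i}{2}\right)\left(-\frac{i}{\sqrt{3}}\right) + \left(-\frac{1}{2}\right)\left(\frac{1}{\sqrt{3}}\right) \\ &= \frac{i}{2\sqrt{3}} - \frac{i}{2\sqrt{3}} + \frac{1}{2\sqrt{3}} - \frac{1}{2\sqrt{3}} = 0 \end{aligned} \tag{4.5.4} \]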

Similarly, $r_1 \cdot r_3 = 0$ and $r_2 \cdot r_3 = 0$, and we can conclude that $\{ r_1, r_2, r_3 \}$ is an orthonormal set. (Try showing that the column vectors of A also form an orthonormal set in $\mathbb{C}^3$.)

See [15], p. 174:

In addition, the determinant of such a matrix has modulus unity. The matrices representing rotations, reflections and inversions are unitary; it follows that the matrix representations of these groups are unitary.
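The row and column orthonormality of the matrix in (4.5.1) can be confirmed with a few lines of code. This is a sketch that assumes the sign pattern $r_1 = (1/2, (1+i)/2, -1/2)$, $r_2 = (-i/\sqrt{3}, i/\sqrt{3}, 1/\sqrt{3})$ for the entries; the helper names are illustrative.

```python
import math

s3, s15 = math.sqrt(3), math.sqrt(15)

# Entries of the matrix A of (4.5.1), with the assumed signs.
A = [
    [0.5, (1 + 1j) / 2, -0.5],
    [-1j / s3, 1j / s3, 1 / s3],
    [5j / (2 * s15), (3 + 1j) / (2 * s15), (4 + 3j) / (2 * s15)],
]


def inner(u, v):
    """Complex inner product; the second vector is conjugated."""
    return sum(a * b.conjugate() for a, b in zip(u, v))


def is_identity(g, tol=1e-12):
    """True if the 3x3 array g equals the unit matrix within tol."""
    return all(abs(g[i][j] - (1 if i == j else 0)) < tol
               for i in range(3) for j in range(3))


rows = A
cols = [[A[i][j] for i in range(3)] for j in range(3)]

gram_rows = [[inner(rows[i], rows[j]) for j in range(3)] for i in range(3)]
gram_cols = [[inner(cols[i], cols[j]) for j in range(3)] for i in range(3)]
```

Both Gram matrices come out as the unit matrix, which is the statement $AA^{\dagger} = A^{\dagger}A = 1$.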

4.6 Schur's Lemma

See pag 59 [68]:

Lemma:

In matrix form this states that any matrix which commutes with all the matrices of an irreducible representation must be a multiple of the unit matrix, i.e.

\[ BD(g) = D(g)B \quad \forall g \in G \quad\Rightarrow\quad B = \lambda\mathbf{1} \tag{4.6.1} \]

To prove this, let b be an eigenvector of B with eigenvalue $\lambda$:

\[ Bb = \lambda b \tag{4.6.2} \]

Then

\[ B(D(g)b) = D(g)Bb = D(g)\lambda b = \lambda(D(g)b) \tag{4.6.3} \]

(Recall from quantum mechanics that commuting operators possess common eigenvectors.) This means that $D(g)b$ is also an eigenvector of B, with the same eigenvalue $\lambda$.

In matrix form one has to solve the equation $Bb = \lambda b$, leading to the characteristic equation

\[ \det(B - \lambda\mathbf{1}) = 0 \tag{4.6.4} \]

If we are working within the framework of complex numbers, this polynomial equation is guaranteed to have at least one root $\lambda$, with a corresponding eigenvector b. The eigenvectors of B with this eigenvalue therefore span a nonzero subspace that is invariant under all the $D(g)$; since the representation is irreducible, this subspace must be the whole space, so that $B = \lambda\mathbf{1}$.

4.7 Great orthogonality theorem

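Theorem:

Consider two unitary irreducible matrix representations $\Gamma^{(i)}(G)$ and $\Gamma^{(j)}(G)$ of a group G. Then

\[ \sum_{g} \Gamma^{(i)}(g)_{\alpha\beta}\, \Gamma^{(j)}(g^{-1})_{\gamma\delta} = \frac{|G|}{l_i}\, \delta_{ij}\, \delta_{\alpha\delta}\, \delta_{\beta\gamma} \tag{4.7.1} \]

where $|G|$ is the order of the group G and $l_i$ is the dimension of $\Gamma^{(i)}(G)$. For unitary representations $\Gamma^{(j)}(g^{-1})_{\gamma\delta} = \Gamma^{(j)}(g)^{*}_{\delta\gamma}$.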

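Proof:

Define the matrix M:

\[ M \equiv \sum_{g \in G} \Gamma^{(i)}(g)\, X\, \Gamma^{(j)}(g^{-1}) \tag{4.7.2} \]

where X is some unspecified matrix. Then

\[ \begin{aligned} \Gamma^{(i)}(h)\, M &= \Gamma^{(i)}(h) \sum_{g} \Gamma^{(i)}(g)\, X\, \Gamma^{(j)}(g^{-1}) \\ &= \sum_{g} \Gamma^{(i)}(hg)\, X\, \Gamma^{(j)}(g^{-1}) \\ &= \sum_{hg} \Gamma^{(i)}(hg)\, X\, \Gamma^{(j)}\big((hg)^{-1}h\big) \\ &= \sum_{g} \Gamma^{(i)}(g)\, X\, \Gamma^{(j)}(g^{-1}h) \qquad (\text{relabeling } hg \to g) \\ &= \sum_{g} \Gamma^{(i)}(g)\, X\, \Gamma^{(j)}(g^{-1})\, \Gamma^{(j)}(h) \\ &= M\, \Gamma^{(j)}(h) \end{aligned} \tag{4.7.3} \]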

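By Schur's lemma in its form for inequivalent irreducible representations, either i = j or M = 0. If i = j then by Schur's lemma $M = \lambda\mathbf{1}$, where we find $\lambda$ by taking the trace:

\[ \sum_{g} \Gamma^{(i)}(g)\, X\, \Gamma^{(j)}(g^{-1}) = \lambda\, \delta_{ij}\, \mathbf{1} \tag{4.7.4} \]

\[ |G|\, \operatorname{tr} X = \lambda\, l_i \]

So

\[ \sum_{g} \Gamma^{(i)}(g)\, X\, \Gamma^{(j)}(g^{-1}) = \frac{|G|}{l_i}\, \delta_{ij}\, \operatorname{tr} X\, \mathbf{1} \tag{4.7.5} \]

or, in index notation (with summation over the repeated indices $\beta$ and $\gamma$):

\[ \sum_{g} \Gamma^{(i)}(g)_{\alpha\beta}\, X_{\beta\gamma}\, \Gamma^{(j)}(g^{-1})_{\gamma\delta} = \frac{|G|}{l_i}\, \delta_{\beta\gamma}\, X_{\beta\gamma}\, \delta_{ij}\, \delta_{\alpha\delta} \tag{4.7.6} \]

But since $X_{\beta\gamma}$ is arbitrary, the coefficients of each $X_{\beta\gamma}$ on the two sides must be equal:

\[ \sum_{g} \Gamma^{(i)}(g)_{\alpha\beta}\, \Gamma^{(j)}(g^{-1})_{\gamma\delta} = \frac{|G|}{l_i}\, \delta_{\beta\gamma}\, \delta_{ij}\, \delta_{\alpha\delta} \tag{4.7.7} \]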

4.8 Great orthogonality theorem (application)

Example of the permutation group on 3 objects:

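The 3! permutations of three objects form a group of order 6, commonly denoted by $S_3$ (symmetric group). This group is isomorphic to the point group $C_{3v}$, consisting of a threefold rotation axis and three vertical mirror planes. The groups have a 2-dimensional irrep (l = 2). In the case of $S_3$ one usually labels this irrep by the Young tableau $\lambda = [2,1]$ and in the case of $C_{3v}$ one usually writes $\lambda = E$. In both cases the irrep consists of the following six real matrices, each representing a single group element:

\[ \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} \quad \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix} \quad \begin{pmatrix} -\frac{1}{2} & \frac{\sqrt{3}}{2} \\ \frac{\sqrt{3}}{2} & \frac{1}{2} \end{pmatrix} \quad \begin{pmatrix} -\frac{1}{2} & -\frac{\sqrt{3}}{2} \\ -\frac{\sqrt{3}}{2} & \frac{1}{2} \end{pmatrix} \quad \begin{pmatrix} -\frac{1}{2} & \frac{\sqrt{3}}{2} \\ -\frac{\sqrt{3}}{2} & -\frac{1}{2} \end{pmatrix} \quad \begin{pmatrix} -\frac{1}{2} & -\frac{\sqrt{3}}{2} \\ \frac{\sqrt{3}}{2} & -\frac{1}{2} \end{pmatrix} \]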

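We have the following vectors, where $V_{\mu\nu}$ collects the $(\mu,\nu)$ elements of the six matrices:

\[ \begin{pmatrix} V_{11} & V_{12} \\ V_{21} & V_{22} \end{pmatrix} \]

The normalization of the (1,1), (1,2), (2,1) and (2,2) elements:

\[ \begin{aligned} V_{11} \cdot V_{11} &= \sum_{R \in G}^{6} \Gamma(R)_{11}\Gamma(R)_{11} = 1^2 + 1^2 + \left(-\tfrac{1}{2}\right)^2 + \left(-\tfrac{1}{2}\right)^2 + \left(-\tfrac{1}{2}\right)^2 + \left(-\tfrac{1}{2}\right)^2 = 3 \\ V_{12} \cdot V_{12} &= \sum_{R \in G}^{6} \Gamma(R)_{12}\Gamma(R)_{12} = 0^2 + 0^2 + \left(\tfrac{\sqrt{3}}{2}\right)^2 + \left(-\tfrac{\sqrt{3}}{2}\right)^2 + \left(\tfrac{\sqrt{3}}{2}\right)^2 + \left(-\tfrac{\sqrt{3}}{2}\right)^2 = 3 \\ V_{21} \cdot V_{21} &= \sum_{R \in G}^{6} \Gamma(R)_{21}\Gamma(R)_{21} = 0^2 + 0^2 + \left(\tfrac{\sqrt{3}}{2}\right)^2 + \left(-\tfrac{\sqrt{3}}{2}\right)^2 + \left(-\tfrac{\sqrt{3}}{2}\right)^2 + \left(\tfrac{\sqrt{3}}{2}\right)^2 = 3 \\ V_{22} \cdot V_{22} &= \sum_{R \in G}^{6} \Gamma(R)_{22}\Gamma(R)_{22} = 1^2 + (-1)^2 + \left(\tfrac{1}{2}\right)^2 + \left(\tfrac{1}{2}\right)^2 + \left(-\tfrac{1}{2}\right)^2 + \left(-\tfrac{1}{2}\right)^2 = 3 \end{aligned} \tag{4.8.1} \]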

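The orthogonality of the (1,1) and (2,2) elements:

\[ V_{11} \cdot V_{22} = \sum_{R \in G}^{6} \Gamma(R)_{11}\Gamma(R)_{22} = 1^2 + (1)(-1) + \left(-\tfrac{1}{2}\right)\left(\tfrac{1}{2}\right) + \left(-\tfrac{1}{2}\right)\left(\tfrac{1}{2}\right) + \left(-\tfrac{1}{2}\right)^2 + \left(-\tfrac{1}{2}\right)^2 = 0 \tag{4.8.2} \]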

Similar relations hold for the orthogonality of the elements (1,1) and (1,2), etc. One verifies easily in

the example that all sums of corresponding matrix elements vanish because of the orthogonality of the

given irrep to the identity irrep.
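All sixteen inner products of this example can be checked at once. The sketch below assumes the usual sign pattern for the six real matrices of the two-dimensional irrep (identity, one diagonal reflection, two further reflections, two rotations); the names `irrep`, `V` and `gram` are illustrative.

```python
import math
from itertools import product

h = math.sqrt(3) / 2

# Six real 2x2 matrices of the two-dimensional irrep (assumed signs).
irrep = [
    [[1, 0], [0, 1]],
    [[1, 0], [0, -1]],
    [[-0.5, h], [h, 0.5]],
    [[-0.5, -h], [-h, 0.5]],
    [[-0.5, h], [-h, -0.5]],
    [[-0.5, -h], [h, -0.5]],
]


def V(m, n):
    """Six-component vector of the (m, n) matrix elements (0-based indices)."""
    return [g[m][n] for g in irrep]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


order, dim = len(irrep), 2
positions = list(product(range(2), repeat=2))   # (0,0), (0,1), (1,0), (1,1)

# gram[(a, b)] is V_a . V_b; the theorem predicts (order/dim) * delta_ab.
gram = {(a, b): dot(V(*a), V(*b)) for a in positions for b in positions}

# Orthogonality to the identity irrep: each vector sums to zero.
sums = {a: sum(V(*a)) for a in positions}
```

The diagonal entries of `gram` equal $|G|/l = 6/2 = 3$ and all others vanish, in line with (4.8.1) and (4.8.2).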

4.9 $D_3$ representations and irreducible representations

See [69]pag 66:

A matrix representation of a group is defined as a set of square, nonsingular matrices (matrices with

nonvanishing determinants) that satisfy the multiplication table of the group when the matrices are

multiplied by the ordinary rules of matrix multiplication. There are other kinds of representations (see

Section 6.1) but unless there is a specific statement to that effect, it will be understood that a

representation means a matrix representation.

We give several examples of representations of the group D3:

\[ \Gamma^{(1)}(E) = \Gamma^{(1)}(A) = \Gamma^{(1)}(B) = \Gamma^{(1)}(C) = \Gamma^{(1)}(D) = \Gamma^{(1)}(F) = 1 \tag{4.9.1} \]

This is a one-dimensional representation (1 × 1 matrices) which obviously satisfies the group multiplication table. It appears to be trivial but nevertheless plays an important role in later developments. This representation is called the totally symmetric representation or the unit representation. Another one-dimensional representation is

\[ \Gamma^{(2)}(E) = \Gamma^{(2)}(D) = \Gamma^{(2)}(F) = 1, \qquad \Gamma^{(2)}(A) = \Gamma^{(2)}(B) = \Gamma^{(2)}(C) = -1 \tag{4.9.2} \]

A two-dimensional representation consisting of 2 x 2 matrices is:

I

(3)

( E)=

(

1 0

0 1

)

I

(3)

( A)=

(

1 0

0 1

)

I

(3)

( B)=

(

1/ 2 .3/ 2

.3/ 2 1/ 2

)

28,31

I

(3)

(C)=

(

1/ 2 .3/ 2

.3/ 2 1/ 2

)

I

(3)

( D)=

(

1/ 2 .3/ 2

.3/ 2 1/ 2

)

I

(3)

( F)=

(

1/ 2 .3/ 2

.3/ 2 1/ 2

)

(4.9.3)

We may therefore regard these matrices as a three-dimensional representation of $D_3$. I have calculated them in Mathematica (see (4.11.31)) and found a number of mistakes in Weissbluth; the correct matrices are:

I

( 4)

( E)=

(

1 0 0

0 1 0

0 0 1

)

I

( 4)

( A)=

(

1 0 0

0 1 0

0 0 1

)

I

( 4)

( B)=

(

1/ 2 .3/ 2 0

.3/ 2 1/ 2 0

0 0 1

)

I

( 4)

(C)=

(

1/ 2 .3/ 2 0

.3/ 2 1/ 2 0

0 0 1

)

35

I

( 4)

( D)=

(

1/ 2 .3/ 2 0

.3/ 2 1/ 2 0

0 0 1

)

I

( 4)

( F)=

(

1/ 2 .3/ 2 0

.3/ 2 1/ 2 0

0 0 1

)

(4.9.4)

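Another three-dimensional representation is the set

\[ \Gamma^{(5)}(E) = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}, \quad \Gamma^{(5)}(A) = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & 1 & 0 \end{pmatrix}, \quad \Gamma^{(5)}(C) = \begin{pmatrix} 0 & 0 & 1 \\ 0 & 1 & 0 \\ 1 & 0 & 0 \end{pmatrix} \]

\[ \Gamma^{(5)}(B) = \begin{pmatrix} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{pmatrix}, \quad \Gamma^{(5)}(D) = \begin{pmatrix} 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \end{pmatrix}, \quad \Gamma^{(5)}(F) = \begin{pmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ 1 & 0 & 0 \end{pmatrix} \tag{4.9.5} \]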

The number of representations that may be constructed is without limit; as a final example we show a

six-dimensional representation:

26

I

(6)

( E)=

(

1 0 0 0 0 0

0 1 0 0 0 0

0 0 1 0 0 0

0 0 0 1 0 0

0 0 0 0 1 0

0 0 0 0 0 1

)

I

(6)

( A)=

(

1 0 0 0 0 0

0 1 0 0 0 0

0 0 1 0 0 0

0 0 0 1 0 0

0 0 0 0 1 0

0 0 0 0 0 1

)

I

(6)

( B)=

(

1/ 2 .3/ 2 0 0 0 0

.3/ 2 1/ 2 0 0 0 0

0 0 1/ 2 .3/ 2 0 0

0 0 .3/ 2 1/ 2 0 0

0 0 0 0 1 0

0 0 0 0 0 1

)

I

(6)

(C)=

(

1/ 2 .3/ 2 0 0 0 0

.3/ 2 1/ 2 0 0 0 0

0 0 1/ 2 .3/ 2 0 0

0 0 .3/ 2 1/ 2 0 0

0 0 0 0 1 0

0 0 0 0 0 1

)

I

(6)

( D)=

(

1/ 2 .3/ 2 0 0 0 0

.3/ 2 1/ 2 0 0 0 0

0 0 1/ 2 .3/ 2 0 0

0 0 .3/ 2 1/ 2 0 0

0 0 0 0 1 0

0 0 0 0 0 1

)

I

(6)

( F)=

(

1/ 2 .3/ 2 0 0 0 0

.3/ 2 1/ 2 0 0 0 0

0 0 1/ 2 .3/ 2 0 0

0 0 .3/ 2 1/ 2 0 0

0 0 0 0 1 0

0 0 0 0 0 1

)

(4.9.6)

If the matrices belonging to a representation $\Gamma$ are subjected to a similarity transformation, the result is a new representation $\Gamma'$. The two representations $\Gamma$ and $\Gamma'$ are said to be equivalent. If $\Gamma$ and $\Gamma'$ cannot be transformed into one another by a similarity transformation, $\Gamma$ and $\Gamma'$ are then said to be inequivalent. It may be shown (eq. (4.5.1)) that for a finite group every representation is equivalent to a unitary representation (i.e., consisting entirely of unitary matrices). We shall assume henceforth that all representations are unitary unless the contrary is explicitly stated (see also Section 3.4).

An important notion concerning representations is that of reducibility. Consider, for example, $\Gamma^{(6)}(B)$ in (4.9.6):

I

(6)

( B)=

(

1/ 2 .3/ 2 0 0 0 0

.3/ 2 1/ 2 0 0 0 0

0 0 1/ 2 .3/ 2 0 0

0 0 .3/ 2 1/ 2 0 0

0 0 0 0 1 0

0 0 0 0 0 1

)

(4.9.7)

This matrix consists of four separate blocks along the main diagonal: two of the blocks are two-dimensional and the other two are one-dimensional. Moreover, examination of the separate blocks reveals that the two-dimensional blocks are identical with the representation matrix $\Gamma^{(3)}(B)$ [Eq. (4.9.3)] and the one-dimensional blocks coincide with $\Gamma^{(1)}(B)$ [Eq. (4.9.1)]. Therefore $\Gamma^{(6)}(B)$ is said to be reducible into $2\Gamma^{(3)}(B)$ and $2\Gamma^{(1)}(B)$. Symbolically this is expressed by

\[ \Gamma^{(6)}(B) = 2\Gamma^{(3)}(B) + 2\Gamma^{(1)}(B), \qquad \Gamma^{(6)}(B) = 2\Gamma^{(3)}(B) \oplus 2\Gamma^{(1)}(B) \tag{4.9.8} \]

in which the right side of the equation signifies that $\Gamma^{(3)}(B)$ appears twice and $\Gamma^{(1)}(B)$ appears twice along the main diagonal in (4.9.7). In this context the symbol + clearly has nothing to do with addition. The symbol $\oplus$, which means direct sum, is also used.

The character is denoted by $\chi^{(j)}(R)$, thus

\[ \chi^{(j)}(R) = \sum_{\alpha} \Gamma^{(j)}_{\alpha\alpha}(R) \tag{4.9.9} \]

Table 1.1 Character table of $D_3$

                   E     A     B     C     D     F
$\Gamma^{(1)}$     1     1     1     1     1     1
$\Gamma^{(2)}$     1    -1    -1    -1     1     1
$\Gamma^{(3)}$     2     0     0     0    -1    -1
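The rows of the character table satisfy an orthogonality relation that follows from the great orthogonality theorem, and they can be used to count how often each irreducible representation occurs in a reducible one. The sketch below checks this for Table 1.1 and for the permutation representation $\Gamma^{(5)}$, whose characters (3, 1, 1, 1, 0, 0) are the traces of the matrices in (4.9.5). Dictionary and function names are illustrative.

```python
# Characters of D3 in the column order E, A, B, C, D, F (Table 1.1).
characters = {
    "Gamma1": [1, 1, 1, 1, 1, 1],
    "Gamma2": [1, -1, -1, -1, 1, 1],
    "Gamma3": [2, 0, 0, 0, -1, -1],
}
order = 6


def overlap(chi_a, chi_b):
    """Sum over group elements of chi_a(R) * chi_b(R) (characters are real here)."""
    return sum(x * y for x, y in zip(chi_a, chi_b))


# Row orthogonality: overlap equals the group order for equal irreps, else 0.
row_check = {(a, b): overlap(characters[a], characters[b])
             for a in characters for b in characters}

# The squared dimensions (characters of E) add up to the group order.
dimension_sum = sum(chi[0] ** 2 for chi in characters.values())

# Characters of the 3x3 permutation matrices: number of 1s on each diagonal.
chi_gamma5 = [3, 1, 1, 1, 0, 0]
multiplicity = {name: overlap(chi, chi_gamma5) // order
                for name, chi in characters.items()}
```

The resulting multiplicities are 1, 0 and 1, i.e. the permutation representation contains $\Gamma^{(1)}$ and $\Gamma^{(3)}$ once each and $\Gamma^{(2)}$ not at all.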

4.10 Basis Functions

The representations of a group are intimately connected with sets of functions called basis functions. A few examples will help to establish the central idea. Let

\[ \psi_1(\mathbf{r}) = x, \qquad \psi_2(\mathbf{r}) = y \tag{4.10.1} \]

We now inquire as to how these functions are altered under the coordinate transformations E, A, ..., F which are elements of the group $D_3$. Under any coordinate transformation described by $\mathbf{r}' = R\mathbf{r}$ (R is a rotation matrix; see (4.2.4), (5.2.14)), a function $f(\mathbf{r})$ transforms in accordance with

\[ P_R f(\mathbf{r}) = f(R^{-1}\mathbf{r}) \tag{4.10.2} \]

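For R = E we obtain trivially,

\[ P_E \psi_1(\mathbf{r}) = \psi_1(\mathbf{r}) = x, \qquad P_E \psi_2(\mathbf{r}) = \psi_2(\mathbf{r}) = y \tag{4.10.3} \]

or, in matrix form:

\[ P_E (\psi_1, \psi_2) = (\psi_1, \psi_2) \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} \tag{4.10.4} \]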

The general statement is that a set of linearly independent functions $\psi_1^{(j)}(\mathbf{r}), \psi_2^{(j)}(\mathbf{r}), \dots, \psi_n^{(j)}(\mathbf{r})$ are basis functions for the representation $\Gamma^{(j)}$ of a group if

\[ P_R \psi_k^{(j)}(\mathbf{r}) = \sum_{\lambda=1}^{n} \psi_\lambda^{(j)}(\mathbf{r})\, \Gamma^{(j)}_{\lambda k}(R) \tag{4.10.5} \]

for all the elements R of the group.
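The definition of basis functions can be made concrete for the pair $\psi_1 = x$, $\psi_2 = y$. The sketch below implements $P_R f(\mathbf{r}) = f(R^{-1}\mathbf{r})$ for a 2 × 2 rotation and reads off the matrix that multiplies the row of basis functions. It assumes a counter-clockwise rotation convention; `rotation`, `P` and `representation` are illustrative names.

```python
import math


def rotation(phi):
    """2x2 rotation matrix, assuming the counter-clockwise convention."""
    c, s = math.cos(phi), math.sin(phi)
    return [[c, -s], [s, c]]


def transpose(m):
    return [list(row) for row in zip(*m)]


def apply(m, r):
    """Matrix m acting on the point r = [x, y]."""
    return [m[0][0] * r[0] + m[0][1] * r[1], m[1][0] * r[0] + m[1][1] * r[1]]


def P(R, f):
    """Transformed function P_R f, defined by (P_R f)(r) = f(R^{-1} r)."""
    R_inv = transpose(R)            # valid because R is orthogonal
    return lambda r: f(apply(R_inv, r))


psi = [lambda r: r[0], lambda r: r[1]]   # psi_1 = x, psi_2 = y


def representation(R):
    """Gamma with P_R psi_k = sum_l psi_l * Gamma[l][k].

    Since psi_l(e_m) = delta_lm on the unit vectors e_m, evaluating
    P_R psi_k at e_l gives Gamma[l][k] directly.
    """
    e = [[1.0, 0.0], [0.0, 1.0]]
    return [[P(R, psi[k])(e[l]) for k in range(2)] for l in range(2)]


R = rotation(2 * math.pi / 3)
gamma = representation(R)

# Check the defining relation at an arbitrary point.
r = [0.3, -1.7]
lhs = [P(R, psi[k])(r) for k in range(2)]
rhs = [sum(psi[l](r) * gamma[l][k] for l in range(2)) for k in range(2)]
```

For this particular basis and an orthogonal R the matrix `gamma` coincides with R itself, so the functions x and y carry the same two-dimensional representation as the coordinate transformation.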

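The basis set for a representation consists of linearly independent functions. These can always be chosen so as to be orthonormal, i.e.,

\[ \langle \psi_l^{(j)}(\mathbf{r}) \,|\, \psi_k^{(j)}(\mathbf{r}) \rangle = \delta_{lk} \tag{4.10.6} \]

From (4.10.5),

\[ \langle \psi_l^{(j)}(\mathbf{r}) \,|\, P_R \,|\, \psi_k^{(j)}(\mathbf{r}) \rangle = \sum_{\lambda} \langle \psi_l^{(j)}(\mathbf{r}) \,|\, \psi_\lambda^{(j)}(\mathbf{r}) \rangle\, \Gamma^{(j)}_{\lambda k}(R) = \Gamma^{(j)}_{lk}(R) \tag{4.10.7} \]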

4.11 Projection Operators ($D_3$)

We will prove that (see (4.11.31)):

\[ \Gamma^{(5)} = \Gamma^{(3)} + \Gamma^{(1)} \tag{4.11.1} \]

It is pertinent to inquire what relations, if any, exist between the basis functions of $\Gamma^{(5)}$ and the basis functions of the component irreducible representations $\Gamma^{(3)}$ and $\Gamma^{(1)}$. Suppose $(f_1, f_2, f_3)$ is the set of (normalized) basis functions of $\Gamma^{(5)}$ [Eq. (4.9.5)]. It follows, from the definition of basis functions (4.10.5), that

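\[ \begin{aligned} P_E (f_1, f_2, f_3) &= (f_1, f_2, f_3) \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix} = (f_1, f_2, f_3) \\ P_A (f_1, f_2, f_3) &= (f_1, f_2, f_3) \begin{pmatrix} 1 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & 1 & 0 \end{pmatrix} = (f_1, f_3, f_2) \\ P_B (f_1, f_2, f_3) &= (f_1, f_2, f_3) \begin{pmatrix} 0 & 0 & 1 \\ 0 & 1 & 0 \\ 1 & 0 & 0 \end{pmatrix} = (f_3, f_2, f_1) \\ P_C (f_1, f_2, f_3) &= (f_1, f_2, f_3) \begin{pmatrix} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{pmatrix} = (f_2, f_1, f_3) \\ P_D (f_1, f_2, f_3) &= (f_1, f_2, f_3) \begin{pmatrix} 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \end{pmatrix} = (f_2, f_3, f_1) \\ P_F (f_1, f_2, f_3) &= (f_1, f_2, f_3) \begin{pmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ 1 & 0 & 0 \end{pmatrix} = (f_3, f_1, f_2) \end{aligned} \tag{4.11.2} \]

where in each case the row $(f_1, f_2, f_3)$ has been multiplied by the matrix to give a row.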

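Let $\psi_k^{(j)}(\mathbf{r})$ be a basis function belonging to the jth irreducible representation $\Gamma^{(j)}$ (see (4.10.5)). Then, by definition,

\[ P_R \psi_k^{(j)}(\mathbf{r}) = \sum_{\lambda=1}^{n} \psi_\lambda^{(j)}(\mathbf{r})\, \Gamma^{(j)}_{\lambda k}(R) \tag{4.11.3} \]

Multiplying through by $\Gamma^{(j')}_{\lambda' k'}(R)^{*}$ and summing over R, we obtain

\[ \begin{aligned} \sum_{R} \Gamma^{(j')}_{\lambda' k'}(R)^{*}\, P_R\, \psi_k^{(j)}(\mathbf{r}) &= \sum_{R} \sum_{\lambda=1}^{l_j} \Gamma^{(j')}_{\lambda' k'}(R)^{*}\, \psi_\lambda^{(j)}(\mathbf{r})\, \Gamma^{(j)}_{\lambda k}(R) \\ &= \frac{h}{l_j} \sum_{\lambda} \psi_\lambda^{(j)}\, \delta_{jj'}\, \delta_{\lambda\lambda'}\, \delta_{kk'} \\ &= \frac{h}{l_j}\, \psi_{\lambda'}^{(j)}\, \delta_{jj'}\, \delta_{kk'} \end{aligned} \tag{4.11.4} \]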

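in which the second and third equalities are a consequence of the orthogonality theorem (4.7.1). We may now define an operator

\[ P^{(j)}_{\lambda k} = \frac{l_j}{h} \sum_{R} \Gamma^{(j)}_{\lambda k}(R)^{*}\, P_R \tag{4.11.5} \]

having, from (4.11.4), the property

\[ P^{(j)}_{\lambda k}\, \psi_l^{(i)} = \psi_\lambda^{(i)}\, \delta_{ij}\, \delta_{lk} \tag{4.11.6} \]

or, when i = j and l = k,

\[ P^{(j)}_{\lambda k}\, \psi_k^{(j)} = \psi_\lambda^{(j)} \tag{4.11.7} \]

When $\lambda = k$, (4.11.6) becomes

\[ P^{(j)}_{kk}\, \psi_l^{(i)} = \psi_k^{(i)}\, \delta_{ij}\, \delta_{lk} \tag{4.11.8} \]

or

\[ P^{(j)}_{kk}\, \psi_k^{(j)} = \psi_k^{(j)}, \qquad P^{(j)}_{kk}\, \psi_l^{(i)} = 0 \quad (i \neq j \text{ or } l \neq k) \tag{4.11.9} \]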

From (4.11.9) it is seen that $\psi_k^{(j)}$ is an eigenvector of $P^{(j)}_{kk}$ with eigenvalue equal to one. Also

\[ \left( P^{(j)}_{kk} \right)^2 \psi_k^{(j)} = P^{(j)}_{kk}\, \psi_k^{(j)} = \psi_k^{(j)} \tag{4.11.10} \]

so that

\[ \left( P^{(j)}_{kk} \right)^2 = P^{(j)}_{kk} = \frac{l_j}{h} \sum_{R} \Gamma^{(j)}_{kk}(R)^{*}\, P_R \tag{4.11.11} \]

$P^{(j)}_{kk}$ is known as a projection operator; operators that obey a relation of the type $O^2 = O$ are said to be idempotent. From (4.11.11) we see that projection operators are idempotent.

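If one now sums $P^{(j)}_{kk}$ over k, then, from (4.11.11),

\[ \sum_{k} P^{(j)}_{kk} \equiv P^{(j)} = \frac{l_j}{h} \sum_{R} \sum_{k} \Gamma^{(j)}_{kk}(R)^{*}\, P_R = \frac{l_j}{h} \sum_{R} \chi^{(j)}(R)^{*}\, P_R \tag{4.11.12} \]

$P^{(j)}$ is also a projection operator (for example, $\Gamma^{(3)}$ in (4.9.3) consists of 2 × 2 matrices, so the sum runs over kk = 11, 22).

Illustration of (4.11.12):

\[ \begin{aligned} P^{(1)} &= \frac{l_1}{h} \sum_{R} \chi^{(1)}(R)^{*}\, P_R = \frac{1}{6} \left[ P_E + P_A + P_B + P_C + P_D + P_F \right] \\ P^{(2)} &= \frac{l_2}{h} \sum_{R} \chi^{(2)}(R)^{*}\, P_R = \frac{1}{6} \left[ P_E - P_A - P_B - P_C + P_D + P_F \right] \\ P^{(3)} &= \frac{2}{6} \left[ 2P_E - P_D - P_F \right] \end{aligned} \tag{4.11.13} \]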

To prove (4.11.1) we use (4.11.12) to generate the basis functions for $\Gamma^{(3)}$ in the decomposition of $\Gamma^{(5)}$ given by (4.11.1). From (4.9.3) or Table 1.1 (character table of $D_3$) we have

\[ \chi^{(3)}(E) = 2, \qquad \chi^{(3)}(A) = \chi^{(3)}(B) = \chi^{(3)}(C) = 0, \qquad \chi^{(3)}(D) = \chi^{(3)}(F) = -1 \tag{4.11.14} \]

Therefore, using (4.11.2) (for example, $P_D f_2 = f_3$), we get:

\[ \begin{aligned} P^{(3)} f_1 &= \frac{2}{6} \left[ 2P_E f_1 - P_D f_1 - P_F f_1 \right] = \frac{1}{3} \left[ 2f_1 - f_2 - f_3 \right] \equiv h_1 \\ P^{(3)} f_2 &= \frac{2}{6} \left[ 2P_E f_2 - P_D f_2 - P_F f_2 \right] = \frac{1}{3} \left[ 2f_2 - f_3 - f_1 \right] \equiv h_2 \\ P^{(3)} f_3 &= \frac{2}{6} \left[ 2P_E f_3 - P_D f_3 - P_F f_3 \right] = \frac{1}{3} \left[ 2f_3 - f_1 - f_2 \right] \equiv h_3 \end{aligned} \tag{4.11.15} \]

The three functions $h_1$, $h_2$ and $h_3$ are not independent, since $h_1 + h_2 + h_3 = 0$. Hence there are only two independent functions and these may be constructed in an infinite number of ways. The choice corresponding to (3.5-3), after normalization, is $g_2 = h_1$ and $g_3 = h_3 - h_2$.

So we have

\[ g_2 = \frac{1}{3} \left[ 2f_1 - f_2 - f_3 \right] \tag{4.11.16} \]

and

\[ g_3 = h_3 - h_2 = \frac{1}{3} \left[ 2f_3 - f_1 - f_2 \right] - \frac{1}{3} \left[ 2f_2 - f_3 - f_1 \right] = -f_2 + f_3 \tag{4.11.17} \]

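In a similar way, taking into account that in the first equation of (4.11.13) all characters are equal to one:

\[ \begin{aligned} P^{(1)} f_1 &= \frac{l_1}{h} \sum_{R} \chi^{(1)}(R)\, P_R f_1 = \frac{1}{6} \left[ P_E f_1 + P_A f_1 + P_B f_1 + P_C f_1 + P_D f_1 + P_F f_1 \right] = \frac{1}{3} \left[ f_1 + f_2 + f_3 \right] \\ P^{(1)} f_2 &= \frac{1}{3} \left[ f_1 + f_2 + f_3 \right] \\ P^{(1)} f_3 &= \frac{1}{3} \left[ f_1 + f_2 + f_3 \right] \end{aligned} \tag{4.11.18} \]

Thus we get for $g_1$

\[ g_1 = \frac{1}{3} \left[ f_1 + f_2 + f_3 \right] \tag{4.11.19} \]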
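The projections above can be reproduced with exact rational arithmetic. In the sketch below a function $a_1 f_1 + a_2 f_2 + a_3 f_3$ is stored as the coefficient triple $(a_1, a_2, a_3)$, the action of each $P_R$ on $f_1, f_2, f_3$ is read off from (4.11.2), and the characters come from Table 1.1. The names `images`, `chars` and `project` are illustrative.

```python
from fractions import Fraction

# images[R][k] is the (0-based) index of the function P_R f_{k+1}, from (4.11.2).
images = {
    "E": (0, 1, 2), "A": (0, 2, 1), "B": (2, 1, 0),
    "C": (1, 0, 2), "D": (1, 2, 0), "F": (2, 0, 1),
}

# Characters from Table 1.1 and the dimensions l_j of the three irreps.
chars = {
    1: {"E": 1, "A": 1, "B": 1, "C": 1, "D": 1, "F": 1},
    2: {"E": 1, "A": -1, "B": -1, "C": -1, "D": 1, "F": 1},
    3: {"E": 2, "A": 0, "B": 0, "C": 0, "D": -1, "F": -1},
}
dims = {1: 1, 2: 1, 3: 2}
ORDER = 6


def project(j, coeffs):
    """Apply P^(j) = (l_j/h) sum_R chi^(j)(R) P_R to sum_k coeffs[k] f_{k+1}."""
    out = [Fraction(0)] * 3
    for R, img in images.items():
        weight = Fraction(dims[j] * chars[j][R], ORDER)
        for k, c in enumerate(coeffs):
            out[img[k]] += weight * c
    return tuple(out)


f = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
h1, h2, h3 = (project(3, fk) for fk in f)      # (4.11.15)
g1 = project(1, f[0])                          # (4.11.19)
g3 = tuple(a - b for a, b in zip(h3, h2))      # (4.11.17)
```

Applying `project(3, ...)` a second time to `h1` returns `h1` again, which is the idempotency property, and `project(2, ...)` annihilates every $f_k$ because $\Gamma^{(2)}$ does not occur in $\Gamma^{(5)}$.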

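In what follows we normalise by applying the procedure described at p. 248 of [72], pp. 141 and 248 of [40], pp. 63 and 61 of [31], pp. 214 and 230 of [73], or p. 588 of [69]:

\[ 1 = \int (N\psi)^{*} (N\psi)\, d\tau \tag{4.11.20} \]

For a real combination $\psi = c_1\psi_1 + c_2\psi_2$ of normalised functions,

\[ 1 = N^2 \int (c_1\psi_1 + c_2\psi_2)(c_1\psi_1 + c_2\psi_2)\, d\tau \]

\[ 1 = N^2 \left( c_1^2 \int \psi_1^2\, d\tau + 2c_1c_2 \int \psi_1\psi_2\, d\tau + c_2^2 \int \psi_2^2\, d\tau \right) \tag{4.11.21} \]

\[ 1 = N^2 \left( c_1^2 + 2c_1c_2 \int \psi_1\psi_2\, d\tau + c_2^2 \right) \]

\[ 1 = N^2 \left( c_1^2 + 2c_1c_2 S_{12} + c_2^2 \right) \]

\[ N = \frac{1}{\sqrt{c_1^2 + 2c_1c_2 S_{12} + c_2^2}} \tag{4.11.22} \]

where

\[ S_{12} = \int \psi_1\psi_2\, d\tau \tag{4.11.23} \]

is the overlap integral.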

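Thus the normalisation for (4.11.19), with S denoting the overlap integral between any two different functions $f_i$ and $f_j$, is:

\[ \begin{aligned} \left\langle \tfrac{N}{3} \left[ f_1 + f_2 + f_3 \right] \,\middle|\, \tfrac{N}{3} \left[ f_1 + f_2 + f_3 \right] \right\rangle &= \frac{N^2}{9} \left( \langle f_1 | f_1 \rangle + \langle f_2 | f_2 \rangle + \langle f_3 | f_3 \rangle + 2\langle f_1 | f_2 \rangle + 2\langle f_1 | f_3 \rangle + 2\langle f_2 | f_3 \rangle \right) \\ &= \frac{N^2}{9} (3 + 6S) = 1 \end{aligned} \]

thus

\[ N = \frac{3}{\sqrt{3 + 6S}} \tag{4.11.24} \]

The normalisation for (4.11.16):

\[ \begin{aligned} \left\langle \tfrac{N}{3} \left[ 2f_1 - f_2 - f_3 \right] \,\middle|\, \tfrac{N}{3} \left[ 2f_1 - f_2 - f_3 \right] \right\rangle &= \frac{N^2}{9} \left( 4\langle f_1 | f_1 \rangle + \langle f_2 | f_2 \rangle + \langle f_3 | f_3 \rangle - 4\langle f_1 | f_2 \rangle - 4\langle f_1 | f_3 \rangle + 2\langle f_2 | f_3 \rangle \right) \\ &= \frac{N^2}{9} (4 + 2 - 8S + 2S) = 1 \end{aligned} \]

thus

\[ N = \frac{3}{\sqrt{6 - 6S}} \tag{4.11.25} \]

And the normalisation for (4.11.17):

\[ \begin{aligned} \left\langle N \left[ -f_2 + f_3 \right] \,\middle|\, N \left[ -f_2 + f_3 \right] \right\rangle &= N^2 \left( \langle f_2 | f_2 \rangle + \langle f_3 | f_3 \rangle - 2\langle f_2 | f_3 \rangle \right) \\ &= N^2 (2 - 2S) = 1 \end{aligned} \]

thus

\[ N = \frac{1}{\sqrt{2 - 2S}} \tag{4.11.26} \]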
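The three normalisation constants can be checked against a direct evaluation of the quadratic form. The sketch below assumes real, individually normalised functions $f_k$ with a common overlap S between any two different ones; the value S = 0.2 and the name `norm_constant` are chosen only for illustration.

```python
import math


def norm_constant(coeffs, S):
    """N such that N * sum_k coeffs[k] * f_k is normalised.

    Assumes <f_i|f_i> = 1 and <f_i|f_j> = S for i != j (real functions).
    """
    n = len(coeffs)
    quad = sum(coeffs[i] * coeffs[j] * (1.0 if i == j else S)
               for i in range(n) for j in range(n))
    return 1.0 / math.sqrt(quad)


S = 0.2   # illustrative overlap value

# Coefficients of (4.11.19), (4.11.16) and (4.11.17) over (f1, f2, f3).
N1 = norm_constant([1 / 3, 1 / 3, 1 / 3], S)
N2 = norm_constant([2 / 3, -1 / 3, -1 / 3], S)
N3 = norm_constant([0.0, -1.0, 1.0], S)

# Closed forms (4.11.24), (4.11.25) and (4.11.26).
N1_closed = 3 / math.sqrt(3 + 6 * S)
N2_closed = 3 / math.sqrt(6 - 6 * S)
N3_closed = 1 / math.sqrt(2 - 2 * S)
```

For S = 0 the constants reduce to $\sqrt{3}$, $3/\sqrt{6}$ and $1/\sqrt{2}$, the values for an orthonormal set $f_1, f_2, f_3$.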

If we put all together we get (see [1])

g

1

=