
arXiv:quant-ph/0403072v1 9 Mar 2004

A quantum channel with additive minimum output entropy

Nilanjana Datta,¹ Alexander S. Holevo,² and Yuri Suhov¹

¹Statistical Laboratory, Centre for Mathematical Science, University of Cambridge, Wilberforce Road, Cambridge CB3 0WB, UK

²Steklov Mathematical Institute, Gubkina 8, 119991 Moscow, Russia

We give a direct proof of the additivity of the minimum output entropy of a particular quantum channel which breaks the multiplicativity conjecture. This yields additivity of the classical capacity of this channel, a result obtained by a different method in [10]. Our proof relies heavily upon certain concavity properties of the output entropy which are of independent interest.

PACS numbers: 03.67.Hk, 03.67.-a

INTRODUCTION

A number of important issues of quantum information theory would be greatly clarified if several resources and parameters were proved to be additive. However, the proof of additivity of such resources as the minimum output entropy of a quantum memoryless channel and its classical capacity remains in general an open problem; see, e.g., [8]. Recently Shor [4] provided a new insight into how several additivity-type properties are related to each other. He proved that (i) additivity of the minimum output entropy of a quantum channel, (ii) additivity of the classical capacity of a quantum channel, (iii) additivity of the entanglement of formation, and (iv) strong superadditivity of the entanglement of formation are equivalent, in the sense that if one of them holds for all channels then the others also hold for all channels.

In this paper we study the additivity of the minimum output entropy for a channel which is particularly interesting because it breaks a closely related multiplicativity property [2]. For this channel the additivity of the classical capacity and that of the minimum output entropy are equivalent, which allows us to derive an alternative proof of the result in [10], where additivity of its capacity was established. The problem of additivity of the minimum output entropy is interesting and important in its own right: it is straightforward to state, it addresses a fundamental geometric feature of a channel, and it may provide insight into more complicated channel properties. In this paper, the key observation that ensures additivity is that the output entropy of the product channel exhibits specific concavity properties as a function of the Schmidt coefficients of the input pure state. It is our hope that a similar mechanism might be responsible for the additivity of the minimum output entropy in other interesting cases.

THE ADDITIVITY CONJECTURE

A channel $\Phi$ in the finite-dimensional Hilbert space $\mathcal{H} \simeq \mathbb{C}^d$ is a linear, trace-preserving, completely positive map of the algebra of complex $d \times d$ matrices. A state is a density matrix $\rho$, that is, a Hermitian matrix such that $\rho \ge 0$, $\operatorname{Tr}\rho = 1$. The minimum output entropy of the channel $\Phi$ is defined as

$$h(\Phi) := \min_{\rho} S(\Phi(\rho)), \tag{1}$$

where the minimization is over all possible input states $\rho$ of the channel. Here $S(\sigma) = -\operatorname{Tr}\sigma\log\sigma$ is the von Neumann entropy of the channel output matrix $\sigma = \Phi(\rho)$. The additivity problem for the minimum output entropy is to prove that

$$h(\Phi_1 \otimes \Phi_2) = h(\Phi_1) + h(\Phi_2), \tag{2}$$

where $\Phi_1, \Phi_2$ are two channels in $\mathcal{H}_1, \mathcal{H}_2$ respectively, and $\otimes$ denotes the tensor product.

A channel $\Phi$ is covariant if there are unitary representations $U_g$, $V_g$ of a group $G$ such that

$$\Phi(U_g \rho U_g^*) = V_g\, \Phi(\rho)\, V_g^*, \qquad g \in G. \tag{3}$$

If both representations are irreducible, then we call the channel irreducibly covariant. In this case there is a simple formula

$$\bar{C}(\Phi) = \log d - h(\Phi), \tag{4}$$

relating the Holevo capacity $\bar{C}(\Phi)$ of the channel to $h(\Phi)$ [9]. Since the tensor product of irreducibly covariant channels (with respect to possibly different groups $G_1$, $G_2$) is again irreducibly covariant (with respect to the group $G_1 \times G_2$), it follows that if (2) holds for two such channels, then

$$\bar{C}(\Phi_1 \otimes \Phi_2) = \bar{C}(\Phi_1) + \bar{C}(\Phi_2). \tag{5}$$

Notice that this does not follow from the result of [4], which asserts that if (2) holds for all channels, then (5) also holds for all channels. In the latter case also $\bar{C}(\Phi_1 \otimes \cdots \otimes \Phi_n) = \bar{C}(\Phi_1) + \cdots + \bar{C}(\Phi_n)$, which implies that $\bar{C}(\Phi)$ is equal to the classical capacity of the channel (see [8] for more detail).

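The concavity of the von Neumann entropy implies that the minimization in (1) can be restricted to pure input states, since the latter correspond to the extreme points of the convex set of input states. Hence, we can equivalently write the minimum output entropies in the form

$$h(\Phi) = \min_{|\psi\rangle \in \mathcal{H},\ \|\psi\| = 1} S\big(\Phi(|\psi\rangle\langle\psi|)\big); \tag{6}$$

$$h(\Phi_1 \otimes \Phi_2) = \min_{|\psi_{12}\rangle \in \mathcal{H}_1 \otimes \mathcal{H}_2,\ \|\psi_{12}\| = 1} S\big((\Phi_1 \otimes \Phi_2)(|\psi_{12}\rangle\langle\psi_{12}|)\big). \tag{7}$$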

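Here $|\psi_{12}\rangle\langle\psi_{12}|$ is a pure state of a bipartite system with the Hilbert space $\mathcal{H}_1 \otimes \mathcal{H}_2$, where $\mathcal{H}_i \simeq \mathbb{C}^{d_i}$ for $i = 1, 2$. In order to prove (2), it is sufficient to show that the minimum in (7) is attained on unentangled vectors $|\psi_{12}\rangle$. Consider the Schmidt decomposition

$$|\psi_{12}\rangle = \sum_{\alpha=1}^{d} \sqrt{\lambda_\alpha}\, |\alpha;1\rangle \otimes |\alpha;2\rangle, \tag{8}$$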

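where $d = \min\{d_1, d_2\}$, $\{|\alpha;j\rangle\}$ is an orthonormal basis in $\mathcal{H}_j$, $j = 1, 2$, and $\lambda = (\lambda_1, \ldots, \lambda_d)$ is the vector of the Schmidt coefficients. The state $|\psi_{12}\rangle\langle\psi_{12}|$ can then be expressed as

$$|\psi_{12}\rangle\langle\psi_{12}| = \sum_{\alpha,\beta=1}^{d} \sqrt{\lambda_\alpha\lambda_\beta}\; |\alpha;1\rangle\langle\beta;1| \otimes |\alpha;2\rangle\langle\beta;2|. \tag{9}$$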

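The Schmidt coefficients form a probability distribution:

$$0 \le \lambda_\alpha \le 1; \qquad \sum_{\alpha=1}^{d} \lambda_\alpha = 1, \tag{10}$$

thus the vector $\lambda$ varies in the $(d-1)$-dimensional simplex $\Sigma_d$ defined by these constraints. Extreme points (vertices) of $\Sigma_d$ correspond precisely to unentangled vectors $|\psi_{12}\rangle = |\psi_1\rangle \otimes |\psi_2\rangle \in \mathcal{H}_1 \otimes \mathcal{H}_2$. The proof of (2) becomes straightforward if we can prove that, for every choice of the bases, the function

$$\Sigma_d \ni \lambda \mapsto S(M(\lambda)) \tag{11}$$

attains its minimum at the vertices of $\Sigma_d$. Here $S(M(\lambda))$ is the von Neumann entropy of the channel output matrix

$$M(\lambda) := (\Phi_1 \otimes \Phi_2)(|\psi_{12}\rangle\langle\psi_{12}|) = \sum_{\alpha,\beta=1}^{d} \sqrt{\lambda_\alpha\lambda_\beta}\; \Phi_1(|\alpha;1\rangle\langle\beta;1|) \otimes \Phi_2(|\alpha;2\rangle\langle\beta;2|). \tag{12}$$

Two special properties of a function can guarantee this: one is concavity, and the other is Schur concavity (see the Appendix). Both of them turn out to be useful for the particular channel we now consider.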
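As a numerical illustration of (8) to (10), the Schmidt coefficients of a bipartite unit vector are the squared singular values of its coefficient matrix. The sketch below assumes NumPy and the row-major convention $|\psi_{12}\rangle = \sum_{i,j} C_{ij}\,|i\rangle \otimes |j\rangle$; the function name `schmidt_coefficients` is hypothetical.

```python
import numpy as np

def schmidt_coefficients(psi, d1, d2):
    """Schmidt coefficients lambda_alpha of a unit vector psi in C^d1 (x) C^d2.

    Writing psi = sum_ij C[i, j] |i>|j>, the singular values of C are the
    numbers sqrt(lambda_alpha) appearing in the Schmidt decomposition (8).
    """
    c = np.asarray(psi, dtype=complex).reshape(d1, d2)
    singular_values = np.linalg.svd(c, compute_uv=False)  # min(d1, d2) values, descending
    return singular_values ** 2

rng = np.random.default_rng(0)
d1, d2 = 3, 4
psi = rng.normal(size=d1 * d2) + 1j * rng.normal(size=d1 * d2)
psi /= np.linalg.norm(psi)
lam = schmidt_coefficients(psi, d1, d2)
print(lam, lam.sum())  # d = min(d1, d2) = 3 nonnegative numbers summing to 1, cf. (10)

# A product vector |psi_1> (x) |psi_2> lies at a vertex of the simplex Sigma_d.
product = np.kron([1.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0])
print(schmidt_coefficients(product, d1, d2))  # [1. 0. 0.]
```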

THE CHANNEL

The channel considered in this paper was introduced in [2]. It is defined by its action on $d \times d$ matrices as follows:

$$\Phi(\rho) = \frac{1}{d-1}\left(I \operatorname{tr}(\rho) - \rho^T\right), \tag{13}$$

where $\rho^T$ denotes the transpose of the matrix $\rho$, and $I$ is the unit matrix in $\mathcal{H} \simeq \mathbb{C}^d$. It is easy to see that the map $\Phi$ is linear and trace-preserving. For the proof of complete positivity see [2]. Moreover, $\Phi$ is irreducibly covariant, since for any unitary transformation $U$

$$\Phi(U \rho U^*) = \bar{U}\, \Phi(\rho)\, \bar{U}^*, \tag{14}$$

hence the relation (4) holds for this channel.

Our aim will be to prove the additivity relation

$$h(\Phi \otimes \Phi) = 2h(\Phi) \tag{15}$$

for the channel (13). For $d = 2$, (13) is a unital qubit channel, for which property (15) follows from [5]. For $d \ge 3$, (15) can be deduced from the additivity of the Holevo capacity (5), which was established in [10] by a different method. Here we provide a direct proof based on the idea described at the end of the previous section.

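For a pure state $\rho = |\psi\rangle\langle\psi|$ the channel output is given by

$$\Phi(|\psi\rangle\langle\psi|) = \frac{1}{d-1}\left(I - |\bar\psi\rangle\langle\bar\psi|\right), \tag{16}$$

where the entries of the vector $|\bar\psi\rangle$ are the complex conjugates of the corresponding entries of $|\psi\rangle$. The matrix $\Phi(|\psi\rangle\langle\psi|)$ has a non-degenerate eigenvalue equal to $0$ and an eigenvalue $1/(d-1)$ which is $(d-1)$-fold degenerate. The von Neumann entropy $S(\Phi(|\psi\rangle\langle\psi|))$ is therefore the same for all pure states, and so

$$h(\Phi) = \log(d-1). \tag{17}$$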
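The statements (13) to (17), including the covariance (14), can be checked numerically. The following is a minimal sketch, assuming NumPy, base-2 logarithms, and an arbitrary random seed; `werner_holevo_channel` and `entropy_bits` are hypothetical helper names, not taken from [2].

```python
import numpy as np

def werner_holevo_channel(rho):
    """The channel (13): Phi(rho) = (I tr(rho) - rho^T) / (d - 1)."""
    d = rho.shape[0]
    return (np.eye(d) * np.trace(rho) - rho.T) / (d - 1)

def entropy_bits(sigma, tol=1e-12):
    """Von Neumann entropy -Tr sigma log2 sigma; eigenvalues below tol are dropped."""
    w = np.linalg.eigvalsh(sigma)
    w = w[w > tol]
    return float(-np.sum(w * np.log2(w)))

rng = np.random.default_rng(1)
d = 4
psi = rng.normal(size=d) + 1j * rng.normal(size=d)
psi /= np.linalg.norm(psi)
rho = np.outer(psi, psi.conj())
out = werner_holevo_channel(rho)

print(np.trace(out).real)                     # trace is preserved
print(np.round(np.linalg.eigvalsh(out), 6))   # 0 once and 1/(d-1) (d-1 times), cf. (16)
print(entropy_bits(out), np.log2(d - 1))      # both equal log2(d-1), cf. (17)

# Covariance (14): Phi(U rho U*) = conj(U) Phi(rho) conj(U)* for a random unitary U.
u, _ = np.linalg.qr(rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d)))
lhs = werner_holevo_channel(u @ rho @ u.conj().T)
rhs = u.conj() @ werner_holevo_channel(rho) @ u.T
print(np.allclose(lhs, rhs))
```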

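As argued in the previous section, in order to prove (15) it is sufficient to show that the minimum in

$$h(\Phi \otimes \Phi) = \min_{|\psi_{12}\rangle \in \mathbb{C}^d \otimes \mathbb{C}^d,\ \|\psi_{12}\| = 1} S\big((\Phi \otimes \Phi)(|\psi_{12}\rangle\langle\psi_{12}|)\big)$$

is attained on unentangled vectors $|\psi_{12}\rangle$. Consider the Schmidt decomposition (8) of $|\psi_{12}\rangle$. Owing to the property (14), we can choose for $\{|\alpha;j\rangle\}$, $j = 1, 2$, the canonical basis $\{|\alpha\rangle\}$ in $\mathbb{C}^d$: a local unitary change of the input basis changes the output only by a local unitary, which leaves the entropy unchanged. As was shown in the previous section, it suffices to check that $S(M(\lambda))$ attains its minimum at the vertices of $\Sigma_d$. Here $M(\lambda)$ is the matrix defined in (12) for the channel under consideration:

$$M(\lambda) = \sum_{\alpha,\beta=1}^{d} \sqrt{\lambda_\alpha\lambda_\beta}\; \Phi(|\alpha\rangle\langle\beta|) \otimes \Phi(|\alpha\rangle\langle\beta|),$$

where by (13)

$$\Phi(|\alpha\rangle\langle\beta|) = \frac{1}{d-1}\left(\delta_{\alpha\beta} I - |\beta\rangle\langle\alpha|\right),$$

owing to the fact that $|\alpha\rangle$ and $|\beta\rangle$ are real.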

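Using (8) and the completeness relations

$$I = \sum_{\alpha=1}^{d} |\alpha\rangle\langle\alpha|, \qquad I \otimes I = \sum_{\alpha,\beta=1}^{d} |\alpha\beta\rangle\langle\alpha\beta|,$$

we obtain

$$M(\lambda) = \frac{1}{(d-1)^2}\left[\sum_{\alpha,\beta=1}^{d} |\alpha\beta\rangle\langle\alpha\beta|\,(1 - \lambda_\alpha - \lambda_\beta) + \sum_{\alpha,\beta=1}^{d} \sqrt{\lambda_\alpha\lambda_\beta}\; |\beta\beta\rangle\langle\alpha\alpha|\right]. \tag{18}$$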
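Formula (18) can be compared with a brute-force evaluation of $(\Phi \otimes \Phi)(|\psi_{12}\rangle\langle\psi_{12}|)$ that applies (13) to every matrix unit. This is a sketch assuming NumPy and the index convention $|\alpha\beta\rangle \leftrightarrow \alpha d + \beta$; all function names are hypothetical.

```python
import numpy as np

def phi(x):
    """Channel (13), extended linearly to arbitrary d x d matrices."""
    d = x.shape[0]
    return (np.eye(d) * np.trace(x) - x.T) / (d - 1)

def phi_tensor_phi(x, d):
    """(Phi (x) Phi)(X), computed term by term on the matrix units |a b><c e|."""
    t = x.reshape(d, d, d, d)  # t[a, b, c, e] = <a b| X |c e>
    basis = np.eye(d)
    out = np.zeros((d * d, d * d), dtype=complex)
    for a, b, c, e in np.ndindex(d, d, d, d):
        if t[a, b, c, e] != 0:
            left = phi(np.outer(basis[a], basis[c]))
            right = phi(np.outer(basis[b], basis[e]))
            out += t[a, b, c, e] * np.kron(left, right)
    return out

def m_formula(lam):
    """M(lambda) assembled directly from (18)."""
    lam = np.asarray(lam, dtype=float)
    d = len(lam)
    m = np.zeros((d * d, d * d))
    for a in range(d):
        for b in range(d):
            m[a * d + b, a * d + b] += 1 - lam[a] - lam[b]       # |ab><ab| term
            m[b * d + b, a * d + a] += np.sqrt(lam[a] * lam[b])  # |bb><aa| term
    return m / (d - 1) ** 2

d = 3
lam = np.array([0.5, 0.3, 0.2])
psi = np.diag(np.sqrt(lam)).reshape(-1)  # sum_a sqrt(lam_a) |a>|a>, canonical basis
direct = phi_tensor_phi(np.outer(psi, psi), d)
print(np.allclose(direct, m_formula(lam)))
```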

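In order to find the eigenvalues of $M(\lambda)$, it is instructive first to study the secular equation of a more general $n \times n$ matrix

$$A = \sum_{j=1}^{n} \kappa_j\, |j\rangle\langle j| + \sum_{j,k=1}^{n} \nu_j \nu_k\, |j\rangle\langle k|.$$

For a particular choice of the parameters $\kappa_j$ and $\nu_j$ [see eq. (21) below], the matrix $A$ gives $(d-1)^2\,(\Phi \otimes \Phi)(|\psi_{12}\rangle\langle\psi_{12}|)$. It has the form

$$A = \begin{pmatrix} \kappa_1 + \nu_1^2 & \nu_1\nu_2 & \cdots & \nu_1\nu_n \\ \nu_2\nu_1 & \kappa_2 + \nu_2^2 & \cdots & \nu_2\nu_n \\ \vdots & \vdots & \ddots & \vdots \\ \nu_n\nu_1 & \nu_n\nu_2 & \cdots & \kappa_n + \nu_n^2 \end{pmatrix}. \tag{19}$$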

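The secular equation $\det(A - \xi I) = 0$ can be written as

$$F(\xi) = 0, \tag{20}$$

where

$$F(\xi) = \prod_{j=1}^{n} (\kappa_j - \xi)\left[1 + \frac{\nu_1^2}{\kappa_1 - \xi} + \cdots + \frac{\nu_n^2}{\kappa_n - \xi}\right].$$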

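Solving eq. (20) would in general be non-trivial. However, representing the matrix $(d-1)^2 M(\lambda)$ in the form (19) results in a convenient expression for $F(\xi)$. This allows us to identify many of the eigenvalues of $(d-1)^2 M(\lambda)$. More precisely, we identify $j$ with a pair $(\alpha, \beta)$ and obtain

$$\kappa_j = 1 - \lambda_\alpha - \lambda_\beta; \qquad \nu_j = \delta_{\alpha\beta}\sqrt{\lambda_\alpha}, \qquad \alpha, \beta = 1, \ldots, d. \tag{21}$$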

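Therefore,

$$\begin{aligned}
F(\xi) &= \prod_{\alpha,\beta=1}^{d} (1 - \lambda_\alpha - \lambda_\beta - \xi)\left[1 + \sum_{\alpha=1}^{d} \frac{\lambda_\alpha}{1 - 2\lambda_\alpha - \xi}\right] \\
&= \prod_{\substack{\alpha,\beta=1\\ \alpha\ne\beta}}^{d} (1 - \lambda_\alpha - \lambda_\beta - \xi)\left[\prod_{\alpha=1}^{d} (1 - 2\lambda_\alpha - \xi)\left(1 + \sum_{\alpha=1}^{d} \frac{\lambda_\alpha}{1 - 2\lambda_\alpha - \xi}\right)\right].
\end{aligned} \tag{22}$$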

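Eq. (20) yields the following equations:

$$1 - \lambda_\alpha - \lambda_\beta - \xi = 0, \qquad \alpha \ne \beta, \quad \alpha, \beta = 1, 2, \ldots, d, \tag{23}$$

where $\lambda_\alpha$ denote the Schmidt coefficients (8). Equation (23) implies that there are $d(d-1)$ eigenvalues of the form

$$\xi = 1 - \lambda_\alpha - \lambda_\beta, \qquad \alpha \ne \beta, \quad \alpha, \beta = 1, \ldots, d. \tag{24}$$

The roots of the equation

$$\prod_{\alpha=1}^{d} (1 - 2\lambda_\alpha - \xi)\left[1 + \sum_{\alpha=1}^{d} \frac{\lambda_\alpha}{1 - 2\lambda_\alpha - \xi}\right] = 0 \tag{25}$$

give the remaining $d$ eigenvalues of the matrix $(d-1)^2 M(\lambda)$.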

For the case $d = 3$ the roots of (25) can be evaluated explicitly. This is done in the next section. The case of arbitrary $d > 3$ is discussed in the sections that follow. Note that the sum of all eigenvalues of $(d-1)^2 M(\lambda)$ equals

$$\operatorname{tr}\left[(d-1)^2 M(\lambda)\right] = (d-1)^2 \operatorname{Tr} M(\lambda) = (d-1)^2,$$

since $M(\lambda)$ is a density matrix acting in $\mathbb{C}^{d^2}$.
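The splitting of the spectrum of $(d-1)^2 M(\lambda)$ into the $d(d-1)$ values (24) and the $d$ roots of (25) can be checked numerically for a random $\lambda$. The sketch assumes NumPy; (25) is multiplied through by $\prod_\alpha (1 - 2\lambda_\alpha - \xi)$ to obtain a polynomial, and the helper names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Polynomial as P

def m_formula(lam):
    """M(lambda) from (18)."""
    lam = np.asarray(lam, dtype=float)
    d = len(lam)
    m = np.zeros((d * d, d * d))
    for a in range(d):
        for b in range(d):
            m[a * d + b, a * d + b] += 1 - lam[a] - lam[b]
            m[b * d + b, a * d + a] += np.sqrt(lam[a] * lam[b])
    return m / (d - 1) ** 2

def roots_of_25(lam):
    """Roots of (25) after clearing denominators:
    prod_a (c_a - x) + sum_a lam_a prod_{b != a} (c_b - x) = 0, where c_a = 1 - 2 lam_a."""
    lam = np.asarray(lam, dtype=float)
    c = 1 - 2 * lam
    poly = P([1.0])
    for ca in c:
        poly = poly * P([ca, -1.0])
    for a, la in enumerate(lam):
        term = P([la])
        for b, cb in enumerate(c):
            if b != a:
                term = term * P([cb, -1.0])
        poly = poly + term
    return np.sort(poly.roots().real)

rng = np.random.default_rng(4)
d = 4
lam = rng.dirichlet(np.ones(d))
eigs = np.sort(np.linalg.eigvalsh((d - 1) ** 2 * m_formula(lam)))
pair_eigs = [1 - lam[a] - lam[b] for a in range(d) for b in range(d) if a != b]  # (24)
predicted = np.sort(np.concatenate([pair_eigs, roots_of_25(lam)]))
print(np.allclose(eigs, predicted, atol=1e-8))
print(eigs.sum())  # (d - 1)^2 = 9, up to rounding
```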

EIGENVALUES FOR d = 3

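For $d = 3$, there are $d(d-1) = 6$ eigenvalues of the matrix $(d-1)^2 M(\lambda) = 4M(\lambda)$ which are given by (24). The sum of these eigenvalues is

$$\sum_{\substack{\alpha,\beta=1\\ \alpha\ne\beta}}^{3} (1 - \lambda_\alpha - \lambda_\beta) = 2\left[3 - 2(\lambda_1 + \lambda_2 + \lambda_3)\right] = 2, \quad \text{since } \lambda_1 + \lambda_2 + \lambda_3 = 1. \tag{26}$$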

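The remaining three eigenvalues of $4M(\lambda)$ are given by the roots of the equation

$$\prod_{\alpha=1}^{3} (1 - 2\lambda_\alpha - \xi)\left[1 + \sum_{\alpha=1}^{3} \frac{\lambda_\alpha}{1 - 2\lambda_\alpha - \xi}\right] = 0. \tag{27}$$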

Since the sum of all the eigenvalues is equal to $(d-1)^2 \equiv 4$, these remaining three eigenvalues sum up to $4 - 2 = 2$. Using (26), we can cast (27) as

$$\xi^3 + a_2\xi^2 + a_1\xi + a_0 = 0, \tag{28}$$

where

$$a_0 = -4\lambda_1\lambda_2\lambda_3; \qquad a_1 = 1; \qquad a_2 = -2. \tag{29}$$
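For $d = 3$, the cubic (28) with coefficients (29) should reproduce the three eigenvalues of $4M(\lambda)$ on the subspace spanned by $|11\rangle, |22\rangle, |33\rangle$; by (18), that block is $\operatorname{diag}(1 - 2\lambda_\alpha) + (\sqrt{\lambda_\alpha\lambda_\beta})_{\alpha\beta}$. A short NumPy sketch of this check (the random seed is arbitrary):

```python
import numpy as np

rng = np.random.default_rng(3)
lam = rng.dirichlet(np.ones(3))
t = lam[0] * lam[1] * lam[2]

# Cubic (28) with the coefficients (29): xi^3 - 2 xi^2 + xi - 4 lam1 lam2 lam3 = 0.
cubic_roots = np.sort(np.roots([1.0, -2.0, 1.0, -4.0 * t]).real)

# The same numbers as eigenvalues of 4 M(lambda) restricted to span{|aa>}, read off from (18):
# diagonal entries 1 - 2 lam_a plus the rank-one part sqrt(lam_a lam_b).
block = np.diag(1 - 2 * lam) + np.outer(np.sqrt(lam), np.sqrt(lam))
block_eigs = np.sort(np.linalg.eigvalsh(block))

print(cubic_roots)
print(block_eigs)
print(cubic_roots.sum())  # 2, as stated above
```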

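The three roots of (28) are given by

$$\begin{aligned}
\xi_1 &:= -\frac{a_2}{3} + (T_1 + T_2), \\
\xi_2 &:= -\frac{a_2}{3} - \frac{1}{2}(T_1 + T_2) + \frac{1}{2}\, i\sqrt{3}\,(T_1 - T_2), \\
\xi_3 &:= -\frac{a_2}{3} - \frac{1}{2}(T_1 + T_2) - \frac{1}{2}\, i\sqrt{3}\,(T_1 - T_2).
\end{aligned} \tag{30}$$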

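Here

$$T_1 := \left(R + \sqrt{D}\right)^{1/3} \quad\text{and}\quad T_2 := \left(R - \sqrt{D}\right)^{1/3},$$

and

$$R = \frac{1}{54}\left(9a_1a_2 - 27a_0 - 2a_2^3\right), \qquad D = Q^3 + R^2, \qquad Q := \frac{1}{9}\left(3a_1 - a_2^2\right). \tag{31}$$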

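Thus, the matrix $M(\lambda)$ has six eigenvalues of the form $\frac{1}{4}(1 - \lambda_\alpha - \lambda_\beta)$, where $\alpha, \beta = 1, 2, 3$ and $\alpha \ne \beta$, and three eigenvalues $\eta_1$, $\eta_2$ and $\eta_3$, with $\eta_i := \xi_i/4$. The output entropy $S(M(\lambda))$ can be expressed as the sum

$$S(M(\lambda)) = S_1(\lambda) + S_2(\lambda).$$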

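Here, with logarithms taken to base 2,

$$S_1(\lambda) = -\sum_{\substack{\alpha,\beta=1\\ \alpha\ne\beta}}^{3} \frac{1}{4}(1 - \lambda_\alpha - \lambda_\beta)\log\left[\frac{1}{4}(1 - \lambda_\alpha - \lambda_\beta)\right] = -\frac{1}{2}\sum_{\gamma=1}^{3} \lambda_\gamma \log\frac{\lambda_\gamma}{4} = \frac{1}{2}H(\lambda) + 1, \tag{32}$$

since for $d = 3$ one has $1 - \lambda_\alpha - \lambda_\beta = \lambda_\gamma$, with $\gamma$ the remaining index, and each $\gamma$ arises from two ordered pairs $(\alpha, \beta)$. Here $H(\lambda) = -\sum_{\alpha=1}^{d} \lambda_\alpha \log\lambda_\alpha$ denotes the Shannon entropy of $\lambda$, and

$$S_2(\lambda) = -\sum_{i=1}^{3} \eta_i \log\eta_i.$$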

Since $H(\lambda)$ is a concave function of $\lambda = (\lambda_1, \lambda_2, \lambda_3)$, so is $S_1(\lambda)$. Hence $S_1(\lambda)$ attains its minimum at the vertices of $\Sigma_3$.

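Let us now evaluate the summand $S_2(\lambda)$. Substituting the values of $a_0$, $a_1$ and $a_2$ from (29) into (31), we get

$$R = -\frac{1}{27} + 2t, \qquad Q = -\frac{1}{9}, \qquad D = -4t\left(\frac{1}{27} - t\right) \le 0.$$

Here $t = \lambda_1\lambda_2\lambda_3$, $0 \le t \le 1/27$. Hence, we can write

$$R + \sqrt{D} = R + i\sqrt{|D|} = r e^{i\theta},$$

where $r = \sqrt{R^2 + |D|} = 1/27$ (because $R^2 + |D| = R^2 - D = -Q^3 = 1/729$) and $\theta = \arctan\left(\sqrt{|D|}/R\right)$, $0 \le \theta \le \pi$, so that

$$\tan\theta = \frac{\sqrt{t(1/27 - t)}}{t - 1/54}.$$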

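Considering the sign of this expression, we find that $t = 0$ corresponds to $\theta = \pi$, while $t = 1/27$ corresponds to $\theta = 0$. In terms of $\theta$, the eigenvalues $\eta_k$, $k = 1, 2, 3$, can now be expressed as

$$\eta_k = \frac{1}{6}\left[1 + \cos\left(\frac{\theta}{3} - \frac{2\pi(k-1)}{3}\right)\right] = \frac{1}{3}\cos^2\left(\frac{\theta}{6} - \frac{2\pi(k-1)}{6}\right).$$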
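The trigonometric form of $\eta_k$ can be compared against a direct diagonalization. In this sketch (assuming NumPy), $\theta$ is computed with `math.atan2`, which returns the argument of $R + i\sqrt{|D|}$ in $[0, \pi]$ as required above; the function names are hypothetical.

```python
import math
import numpy as np

def eta_closed_form(lam):
    """eta_k = (1/6)[1 + cos(theta/3 - 2 pi (k-1)/3)], k = 1, 2, 3, with theta from t = lam1 lam2 lam3."""
    t = lam[0] * lam[1] * lam[2]
    R = -1.0 / 27 + 2 * t
    D = -4 * t * (1.0 / 27 - t)                     # D <= 0 on the simplex
    theta = math.atan2(math.sqrt(max(-D, 0.0)), R)  # argument of R + i sqrt|D|, in [0, pi]
    return np.array([(1 + math.cos(theta / 3 - 2 * math.pi * k / 3)) / 6 for k in range(3)])

def eta_numeric(lam):
    """The same three eigenvalues of M(lambda), from the 3 x 3 block of 4 M(lambda) on span{|aa>}."""
    lam = np.asarray(lam, dtype=float)
    block = np.diag(1 - 2 * lam) + np.outer(np.sqrt(lam), np.sqrt(lam))
    return np.linalg.eigvalsh(block) / 4

rng = np.random.default_rng(6)
lam = rng.dirichlet(np.ones(3))
print(np.sort(eta_closed_form(lam)), np.sort(eta_numeric(lam)))
print(np.sort(eta_closed_form([0.0, 0.0, 1.0])))        # vertex (theta = pi): 0, 1/4, 1/4
print(np.sort(eta_closed_form([1 / 3, 1 / 3, 1 / 3])))  # barycenter (theta = 0): 1/12, 1/12, 1/3
```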

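Hence,

$$S_2(\lambda) = -\sum_{k=1}^{3} \frac{1}{3}\cos^2\left(\frac{\theta}{6} - \frac{2\pi(k-1)}{6}\right)\log\left[\frac{1}{3}\cos^2\left(\frac{\theta}{6} - \frac{2\pi(k-1)}{6}\right)\right]. \tag{33}$$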

An argument similar to that of Lemma 3 of [6] shows that the RHS of (33) has a global minimum, equal to $1$, at $\theta = \pi$, corresponding to $t = \lambda_1\lambda_2\lambda_3 = 0$. Hence, $S_2(\lambda)$ attains its minimal value $1$ at every point of the boundary $\partial\Sigma_3$, in particular at its vertices: $\lambda_i = 1$, $\lambda_j = 0$ for $j \ne i$, $i = 1, 2, 3$. The summand $S_1(\lambda)$, given by (32), also attains its minimum, equal to $1$, at the vertices. Therefore the sum $S(M(\lambda))$ attains its minimum, equal to $2$, at the vertices of $\Sigma_3$. Hence $h(\Phi \otimes \Phi) = 2$, and the additivity (15) holds, as $h(\Phi) = 1$ by (17).
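The conclusion for $d = 3$ can be spot-checked by evaluating $S(M(\lambda))$ on a grid over $\Sigma_3$. This is a sketch assuming NumPy and base-2 logarithms, with a grid resolution chosen arbitrarily; a grid search illustrates the claim but is not a proof.

```python
import numpy as np

def m_formula(lam):
    """M(lambda) from (18)."""
    lam = np.asarray(lam, dtype=float)
    d = len(lam)
    m = np.zeros((d * d, d * d))
    for a in range(d):
        for b in range(d):
            m[a * d + b, a * d + b] += 1 - lam[a] - lam[b]
            m[b * d + b, a * d + a] += np.sqrt(lam[a] * lam[b])
    return m / (d - 1) ** 2

def entropy_bits(sigma, tol=1e-12):
    """Von Neumann entropy in bits, dropping eigenvalues below tol."""
    w = np.linalg.eigvalsh(sigma)
    w = w[w > tol]
    return float(-np.sum(w * np.log2(w)))

n = 30  # grid resolution
values = []
for i in range(n + 1):
    for j in range(n + 1 - i):
        lam = np.array([i, j, n - i - j]) / n
        values.append((entropy_bits(m_formula(lam)), tuple(lam)))

s_min, lam_min = min(values)
print(s_min, lam_min)  # minimum 2 (bits), attained at a vertex of Sigma_3
```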

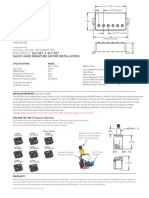

We conjecture that the entropy $S(M(\lambda))$ is a concave function of $\lambda$. This is supported by a 3D plot of $S(M(\lambda))$ as a function of two independent Schmidt coefficients $\lambda_1$ and $\lambda_2$; here $\lambda_i \ge 0$ for $i = 1, 2$ and $\lambda_1 + \lambda_2 \le 1$. See Figure 1 below.

FIG. 1: The entropy $S(M(\lambda))$ as a function of two independent Schmidt coefficients $\lambda_1$ and $\lambda_2$.

However, $S_2(\lambda)$ is not concave, as can be seen, e.g., by taking $\lambda_2 = \lambda_1$, $\lambda_3 = 1 - 2\lambda_1$. See Figure 2.
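The failure of concavity of $S_2$ along the line $\lambda_1 = \lambda_2$ already shows up near the vertex $\lambda = (0, 0, 1)$, where $S_2$ is locally convex. The sketch below (assuming NumPy and base-2 logarithms; helper names hypothetical) computes the $\eta_k$ by diagonalizing the $3 \times 3$ block of $M(\lambda)$ and tests midpoint concavity on the interval $[0, 0.004]$, which was chosen for illustration.

```python
import numpy as np

def s2_bits(lam):
    """S_2(lambda) = -sum_k eta_k log2 eta_k, eta_k = eigenvalues of the block of M(lambda) on span{|aa>}."""
    lam = np.asarray(lam, dtype=float)
    block = np.diag(1 - 2 * lam) + np.outer(np.sqrt(lam), np.sqrt(lam))
    eta = np.linalg.eigvalsh(block) / 4
    eta = eta[eta > 1e-15]
    return float(-np.sum(eta * np.log2(eta)))

def on_line(x):
    """The point (x, x, 1 - 2x) of Sigma_3, i.e. the line used for Figure 2."""
    return [x, x, 1 - 2 * x]

a, b = 0.0, 0.004
left, right = s2_bits(on_line(a)), s2_bits(on_line(b))
mid = s2_bits(on_line((a + b) / 2))
print(left, mid, right)
print(mid < (left + right) / 2)  # midpoint concavity fails on this interval
```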

FIG. 2: $S_2(\lambda)$ as a function of $\lambda = \lambda_1 = \lambda_2$.

MINIMUM OUTPUT ENTROPY IN d > 3 DIMENSIONS

In a previous section we found that the matrix $(d-1)^2 M(\lambda)$, where $M(\lambda)$ is the output density matrix of the channel $\Phi \otimes \Phi$ given by (18), has $d(d-1)$ eigenvalues of the form

$$1 - \lambda_\alpha - \lambda_\beta, \qquad \alpha \ne \beta, \quad \alpha, \beta = 1, 2, \ldots, d, \tag{34}$$

and the remaining $d$ eigenvalues are given by the roots $\xi_1, \ldots, \xi_d$ of (25). Hence, the matrix $M(\lambda)$ has $d(d-1)$ eigenvalues of the form

$$e_{\alpha\beta} := \frac{1}{(d-1)^2}\left(1 - \lambda_\alpha - \lambda_\beta\right), \qquad \alpha \ne \beta, \quad \alpha, \beta = 1, 2, \ldots, d,$$

and $d$ eigenvalues of the form

$$g_i := \frac{\xi_i}{(d-1)^2}, \qquad i = 1, 2, \ldots, d.$$

Note that the $\xi_i$'s and $g_i$'s are functions of $\lambda \in \Sigma_d$. Accordingly, we write the von Neumann entropy of the output density matrix as a sum

$$S(M(\lambda)) = S_1(\lambda) + S_2(\lambda), \tag{35}$$

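where

$$S_1(\lambda) := -\sum_{\alpha=1}^{d}\sum_{\substack{\beta=1\\ \beta\ne\alpha}}^{d} e_{\alpha\beta}\log e_{\alpha\beta}, \qquad S_2(\lambda) := -\sum_{i=1}^{d} g_i\log g_i. \tag{36}$$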

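Note that

$$\sum_{\alpha=1}^{d}\sum_{\substack{\beta=1\\ \beta\ne\alpha}}^{d} e_{\alpha\beta} = \sum_{\alpha=1}^{d}\sum_{\substack{\beta=1\\ \beta\ne\alpha}}^{d} \frac{1 - \lambda_\alpha - \lambda_\beta}{(d-1)^2} = \frac{d-2}{d-1}. \tag{37}$$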

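Define the following variables:

$$\tilde e_{\alpha\beta}\ \big(= \tilde e_{\alpha\beta}(\lambda)\big) := \frac{d-1}{d-2}\, e_{\alpha\beta} = \frac{1}{(d-1)(d-2)}\sum_{\substack{\gamma=1\\ \gamma\ne\alpha,\beta}}^{d} \lambda_\gamma, \qquad \alpha \ne \beta, \quad \alpha, \beta = 1, \ldots, d, \tag{38}$$

$$\tilde g_i\ \big(= \tilde g_i(\lambda)\big) := (d-1)\, g_i = \frac{\xi_i}{d-1}, \qquad i = 1, \ldots, d. \tag{39}$$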

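For $d \ge 3$ we have $\tilde e_{\alpha\beta} \ge 0$, and from (37) it follows that

$$\sum_{\alpha=1}^{d}\sum_{\substack{\beta=1\\ \beta\ne\alpha}}^{d} \tilde e_{\alpha\beta} = \frac{d-1}{d-2}\sum_{\alpha=1}^{d}\sum_{\substack{\beta=1\\ \beta\ne\alpha}}^{d} e_{\alpha\beta} = 1, \qquad \sum_{i=1}^{d} \tilde g_i = (d-1)\left(1 - \frac{d-2}{d-1}\right) = 1.$$

Hence $\tilde e := \{\tilde e_{\alpha\beta} \mid \alpha \ne \beta,\ \alpha, \beta = 1, 2, \ldots, d\}$ and $\tilde g := \{\tilde g_i \mid i = 1, \ldots, d\}$ are probability distributions (the $\tilde g_i$ are nonnegative because the $g_i$ are eigenvalues of the density matrix $M(\lambda)$). In terms of these variables

$$S_1(\lambda) = \frac{d-2}{d-1}\, H(\tilde e) - \frac{d-2}{d-1}\log\left(\frac{d-2}{d-1}\right), \tag{40}$$

where $H(\tilde e)$ denotes the Shannon entropy of $\tilde e$. In view of (36),

$$S_2(\lambda) = \frac{1}{d-1}\, H(\tilde g) + \frac{1}{d-1}\log(d-1). \tag{41}$$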
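The normalizations (37) to (39) and the formulas (40), (41) can be checked numerically for a random $\lambda \in \Sigma_5$. The sketch assumes NumPy and base-2 logarithms; the $g_i$ are obtained by diagonalizing the block of $(d-1)^2 M(\lambda)$ on $\operatorname{span}\{|\alpha\alpha\rangle\}$ rather than by solving (25), and the helper names are hypothetical.

```python
import numpy as np

def m_formula(lam):
    """M(lambda) from (18)."""
    lam = np.asarray(lam, dtype=float)
    d = len(lam)
    m = np.zeros((d * d, d * d))
    for a in range(d):
        for b in range(d):
            m[a * d + b, a * d + b] += 1 - lam[a] - lam[b]
            m[b * d + b, a * d + a] += np.sqrt(lam[a] * lam[b])
    return m / (d - 1) ** 2

def shannon_bits(p, tol=1e-15):
    """-sum p log2 p over the entries p > tol (p need not be normalized)."""
    p = np.asarray(p, dtype=float)
    p = p[p > tol]
    return float(-np.sum(p * np.log2(p)))

rng = np.random.default_rng(5)
d = 5
lam = rng.dirichlet(np.ones(d))
c = (d - 2) / (d - 1)

e = np.array([(1 - lam[a] - lam[b]) / (d - 1) ** 2
              for a in range(d) for b in range(d) if a != b])
block = np.diag(1 - 2 * lam) + np.outer(np.sqrt(lam), np.sqrt(lam))
g = np.linalg.eigvalsh(block) / (d - 1) ** 2   # g_i = xi_i / (d-1)^2

e_tilde = e / c          # (38)
g_tilde = (d - 1) * g    # (39)

s1, s2 = shannon_bits(e), shannon_bits(g)      # (36)
s1_via_40 = c * shannon_bits(e_tilde) - c * np.log2(c)
s2_via_41 = shannon_bits(g_tilde) / (d - 1) + np.log2(d - 1) / (d - 1)
print(e_tilde.sum(), g_tilde.sum())            # both 1
print(s1 - s1_via_40, s2 - s2_via_41)          # both ~0
print(s1 + s2 - shannon_bits(np.linalg.eigvalsh(m_formula(lam))))  # ~0, cf. (35)
```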

From (40) it follows that $S_1(\lambda)$ in (35) is a concave function of the variables $\tilde e_{\alpha\beta}$. These variables are affine functions of the Schmidt coefficients $\lambda_1, \ldots, \lambda_d$. Hence $S_1$ is a concave function of $\lambda$ and attains its minimum at the vertices of $\Sigma_d$, defined by the constraints (10).

Let us now analyze $S_2(\lambda)$. We wish to prove the following:

Theorem. The function $S_2$ is Schur concave in $\Sigma_d$, i.e.,

$$\lambda \prec \lambda' \implies S_2(\lambda) \ge S_2(\lambda'),$$

where $\prec$ denotes the majorization order (see the Appendix).

Since every $\lambda \in \Sigma_d$ is majorized by the vertices of $\Sigma_d$, this will imply that $S_2(\lambda)$ also attains its minimum at the vertices. Thus $S(M(\lambda)) = S_1(\lambda) + S_2(\lambda)$ is minimized at the vertices, which correspond to unentangled states. As was observed, this implies additivity.
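As a numerical sanity check of this statement (not a substitute for the proof), one can sample $\lambda \in \Sigma_4$ at random and compare $S(M(\lambda))$ with $2h(\Phi) = 2\log_2 3$. The sketch assumes NumPy; the Dirichlet parameters, sample sizes, and seed are arbitrary choices, and the helper names are hypothetical.

```python
import numpy as np

def m_formula(lam):
    """M(lambda) from (18)."""
    lam = np.asarray(lam, dtype=float)
    d = len(lam)
    m = np.zeros((d * d, d * d))
    for a in range(d):
        for b in range(d):
            m[a * d + b, a * d + b] += 1 - lam[a] - lam[b]
            m[b * d + b, a * d + a] += np.sqrt(lam[a] * lam[b])
    return m / (d - 1) ** 2

def entropy_bits(sigma, tol=1e-15):
    """Von Neumann entropy in bits, dropping eigenvalues below tol."""
    w = np.linalg.eigvalsh(sigma)
    w = w[w > tol]
    return float(-np.sum(w * np.log2(w)))

rng = np.random.default_rng(8)
d = 4
target = 2 * np.log2(d - 1)   # 2 h(Phi), by (17)
samples = np.vstack([rng.dirichlet(np.ones(d), size=1000),       # spread over Sigma_d
                     rng.dirichlet(np.full(d, 0.1), size=1000)])  # concentrated near the boundary
entropies = np.array([entropy_bits(m_formula(lam)) for lam in samples])
vertex_value = entropy_bits(m_formula(np.eye(d)[0]))
print(entropies.min() - target, vertex_value - target)  # first >= 0, second ~0
```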

PROOF OF THE THEOREM

We will use the interesting observation made in [7] that the Shannon entropy $H(x)$ is a monotonically increasing function of the elementary symmetric polynomials $s_k(x_1, x_2, \ldots, x_d)$, $k = 0, \ldots, d$, in the variables $x = (x_1, x_2, \ldots, x_d)$. The latter are defined by equation (65) of the Appendix. Hence the Shannon entropy $H(\tilde g)$ in (41) is a monotonically increasing function of the symmetric polynomials

$$\tilde s_k(\lambda) := s_k(\tilde g_1, \tilde g_2, \ldots, \tilde g_d) \equiv \frac{1}{(d-1)^k}\, s_k(\xi_1, \xi_2, \ldots, \xi_d), \qquad k = 0, \ldots, d. \tag{42}$$

Therefore, to prove the Theorem it is sufficient to prove that the functions $\tilde s_k(\lambda)$ are Schur concave in $\Sigma_d$.

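Here the variables $\tilde g_i$ are given by (39), and the variables $\xi_i$ are the roots of eq. (25). Define the variables

$$\mu_\alpha := 1 - 2\lambda_\alpha, \qquad \alpha = 1, 2, \ldots, d.$$

Note that $-1 \le \mu_\alpha \le 1$, owing to the inequality $0 \le \lambda_\alpha \le 1$. Moreover,

$$\sum_{\alpha=1}^{d} \mu_\alpha = d - 2,$$

since $\sum_{\alpha=1}^{d} \lambda_\alpha = 1$. In terms of the variables $\mu_\alpha$, (25) can be expressed as

$$\prod_{\alpha=1}^{d} (\mu_\alpha - \xi)\left[1 + \frac{1}{2}\sum_{\alpha=1}^{d} \frac{1 - \mu_\alpha}{\mu_\alpha - \xi}\right] = 0. \tag{43}$$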

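Since the roots $\xi_1, \ldots, \xi_d$ of (25) are, trivially, the zeros of the product $(\xi_1 - \xi)(\xi_2 - \xi)\cdots(\xi_d - \xi)$, and the left-hand side of (43) is a polynomial in $\xi$ of degree $d$ with leading coefficient $(-1)^d$, that left-hand side coincides with this product. Hence equation (43) can be expressed in terms of these roots as follows:

$$\sum_{k=0}^{d} \xi^k (-1)^k s_{d-k}(\xi_1, \xi_2, \ldots, \xi_d) = 0. \tag{44}$$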

In terms of the elementary symmetric polynomials $s_l$ of the variables $\mu_1, \mu_2, \ldots, \mu_d$, (43) can be rewritten as

$$\sum_{k=0}^{d} \xi^k (-1)^k s_{d-k}(\mu_1, \mu_2, \ldots, \mu_d) + \sum_{k=0}^{d-1} \xi^k (-1)^k \sum_{l=1}^{d} s_{d-1-k}(\mu_1, \ldots, \widehat{\mu_l}, \ldots, \mu_d)\,\frac{1 - \mu_l}{2} = 0, \tag{45}$$

where the symbol $\widehat{\mu_l}$ means that the variable $\mu_l$ has been omitted from the arguments of the corresponding polynomial. Equating the coefficients of $\xi^k$ on the LHS of (44) and on the LHS of (45) yields, for each $0 \le k \le d-1$,

$$s_{d-k}(\xi_1, \xi_2, \ldots, \xi_d) = s_{d-k}(\mu_1, \mu_2, \ldots, \mu_d) + \sum_{l=1}^{d} s_{d-1-k}(\mu_1, \ldots, \widehat{\mu_l}, \ldots, \mu_d)\,\frac{1 - \mu_l}{2}. \tag{46}$$
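Identity (46) can be verified numerically, computing the $\xi_i$ as eigenvalues of $\operatorname{diag}(\mu_\alpha) + (\sqrt{\lambda_\alpha\lambda_\beta})_{\alpha\beta}$, the block of $(d-1)^2 M(\lambda)$ whose eigenvalues are the roots of (25). The sketch assumes NumPy; `esym` is a hypothetical brute-force implementation of (65).

```python
import numpy as np
from itertools import combinations

def esym(xs, k):
    """Elementary symmetric polynomial s_k(xs), cf. (65); s_0 = 1 and s_k = 0 for k < 0."""
    if k < 0:
        return 0.0
    if k == 0:
        return 1.0
    return float(sum(np.prod(c) for c in combinations(xs, k)))

rng = np.random.default_rng(9)
d = 5
lam = rng.dirichlet(np.ones(d))
mu = 1 - 2 * lam
# Roots xi_1..xi_d of (25): eigenvalues of diag(mu) + sqrt(lam) sqrt(lam)^T.
xi = np.linalg.eigvalsh(np.diag(mu) + np.outer(np.sqrt(lam), np.sqrt(lam)))

pairs = []
for k in range(d):
    lhs = esym(xi, d - k)
    rhs = esym(mu, d - k) + sum(esym(np.delete(mu, l), d - 1 - k) * (1 - mu[l]) / 2
                                for l in range(d))
    pairs.append((lhs, rhs))
    print(k, lhs, rhs)  # the two columns agree, cf. (46)
```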

Note that in (46) the values $s_{d-k}(\xi_1, \xi_2, \ldots, \xi_d)$ are expressed in terms of values of elementary symmetric polynomials in the variables $\mu_1, \mu_2, \ldots, \mu_d$ (which are themselves linear functions of the Schmidt coefficients $\lambda_1, \ldots, \lambda_d$).

Our aim is to prove that $\tilde s_k(\lambda)$ is Schur concave in the Schmidt coefficients $\lambda_1, \ldots, \lambda_d$. Eq. (42) implies that this amounts to proving Schur concavity of $s_{d-k}(\xi_1, \xi_2, \ldots, \xi_d)$ as a function of $\lambda_1, \ldots, \lambda_d$, for all $0 \le k \le d$.

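The functions

$$\Psi_k(\mu_1, \ldots, \mu_d) := s_{d-k}(\mu_1, \ldots, \mu_d) + \sum_{l=1}^{d} s_{d-1-k}(\mu_1, \ldots, \widehat{\mu_l}, \ldots, \mu_d)\,\frac{1 - \mu_l}{2} \;\equiv\; \text{RHS of (46)} \tag{47}$$

are symmetric in the variables $\mu_1, \mu_2, \ldots, \mu_d$, and hence in the variables $\lambda_1, \ldots, \lambda_d$. By eq. (64) (see the Appendix) it remains to prove

$$(\mu_i - \mu_j)\left(\frac{\partial\Psi_k}{\partial\mu_i} - \frac{\partial\Psi_k}{\partial\mu_j}\right) \le 0, \qquad 1 \le i, j \le d. \tag{48}$$

Since $\mu_\alpha = 1 - 2\lambda_\alpha$, we have $(\lambda_i - \lambda_j)(\partial/\partial\lambda_i - \partial/\partial\lambda_j) = (\mu_i - \mu_j)(\partial/\partial\mu_i - \partial/\partial\mu_j)$, so (48) is exactly condition (64) in the variables $\lambda$.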

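By (66) we have

$$\begin{aligned}
\frac{\partial\Psi_k}{\partial\mu_i}(\mu_1, \ldots, \mu_d) &= \frac{\partial}{\partial\mu_i} s_{d-k}(\mu_1, \ldots, \mu_d) + \frac{\partial}{\partial\mu_i}\sum_{l=1}^{d} s_{d-1-k}(\mu_1, \ldots, \widehat{\mu_l}, \ldots, \mu_d)\,\frac{1 - \mu_l}{2} \\
&= s_{d-1-k}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \mu_d) + \sum_{\substack{l=1\\ l\ne i}}^{d} s_{d-2-k}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \widehat{\mu_l}, \ldots, \mu_d)\,\frac{1 - \mu_l}{2} - \frac{1}{2}\, s_{d-1-k}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \mu_d).
\end{aligned} \tag{49}$$

The last term comes from differentiating the factor $(1 - \mu_i)/2$ in the summand with $l = i$.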

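Therefore,

$$\begin{aligned}
\left(\frac{\partial}{\partial\mu_i} - \frac{\partial}{\partial\mu_j}\right)\Psi_k(\mu_1, \ldots, \mu_d) ={}& s_{d-1-k}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \mu_d) - s_{d-1-k}(\mu_1, \ldots, \widehat{\mu_j}, \ldots, \mu_d) \\
&+ \sum_{\substack{l=1\\ l\ne i}}^{d} s_{d-2-k}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \widehat{\mu_l}, \ldots, \mu_d)\,\frac{1 - \mu_l}{2} - \sum_{\substack{l=1\\ l\ne j}}^{d} s_{d-2-k}(\mu_1, \ldots, \widehat{\mu_j}, \ldots, \widehat{\mu_l}, \ldots, \mu_d)\,\frac{1 - \mu_l}{2} \\
&- \frac{1}{2}\left[s_{d-1-k}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \mu_d) - s_{d-1-k}(\mu_1, \ldots, \widehat{\mu_j}, \ldots, \mu_d)\right].
\end{aligned} \tag{50}$$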

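Using (67) we get

$$\begin{aligned}
\left(\frac{\partial}{\partial\mu_i} - \frac{\partial}{\partial\mu_j}\right)\Psi_k(\mu_1, \ldots, \mu_d) ={}& \frac{1}{2}(\mu_j - \mu_i)\, s_{d-k-2}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \widehat{\mu_j}, \ldots, \mu_d) + \frac{\mu_i - \mu_j}{2}\, s_{d-k-2}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \widehat{\mu_j}, \ldots, \mu_d) \\
&+ \sum_{\substack{l=1\\ l\ne i,j}}^{d} \frac{1 - \mu_l}{2}\left[s_{d-k-2}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \widehat{\mu_l}, \ldots, \mu_d) - s_{d-k-2}(\mu_1, \ldots, \widehat{\mu_j}, \ldots, \widehat{\mu_l}, \ldots, \mu_d)\right] \\
={}& \sum_{\substack{l=1\\ l\ne i,j}}^{d} \frac{1 - \mu_l}{2}\left[s_{d-k-2}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \widehat{\mu_l}, \ldots, \mu_d) - s_{d-k-2}(\mu_1, \ldots, \widehat{\mu_j}, \ldots, \widehat{\mu_l}, \ldots, \mu_d)\right] \\
={}& \sum_{\substack{l=1\\ l\ne i,j}}^{d} \frac{1 - \mu_l}{2}\,(\mu_j - \mu_i)\, s_{d-k-3}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \widehat{\mu_j}, \ldots, \widehat{\mu_l}, \ldots, \mu_d).
\end{aligned} \tag{51}$$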

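Substituting (51) in (48), the left-hand side of (48) becomes $-\frac{1}{2}(\mu_i - \mu_j)^2$ times the sum below; hence Schur concavity holds if and only if

$$\sum_{\substack{l=1\\ l\ne i,j}}^{d} (1 - \mu_l)\, s_{d-k-3}(\mu_1, \ldots, \widehat{\mu_i}, \ldots, \widehat{\mu_j}, \ldots, \widehat{\mu_l}, \ldots, \mu_d) \ge 0, \qquad 1 \le i, j \le d. \tag{52}$$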

The variables $\mu_i$ and $\mu_j$ do not appear in (52). Owing to symmetry, without loss of generality we can choose $i = d - 1$ and $j = d$. Then omitting $\mu_{d-1}$ and $\mu_d$ results in replacing (52) by

$$\sum_{l=1}^{d-2} (1 - \mu_l)\, s_{d-k-3}(\mu_1, \ldots, \widehat{\mu_l}, \ldots, \mu_{d-2}) \ge 0.$$

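By setting $n = d - 2$, we can express the condition for Schur concavity by the following lemma.

Lemma. The functions $\Psi_k$, defined in (47), are Schur concave in the Schmidt coefficients $\lambda_1, \ldots, \lambda_d$ if

$$\sum_{l=1}^{n} (1 - \mu_l)\, s_{n-k-1}(\mu_1, \ldots, \widehat{\mu_l}, \ldots, \mu_n) \ge 0, \qquad 0 \le k \le d - 3, \tag{53}$$

where the variables $\mu_i := 1 - 2\lambda_i$, $1 \le i \le n$, satisfy

$$-1 \le \mu_i \le 1, \qquad \sum_{l=1}^{n} \mu_l \ge n - 2. \tag{54}$$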

Note: The constraints (54) follow from the relations $\lambda_l \ge 0$ for all $l$, and $\sum_{l=1}^{n} \lambda_l = \sum_{l=1}^{d-2} \lambda_l \le 1$.

Proof of the Lemma

The constraints (54) imply that at most one of the variables $\mu_1, \ldots, \mu_n$ can be negative, since two negative values would require two Schmidt coefficients exceeding $1/2$. Note that $(1 - \mu_l)$ is always nonnegative, since $\mu_l \le 1$. Thus if all $\mu_1, \ldots, \mu_n \ge 0$, (53) obviously holds. Hence, we need to prove (53) only in the case in which one, and only one, of the variables $\mu_1, \ldots, \mu_n$ is negative.

To establish the latter fact, we first prove the inequality

$$\sum_{l=1}^{n} \frac{1 - \mu_l}{\mu_l} \le 0, \quad\text{or}\quad \sum_{l=1}^{n} \frac{\lambda_l}{1 - 2\lambda_l} \le 0. \tag{55}$$

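Without loss of generality we can choose $\mu_1 < 0$ and $\mu_l > 0$ for all $l = 2, 3, \ldots, n$. Hence, $\lambda_1 > 1/2$ and $\lambda_l < 1/2$ for all $l = 2, 3, \ldots, n$. Write

$$\text{LHS of (55)} = \frac{\lambda_1}{1 - 2\lambda_1} + \sum_{l=2}^{n} \frac{\lambda_l}{1 - 2\lambda_l} := T_1 + T_2.$$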

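Note that $T_1 \le 0$ since $\lambda_1 > 1/2$. The function

$$f(\lambda_i) := \frac{\lambda_i}{1 - 2\lambda_i}, \qquad 0 \le \lambda_i < 1/2,$$

is convex (indeed $f''(\lambda_i) = 4/(1 - 2\lambda_i)^3 > 0$). Hence $T_2(\lambda_2, \ldots, \lambda_n)$, as a sum of convex functions, is convex on the simplex defined by

$$\lambda_2 + \cdots + \lambda_n \le 1 - \lambda_1, \qquad 0 \le \lambda_i < 1/2, \quad i = 2, \ldots, n,$$

with fixed $\lambda_1 > 1/2$.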

Hence $T_2$ achieves its maximum at the vertices of the simplex. One vertex is $(0, \ldots, 0)$, for which $T_2 = 0$, and hence $T_1 + T_2 < 0$. The other vertices are obtained by permutations from $(1 - \lambda_1, 0, \ldots, 0)$ and give

$$T_2 = \frac{1 - \lambda_1}{1 - 2(1 - \lambda_1)} = -\frac{1 - \lambda_1}{1 - 2\lambda_1}.$$

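Since $T_1 = \lambda_1/(1 - 2\lambda_1) \le -1$ for $1/2 < \lambda_1 \le 1$, the vertex $(0, \ldots, 0)$ gives a value of at most $-1$, and the maximal value of $T_1 + T_2$ is

$$\frac{\lambda_1}{1 - 2\lambda_1} - \frac{1 - \lambda_1}{1 - 2\lambda_1} = -\frac{1 - 2\lambda_1}{1 - 2\lambda_1} = -1,$$

which proves (55).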
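Inequality (55), together with the slightly stronger bound $\text{LHS of (55)} \le -1$ that the argument actually yields, can be probed by random sampling. A sketch assuming NumPy; the sampling scheme and seed are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(11)
worst = -np.inf
for _ in range(20000):
    n = int(rng.integers(2, 9))
    lam1 = rng.uniform(0.5, 1.0)                          # mu_1 = 1 - 2 lam_1 < 0
    rest = rng.dirichlet(np.ones(n - 1)) * rng.uniform(0.0, 1.0 - lam1)
    lam = np.concatenate(([lam1], rest))                  # lam_2 + ... + lam_n <= 1 - lam_1
    worst = max(worst, float(np.sum(lam / (1 - 2 * lam))))  # LHS of (55)
print(worst)  # never exceeds -1

# A vertex of the simplex in the proof, (lam_2, ..., lam_n) = (1 - lam_1, 0, ..., 0), gives exactly -1.
vertex = np.array([0.7, 0.3, 0.0])
print(np.sum(vertex / (1 - 2 * vertex)))
```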

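To prove (53), we use the definition (65) of elementary symmetric polynomials and write

$$s_{n-k-1}(\mu_1, \ldots, \widehat{\mu_l}, \ldots, \mu_n) = \frac{c_n}{k!}\sum_{\substack{j_1=1\\ j_1\ne l}}^{n}\ \sum_{\substack{j_2=1\\ j_2\ne l,\,j_1}}^{n} \cdots \sum_{\substack{j_k=1\\ j_k\ne l,\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} \frac{1}{\mu_{j_1}\cdots\mu_{j_k}\,\mu_l}.$$

The factor $1/k!$ compensates for the $k!$ orderings of each set $\{j_1, \ldots, j_k\}$ of omitted indices.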

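Here $c_n := \mu_1 \cdots \mu_n = \prod_{m=1}^{n} \mu_m < 0$, since exactly one factor is negative. Hence, the required inequality (53) becomes

$$\sum_{l=1}^{n}\ \sum_{\substack{j_1=1\\ j_1\ne l}}^{n}\ \sum_{\substack{j_2=1\\ j_2\ne l,\,j_1}}^{n} \cdots \sum_{\substack{j_k=1\\ j_k\ne l,\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} \frac{1 - \mu_l}{\mu_l\,\mu_{j_1}\cdots\mu_{j_k}} \le 0. \tag{56}$$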

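Once again, without loss of generality we can choose $\mu_1 < 0$ and $\mu_l > 0$ for all $l = 2, 3, \ldots, n$. Then

$$\text{LHS of (56)} = \sum_{j_1=2}^{n}\ \sum_{\substack{j_2=2\\ j_2\ne j_1}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} \frac{1}{\mu_{j_1}\cdots\mu_{j_k}}\left[\frac{1 - \mu_1}{\mu_1} + \sum_{r=1}^{k} \frac{1 - \mu_{j_r}}{\mu_1} + \sum_{\substack{l=2\\ l\ne j_i,\ 1\le i\le k}}^{n} \frac{1 - \mu_l}{\mu_l}\right]. \tag{57}$$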

Equation (57) can be derived as follows. Let

$$T(l, j_1, j_2, \ldots, j_k) := \frac{1 - \mu_l}{\mu_l\,\mu_{j_1}\cdots\mu_{j_k}}, \tag{58}$$

with $l, j_1, j_2, \ldots, j_k \in \{1, 2, \ldots, n\}$ and $l, j_1, j_2, \ldots, j_k$ all different.

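With $\mu_1 < 0$ and $\mu_l > 0$ for all $l = 2, 3, \ldots, n$, as above, we split the sum in (56) according to the position at which the index $1$ occurs:

$$\begin{aligned}
\text{LHS of (56)} ={}& \sum_{l=1}^{n}\ \sum_{\substack{j_1=1\\ j_1\ne l}}^{n}\ \sum_{\substack{j_2=1\\ j_2\ne l,\,j_1}}^{n} \cdots \sum_{\substack{j_k=1\\ j_k\ne l,\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} T(l, j_1, j_2, \ldots, j_k) \\
={}& \sum_{j_1=2}^{n}\ \sum_{\substack{j_2=2\\ j_2\ne j_1}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} T(1, j_1, j_2, \ldots, j_k)
+ \sum_{l=2}^{n}\ \sum_{\substack{j_2=2\\ j_2\ne l}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne l,\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} T(l, 1, j_2, \ldots, j_k) \\
&+ \sum_{l=2}^{n}\ \sum_{\substack{j_1=2\\ j_1\ne l}}^{n}\ \sum_{\substack{j_3=2\\ j_3\ne j_1,\,l}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne l,\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} T(l, j_1, 1, j_3, \ldots, j_k) + \cdots \\
&+ \sum_{l=2}^{n}\ \sum_{\substack{j_1=2\\ j_1\ne l}}^{n} \cdots \sum_{\substack{j_{k-1}=2\\ j_{k-1}\ne l,\ j_{k-1}\ne j_i,\ 1\le i\le k-2}}^{n} T(l, j_1, \ldots, j_{k-1}, 1)
+ \sum_{l=2}^{n}\ \sum_{\substack{j_1=2\\ j_1\ne l}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne l,\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} T(l, j_1, \ldots, j_k).
\end{aligned} \tag{59}$$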

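Now, for each position $i = 1, \ldots, k$,

$$\begin{aligned}
&\sum_{l=2}^{n}\ \sum_{\substack{j_1=2\\ j_1\ne l}}^{n} \cdots \sum_{\substack{j_{i-1}=2\\ j_{i-1}\ne l,\ j_{i-1}\ne j_r,\ 1\le r\le i-2}}^{n}\ \sum_{\substack{j_{i+1}=2\\ j_{i+1}\ne l,\ j_{i+1}\ne j_r,\ 1\le r\le i-1}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne l,\ j_k\ne j_r,\ 1\le r\le k-1}}^{n} T(l, j_1, \ldots, j_{i-1}, 1, j_{i+1}, \ldots, j_k) \\
&\quad= \sum_{(\text{same ranges})} \frac{1 - \mu_l}{\mu_l\,\mu_{j_1}\cdots\mu_{j_{i-1}}\,\mu_1\,\mu_{j_{i+1}}\cdots\mu_{j_k}} \\
&\quad= \sum_{j_1=2}^{n} \cdots \sum_{\substack{j_{i-1}=2\\ j_{i-1}\ne j_r,\ 1\le r\le i-2}}^{n}\ \sum_{\substack{j_i=2\\ j_i\ne j_r,\ 1\le r\le i-1}}^{n}\ \sum_{\substack{j_{i+1}=2\\ j_{i+1}\ne j_r,\ 1\le r\le i}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne j_r,\ 1\le r\le k-1}}^{n} \frac{1 - \mu_{j_i}}{\mu_1\,\mu_{j_1}\cdots\mu_{j_{i-1}}\,\mu_{j_i}\,\mu_{j_{i+1}}\cdots\mu_{j_k}} \\
&\quad= \sum_{j_1=2}^{n}\ \sum_{\substack{j_2=2\\ j_2\ne j_1}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne j_r,\ 1\le r\le k-1}}^{n} \frac{1}{\mu_{j_1}\cdots\mu_{j_k}}\left(\frac{1 - \mu_{j_i}}{\mu_1}\right).
\end{aligned} \tag{60}$$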

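In the second-to-last line of (60) we have changed the dummy variable from $l$ to $j_i$. Hence,

$$\text{RHS of (59)} = \sum_{j_1=2}^{n}\ \sum_{\substack{j_2=2\\ j_2\ne j_1}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} \frac{1}{\mu_{j_1}\cdots\mu_{j_k}}\left[\frac{1 - \mu_1}{\mu_1} + \sum_{r=1}^{k} \frac{1 - \mu_{j_r}}{\mu_1} + \sum_{\substack{l=2\\ l\ne j_i,\ 1\le i\le k}}^{n} \frac{1 - \mu_l}{\mu_l}\right] = \text{RHS of (57)}. \tag{61}$$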

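From (55) it follows that for given $j_1, j_2, \ldots, j_k$, with $2 \le j_r \le n$ for $r = 1, 2, \ldots, k$ and $j_m \ne j_r$ for all $m \ne r$ (the three sums below together run over all $l = 1, \ldots, n$),

$$\sum_{\substack{l=2\\ l\ne j_i,\ 1\le i\le k}}^{n} \frac{1 - \mu_l}{\mu_l} + \sum_{r=1}^{k} \frac{1 - \mu_{j_r}}{\mu_{j_r}} + \frac{1 - \mu_1}{\mu_1} \le 0.$$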

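Hence,

$$\sum_{\substack{l=2\\ l\ne j_i,\ 1\le i\le k}}^{n} \frac{1 - \mu_l}{\mu_l} \le -\left[\sum_{r=1}^{k} \frac{1 - \mu_{j_r}}{\mu_{j_r}} + \frac{1 - \mu_1}{\mu_1}\right]. \tag{62}$$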

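Substituting (62) into the RHS of (57) (each prefactor $1/(\mu_{j_1}\cdots\mu_{j_k})$ is positive) yields

$$\begin{aligned}
\text{RHS of (57)} &\le \sum_{j_1=2}^{n}\ \sum_{\substack{j_2=2\\ j_2\ne j_1}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} \frac{1}{\mu_{j_1}\cdots\mu_{j_k}}\left[\sum_{r=1}^{k} (1 - \mu_{j_r})\left(\frac{1}{\mu_1} - \frac{1}{\mu_{j_r}}\right)\right] \\
&= \sum_{j_1=2}^{n}\ \sum_{\substack{j_2=2\\ j_2\ne j_1}}^{n} \cdots \sum_{\substack{j_k=2\\ j_k\ne j_i,\ 1\le i\le k-1}}^{n} \frac{1}{\mu_{j_1}\cdots\mu_{j_k}}\left[\sum_{r=1}^{k} (1 - \mu_{j_r})\,\frac{\mu_{j_r} - \mu_1}{\mu_1\,\mu_{j_r}}\right] \le 0,
\end{aligned} \tag{63}$$

since $\mu_{j_r} > 0 > \mu_1$ and $1 - \mu_{j_r} \ge 0$ for every $r$.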

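This proves (56), and hence (53), for $n \ge 3$ and all $k = 1, 2, \ldots, n - 2$. The remaining values of $k$ in (53) are immediate: for $k = n - 1$ the left-hand side is $\sum_l (1 - \mu_l) \ge 0$, and for $k = 0$ it equals $c_n \sum_l (1 - \mu_l)/\mu_l$, which is nonnegative by (55) because $c_n < 0$.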
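A random test of the Lemma: sample $\lambda_1, \ldots, \lambda_n \ge 0$ with $\sum_l \lambda_l \le 1$, so that $\mu = 1 - 2\lambda$ satisfies (54), and evaluate the left-hand side of (53) for every admissible $k$. The sketch assumes NumPy; `esym` and `lemma_lhs` are hypothetical helpers, and random sampling is evidence, not proof.

```python
import numpy as np
from itertools import combinations

def esym(xs, k):
    """Elementary symmetric polynomial s_k(xs), cf. (65); s_0 = 1 and s_k = 0 for k < 0."""
    if k < 0:
        return 0.0
    if k == 0:
        return 1.0
    return float(sum(np.prod(c) for c in combinations(xs, k)))

def lemma_lhs(mu, k):
    """LHS of (53): sum_l (1 - mu_l) s_{n-k-1}(mu with mu_l omitted)."""
    n = len(mu)
    return sum((1 - mu[l]) * esym(np.delete(mu, l), n - k - 1) for l in range(n))

rng = np.random.default_rng(13)
worst = np.inf
for _ in range(2000):
    n = int(rng.integers(2, 8))
    # Drop the last coordinate of a point of Sigma_{n+1}: lambda_l >= 0 with sum <= 1,
    # so mu = 1 - 2 lambda satisfies the constraints (54).
    lam = rng.dirichlet(np.full(n + 1, 0.5))[:n]
    mu = 1 - 2 * lam
    for k in range(n):  # 0 <= k <= n - 1 = d - 3
        worst = min(worst, lemma_lhs(mu, k))
print(worst)  # nonnegative up to rounding, as the Lemma asserts
```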

APPENDIX

A real-valued function $\phi$ on $\mathbb{R}^n$ is said to be Schur concave (see [11]) if

$$x \prec y \implies \phi(x) \ge \phi(y).$$

Here the symbol $x \prec y$ means that $x = (x_1, x_2, \ldots, x_n)$ is majorized by $y = (y_1, y_2, \ldots, y_n)$ in the following sense. Let $x^\downarrow$ be the vector obtained by rearranging the coordinates of $x$ in decreasing order:

$$x^\downarrow = (x^\downarrow_1, x^\downarrow_2, \ldots, x^\downarrow_n) \quad\text{means}\quad x^\downarrow_1 \ge x^\downarrow_2 \ge \cdots \ge x^\downarrow_n.$$

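For $x, y \in \mathbb{R}^n$, we say that $x$ is majorized by $y$ and write $x \prec y$ if

$$\sum_{j=1}^{k} x^\downarrow_j \le \sum_{j=1}^{k} y^\downarrow_j, \qquad 1 \le k \le n,$$

and

$$\sum_{j=1}^{n} x^\downarrow_j = \sum_{j=1}^{n} y^\downarrow_j.$$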
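The majorization relation can be tested directly from this definition. A minimal sketch, assuming NumPy; `is_majorized` is a hypothetical name and `tol` absorbs floating-point rounding.

```python
import numpy as np

def is_majorized(x, y, tol=1e-12):
    """True if x is majorized by y: partial sums of x sorted decreasingly never exceed
    those of y, and the total sums agree."""
    xs = np.sort(np.asarray(x, dtype=float))[::-1]
    ys = np.sort(np.asarray(y, dtype=float))[::-1]
    cx, cy = np.cumsum(xs), np.cumsum(ys)
    return bool(np.all(cx <= cy + tol) and abs(cx[-1] - cy[-1]) <= tol)

d = 4
barycenter = np.full(d, 1 / d)
vertex = np.eye(d)[2]
lam = np.array([0.1, 0.4, 0.2, 0.3])
print(is_majorized(barycenter, lam))  # True: the barycenter is majorized by every point of Sigma_d
print(is_majorized(lam, vertex))      # True: every point is majorized by a vertex
print(is_majorized(vertex, lam))      # False
```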

In the simplex $\Sigma_d$, defined by the constraints (10), the minimal point with respect to $\prec$ is $(1/d, \ldots, 1/d)$ (the barycenter of $\Sigma_d$), and the maximal points are the permutations of $(1, 0, \ldots, 0)$ (the vertices).

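A differentiable function $\phi(x_1, x_2, \ldots, x_n)$ is Schur concave if and only if:

1. $\phi$ is symmetric;

2. the following inequality holds:

$$(x_i - x_j)\left(\frac{\partial\phi}{\partial x_i} - \frac{\partial\phi}{\partial x_j}\right) \le 0, \qquad 1 \le i, j \le n. \tag{64}$$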

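The $l$th elementary symmetric polynomial $s_l$ in the variables $x_1, x_2, \ldots, x_n$ is defined as

$$s_0(x_1, x_2, \ldots, x_n) = 1, \qquad s_l(x_1, x_2, \ldots, x_n) = \sum_{1 \le i_1 < i_2 < \cdots < i_l \le n} x_{i_1} x_{i_2} \cdots x_{i_l} \quad\text{for } l = 1, 2, \ldots, n, \tag{65}$$

with the convention $s_l \equiv 0$ for $l < 0$ or $l > n$.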

We shall use the following identities:

$$\frac{\partial}{\partial x_j} s_k(x_1, x_2, \ldots, x_n) = s_{k-1}(x_1, \ldots, \widehat{x_j}, \ldots, x_n) \tag{66}$$

and

$$s_k(x_1, \ldots, \widehat{x_i}, \ldots, x_n) - s_k(x_1, \ldots, \widehat{x_j}, \ldots, x_n) = (x_j - x_i)\, s_{k-1}(x_1, \ldots, \widehat{x_i}, \ldots, \widehat{x_j}, \ldots, x_n). \tag{67}$$
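Definition (65) and the identities (66), (67) can be checked numerically. In this sketch (assuming NumPy), `esym` is a hypothetical brute-force implementation of (65), cross-checked against `np.poly`, which returns the coefficients of $\prod_m (z - x_m)$; (66) is tested with a central difference, which is exact up to rounding because $s_k$ is affine in each variable.

```python
import numpy as np
from itertools import combinations

def esym(xs, k):
    """s_k(xs) from definition (65), with s_0 = 1 and s_k = 0 for k < 0."""
    if k < 0:
        return 0.0
    if k == 0:
        return 1.0
    return float(sum(np.prod(c) for c in combinations(xs, k)))

rng = np.random.default_rng(17)
x = rng.normal(size=6)
i, j, k = 1, 4, 3

# Cross-check (65) against np.poly: prod_m (z - x_m) = sum_k (-1)^k s_k(x) z^(n-k).
coeffs = np.poly(x)
print(all(np.isclose(esym(x, m), (-1) ** m * coeffs[m]) for m in range(len(x) + 1)))

# Identity (67).
lhs67 = esym(np.delete(x, i), k) - esym(np.delete(x, j), k)
rhs67 = (x[j] - x[i]) * esym(np.delete(x, [i, j]), k - 1)

# Identity (66), via a central difference in the variable x_j.
h = 1e-6
xp, xm = x.copy(), x.copy()
xp[j] += h
xm[j] -= h
deriv = (esym(xp, k) - esym(xm, k)) / (2 * h)
rhs66 = esym(np.delete(x, j), k - 1)
print(np.isclose(lhs67, rhs67), np.isclose(deriv, rhs66))
```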

Acknowledgments. This work was initiated when A.S.H. was an overseas visiting scholar at St John's College, Cambridge. He gratefully acknowledges the hospitality of the Statistical Laboratory of the Centre for Mathematical Sciences. N.D. and Y.S. worked in association with the CMI.

n.datta@statslab.cam.ac.uk

holevo@mi.ras.ru

yms@statslab.cam.ac.uk

[1] K. Matsumoto, T. Shimono and A. Winter, Remarks on additivity of the Holevo channel capacity and the entanglement of formation, quant-ph/0206148.

[2] R. F. Werner and A. S. Holevo, Counterexample to an additivity conjecture for output purity of quantum channels, J. Math. Phys. 43 (2002).

[3] G. G. Amosov and A. S. Holevo, On the multiplicativity conjecture for quantum channels, quant-ph/0103015.

[4] P. Shor, Equivalence of additivity questions in quantum information theory, quant-ph/0305035.

[5] C. King, Additivity for a class of unital qubit channels, quant-ph/0103156.

[6] M. Sasaki, S. Barnett, R. Jozsa, M. Osaki and O. Hirota, Accessible information and optimal strategies for real symmetrical quantum sources, quant-ph/9812062.

[7] G. Mitchison and R. Jozsa, Towards a geometrical interpretation of quantum information compression, quant-ph/0309177.

[8] A. S. Holevo, Additivity of classical capacity and related problems, http://www.imaph.nat.tu-bs.de/qi/problems/problems.html

[9] A. S. Holevo, Remarks on the classical capacity of quantum channel covariant channels, quant-ph/0212025.

[10] K. Matsumoto and F. Yura, Entanglement cost of antisymmetric states and additivity of capacity of some channels, quant-ph/0306009.

[11] R. Bhatia, Matrix Analysis, Springer-Verlag, New York, 1997.


- Solution To Problem 87-6 : The Entropy of A Poisson DistributionDocumento5 páginasSolution To Problem 87-6 : The Entropy of A Poisson DistributionSameeraBharadwajaHAinda não há avaliações

- Van Der Pauw Method On A Sample With An Isolated Hole: Krzysztof Szymański, Jan L. Cieśliński and Kamil ŁapińskiDocumento9 páginasVan Der Pauw Method On A Sample With An Isolated Hole: Krzysztof Szymański, Jan L. Cieśliński and Kamil ŁapińskiDejan DjokićAinda não há avaliações

- Acoustic Admittance Prediction of Two Nozzle Part2Documento3 páginasAcoustic Admittance Prediction of Two Nozzle Part2Abbas AmirifardAinda não há avaliações

- Cardinal Inequalities For Topological Spaces Involving The Weak Lindelof NumberDocumento10 páginasCardinal Inequalities For Topological Spaces Involving The Weak Lindelof NumberGabriel medinaAinda não há avaliações

- Acoustic Communications in Shallow Waters: Sławomir JastrzębskiDocumento8 páginasAcoustic Communications in Shallow Waters: Sławomir JastrzębskiBùi Trường GiangAinda não há avaliações

- 05 NDP - Tollfreq (Ictts2000)Documento9 páginas05 NDP - Tollfreq (Ictts2000)guido gentileAinda não há avaliações

- Statistical Mechanics Lecture Notes (2006), L2Documento5 páginasStatistical Mechanics Lecture Notes (2006), L2OmegaUserAinda não há avaliações

- Ducting and Turbulence Effects On Radio-Wave Propagation in An Atmospheric Boundary LayerDocumento15 páginasDucting and Turbulence Effects On Radio-Wave Propagation in An Atmospheric Boundary LayerEdward Roy “Ying” AyingAinda não há avaliações

- Comparative Analysis of Multiscale Gaussian Random Field Simulation AlgorithmsDocumento55 páginasComparative Analysis of Multiscale Gaussian Random Field Simulation AlgorithmsAnonymous 1Yc9wYDtAinda não há avaliações

- Ps 4 2007Documento3 páginasPs 4 2007Zoubir CHATTIAinda não há avaliações

- ConnesMSZ Conformal Trace TH For Julia Sets PublishedDocumento26 páginasConnesMSZ Conformal Trace TH For Julia Sets PublishedHuong Cam ThuyAinda não há avaliações

- Inverting a DTFTDocumento14 páginasInverting a DTFTDavid MartinsAinda não há avaliações

- Automorphisms of Character VarietiesDocumento11 páginasAutomorphisms of Character VarietiesChristopher SIMONAinda não há avaliações

- Uniform Bounds For The Bilinear Hilbert Transforms, IDocumento45 páginasUniform Bounds For The Bilinear Hilbert Transforms, IPeronAinda não há avaliações

- Diffusion of a Gas Through a Membrane ExplainedDocumento3 páginasDiffusion of a Gas Through a Membrane ExplainedMiguel Angel Hanco ChoqueAinda não há avaliações

- Implementation of Level Set Method Based On OpenFOAM For Capturing The Free Interface in in Compressible Fluid FlowsDocumento10 páginasImplementation of Level Set Method Based On OpenFOAM For Capturing The Free Interface in in Compressible Fluid FlowsAghajaniAinda não há avaliações

- High-SNR Capacity of Wireless Communication Channels in The Noncoherent Setting: A PrimerDocumento7 páginasHigh-SNR Capacity of Wireless Communication Channels in The Noncoherent Setting: A PrimerQing JiaAinda não há avaliações

- Course 311: Galois Theory Problems Academic Year 2007-8Documento4 páginasCourse 311: Galois Theory Problems Academic Year 2007-8Noor FatimaAinda não há avaliações

- Olaf Lechtenfeld and Alexander D. Popov-Supertwistors and Cubic String Field Theory For Open N 2 StringsDocumento10 páginasOlaf Lechtenfeld and Alexander D. Popov-Supertwistors and Cubic String Field Theory For Open N 2 StringsSteam29Ainda não há avaliações

- Suppl MaterialsDocumento12 páginasSuppl MaterialsrichardAinda não há avaliações

- TraceDocumento30 páginasTraceKevin D Silva PerezAinda não há avaliações

- Parks Mcclellan Fir Filter Design 3Documento9 páginasParks Mcclellan Fir Filter Design 3nitesh mudgalAinda não há avaliações

- Exercise 8.9 (Johnson Jonaris Gadelkarim) : X Xy YDocumento6 páginasExercise 8.9 (Johnson Jonaris Gadelkarim) : X Xy YHuu NguyenAinda não há avaliações

- Comput. Methods Appl. Mech. Engrg.: Xiaoliang WanDocumento9 páginasComput. Methods Appl. Mech. Engrg.: Xiaoliang WanTa SanAinda não há avaliações

- Characterization of Depolarizing Channels Using Two-Photon InterferenceDocumento11 páginasCharacterization of Depolarizing Channels Using Two-Photon InterferencegtemporaoAinda não há avaliações

- On Cell Complexities in Hyperplane Arrangements: 1 Complexity of Many CellsDocumento8 páginasOn Cell Complexities in Hyperplane Arrangements: 1 Complexity of Many CellsmfernandexAinda não há avaliações

- Symbol Error Probability and Bit Error Probability For Optimum Combining With MPSK ModulationDocumento14 páginasSymbol Error Probability and Bit Error Probability For Optimum Combining With MPSK ModulationEmily PierisAinda não há avaliações

- Encounters With The Golden Ratio in Fluid Dynamics: M. MokryDocumento10 páginasEncounters With The Golden Ratio in Fluid Dynamics: M. MokryLeon BlažinovićAinda não há avaliações

- Geometry of Multiple Zeta ValuesDocumento9 páginasGeometry of Multiple Zeta ValuesqwertyAinda não há avaliações

- A Multimodal Approach For Frequency Domain Independent Component Analysis With Geometrically-Based InitializationDocumento5 páginasA Multimodal Approach For Frequency Domain Independent Component Analysis With Geometrically-Based InitializationcoolhemakumarAinda não há avaliações

- J. Electroanal. Chem., 101 (1979) 19 - 28Documento10 páginasJ. Electroanal. Chem., 101 (1979) 19 - 28Bruno LuccaAinda não há avaliações

- Polar Codes: Characterization of Exponent, Bounds, and ConstructionsDocumento10 páginasPolar Codes: Characterization of Exponent, Bounds, and ConstructionsnabidinAinda não há avaliações

- Discrete Series of GLn Over a Finite Field. (AM-81), Volume 81No EverandDiscrete Series of GLn Over a Finite Field. (AM-81), Volume 81Ainda não há avaliações

- Gravity Cartan Geometry and Idealized WaywisersDocumento24 páginasGravity Cartan Geometry and Idealized WaywisersgejikeijiAinda não há avaliações

- Cartan ConnectionsDocumento24 páginasCartan ConnectionsgejikeijiAinda não há avaliações

- Hypothesis testing for entangled states produced by spontaneous parametric down-conversionDocumento18 páginasHypothesis testing for entangled states produced by spontaneous parametric down-conversiongejikeijiAinda não há avaliações

- A Partial Order On Classical and Quantum StatesDocumento8 páginasA Partial Order On Classical and Quantum StatesgejikeijiAinda não há avaliações

- Islamic Astronomy: Robert G. MorrisonDocumento30 páginasIslamic Astronomy: Robert G. MorrisongejikeijiAinda não há avaliações

- A Domain Theoretic Model of Qubit ChannelsDocumento15 páginasA Domain Theoretic Model of Qubit ChannelsgejikeijiAinda não há avaliações

- Shannon Theory On General Probabilistic TheoryDocumento18 páginasShannon Theory On General Probabilistic TheorygejikeijiAinda não há avaliações

- TRANSLATIONS OF AVICENNA'S WORKSDocumento8 páginasTRANSLATIONS OF AVICENNA'S WORKSgejikeijiAinda não há avaliações

- Ptolemy, Alhazen, and Kepler and The Problem of Optical Images A. Mark SmithDocumento36 páginasPtolemy, Alhazen, and Kepler and The Problem of Optical Images A. Mark SmithgejikeijiAinda não há avaliações

- Optimal State Discrimination in General Probabilistic TheoriesDocumento9 páginasOptimal State Discrimination in General Probabilistic TheoriesgejikeijiAinda não há avaliações

- An Extension of Gleason's Theorem For Quantum ComputationDocumento13 páginasAn Extension of Gleason's Theorem For Quantum ComputationgejikeijiAinda não há avaliações

- Hypothesis testing for entangled states produced by spontaneous parametric down-conversionDocumento18 páginasHypothesis testing for entangled states produced by spontaneous parametric down-conversiongejikeijiAinda não há avaliações

- QcmipDocumento10 páginasQcmipgejikeijiAinda não há avaliações

- A Limit Relation For Entropy and Channel Capacity Per Unit CostDocumento12 páginasA Limit Relation For Entropy and Channel Capacity Per Unit CostgejikeijiAinda não há avaliações

- Quantum ControlDocumento38 páginasQuantum ControlgejikeijiAinda não há avaliações

- 1 Round 070907Documento27 páginas1 Round 070907gejikeijiAinda não há avaliações

- A Partial Ordering For Binary Channels PDFDocumento11 páginasA Partial Ordering For Binary Channels PDFgejikeijiAinda não há avaliações

- 2 ProverDocumento14 páginas2 ProvergejikeijiAinda não há avaliações

- Permutation Matrices Whose Convex Combinations Are OrthostochasticDocumento11 páginasPermutation Matrices Whose Convex Combinations Are OrthostochasticgejikeijiAinda não há avaliações

- Strong Super AddDocumento1 páginaStrong Super AddgejikeijiAinda não há avaliações

- Fukuda EquivalenceDocumento8 páginasFukuda EquivalencegejikeijiAinda não há avaliações

- Multiplicativity of maximal 2-norms for some CP mapsDocumento16 páginasMultiplicativity of maximal 2-norms for some CP mapsgejikeijiAinda não há avaliações

- Entanglement Under SymmetryDocumento16 páginasEntanglement Under SymmetrygejikeijiAinda não há avaliações

- Additivity and Multiplicativity Properties of Some Gaussian Channels For Gaussian InputsDocumento9 páginasAdditivity and Multiplicativity Properties of Some Gaussian Channels For Gaussian InputsgejikeijiAinda não há avaliações

- Equi Valle Nce of Add I TivityDocumento20 páginasEqui Valle Nce of Add I TivitygejikeijiAinda não há avaliações

- ConjChan1b 1Documento9 páginasConjChan1b 1gejikeijiAinda não há avaliações

- General Entanglement Breaking ChannelDocumento15 páginasGeneral Entanglement Breaking ChannelgejikeijiAinda não há avaliações

- E of of Symmetric Gaussian StateDocumento4 páginasE of of Symmetric Gaussian StategejikeijiAinda não há avaliações

- An Application of A Matrix Inequality in Quantum Information TheoryDocumento8 páginasAn Application of A Matrix Inequality in Quantum Information TheorygejikeijiAinda não há avaliações

- ConjChan1b 1Documento9 páginasConjChan1b 1gejikeijiAinda não há avaliações

- Checklist of Requirements For OIC-EW Licensure ExamDocumento2 páginasChecklist of Requirements For OIC-EW Licensure Examjonesalvarezcastro60% (5)

- Essential Rendering BookDocumento314 páginasEssential Rendering BookHelton OliveiraAinda não há avaliações

- Case Study IndieDocumento6 páginasCase Study IndieDaniel YohannesAinda não há avaliações

- JM Guide To ATE Flier (c2020)Documento2 páginasJM Guide To ATE Flier (c2020)Maged HegabAinda não há avaliações

- Service Manual: Precision SeriesDocumento32 páginasService Manual: Precision SeriesMoises ShenteAinda não há avaliações

- Combined Set12Documento159 páginasCombined Set12Nguyễn Sơn LâmAinda não há avaliações

- Listening Exercise 1Documento1 páginaListening Exercise 1Ma. Luiggie Teresita PerezAinda não há avaliações

- The Service Marketing Plan On " Expert Personalized Chef": Presented byDocumento27 páginasThe Service Marketing Plan On " Expert Personalized Chef": Presented byA.S. ShuvoAinda não há avaliações

- ALXSignature0230 0178aDocumento3 páginasALXSignature0230 0178aAlex MocanuAinda não há avaliações

- Staffing Process and Job AnalysisDocumento8 páginasStaffing Process and Job AnalysisRuben Rosendal De Asis100% (1)

- Techniques in Selecting and Organizing InformationDocumento3 páginasTechniques in Selecting and Organizing InformationMylen Noel Elgincolin ManlapazAinda não há avaliações

- Pemaknaan School Well-Being Pada Siswa SMP: Indigenous ResearchDocumento16 páginasPemaknaan School Well-Being Pada Siswa SMP: Indigenous ResearchAri HendriawanAinda não há avaliações

- Weone ProfileDocumento10 páginasWeone ProfileOmair FarooqAinda não há avaliações

- Easa Management System Assessment ToolDocumento40 páginasEasa Management System Assessment ToolAdam Tudor-danielAinda não há avaliações

- Consumers ' Usage and Adoption of E-Pharmacy in India: Mallika SrivastavaDocumento16 páginasConsumers ' Usage and Adoption of E-Pharmacy in India: Mallika SrivastavaSundaravel ElangovanAinda não há avaliações

- Ovr IbDocumento27 páginasOvr IbAriel CaresAinda não há avaliações

- Real Estate Broker ReviewerREBLEXDocumento124 páginasReal Estate Broker ReviewerREBLEXMar100% (4)

- Certification Presently EnrolledDocumento15 páginasCertification Presently EnrolledMaymay AuauAinda não há avaliações

- Flexible Regression and Smoothing - Using GAMLSS in RDocumento572 páginasFlexible Regression and Smoothing - Using GAMLSS in RDavid50% (2)

- SOP-for RecallDocumento3 páginasSOP-for RecallNilove PervezAinda não há avaliações

- Anti Jamming of CdmaDocumento10 páginasAnti Jamming of CdmaVishnupriya_Ma_4804Ainda não há avaliações

- Consensus Building e Progettazione Partecipata - Marianella SclaviDocumento7 páginasConsensus Building e Progettazione Partecipata - Marianella SclaviWilma MassuccoAinda não há avaliações

- Product Data Sheet For CP 680-P and CP 680-M Cast-In Firestop Devices Technical Information ASSET DOC LOC 1540966Documento1 páginaProduct Data Sheet For CP 680-P and CP 680-M Cast-In Firestop Devices Technical Information ASSET DOC LOC 1540966shama093Ainda não há avaliações

- Maharashtra Auto Permit Winner ListDocumento148 páginasMaharashtra Auto Permit Winner ListSadik Shaikh50% (2)

- LegoDocumento30 páginasLegomzai2003Ainda não há avaliações

- Technical Manual - C&C08 Digital Switching System Chapter 2 OverviewDocumento19 páginasTechnical Manual - C&C08 Digital Switching System Chapter 2 OverviewSamuel100% (2)

- Day 4 Quiz - Attempt ReviewDocumento8 páginasDay 4 Quiz - Attempt ReviewĐỗ Đức AnhAinda não há avaliações

- Cab&Chaissis ElectricalDocumento323 páginasCab&Chaissis Electricaltipo3331100% (13)

- SEC QPP Coop TrainingDocumento62 páginasSEC QPP Coop TrainingAbdalelah BagajateAinda não há avaliações

- Display PDFDocumento6 páginasDisplay PDFoneoceannetwork3Ainda não há avaliações