Neural Networks

Uploaded by srkcs

Let's start with some biology

Nerve cells in the brain are called neurons. There are an estimated 10^10 to 10^13 neurons in the human brain. Each neuron can make contact with several thousand other neurons. Neurons are the units the brain uses to process information.

So what does a neuron look like

A neuron consists of a cell body with various extensions from it. Most of these are branches called dendrites. There is one much longer process (possibly also branching) called the axon. The following diagram illustrates this; the dashed line shows the axon hillock, where transmission of signals starts.

Figure 1 Neuron

The boundary of the neuron is known as the cell membrane. There is a voltage difference (the membrane potential) between the inside and outside of the membrane. If the input is large enough, an action potential is then generated. The action potential (neuronal spike) then travels down the axon, away from the cell body.

Figure 2 Neuron Spiking

Synapses

The connections between one neuron and another are called synapses. Information always leaves a neuron via its axon (see Figure 1 above), and is then transmitted across a synapse to the receiving neuron.

Neuron Firing

Neurons only fire when the input is bigger than some threshold. It should, however, be noted that the spike doesn't get bigger as the stimulus increases; it's an all-or-nothing arrangement.

Figure 3 Neuron Firing

Spikes (signals) are important, since other neurons receive them. Neurons communicate with spikes. The information sent is coded by spikes.

The input to a Neuron

Synapses can be excitatory or inhibitory. Spikes (signals) arriving at an excitatory synapse tend to cause the receiving neuron to fire. Spikes (signals) arriving at an inhibitory synapse tend to inhibit the receiving neuron from firing. The cell body and synapses essentially compute (by a complicated chemical/electrical process) the difference between the incoming excitatory and inhibitory inputs (spatial and temporal summation). When this difference is large enough (compared to the neuron's threshold) then the neuron will fire. Roughly speaking, the faster excitatory spikes arrive at its synapses the faster it will fire (similarly for inhibitory spikes).

So how about artificial neural networks

Suppose that we have a firing rate at each neuron. Also suppose that a neuron connects with m other neurons and so receives m inputs "x1 ... xm". We could imagine this configuration looking something like:

Figure 4 Artificial Neuron configuration

This configuration is actually called a Perceptron. The perceptron (an invention of Rosenblatt [1962]) was one of the earliest neural network models. A perceptron models a neuron by taking a weighted sum of its inputs and sending the output 1 if the sum is greater than some adjustable threshold value; otherwise it sends 0. This is the all-or-nothing spiking described in the biology (see the Neuron Firing section above), and the thresholding step is also called the activation function.

The inputs (x1,x2,x3..xm) and connection weights (w1,w2,w3..wm) in Figure 4 are typically real values, both positive (+) and negative (-). If the feature xi tends to cause the perceptron to fire, the weight wi will be positive; if the feature xi inhibits the perceptron, the weight wi will be negative.

The perceptron itself consists of the weights, the summation processor, an activation function, and an adjustable threshold processor (called the bias hereafter). For convenience, the normal practice is to treat the bias as just another input. The following diagram illustrates the revised configuration.

Figure 5 Artificial Neuron configuration, with bias as additional input

The bias can be thought of as the propensity (a tendency towards a particular way of behaving) of the perceptron to fire irrespective of its inputs. The perceptron configuration shown in Figure 5 fires if the weighted sum > 0, or if you're into math-type explanations

$$y = \begin{cases} 1 & \text{if } \sum_{i=1}^{m} w_i x_i + b > 0 \\ 0 & \text{otherwise} \end{cases}$$

where b is the bias.
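The firing rule above can be sketched in a few lines of Python. This is only an illustration: the function name `perceptron_output` and the weight and bias values are assumptions chosen by hand (they happen to make the unit behave like an AND gate), not values taken from the article.

```python
def perceptron_output(inputs, weights, bias):
    """Return 1 if the weighted sum of the inputs plus the bias is > 0, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if weighted_sum > 0 else 0


# Hypothetical, hand-picked values: both inputs must be on to exceed the bias.
and_weights = [1.0, 1.0]
and_bias = -1.5

and_results = [
    perceptron_output([a, b], and_weights, and_bias)
    for a in (0, 1)
    for b in (0, 1)
]
```

With these values the unit fires only when both inputs are 1, because only then does the weighted sum (2.0) outweigh the negative bias.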

Activation Function

The activation usually uses one of the following functions.

Sigmoid Function

The stronger the input, the faster the neuron fires (the higher the firing rate). The sigmoid is also very useful in multi-layer networks, as the sigmoid curve is differentiable (which is required for Back Propagation training of multi-layer networks),

or if you're into math-type explanations

$$f(x) = \frac{1}{1 + e^{-x}}$$

Step Function

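A basic on/off type function: if x < 0 then the output is 0, else if x >= 0 then the output is 1,

or if you're into math-type explanations

$$f(x) = \begin{cases} 0 & \text{if } x < 0 \\ 1 & \text{if } x \ge 0 \end{cases}$$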
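As a rough sketch, the two activation functions described above could be written as follows. The function names are assumptions for illustration. Note that `math.exp` can overflow for very large negative inputs, so a production version would likely clamp x first.

```python
import math


def sigmoid(x):
    """Smooth activation: squashes any real input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def step(x):
    """On/off activation: 0 for negative input, 1 for zero or positive input."""
    return 1 if x >= 0 else 0


# A stronger input gives a higher sigmoid value (a higher "firing rate").
sigmoid_samples = [sigmoid(x) for x in (-2.0, 0.0, 2.0)]
step_samples = [step(x) for x in (-2.0, 0.0, 2.0)]
```

The sigmoid rises smoothly through 0.5 at x = 0, whereas the step function jumps straight from 0 to 1 there.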

Learning

A foreword on learning

Before we carry on to talk about perceptron learning, let's consider a real-world example:

How do you teach a child to recognize a chair? You show him examples, telling him, "This is a chair. That is not a chair," until the child learns the concept of what a chair is. In this stage, the child can look at the examples we have shown him and answer correctly when asked, "Is this object a chair?" Furthermore, if we show to the child new objects that he hasn't seen before, we could expect him to recognize correctly whether the new object is a chair or not, providing that we've given him enough positive and negative examples. This is exactly the idea behind the perceptron.

Learning in perceptrons

Learning in perceptrons is the process of modifying the weights and the bias. A perceptron computes a binary function of its input. Whatever a perceptron can compute it can learn to compute.

"The perceptron is a program that learn concepts, i.e. it can learn to respond with True (1) or False (0) for inputs we present to it, by repeatedly "studying" examples presented to it. The Perceptron is a single layer neural network whose weights and biases could be trained to produce a correct target vector when presented with the corresponding input vector. The training technique used is called the perceptron learning rule. The perceptron generated great interest due to its ability to generalize from its training vectors and work with randomly distributed connections. Perceptrons are especially suited for simple problems in pattern classification."

Professor Jianfeng Feng, Centre for Scientific Computing, University of Warwick, England.

The Learning Rule

The perceptron is trained to respond to each input vector with a corresponding target output of either 0 or 1. The learning rule has been proven to converge on a solution in finite time if a solution exists. The learning rule can be summarized in the following two equations:

b = b + [T - A]

For all inputs i: W(i) = W(i) + [T - A] * P(i)

Where W is the vector of weights, P is the input vector presented to the network, T is the correct result that the neuron should have shown, A is the actual output of the neuron, and b is the bias.
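To make the two equations concrete, here is a minimal sketch of a single application of the rule. The function name `perceptron_update` and the sample numbers are assumptions for illustration only.

```python
def perceptron_update(weights, bias, inputs, target, actual):
    """Apply the perceptron learning rule once and return the new weights and bias."""
    error = target - actual  # [T - A]: +1, 0 or -1 for binary outputs
    new_weights = [w + error * p for w, p in zip(weights, inputs)]
    new_bias = bias + error
    return new_weights, new_bias


# The neuron output 0 but should have output 1, so the error is +1 and every
# weight moves towards its input.
updated_weights, updated_bias = perceptron_update(
    weights=[0.0, 0.0], bias=0.0, inputs=[1, 1], target=1, actual=0
)

# When the output is already correct, the error is 0 and nothing changes.
same_weights, same_bias = perceptron_update(
    weights=[0.5, -0.5], bias=0.25, inputs=[1, 0], target=1, actual=1
)
```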

Training

Vectors from a training set are presented to the network one after another. If the network's output is correct, no change is made. Otherwise, the weights and biases are updated using the perceptron learning rule (as shown above). An entire pass through all of the input training vectors is called an epoch. When an entire epoch of the training set has occurred without error, training is complete. At this point any input training vector may be presented to the network and it will respond with the correct output vector. If a vector P that is not in the training set is presented to the network, the network will tend to exhibit generalization by responding with an output similar to the target vectors for input vectors close to the previously unseen input vector P.
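The training procedure described above might look like the following sketch. It assumes the weights and bias start at zero, uses the "fires if the weighted sum > 0" rule, and caps the number of epochs. The names `train_perceptron`, `predict` and `max_epochs` are illustrative, not from the article.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum plus the bias is > 0, otherwise 0."""
    total = sum(w * p for w, p in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(training_set, max_epochs=100):
    """Repeat epochs until one passes without error, or max_epochs is reached.

    Returns (weights, bias, epoch); epoch is None if training never completed.
    """
    weights = [0.0] * len(training_set[0][0])  # assumption: start from zero
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for inputs, target in training_set:
            actual = predict(weights, bias, inputs)
            if actual != target:
                errors += 1
                # Perceptron learning rule: W(i) += [T - A] * P(i), b += [T - A]
                weights = [w + (target - actual) * p for w, p in zip(weights, inputs)]
                bias += target - actual
        if errors == 0:
            return weights, bias, epoch
    return weights, bias, None


AND_SET = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
and_weights, and_bias, and_epoch = train_perceptron(AND_SET)
and_outputs = [predict(and_weights, and_bias, inputs) for inputs, _ in AND_SET]
```

The epoch cap is a practical safeguard: if no solution exists, the loop would otherwise never see an error-free epoch.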

So what can we do with neural networks

Well, if we are going to stick to using a single layer neural network, the tasks that can be achieved are different from those that can be achieved by multi-layer neural networks. As this article is mainly geared towards dealing with single layer networks, let's discuss those further:

Single layer neural networks

Single-layer neural networks (perceptron networks) are networks in which each output unit is independent of the others: each weight affects only one output. Using perceptron networks it is possible to learn linearly separable functions like those in the diagrams shown below (assuming we have a network with 2 inputs and 1 output).

It can be seen that this is equivalent to the AND / OR logic gates, shown below.

Figure 6 Classification tasks

So that's a simple example of what we could do with one perceptron (a single neuron, essentially), but what if we were to chain several perceptrons together? We could build some quite complex functionality. Basically we would be constructing the equivalent of an electronic circuit.

Perceptron networks do, however, have limitations. If the vectors are not linearly separable, learning will never reach a point where all vectors are classified properly. The most famous example of the perceptron's inability to solve problems with linearly nonseparable vectors is the boolean XOR problem.
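One informal way to see the XOR limitation is to try many weight and bias combinations for a single 2-input perceptron and count how many reproduce each gate. The sketch below does a brute-force search over a small, arbitrarily chosen grid. It is an illustration rather than a proof, and all names in it are assumptions.

```python
from itertools import product

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
TARGETS = {
    "AND": [0, 0, 0, 1],
    "OR": [0, 1, 1, 1],
    "XOR": [0, 1, 1, 0],
}


def fires(x1, x2, w1, w2, bias):
    """Single perceptron: 1 if the weighted sum plus bias is > 0, else 0."""
    return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0


def count_solutions(targets, grid):
    """Count the (w1, w2, bias) triples on the grid that reproduce the targets."""
    count = 0
    for w1, w2, bias in product(grid, repeat=3):
        outputs = [fires(x1, x2, w1, w2, bias) for x1, x2 in INPUTS]
        if outputs == targets:
            count += 1
    return count


# Arbitrary search grid: -4.0 to 4.0 in steps of 0.5 for each weight and the bias.
grid = [i * 0.5 for i in range(-8, 9)]
solutions = {name: count_solutions(targets, grid) for name, targets in TARGETS.items()}
```

Many triples on the grid reproduce AND and OR, but none reproduces XOR, which matches the claim that no single straight line separates the XOR classes.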

Multi layer neural networks

With multi-layer neural networks we can solve problems that are not linearly separable, such as the XOR problem mentioned above, which is not achievable using single layer (perceptron) networks.

Perceptron Configuration ( Single layer network)

The inputs (x1,x2,x3..xm) and connection weights (w1,w2,w3..wm) shown below are typically real values, both positive (+) and negative (-). The perceptron itself consists of the weights, the summation processor, an activation function, and an adjustable threshold processor (called the bias hereafter). For convenience, the normal practice is to treat the bias as just another input. The following diagram illustrates the revised configuration.

The bias can be thought of as the propensity (a tendency towards a particular way of behaving) of the perceptron to fire irrespective of its inputs. The perceptron configuration shown above fires if the weighted sum > 0, or if you're into math-type explanations

$$y = \begin{cases} 1 & \text{if } \sum_{i=1}^{m} w_i x_i + b > 0 \\ 0 & \text{otherwise} \end{cases}$$

where b is the bias.

So that's the basic operation of a perceptron. But we now want to build more layers of these, so let's carry on to the new stuff.

So Now The New Stuff (More layers)

From this point on, anything that is being discussed relates directly to this article's code. In the summary at the top, the problem we are trying to solve was how to use a multilayer neural network to solve the XOR logic problem. So how is this done? Well, it's really an incremental build on what Part 1 already discussed. So let's march on. What does the XOR logic problem look like? Well, it looks like the following truth table:

Input 1    Input 2    Output
0          0          0
0          1          1
1          0          1
1          1          0

Remember with a single layer (perceptron) we can't actually achieve the XOR functionality, as it is not linearly separable. But with a multi-layer network, this is achievable.

What Does The New Network Look Like

The new network that will solve the XOR problem will look similar to a single layer network. We are still dealing with inputs / weights / outputs. What is new is the addition of the hidden layer.

As already explained above, there is one input layer, one hidden layer and one output layer. It is by using the inputs and weights that we are able to work out the activation for a given node. This is easily achieved for the hidden layer as it has direct links to the actual input layer. The output layer, however, knows nothing about the input layer as it is not directly connected to it. So to work out the activation for an output node we need to make use of the output from the hidden layer nodes, which are used as inputs to the output layer nodes. This entire process described above can be thought of as a pass forward from one layer to the next. This still works like it did with a single layer network; the activation for any given node is still worked out as follows:

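$$\text{activation} = f\left(\sum_{i} w_i I_i\right)$$

where wi is weight(i), Ii is input(i), and f is the activation function (the sigmoid in this article); the bias is treated as just another input.

You see, it's the same old stuff: no demons, smoke or magic here. It's stuff we've already covered. So that's how the network looks/works. So now I guess you want to know how to go about training it.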
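The forward pass described above can be sketched for a network with 2 inputs, 2 hidden nodes and 1 output. The weights below are hand-picked, hypothetical values that happen to produce XOR. They are not trained and are not taken from the article's code, and the function names are likewise assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Activation of each node: sigmoid of (weighted sum of its inputs + bias)."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def network_forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Pass forward: inputs -> hidden layer -> output layer."""
    hidden = layer_forward(inputs, hidden_w, hidden_b)
    # The output layer never sees the raw inputs, only the hidden activations.
    outputs = layer_forward(hidden, output_w, output_b)
    return hidden, outputs


# Hypothetical weights: hidden node 0 acts like OR, hidden node 1 like AND,
# and the output node fires for "OR but not AND", which is XOR.
HIDDEN_W = [[20.0, 20.0], [20.0, 20.0]]
HIDDEN_B = [-10.0, -30.0]
OUTPUT_W = [[20.0, -20.0]]
OUTPUT_B = [-10.0]

xor_outputs = [
    network_forward([a, b], HIDDEN_W, HIDDEN_B, OUTPUT_W, OUTPUT_B)[1][0]
    for a, b in [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
]
```

Rounding each value in `xor_outputs` to the nearest integer gives the XOR truth table, which shows that a hidden layer is enough to represent the function. Finding such weights automatically is the job of training.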

Types Of Learning

There are essentially 2 types of learning that may be applied to a Neural Network: "Reinforcement" and "Supervised".

Reinforcement

In Reinforcement learning, during training, a set of inputs is presented to the Neural Network and its output is compared with the target. Suppose the output is 0.75 when the target was 1.0. The error (1.0 - 0.75) is used for training ('wrong by 0.25'). If there are 2 or more outputs, the errors are combined into a single number (typically the sum of squared errors), e.g. "your total error on all outputs is 1.76".

Note that this just tells you how wrong you were, not in which direction you were wrong. Using this method we may never get a result, or it could be a case of 'Hunt the needle'. NOTE: Part 3 of this series will be using a GA to train a Neural Network, which is Reinforcement learning. The GA simply does what a GA does, running all the normal GA phases to select weights for the Neural Network. There is no back propagation of values. The Neural Network is just good or just bad. As one can imagine, this process takes a lot more steps to get to the same result.
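The single error number described above can be computed as a sum of squared errors. The sketch below uses the 0.75 versus 1.0 example from the text. The second output pair is a made-up value added only to show the summation, and the function name is an assumption.

```python
def sum_squared_error(targets, outputs):
    """Combine the per-output errors into one number: sum of (target - output)^2."""
    return sum((t - o) ** 2 for t, o in zip(targets, outputs))


# One output: target 1.0, actual 0.75, so the network is 'wrong by 0.25'.
single_error = sum_squared_error([1.0], [0.75])

# Two outputs: the second pair (target 0.0, actual 0.5) is hypothetical.
total_error = sum_squared_error([1.0, 0.0], [0.75, 0.5])
```

Because each difference is squared, the total says how wrong the outputs were overall but not whether any individual output was too high or too low.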

Supervised

In Supervised Learning the Neural Network is given more information: not just 'how wrong' it was, but 'in what direction it was wrong'. It is like 'Hunt the needle', but where you are told 'North a bit', 'West a bit'. So you get, and use, far more information in Supervised Learning, and this is the normal form of Neural Network learning algorithm. Back Propagation (what this article uses) is Supervised Learning.

Learning Algorithm

In brief, to train a multi-layer Neural Network, the following steps are carried out:

1. Start off with random weights (and biases) in the Neural Network
2. Try one or more members of the training set, and see how bad the output(s) are compared to what they should be (compared to the target output(s))
3. Jiggle the weights a bit, aimed at getting an improvement on the outputs
4. Now try with a new lot of the training set, or repeat again, jiggling the weights each time
5. Keep repeating until you get quite accurate outputs

This is what this article submission uses to solve the XOR problem. This is also called "Back Propagation" (normally called BP or BackProp).

Backprop allows you to use this error at the output to adjust the weights arriving at the output layer, but then also allows you to calculate the effective error 1 layer back, and use this to adjust the weights arriving there, and so on, back-propagating errors through any number of layers.

The trick is the use of a sigmoid as the non-linear transfer function (which was covered in Part 1). The sigmoid is used as it offers the ability to apply differentiation techniques.

Because this is nicely differentiable, it so happens that

$$f'(x) = f(x)\,(1 - f(x))$$

which in the context of the article can be written as

    delta_outputs[i] = outputs[i] * (1.0 - outputs[i]) * (targets[i] - outputs[i])

It is by using this calculation that the weight changes can be applied back through the network.
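Putting the pieces together, a back propagation loop for XOR might be sketched as follows. This is not the article's own code. The 2-2-1 layout, learning rate, seed and all function names are assumptions, and the hidden-layer delta uses the standard textbook form (the output deltas fed back through the outgoing weights). Whether a given run actually learns XOR depends on the random starting weights and the settings.

```python
import math
import random

XOR_SET = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
           ([1.0, 0.0], [1.0]), ([1.0, 1.0], [0.0])]


def sigmoid(x):
    x = max(-60.0, min(60.0, x))  # clamp so math.exp cannot overflow
    return 1.0 / (1.0 + math.exp(-x))


def forward(layer, inputs):
    """Each node holds one weight per input plus a final bias weight."""
    extended = inputs + [1.0]  # bias treated as an extra input fixed at 1
    return [sigmoid(sum(w * x for w, x in zip(node, extended))) for node in layer]


def output_deltas(outputs, targets):
    return [o * (1.0 - o) * (t - o) for o, t in zip(outputs, targets)]


def hidden_deltas(hidden, delta_outputs, output_layer):
    """Effective error one layer back: output deltas weighted by outgoing links."""
    return [h * (1.0 - h) * sum(d * node[j] for d, node in zip(delta_outputs, output_layer))
            for j, h in enumerate(hidden)]


def adjust(layer, deltas, inputs, rate):
    """Jiggle every weight in proportion to its node's delta and its input."""
    extended = inputs + [1.0]
    for node, delta in zip(layer, deltas):
        for i, x in enumerate(extended):
            node[i] += rate * delta * x


def train_xor(epochs=2000, rate=0.5, seed=0):
    rng = random.Random(seed)
    hidden_layer = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(2)]
    output_layer = [[rng.uniform(-1, 1) for _ in range(3)]]
    history = []  # sum of squared errors per epoch
    for _ in range(epochs):
        epoch_error = 0.0
        for inputs, targets in XOR_SET:
            hidden = forward(hidden_layer, inputs)
            outputs = forward(output_layer, hidden)
            epoch_error += sum((t - o) ** 2 for t, o in zip(targets, outputs))
            d_out = output_deltas(outputs, targets)
            # Compute hidden deltas before the output weights are changed.
            d_hid = hidden_deltas(hidden, d_out, output_layer)
            adjust(output_layer, d_out, hidden, rate)
            adjust(hidden_layer, d_hid, inputs, rate)
        history.append(epoch_error)
    return hidden_layer, output_layer, history
```

A typical use would be to call `train_xor()`, inspect the last entries of `history` to see whether the error has fallen, and then run `forward(output_layer, forward(hidden_layer, inputs))` for each of the four XOR inputs.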

Things To Watch Out For

Valleys: Using the rolled ball metaphor, there may well be valleys like this, with steep sides and a gently sloping floor. Gradient descent tends to waste time swooshing up and down each side of the valley (think ball!)

So what can we do about this? Well, we add a momentum term, which tends to cancel out the back-and-forth movements and emphasizes any consistent direction. Gradient descent will then go down such valleys with gentle bottom-slopes much more successfully (faster).
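A minimal sketch of a momentum term follows, assuming the common form in which each weight change is the plain gradient step plus a fraction of the previous change. The momentum value of 0.5 and the step sequences are made-up numbers for illustration, and the function name is an assumption.

```python
def updates_with_momentum(gradient_steps, momentum=0.5):
    """Return the actual weight changes when each step adds momentum * previous change."""
    changes = []
    previous = 0.0
    for step in gradient_steps:
        change = step + momentum * previous
        changes.append(change)
        previous = change
    return changes


# Swooshing across the valley: the steps alternate in sign, so the momentum
# term partly cancels each one.
zigzag = updates_with_momentum([1.0, -1.0, 1.0, -1.0])

# Rolling along the valley floor: small steps in a consistent direction
# build on each other.
steady = updates_with_momentum([0.1, 0.1, 0.1])
```

In the zigzag case every change after the first is smaller in size than the raw step, while in the steady case the changes grow from one step to the next, which is the behaviour the valley metaphor describes.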

Você também pode gostar

- Monitors: - Programming Language Construct That Supports Controlled Access To Shared DataDocumento11 páginasMonitors: - Programming Language Construct That Supports Controlled Access To Shared DatasrkcsAinda não há avaliações

- CPU Scheduling: Basic ConceptsDocumento3 páginasCPU Scheduling: Basic ConceptssrkcsAinda não há avaliações

- DMCSDocumento142 páginasDMCSsrkcsAinda não há avaliações

- Geo GebraDocumento19 páginasGeo Gebrasrkcs100% (2)

- A Short Guide On C ProgrammingDocumento28 páginasA Short Guide On C ProgrammingsrkcsAinda não há avaliações

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeNo EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeNota: 4 de 5 estrelas4/5 (5795)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceNo EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceNota: 4 de 5 estrelas4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)No EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Nota: 4 de 5 estrelas4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingNo EverandThe Little Book of Hygge: Danish Secrets to Happy LivingNota: 3.5 de 5 estrelas3.5/5 (400)

- The Emperor of All Maladies: A Biography of CancerNo EverandThe Emperor of All Maladies: A Biography of CancerNota: 4.5 de 5 estrelas4.5/5 (271)

- Never Split the Difference: Negotiating As If Your Life Depended On ItNo EverandNever Split the Difference: Negotiating As If Your Life Depended On ItNota: 4.5 de 5 estrelas4.5/5 (838)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyNo EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyNota: 3.5 de 5 estrelas3.5/5 (2259)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureNo EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureNota: 4.5 de 5 estrelas4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryNo EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryNota: 3.5 de 5 estrelas3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnNo EverandTeam of Rivals: The Political Genius of Abraham LincolnNota: 4.5 de 5 estrelas4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaNo EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaNota: 4.5 de 5 estrelas4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersNo EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersNota: 4.5 de 5 estrelas4.5/5 (345)

- The Unwinding: An Inner History of the New AmericaNo EverandThe Unwinding: An Inner History of the New AmericaNota: 4 de 5 estrelas4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreNo EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreNota: 4 de 5 estrelas4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)No EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Nota: 4.5 de 5 estrelas4.5/5 (121)

- CE 411 Lecture 03 - Moment AreaDocumento27 páginasCE 411 Lecture 03 - Moment AreaNophiAinda não há avaliações

- Avaya Call History InterfaceDocumento76 páginasAvaya Call History InterfaceGarrido_Ainda não há avaliações

- Brochure sp761lfDocumento10 páginasBrochure sp761lfkathy fernandezAinda não há avaliações

- SA Flight Instructors Training ProceduresDocumento371 páginasSA Flight Instructors Training ProceduresGuilioAinda não há avaliações

- PDK Repair Aftersales TrainingDocumento22 páginasPDK Repair Aftersales TrainingEderson BJJAinda não há avaliações

- SECTION 1213, 1214, 1215: Report By: Elibado T. MaureenDocumento19 páginasSECTION 1213, 1214, 1215: Report By: Elibado T. MaureenJohnFred CativoAinda não há avaliações

- .Preliminary PagesDocumento12 páginas.Preliminary PagesKimBabAinda não há avaliações

- Seamless Fiux Fored Wire - Megafil250Documento1 páginaSeamless Fiux Fored Wire - Megafil250SungJun ParkAinda não há avaliações

- Ruckus Wired Accreditation ExamDocumento15 páginasRuckus Wired Accreditation ExamDennis Dube25% (8)

- TIL 1881 Network Security TIL For Mark VI Controller Platform PDFDocumento11 páginasTIL 1881 Network Security TIL For Mark VI Controller Platform PDFManuel L LombarderoAinda não há avaliações

- Yucca Mountain Safety Evaluation Report - Volume 2Documento665 páginasYucca Mountain Safety Evaluation Report - Volume 2The Heritage FoundationAinda não há avaliações

- Fundamentals of Fluid Mechanics (5th Edition) - Munson, OkiishiDocumento818 páginasFundamentals of Fluid Mechanics (5th Edition) - Munson, OkiishiMohit Verma85% (20)

- F4 Search Help To Select More Than One Column ValueDocumento4 páginasF4 Search Help To Select More Than One Column ValueRicky DasAinda não há avaliações

- DC PandeyDocumento3 páginasDC PandeyPulkit AgarwalAinda não há avaliações

- Nuendo 4 Remote Control Devices EsDocumento24 páginasNuendo 4 Remote Control Devices EsRamon RuizAinda não há avaliações

- 1996 Club Car DS Golf Cart Owner's ManualDocumento48 páginas1996 Club Car DS Golf Cart Owner's Manualdriver33b60% (5)

- Programmable Safety Systems PSS-Range: Service Tool PSS SW QLD, From Version 4.2 Operating Manual Item No. 19 461Documento18 páginasProgrammable Safety Systems PSS-Range: Service Tool PSS SW QLD, From Version 4.2 Operating Manual Item No. 19 461MAICK_ITSAinda não há avaliações

- Vertical Take Off and LandingDocumento126 páginasVertical Take Off and LandingMukesh JindalAinda não há avaliações

- Source 22Documento2 páginasSource 22Alexander FloresAinda não há avaliações

- MC9S12XD128 ProcessadorDocumento1.350 páginasMC9S12XD128 ProcessadorMarcelo OemAinda não há avaliações

- 031 - Btech - 08 Sem PDFDocumento163 páginas031 - Btech - 08 Sem PDFtushant_juneja3470Ainda não há avaliações

- Crisfield - Vol1 - NonLinear Finite Element Analysis of Solids and Structures EssentialsDocumento360 páginasCrisfield - Vol1 - NonLinear Finite Element Analysis of Solids and Structures EssentialsAnonymous eCD5ZRAinda não há avaliações

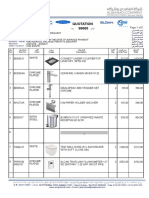

- Quotation 98665Documento5 páginasQuotation 98665Reda IsmailAinda não há avaliações

- CFJV00198BDocumento360 páginasCFJV00198BCheongAinda não há avaliações

- Research Papers in Mechanical Engineering Free Download PDFDocumento4 páginasResearch Papers in Mechanical Engineering Free Download PDFtitamyg1p1j2Ainda não há avaliações

- RT120 ManualDocumento161 páginasRT120 ManualPawełAinda não há avaliações

- E Insurance ProjectDocumento10 páginasE Insurance ProjectChukwuebuka Oluwajuwon GodswillAinda não há avaliações

- Process Control in SpinningDocumento31 páginasProcess Control in Spinningapi-2649455553% (15)

- Photosynthesis LabDocumento3 páginasPhotosynthesis Labapi-276121304Ainda não há avaliações

- ReadMe STEP7 Professional V14 enUS PDFDocumento74 páginasReadMe STEP7 Professional V14 enUS PDFAndre Luis SilvaAinda não há avaliações