ARIMA Time Series

Uploaded by Vineetha Vipin

Time Series Analysis: The Basics
Steve Carpenter, Zoology 535, Spring 2006

Models for Univariate Time Series

A series is an ordered sequence of observations in space or time. We will use $t$ as a subscript to denote the position of an observation in a time series. For example, $y_t$ is observation $t$ of time series $y$.

The backshift operator $B^s$ shifts a time series backward $s$ steps. For example,

$$B y_t = y_{t-1}, \qquad B^2 y_t = y_{t-2}, \qquad B^s y_t = y_{t-s} \qquad [1]$$

Serial correlation is the correlation of a time series with itself. The noise model is the model for the stochasticity of a time series that cannot be explained by serial correlation or by correlation with another variable. An intervention model is the model for proposed causal relationships. In an ecological study, the intervention model relates one or more independent variates, or input variates, to the response variate. It is the time-series analog of a regression. Intervention analysis is the process of identifying, fitting, and evaluating combined intervention and noise models for a set of input and response series.

Diagnostics for serial correlation include:

- Autocorrelation function or ACF: This function is a plot of the autocorrelation as a function of lag. The autocorrelation is simply the ordinary Pearson product-moment correlation of a time series with itself at a specified lag. The autocorrelation at lag 0 is the correlation of the series with its unlagged self, or 1. The autocorrelation at lag 1 is the correlation of the series with itself lagged one step; the autocorrelation at lag 2 is the correlation of the series with itself lagged 2 steps; and so forth.
- Partial autocorrelation function or PACF: This function is a plot of the partial autocorrelations versus lag. The partial autocorrelation at a given lag is the autocorrelation that is not accounted for by autocorrelations at shorter lags. To calculate the partial autocorrelation at lag $s$, both $y_t$ and $y_{t-s}$ are regressed against the intervening values $y_{t-1}, y_{t-2}, \dots, y_{t-(s-1)}$, and the partial autocorrelation is the correlation between the residuals of these two regressions. In other words, the partial autocorrelation is the autocorrelation which remains at lag $s$ after the effects of shorter lags ($1, 2, \dots, s-1$) have been removed by regression.
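A minimal NumPy sketch of both diagnostics. It computes the ACF as a plain Pearson correlation between the series and its lagged copy, and the PACF by regressing both $y_t$ and $y_{t-s}$ (with an intercept) on the intervening lags and correlating the two sets of residuals. The function names `acf` and `pacf`, the simulated series, and the rough white-noise significance bound of $2/\sqrt{n}$ are illustrative assumptions. Statistical packages usually compute the ACF with the overall mean and variance of the series, so their values can differ slightly in short series.

```python
import numpy as np

def acf(y, max_lag):
    """Autocorrelation at lags 0..max_lag: Pearson correlation of y_t with y_{t-k}."""
    y = np.asarray(y, dtype=float)
    out = [1.0]  # lag 0: the series correlated with its unlagged self
    for k in range(1, max_lag + 1):
        out.append(np.corrcoef(y[k:], y[:-k])[0, 1])
    return np.array(out)

def pacf(y, max_lag):
    """Partial autocorrelation at lags 0..max_lag via residual-on-residual correlation."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    out = [1.0]
    for s in range(1, max_lag + 1):
        target = y[s:]        # y_t
        lagged = y[:n - s]    # y_{t-s}
        # Design matrix: intercept plus the intervening lags y_{t-1}, ..., y_{t-(s-1)}
        X = np.column_stack([np.ones(n - s)] + [y[s - j:n - j] for j in range(1, s)])
        r_target = target - X @ np.linalg.lstsq(X, target, rcond=None)[0]
        r_lagged = lagged - X @ np.linalg.lstsq(X, lagged, rcond=None)[0]
        out.append(np.corrcoef(r_target, r_lagged)[0, 1])
    return np.array(out)

# Illustration on a simulated series y_t = 0.7 y_{t-1} + e_t
rng = np.random.default_rng(1)
n = 2000
e = rng.standard_normal(n)
y = np.zeros(n)
for t in range(1, n):
    y[t] = 0.7 * y[t - 1] + e[t]

bound = 2 / np.sqrt(n)  # rough significance bound for a series with no serial correlation
r, p = acf(y, 5), pacf(y, 5)
print("ACF :", np.round(r, 2))
print("PACF:", np.round(p, 2))
print("significant PACF lags:", [k for k in range(1, 6) if abs(p[k]) > bound])
```

At lag 1 there are no intervening lags, so the ACF and PACF coincide; for this simulated series the ACF should decay gradually while the PACF is large only at lag 1.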

It is useful to know that for a time series of $n$ observations, the smallest significant autocorrelation is about $2/\sqrt{n}$ in absolute value (roughly the 95% bound for a series with no serial correlation). There are many kinds of models for serially correlated errors. Most ecological time series can be fit by one of a few types of models, and we will restrict our attention to this fairly small group of models that usually work for ecological studies.

Moving average or MA models have the general form

$$y_t = \theta(B)\,\varepsilon_t \qquad [4]$$

In equation 4, $y$ is the observed series, which is serially correlated, and $\varepsilon$ is a series of errors which are free of serial correlation. A series free of serial correlation is sometimes called "white noise". $\theta(B)$ is a polynomial involving the MA parameters and the backshift operator $B$:

$$\theta(B) = 1 + \theta_1 B + \theta_2 B^2 + \dots \qquad [5]$$

The parameters must lie between -1 and 1. The order of an MA model is the number of parameters. For a pure MA process, the ACF cuts off at a lag corresponding to the order of the process. An ACF that cuts off at lag $k$ is significant at lags of $k$ and below, and nonsignificant for lags greater than $k$. If the ACF cuts off at lag $k$, that is evidence that an MA model of order $k$, or MA($k$) model, is appropriate. An MA($k$) model has $k$ parameters: $\theta_1, \theta_2, \dots, \theta_k$.

The MA(1) model is

$$y_t = (1 + \theta B)\,\varepsilon_t \qquad [6]$$

which is equivalent to

$$y_t = \varepsilon_t + \theta\,\varepsilon_{t-1} \qquad [7]$$

The MA(2) model is

$$y_t = (1 + \theta_1 B + \theta_2 B^2)\,\varepsilon_t = \varepsilon_t + \theta_1 \varepsilon_{t-1} + \theta_2 \varepsilon_{t-2} \qquad [8]$$

Examples of ACFs and PACFs for data that fit these models are presented in the illustrations below.

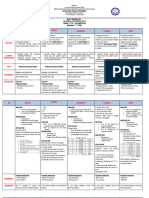

Diagnostics from some simple Moving Average processes.
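To see the MA cut-off numerically, the sketch below simulates eq. 7 with an assumed $\theta = 0.6$ and computes the sample ACF. For an MA(1) process the theoretical lag-1 autocorrelation is $\theta/(1+\theta^2)$ (about 0.44 here) and all higher lags are zero, so the sample ACF should be clearly nonzero at lag 1 and small beyond it. The seed, series length, and `acf` helper are illustrative choices, not part of the original notes.

```python
import numpy as np

def acf(y, max_lag):
    """Pearson correlation of y_t with y_{t-k} for k = 0..max_lag."""
    y = np.asarray(y, dtype=float)
    return np.array([1.0] + [np.corrcoef(y[k:], y[:-k])[0, 1] for k in range(1, max_lag + 1)])

rng = np.random.default_rng(7)
n, theta = 2000, 0.6
eps = rng.standard_normal(n + 1)
y = eps[1:] + theta * eps[:-1]      # eq. 7: y_t = eps_t + theta * eps_{t-1}

r = acf(y, 5)
print("theoretical lag-1 ACF:", round(theta / (1 + theta**2), 3))
print("sample ACF:", np.round(r, 2))
print("2/sqrt(n) bound:", round(2 / np.sqrt(n), 3))
```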

Autoregressive or AR models have the general form

$$\phi(B)\,y_t = \varepsilon_t \qquad [9]$$

Here $y_t$ and $\varepsilon_t$ have the same meaning as in eq. 4. $\phi(B)$ is a polynomial involving the autoregressive parameters ($-1 < \phi_i < 1$) and the backshift operator:

$$\phi(B) = 1 + \phi_1 B + \phi_2 B^2 + \dots \qquad [10]$$

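An AR($k$) model, or AR model of order $k$, has $k$ parameters. For a pure AR($k$) process, the PACF cuts off at lag $k$. The AR(1) model is

$$(1 + \phi B)\,y_t = \varepsilon_t \qquad [11]$$

or

$$y_t = -\phi\,y_{t-1} + \varepsilon_t \qquad [12]$$

The AR(2) model is

$$(1 + \phi_1 B + \phi_2 B^2)\,y_t = \varepsilon_t \qquad [13]$$

or

$$y_t = -\phi_1 y_{t-1} - \phi_2 y_{t-2} + \varepsilon_t \qquad [14]$$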

Examples of ACFs and PACFs from data that fit these models are presented in the illustrations below.

Diagnostics for some simple autoregressive processes.
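A sketch of the PACF cut-off for an AR(2) process, simulated from eq. 14 with assumed parameters $\phi_1 = -0.5$ and $\phi_2 = -0.3$, i.e. $y_t = 0.5\,y_{t-1} + 0.3\,y_{t-2} + \varepsilon_t$ in the sign convention of eq. 10. Here the PACF at lag $s$ is taken as the coefficient on $y_{t-s}$ when $y_t$ is regressed on $y_{t-1}, \dots, y_{t-s}$, a standard equivalent of the residual-correlation definition; the helper name `pacf_ols` and all values are hypothetical. The PACF should be about $0.5/0.7 \approx 0.71$ at lag 1, about $-\phi_2 = 0.3$ at lag 2, and near zero from lag 3 on.

```python
import numpy as np

def pacf_ols(y, max_lag):
    """PACF at lags 1..max_lag: the coefficient on y_{t-s} when y_t is regressed
    (with an intercept) on y_{t-1}, ..., y_{t-s}."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    out = []
    for s in range(1, max_lag + 1):
        X = np.column_stack([np.ones(n - s)] + [y[s - j:n - j] for j in range(1, s + 1)])
        coef = np.linalg.lstsq(X, y[s:], rcond=None)[0]
        out.append(coef[-1])          # coefficient on y_{t-s}
    return np.array(out)

rng = np.random.default_rng(3)
n, phi1, phi2 = 3000, -0.5, -0.3
eps = rng.standard_normal(n)
y = np.zeros(n)
for t in range(2, n):
    y[t] = -phi1 * y[t - 1] - phi2 * y[t - 2] + eps[t]   # eq. 14

p = pacf_ols(y, 6)   # p[0] is lag 1, p[1] is lag 2, ...
print("PACF lags 1-6:", np.round(p, 2))
print("2/sqrt(n) bound:", round(2 / np.sqrt(n), 3))
```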

Autoregressive Moving Average or ARMA models include both AR and MA terms:

$$\phi(B)\,y_t = \theta(B)\,\varepsilon_t \qquad [15]$$

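Since we are usually interested in an expression for $y$, ARMA models are often written

$$y_t = \frac{\theta(B)}{\phi(B)}\,\varepsilon_t \qquad [16]$$

The ARMA(1,1) model is

$$(1 + \phi B)\,y_t = (1 + \theta B)\,\varepsilon_t \qquad [17]$$

or

$$y_t = -\phi\,y_{t-1} + \varepsilon_t + \theta\,\varepsilon_{t-1} \qquad [18]$$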

Examples of ACFs and PACFs from ARMA(1,1) models are presented in the illustrations below.

Diagnostics for some simple ARMA processes.
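One way to check that eqs. 16 and 18 describe the same process is to expand $\theta(B)/\phi(B)$ as a power series in $B$, apply it to the errors, and compare with the recursion in eq. 18. For ARMA(1,1), matching coefficients in $(1 + \phi B)(\psi_0 + \psi_1 B + \psi_2 B^2 + \dots) = 1 + \theta B$ gives $\psi_0 = 1$, $\psi_1 = \theta - \phi$, and $\psi_j = -\phi\,\psi_{j-1}$ for $j \ge 2$. The weights $\psi_j$, the function names, and the parameter values are notation introduced here for illustration; the sketch assumes the series and errors are zero before the first time step.

```python
import numpy as np

def arma11_recursion(eps, phi, theta):
    """Eq. 18: y_t = -phi*y_{t-1} + eps_t + theta*eps_{t-1}, with zero start-up values."""
    y = np.zeros(len(eps))
    for t in range(len(eps)):
        y_prev = y[t - 1] if t > 0 else 0.0
        e_prev = eps[t - 1] if t > 0 else 0.0
        y[t] = -phi * y_prev + eps[t] + theta * e_prev
    return y

def psi_weights(phi, theta, m):
    """First m coefficients of theta(B)/phi(B) = (1 + theta*B)/(1 + phi*B)."""
    psi = np.zeros(m)
    psi[0] = 1.0
    if m > 1:
        psi[1] = theta - phi
    for j in range(2, m):
        psi[j] = -phi * psi[j - 1]
    return psi

rng = np.random.default_rng(0)
phi, theta, n = -0.6, 0.4, 200
eps = rng.standard_normal(n)

y_rec = arma11_recursion(eps, phi, theta)
psi = psi_weights(phi, theta, n)
y_psi = np.convolve(eps, psi)[:n]   # eq. 16: y_t = sum_j psi_j * eps_{t-j}

print("first psi weights:", np.round(psi[:5], 3))
print("max difference between eq. 16 and eq. 18:", np.max(np.abs(y_rec - y_psi)))
```

The two outputs should agree to floating-point precision; the geometric decay of $\psi_j$ (at rate $-\phi$) is also why the ARMA(1,1) ACF tails off rather than cutting off.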

Fitting Univariate Time Series Models

Model fitting is a sequential process of estimation and evaluation. Here is pseudocode for fitting a time series model:

1. Inspect the time series to see if it is stationary. If it is not stationary, make some appropriate transformations (see below).
2. Calculate the ACF and PACF.
3. Guess a form for the model. Start with a simple model. Add new parameters one at a time, only as needed.
4. Fit the model, and calculate the AIC and the ACF and PACF of the residuals.
5. If the ACF or PACF of the residuals contains significant terms, return to step 3 and make a different guess for the form of the model. Add parameters one at a time.
6. If the ACF and PACF do not contain significant terms, stop.
7. A good model should have non-significant ACF and PACF for residuals, residuals that appear to be stationary and normally distributed, and a lower AIC than other models.

A stationary series is one whose statistical distribution is constant throughout the series. In practice, we will call a series stationary if the mean is roughly constant (no obvious trends) and the variance is roughly constant (scatter around the mean is about the same). If a series is not stationary, it may be impossible to find a stable, well-behaved model.

Transformations of the data can be used to make a series more stationary and improve model fitting. Since units are arbitrary, there is no reason not to transform a series to units that improve model performance. Log transformations often help stabilize the variance of biological data. For more about choosing transformations, see Box et al. (1978).

Differencing transforms a series to first differences. The first difference of $y_t$ is $(1 - B)\,y_t = y_t - y_{t-1}$. Differencing often makes a nonstationary time series appear to be stationary. Models fit to differenced series are called Autoregressive Integrated Moving Average or ARIMA models.
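The fitting loop can be sketched for the simplest case: pure AR models fit by ordinary least squares to the first differences of a trending series. This is an illustration under stated assumptions, not a full ARIMA fitter. MA terms need iterative (e.g. maximum-likelihood) estimation, which is omitted; the AIC is the Gaussian approximation $n\ln(\mathrm{RSS}/n) + 2k$ up to an additive constant; every candidate is fit to the same observations so the AIC values are comparable; and only the residual ACF is checked. All function names, parameter values, and the simulated series are hypothetical.

```python
import numpy as np

def acf(y, max_lag):
    """Pearson correlation of y_t with y_{t-k} for k = 0..max_lag."""
    y = np.asarray(y, dtype=float)
    return np.array([1.0] + [np.corrcoef(y[k:], y[:-k])[0, 1] for k in range(1, max_lag + 1)])

def fit_ar(y, p, start):
    """OLS fit of y_t = c + a_1 y_{t-1} + ... + a_p y_{t-p} + e_t for t = start..n-1.
    Note a_j = -phi_j in the sign convention of eq. 10. Returns (coefficients, residuals)."""
    n = len(y)
    X = np.column_stack([np.ones(n - start)] + [y[start - j:n - j] for j in range(1, p + 1)])
    coef = np.linalg.lstsq(X, y[start:], rcond=None)[0]
    return coef, y[start:] - X @ coef

def gaussian_aic(resid, k):
    """AIC up to an additive constant for Gaussian errors: n*ln(RSS/n) + 2k."""
    n = len(resid)
    return n * np.log(np.sum(resid**2) / n) + 2 * k

# Step 1: a simulated series with a trend (nonstationary in the mean), then differenced
rng = np.random.default_rng(42)
n = 1000
d = np.zeros(n)
for t in range(1, n):
    d[t] = 0.5 * d[t - 1] + rng.standard_normal()
level = np.cumsum(0.2 + d)     # integrated series with drift
w = np.diff(level)             # first differences, (1 - B) y_t

# Steps 3-6: add AR terms one at a time, recording AIC and the largest residual |ACF|
max_p = 4
results = []
for p in range(max_p + 1):
    coef, resid = fit_ar(w, p, start=max_p)
    r = acf(resid, 10)[1:]
    results.append((gaussian_aic(resid, k=p + 1), p, np.max(np.abs(r))))
    print(f"AR({p}): AIC={results[-1][0]:.1f}, max |residual ACF|={results[-1][2]:.3f}")

# Step 7: pick the lowest-AIC candidate, then compare its residual ACF with the bound
best_aic, best_p, best_max_r = min(results)
bound = 2 / np.sqrt(len(w) - max_p)
print(f"lowest AIC: AR({best_p}); max |residual ACF| = {best_max_r:.3f}, bound = {bound:.3f}")
```

Fitting all candidates from the same starting index keeps the residual count identical across models, which is what makes their AIC values directly comparable.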

Intervention Analysis

Intervention analysis is used to detect effects of an independent variable on a dependent variable in a time series. In some ways it is a time series analog of regression. Intervention analysis can be used to measure the effects of a driver on a response variable, or to measure the effects of disturbances (either experimental or inadvertent) in ecological time series.

Definitions

Intervention models link one or more input (or independent) variates to a response (or dependent) variate (Box and Tiao 1975, Wei 1990). For one input variate $x$ and one response variate $y$, the general form of an intervention model is

$$y_t = \frac{\omega(B)}{\delta(B)}\,x_{t-s} + N(t) \qquad [19]$$

Here $N(t)$ is an appropriate noise model for $y$ (for example, an ARMA model). The delay between a change in $x$ and a response in $y$ is $s$. The intervention model has both a numerator polynomial and a denominator polynomial. The numerator polynomial is

$$\omega(B) = \omega_0 + \omega_1 B + \omega_2 B^2 + \dots \qquad [20]$$

The numerator parameters determine the magnitude of the effect of $x$ on $y$. These parameters can be any real number; they are not constrained to lie between -1 and +1. The numerator parameters are usually of greatest interest. They are analogs of regression parameters. The denominator polynomial is

$$\delta(B) = 1 + \delta_1 B + \delta_2 B^2 + \dots \qquad [21]$$

where $-1 < \delta_i < 1$. The denominator determines the shape of the response. Graphs of some common intervention models are shown on the next page.
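How the denominator shapes the response can be sketched by applying eq. 19, without the noise term, to two simple inputs: a step ($x$ switches from 0 to 1 and stays there) and a pulse ($x$ is 1 for a single time step). With an assumed one-term numerator $\omega_0 = 2$, denominator $\delta_1 = -0.5$, and delay $s = 2$, the step response should rise gradually toward $\omega_0/(1+\delta_1) = 4$, while the pulse response jumps to $\omega_0$ and decays geometrically. The function name and parameter values are hypothetical, and zero initial conditions are assumed.

```python
import numpy as np

def intervention_response(x, omega, delta, s):
    """Solve delta(B) z_t = omega(B) x_{t-s} for z, with
    omega(B) = omega[0] + omega[1]*B + ... and delta(B) = 1 + delta[0]*B + delta[1]*B^2 + ...
    (sign convention of eqs. 20-21). Values before the start of the series are taken as zero."""
    x = np.asarray(x, dtype=float)
    z = np.zeros(len(x))
    for t in range(len(x)):
        num = sum(w * x[t - s - j] for j, w in enumerate(omega) if t - s - j >= 0)
        feedback = sum(d * z[t - i] for i, d in enumerate(delta, start=1) if t - i >= 0)
        z[t] = num - feedback
    return z

t = np.arange(60)
step = (t >= 10).astype(float)    # input switches on at t = 10 and stays on
pulse = (t == 10).astype(float)   # input is on only at t = 10

z_step = intervention_response(step, omega=[2.0], delta=[-0.5], s=2)
z_pulse = intervention_response(pulse, omega=[2.0], delta=[-0.5], s=2)

print("step response :", np.round(z_step[10:18], 3))
print("pulse response:", np.round(z_pulse[10:18], 3))
```

With `delta=[]` the response simply tracks the shifted input scaled by $\omega_0$, which is the abrupt, permanent effect; a nonzero $\delta_1$ spreads the effect over time.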

Cross-correlations are used to diagnose intervention models. The cross-correlation function (CCF) is a plot of the correlation of $x$ and $y$ versus lag. The CCF can be misleading if $x$ or $y$ is serially correlated. Thus $x$ and $y$ are usually fit to noise models (or "filtered") before calculating the CCF. The lag of the significant CCF term at the shortest lag indicates $s$, the shift of the intervention effect. If your intervention model has captured all the information about $y$ that is available in $x$, then the residuals of the model will have no significant cross-correlations with $x$.

Fitting intervention models follows a sequential approach similar to the one used to fit univariate time series:

1. Inspect the Y and X time series to see if they are stationary. If they are not stationary, make some appropriate transformations.
2. Calculate the ACF and PACF for Y, and the CCF for Y versus X.
3. Guess a form for the model. Start with a simple model. Add new parameters one at a time, only as needed.
4. Fit the model, and calculate the AIC, the ACF and PACF for the residuals, and the CCF for the residuals versus X.
5. If the ACF, PACF, or CCF contains significant terms, return to step 3 and make a different guess for the form of the model. Add parameters one at a time.
6. If the ACF, PACF, and CCF do not contain significant terms, stop.
7. A good model should have non-significant ACF and PACF for residuals, non-significant CCF for the residuals versus X, residuals that appear to be stationary and normally distributed, and a lower AIC than other models.
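A minimal sketch of filtering (often called prewhitening) before computing the CCF. It assumes an AR(1) noise model for $x$, estimated by least squares on the demeaned series, and applies that same filter to both $x$ and $y$. The simulated data have a true delay of $s = 2$ and a one-term numerator $\omega_0 = 3$; the CCF at lag $k$ is taken as the Pearson correlation of $x_{t-k}$ with $y_t$. Names and values are illustrative. In the unfiltered CCF, lags next to $s$ would typically also look large because $x$ is serially correlated; after filtering, the peak should stand out at lag 2.

```python
import numpy as np

def ccf(x, y, max_lag):
    """Correlation of x_{t-k} with y_t for k = 0..max_lag (y lagging x)."""
    n = len(x)
    return np.array([np.corrcoef(x[:n - k], y[k:])[0, 1] for k in range(max_lag + 1)])

rng = np.random.default_rng(5)
n, a, s, omega0 = 500, 0.7, 2, 3.0

# Serially correlated input: x_t = a*x_{t-1} + u_t
x = np.zeros(n)
u = rng.standard_normal(n)
for t in range(1, n):
    x[t] = a * x[t - 1] + u[t]

# Response: y_t = omega0 * x_{t-s} + noise
y = rng.standard_normal(n)
y[s:] += omega0 * x[:n - s]

# Estimate the AR(1) filter for x (demeaned, no intercept) and apply it to both series
xd, yd = x - x.mean(), y - y.mean()
a_hat = np.sum(xd[1:] * xd[:-1]) / np.sum(xd[:-1] ** 2)
x_f = xd[1:] - a_hat * xd[:-1]
y_f = yd[1:] - a_hat * yd[:-1]

bound = 2 / np.sqrt(len(x_f))
raw, filt = ccf(x, y, 5), ccf(x_f, y_f, 5)
print("raw CCF     :", np.round(raw, 2))
print("filtered CCF:", np.round(filt, 2))
print("significant filtered lags:", [k for k in range(6) if abs(filt[k]) > bound])
```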

REFERENCES

Box, G.E.P., G.M. Jenkins and G.C. Reinsel. 1994. Time Series Analysis: Forecasting and Control. Prentice-Hall, Englewood Cliffs, NJ.

Box, G.E.P. and G.C. Tiao. 1975. Intervention analysis with application to economic and environmental problems. J. Amer. Stat. Assoc. 70: 70-79.

Carpenter, S.R. 1993. Analysis of the ecosystem experiments. Chapter 3 in S.R. Carpenter and J.F. Kitchell (eds), The Trophic Cascade in Lakes. Cambridge Univ. Press, London.

Wei, W.W.S. 1990. Time Series Analysis. Addison-Wesley, Redwood City, California.
