Cisco MDS SAN Design Guide - 20121205 - v1.8

Cisco MDS SAN Design Guidelines

Version: 1.8

Owner: Jim Olson Author: Arthur Scrimo

Document: Global Storage Service Line Process

Title: Cisco MDS SAN Design Guidelines

Date: December 5, 2012

Table of Contents

- Cisco MDS SAN Design Guidelines
- Cisco MDS SAN Design Guide
- SAN Topology Guidance
- Considering and planning with SAN oversubscription
- Virtual Storage Area Networks (VSANs)
- Solution Design Example
- Security and Authentication
- Data Center Network Manager (DCNM)
- Conclusion

Document history

Document Location

The source of the document can be found in the Team Room, located at:

- Database Name: TBD
- Server Name: TBD
- File Name: Cisco MDS Design Guide 20121205 V1.8.doc

Please address any questions to:

Revision History

Date of next revision: TBD

Date of this revision: 8/16/2012

| Revision Number | Revision Date | Summary of Changes | Changes marked |
|---|---|---|---|
| 1.0 | 1/30/2012 | Initialize document creation | No |
| 1.1 | 1/31/2012 | Added the solution section | No |
| 1.4 | 2/7/2012 | Added Port Group Info | No |
| 1.6 | 2/22/2012 | Updated the IVR Section | No |
| 1.61 | 2/23/2012 | Added DS8800 support on page 15 | No |
| 1.7 | 8/16/2012 | Added the Security section | No |
| 1.8 | 12/5/2012 | Added Cabling, Licensing section | No |

Approvals

This document requires the following approvals:

| Name | Title |
|---|---|
| Jim Olson | Distinguished Engineer |

Distribution

This document has been distributed to:

| Name | Title |
|---|---|
| Jim Olson | Distinguished Engineer |
| Arthur Scrimo | Senior SAN Architect |
| Karen Haberli | Program Manager |

Cisco MDS SAN Design Guidelines

Purpose of this Document:

The purpose of this document is to describe and explain how to properly design and architect Cisco MDS Storage Area Network (SAN) fabrics for IBM deployments. It covers the best practice approach to architecture and focuses on the guidelines that have been proven over time to provide the most stable fabrics and the highest levels of redundancy.

Cisco MDS Series:

http://www.cisco.com/en/US/products/ps5990/index.html

Cisco MDS SAN Design Guide

Cisco Advanced SAN Design Using MDS 9500 Series Directors

http://www.cisco.com/en/US/prod/collateral/modules/ps5991/prod_white_paper0900aecd8044c807_ns512_Networking_Solutions_White_Paper.html

This Cisco white paper covers the more advanced topics in SAN design. Please review it and apply its principles to the fabrics that you support.

SAN Topology Guidance

Collapsed Core Topology

A common approach to fabric design in smaller fabrics is the collapsed core. This is a good approach if your port requirements are low and you do not need the expandability of the core/edge topology. A collapsed core simply means that the port count of the fabric fits within a single switch, so host and storage connections exist within the same physical switch. It can also be the foundation for a larger fabric as customer demand drives the port count and utilization up. To meet the demands of high availability and fault tolerance, fabrics are usually deployed in pairs that are not connected to each other, commonly known as dual redundant fabrics. This design provides fault tolerance and is standard in most highly available fabrics today. Even in a collapsed core it is important to deploy dual redundant fabrics.

Core/Edge Topology

This approach to SAN topology design is the most common and usually the most versatile for most of the accounts that IBM supports. The concept behind the core/edge topology is simply to put the slower, less demanding ports out on the edge and the higher speed, more demanding connections in the core. As you can see below, the lower speed servers are out on the edge and the higher speed devices are attached at the core of the fabric. It is important to understand your environment as well as you can so that each device is placed in the right part of the fabric. This topology allows the greatest flexibility when designing a SAN that must withstand rapid and unpredictable growth. It can also be extended quickly into a multi-tier core/edge design, in which additional switches are added to create a separate storage edge as well as a server edge. Remember that it is very important to deploy these topologies as two separate, fully isolated fabrics, so that if there is a catastrophic event in one fabric the customer's data can continue to flow and the applications can continue to function in the other fabric.

Fabric Inter Switch connections

In a core/edge topology it is really important to plan around oversubscription between the core and the edge. A typical and safe oversubscription ratio between the host ports at the edge and the ISLs to the core is about 12:1. This simply means that there needs to be one Inter Switch Link (ISL) for every 12 edge interfaces that connect to the core to reach their storage. This is a good starting point for many deployments, but it may need to be tuned based on the requirements. If you are in a highly virtualized environment with higher speeds and feeds, you may need a ratio lower than 12:1. The opposite can be true of lower speed devices, which can tolerate higher oversubscription.

Port channels should be used wherever possible to maintain the redundancy and efficiency of the connections between switches. A port channel bundles multiple ISLs into a single logical link, which makes effective use of those links and distributes traffic evenly across all ISLs in the port channel. Speed is also a major factor when working out port channel oversubscription rates. A maximum of 16 interfaces can be added to a port channel, and each of those interfaces should run at the same speed. Mixing interface speeds in the same port channel is not best practice.

Example: You have 384 host ports at the edge of the fabric, all running at 4 Gbps, and they reach storage resources in the core over 8 Gbps ISLs. To safely support these connections you need two port channels, each with eight ISLs. Here is how I worked that out. Each 8 Gbps ISL carries twice the bandwidth of a 4 Gbps host port, so 384 host ports at 4 Gbps are equivalent to 192 ports at 8 Gbps. At 12:1 oversubscription, 192 divided by 12 gives 16 ISLs. I then split those 16 ISLs into two port channels of eight so that the port channels themselves are redundant. Voilà!
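The arithmetic in the example can be sketched in a few lines of Python. This is only an illustration of the 12:1 rule of thumb described above; the function names and the default of two port channels are assumptions made for the sketch, not part of any Cisco tool.

```python
import math

def isls_required(host_ports, host_gbps, isl_gbps, oversubscription=12.0):
    """Return the number of ISLs needed between the edge and the core."""
    # Total bandwidth the edge host ports could offer, in Gbps.
    offered_gbps = host_ports * host_gbps
    # Bandwidth the ISLs must provide at the chosen oversubscription ratio.
    needed_gbps = offered_gbps / oversubscription
    return math.ceil(needed_gbps / isl_gbps)

def split_into_port_channels(isl_count, channels=2, max_members=16):
    """Spread ISLs as evenly as possible across port channels."""
    base, extra = divmod(isl_count, channels)
    sizes = [base + (1 if i < extra else 0) for i in range(channels)]
    if max(sizes) > max_members:
        raise ValueError("a port channel holds at most 16 interfaces")
    return sizes

isls = isls_required(host_ports=384, host_gbps=4, isl_gbps=8)
print(isls, split_into_port_channels(isls))  # 16 [8, 8]
```

Lowering the `oversubscription` argument for a heavily virtualized edge raises the ISL count accordingly.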

Data Protection Only Fabrics (Tape Fabrics)

As your environment continues to grow, at some point it starts to make sense to add an additional fabric so that you can move some of the backup connections into their own isolated fabric. This frees up port space on your primary fabrics as the demand for critical high speed ports grows with your business. In many cases, moving the tape or backup infrastructure into an isolated fabric provides the buffer of connections needed to move your business forward. Many customers find that the backup infrastructure, although very important in the overall data protection plan, is less critical than their core business systems. In this case, bringing in lower cost, smaller switches can be the right solution. Although many tape fabrics are deployed as a single fabric, it is recommended that tape fabrics be deployed in pairs as well. This is even more important if the tape fabrics are built from switches that are not enterprise class directors.

Considering and planning with SAN oversubscription

Regardless of what companies put on their marketing data sheets, all SAN switches are oversubscribed in some way. If they were not oversubscribed, the cost to deploy them would be out of reach for most consumers. When used properly, oversubscription can play a huge role in driving down the costs associated with deploying storage infrastructure. So we have a choice: we can either pretend that it does not exist or work within the limitations of the hardware and software. I have seen many deployments in which the administrators either do not know or do not understand the limitations of the hardware they are deploying and supporting. This section explains the considerations around oversubscription when deploying Cisco MDS director class switches.

Line Cards

Understanding your data requirements will help ensure that you select the right line cards for your account. Line cards are the heart of the MDS line of director class switches. For the MDS platform Cisco has created a line of modular line cards that can be inserted into the chassis. In this section I will explain the line cards most relevant to deployments within IBM.

Generation Three Line cards

Cisco MDS 9000 24-Port 8-Gbps Fibre Channel Switching Module

This is a high-performance line card with much lower oversubscription, which makes it a good fit for higher speed devices such as storage and high-performance, business-critical servers. It is best used in the core of the fabric, where it provides the performance required by bandwidth-hungry devices and fabric Inter Switch Links (ISLs). It has 192 Gbps of full-duplex bandwidth (96 Gbps egress + 96 Gbps ingress per line card).

| Product Name/Description | Number of Ports Per Port Group | Bandwidth Per Port Group | Maximum Bandwidth Per Port |
|---|---|---|---|
| 24-Port 8-Gbps Fibre Channel Switching Module | 3 | 12.8 Gbps | 8 Gbps |

Cisco MDS 9000 48-Port 8-Gbps Fibre Channel Switching Module

This is a lower-performance line card with much higher oversubscription, which makes it a good fit for servers, virtualization infrastructure, and lower speed tape devices. It is best used at the edge of the fabric, where it provides the port density required by many environments. It has 192 Gbps of full-duplex bandwidth (96 Gbps egress + 96 Gbps ingress per line card).

| Product Name/Description | Number of Ports Per Port Group | Bandwidth Per Port Group | Maximum Bandwidth Per Port |
|---|---|---|---|
| 48-Port 8-Gbps Fibre Channel Switching Module | 6 | 12.8 Gbps | 8 Gbps |

Cisco MDS 9000 18/4-Port Multiservice Module

This line card is a bit different in that it has both Fibre Channel and IP ports on the same card. It is very widely used in site-to-site replication for IBM Global Mirror and other replication technologies. It has 18 ports that can be used for 1/2/4 Gbps Fibre Channel and four 1 Gbps Gigabit Ethernet ports. The idea is that the Gigabit Ethernet ports connect to the Wide Area Network infrastructure for site-to-site replication using FCIP. This card also has compression technologies that allow you to better utilize your WAN links and decrease the time required to keep your data in sync between sites. This card is unique in terms of bandwidth: it provides 76.8 Gbps of full-duplex bandwidth (38.4 Gbps egress + 38.4 Gbps ingress).

| Product Name/Description | Number of Ports Per Port Group | Bandwidth Per Port Group | Maximum Bandwidth Per Port |
|---|---|---|---|
| 18-Port 4-Gbps Fibre Channel Switching Module with 4-Gigabit Ethernet Ports | 6 | 12.8 Gbps | 4 Gbps |

Generation Four Line cards

Cisco MDS 9000 32-Port 8-Gbps Advanced Fibre Channel Switching Module

This is the highest-performance line card that Cisco currently offers. It is best used in the core of the fabric, where it provides the performance required by bandwidth-hungry devices and fabric Inter Switch Links (ISLs). It has 512 Gbps of full-duplex bandwidth (256 Gbps egress + 256 Gbps ingress). With the Supervisor 2 and Fabric 3 modules, which let the line cards use the increased slot bandwidth, this card is not oversubscribed even with all ports running at 8 Gbps. With the Fabric 3 module it can support up to 32 Gbps in each port group (four ports per port group, with eight port groups per line card).

| Product Name/Description | Number of Ports Per Port Group | Bandwidth Per Port Group | Maximum Bandwidth Per Port |
|---|---|---|---|
| 32-Port 8-Gbps Advanced Fibre Channel Switching Module | 4 | 32 Gbps | 8 Gbps |

Cisco MDS 9000 48-Port 8-Gbps Advanced Fibre Channel Switching Module

This is still a very high-performance line card compared to the Generation three line cards, but it includes some built-in bandwidth oversubscription. It can be used in the core, but it is best suited for the edge of the fabric. It has 512 Gbps of full-duplex bandwidth (256 Gbps egress + 256 Gbps ingress). When used with the Supervisor 2 and Fabric 3 modules, it can support up to 32 Gbps in each port group (six ports per port group, with eight port groups per line card).

| Product Name/Description | Number of Ports Per Port Group | Bandwidth Per Port Group | Maximum Bandwidth Per Port |
|---|---|---|---|
| 48-Port 8-Gbps Advanced Fibre Channel Switching Module | 6 | 32 Gbps | 8 Gbps |

Here is a list of all of the current line cards and their functions: http://www.cisco.com/en/US/products/ps5991/prod_module_series_home.html

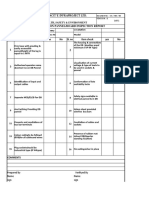

Port groups

So let's get right down to it: this is one of the most important considerations when it comes to properly deploying resilient and highly sustainable SAN architectures. If you don't know what port groups are and how they work, this section is exactly what you need to keep your SAN out of the next critical situation. Port groups, as the name suggests, are groups of ports that share a set amount of bandwidth. The Generation three (GEN3) line cards have a maximum of 12.8 Gbps per port group. That means that all of the ports within a port group have to share that 12.8 Gbps regardless of the speed at which they can connect to the fabric.

The Generation three 24-port Cisco line card has eight port groups, and each port group is comprised of three ports. Port group one contains ports 0, 1, and 2, port group two contains ports 3, 4, and 5, and so on up to port group eight, which contains ports 21, 22, and 23. Each of these port groups is allocated a maximum of 12.8 Gbps of bandwidth, so it is very important that you understand what you are connecting to each port group and what performance characteristics it may have. The 48-port line card is very similar, but each port group contains six ports instead of three. There are still eight port groups, but now six ports have to share that 12.8 Gbps. It is very important to consider which types of devices you connect to the 48-port line card and at which speeds.

Generation four line cards have either no oversubscription or much lower oversubscription (see the line card section above) than the previous Generation two and three line cards. This makes design and architecture much easier, but it still requires consideration to keep everything working optimally. Here is a picture of a port group on a 48 port Generation 2 line card so that you can visualize the breakdown:

Keep in mind every card is different and needs to be considered carefully in your overall design. Here is a good link that includes all of the current line cards and the port group information. http://www.cisco.com/en/US/docs/switches/datacenter/mds9000/sw/5_2/configuration/guides/int/nxos/gen2.html#wp1150320
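To make the port group arithmetic concrete, here is a minimal Python sketch. It assumes the numbering used in this section (ports counted from 0, port groups counted from 1, consecutive ports per group); the function names are made up for illustration.

```python
def port_group_of(port_index, ports_per_group):
    """Map a zero-based port index to its one-based port group number."""
    return port_index // ports_per_group + 1

def worst_case_oversubscription(ports_per_group, port_gbps, group_gbps=12.8):
    """Ratio of what the ports could ask for to what the group can deliver."""
    return ports_per_group * port_gbps / group_gbps

# 24-port Gen3 card: three ports per group, ports 21-23 land in group eight.
print(port_group_of(0, 3), port_group_of(23, 3))  # 1 8

# All ports logged in at 8 Gbps on the 24-port and 48-port Gen3 cards.
print(f"{worst_case_oversubscription(3, 8):.3f}:1")  # 1.875:1
print(f"{worst_case_oversubscription(6, 8):.3f}:1")  # 3.750:1
```

The ratio doubles on the 48-port card because twice as many ports share the same 12.8 Gbps.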

Port Speeds

These days everyone thinks that because a port can log in at 8 Gbps or faster, we should allow that to happen. This is a big misconception, and it can be the cause of a lot of performance issues in SAN fabrics. You can use port speed to limit the amount of bandwidth a device can use, which makes it a critical part of proper SAN design. Most devices that connect to storage networks cannot utilize the bandwidth they are provided. In many cases servers and tape devices use less than 40% of the available bandwidth. Connecting a device that has a peak performance of 80 MBps at 8 Gbps is a big waste of potential bandwidth. In this case the port probably only needs to be connected at 2 Gbps, which would still allow the server to grow over time as needed. Coming up with standards for your account is a good idea and should be part of any deployment plan. Here is a good rule of thumb for many accounts. Keep in mind that your account may have a business need for certain types of devices, but these device types are fairly common in most of the accounts that I have worked with.

| Device | Port speed and rate mode |
|---|---|
| LTO-4 Tape Drives (120 MB/s) | 2 Gbps Shared or 4 Gbps with compression |
| LTO-5 Tape Drives (180 MB/s) | 2 Gbps Shared or 4 Gbps with compression |
| IBM SVC (CG8 Nodes) | 8 Gbps Dedicated |
| IBM XIV (Gen 3) | 8 Gbps Dedicated |
| IBM DS8700 | 4 Gbps Dedicated |
| IBM DS8800 | 8 Gbps Dedicated |
| Lower speed Servers | 2 Gbps Shared |
| Higher speed Servers | 4 Gbps Shared |
| Virtualization Servers | 8 Gbps Shared |
| ISLs | 8 Gbps Dedicated |
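The reasoning behind picking a port speed can be sketched as follows. The sketch assumes the nominal Fibre Channel throughput of roughly 100 MB/s per 1 Gbps of link speed in each direction, and a headroom factor of 2 for growth; the headroom value and the function name are assumptions for illustration, not account standards.

```python
# Nominal one-way Fibre Channel throughput per link speed, in MB/s.
FC_THROUGHPUT_MBPS = {1: 100, 2: 200, 4: 400, 8: 800}

def smallest_port_speed(peak_mbps, headroom=2.0):
    """Return the lowest link speed (Gbps) that covers peak load plus headroom."""
    needed = peak_mbps * headroom
    for gbps in sorted(FC_THROUGHPUT_MBPS):
        if FC_THROUGHPUT_MBPS[gbps] >= needed:
            return gbps
    raise ValueError("peak load exceeds the fastest speed in the table")

# The server from the text: 80 MB/s peak fits a 2 Gbps port with room to grow.
print(smallest_port_speed(80))  # 2
```

At 8 Gbps the same server would use about 10% of the link, which is the waste the text warns about.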

Cisco has a feature in the port settings called Rate Mode that lets the administrator decide whether a port operates in shared mode or dedicated mode. By default all ports are in shared mode on most of the line cards that Cisco makes. Shared mode simply means that all ports in the port group share the available bandwidth, and when the maximum of the port group is reached each port is throttled to stay within the limits of the port group. Shared mode can work well in an environment in which some devices in a port group have a bursty pattern to their bandwidth needs. Dedicated mode allows the administrator to dedicate bandwidth to a port within a port group. This means that if the administrator sets one port in a port group to 8 Gbps dedicated, the other two ports in the port group share the remaining 4.8 Gbps (on a 24-port line card). This is a very important consideration and should drive your Cisco SAN fabric designs. The safest design is to dedicate all three ports in each port group at 4 Gbps, which yields the most predictable bandwidth usage. If you decide to put three 8 Gbps ports in port group one, there is a good likelihood that you will reach the maximum bandwidth very quickly, as these three ports have the potential to consume 24 Gbps of the available 12.8 Gbps. This is probably not a good option and will most likely cause big performance problems down the road. Remember that just because a port can log in at 8 Gbps does not mean that the device will use all 8 Gbps, but it does mean that it could! It is really important to understand what you are connecting and how that connection may impact the available bandwidth.
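The dedicated versus shared budget for a port group is simple subtraction, sketched below in Python. The function name is hypothetical, and the 12.8 Gbps default applies to the Generation three cards discussed here.

```python
def shared_bandwidth_left(dedicated_gbps, group_gbps=12.8):
    """Bandwidth (Gbps) left for shared-mode ports after dedicated reservations."""
    left = round(group_gbps - sum(dedicated_gbps), 1)
    if left < 0:
        raise ValueError("dedicated reservations exceed the port group bandwidth")
    return left

# One port dedicated at 8 Gbps: the other ports share what remains.
print(shared_bandwidth_left([8]))        # 4.8

# The "safest" layout on a 24-port card: three ports dedicated at 4 Gbps.
print(shared_bandwidth_left([4, 4, 4]))  # 0.8
```

Passing three 8 Gbps dedicated reservations raises an error in this sketch, which mirrors the 24 Gbps against 12.8 Gbps mismatch described above.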

Port Licensing

Many of the smaller switches support on-demand port activation licensing. You can expand your SAN connectivity as needed by purchasing and installing additional port licenses. By default, all ports are eligible for license activation. This technology is focused on the small top-of-rack switches that are designed to grow over time as required. Here is an example: on the Cisco MDS 9124 Switch, the first eight ports are licensed by default. You are not required to perform any tasks beyond the default configuration unless you prefer to immediately activate additional ports, make ports ineligible, or move port licenses. Figure 11-1 below shows the ports that are licensed by default for the Cisco MDS 9124 Switch.

Figure 11-1 Cisco MDS 9124 Switch Default Port Licenses (fc1/1 - fc1/8)

If you need additional connectivity, you can activate additional ports in 8-port increments with each on-demand port activation license, up to a total of 24 ports. On the Cisco MDS 9134 Switch, the first 24 ports that can operate at 1 Gbps, 2 Gbps, or 4 Gbps are licensed by default. If you need additional connectivity, you can activate the remaining eight ports with one on-demand port activation license. A separate 10G license file is required to activate the remaining two 10-Gbps ports.

Figure 11-2 Cisco MDS 9134 Switch Default Port Licenses (fc1/1 - fc1/24)
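For planning purposes, the number of on-demand licenses for an MDS 9124 follows directly from the figures above (8 ports licensed by default, 8-port increments, 24 ports in total). A minimal sketch, with a made-up function name:

```python
import math

def activation_licenses_9124(ports_needed, default_ports=8, increment=8, max_ports=24):
    """Number of 8-port on-demand activation licenses needed on an MDS 9124."""
    if ports_needed > max_ports:
        raise ValueError("the MDS 9124 has at most 24 ports")
    extra_ports = max(0, ports_needed - default_ports)
    return math.ceil(extra_ports / increment)

print(activation_licenses_9124(8))   # 0
print(activation_licenses_9124(12))  # 1
print(activation_licenses_9124(24))  # 2
```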

Cable

As switch technology continues to improve, so does the underlying cable technology. It is important to ensure that your aging fiber infrastructure can support the speeds and feeds required by today's technologies before you upgrade. Here is a quick table:

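Maximum Channel Length (meters)

| Application | Wavelength (nm) | OM1 | OM2 | OM3 | OM4 |
|---|---|---|---|---|---|
| Fibre Channel 4 Gb/s | 850 | 70 | 150 | 380 | 400 |
| Fibre Channel 8 Gb/s | 850 | 21 | 50 | 150 | 200 |
| Fibre Channel 16 Gb/s | 850 | 15 | 35 | 100 | 130 |
| Ethernet 1 Gb/s | 850 | 275 | 550 | 800 | 1100 |
| Ethernet 10 Gb/s | 850 | 33 | 82 | 300 | 550 |
| Ethernet 40 & 100 Gb/s | 850 | n/s | n/s | 100 | 125 |
| Ethernet 1 Gb/s | 1300 | 550 | 550 | 550 | 550 |
| Ethernet 10 Gb/s | 1300 | 220 (10GBaseLRM), 300 (10GBaseLX4) | 220 (10GBaseLRM), 300 (10GBaseLX4) | 220 (10GBaseLRM), 300 (10GBaseLX4) | 220 (10GBaseLRM), 300 (10GBaseLX4) |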
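One way to use the cable table during an upgrade review is as a lookup: given the fiber grade and the length of a run, find the fastest Fibre Channel speed it supports. The sketch below copies only the Fibre Channel rows at 850 nm from the table above; the function name is made up for illustration.

```python
# Maximum channel length in meters at 850 nm, Fibre Channel rows only,
# copied from the cable table above.
FC_MAX_LENGTH_M = {
    4:  {"OM1": 70, "OM2": 150, "OM3": 380, "OM4": 400},
    8:  {"OM1": 21, "OM2": 50,  "OM3": 150, "OM4": 200},
    16: {"OM1": 15, "OM2": 35,  "OM3": 100, "OM4": 130},
}

def fastest_supported_speed(fiber, run_length_m):
    """Return the fastest FC speed (Gbps) the run supports, or None."""
    speeds = [speed for speed, limits in FC_MAX_LENGTH_M.items()
              if run_length_m <= limits[fiber]]
    return max(speeds) if speeds else None

print(fastest_supported_speed("OM2", 60))   # 4
print(fastest_supported_speed("OM3", 90))   # 16
print(fastest_supported_speed("OM1", 100))  # None
```

A 60 meter OM2 run that works today at 4 Gbps would need new fiber before the ports could move to 8 Gbps.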

Admin Down

No, this does not mean that one of your most trusted resources has just fallen down on the job. It refers to a practice that you should take advantage of to protect the other ports within a port group. Once you have reached the bandwidth limit of a port group, it is a good idea to be proactive and take the remaining free ports out of the rotation. If four ports in a six-port port group are already using your 12 Gbps, adding another device to that port group could impact all five devices once it is connected. Sometimes it is best to leave those two extra ports free and administratively shut them down so that they cannot impact the four devices already in use. It is important to document these ports, either on your switches or in your documentation, so that you remember that they are down for a good reason. Here is a good example of how I have seen this documented on some accounts:

| Port Group | Interface | Service Status | Connection Speed | Actual Speed | VSAN | End Device | Note |
|---|---|---|---|---|---|---|---|
| P0 | fc1/1 | in | 4 Ded | 4.00 | 101,401 | CIS01 FC1/1 ISL | ISL to AZ18CIS01 |
| P0 | fc1/2 | in | 4 Ded | 4.00 | 701,2301 | CIS01 FC1/18 ISL | ISL to AZ18CIS01 701/2301 |
| P0 | fc1/3 | in | 4 Ded | 4.00 | 101,401 | CIS03 FC5/2 ISL | ISL to AZ18CIS03 |
| P0 | fc1/4 | out | 0 | 0.00 | 1 | Reserved for Bandwidth | Out of Service for Bandwidth |
| P0 | fc1/5 | out | 0 | 0.00 | 1 | Reserved for Bandwidth | Out of Service for Bandwidth |
| P0 | fc1/6 | out | 0 | 0.00 | 1 | Reserved for Bandwidth | Out of Service for Bandwidth |
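The decision to take free ports out of service can be expressed as a small check. This Python sketch assumes a port group is described as a list of (interface, committed Gbps) pairs with 0 for unused ports, and treats anything under 1 Gbps of leftover bandwidth as not worth offering to a new device; both the data shape and that threshold are assumptions for illustration.

```python
def ports_to_reserve(port_group, group_gbps=12.8, min_useful_gbps=1.0):
    """Return the unused interfaces to shut down once the group is full."""
    committed = sum(gbps for _, gbps in port_group)
    if group_gbps - committed >= min_useful_gbps:
        return []  # Still room for another device in this port group.
    return [name for name, gbps in port_group if gbps == 0]

documented_group = [
    ("fc1/1", 4), ("fc1/2", 4), ("fc1/3", 4),
    ("fc1/4", 0), ("fc1/5", 0), ("fc1/6", 0),
]
print(ports_to_reserve(documented_group))  # ['fc1/4', 'fc1/5', 'fc1/6']
```

With three ISLs dedicated at 4 Gbps, only 0.8 Gbps is left, so the three free ports are the ones to mark "Out of Service for Bandwidth".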

Port selection, does it really matter?

There are people on both sides of the question of where and how to connect devices. I have spent the last few pages discussing how to connect, and now I want to discuss where. Placing devices on the right line card really matters for fault tolerance and fault isolation. Spanning port channels across line cards helps ensure that the environment continues to function if a line card fails. If you put all of your port channels on the same line card and you lose that card, your entire fabric may fail, which could severely impact the customer and their business. Servers with multiple Host Bus Adapters (HBAs) in each fabric should also span line cards for the same reason. This simply means that, in each fabric, the first connection for a server may go to line card three and the second connection to line card four. You should always be thinking about how to decrease the impact of a component failure wherever possible.
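A quick way to see why spanning line cards matters is to count what survives a card failure. The sketch below assigns port channel members to slots round-robin; the slot numbers and function names are illustrative assumptions, not a Cisco placement rule.

```python
def spread_members(member_count, slots):
    """Assign port channel members to line card slots round-robin."""
    return [slots[i % len(slots)] for i in range(member_count)]

def surviving_members(assignment, failed_slot):
    """Count the members still up after one line card fails."""
    return sum(1 for slot in assignment if slot != failed_slot)

assignment = spread_members(8, slots=[3, 4])
print(assignment)                        # [3, 4, 3, 4, 3, 4, 3, 4]
print(surviving_members(assignment, 3))  # 4
print(surviving_members([3] * 8, 3))     # 0
```

Spread across two cards, the port channel keeps half of its members after a failure; placed on one card, it loses all of them.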

SAN Naming and Operational considerations

It is recommended that fabrics are named in a logical manner. Fabric A should contain the odd domain IDs, odd VSANs, odd tape drives, and odd storage connections. Fabric B should contain all of the even connections. This is a good habit to get into because it keeps the deployment model consistent. It won't always work, depending on the customer naming scheme or existing infrastructure, but it should be used wherever possible.

Virtual Storage Area Networks (VSANs)

What the?

This is a topic that I am sure you have heard around every corner in the storage world. The concept has created a lot of buzz in the industry and, with it, a lot of confusion. VSANs are simply virtual fabrics on top of a physical platform. The idea is that within a physical director class chassis you can create fabric-level isolation. This virtual separation allows isolation between production, test, development, tape, and business units as a customer might require. Instead of deploying unique hardware for each area of the business that needs separation, it is much easier to deploy a director class chassis and share the hardware and support costs across multiple business units or functions while maintaining logical separation. This Cisco technology allows the administrator to decide at the port level which port resides in which VSAN. This provides a very granular degree of virtualization and a very effective use of resources.

VSAN Crazy

This feature and its flexibility can get you in trouble if you are not careful. I would be careful about slicing and dicing your fabrics into hundreds of VSANs. Just like anything else, the more fabrics you have, the more you have to manage. Keep in mind that by default a VSAN cannot communicate with other VSANs. So, if you have all of your storage ports in VSAN 10, only ports in VSAN 10 can get to the storage. VSANs must be considered unique fabrics even if they reside on the same physical fabric. If you have a storage device that you need in all ten of your new VSANs, you would have to provide physical connectivity into all ten of those VSANs. Unless, of course, you use IVR.

IVR

Inter-VSAN Routing (IVR) is a technology that allows you to move data between VSANs without having to create a physical connection between the fabrics. This technology is not very widely used, for a few reasons. IVR can be pretty expensive and is available as part of the enterprise license. IVR is typically used in Fibre Channel over IP (FCIP) implementations, and comes bundled with the FCIP supported line cards (SSN and 18/4). The typical approach in an FCIP design is to use transit VSANs, which means that local VSANs do not have to merge into a single fabric across the two locations connected by an IP link. Transit VSANs are used to minimize impacts to the production VSANs. This way, if anything happens on the WAN link between the two sites, the issues are not propagated to the production VSANs. See the example below:

Here is a brief paper on IVR from Cisco's website: http://www.cisco.com/en/US/prod/collateral/ps4159/ps6409/ps4358/prod_white_paper0900aecd80285738_ns514_Networking_Solutions_White_Paper.html
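The isolation rule for VSANs can be modeled in a few lines. This is a deliberately simplified sketch: ports talk only within their own VSAN unless an IVR relationship exists, and IVR is modeled here as whole VSAN pairs, whereas real IVR exposes only specific zoned devices. The port names, VSAN numbers, and function name are hypothetical.

```python
def can_communicate(port_a, port_b, port_vsan, ivr_pairs=frozenset()):
    """True if two ports share a VSAN or their VSANs are joined by IVR."""
    vsan_a, vsan_b = port_vsan[port_a], port_vsan[port_b]
    if vsan_a == vsan_b:
        return True
    # Simplification: treat IVR as linking two whole VSANs.
    return frozenset((vsan_a, vsan_b)) in ivr_pairs

port_vsan = {"fc1/1": 10, "fc1/2": 10, "fc2/1": 20}

print(can_communicate("fc1/1", "fc1/2", port_vsan))  # True
print(can_communicate("fc1/1", "fc2/1", port_vsan))  # False
print(can_communicate("fc1/1", "fc2/1", port_vsan,
                      ivr_pairs={frozenset((10, 20))}))  # True
```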

Properly using VSANs

The best approach is usually the simplest approach. I suggest coming up with a logical and practical separation and keeping the implementation as small as you can. Keeping your business units or business functions separated is a good idea, but don't go crazy. I always try to keep my VSAN count to the absolute minimum, although your design may require more than the norm. A good rule of thumb is to keep the number of VSANs under 10 in each physical fabric. Put your backup VSAN separate from your production VSAN and put Dev/Test in its own VSAN as well. This way, if someone makes a fatal mistake in the Dev/Test VSAN, the only thing impacted is Dev/Test. VSANs are a great technology and should almost always be used, but the ability to use them is not a license to overuse them. Here is a good example of a proper VSAN breakdown:

| VSAN Number | VSAN Use | Port Channels | Fabric |
|---|---|---|---|
| 10 | Production | 1,2 | A |
| 20 | Backup | 3 | A |
| 30 | Business Critical | 4 | A |
| 40 | Development | 5 | A |
| 11 | Production | 1,2 | B |
| 21 | Backup | 3 | B |
| 31 | Business Critical | 4 | B |
| 41 | Development | 5 | B |

Solution Design Example

I think it would be valuable to walk through a real world example and using the design principles above come up with a good solution. I will list out the requirements and then walk you through selecting the right line cards and conceptually walk through the basic design. Customer Requirements: 40 Windows servers all dual attached 10 Windows DB server all dual attached 25 High End IBM AIX Database Servers all dual attached 5 ESXi Hosts each with 10 VMs all dual attached 16 LTO5 Tape Drives for backup and recovery 12 DS8700 Storage Connections High Availability is important to this customer as this is their production environment 50% growth over the next 24 months for the SAN connections

The Solution

This solution fits into a dual collapsed core topology, which allows for future growth and still meets the customer's HA requirements.

Attach all AIX hosts at 4 Gbps shared in each port group
Attach all ESX hosts at 4 Gbps shared in each port group
Attach Windows servers at 2 Gbps shared
Attach Windows DB servers at 4 Gbps shared
Attach tape drives at 2 Gbps shared
Connect storage at 8 Gbps dedicated
Qty 2 Cisco MDS 9513 Directors (one per fabric)
Qty 2 24-port Generation 3 line cards per fabric (4 line cards in total)
Qty 2 48-port Generation 3 line cards per fabric (4 line cards in total)
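A quick way to sanity-check the line card selection is to count ports per fabric against the requirements. This is a rough sketch under stated assumptions: every dual-attached host uses one port in each fabric, and the tape drives and storage connections are split evenly across the two fabrics. The variable names are illustrative only.

```python
import math

# Hosts from the customer requirements. Each host is dual attached,
# so it uses one port in each of the two fabrics.
HOSTS = {"Windows": 40, "Windows DB": 10, "AIX": 25, "ESXi": 5}

# Assumption: tape and storage connections are split evenly across fabrics.
TAPE_DRIVES = 16
STORAGE_CONNECTIONS = 12
FABRICS = 2
GROWTH = 0.50  # 50% growth over the next 24 months

# Line cards selected per fabric: two 24-port and two 48-port modules.
LINE_CARDS_PER_FABRIC = [24, 24, 48, 48]


def ports_needed_per_fabric():
    """Day-one port count for a single fabric."""
    host_ports = sum(HOSTS.values())
    tape_ports = TAPE_DRIVES // FABRICS
    storage_ports = STORAGE_CONNECTIONS // FABRICS
    return host_ports + tape_ports + storage_ports


day_one = ports_needed_per_fabric()
with_growth = math.ceil(day_one * (1 + GROWTH))
capacity = sum(LINE_CARDS_PER_FABRIC)

print(f"Day-one ports per fabric:   {day_one}")
print(f"Ports after 50% growth:     {with_growth}")
print(f"Ports installed per fabric: {capacity}")
```

Under these assumptions the count comes to 94 ports per fabric on day one and 141 after growth, against 144 installed ports. This is a raw port count only; ports taken out of service to protect bandwidth in a port group reduce the usable number.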

Here is the layout that was created to support these requirements for the first fabric. Keep in mind the second fabric would be a mirror of fabric 1:

Cisco MDS 9513
Name: Switch 1   WWN:   IP Address: 10.6.210.x   Domain ID:

Speed codes: S = shared, D = dedicated (8D is 8 Gbps dedicated, 4S is 4 Gbps shared).

Slot 1: 1/2/4/8 Gbps 24-Port FC Module, 12.8 Gb/s per port group (Gen3)

Area  PG   Port    Status  Type  Speed  Name / Alias / Zone           VSAN  Description
1     PG0  fc1/1   Online  F     8D     Storage 1                     10    XIV
2     PG0  fc1/2   Online  F     4S     AIX Server 1                  10    AIX Server
3     PG0  fc1/3   -       -     -      Out of Service for Bandwidth  -     -
4     PG1  fc1/4   Online  F     4S     AIX Server 2                  10    AIX Server
5     PG1  fc1/5   Online  F     4S     AIX Server 3                  10    AIX Server
6     PG1  fc1/6   Online  F     4S     AIX Server 4                  10    AIX Server
7     PG2  fc1/7   Online  F     8D     Storage 2                     10    XIV
8     PG2  fc1/8   Online  F     4S     AIX Server 5                  10    AIX Server
9     PG2  fc1/9   -       -     -      Out of Service for Bandwidth  -     -
10    PG3  fc1/10  Online  F     8D     Storage 3                     10    XIV
11    PG3  fc1/11  Online  F     4S     AIX Server 6                  10    AIX Server
12    PG3  fc1/12  -       -     -      Out of Service for Bandwidth  -     -
13    PG4  fc1/13  Online  F     4S     AIX Server 13                 10    AIX Server
14    PG4  fc1/14  Online  F     4S     AIX Server 14                 10    AIX Server
15    PG4  fc1/15  Online  F     4S     AIX Server 15                 10    AIX Server
16    PG5  fc1/16  Online  F     4S     AIX Server 16                 10    AIX Server
17    PG5  fc1/17  Online  F     4S     AIX Server 17                 10    AIX Server
18    PG5  fc1/18  Online  F     4S     AIX Server 18                 10    AIX Server
19    PG6  fc1/19  Online  F     4S     AIX Server 25                 10    AIX Server
20    PG6  fc1/20  Online  F     8S     ESX Server 1                  10    ESX Server
21    PG6  fc1/21  -       -     -      Out of Service for Bandwidth  -     -
22    PG7  fc1/22  Online  F     8S     ESX Server 2                  10    ESX Server
23    PG7  fc1/23  Online  F     8S     ESX Server 3                  10    ESX Server
24    PG7  fc1/24  -       -     -      Out of Service for Bandwidth  -     -

Slot 2: 1/2/4/8 Gbps 24-Port FC Module, 12.8 Gb/s per port group (Gen3)

Area  PG   Port    Status  Type  Speed  Name / Alias / Zone           VSAN  Description
25    PG0  fc2/1   Online  F     8D     Storage 4                     10    XIV
26    PG0  fc2/2   Online  F     4S     AIX Server 7                  10    AIX Server
27    PG0  fc2/3   -       -     -      Out of Service for Bandwidth  -     -
28    PG1  fc2/4   Online  F     4S     AIX Server 8                  10    AIX Server
29    PG1  fc2/5   Online  F     4S     AIX Server 9                  10    AIX Server
30    PG1  fc2/6   Online  F     4S     AIX Server 10                 10    AIX Server
31    PG2  fc2/7   Online  F     8D     Storage 5                     10    XIV
32    PG2  fc2/8   Online  F     4S     AIX Server 11                 10    AIX Server
33    PG2  fc2/9   -       -     -      Out of Service for Bandwidth  -     -
34    PG3  fc2/10  Online  F     8D     Storage 6                     10    XIV
35    PG3  fc2/11  Online  F     4S     AIX Server 12                 10    AIX Server
36    PG3  fc2/12  -       -     -      Out of Service for Bandwidth  -     -
37    PG4  fc2/13  Online  F     4S     AIX Server 19                 10    AIX Server
38    PG4  fc2/14  Online  F     4S     AIX Server 20                 10    AIX Server
39    PG4  fc2/15  Online  F     4S     AIX Server 21                 10    AIX Server
40    PG5  fc2/16  Online  F     4S     AIX Server 22                 10    AIX Server
41    PG5  fc2/17  Online  F     4S     AIX Server 23                 10    AIX Server
42    PG5  fc2/18  Online  F     4S     AIX Server 24                 10    AIX Server
43    PG6  fc2/19  Online  F     8S     ESX Server 4                  10    ESX Server
44    PG6  fc2/20  Online  F     8S     ESX Server 5                  10    ESX Server
45    PG6  fc2/21  -       -     -      Out of Service for Bandwidth  -     -

Areas 46 to 48 (PG7, fc2/22 to fc2/24): not assigned.

Slot 3: 1/2/4/8 Gbps 48-Port FC Module, 12.8 Gb/s per port group (Gen3)

Area  PG   Port    Status  Type  Speed  Name / Alias / Zone           VSAN  Description
49    PG0  fc3/1   Online  F     2S     Windows Server 1              20    2008 Server
50    PG0  fc3/2   Online  F     2S     Windows Server 2              10    2008 Server
51    PG0  fc3/3   Online  F     2S     Windows Server 3              10    2008 Server
52    PG0  fc3/4   Online  F     2S     Windows Server 4              10    2008 Server
53    PG0  fc3/5   Online  F     2S     LTO Tape 2                    20    LTO 5
54    PG0  fc3/6   Online  F     2S     LTO Tape 4                    20    LTO 5
55    PG1  fc3/7   Online  F     2S     Windows Server 5              10    2008 Server
56    PG1  fc3/8   Online  F     2S     Windows Server 6              10    2008 Server
57    PG1  fc3/9   Online  F     2S     Windows Server 7              10    2008 Server
58    PG1  fc3/10  Online  F     2S     Windows Server 8              10    2008 Server
59    PG1  fc3/11  Online  F     2S     LTO Tape 6                    20    LTO 5
60    PG1  fc3/12  Online  F     2S     LTO Tape 8                    20    LTO 5
61    PG2  fc3/13  Online  F     2S     Windows Server 17             20    2008 Server
62    PG2  fc3/14  Online  F     2S     Windows Server 18             10    2008 Server
63    PG2  fc3/15  Online  F     2S     Windows Server 19             10    2008 Server
64    PG2  fc3/16  Online  F     2S     Windows Server 20             10    2008 Server
65    PG2  fc3/17  Online  F     4S     Windows DB Server 1           10    Win DB Server
66    PG2  fc3/18  Online  F     4S     Windows DB Server 2           10    Win DB Server
67    PG3  fc3/19  Online  F     2S     Windows Server 21             10    2008 Server
68    PG3  fc3/20  Online  F     2S     Windows Server 22             10    2008 Server
69    PG3  fc3/21  Online  F     2S     Windows Server 23             10    2008 Server
70    PG3  fc3/22  Online  F     2S     Windows Server 24             10    2008 Server
71    PG3  fc3/23  Online  F     4S     Windows DB Server 3           10    Win DB Server
72    PG3  fc3/24  Online  F     4S     Windows DB Server 4           10    Win DB Server
73    PG4  fc3/25  Online  F     2S     Windows Server 33             10    2008 Server
74    PG4  fc3/26  Online  F     2S     Windows Server 34             10    2008 Server
75    PG4  fc3/27  Online  F     2S     Windows Server 35             10    2008 Server
76    PG4  fc3/28  Online  F     2S     Windows Server 36             10    2008 Server
77    PG4  fc3/29  Online  F     2S     Windows Server 37             10    2008 Server
78    PG4  fc3/30  Online  F     2S     Windows Server 38             10    2008 Server
79    PG5  fc3/31  Online  F     2S     Windows Server 39             10    2008 Server
80    PG5  fc3/32  Online  F     2S     Windows Server 40             10    2008 Server

Areas 81 to 96 (rest of PG5, PG6 and PG7, fc3/33 to fc3/48): not assigned.

Slot 4: 1/2/4/8 Gbps 48-Port FC Module, 12.8 Gb/s per port group (Gen3)

Area  PG   Port    Status  Type  Speed  Name / Alias / Zone           VSAN  Description
97    PG0  fc4/1   Online  F     2S     Windows Server 9              20    2008 Server
98    PG0  fc4/2   Online  F     2S     Windows Server 10             10    2008 Server
99    PG0  fc4/3   Online  F     2S     Windows Server 11             10    2008 Server
100   PG0  fc4/4   Online  F     2S     Windows Server 12             10    2008 Server
101   PG0  fc4/5   Online  F     2S     LTO Tape 10                   20    LTO 5
102   PG0  fc4/6   Online  F     2S     LTO Tape 12                   20    LTO 5
103   PG1  fc4/7   Online  F     2S     Windows Server 13             20    2008 Server
104   PG1  fc4/8   Online  F     2S     Windows Server 14             10    2008 Server
105   PG1  fc4/9   Online  F     2S     Windows Server 15             10    2008 Server
106   PG1  fc4/10  Online  F     2S     Windows Server 16             10    2008 Server
107   PG1  fc4/11  Online  F     2S     LTO Tape 14                   20    LTO 5
108   PG1  fc4/12  Online  F     2S     LTO Tape 16                   20    LTO 5
109   PG2  fc4/13  Online  F     2S     Windows Server 25             10    2008 Server
110   PG2  fc4/14  Online  F     2S     Windows Server 26             10    2008 Server
111   PG2  fc4/15  Online  F     2S     Windows Server 27             10    2008 Server
112   PG2  fc4/16  Online  F     2S     Windows Server 28             10    2008 Server
113   PG2  fc4/17  Online  F     4S     Windows DB Server 5           10    Win DB Server
114   PG2  fc4/18  Online  F     4S     Windows DB Server 6           10    Win DB Server
115   PG3  fc4/19  Online  F     2S     Windows Server 29             10    2008 Server
116   PG3  fc4/20  Online  F     2S     Windows Server 30             10    2008 Server
117   PG3  fc4/21  Online  F     2S     Windows Server 31             10    2008 Server
118   PG3  fc4/22  Online  F     2S     Windows Server 32             10    2008 Server
119   PG3  fc4/23  Online  F     4S     Windows DB Server 7           10    Win DB Server
120   PG3  fc4/24  Online  F     4S     Windows DB Server 8           10    Win DB Server
121   PG4  fc4/25  Online  F     4S     Windows DB Server 9           10    Win DB Server
122   PG4  fc4/26  Online  F     4S     Windows DB Server 10          10    Win DB Server

Areas 123 to 144 (rest of PG4, PG5, PG6 and PG7, fc4/27 to fc4/48): not assigned.
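The layout takes some ports out of service so that the remaining ports in a port group fit within its 12.8 Gb/s. The sketch below shows one simplified way to reason about that, assuming dedicated ports reserve their full speed and shared ports compete for whatever is left. The function names and the reading of the speed codes (D for dedicated, S for shared) are assumptions for illustration, not Cisco's own bandwidth allocation logic.

```python
PORT_GROUP_GBPS = 12.8  # per port group, from the module headings in the layout


def parse_speed(code):
    """Split a speed code such as '8D' or '4S' into (gbps, is_dedicated)."""
    return int(code[:-1]), code[-1].upper() == "D"


def port_group_load(speed_codes, group_gbps=PORT_GROUP_GBPS):
    """Return (dedicated, shared, ratio) for one port group.

    ratio is the shared demand divided by the bandwidth left once the
    dedicated ports are served. Above 1.0 the shared ports are
    oversubscribed.
    """
    dedicated = 0
    shared = 0
    for code in speed_codes:
        gbps, is_dedicated = parse_speed(code)
        if is_dedicated:
            dedicated += gbps
        else:
            shared += gbps

    remaining = group_gbps - dedicated
    if remaining < 0:
        raise ValueError("dedicated ports exceed the port group bandwidth")
    if shared == 0:
        ratio = 0.0
    elif remaining == 0:
        ratio = float("inf")
    else:
        ratio = shared / remaining
    return dedicated, shared, ratio


# Port groups as read from the Slot 1 layout.
EXAMPLES = {
    "Slot 1 PG0 (storage + AIX)": ["8D", "4S"],
    "Slot 1 PG1 (three AIX)": ["4S", "4S", "4S"],
    "Slot 1 PG7 (two ESX)": ["8S", "8S"],
}

for name, codes in EXAMPLES.items():
    dedicated, shared, ratio = port_group_load(codes)
    print(f"{name}: dedicated={dedicated} shared={shared} ratio={ratio:.2f}")
```

With this model, a storage port group (8D plus 4S) stays under 1.0, while adding a third 4S port to that group would push the ratio to about 1.67, which is consistent with the third port being marked out of service.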


Security and Authentication

AAA Configuration

This section covers authentication, authorization, and accounting (AAA) on the MDS and the common options for using AAA services to control access to the command line interface (CLI). AAA services are commonly configured in conjunction with protocols such as LDAP, RADIUS, or TACACS+ that interface with one or more AAA servers.

The AAA feature allows you to verify the identity of, grant access to, and track the actions of users managing a device running NX-OS, for example a Nexus 7000 or an MDS. Based on the user ID and password combination that you provide, Cisco NX-OS devices perform local authentication or authorization using the local database, or remote authentication or authorization using one or more AAA servers. The Cisco NX-OS software supports authentication, authorization, and accounting independently. For example, you can configure authentication and authorization without configuring accounting.

A Cisco Secure Access Control Server (ACS) provides central security services for the Cisco MDS and can be used as a AAA server, but Cisco ACS is not mandatory; the Cisco MDS interfaces with other AAA servers as well.

Where possible it is best to centralize authentication, but this is not always an option. In many cases local authentication is required because the account does not have the infrastructure to support centralized authentication. It is IBM best practice to use a AAA (RADIUS or TACACS+) server to better manage and deploy user rights and authentication. Regardless of the authentication method you use, make sure you follow the rules and work with the security team to understand the requirements for the account.

Role Based Access Control (RBAC)

On a Cisco MDS you can create and manage user accounts and assign roles to those accounts that limit access to operations on the device. RBAC allows you to define rules for an assigned role that restrict what the user can access. RBAC is important to ensure that each user has the appropriate level of access for their respective role. Don't be lazy! Take the time to figure it out up front instead of getting dinged on the next audit.

Check out the Cisco Security Configuration Guide below for more detailed information:
http://www.cisco.com/en/US/docs/switches/datacenter/mds9000/sw/nxos/configuration/guides/sec/sec_cli_4_2_published/sec.html


Data Center Network Manager (DCNM)

DCNM SAN

Cisco has recently released a new product called DCNM SAN, which replaces the former Cisco Fabric Manager Server (FMS). Every production SAN needs this tool, for a variety of reasons. It can help you manage your SAN devices, gather and report performance data, alert you when there are issues, and generally help SAN administrators stay on top of their environment. The DCNM dashboard gives you a clear view of what is going on in your environment and shows at a glance the things that need attention. One of the most useful areas is Top Storage Ports, which shows the ports with the highest utilization in your environment. In case you were wondering: if you have Cisco Fabric Manager Server today, DCNM SAN is a free upgrade for IBM branded devices and does not require any additional licenses. If you don't have it, you should!

Here is a link to some more information about DCNM SAN: http://www.cisco.com/en/US/products/ps9369/index.html


Conclusion

This document is intended to be used as a practical guide to Cisco MDS design and architecture. It is not intended to replace any of the current documentation that IBM and Cisco have released in support of these products. Wading through thousands of pages of technical documentation can be cumbersome and amazingly boring. I wanted to provide a perspective from someone who deals with this type of design and architecture every day. I hope that you find this guide useful and informative, but in case you want more information, I suggest checking the Cisco and IBM websites listed below:
http://www.cisco.com/en/US/products/hw/ps4159/ps4358/index.html
http://www-03.ibm.com/systems/networking/switches/san/ctype/9500/index.html

