Transport Layer Services and Protocols

Uploaded by Febin Kf

MODULE 3

STUDY OF TRANSPORT LAYER. The transport layer is the heart of the whole protocol hierarchy. Its task is to provide reliable, cost-effective data transport from the source machine to the destination machine. Without the transport layer, the whole concept of layered protocols would make little sense.

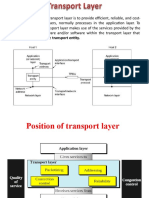

EXPLAIN THE SERVICES PROVIDED TO UPPER LAYERS. The ultimate goal of the transport layer is to provide efficient, reliable, and cost-effective service to its users. To achieve this goal, the transport layer makes use of the services provided by the network layer. The hardware and/or software within the transport layer that does the work is called the transport entity. The transport entity can be located in the operating system kernel or in a separate user process. The logical relationship of the network, transport, and application layers is shown in the figure.

Just as there are two types of network service, connection-oriented and connectionless, there are two types of transport service. Connections have three phases: establishment, data transfer, and release. If the transport layer service is so similar to the network layer service, why are there two distinct layers? The transport code runs entirely on the users' machines, but the network layer mostly runs on the routers, which are operated by the carrier. Users therefore have no real control over the network layer, and the existence of the transport layer makes it possible for the transport service to be more reliable than the underlying network service.

Lost packets and mangled data can be detected and compensated for by the transport layer. Application programmers can write code against a standard set of primitives and have these programs work on a wide variety of networks, without having to deal with different subnet interfaces and unreliable transmission. The bottom four layers can be seen as the transport service provider, whereas the upper layer(s) are the transport service user.

EXPLAIN TRANSPORT SERVICE PRIMITIVES. To allow users to access the transport service, the transport layer must provide some operations to application programs, that is, a transport service interface. Each transport service has its own interface. The main difference between the network service and the transport service is that the network service is intended to model the service offered by real networks. Real networks can lose packets, so the network service is generally unreliable, whereas the (connection-oriented) transport service is reliable. A second difference between the network service and the transport service is whom the services are intended for. Few users write their own transport entities, and thus few users or programs ever see the bare network service. In contrast, many programs (and thus programmers) see the transport primitives.

To see how these primitives (LISTEN, CONNECT, SEND, RECEIVE, and DISCONNECT) might be used, consider an application with a server and a number of remote clients.

o The server executes a LISTEN primitive, typically by calling a library procedure that makes a system call to block the server until a client turns up.
o When a client wants to talk to the server, it executes a CONNECT primitive.
o The transport entity carries out this primitive by blocking the caller and sending a packet to the server.
o Encapsulated in the payload of this packet is a transport layer message for the server's transport entity.

The term TPDU (Transport Protocol Data Unit) is used for messages sent from transport entity to transport entity.

TPDUs (exchanged by the transport layer) are contained in packets (exchanged by the network layer). In turn, packets are contained in frames (exchanged by the data link layer). When a frame arrives, the data link layer processes the frame header and passes the contents of the frame payload field up to the network entity. The network entity processes the packet header and passes the contents of the packet payload up to the transport entity.
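
The nesting of TPDUs, packets, and frames can be sketched in a few lines of Python. This is only an illustration: the class names, the string headers, and the `encapsulate`/`decapsulate` helpers are assumptions made for the example, not part of any real protocol stack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TPDU:
    header: str      # placeholder for the transport header
    payload: bytes   # user data


@dataclass(frozen=True)
class Packet:
    header: str      # placeholder for the network header
    payload: TPDU    # the packet payload carries one TPDU


@dataclass(frozen=True)
class Frame:
    header: str      # placeholder for the frame header
    payload: Packet  # the frame payload carries one packet


def encapsulate(data: bytes) -> Frame:
    """Sending side: wrap user data in a TPDU, a packet, and a frame."""
    tpdu = TPDU(header="transport header", payload=data)
    packet = Packet(header="network header", payload=tpdu)
    return Frame(header="frame header", payload=packet)


def decapsulate(frame: Frame) -> bytes:
    """Receiving side: each layer strips its header and passes the payload up."""
    packet = frame.payload   # data link layer hands the packet to the network entity
    tpdu = packet.payload    # network entity hands the TPDU to the transport entity
    return tpdu.payload      # transport entity hands the data to the user
```

Calling `decapsulate(encapsulate(b"hello"))` returns the original bytes, mirroring the way each layer only looks at its own header.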

Getting back to our client-server example:

o The client's CONNECT call causes a CONNECTION REQUEST TPDU to be sent to the server.
o When it arrives, the transport entity checks to see that the server is blocked on a LISTEN (i.e., is interested in handling requests).
o It then unblocks the server and sends a CONNECTION ACCEPTED TPDU back to the client.
o When this TPDU arrives, the client is unblocked and the connection is established.
o Data can now be exchanged using the SEND and RECEIVE primitives.
o In the simplest form, either party can do a (blocking) RECEIVE to wait for the other party to do a SEND.
o When the TPDU arrives, the receiver is unblocked. It can then process the TPDU and send a reply.

As long as both sides can keep track of whose turn it is to send, this scheme works fine. At the transport layer, however, even a simple unidirectional data exchange is more complicated than at the network layer, because every data packet sent is also (eventually) acknowledged.

Figure: A state diagram for a simple connection management scheme.

o Transitions labelled in italics are caused by packet arrivals.
o The solid lines show the client's state sequence.
o The dashed lines show the server's state sequence.
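
The LISTEN, CONNECT, SEND, and RECEIVE exchange described above can be imitated with threads and blocking queues. The sketch below is a toy model under stated assumptions: the `Endpoint` class and `run_demo` function are hypothetical, the transport entity and the user process are merged into one object, and acknowledgements, timers, and DISCONNECT are left out.

```python
import queue
import threading


class Endpoint:
    """One side of a simulated connection; `inbox` holds TPDUs addressed to it."""

    def __init__(self):
        self.inbox = queue.Queue()
        self.peer = None

    def listen(self):
        # Block until a CONNECTION REQUEST arrives, then accept it.
        kind, sender = self.inbox.get()
        assert kind == "CONNECTION REQUEST"
        self.peer = sender
        sender.inbox.put(("CONNECTION ACCEPTED", self))

    def connect(self, server):
        # Send a CONNECTION REQUEST and block until it is accepted.
        server.inbox.put(("CONNECTION REQUEST", self))
        kind, sender = self.inbox.get()
        assert kind == "CONNECTION ACCEPTED"
        self.peer = sender

    def send(self, data):
        self.peer.inbox.put(("DATA", data))

    def receive(self):
        # Block until the other party does a SEND.
        kind, data = self.inbox.get()
        assert kind == "DATA"
        return data


def run_demo():
    server, client = Endpoint(), Endpoint()

    def serve():
        server.listen()                # blocked until a client turns up
        request = server.receive()     # blocked until the client sends
        server.send(request.upper())   # process the TPDU and reply

    worker = threading.Thread(target=serve)
    worker.start()
    client.connect(server)
    client.send("hello")
    reply = client.receive()
    worker.join()
    return reply
```

`run_demo()` returns `"HELLO"`: the two sides take turns, each blocking on RECEIVE until the other has done a SEND.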

DISCUSS THE ELEMENTS OF TRANSPORT PROTOCOL. The transport service is implemented by a transport protocol used between the two transport entities. In some ways, transport protocols resemble the data link protocols, but significant differences between the two also exist. These differences are due to major dissimilarities between the environments in which the two protocols operate. At the data link layer, two routers communicate directly via a physical channel, whereas at the transport layer, this physical channel is replaced by the entire subnet.

In the data link layer, it is not necessary for a router to specify which router it wants to talk to, because each outgoing line uniquely specifies a particular router. In the transport layer, explicit addressing of destinations is required. In the transport layer, initial connection establishment is more complicated, whereas in the data link layer it is simple. Another difference between the data link layer and the transport layer is the potential existence of storage capacity in the subnet. Finally, buffering and flow control are needed in both layers, but the presence of a large and dynamically varying number of connections in the transport layer may require a different approach than the one used in the data link layer.

DISCUSS ADDRESSING. When an application process (e.g., a user process) wishes to set up a connection to a remote application process, it must specify which one to connect to. In the Internet, these end points are called ports. In ATM networks, they are called AAL-SAPs. We will use the generic term TSAP (Transport Service Access Point). The analogous end points in the network layer are then called NSAPs (Network Service Access Points).

A possible scenario for a transport connection is as follows.

1. A time-of-day server process on host 2 attaches itself to TSAP 1522 to wait for an incoming call. How a process attaches itself to a TSAP is outside the networking model and depends entirely on the local operating system. A call such as our LISTEN might be used, for example.
2. An application process on host 1 wants to find out the time of day, so it issues a CONNECT request specifying TSAP 1208 as the source and TSAP 1522 as the destination. This action ultimately results in a transport connection being established between the application process on host 1 and server 1 on host 2.
3. The application process then sends over a request for the time.
4. The time server process responds with the current time.
5. The transport connection is then released.
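
The scenario above can be sketched as a table that maps TSAP numbers to the processes attached to them. The `Host` class, its `attach` and `connect` methods, and the request string are hypothetical names chosen for this illustration; the TSAP numbers 1208 and 1522 come from the scenario.

```python
import time


class Host:
    """A host with a table of TSAPs and the server processes attached to them."""

    def __init__(self, name):
        self.name = name
        self.tsaps = {}  # TSAP number -> callable acting as the server process

    def attach(self, tsap, server):
        # Step 1: a process attaches itself to a TSAP to wait for calls.
        if tsap in self.tsaps:
            raise ValueError(f"TSAP {tsap} is already in use on {self.name}")
        self.tsaps[tsap] = server

    def connect(self, src_tsap, dst_tsap, request):
        # Steps 2 to 5 collapsed into one call: find the process attached to
        # the destination TSAP, hand it the request, and return its reply.
        server = self.tsaps.get(dst_tsap)
        if server is None:
            raise ConnectionRefusedError(f"nothing attached to TSAP {dst_tsap}")
        return server(src_tsap, request)


def time_of_day_server(src_tsap, request):
    # Responds to any request with the current local time.
    return time.strftime("%H:%M:%S")


host2 = Host("host 2")
host2.attach(1522, time_of_day_server)
reply = host2.connect(src_tsap=1208, dst_tsap=1522, request="What time is it?")
```

A request for a TSAP to which nothing is attached raises `ConnectionRefusedError` in this sketch, which is one plausible way to model an unused transport address.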

EXPLAIN CONNECTION ESTABLISHMENT AND CONNECTION RELEASE. At first glance, it would seem sufficient for one transport entity to just send a CONNECTION REQUEST TPDU to the destination and wait for a CONNECTION ACCEPTED reply. The problem occurs when the network can lose, store, and duplicate packets. The worst possible nightmare is as follows.

o A user establishes a connection with a bank, sends messages telling the bank to transfer a large amount of money to the account of a not-entirely-trustworthy person, and then releases the connection.
o Unfortunately, each packet in the scenario is duplicated and stored in the subnet.
o After the connection has been released, all the packets pop out of the subnet and arrive at the destination in order, asking the bank to establish a new connection, transfer money (again), and release the connection.
o The bank has no way of telling that these are duplicates. It must assume that this is a second, independent transaction, and transfers the money again.

The crux of the problem is the existence of delayed duplicates. It can be attacked in various ways, none of them very satisfactory. One way is to use throw-away transport addresses. In this approach, each time a transport address is needed, a new one is generated. When a connection is released, the address is discarded and never used again. Another possibility is to give each connection a connection identifier (i.e., a sequence number incremented for each connection established) chosen by the initiating party and put in each TPDU, including the one requesting the connection. After each connection is released, each transport entity could update a table of obsolete connections, against which incoming connection requests are checked.

A different tack is to make sure that no packet lives in the subnet forever. Packet lifetime can be restricted to a known maximum using one (or more) of the following techniques:

o Restricted subnet design.
o Putting a hop counter in each packet.
o Timestamping each packet.

Figure: Three protocol scenarios for establishing a connection using a three-way handshake. CR denotes CONNECTION REQUEST.

o (a) Normal operation.
o (b) Old duplicate CONNECTION REQUEST appearing out of nowhere.
o (c) Duplicate CONNECTION REQUEST and duplicate ACK.
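
The three scenarios can be replayed with a small simulation. This is a minimal sketch, not a real protocol implementation: the `Host1` and `Host2` classes, the `REJECT` TPDU kind, and the concrete sequence numbers are assumptions made for the example. Host 2 only treats the connection as established when its own initial sequence number y has been acknowledged.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TPDU:
    kind: str                  # "CR", "ACK", "DATA", or "REJECT"
    seq: Optional[int] = None
    ack: Optional[int] = None


class Host1:
    """Initiator: remembers the sequence number of its own pending request."""

    def __init__(self):
        self.pending_seq = None

    def connect(self, x):
        self.pending_seq = x
        return TPDU("CR", seq=x)

    def on_ack(self, tpdu):
        # Accept only an ACK that acknowledges a request we really sent.
        if self.pending_seq is not None and tpdu.ack == self.pending_seq:
            return TPDU("DATA", seq=self.pending_seq, ack=tpdu.seq)
        return TPDU("REJECT", ack=tpdu.seq)


class Host2:
    """Responder: proposes its own initial sequence number y."""

    def __init__(self, y):
        self.y = y
        self.state = "IDLE"

    def on_cr(self, tpdu):
        self.state = "PENDING"
        return TPDU("ACK", seq=self.y, ack=tpdu.seq)

    def on_reply(self, tpdu):
        if tpdu.ack != self.y:
            return self.state          # acknowledges some old z: ignore it
        self.state = "ESTABLISHED" if tpdu.kind == "DATA" else "IDLE"
        return self.state


def scenario_a():
    h1, h2 = Host1(), Host2(y=500)
    ack = h2.on_cr(h1.connect(x=100))
    return h2.on_reply(h1.on_ack(ack))


def scenario_b():
    h1, h2 = Host1(), Host2(y=500)
    ack = h2.on_cr(TPDU("CR", seq=7))          # delayed duplicate CR
    return h2.on_reply(h1.on_ack(ack))         # host 1 rejects


def scenario_c():
    h1, h2 = Host1(), Host2(y=500)
    ack = h2.on_cr(TPDU("CR", seq=7))          # delayed duplicate CR
    after_old_data = h2.on_reply(TPDU("DATA", seq=7, ack=321))  # old z = 321
    return after_old_data, h2.on_reply(h1.on_ack(ack))
```

Here `scenario_a()` ends in `"ESTABLISHED"`, `scenario_b()` ends in `"IDLE"`, and `scenario_c()` shows host 2 still `"PENDING"` after the old data TPDU and `"IDLE"` after the rejection.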

Explanation of Fig.(a)

o Host 1 chooses a sequence number, x, and sends a CONNECTION REQUEST TPDU containing it to host 2.
o Host 2 replies with an ACK TPDU acknowledging x and announcing its own initial sequence number, y.
o Finally, host 1 acknowledges host 2's choice of an initial sequence number in the first data TPDU that it sends.

Explanation of Fig.(b)

o The first TPDU is a delayed duplicate CONNECTION REQUEST from an old connection.
o This TPDU arrives at host 2 without host 1's knowledge.
o Host 2 reacts to this TPDU by sending host 1 an ACK TPDU, in effect asking for verification that host 1 was indeed trying to set up a new connection.
o When host 1 rejects host 2's attempt to establish a connection, host 2 realizes that it was tricked by a delayed duplicate and abandons the connection. In this way, a delayed duplicate does no damage.

Explanation of Fig.(c)

The worst case is when both a delayed CONNECTION REQUEST and an ACK are floating around in the subnet. Host 2 gets a delayed CONNECTION REQUEST and replies to it. At this point it is crucial to realize that host 2 has proposed using y as the initial sequence number for host 2 to host 1 traffic, knowing full well that no TPDUs containing sequence number y or acknowledgements to y are still in existence. When the second delayed TPDU arrives at host 2, the fact that z has been acknowledged rather than y tells host 2 that this, too, is an old duplicate. The important thing to realize here is that there is no combination of old TPDUs that can cause the protocol to fail and have a connection set up by accident when no one wants it.

Connection Release

There are two styles of terminating a connection: asymmetric release and symmetric release. Asymmetric release is the way the telephone system works: when one party hangs up, the connection is broken. Symmetric release treats the connection as two separate unidirectional connections and requires each one to be released separately.

Asymmetric release is abrupt and may result in data loss. Consider the following scenario. After the connection is established, host 1 sends a TPDU that arrives properly at host 2. Then host 1 sends another TPDU. Unfortunately, host 2 issues a DISCONNECT before the second TPDU arrives. The result is that the connection is released and data are lost. Symmetric release does the job when each process has a fixed amount of data to send and clearly knows when it has sent it. In other situations, determining that all the work has been done and the connection should be terminated is not so obvious. A simple exchange in which each side announces that it is done does not always work, as the well-known two-army problem illustrates. Four scenarios of releasing a connection with a three-way handshake and timers are described next.

In Fig.(a), we see the normal case in which one of the users sends a DR (DISCONNECTION REQUEST) TPDU to initiate the connection release. When it arrives, the recipient sends back a DR TPDU, too, and starts a timer, just in case its DR is lost. When this DR arrives, the original sender sends back an ACK TPDU and releases the connection. Finally, when the ACK TPDU arrives, the receiver also releases the connection.

If the final ACK TPDU is lost, as shown in Fig.(b), the situation is saved by the timer. When the timer expires, the connection is released anyway.

Now consider the case of the second DR being lost. The user initiating the disconnection will not receive the expected response, will time out, and will start all over again. In Fig.(c) we see how this works, assuming that the second time no TPDUs are lost and all TPDUs are delivered correctly and on time.

Our last scenario, Fig.(d), is the same as Fig.(c) except that now we assume all the repeated attempts to retransmit the DR also fail due to lost TPDUs. After N retries, the sender just gives up and releases the connection. Meanwhile, the receiver times out and also exits.

While this protocol usually suffices, in theory it can fail if the initial DR and N retransmissions are all lost. The sender will give up and release the connection, while the other side knows nothing at all about the attempts to disconnect and is still fully active. This situation results in a half-open connection.
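
The four release scenarios and the half-open failure case can be reproduced with a small, deterministic model. The function below is a sketch under simplifying assumptions: time is reduced to numbered attempts, the set `lost` names the TPDUs the subnet drops, and the function name, the `"DR-reply"` label, and the outcome strings are all invented for the illustration.

```python
def release(lost, max_retries=3):
    """Simulate connection release.

    `lost` is a set of (attempt, kind) pairs for TPDUs dropped by the subnet,
    where kind is "DR" (initiator's request), "DR-reply" (receiver's DR),
    or "ACK" (initiator's final acknowledgement).
    """
    receiver_saw_dr = False
    # One initial DR plus up to `max_retries` retransmissions.
    for attempt in range(1, max_retries + 2):
        if (attempt, "DR") in lost:
            continue                     # initiator times out and retries
        receiver_saw_dr = True           # receiver replies and starts its timer
        if (attempt, "DR-reply") in lost:
            continue                     # initiator times out and retries
        ack_lost = (attempt, "ACK") in lost
        return {
            "initiator": "released after DR exchange",
            "receiver": "released by timer" if ack_lost else "released on ACK",
        }
    return {
        "initiator": "gave up after N retries",
        "receiver": "released by timer" if receiver_saw_dr else "still connected",
    }
```

With nothing lost this models Fig.(a); `release({(1, "ACK")})` models Fig.(b); `release({(1, "DR-reply")})` models Fig.(c). Losing every `"DR"` leaves the receiver `"still connected"`, which is the half-open connection described above.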

DISCUSS FLOW CONTROL AND BUFFERING. In some ways the flow control problem in the transport layer is the same as in the data link layer, but in other ways it is different. The basic similarity is that in both layers a sliding window or other scheme is needed on each connection to keep a fast transmitter from overrunning a slow receiver. The main difference is that a router usually has relatively few lines, whereas a host may have numerous connections. This difference makes it impractical to implement the data link buffering strategy in the transport layer. In the data link layer, the sending side must buffer outgoing frames because they might have to be retransmitted. If the subnet provides datagram service, the sending transport entity must also buffer, and for the same reason.

If the receiver knows that the sender buffers all TPDUs until they are acknowledged, the receiver may or may not dedicate specific buffers to specific connections, as it sees fit. The receiver may, for example, maintain a single buffer pool shared by all connections. When a TPDU comes in, an attempt is made to dynamically acquire a new buffer. If one is available, the TPDU is accepted; otherwise, it is discarded. Since the sender is prepared to retransmit TPDUs lost by the subnet, no harm is done by having the receiver drop TPDUs, although some resources are wasted. The sender just keeps trying until it gets an acknowledgement.
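
The shared buffer pool policy (accept a TPDU if a buffer is free, otherwise discard it and rely on retransmission) can be sketched as follows. The `SharedBufferPool` class and its method names are hypothetical, and one buffer is assumed to hold exactly one TPDU.

```python
class SharedBufferPool:
    """A single pool of buffers shared by all connections at the receiver."""

    def __init__(self, size):
        self.free = size   # number of unused buffers
        self.held = {}     # connection id -> buffered TPDUs

    def on_arrival(self, conn, tpdu):
        """Try to acquire a buffer for an incoming TPDU."""
        if self.free == 0:
            return False   # discarded; the sender will retransmit later
        self.free -= 1
        self.held.setdefault(conn, []).append(tpdu)
        return True        # accepted, so it can be acknowledged

    def deliver(self, conn):
        """Hand buffered TPDUs to the transport user and free their buffers."""
        tpdus = self.held.pop(conn, [])
        self.free += len(tpdus)
        return tpdus
```

For example, with a pool of two buffers, a third arrival returns `False` until some connection's data has been delivered and its buffers returned to the pool.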

Even if the receiver has agreed to do the buffering, there still remains the question of the buffer size. If most TPDUs are nearly the same size, it is natural to organize the buffers as a pool of identically-sized buffers, with one TPDU per buffer. However, if there is wide variation in TPDU size, from a few characters typed at a terminal to thousands of characters from file transfers, a pool of fixed-sized buffers presents problems. If the buffer size is chosen equal to the largest possible TPDU, space will be wasted whenever a short TPDU arrives. If the buffer size is chosen less than the maximum TPDU size, multiple buffers will be needed for long TPDUs, with the attendant complexity.

Another approach to the buffer size problem is to use variable-sized buffers, as in Fig.(b). The advantage here is better memory utilization, at the price of more complicated buffer management. A third possibility is to dedicate a single large circular buffer per connection, as in Fig.(c). This system also makes good use of memory, provided that all connections are heavily loaded, but is poor if some connections are lightly loaded.
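
A per-connection circular buffer can be sketched as a fixed byte array with a read position and a fill count. The `CircularBuffer` class below is a hypothetical illustration, and it stores raw bytes rather than whole TPDUs for simplicity.

```python
class CircularBuffer:
    """Fixed-size byte buffer in which writes wrap around to the start."""

    def __init__(self, capacity):
        self.data = bytearray(capacity)
        self.capacity = capacity
        self.head = 0    # index of the next byte to read
        self.count = 0   # number of bytes currently stored

    def write(self, chunk):
        """Store `chunk` if it fits entirely; otherwise reject it."""
        if len(chunk) > self.capacity - self.count:
            return False
        for byte in chunk:
            self.data[(self.head + self.count) % self.capacity] = byte
            self.count += 1
        return True

    def read(self, n):
        """Remove and return up to `n` bytes in arrival order."""
        n = min(n, self.count)
        out = bytes(self.data[(self.head + i) % self.capacity] for i in range(n))
        self.head = (self.head + n) % self.capacity
        self.count -= n
        return out
```

The memory is reserved whether or not the connection is busy, which is why this layout pays off only when connections are heavily loaded.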

DISCUSS MULTIPLEXING. Multiplexing several conversations onto connections, virtual circuits, and physical links plays a role in several layers of the network architecture. In the transport layer the need for multiplexing can arise in a number of ways. For example, if only one network address is available on a host, all transport connections on that machine have to use it. When a TPDU comes in, some way is needed to tell which process to give it to. This situation is called upward multiplexing and is shown in Fig.(a).

If a user needs more bandwidth than one virtual circuit can provide, a way out is to open multiple network connections and distribute the traffic among them on a round-robin basis, as indicated in Fig.(b). This modus operandi is called downward multiplexing .
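
Round-robin distribution over several network connections can be sketched with `itertools.cycle`. The function name and the circuit labels are assumptions made for the example.

```python
from itertools import cycle


def distribute_round_robin(tpdus, circuits):
    """Assign each TPDU to the next virtual circuit in turn."""
    assignment = {circuit: [] for circuit in circuits}
    for tpdu, circuit in zip(tpdus, cycle(circuits)):
        assignment[circuit].append(tpdu)
    return assignment


plan = distribute_round_robin(list(range(5)), ["vc0", "vc1"])
# plan == {"vc0": [0, 2, 4], "vc1": [1, 3]}
```

With k circuits open, each one carries roughly 1/k of the TPDUs, which is how the user obtains more bandwidth than a single virtual circuit provides.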


DISCUSS CRASH RECOVERY. If hosts and routers are subject to crashes, recovery from these crashes becomes an issue. If the transport entity is entirely within the hosts, recovery from network and router crashes is straightforward. If the network layer provides datagram service, the transport entities expect lost TPDUs all the time and know how to cope with them. If the network layer provides connection-oriented service, then loss of a virtual circuit is handled by establishing a new one and then probing the remote transport entity to ask it which TPDUs it has received and which ones it has not received. The latter ones can be retransmitted.

A more troublesome problem is how to recover from host crashes. In particular, it may be desirable for clients to be able to continue working when servers crash and then quickly reboot. To illustrate the difficulty, let us assume that one host, the client, is sending a long file to another host, the file server, using a simple stop-and-wait protocol. The transport layer on the server simply passes the incoming TPDUs to the transport user, one by one. Partway through the transmission, the server crashes. When it comes back up, its tables are reinitialized, so it no longer knows precisely where it was. In an attempt to recover its previous status, the server might send a broadcast TPDU to all other hosts, announcing that it had just crashed and requesting that its clients inform it of the status of all open connections. Each client can be in one of two states: one TPDU outstanding, S1, or no TPDUs outstanding, S0.

Based on only this state information, the client must decide whether to retransmit the most recent TPDU.

STUDY OF APPLICATION LAYER. The layers below the application layer are there to provide reliable transport, but they do not do real work for users. Even in the application layer there is a need for support protocols to allow the applications to function.

DISCUSS DOMAIN NAME SERVER. The essence of DNS is the invention of a hierarchical, domain-based naming scheme and a distributed database system for implementing this naming scheme. It is primarily used for mapping host names and e-mail destinations to IP addresses but can also be used for other purposes. DNS is defined in RFCs 1034 and 1035. Very briefly, the way DNS is used is as follows. To map a name onto an IP address, an application program calls a library procedure called the resolver, passing it the name as a parameter. The resolver sends a UDP packet to a local DNS server, which then looks up the name and returns the IP address to the resolver, which then returns it to the caller. Armed with the IP address, the program can then establish a TCP connection with the destination or send it UDP packets.
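
To make the resolver's UDP packet concrete, the sketch below builds the bytes of a standard query following the message layout in RFC 1035: a 12-byte header, the name as length-prefixed labels, then the query type and class. The function name `build_dns_query` and the query ID `0x1234` are arbitrary choices for the example; nothing is sent on the network.

```python
import struct


def build_dns_query(name, query_id=0x1234, qtype=1, qclass=1):
    """Return the bytes of a DNS query (defaults: type A = 1, class IN = 1)."""
    # Header fields: ID, flags (recursion desired), QDCOUNT=1,
    # ANCOUNT=0, NSCOUNT=0, ARCOUNT=0. All are 16-bit big-endian values.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)

    # QNAME: each label is preceded by its length; a zero byte ends the name.
    qname = b""
    for label in name.rstrip(".").split("."):
        encoded = label.encode("ascii")
        if not 0 < len(encoded) <= 63:
            raise ValueError(f"invalid label length in {name!r}")
        qname += bytes([len(encoded)]) + encoded
    qname += b"\x00"

    return header + qname + struct.pack("!HH", qtype, qclass)


query = build_dns_query("linda.cs.yale.edu")
```

A resolver would place these bytes in a UDP datagram addressed to port 53 of its local name server and then parse the reply, which uses the same header layout.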

DISCUSS NAME SPACE. Managing a large and constantly changing set of names is a nontrivial problem. In the postal system, name management is done by requiring letters to specify (implicitly or explicitly) the country, state or province, city, and street address of the addressee. Conceptually, the Internet is divided into over 200 top-level domains, where each domain covers many hosts. Each domain is partitioned into subdomains, and these are further partitioned, and so on. All these domains can be represented by a tree. The leaves of the tree represent domains that have no subdomains (but do contain machines, of course). A leaf domain may contain a single host, or it may represent a company and contain thousands of hosts.

Each domain is named by the path upward from it to the (unnamed) root. The components are separated by periods. Domain names can be either absolute or relative. An absolute domain name always ends with a period (e.g., eng.sun.com.), whereas a relative one does not. Domain names are case insensitive, so edu, Edu, and EDU mean the same thing.
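
These naming rules can be expressed as a few helper functions. The helpers below are hypothetical; in particular, the `origin` parameter (the absolute domain in whose context a relative name is interpreted) and the choice of lowercase as the canonical form are assumptions made for the example.

```python
def is_absolute(name):
    """An absolute domain name ends with a period."""
    return name.endswith(".")


def to_absolute(name, origin):
    """Return `name` as a lowercase absolute name.

    A relative name is completed with `origin`, which must itself be absolute.
    """
    if is_absolute(name):
        return name.lower()
    return f"{name}.{origin}".lower()


def path_to_root(name):
    """List the components from the domain upward toward the unnamed root."""
    return name.rstrip(".").lower().split(".")


same = to_absolute("ENG", "sun.com.") == to_absolute("eng.Sun.COM.", "edu.")
```

Here `same` is `True`: both spellings denote `eng.sun.com.`, whose path to the root is `["eng", "sun", "com"]`.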

DISCUSS NAME SERVERS. A single name server could contain the entire DNS database and respond to all queries about it. In practice, this server would be so overloaded as to be useless. Furthermore, if it ever went down, the entire Internet would be crippled. To avoid the problems associated with having only a single source of information, the DNS name space is divided into nonoverlapping zones. Each zone contains some part of the tree and also contains name servers holding the information about that zone. Normally, a zone will have one primary name server, which gets its information from a file on its disk, and one or more secondary name servers, which get their information from the primary name server. To improve reliability, some servers for a zone can be located outside the zone. Where the zone boundaries are placed within a zone is up to that zone's administrator. This decision is made in large part based on how many name servers are desired, and where.

When a resolver has a query about a domain name, it passes the query to one of the local name servers. If the domain being sought falls under the jurisdiction of the name server, such as ai.cs.yale.edu falling under cs.yale.edu, it returns the authoritative resource records. An authoritative record is one that comes from the authority that manages the record and is thus always correct.

Authoritative records are in contrast to cached records, which may be out of date. If, however, the domain is remote and no information about the requested domain is available locally, the name server sends a query message to the top-level name server for the domain requested.

For example, suppose a resolver on flits.cs.vu.nl wants to know the IP address of the host linda.cs.yale.edu. In step 1, it sends a query to the local name server, cs.vu.nl. This query contains the domain name sought, the type (A), and the class (IN). Let us suppose the local name server has never had a query for this domain before and knows nothing about it. It may ask a few other nearby name servers, but if none of them know, it sends a UDP packet to the server for edu given in its database, edu-server.net (step 2). It is unlikely that this server knows the address of linda.cs.yale.edu, and it probably does not know cs.yale.edu either, but it must know all of its own children, so it forwards the request to the name server for yale.edu (step 3). In turn, this one forwards the request to cs.yale.edu (step 4), which must have the authoritative resource records. Since each request is from a client to a server, the resource record requested works its way back in steps 5 through 8.
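
The forwarding chain in steps 1 through 4 can be mimicked with a small in-memory table. Everything in this sketch is illustrative: the `SERVERS` table and `resolve` function are hypothetical, each server is reduced to a dictionary of records and referrals, and the address `192.0.2.10` is a placeholder from the documentation range, not the real address of any host.

```python
# Each name server knows some records itself and, for other domains,
# which server to forward the query to.
SERVERS = {
    "cs.vu.nl": {"records": {}, "referrals": {"edu": "edu-server.net"}},
    "edu-server.net": {"records": {}, "referrals": {"yale.edu": "yale.edu"}},
    "yale.edu": {"records": {}, "referrals": {"cs.yale.edu": "cs.yale.edu"}},
    "cs.yale.edu": {
        "records": {"linda.cs.yale.edu": "192.0.2.10"},  # placeholder address
        "referrals": {},
    },
}


def resolve(server, name, trace):
    """Ask `server` for `name`, forwarding the query when it has no record."""
    trace.append(server)
    entry = SERVERS[server]
    if name in entry["records"]:
        return entry["records"][name]      # authoritative answer
    for suffix, next_server in entry["referrals"].items():
        if name == suffix or name.endswith("." + suffix):
            # The answer returns back through the same chain of calls,
            # like the replies in steps 5 through 8.
            return resolve(next_server, name, trace)
    raise LookupError(f"{server} cannot resolve {name}")


trace = []
address = resolve("cs.vu.nl", "linda.cs.yale.edu", trace)
```

After the call, `trace` lists the four servers in the order they were queried, from `cs.vu.nl` to `cs.yale.edu`.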
