
Copyright (c) 2010 IEEE. Personal use is permitted.

For any other purposes, Permission must be obtained from the IEEE by emailing pubs-permissions@ieee.org.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication.


Sparse Signal Methods for 3D Radar Imaging

Christian D. Austin, Emre Ertin, and Randolph L. Moses

The Ohio State University, Department of Electrical and Computer Engineering

2015 Neil Avenue, Columbus, OH 43210, USA

Email: {austinc, ertine, randy}@ece.osu.edu

Abstract: Synthetic aperture radar (SAR) imaging is a valuable tool in a number of defense surveillance and monitoring applications. There is increasing interest in three-dimensional (3D) reconstruction of objects from radar measurements. Traditional 3D SAR image formation requires data collection over a densely sampled azimuth-elevation sector. In practice, such a dense measurement set is difficult or impossible to obtain, and effective 3D reconstructions using sparse measurements are sought. This paper presents wide-angle three-dimensional image reconstruction approaches for object reconstruction that exploit reconstruction sparsity in the signal domain to ameliorate the limitations of sparse measurements. Two methods are presented: first, we use $\ell_p$ penalized (for $p \le 1$) least squares inversion, and second, we utilize tomographic SAR processing to derive wide-angle 3D reconstruction algorithms that are computationally attractive but apply to a specific class of sparse aperture samplings. All approaches rely on high-frequency radar backscatter phenomenology so that sparse signal representations align with physical radar scattering properties of the objects of interest. We present full 360° 3D SAR visualizations of objects from air-to-ground X-band radar measurements using different flight paths to illustrate and compare the two approaches.

I. INTRODUCTION

There is increasing interest in three-dimensional (3D) reconstruction of objects from radar measurements. This interest is enabled by new data collection capabilities, in which airborne synthetic aperture radar (SAR) systems are able to interrogate a scene, such as a city, persistently and over a large range of aspect angles [1]. Three-dimensional reconstruction is further motivated by an increasingly difficult class of surveillance and security challenges, including object detection and activity monitoring in urban scenes. Additional information provided by wide-aspect 3D reconstructions can be useful in applications such as automatic target recognition (ATR) and tomographic mapping.

In SAR imaging, an aircraft emits electromagnetic signal pulses along a flight path and collects the returned echoes. The returned echoes can be interpreted as one-dimensional lines of the 3D Fourier transform of the scene, and the aggregation of radar returns over the flight path defines a manifold of data in the scene's 3D Fourier domain [2]. A number of techniques have been proposed for narrow-angle 3D reconstruction from this manifold of data. Two different approaches to 3D image formation are full 3D reconstruction and 2D non-parametric imaging followed by parametric estimation of the third, height dimension.

This material is based upon work supported by the Air Force Office of Scientific Research under Award No. FA9550-06-1-0324. Any opinions, findings, and conclusions or recommendations expressed in this publication are those of the authors and do not necessarily reflect the views of the Air Force. C. Austin was supported in part by a fellowship from the Ohio Space Grant Consortium. This paper is an extension of work previously presented in [30]–[32].

Full 3D reconstruction methods invert an operator to retrieve the three-dimensional reflectivity function; specifically, in SAR imaging, the operator can be modeled as a Fourier operator, since data is collected over a manifold in the 3D Fourier space of the scene. Generating high-resolution 3D images using traditional Fourier processing methods requires that radar data be collected over a densely sampled set of points in both azimuth and elevation angle, for example, by collecting data from many closely spaced linear flight passes over a scene [3], [4]. This method of imaging requires very long collection times and large storage, and may be prohibitively costly in practice. There is thus motivation to consider more sparsely sampled data collection strategies, where only a small fraction of the data required to perform traditional high-resolution imaging is collected. Sparsely sampled data collections with elevation diversity can be achieved through nonlinear flight paths [5]–[8]. However, when inverse Fourier imaging is applied to sparsely sampled apertures, reconstruction quality can be poor. Reconstruction quality can be quantified by the point spread function (PSF) of the image, defined by the Fourier transform of the data aperture indicator function. The mainlobe of this PSF will typically be wider (indicating reduced resolution) and the sidelobes higher than for the PSF of a reconstruction formed from a densely sampled measurement aperture (see, e.g., [7], [9]). Methods to mitigate this problem by deconvolving the PSF from the 3D reflectivity function using greedy algorithms were investigated in [5], [6]. In this paper we develop an $\ell_p$ regularized least-squares approach to wide-angle 3D radar reconstruction for arbitrary, sparse apertures, and we demonstrate this approach on the problem of 3D vehicle reconstruction.
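The PSF argument above can be illustrated in one dimension. The following is a minimal NumPy sketch under illustrative assumptions (256 aperture positions, a random subset of 26 positions as the sparse aperture, and 16× zero padding; none of these values come from the datasets in this paper). It computes each PSF as the magnitude of the zero-padded Fourier transform of the aperture indicator and reports the peak sidelobe level outside the dense-aperture mainlobe; the helper names are hypothetical.

```python
import numpy as np

def psf_db(indicator, pad=16):
    """1D PSF in dB: magnitude of the zero-padded DFT of an aperture indicator,
    normalized so that the zero-frequency peak is 0 dB."""
    n = len(indicator) * pad
    spectrum = np.abs(np.fft.fftshift(np.fft.fft(indicator, n)))
    return 20 * np.log10(spectrum / spectrum[n // 2] + 1e-12)

def peak_sidelobe_db(psf, pad=16):
    """Largest PSF level outside +/- pad bins of the peak, i.e. outside the
    mainlobe (first nulls) of a fully sampled aperture of the same length."""
    center = len(psf) // 2
    outside = np.ones(len(psf), dtype=bool)
    outside[center - pad + 1 : center + pad] = False
    return psf[outside].max()

L = 256                                   # aperture positions (illustrative)
dense = np.ones(L)                        # densely sampled aperture
rng = np.random.default_rng(0)
sparse = np.zeros(L)
sparse[rng.choice(L, size=26, replace=False)] = 1.0   # keep about 10% of positions

psl_dense = peak_sidelobe_db(psf_db(dense))
psl_sparse = peak_sidelobe_db(psf_db(sparse))
print(f"dense aperture:  peak sidelobe {psl_dense:6.2f} dB")   # about -13.3 dB
print(f"sparse aperture: peak sidelobe {psl_sparse:6.2f} dB")
```

For the filled aperture the peak sidelobe is the familiar sinc-like level of about −13.3 dB; the thinned aperture, which spans nearly the same extent, has substantially higher sidelobes, which is the behavior that motivates sparsity-regularized reconstruction.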

A second approach is based on forming a small set of 2D SAR images followed by parametric 1D estimation of the third, or height, dimension in the backscatter profile. We refer to these as 2D+1D techniques. Interferometric SAR (IFSAR) is a well-known classical technique for parametric height estimation from 2D SAR imagery formed at two linear elevation passes [2], [10]; in this image formation method, only a sparse data collection in elevation is required to form 3D images. More recently, multi-baseline extensions, sometimes referred to as Tomographic SAR or Tomo-SAR, have been developed for height estimation (see, e.g., [11]–[20]). In Tomo-SAR imaging, height estimation can be treated as a sinusoids-in-noise problem and can be solved using spectral estimation techniques. A number of different techniques exist for estimating the height-dependent reflectivity of a scene, including the RELAX algorithm [11], uniform and non-uniform Fourier beamforming [12], [13], [16], truncated SVD processing [14], Capon filtering [16], parameter estimation methods [18], regularized optimization algorithms [19], [20], and the ESPRIT algorithm [21]; a survey of different height estimation methods is given in [17]. Differential techniques also exist [19], [22], in which passes are collected at different times and temporal velocity is also estimated; these techniques can be formulated as 2D+2D approaches. Many of these approaches have been applied to strip-map SAR processing of large scenes, and 3D reconstruction is generally aimed at reconstruction of buildings, forest canopies, or nonuniform terrain height. Furthermore, the approaches have been applied to relatively narrow-angle collection geometries, in which an isotropic scattering assumption is a good approximation. Over wide angles, however, radar scattering is typically anisotropic, which violates the isotropic point scattering assumption of traditional radar imaging in both 2D+1D and full 3D reconstructions. As a result, reconstructed image resolution will be worse than indicated by PSF analysis, which is predicated on an isotropic scattering assumption [23].
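To make the sinusoids-in-noise view concrete, the sketch below simulates a single 2D image pixel observed in eight passes and estimates scatterer heights by Fourier beamforming, one of the spectral estimators listed above (it is not the Tomo-SAR method developed in this paper). It assumes the nominal GOTCHA elevations and center frequency described in Section III, a center-frequency approximation $k_z \approx (4\pi f_c/c)\sin\phi$ for each pass, the $e^{-jk_z z}$ sign convention of the Fourier transform, and illustrative heights, amplitudes, and noise level; the function names are hypothetical.

```python
import numpy as np

C = 299_792_458.0                              # speed of light (m/s)
FC = 9.6e9                                     # center frequency (Hz), as in GOTCHA
elev = np.deg2rad(43.7 + 0.18 * np.arange(8))  # nominal pass elevations (rad)
kz = 4 * np.pi * FC / C * np.sin(elev)         # vertical wavenumber per pass (rad/m)

def pixel_samples(heights, amps, kz, noise_std=0.0, rng=None):
    """One pixel across passes: a sum of complex sinusoids in kz plus complex noise."""
    y = np.zeros(kz.size, dtype=complex)
    for a, h in zip(amps, heights):
        y += a * np.exp(-1j * kz * h)
    if noise_std > 0:
        rng = np.random.default_rng() if rng is None else rng
        y += noise_std * (rng.standard_normal(kz.size)
                          + 1j * rng.standard_normal(kz.size)) / np.sqrt(2)
    return y

def beamform(y, kz, h_grid):
    """Fourier beamforming: power of y matched to exp(-j kz h) for each candidate h."""
    steering = np.exp(-1j * np.outer(h_grid, kz))   # (len(h_grid), n_passes)
    return np.abs(steering.conj() @ y) ** 2 / kz.size ** 2

h_grid = np.arange(-2.5, 4.5, 0.01)                 # stays inside one ambiguity interval
y = pixel_samples([0.0, 3.0], [1.0, 0.8], kz, noise_std=0.02,
                  rng=np.random.default_rng(1))
power = beamform(y, kz, h_grid)

# Two strongest local maxima of the height profile.
is_peak = (power[1:-1] > power[:-2]) & (power[1:-1] > power[2:])
peaks = np.flatnonzero(is_peak) + 1
est_heights = np.sort(h_grid[peaks[np.argsort(power[peaks])[-2:]]])
rayleigh = 2 * np.pi / (kz.max() - kz.min())        # nominal height resolution (m)
print(est_heights, rayleigh)
```

For this geometry the nominal Rayleigh height resolution is about 1 m, and heights are unambiguous only over roughly $2\pi/\Delta k_z \approx 7$ m, which is why the candidate height grid is limited to one ambiguity interval.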

In this paper, we develop and compare two techniques, one a full 3D method and one 2D+1D based, for achieving accurate 3D scene reconstructions from sparse, wide-angle measurement apertures. Both techniques rely on some basic properties of scattering physics, and both exploit signal sparsity (in the reconstruction domain) of radar scenes. In particular, we are interested in imaging man-made structures under high-frequency radar operation. Under these operating conditions, scenes are dominated by a small number of isolated scattering centers; dominant returns result from objects such as corner or plate reflectors made from electrically conductive material (see, e.g., [24]). The first algorithm, $\ell_p$ regularized least-squares (LS) processing, is a full 3D approach, requires only knowledge of the flight geometry, and is applicable to image formation in arbitrary collection geometries. In this paper we will use the term image formation to denote both 2D and 3D radar scene reconstructions. In addition, since collected radar data can be interpreted as samples in the 3D Fourier transform space of the scene, matrix-vector multiplications in the regularized LS algorithm can be replaced by the Fast Fourier Transform (FFT). The regularized LS approach is also known as Basis Pursuit Denoising when $p = 1$ [25], [26]. This approach has been shown to produce well-resolved 2D SAR image reconstructions over approximately linear flight paths [27]–[29], and it was also used for 3D image reconstruction in [30]–[32]. The $\ell_p$ regularized LS approach is also used in Tomo-SAR to resolve scatterers in the height dimension as an alternative to spectral estimation [19]. For wide-angle 3D SAR reconstruction, a direct implementation of $\ell_p$ regularized LS methods yields a prohibitively large optimization problem; one of the contributions of this paper is the development of a computationally tractable implementation. The second reconstruction algorithm we consider is a Tomo-SAR-based approach adapted to address vehicle-sized 3D reconstruction over very wide azimuth data collections, including full 360° circular SAR; this approach exploits knowledge of scattering sparsity to improve height resolution [32], [33]. It is computationally faster than the first algorithm, but it applies to a more restrictive class of sparse data collection schemes. Anisotropic scattering over wide angles is addressed in both algorithms by using non-coherent subaperture imaging, where scattering is assumed to be isotropic over narrow-angle subapertures.
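The FFT substitution rests on a standard identity: when candidate voxels lie on a uniform grid and the k-space samples lie on the matching uniform frequency grid, multiplying by the Fourier operator is a 3D DFT, and multiplying by its adjoint is an inverse DFT. The NumPy sketch below checks both numerically on a tiny grid. It assumes voxel coordinates that start at zero (a centered voxel grid adds a known linear phase), even grid sizes, the $e^{-j\mathbf{k}\cdot\mathbf{r}}$ sign convention, and k-space samples $2\pi m/(N\Delta)$ for $m = -N/2$ to $N/2 - 1$; the function names and operator layout are illustrative, not the implementation used for the results in this paper.

```python
import numpy as np

def explicit_operator(shape, spacing):
    """Dense Fourier operator, entries exp(-j(kx x + ky y + kz z)), for a voxel grid
    starting at the origin and the matching centered k-space grid (even sizes only)."""
    xs, ks = [], []
    for n, d in zip(shape, spacing):
        xs.append(np.arange(n) * d)                                    # voxel coordinates (m)
        ks.append(2 * np.pi * np.arange(-(n // 2), n // 2) / (n * d))  # k samples (rad/m)
    X = np.stack(np.meshgrid(*xs, indexing="ij"), axis=-1).reshape(-1, 3)
    K = np.stack(np.meshgrid(*ks, indexing="ij"), axis=-1).reshape(-1, 3)
    return np.exp(-1j * (K @ X.T))            # rows: k-space samples, columns: voxels

def fft_forward(alpha_3d):
    """A @ alpha via the 3D FFT, reordered so that k = 0 sits at the grid center."""
    return np.fft.fftshift(np.fft.fftn(alpha_3d))

def fft_adjoint(b_3d):
    """A^H @ b via the inverse 3D FFT (numpy's ifftn includes a 1/N factor, undone here)."""
    return b_3d.size * np.fft.ifftn(np.fft.ifftshift(b_3d))

shape, spacing = (4, 6, 8), (0.10, 0.10, 0.05)
rng = np.random.default_rng(0)
alpha = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
A = explicit_operator(shape, spacing)

b_dense = A @ alpha.ravel()
b_fft = fft_forward(alpha)
print(np.allclose(b_dense, b_fft.ravel()))                            # True
print(np.allclose(A.conj().T @ b_dense, fft_adjoint(b_fft).ravel()))  # True
```

Iterative solvers need both products, so having the adjoint as a single inverse FFT matters as much as the forward FFT.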

We investigate the two wide-angle 3D SAR image formation methods on different sparse data collection geometries. The first collection geometry is a pseudorandom path collection for three polarizations generated by Visual-D electromagnetic simulation software and released as a public dataset by the Air Force Research Laboratory (AFRL) [34]. The second dataset, also released by AFRL, is from an actual 2006 multipass X-band Circular SAR (CSAR) data collection of a ground scene [35]. This dataset consists of eight fully circular paths in azimuth, at eight closely spaced elevation angles with respect to scene center; the data are polarimetric, in that horizontal-horizontal (HH), vertical-vertical (VV), and cross-polarization data are collected. We generate pseudorandom dataset images using the regularized LS algorithm and multipass CSAR dataset images using both algorithms; the Tomo-SAR approach requires multipass data and cannot be applied to the pseudorandom path data. The previously discussed resolution and sidelobe issues that result from sparse measurement apertures are manifest in both of these datasets.

The contributions of this paper can be summarized as follows. First, we propose a technique to process sparse wide-angle data, such as circular SAR data, for object reconstruction; this type of data is becoming increasingly important in persistent surveillance applications. Second, we provide full 3D radar reconstructions using $\ell_p$ regularized sparsity techniques, and provide a tractable algorithm, in terms of memory and computational requirements, for generating full 3D reconstructions from arbitrary, sparse 3D flight paths. Third, we demonstrate the first high-fidelity 3D vehicle reconstructions from an arbitrary curvilinear flight path. Fourth, we successfully demonstrate multi-baseline tomographic SAR for 3D reconstructions of passenger vehicles from airborne measurements using full 360° azimuth data from an operational 0.3 m resolution X-band radar. Finally, we provide an initial comparison of Tomo-SAR and $\ell_p$ regularized LS approaches on both synthetic and measured X-band radar data of vehicles, in terms of both reconstruction performance and computational cost.

An outline of the paper is as follows. First, an overview of the SAR data model is presented in Section II. Section III describes the two collection geometries, pseudorandom and CSAR, and the corresponding datasets considered here. These collection geometries demonstrate some of the challenges presented by such sparse collections. In Section IV, the $\ell_p$ regularized LS imaging algorithm is presented, and in Section V the wide-angle Tomo-SAR algorithm is discussed. Section VI presents reconstructed 3D images of vehicles from both the pseudorandom and CSAR data collection geometries. Finally, Section VII concludes, summarizing the main results of the paper.

II. SAR MODEL

In this section, we briefly review the tomographic SAR model used for reconstruction. We assume that the radar transmits a wideband signal with bandwidth $BW$ centered about a center frequency $f_c$. Such a signal could be an FM chirp signal or a stepped-frequency signal, but other wideband signals can also be used. We also assume that the transmitter is sufficiently far away from the scene so that wavefront curvature is negligible, and we use a plane wave model for reconstruction; this assumption is valid, for example, when the extent of the scene being imaged is much smaller than the standoff distance from the scene to the radar.

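For a radar located at azimuth $\theta$ and elevation $\phi$ with respect to scene center that transmits an interrogating signal, the received waveform, in the far-field case, is given by [2]

$$
r(t; \theta, \phi, \mathrm{pol}) = \left[ \int_{-B_{\bar z}}^{B_{\bar z}} \int_{-B_{\bar y}}^{B_{\bar y}} g\!\left(\bar x = \frac{ct}{2}, \bar y, \bar z; \theta, \phi, \mathrm{pol}\right) d\bar y \, d\bar z \right] * s(t), \tag{1}
$$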

where $c$ is the speed of light, $s(t)$ is a known, bandlimited signal with center frequency $f_c$ and bandwidth $BW$ that represents the transmitted waveform convolved with antenna responses, pol is the polarization of the transmit/receive signal pair, and $*$ denotes convolution. The $\bar x$-coordinate is defined as the radial line from the radar to scene center, and $\bar y$ and $\bar z$ are orthogonal to $\bar x$ and to each other. This coordinate system is a translation in $\bar x$ from scene center and a rotation by $(\theta, \phi)$ of a fixed ground coordinate system $(x, y, z)$, whose origin is at scene center. The scene's reflectivity function is given by $g(\bar x, \bar y, \bar z; \theta, \phi, \mathrm{pol})$, or equivalently, by $g(x, y, z; \theta, \phi, \mathrm{pol})$ in the fixed ground coordinate system. Boundaries of the scene in each dimension are denoted as $B_{(\cdot)}$. Under the far-field assumption, these boundaries are assumed to be sufficiently small that wavefront curvature and range-dependent signal attenuation can be neglected, which means that the scene boundaries are on the order of the size of objects rather than entire large scenes. For large scenes, (1) applies locally around points of interest.

Equation (1) can be interpreted as the Fourier transform of the scene reflectivity function projected onto the $\bar x$-dimension. By the projection-slice theorem [2], this Fourier transform is equivalent to a line along the $\bar x$-axis in the 3D spatial frequency space, or k-space, of the scene reflectivity function. Specifically, the 3D Fourier transform $G(k_x, k_y, k_z)$ of the reflectivity function $g(x, y, z; \theta, \phi, \mathrm{pol})$, observed from angle $(\theta, \phi)$ at polarization pol, is given by

$$
G(k_x, k_y, k_z; \theta, \phi, \mathrm{pol}) = \iiint g(x, y, z; \theta, \phi, \mathrm{pol})\, e^{-j(k_x x + k_y y + k_z z)}\, dx\, dy\, dz. \tag{2}
$$
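The projection-slice step can be checked with a discrete analogue: summing a reflectivity array over $y$ and $z$ (a projection onto $x$, i.e., the case where the range axis is aligned with $x$) and taking a 1D DFT reproduces the $k_y = k_z = 0$ line of the 3D DFT. This is an illustrative sketch with a random array on an axis-aligned grid; other look angles correspond to rotating the coordinate system before projecting.

```python
import numpy as np

rng = np.random.default_rng(0)
g = rng.standard_normal((32, 24, 16)) + 1j * rng.standard_normal((32, 24, 16))  # g[x, y, z]

projection = g.sum(axis=(1, 2))              # integrate over y and z: a function of x only
line_from_projection = np.fft.fft(projection)
line_from_volume = np.fft.fftn(g)[:, 0, 0]   # the k_y = k_z = 0 line of the 3D DFT

print(np.allclose(line_from_projection, line_from_volume))  # True
```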

The frequency support of each measurement is a line segment in $(k_x, k_y, k_z)$ with extent $4\pi BW/c$ rad/m centered at $4\pi f_c/c$ rad/m, and oriented at angle $(\theta, \phi)$. The flight path defines which line segments in k-space are collected, and hence what subset of k-space is sampled. Typically both the frequency variable $f$ along each line segment and the flight path are sampled as $f \to f_j$, $(\theta, \phi) \to (\theta_n, \phi_n)$, so one obtains a set of k-space samples indexed on $(j, n)$ as

$$
\begin{aligned}
k_x^{j,n} &= \frac{4\pi f_j}{c} \cos\theta_n \cos\phi_n, \\
k_y^{j,n} &= \frac{4\pi f_j}{c} \sin\theta_n \cos\phi_n, \\
k_z^{j,n} &= \frac{4\pi f_j}{c} \sin\phi_n.
\end{aligned} \tag{3}
$$
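Equation (3) can be evaluated directly. The sketch below, assuming illustrative frequencies and look angles loosely patterned on the GOTCHA parameters in Section III (not the actual collection geometry), computes the k-space samples for a short constant-elevation arc and checks that each sample lies at radius $4\pi f_j/c$, i.e., on the spherical shells between the inner and outer data domes. The function name is hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)

def kspace_samples(freqs_hz, az_rad, el_rad):
    """k-space coordinates from (3); rows index frequency f_j, columns index pulse n."""
    k = 4 * np.pi * np.asarray(freqs_hz, dtype=float)[:, None] / C  # radius 4*pi*f_j/c
    az = np.asarray(az_rad, dtype=float)[None, :]
    el = np.asarray(el_rad, dtype=float)[None, :]
    kx = k * np.cos(az) * np.cos(el)
    ky = k * np.sin(az) * np.cos(el)
    kz = k * np.sin(el)
    return kx, ky, kz

freqs = np.linspace(9.6e9 - 320e6, 9.6e9 + 320e6, 5)  # 640 MHz band at 9.6 GHz (illustrative)
az = np.deg2rad(np.linspace(0.0, 4.0, 9))             # short 4-degree azimuth arc
el = np.full(az.shape, np.deg2rad(45.0))              # constant-elevation pass
kx, ky, kz = kspace_samples(freqs, az, el)

radius = np.sqrt(kx**2 + ky**2 + kz**2)
print(np.allclose(radius, 4 * np.pi * freqs[:, None] / C))  # True: samples lie on spherical shells
print(radius[-1, 0] - radius[0, 0])                        # radial extent 4*pi*BW/c, about 26.8 rad/m
```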

In order to use tomographic inversion techniques to recover $g$ from k-space measurements, it is often assumed in (2) that the scene reflectivity is isotropic, so that $g(x, y, z; \theta, \phi, \mathrm{pol})$ is not a function of $\theta$ and $\phi$. For narrow-angle measurements, this assumption is generally valid; however, for wide-angle measurements, the isotropic scattering assumption is not valid for most scattering centers in the scene [36], [37]. One approach for reconstruction from wide-angle measurements, and the one adopted in this paper, is to subdivide the measurements into a set of possibly overlapping subapertures and to assume scattering is locally isotropic on each subaperture. Once subaperture reconstructions are obtained, one can then form an overall wide-angle reconstruction by combining the narrow-aperture reconstructions in an appropriate way.
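A simple way to form possibly overlapping subapertures is a sliding window in azimuth. The sketch below is one such partition under stated assumptions: azimuth samples in degrees, a fixed window width (standing in for the assumed persistence angle) and step, both hypothetical parameters, and no wrap-around at 360°, which a full circular collection would need. It returns each subaperture's center angle and the indices of the samples it contains.

```python
import numpy as np

def azimuth_subapertures(az_deg, width_deg, step_deg):
    """Partition azimuth samples into (possibly overlapping) fixed-width windows.

    Returns a list of (center_deg, indices) pairs, where indices selects the
    samples with |az - center| <= width/2. Overlap occurs when step < width.
    This sketch does not wrap around 360 degrees.
    """
    az = np.asarray(az_deg, dtype=float)
    half = width_deg / 2
    centers = np.arange(az.min() + half, az.max() - half + 1e-9, step_deg)
    return [(float(c), np.flatnonzero(np.abs(az - c) <= half)) for c in centers]

az = np.arange(0.0, 360.0, 1.0)     # one sample per degree (illustrative)
subs = azimuth_subapertures(az, width_deg=5.0, step_deg=2.5)
print(len(subs), subs[0][0], subs[0][1])   # 142 2.5 [0 1 2 3 4 5]
```

With a 5° window and a 2.5° step, consecutive subapertures overlap by half their width.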

In particular, we argue that a good way to implement the subaperture combination is to use a Generalized Likelihood Ratio Test (GLRT) approach. We assume scattering at each point $(x, y, z)$ in the scene can be characterized by a limited-angle response centered at azimuth $\theta$ and elevation $\phi$ and with some persistence width in each angular dimension. We treat the persistence angle as fixed and known, and we use this to establish the angular widths of the subapertures used in the data formation. Since the response center angles $(\theta, \phi)$ are unknown, we estimate them using a GLRT formulation: we use a bank of matched filters, each characterized by a center response azimuth and a response width and shape, and compute the response amplitude as

$$
I(x, y, z; \mathrm{pol}) = \max_{(\theta, \phi)} \left| \tilde I(x, y, z; \theta, \phi, \mathrm{pol}) \right|, \tag{4}
$$

where $\tilde I$ denotes the matched filter output. The maximization in (4) over continuous-valued $\theta$ and $\phi$ is approximated by discretizing these two variables. Since backprojection radar image formation can be interpreted as a matched filter for point scattering responses [38], each matched filter output $\tilde I(x, y, z; \theta, \phi, \mathrm{pol})$ is well approximated by the subaperture radar image formed from k-space measurements at discrete center angles $(\theta_j, \phi_j)$ and with fixed azimuth and elevation extent. That is, the approach of forming subaperture radar reconstructions, then combining these reconstructions by taking the maximum over all subapertures, can be interpreted as a GLRT approach to reconstruction of limited-persistence scattering centers. While the approach in (4) assumes that all scattering centers have identical and known persistence, generalizations to variable persistence angles can also be developed [39]. As a side note, each voxel in the image reconstruction is also characterized by the maximizing $(\theta, \phi)$ center angles, providing additional information useful for image visualization [29] or object recognition [40], [41].
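Once subaperture images are available, (4) reduces to a per-voxel maximum of magnitudes over the discretized center angles. The sketch below assumes the subaperture images are stacked in a NumPy array whose first axis indexes the center angles (a hypothetical layout) and also returns the maximizing angle per voxel, the side information mentioned above. The toy inputs are illustrative only.

```python
import numpy as np

def glrt_combine(sub_images, center_angles):
    """Per-voxel maximum over subapertures, as in (4).

    sub_images:    complex array of shape (J, Nx, Ny, Nz), one image per center angle.
    center_angles: array of shape (J, 2) with the (azimuth, elevation) of each subaperture.
    Returns the wide-angle magnitude image (Nx, Ny, Nz) and the maximizing
    (azimuth, elevation) per voxel, shape (Nx, Ny, Nz, 2).
    """
    mags = np.abs(sub_images)
    best = mags.argmax(axis=0)                 # index j of the maximizing subaperture
    return mags.max(axis=0), np.asarray(center_angles)[best]

angles = np.array([[0.0, 45.0], [10.0, 45.0], [20.0, 45.0]])  # illustrative centers (deg)
subs = np.zeros((3, 2, 2, 1), dtype=complex)
subs[0, 0, 0, 0], subs[2, 0, 0, 0] = 1.0, 0.5j   # voxel (0,0,0): strongest near 0 deg
subs[1, 1, 1, 0] = -2.0                          # voxel (1,1,0): strongest near 10 deg
image, best_angles = glrt_combine(subs, angles)
print(image[..., 0])          # magnitudes 1 and 2 at the two active voxels
print(best_angles[1, 1, 0])   # azimuth 10, elevation 45
```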

In the algorithms presented below, we will assume that the available k-space data are partitioned into (possibly overlapping) subapertures, and that reconstructions for each subaperture are obtained using the proposed algorithms. Then, a final wide-angle reconstruction is obtained using (4).

An advantage of this locally isotropic approach is that, for each subaperture, scattering responses are parameterized by only location and amplitude. An alternate approach, considered in [24], [42]–[44], is to adopt models that directly characterize anisotropic scattering. One can then directly estimate scattering centers from the entire wide-angle data using these models. This latter approach may be posed as a classical parametric model order and parameter estimation problem (see, e.g., [24], [44]). Alternately, one can adopt a nonparametric approach in which anisotropic scattering is characterized as a linear combination of dictionary elements that are limited in persistence, and one estimates the amplitudes of a sparse linear combination of dictionary elements; such an approach has recently been proposed in [42]. A related nonparametric approach is to estimate an image at each aspect angle from a sparse linear combination of dictionary elements; the images are not independently formed, but linked through a regularization term that penalizes large changes in pixel magnitudes that are close in aspect [43]. Regularization enforcing sparsity in these nonparametric approaches is similar to the $\ell_p$ reconstruction technique presented in Section IV below. These wide-angle nonparametric approaches result in a (much) larger set of dictionary elements than used in the approach followed here, because anisotropic scattering is characterized by additional parameters such as orientation and persistence angles. In principle, the approaches in [24], [42]–[44] are based on similar assumptions, but represent different algorithmic approaches to estimate the reconstruction. A detailed comparison of these approaches in terms of both computation and reconstruction performance remains a topic for future study.

III. COLLECTION GEOMETRY AND EXAMPLE DATASETS

Before presenting the proposed reconstruction approaches, it is useful to examine some example data collection apertures and the associated 3D reconstruction challenges that result. We will first present and discuss two sparse radar collection geometries and their associated reconstruction objectives. The first dataset considered is synthetically generated data from a pseudorandom flight path developed by researchers at AFRL as a 3D image reconstruction challenge problem [34]; the second dataset is a collection of X-band field measurements from a CSAR radar at eight closely spaced elevations [35].

A. Pseudorandom Flight Path Dataset

The pseudorandom flight path dataset [34], [45] is generated by the Visual-D electromagnetic scattering simulator. The simulator models scattering returns from a radar with center frequency $f_c = 10$ GHz and bandwidth $BW = 6$ GHz. The dataset consists of k-space samples of a construction backhoe vehicle, computed along a continuous, far-field pseudorandom squiggle path in azimuth and elevation. The path is intended to simulate an airborne platform that interrogates the object over a wide range of azimuth and elevation angles, but does so while flying along a 1D curved path that sparsely covers the 2D azimuth-elevation angular sector. Three polarizations are included in the dataset: vertical-vertical (VV), horizontal-horizontal (HH), and cross-polarization (HV).

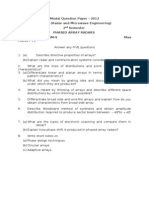

The trace in Figure 1 shows the path as a function of azimuth and elevation angle, defined with respect to a fixed ground plane coordinate system, and Figure 2(a) displays the corresponding k-space data that can be collected by the radar, which is contained between the inner and outer domes that denote the minimum and maximum radar frequency, respectively. The squiggle path is superimposed on the outer dome. The set of k-space data collected along the squiggle path is very sparse with respect to the full data dome. The azimuth and elevation extents of the squiggle path are approximately [66°, 114.1°] and [18°, 42.1°], respectively. This range of nearly 50° in azimuth and 25° in elevation indeed represents wide-angle measurement at X-band; the persistence of many scattering centers at X-band has been reported to be (significantly) lower [36], [37]. In contrast, a filled aperture used to form benchmark images uses samples at every $1/14^\circ$ in this azimuth/elevation sector.

Fig. 1. Sparse squiggle path radar measurements as a function of azimuth (horizontal axis, approximately 70° to 110°) and elevation angle (vertical axis, approximately 15° to 45°), in degrees.

B. Multipass Circular SAR Dataset

The second sparse dataset we consider is the multipass CSAR data from the AFRL GOTCHA Volumetric SAR Data Set, Version 1.0 [35], [46]. This dataset consists of sampled, dechirped radar return values that have been transformed to the form of $G(k_x, k_y, k_z; \theta, \phi, \mathrm{pol})$ in (2). The data are fully polarimetric from eight 360° CSAR passes. The planned nominal collection consists of passes at constant, equally spaced elevation angles with respect to scene center, with elevation difference $\Delta\phi_{\mathrm{el}} = 0.18^\circ$, in the range [43.7°, 45°]. The actual flight path is not perfectly circular, as shown in Figure 3, and the passes are not at perfectly constant and equally spaced elevations. The center frequency of the radar is $f_c = 9.6$ GHz, and the bandwidth of the radar is 640 MHz, significantly lower than that of the squiggle path collection. Figure 2(b) shows the k-space data collected by the eight GOTCHA passes. The k-space radial extent of the collected data, from the outer dome to the inner dome and dictated by radar bandwidth, is seen to be significantly smaller than in the squiggle path case. Figure 2(b) also illustrates that the GOTCHA k-space data is very limited in elevation extent, in contrast to the squiggle path.

(a) Squiggle Path; (b) CSAR Path

Fig. 2. Data domes of all k-space data that can be collected by a radar for (a) the pseudorandom synthetic squiggle path backhoe dataset, and (b) the GOTCHA dataset; units are in rad/m. Support of the k-space data is contained between the inner and outer domes. Inner and outer domes show the minimum and maximum radar interrogating frequencies. The outlines on the outer domes show the locations of the sparse k-space data collected, which extends from the outline radially to the inner dome.
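The bandwidth comparison for the two datasets can be quantified with the radial k-space extent $4\pi BW/c$ from Section II, together with the standard Fourier relation that nominal range resolution is $2\pi$ divided by that extent, i.e., $c/(2BW)$. The short calculation below is a sketch: the resolution values follow from that relation without windowing and are not reported measurements, and the function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light (m/s)

def radial_extent(bw_hz):
    """Radial k-space extent 4*pi*BW/c (rad/m) of each measured line segment."""
    return 4 * math.pi * bw_hz / C

def nominal_range_resolution(bw_hz):
    """2*pi divided by the radial extent, i.e. c / (2 BW), in meters (no windowing)."""
    return 2 * math.pi / radial_extent(bw_hz)

for name, bw in [("squiggle path", 6e9), ("GOTCHA", 640e6)]:
    print(f"{name:13s} extent {radial_extent(bw):6.1f} rad/m, "
          f"resolution {100 * nominal_range_resolution(bw):5.1f} cm")
```

This prints about 251.5 rad/m and 2.5 cm for the squiggle path versus about 26.8 rad/m and 23.4 cm for GOTCHA, a factor of 9.375 in both quantities.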

Fig. 3. Actual GOTCHA passes. Scale is in meters.

IV. $\ell_p$ REGULARIZED LEAST-SQUARES IMAGING ALGORITHM

In this section we present the first of two 3D imaging algorithms; this algorithm applies to general data collection scenarios, but will be used for sparse collections here. The proposed approach assumes that the number of 3D locations in which nonzero backscattering occurs is sparse in the 3D reconstruction space, and applies sparse reconstruction techniques. We pose the reconstruction as an $\ell_p$ regularized least-squares (LS) problem, in which a regularizing term encourages sparse solutions. This $\ell_p$ regularized LS imaging algorithm attempts to fit an image-domain scattering model to the measured k-space data under a sparsity-promoting penalty that acts as a surrogate for the number of nonzero voxels. The algorithm assumes that the complex amplitude response of each scattering center is approximately constant over narrow aspect angles and across the radar frequency bandwidth. The algorithm in this section applies to general apertures; this is in contrast to the second algorithm presented in Section V, which applies to apertures with specific structure.

Define a set of $N$ locations in image reconstruction space as candidate scattering center locations,

$$
C = \{(x_n, y_n, z_n)\}_{n=1}^{N}. \tag{5}
$$

Typically these locations are chosen on a uniform rectilinear grid. The $M \times N$ data measurement matrix is given by

$$
A = \left[ e^{-j(k_{x,m} x_n + k_{y,m} y_n + k_{z,m} z_n)} \right]_{m,n},
$$

where $m$ indexes the $M$ measured k-space frequencies down rows, and $n$ indexes the $N$ coordinates in $C$ across columns.

Under the assumption that scattering center amplitude is constant over the aspect angle extent and radar bandwidth considered, the measured (subaperture) data from the scattering center model (2) can be written in matrix form as

$$
b = A\alpha + \eta, \tag{6}
$$

where $\alpha$ is the $N$-dimensional vectorized 3D image that we wish to reconstruct; it has complex amplitude value $\alpha_n$ in row $n$ if a scattering center is located at $(x_n, y_n, z_n)$ and is zero in row $n$ otherwise. The image vector maps to the 3D image by the relation $I(x_n, y_n, z_n) = \alpha(i)$ if and only if column $i$ of $A$ is from coordinate $(x_n, y_n, z_n)$. The vector $\eta$ is an $M$-dimensional i.i.d. circular complex Gaussian noise vector with zero mean and variance $\sigma_n^2$, and $b$ is an $M$-dimensional vector of noisy k-space radar measurements.
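A small-scale sketch of the measurement model (6): build $A$ entry by entry from its definition for an arbitrary (non-uniform) set of k-space samples and a rectilinear candidate grid, place a few point scatterers in $\alpha$, and add circular complex Gaussian noise with variance $\sigma_n^2$. Grid sizes, k-space sample locations (drawn at random here rather than from a flight path), scatterer positions, noise level, and function names are all illustrative assumptions; at the problem sizes discussed below, $A$ would not be formed explicitly.

```python
import numpy as np

def candidate_grid(nx, ny, nz, dx, dy, dz):
    """Candidate set C from (5): every (x, y, z) combination on a centered grid, shape (N, 3)."""
    axes = [(np.arange(n) - n // 2) * d for n, d in ((nx, dx), (ny, dy), (nz, dz))]
    return np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1).reshape(-1, 3)

def measurement_matrix(kxyz, xyz):
    """A[m, n] = exp(-j(kx_m x_n + ky_m y_n + kz_m z_n)); kxyz is (M, 3), xyz is (N, 3)."""
    return np.exp(-1j * (kxyz @ xyz.T))

rng = np.random.default_rng(0)
xyz = candidate_grid(8, 8, 4, 0.1, 0.1, 0.1)         # N = 256 candidate voxels
M = 120
kxyz = rng.uniform(-15.0, 15.0, size=(M, 3))          # arbitrary, non-uniform k samples (rad/m)
A = measurement_matrix(kxyz, xyz)

alpha = np.zeros(xyz.shape[0], dtype=complex)         # sparse image vector
alpha[[10, 100, 200]] = [1.0, 0.5 - 0.5j, 2.0j]       # three point scatterers
sigma_n = 0.05
eta = sigma_n * (rng.standard_normal(M) + 1j * rng.standard_normal(M)) / np.sqrt(2)
b = A @ alpha + eta                                    # measurement model (6)
print(A.shape, b.shape)   # (120, 256) (120,)
```

Here $M < N$, so (6) is underdetermined; this is the regime in which the sparsity penalty in the reconstruction problem does the work.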

The reconstructed image $\hat\alpha$ is the solution to the sparse optimization problem [27], [28]

$$
\hat\alpha = \arg\min_{\alpha} \left\{ \|b - A\alpha\|_2^2 + \lambda \|\alpha\|_p^p \right\}, \tag{7}
$$

where the p-norm is denoted as

p

, 0 < p 1, and is a

sparsity penalty weighting parameter. Note that the solution

to (7) applies for general A matrices, and the radar ight

path locations that index the rows of A can be arbitrary. In

particular, ight paths such as the squiggle path in Figure 2(a)

can be used. Many algorithms exist for solving (7) or the

constrained version of this problem when p = 1 (e.g. [26],

[47][50]), or in the more general case, when 0 < p 1 (e.g.

Copyright (c) 2010 IEEE. Personal use is permitted. For any other purposes, Permission must be obtained from the IEEE by emailing pubs-permissions@ieee.org.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication.

6

[28], [51]). We use the iterative majorization-minimization algorithm in [28] to solve (7); this algorithm is suitable for the general case $0 < p \le 1$. The algorithm has two loops: an outer loop that iterates on a surrogate function, and an inner loop that solves a matrix inverse using a conjugate gradient algorithm. In our experience, the inner loop terminates after very few iterations when using a Fourier operator, as considered here. Empirical evidence also indicates that this majorization-minimization algorithm terminates faster than a split Bregman iteration approach [50]. An outline of the majorization-minimization algorithm implementation is provided in the Appendix.

For algorithm implementation, define a uniform rectilinear grid on the $x$, $y$, and $z$ spatial axes with voxel spacings $\Delta x$, $\Delta y$, and $\Delta z$, respectively. Let the set of candidate coordinates $C$ in (5) consist of all combinations of $(x, y, z)$ coordinates from the partitioned axes (i.e., their Cartesian product); then $C$ defines a uniform 3D grid on the scene. If, in addition, the k-space samples lie on a uniform 3D frequency grid centered at the origin, the operator $A$ can be implemented using the computationally efficient 3D Fast Fourier Transform (FFT). In many scenarios, including the one here, the measured k-space samples are not on a uniform grid, and the FFT cannot be used directly; instead, an interpolation step followed by an FFT is needed. An alternative approach would be to use Type-2 nonuniform FFTs (NUFFTs) as the operator $A$ to process data directly on the nonuniform k-space grid, at added computational cost [52], [53]. Nonuniform FFT algorithms require an interpolation step that is executed each time the operator $A$ is evaluated, whereas in the FFT implementation, interpolation occurs only once and the interpolated data become $b$. When an iterative algorithm is used to solve (7), as here, performing the interpolation only once can result in significant computational savings. Our empirical results on the X-band data sets considered here suggest that nearest-neighbor interpolation yields well-resolved images at low computational cost, so it is adopted here.
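The following Python/NumPy sketch makes the two-loop structure concrete. It solves a smoothed version of (7) by majorization-minimization, with a conjugate gradient inner loop. It assumes the k-space data have already been interpolated onto the uniform FFT grid, so that $A$ is modeled as a unitary 3D FFT followed by a 0/1 sampling mask. The smoothing constant `eps`, the weighted-quadratic surrogate, and all function names are illustrative assumptions, and the details may differ from the algorithm of [28] outlined in the Appendix.

```python
import numpy as np


def masked_fft_operators(mask):
    """A = S F: unitary 3D FFT followed by a 0/1 k-space sampling mask S (assumed model)."""
    def forward(z):
        return mask * np.fft.fftn(z, norm="ortho")

    def adjoint(y):
        return np.fft.ifftn(mask * y, norm="ortho")

    return forward, adjoint


def conjugate_gradient(apply_m, rhs, x0, tol=1e-6, max_iter=50):
    """Inner loop: solve M x = rhs for a Hermitian positive-definite operator M."""
    x = x0.copy()
    r = rhs - apply_m(x)
    d = r.copy()
    rs = np.vdot(r, r).real
    stop = tol * np.sqrt(np.vdot(rhs, rhs).real)
    for _ in range(max_iter):
        if np.sqrt(rs) <= stop:
            break
        md = apply_m(d)
        alpha = rs / np.vdot(d, md).real
        x = x + alpha * d
        r = r - alpha * md
        rs_new = np.vdot(r, r).real
        d = r + (rs_new / rs) * d
        rs = rs_new
    return x


def lp_regularized_ls(b, mask, lam, p=1.0, eps=1e-6, outer_iter=30, tol=1e-4):
    """Minimize ||b - A z||_2^2 + lam * sum((|z|^2 + eps)^(p/2)) by majorization-minimization.

    Each outer step majorizes the (concave in |z|^2) penalty at the current iterate by a
    weighted quadratic, then solves (A^H A + (lam * p / 2) W) z = A^H b with CG.
    """
    forward, adjoint = masked_fft_operators(mask)
    rhs = adjoint(b)
    z = rhs.copy()  # start from the masked inverse-FFT image
    for _ in range(outer_iter):
        w = (np.abs(z) ** 2 + eps) ** (p / 2 - 1)  # surrogate weights from current iterate

        def apply_m(v, w=w):
            return adjoint(forward(v)) + (lam * p / 2) * w * v

        z_new = conjugate_gradient(apply_m, rhs, z)
        change = np.linalg.norm(z_new - z) / max(np.linalg.norm(z), 1e-12)
        z = z_new
        if change < tol:
            break
    return z
```

Each inner conjugate gradient step applies $A^H A$ with one forward and one inverse FFT, so it costs $O(N \log N)$ rather than $O(MN)$. As a usage check, `lp_regularized_ls(b, mask, lam=0.01, p=1.0)` can be applied to a masked FFT of a few synthetic point scatterers to confirm that the largest recovered voxels fall at the scatterer locations.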

Implementing the optimization algorithm that solves (7) for large-scale problems can be challenging from both a memory and a computational standpoint. Iterative algorithms, like the one used here, typically store the data vector $b$ as well as the current iterate of the image vector and a gradient of the same dimension. For example, in the simulations below, we reconstruct a scene with $N = 182 \times 250 \times 252 \approx 1.1 \times 10^7$ voxels to cover a single vehicle. So, at the very least, it would be necessary to store the data vector in addition to two single- or double-precision vectors in $1.1 \times 10^7$-dimensional complex space. For algorithms that use a conjugate gradient approach to calculate matrix inverses, it is also necessary to store a conjugate direction vector of the same dimension $N$, and a Newton-Raphson approach requires storing a Hessian of dimension $N \times N$. During each iteration of an algorithm, it is commonly necessary to evaluate the operator $A$ and its adjoint. These operations can become very computationally expensive as the problem size grows, and may result in a computationally intractable algorithm unless a fast operator such as the FFT is employed.
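The storage figures above follow from simple arithmetic. The sketch below assumes 16 bytes per complex double-precision entry and 8 bytes per complex single-precision entry, and counts only length-$N$ vectors. The particular set of stored vectors is an illustrative choice.

```python
N = 182 * 250 * 252  # voxels in the backhoe reconstruction grid

BYTES = {"complex128": 16, "complex64": 8}  # two 8- or 4-byte floats per entry


def vector_storage_gib(num_vectors, dtype="complex128"):
    """Storage, in GiB, for num_vectors length-N complex vectors."""
    return num_vectors * N * BYTES[dtype] / 2**30


iterate_and_gradient = vector_storage_gib(2)  # current iterate + gradient
with_cg_direction = vector_storage_gib(3)     # ... plus a conjugate-direction vector
hessian_bytes = N**2 * BYTES["complex128"]    # dense N x N Hessian (Newton-Raphson)

print(f"N = {N:,}")
print(f"iterate + gradient: {iterate_and_gradient:.2f} GiB")
print(f"with CG direction:  {with_cg_direction:.2f} GiB")
print(f"dense Hessian:      {hessian_bytes / 2**50:.1f} PiB")
```

Under these assumptions, two complex double-precision vectors occupy about 0.34 GiB and three occupy about 0.51 GiB. A dense $N \times N$ Hessian would need roughly 2 PiB, which is why Newton-type methods are impractical at this scale.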

Specifically, since $A$ is an $M \times N$ matrix, direct multiplication by $A$ requires $MN$ multiplications and additions per evaluation. In examples using the squiggle path with nine subapertures, the average of the nine values of $M$ is approximately $10^5$, so $MN \approx 10^{12}$ operations. After the initial interpolation, an FFT implementation of $A$ requires $O(D^3 \log D^3)$ operations, where $D$ is the maximum number of samples across the image dimensions. For the imaging example with dimensions $182 \times 250 \times 252$, $D = 252$. For concreteness, assuming that the constant multiple in the FFT operation count is close to unity, the FFT implementation of the operator $A$ requires approximately $252^3 \log_2(252^3) \approx 3.8 \times 10^8$ operations; thus, the FFT implementation yields computational savings of more than a factor of 2500.
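The operation counts above can be checked the same way. This sketch takes the FFT constant as one and uses a base-2 logarithm, which is the choice that reproduces the quoted $3.8 \times 10^8$.

```python
import math

M = 1e5                   # average number of k-space samples per subaperture
N = 182 * 250 * 252       # number of voxels
D = max(182, 250, 252)    # largest image dimension

direct_ops = M * N                    # dense matrix-vector product
fft_ops = D**3 * math.log2(D**3)      # FFT count with a unit constant (assumed)
speedup = direct_ops / fft_ops

print(f"direct: {direct_ops:.2e}, FFT: {fft_ops:.2e}, speedup: {speedup:.0f}x")
```

With $M = 10^5$ and the exact $N$, the ratio comes out near 3000. This is consistent with the savings of more than a factor of 2500 stated above.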

Since the scattering centers in model (2) are anisotropic and polarization dependent, we apply (7) to form an image for each narrow-angle subaperture and polarization, and combine the images using (4). Recent approaches for joint reconstruction of multiple images [54] may also be applied to reconstruct all polarizations for each subaperture simultaneously.
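The combination step can be sketched as follows. The sketch assumes that (4) is a pointwise maximum of voxel magnitudes over the images being combined, which matches Section VI's description of the final images as a maximum over polarizations and aspect angles. The argmax index records which subaperture or polarization supplied each voxel. The helper for keeping the top $X$ dB of voxels assumes a $20\log_{10}$ magnitude scale. Both function names are hypothetical.

```python
import numpy as np


def combine_max(images):
    """Combine subaperture/polarization images by a pointwise maximum of magnitudes.

    images: array-like of shape (K, ...) holding K complex images on a common grid.
    Returns the combined magnitude image and, per voxel, the index of the image
    that attained the maximum (e.g., for coloring voxels by subaperture).
    """
    mags = np.abs(np.asarray(images))
    return mags.max(axis=0), mags.argmax(axis=0)


def top_db_mask(magnitude, db_range):
    """Select voxels within db_range dB of the peak, using 20*log10 of magnitude (assumed)."""
    threshold = magnitude.max() * 10 ** (-db_range / 20)
    return magnitude >= threshold
```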

V. WIDE-ANGLE TOMOGRAPHIC SAR IMAGING

The second approach we consider for 3D reconstruction is a tomographic SAR approach [11]–[20], in which the relative phase information from several closely spaced collection paths is used to estimate the height scattering profile using interferometric techniques. Applying this approach in combination with angle subapertures, one can divide the 3D problem into a set of 2D subaperture image formation problems followed by 1D spectral estimation computations. This approach results in significantly lower computation and memory requirements than the method presented in Section IV. On the other hand, the Tomo-SAR-based approach applies only to multi-baseline images, and thus only to a particular subclass of sparse data collection geometries. As a result, the algorithm proposed in this section does not have the generality of the $\ell_p$ regularized LS approach, but it does reduce computation when the data collection geometry is amenable to it. Tomographic SAR approaches have been considered for forest canopy and building height estimation using relatively narrow-angle linear collection geometries [13]–[16], [18]–[20]. Here, we adapt this approach to full-360° spotlight SAR data collections and demonstrate 3D vehicle reconstructions.

A. Circular SAR as a Sparse Collection Aperture

The CSAR system collects coherent backscatter measurements $r(f; \theta_i, \phi_\ell, \mathrm{pol})$ on circular apertures parameterized by azimuth angles $\{\theta_i\}$ covering $[0, 2\pi]$ and at a small set of $L$ (e.g. 2–15) elevation angles $\{\phi_\ell\}$. The backscatter measurements $r(f_j; \theta_i, \phi_\ell, \mathrm{pol})$ are collected at a discrete set of frequency samples $\{f_j\}$. The radar measurements $\{r(f_j; \theta_i, \phi_\ell, \mathrm{pol})\}$ correspond to samples of $G(k_x, k_y, k_z; \theta_i, \phi_\ell, \mathrm{pol})$ on a two-dimensional conical manifold at the points $k_x^{j,n}$, $k_y^{j,n}$, and $k_z^{j,n}$ from (3) (see Figure 2(b)), where the aspect indices $(i, \ell)$ map to the single index $n$ in (4). The reconstruction problem is to estimate the three-dimensional reflectivity function of the spotlighted scene


$g(x, y, z)$ from the set of radar returns $\{r(f_j; \theta_n, \phi_n, \mathrm{pol})\}$ collected by the radar.

As before, we adopt a subaperture imaging approach: for each elevation, we divide the azimuth measurements into $M$ subapertures, each centered at azimuth $\theta_m$ and with a fixed, user-selected azimuth extent (typically 5°–20° for X-band data). The $m$-th set of subaperture data, for $1 \le m \le M$, is thus a set of $L$ elevation passes at elevations $\phi_\ell$, centered at azimuth $\theta_m$.

Rather than store the k-space data directly, we can provide compact image products matched to scatterers with limited persistence, while maintaining a one-to-one correspondence with the original k-space data. These image products are 2D ground-plane ($z = 0$) image sequences $\{I(x, y, 0; \theta_m, \phi_\ell, \mathrm{pol})\}_m$, where each image is the output of a filter matched to a limited-persistence reflector over the azimuth angles in the azimuth window $W_m(\theta)$. Specifically, the $m$-th subaperture images are constructed as

$$I(x, y, 0; \theta_m, \phi_\ell, \mathrm{pol}) = \mathcal{F}^{-1}_{(x,y)}\left\{ G\left(k_x, k_y, \sqrt{k_x^2 + k_y^2}\,\tan(\phi_\ell); \theta_m, \phi_\ell, \mathrm{pol}\right) W_m\left(\tan^{-1}\frac{k_y}{k_x}\right) \right\}, \quad 1 \le \ell \le L,$$

where $\mathcal{F}^{-1}_{(x,y)}$ is the 2D inverse Fourier transform, and the azimuthal window function $W_m(\theta)$ is defined as

$$W_m(\theta) = \begin{cases} W\left(\dfrac{\theta - \theta_m}{\Delta}\right), & -\Delta/2 < \theta - \theta_m < \Delta/2, \\ 0, & \text{otherwise}. \end{cases} \qquad (8)$$

Here, $\theta_m$ is the center azimuth angle for the $m$-th window, and $\Delta$ describes the hypothesized azimuth persistence width. The window function $W(\cdot)$ is an invertible tapered window used for cross-range sidelobe reduction; typical choices are the Hamming or Taylor windows commonly used in SAR imaging. Each image can be modulated to baseband and sampled at a lower resolution in $(x, y)$ without causing aliasing. Each baseband ground image $I_B(x, y, 0; \theta_m, \phi_\ell, \mathrm{pol})$ is calculated as

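$$I_B(x, y, 0; \theta_m, \phi_\ell, \mathrm{pol}) = I(x, y, 0; \theta_m, \phi_\ell, \mathrm{pol})\, e^{-j\left(k^0_{x,m} x + k^0_{y,m} y\right)}, \qquad (9)$$

where the center frequency $(k^0_{x,m}, k^0_{y,m})$ is determined by the center aperture $\theta_m$, the mean elevation angle $\bar{\phi}$, and the center frequency $f_c$:

$$k^0_{x,m} = \frac{4\pi f_c}{c}\cos\bar{\phi}\cos\theta_m, \qquad k^0_{y,m} = \frac{4\pi f_c}{c}\cos\bar{\phi}\sin\theta_m.$$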
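Below is a minimal sketch of the baseband modulation in (9). It assumes the $e^{-j(\cdot)}$ demodulation convention, in which the subaperture image carries a carrier $e^{+j(k^0_{x,m}x + k^0_{y,m}y)}$ that is removed. It also assumes a ground-plane image stored with rows indexed by $y$ and columns by $x$. The function names are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s


def center_wavenumbers(fc, theta_m, phi_bar):
    """(k0_x, k0_y) for a subaperture centered at azimuth theta_m, mean elevation phi_bar (rad)."""
    k = 4 * np.pi * fc / C * np.cos(phi_bar)
    return k * np.cos(theta_m), k * np.sin(theta_m)


def to_baseband(image, x, y, fc, theta_m, phi_bar):
    """Demodulate a ground-plane image I(x, y) (rows indexed by y, columns by x) to baseband."""
    k0x, k0y = center_wavenumbers(fc, theta_m, phi_bar)
    xx, yy = np.meshgrid(x, y)  # default 'xy' indexing: shape (len(y), len(x))
    return image * np.exp(-1j * (k0x * xx + k0y * yy))
```

For instance, take hypothetical values $f_c = 9.6$ GHz, $\bar{\phi} = 45°$, and $\theta_m = 30°$. Demodulating a pure carrier image then returns an image of ones, which is a quick check of the sign convention.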

An important property of this subaperture imaging approach is that Nyquist sampling of $(x, y)$ in the subaperture images is dictated by the baseband downrange and crossrange k-space extents. The image sample spacing is therefore (much) coarser than if the full SAR image were formed using all k-space data jointly [21]. For a modest azimuth window extent $\Delta$ in radians, the Nyquist sampling in downrange is dictated by the inverse of the radar bandwidth, $1/BW$, and the crossrange sampling by $1/(\Delta(f_c + BW/2))$. These sample spacings are much coarser than the $1/(2(f_c + BW/2))$ spacing that would be needed for the full circular SAR k-space data. The result is a significantly smaller storage requirement for CSAR imagery data.
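The spacings quoted above are given up to a common scale factor. The sketch below assumes the two-way wavenumber $4\pi f/c$ used in the expressions for $k^0_{x,m}$ and $k^0_{y,m}$, so each Nyquist spacing is $2\pi$ divided by the corresponding k-space extent. Like the text, it ignores the $\cos\bar{\phi}$ ground-plane projection. The example parameter values are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def nyquist_spacings(fc, bw, delta):
    """Approximate Nyquist spacings in meters.

    fc, bw: center frequency and bandwidth in Hz; delta: azimuth window extent in rad.
    Returns (downrange, crossrange) spacings for one baseband subaperture image and
    the spacing needed for the full circular-aperture k-space disk.
    """
    f_max = fc + bw / 2
    downrange = C / (2 * bw)              # k-extent 4*pi*bw/c
    crossrange = C / (2 * delta * f_max)  # small-angle arc length delta*4*pi*f_max/c
    full_csar = C / (4 * f_max)           # disk diameter 8*pi*f_max/c
    return downrange, crossrange, full_csar


downrange, crossrange, full = nyquist_spacings(9.6e9, 640e6, math.radians(5.0))
```

With $f_c = 9.6$ GHz, $BW = 640$ MHz, and $\Delta = 5°$ (hypothetical values), this gives about 0.23 m downrange and 0.17 m crossrange. The full circular aperture would instead require about 7.6 mm.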

B. Tomographic SAR

We next present a method for using the set of ground-plane images $I_B(x, y, 0; \theta_m, \phi_\ell, \mathrm{pol})$ to construct three-dimensional reflectivity functions for the set of subapertures centered at $(\theta_m, \bar{\phi})$.

The input to the wide-angle Tomo-SAR algorithm is a set of baseband-modulated ground-plane images $\{I_B(x, y, 0; \theta_m, \phi_\ell, \mathrm{pol})\}$ at a given subaperture centered at $\theta_m$, formed from data collected at elevation cuts $\phi_\ell$. We process each subaperture separately. For each subaperture, denote the image sequence as $\{I(x, y; \phi_\ell, \mathrm{pol})\}_{\ell=1}^{L}$ and consider, without loss of generality, $\theta_m = 0$. We consider a finite (and small) number, $P$, of scattering centers at each resolution cell $(x, y)$ and reparameterize the scene reflectivity $g(x, y, z)$ as

$$g_p(x, y) \triangleq g(x, y, h_p(x, y)), \qquad (10)$$

where $g_p(x, y)$ denotes the complex-valued reflectivity of the scattering center at location $(x, y, h_p(x, y))$. In general, the number of scattering centers per resolution cell varies spatially and needs to be estimated from the data. The ground-plane image for elevation $\phi_\ell$ can be written as

$$I(x_l, y_l; \phi_\ell, \mathrm{pol}) = s(x, y) \ast \sum_p g_p(x, y)\, e^{-j \tan(\phi_\ell) k^0_x h_p(x, y)}\, e^{-j x k^0_x}, \qquad (11)$$

where $\ast$ denotes 2D convolution and $s(x, y)$ is the inverse Fourier transform of the 2D windowing function used in imaging (i.e., the 2D point spread function of the imaging operator). The quantity $k^0_x = (4\pi f_c / c)\cos(\bar{\phi})$ is the center frequency used in baseband modulation. The ground locations $(x, y, h_p(x, y))$ and the image coordinates $(x_l, y_l)$ are related through layover:

$$x_l = x + \tan(\phi_\ell)\, h_p(x, y), \qquad y_l = y. \qquad (12)$$

We assume that the difference between the elevation angles of the different passes is small enough that, for each elevation pass, the scattering center $(x, y, h(x, y))$ falls in the same resolution cell $(x_l, y_l)$. For practical object or scene heights, radar point spread functions, and elevation diversity, this assumption is generally satisfied. The baseband images from each pass can then be modeled as

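$$I(x_l, y_l; \phi_\ell, \mathrm{pol}) = \sum_p \tilde{g}_p(x_l, y_l)\, e^{-j k^0_x \tan(\phi_\ell)\, h_p(x_l, y_l)}, \qquad (13)$$

where $\tilde{g}_p(x_l, y_l) \triangleq \left[ s(x, y) \ast \left( g_p(x, y)\, e^{-j x k^0_x} \right) \right]_{(x_l, y_l)}$. This can be expressed as a sum-of-complex-exponentials model

$$I(x_l, y_l; \phi_\ell, \mathrm{pol}) = \sum_p \tilde{g}_p(x_l, y_l)\, e^{-j k_p(x_l, y_l) \tan(\phi_\ell)}, \qquad (14)$$

where the frequency factor $k_p$ is given by

$$k_p(x_l, y_l) = \frac{4\pi f_c \cos(\bar{\phi})}{c}\, h_p(x_l, y_l). \qquad (15)$$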


In general, the $L$ elevation measurements in (14) are not equally spaced. For example, the GOTCHA CSAR passes have a planned (ideal) equally spaced separation of 0.18° in elevation. The actual flight paths differ from the planned paths, however, with the mean elevations of the eight passes at 44.27°, 44.18°, 44.1°, 44.01°, 43.92°, 43.53°, 43.01°, and 43.06°. In addition, the elevation varies as the aircraft circles the scene, as shown in Figure 3, so the elevation spacing changes as a function of subaperture index. Note that the only elevation-angle dependence in (14) is in the phase term.

We see from (14) that, for each pixel $(x_l, y_l)$, the problem of estimating the number $P$ and heights $h_p$ of scattering centers from $\{I(x, y; \phi_\ell, \mathrm{pol})\}_{\ell=1}^{L}$ is one of estimating a set of complex exponentials from $L$ measurements; see also [11]–[20], [55].

In Tomo-SAR, the number of scattering centers in a resolution cell must be estimated before spectral estimation methods can be applied to estimate height parameters. This is a model order selection problem, and several methods exist for it [55]–[57]. Model order selection in the context of Tomo-SAR has been treated extensively in the literature (see e.g. [17], [20]). In a recent study [21] using CSAR X-band data of vehicles, the estimated model order was 1 in a large majority of cases. When the model order was greater than 1, one dominant (large-amplitude) scattering center was often seen. Thus, the complex exponential signal in (14) is sparse, typically with only one scattering center in the height dimension. This suggests that the estimation bias resulting from forcing the model order to be 1 may be small for a large fraction of pixels. Choosing a model order of 1 also presents a computational advantage. For the single-exponential case, the maximum likelihood estimator of the frequency in white measurement noise is given by the peak of the Fourier transform of the data, which is easy to compute. We thus adopt this model order approximation. For each pixel $(x_l, y_l)$, we estimate the single dominant height location $k_1(x_l, y_l)$ as the peak of the Fourier transform of the $L$ values $I(x_l, y_l; \phi_\ell, \mathrm{pol})$ for that pixel, and calculate the height using (15) [12], [13], [16]. The complex amplitude of the Fourier transform at the peak provides an estimate of the amplitude of the scattering center.
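The per-pixel, model-order-1 estimator can be sketched as below. Because the elevation angles are not equally spaced, the Fourier transform is evaluated directly as a nonuniform sum over $\tan(\phi_\ell)$ on a user-chosen grid of candidate heights, rather than with an FFT. The sketch assumes the phase convention $e^{-j k_p \tan(\phi_\ell)}$ for (14) and takes $\bar{\phi}$ as the mean of the supplied elevations. The function name and height grid are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s


def single_scatterer_height(pixel_values, phis, fc, heights):
    """Model-order-1 height estimate for one pixel from L elevation passes.

    pixel_values: complex I(x_l, y_l; phi_l) for l = 1..L
    phis: elevation angles in radians (need not be equally spaced)
    heights: candidate heights (m) at which the Fourier transform is evaluated
    Returns (height estimate, complex amplitude estimate).
    """
    phis = np.asarray(phis, dtype=float)
    k_per_meter = 4 * np.pi * fc * np.cos(phis.mean()) / C  # k_p = k_per_meter * h_p, eq. (15)
    # Nonuniform Fourier transform over tan(phi); +j undoes the assumed e^{-j k_p tan(phi)} phase.
    steering = np.exp(1j * k_per_meter * np.outer(heights, np.tan(phis)))
    spectrum = steering @ np.asarray(pixel_values) / len(phis)
    best = np.argmax(np.abs(spectrum))
    return heights[best], spectrum[best]
```

With noiseless synthetic data generated from the same model (for example, at the eight GOTCHA mean elevations listed above), the peak falls at the true height and the peak value recovers the complex amplitude. With only a few closely spaced passes, the spectrum can show ambiguous peaks at large heights, so the candidate grid should be restricted to a physically plausible range.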

As previously mentioned, this tomographic SAR approach realizes the 3D reconstruction problem as 2D processing followed by 1D processing. First, 2D images are formed for each azimuth subaperture and each elevation angle. Then, 1D processing is applied to each $L \times 1$ vector obtained by stacking the set of $L$ elevation images and selecting the $L$ values at a pixel location of interest. The processing reduction is afforded by the particular structure of the sparse measurement geometry provided by CSAR collections.

VI. 3D IMAGING RESULTS

We next present 3D SAR image reconstruction results from both the squiggle path and the CSAR datasets, using the algorithms described in the previous two sections. We show both raw voxel reconstructions and smoothed surface-fit reconstructions that are useful for visualization.

A. Squiggle Path Reconstructions

The k-space data from the path shown in Figure 1 are first partitioned into overlapping subapertures, each with an azimuth extent of 10° and full elevation extent, with center azimuths separated by 5° increments. As an example, Figure 4 shows the magnitude of the k-space data in the azimuth range [66°, 76°).

Fig. 4. Magnitude of k-space data subset from azimuth range [66°, 76°). Lighter colors and smaller points are used for smaller magnitude samples; darker colors and larger points are used for larger magnitude samples. Axis units are in rad/m.

Each subset of data is contained in a bounding box with bandwidths in each dimension of $(X_{BW}, Y_{BW}, Z_{BW}) = (142.80, 314.2, 285.6)$ rad/m. At these bandwidths, the critical (Nyquist) spatial sample spacings are $(\Delta x, \Delta y, \Delta z) = (0.044, 0.02, 0.022)$ m in the respective dimensions. Both the image reconstruction and the k-space interpolation are performed on uniformly spaced $182 \times 250 \times 252$ grids. With this grid size, the spatial extent of the reconstructed images is $[-4, 4) \times [-2.5, 2.5) \times [-2.77, 2.77)$ m in the $x$, $y$, and $z$ dimensions, respectively. Each subset of k-space data is interpolated using nearest-neighbor interpolation. In simulations not presented here, more accurate interpolation using Epanechnikov and Gaussian kernels produced nearly identical images, but at much higher computational cost.
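The grid parameters above follow from the Nyquist relation $\Delta = 2\pi / BW$ applied to each bounding-box bandwidth, and the extents from the grid size times the spacing. The sketch below reproduces that arithmetic. It also shows one way to perform nearest-neighbor gridding of nonuniform k-space samples. Averaging samples that fall in the same cell is an assumed collision rule, and the function names are hypothetical.

```python
import numpy as np


def critical_spacing(bandwidths):
    """Spatial Nyquist spacing (m) for k-space bounding-box bandwidths (rad/m)."""
    return 2 * np.pi / np.asarray(bandwidths, dtype=float)


def nearest_neighbor_grid(k_points, values, k_origin, dk, shape):
    """Snap nonuniform k-space samples to the nearest node of a uniform grid.

    k_points: (M, 3) sample locations; values: (M,) complex samples;
    k_origin: k-space location of grid node (0, 0, 0); dk: (3,) grid spacings.
    Samples landing in the same cell are averaged (an assumed collision rule).
    Returns the gridded data and a boolean mask of occupied cells.
    """
    idx = np.rint((np.asarray(k_points) - k_origin) / dk).astype(int)
    inside = np.all((idx >= 0) & (idx < np.asarray(shape)), axis=1)  # drop out-of-box samples
    flat = np.ravel_multi_index(tuple(idx[inside].T), shape)
    sums = np.zeros(int(np.prod(shape)), dtype=complex)
    counts = np.zeros(int(np.prod(shape)))
    np.add.at(sums, flat, np.asarray(values)[inside])  # unbuffered: repeated cells accumulate
    np.add.at(counts, flat, 1.0)
    grid = np.divide(sums, counts, out=np.zeros_like(sums), where=counts > 0)
    return grid.reshape(shape), (counts > 0).reshape(shape)
```

For example, `critical_spacing([142.80, 314.2, 285.6])` returns approximately (0.044, 0.020, 0.022) m. Multiplying by the grid sizes 182, 250, and 252 gives extents of about 8.0, 5.0, and 5.54 m, matching the intervals quoted above.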

The squiggle path dataset is noiseless. To simulate the effect of radar measurement noise, we corrupt the k-space data with i.i.d. circular complex Gaussian noise with zero mean and variance $\sigma_n^2 = 0.9$. The real and imaginary parts of the k-space data have a mean of approximately zero and a variance, $\sigma_s^2$, of approximately 9. The noise variance is thus chosen so that the signal-to-noise ratio (SNR) is 10 dB, where the SNR in decibels is defined as $10 \log_{10}(\sigma_s^2 / \sigma_n^2)$.
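One way to implement this noise model is sketched below. The text defines $\sigma_s^2$ per real or imaginary component, so the sketch applies the same per-component convention to the noise. That interpretation of $\sigma_n^2$ is an assumption, as is the function name.

```python
import numpy as np


def add_noise_at_snr(data, snr_db, rng=None):
    """Add zero-mean i.i.d. circular complex Gaussian noise at a target SNR (dB).

    SNR is taken as 10*log10(sigma_s^2 / sigma_n^2), where sigma_s^2 is the per-component
    (real or imaginary) data variance; the noise uses the same per-component convention.
    Returns the noisy data and the per-component noise variance.
    """
    rng = np.random.default_rng() if rng is None else rng
    sigma_s2 = 0.5 * (np.var(data.real) + np.var(data.imag))
    sigma_n2 = sigma_s2 / 10 ** (snr_db / 10)
    scale = np.sqrt(sigma_n2)
    noise = scale * (rng.standard_normal(data.shape) + 1j * rng.standard_normal(data.shape))
    return data + noise, sigma_n2
```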

First, Figure 5 shows a side view of a gold-standard benchmark 3D reconstructed backhoe image corresponding to the squiggle path dataset [45]. The image was formed using a windowed 3D inverse Fourier transform of a dense k-space dataset covering the azimuth and elevation range of the squiggle path. This dense data is given every 1/14° in azimuth and elevation angle over an azimuth range of [65.5°, 114.5°] and an elevation range of [17.5°, 42.5°]. The squiggle path k-space data is contained within this benchmark dataset and is very sparse with respect to it (see Fig. 1): the squiggle path dataset consists of only 1.29% of the benchmark data samples.


Fig. 5. Benchmark reconstructed backhoe image using k-space data collected every 1/14° in azimuth and elevation angle over an azimuth range of [65.5°, 114.5°] and an elevation range of [17.5°, 42.5°]. Subfigure (a) displays the reconstructed image superimposed on the backhoe facet model, and (b) shows the reconstructed image without the facet model. Images from [45].

Second, we present image reconstructions from the squiggle path using standard Fourier image reconstruction. Figure 7 shows a reconstructed squiggle path image viewed from the side and top, displaying the top 25 dB magnitude voxels. The structure of the backhoe is highly smoothed and distorted due to high image sidelobes, and backhoe features such as the front scoop are not well localized. The subaperture point spread functions (PSFs) of the sparse squiggle path predict this poor image quality; an example is shown in Figure 6 for one subaperture. The PSF is not well localized and exhibits significant spreading and high sidelobes due to the sparseness of the data.

Fig. 6. Magnitude of the PSF from the squiggle path over azimuth range [66°, 76°). Light colors and small points are used for small magnitude voxels; darker colors and large points are used for large magnitude voxels. Axis units are in meters.

Figure 8 shows the side and top views of a reconstructed squiggle path backhoe image using the $\ell_p$ regularized LS reconstruction algorithm in Section IV; the top 30 dB magnitude voxels are displayed. The images in Figure 8 were formed by first reconstructing 27 3D images, one for each subaperture and polarization; each image is the solution to the optimization problem (7). All images are reconstructed using a norm with $p = 1$ and sparsity parameter $\lambda = 10$, which were selected manually. Automatic selection of $\lambda$ is an ongoing area of research [58]–[60]; here, $p$ and $\lambda$ were chosen empirically through visual inspection of images. Final images are formed by combining the subset images, taking the maximum over polarizations in addition to aspect angles according to (4).

In addition to the scattering point plots displayed in the top of Figure 8, it is possible to accentuate surfaces of 3D reconstructed images for visualization by smoothing image voxels; such visualizations are shown in Figures 8(e) and 8(f)¹. A large array of scientific visualization tools can accomplish this task, such as Maya and ParaView; Maya visualization examples are given in [61]. Here, we apply a Gaussian kernel with diagonal covariance and equal standard deviation, $\sigma$, in each dimension to smooth the voxels. Smoothed images are formed on a grid with the same dimensions as the original grid. To speed up the smoothing, the kernel is given a fixed support within some radius of the grid voxel being smoothed. In Figures 8(e) and 8(f), a standard deviation of $\sigma = 0.4$ m and a grid radius of 3 are used. Voxel magnitude is then displayed using color and transparency coding: blue, transparent colors indicate low relative voxel magnitude, and red, opaque colors indicate large relative voxel magnitude.
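The fixed-support smoothing can be sketched in NumPy as follows. Kernel weights use physical distances, so anisotropic voxel spacings (such as the $0.044 \times 0.02 \times 0.022$ m backhoe grid) are handled directly. Two choices here are assumptions: the support radius is interpreted as a sphere measured in voxels, and the smoothing is applied to voxel magnitudes rather than complex values. The function name is hypothetical.

```python
import numpy as np


def smooth_fixed_support(volume, spacing, sigma, radius):
    """Gaussian-smooth voxel magnitudes with a kernel truncated to a spherical support.

    volume: 3D array of voxel values; spacing: (dx, dy, dz) in m; sigma: kernel std in m;
    radius: support radius in grid voxels. The output has the same shape as the input
    grid; voxels outside the volume are treated as zero.
    """
    mag = np.abs(volume).astype(float)
    padded = np.pad(mag, radius)  # zero padding on every axis
    out = np.zeros_like(mag)
    nx, ny, nz = mag.shape
    total = 0.0
    offsets = range(-radius, radius + 1)
    for i in offsets:
        for j in offsets:
            for k in offsets:
                if i * i + j * j + k * k > radius * radius:
                    continue  # outside the fixed spherical support
                d2 = (i * spacing[0]) ** 2 + (j * spacing[1]) ** 2 + (k * spacing[2]) ** 2
                w = np.exp(-d2 / (2 * sigma**2))
                out += w * padded[radius + i:radius + i + nx,
                                  radius + j:radius + j + ny,
                                  radius + k:radius + k + nz]
                total += w
    return out / total  # normalize so the kernel weights sum to one
```

The loop visits at most $(2r+1)^3$ offsets, each a whole-array shifted add. The cost therefore scales with the support size rather than with the full extent of the Gaussian, which is the speed-up the fixed support provides.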

As can be seen from Figure 8, features in the sparse reconstructions are well resolved. For example, the hood, roof, and front and back scoops are clearly visible, in the correct locations, and do not exhibit the large sidelobe spreading seen in Figure 7. The side panels of the driver cab are not visible, and the arm on the back scoop is not as prominent as in the benchmark in Figure 5. Even so, most backhoe features in the benchmark image are also visible in the squiggle path reconstruction. A small number of artifacts in the image do not lie close to the backhoe, namely below the front and back scoops. These artifacts appear to be due to multiple-bounce effects present in the given scattering data, rather than artifacts of the reconstruction process. In the top view of the backhoe, the group of voxels at the top left also appears in the benchmark image when viewed from an angle not shown in Figure 5. These voxels are also likely the result of multibounce from the back scoop and are not artifacts specific to squiggle path reconstruction.

Simulation results presented above were obtained in MATLAB on a system with an Intel 3 GHz Dual Core Xeon processor and 4 GB of memory. Both the interpolation and the sparse optimization in image reconstruction can be computationally intensive. The nearest-neighbor interpolation method is fast compared to $\ell_p$ regularized LS optimization and took less than 25 seconds to run on each data subset; sparse optimization computations took 17–26 minutes to run on each subaperture. Although not investigated here, it may be possible to adjust stopping criterion tolerances in the algorithm to lower computation times without adversely affecting the reconstructed images.

¹ A movie of this visualization rotating 360° is included in the multimedia file bh_squiggle_vis.avi, which is available for download on http://ieeexplore.ieee.org. During rotation of the backhoe, one side appears more filled than the other due to the limited azimuth extent of the data collection.

Fig. 7. Reconstructed backhoe from standard Fourier image reconstruction using each subaperture image for an SNR of 10 dB. Lighter colors and smaller points are used for smaller magnitude voxels; darker colors and larger points are used for larger magnitude voxels. Subfigures (a) and (b) show a side view of the reconstructed image with and without the backhoe facet model superimposed, respectively; subfigures (c) and (d) show top views of the reconstructed image with and without the backhoe facet model superimposed, respectively. The top 25 dB magnitude image voxels are displayed.

B. Multipass CSAR Reconstructions

We next consider 3D vehicle reconstructions from measured

X-band CSAR data taken over an urban area. Figure 9 shows

a 2D radar ground image obtained from one pass of the CSAR

scene. This is the scene of a parking lot with several vehicles,

including a calibration tophat and a Toyota Camry. Figure 10

shows photographs of the tophat and Camry.

Radar flight location information for the GOTCHA dataset contains sensor location errors. These errors are corrected using the prominent-point (PP) autofocus [10] solution provided with the GOTCHA dataset. In addition, spotlighting is used to reduce computation and memory requirements. These processes are discussed in more detail in [32]. We form 3D reconstructions of two spotlighted areas of the CSAR GOTCHA scene, centered on the tophat and on the Toyota Camry. For the $\ell_p$ regularized LS reconstructions, 5° subapertures from 0° to 360° with no overlap were used, for a total of 72 subaperture images that are combined by (4). Reconstructed $\ell_p$ regularized LS image voxels are spaced at 0.1 m in all three dimensions. The extents of the reconstructed tophat and Camry images in the $(x, y, z)$ dimensions are $[-2, 2) \times [-2, 2) \times [-2, 2)$ and $[-5, 5) \times [-5, 5) \times [-5, 5)$ m, respectively. These dimensions define the k-space bandwidth of the bounding box and the grid used for nearest-neighbor interpolation. The bounding box bandwidth used in both images is 62.8318 rad/m in all dimensions. The interpolation grid inside the bounding box consists of 50 samples for the tophat and 100 samples for the Camry in each dimension. As before, we chose $p$ and $\lambda$ manually to produce qualitatively good reconstructions.

Fig. 9. 2D SAR image of the GOTCHA scene. Image from [46].

Figure 11 shows 3D reconstructions of the tophat and Camry formed by applying traditional Fourier reconstruction techniques to each interpolated subaperture dataset and then combining the subaperture reconstructions using (4). The VV polarization channel is used, and only the top 20 dB of voxels are shown. Lighter colors and smaller points indicate lower magnitude scattering, and larger points with darker colors indicate larger magnitude scattering. These images are very similar to ones generated using filtered backprojection processing. The images have poor resolution, especially in the slant-plane height direction, due to the sparse support of the k-space data in elevation angle; the support window of this collection geometry results in a point spread function with spreading and high sidelobes [62].

Fig. 8. Reconstructed backhoe image using $\ell_p$ regularized LS for an SNR of 10 dB. The top 30 dB magnitude image voxels are displayed. In (a) through (d), lighter colors and smaller points are used for smaller magnitude voxels; darker colors and larger points are used for larger magnitude voxels. In (e) and (f), smoothed visualizations are displayed. The left column of subfigures shows side views of the reconstructed image; in (a), the backhoe facet model is superimposed. The right column of subfigures shows top views of the reconstructed image; in (b), the backhoe facet model is superimposed.

Figure 12 shows three different views of the tophat 3D reconstruction using the $\ell_p$ regularized LS approach. These reconstructions use the VV polarization data with parameter settings $\lambda = 0.01$ and $p = 1$. In contrast to the Fourier images, the top 40 dB of voxels are shown. The reconstruction in Figure 12 clearly shows the circular corner between the base and the cylinder of the tophat (see Figure 10(a)), and this scattering is well localized to the correct location. From the reconstruction, the radius of the tophat is seen to be approximately 1 m, agreeing with its true radius. Furthermore, there are no visible artifacts in the image.

Figure 13 shows $\ell_p$ regularized LS reconstructions of the Toyota Camry for two polarizations (VV and HH). The parameters $\lambda = 10$ and $p = 1$ are used in the reconstructions, and the top 40 dB of scattering centers are shown. To highlight vehicle structure, images are displayed using the smoothing visualization process described in Section VI-A with a Gaussian kernel standard deviation of $\sigma = 0.1$ m. An example of a non-smoothed scatter point plot of the Camry is given in Figure 14. Figures 13(g) through 13(i)² show combined HH and VV polarization images formed by taking the maximum over polarizations in (4), in addition to aspect angle. In all of the images, the outline of the Camry is clearly visible. The upper, curved line is direct scattering from the vehicle itself. The lower curve at 0 m elevation is scattering from the virtual dihedral formed by the ground and the vertical vehicle sides, front, and back. The HH images appear to show more scattering from this virtual dihedral than the VV images, as there is a more pronounced line below the car. There is also some scattering above the windshield in the HH image, which may be an artifact and does not appear in the VV image. In the 3D view, the apparent artifacts in the VV polarization below the front of the car and to its side are scattering from an adjacent vehicle that the spotlighting process does not completely remove.

² A movie of the combined VV and HH polarization visualization rotating 360° is included in the multimedia file sparse_Camry_HH_VV_vis.avi, which is available for download on http://ieeexplore.ieee.org.

Fig. 10. Photographs from the GOTCHA scene: (a) tophat; (b) Camry. Images from [46].

Fig. 11. Traditional Fourier images: (a) tophat; (b) Camry.

Figure 14 illustrates the aspect dependence of the proposed $\ell_p$ regularized LS non-coherent imaging process. Whereas previous figures were color-coded on voxel magnitude, Figure 14 is color-coded on azimuth angle: the color of a voxel indicates the center azimuth angle of the subaperture image it came from. The circle at the base of the Camry shows the azimuth angle of the aircraft with respect to the Camry.

Computations for Figures 12–14 were performed in MATLAB on a system with an Intel 2.8 GHz Pentium D processor and 2 GB of memory. Interpolation time with nearest-neighbor interpolation was negligible; sparse optimization computations took 3–5 minutes on each subaperture.

Fig. 12. $\ell_p$ regularized LS tophat reconstructions with $\lambda = 0.01$ and $p = 1$: (a) 3D view; (b) side view; (c) top view. The top 40 dB magnitude voxels are shown.


(a) 3D view, VV polarization (b) Side view, VV polarization (c) Top view, VV polarization

(d) 3D view, HH polarization (e) Side view, HH polarization (f) Top view, HH polarization

(g) 3D view, VV and HH polarization (h) Side view, VV and HH polarization (i) Top view, VV and HH polarization

Fig. 13. $\ell_p$ regularized LS Camry reconstructions with $\lambda = 10$ and $p = 1$. The top 40 dB magnitude voxels are shown.

In the Tomo-SAR approach, reconstructed scattering centers are not constrained to lie on a grid in the height dimension. To compare this imaging method with $\ell_p$ regularized LS reconstructed images, the data are first interpolated to a grid with 0.1 m voxel spacing in each dimension, the same spacing used in the $\ell_p$ regularized LS reconstructions. A Gaussian kernel with standard deviation $\sigma = 0.1$ m is used for interpolation.
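A minimal sketch of this interpolation step, assuming each off-grid scatterer spreads its magnitude onto the voxel grid with an isotropic 3D Gaussian weight of standard deviation σ = 0.1 m and that contributions are summed. Whether the original implementation sums, takes a maximum, or normalizes the kernel is not stated, and the function name is hypothetical.

```python
import numpy as np


def gaussian_grid(points, amps, x_axis, y_axis, z_axis, sigma=0.1):
    """Spread off-grid scatterers onto a voxel grid with a Gaussian kernel.

    points : (N, 3) scatterer locations in meters
    amps   : (N,) scatterer magnitudes
    ASSUMPTION: voxel v receives sum_n amps[n] * exp(-|v - p_n|^2 / (2 sigma^2)).
    """
    X, Y, Z = np.meshgrid(x_axis, y_axis, z_axis, indexing="ij")
    grid = np.zeros(X.shape)
    for (px, py, pz), a in zip(points, amps):
        d2 = (X - px) ** 2 + (Y - py) ** 2 + (Z - pz) ** 2   # squared distance to scatterer
        grid += a * np.exp(-d2 / (2.0 * sigma**2))
    return grid


# Example: a 0.1 m grid from -0.5 m to 0.5 m in each dimension.
axis = np.arange(-5, 6) * 0.1
```

A scatterer at a height of 0.03 m, for instance, contributes most strongly to the voxel centered at 0 m and weaker amounts to its neighbors.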

Figure 15 shows the results of the Tomo-SAR approach applied to the Camry data after interpolation. The top 20 dB points are shown. The VV and HH polarization images in Figures 15(g) through 15(i)³ are formed by combining the interpolated VV and HH polarization images in the same way as in the $\ell_p$ regularized LS reconstructions. Scattering is assumed to lie above the ground plane in these calculations, so, unlike in the $\ell_p$ regularized LS reconstruction, there are no non-zero voxels below the vehicle. As in the $\ell_p$ regularized LS reconstruction, 72 subaperture image sets were formed, each with 5° azimuth extent, and the image-domain subaperture reconstructions for all polarizations were combined using (4).

³A movie of the combined VV and HH polarization visualization rotating 360° is included in the multimedia file sparse_Camry_HH_VV_vis_Tomo_SAR.avi, which is available for download on http://ieeexplore.ieee.org.

The wide-angle Tomo-SAR algorithm was also implemented

in MATLAB and took less than 1 minute to process each

subaperture.
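The "top 20 dB" and "top 40 dB" displays in Figures 12, 13, and 15 amount to thresholding voxel magnitudes relative to the peak. The sketch below assumes the dynamic range is measured in amplitude decibels, $20\log_{10}(|v|/\max|v|)$; this reading, and the function name, are our assumptions.

```python
import numpy as np


def top_db_mask(image, dynamic_range_db=20.0):
    """True for voxels whose magnitude is within dynamic_range_db of the peak.

    ASSUMPTION: "top X dB" is read as 20*log10(|v| / max|v|) >= -X.
    """
    mag = np.abs(image)
    threshold = mag.max() * 10.0 ** (-dynamic_range_db / 20.0)
    return mag >= threshold
```

Widening the range from 20 dB to 40 dB lowers the amplitude threshold from 10% to 1% of the peak, so more weak voxels are displayed.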

(a) 3D view, VV polarization (b) Side view, VV polarization (c) Top view, VV polarization

(d) 3D view, HH polarization (e) Side view, HH polarization (f) Top view, HH polarization

(g) 3D view, VV and HH polarization (h) Side view, VV and HH polarization (i) Top view, VV and HH polarization

Fig. 15. 3D Camry reconstruction using the wide-angle Tomo-SAR algorithm. The top 20 dB magnitude scatterers are shown.

In comparing the $\ell_p$ regularized LS and Tomographic SAR reconstructions, some qualitative differences are seen. Most notably, the Tomo-SAR-based reconstructions are more filled than the $\ell_p$ regularized LS reconstructions. This is in large part due to the way in which sparsity is enforced in the two techniques: the $\ell_p$ regularized LS method imposes sparsity in the full 3D space, while Tomo-SAR-based methods form standard (non-sparse) 2D images and enforce sparsity only in the 1D height dimension. The 2D image downrange and crossrange resolutions are approximately 0.3 m and 0.2 m, respectively, so a single bright scattering point appears as a 0.3 m × 0.2 m flat disk tilted at 45°. For 3D visualizations, we find the more filled Tomo-SAR reconstructions to be more easily interpretable. For automated post-processing, such as automatic target recognition that treats the reconstructed voxels as features, the smaller number of features provided by the $\ell_p$ regularized LS approach is likely preferable, since these features are less correlated than those produced by the Tomo-SAR technique. In comparing computation, we see that the $\ell_p$ approach requires more computation time for 3D reconstructions than the Tomo-SAR approach does in the present algorithmic implementations. It should be noted that we have not undertaken a dedicated effort at computational optimization, and different relative computation times may be achieved with additional optimization. Finally, we note that the $\ell_p$ regularized LS 3D SAR images do indeed show significant sparsity, which provides further justification for enforcing a model order of 1 in the Tomo-SAR reconstructions, as discussed in Section V.
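To make the "flat disk" remark concrete, the following sketch counts how many cells of a 0.1 m voxel grid a single Tomo-SAR resolution cell can occupy. It assumes the cell is a flat ellipse with full widths 0.3 m (downrange) and 0.2 m (crossrange), and that the 45° tilt rotates the downrange axis out of the ground plane; both are our modeling assumptions. Under them the disk extends about 0.3 cos 45° ≈ 0.21 m both horizontally and vertically, so one scatterer fills several voxels, which is consistent with the more filled appearance of the Tomo-SAR reconstructions.

```python
import numpy as np


def disk_voxel_footprint(res_down=0.3, res_cross=0.2, tilt_deg=45.0,
                         voxel=0.1, n=201):
    """Voxels touched by one tilted, elliptical 2D resolution cell.

    ASSUMPTIONS: the cell is a flat ellipse with full widths res_down and
    res_cross, its downrange axis is tilted tilt_deg out of the ground plane,
    and voxel centers lie on multiples of `voxel`.
    Returns (horizontal extent, vertical extent, number of voxels covered).
    """
    t = np.deg2rad(tilt_deg)
    u = np.linspace(-res_down / 2, res_down / 2, n)     # downrange offset (m)
    v = np.linspace(-res_cross / 2, res_cross / 2, n)   # crossrange offset (m)
    U, V = np.meshgrid(u, v, indexing="ij")
    inside = (U / (res_down / 2)) ** 2 + (V / (res_cross / 2)) ** 2 <= 1.0
    U, V = U[inside], V[inside]
    # Tilted cell in ground-range (x), crossrange (y), and height (z).
    xyz = np.stack([U * np.cos(t), V, U * np.sin(t)], axis=1)
    idx = np.floor(xyz / voxel + 0.5).astype(int)        # nearest voxel index
    n_vox = len(np.unique(idx, axis=0))
    return res_down * np.cos(t), res_down * np.sin(t), n_vox
```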

VII. CONCLUSIONS

We have examined the use of scattering sparsity to improve 3D SAR reconstruction from sparse data collection geometries. We have formulated 3D reconstruction algorithms based on the premise that radar scattering is sparse in the reconstructed 3D spatial domain. The algorithms consider the anisotropic scattering behavior of objects over wide aspect angles and use a GLRT-based approach to noncoherently combine independently calculated subaperture images into a wide-angle reconstruction. Two reconstruction approaches were presented. The first uses a sparsity-constrained regularized least-squares technique to directly compute 3D reconstructions from arbitrary collection geometries; this algorithm is demanding in both computation and memory usage. The second, a Tomo-SAR approach, is tailored to a particular sparse data collection: a multi-elevation Circular SAR collection geometry. This latter approach takes advantage of the particular data collection geometry to partition a 3D reconstruction problem into a set of 2D image formation steps followed by 1D height estimates, yielding savings in both memory and computation. Both methods are effective at significantly reducing the large sidelobe artifacts that are present in traditional Fourier-based or backprojection reconstruction methods.
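The per-pixel 1D height step with model order 1 can be illustrated by a single-scatterer least-squares search over candidate heights. This is a generic estimator given only for illustration, not necessarily the estimator of Section V: it assumes that elevation pass m contributes a phase $\kappa_m z$ to the pixel value with known height wavenumbers $\kappa_m$. The phase model, the symbol $\kappa_m$, and the function name are our assumptions.

```python
import numpy as np


def height_model_order_1(g, kappa, z_grid):
    """Single-scatterer (model order 1) height estimate for one 2D pixel.

    g      : (M,) complex pixel values, one per elevation pass
    kappa  : (M,) height wavenumbers (rad/m); ASSUMED model g_m = a*exp(1j*kappa_m*z)
    z_grid : candidate heights (m)
    Returns the least-squares height and complex amplitude over z_grid.
    """
    steering = np.exp(1j * np.outer(z_grid, kappa))   # (Nz, M) unit-modulus rows
    corr = steering.conj() @ g                         # s(z)^H g for every candidate z
    k = int(np.argmax(np.abs(corr)))                   # best single-scatterer fit
    a_hat = corr[k] / len(g)                           # LS amplitude, since s^H s = M
    return z_grid[k], a_hat
```

Maximizing $|s(z)^H g|$ is equivalent to minimizing the single-scatterer residual $\|g - a\,s(z)\|_2^2$ over both $a$ and $z$, which is why the same correlation also yields the amplitude estimate.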

We presented 3D image reconstructions using both synthetic backscatter measurements of a construction backhoe and Circular SAR X-band radar measurements of an urban ground scene. In the backhoe case, we presented 3D reconstructions using a pseudorandom squiggle flight path that is sparse over a wide-angle aperture in both azimuth and elevation; the