Chapter 3 Discrete Probability Distributions - 2

Uploaded by Victor Chan

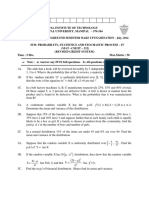

Chapter 3 Discrete Probability Distributions

Chapter Outline

Section 3.1: Discrete Random Variables

Section 3.2: Terminologies in Discrete Random Variables

Probability Mass Function (pmf)

Cumulative Distribution Function (cdf)

Expected Value and Variance ($\mu$ and $\sigma^2$)

Section 3.3: Binomial Distribution

Permutations and Combinations ($_nP_k$ and $_nC_k$)

pmf and cdf of Binomial Distribution

Section 3.4: Poisson Distribution

Section 3.5: Poisson Approximation to the Binomial Distribution

Section 3.6: Practice Problems

Why Discrete Probability Distribution?

Flip a coin 50 times. Observe the number of heads.

What are the probabilities of obtaining 0, 1, 2, ... 50 heads?

Paper production has an average of 2 holes per 100 m² of paper. Consider a production of 1000 m² of paper.

What are the probabilities of obtaining 0, 1, 2,... holes?

Soccer matches between teams A and B. Expected scores between teams A and B are 3:2.

What is the probability that the actual score is 1:0 ?

Section 3.1 Discrete Random Variables

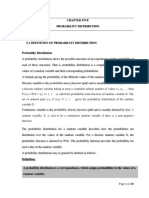

I. Random Variable

A Random Variable (RV) is a numeric quantity that takes different values with specified probabilities

Two types of random variables: Discrete and Continuous

A random variable that takes values in a discrete set of numeric values is a discrete random variable.

A random variable that can take a continuous range of values is a continuous random variable.

II. Discrete Random Variable

Discrete random variable: Takes a discrete set of numeric values.

Example 1:

Roll a fair die once

Possible outcomes would be {1, 2, ..., 6}, which is a set of discrete values.

Let X be the value of the outcome. X is a discrete random variable.

Pr(X=1) = Pr(X=2) = ... = Pr(X=6) = 1/6

III. Continuous Random Variable

Continuous Random Variable: takes any value on a continuum (with an uncountable number of values)

Example 2:

Let the random variable Z be the weight of an individual.

Z is a continuous random variable that can take any positive real value.

Pr(1<Z<1.7)=0.6

N.B.: We will cover Continuous Random Variable in Chapter 4

Section 3.2 Terminologies in Discrete Random Variables

I. Probability Mass Function

A probability mass function (pmf) assigns a probability to each possible value x of the discrete random variable X.

Notation: Pr(X=x).

The probability mass function (pmf) is also known as a probability distribution.

The probability mass function is an analog to the frequency distribution of a sample:

Probability Mass Function: Population

Frequency Distribution: Sample

Example 3:

Toss two fair coins.

Let X be the (discrete) random variable of the # of heads observed.

Compute the probability mass function.

Solutions:

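4 possible outcomes when tossing two coins: HH, HT, TH, TT, each with probability 1/4.

Counting the heads in each outcome gives Pr(X=0) = 1/4, Pr(X=1) = 1/2 and Pr(X=2) = 1/4.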

II. Cumulative Distribution Function

The Cumulative Distribution Function (cdf) of a random variable X at value x is the probability that X is less than or equal to the value x.

Notation: $F(x) = \Pr(X \le x)$.

Properties: F(x) is a non-decreasing function of x that rises from 0 to 1.

Note:

The cdf of a discrete random variable looks like a series of steps; it is called a step function.

As the number of possible values increases, the cdf becomes smoother and smoother.

For a continuous random variable, the cdf is a smooth curve.

Example 3 (Continued):

Toss two fair coins.

Let X be the number of heads observed.

Compute the cumulative distribution function

Solutions:

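pmf:

$$\Pr(X = x) = \begin{cases} 1/4, & x = 0 \\ 1/2, & x = 1 \\ 1/4, & x = 2 \end{cases}$$

The cdf is therefore:

$$F(x) = \Pr(X \le x) = \begin{cases} 0, & x < 0 \\ 1/4, & 0 \le x < 1 \\ 3/4, & 1 \le x < 2 \\ 1, & x \ge 2 \end{cases}$$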
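The pmf and cdf of Example 3 can be checked by brute-force enumeration. The following is a minimal Python sketch, not part of the original notes; the variable names (`outcomes`, `pmf`, `cdf`) are illustrative choices, and exact fractions are used so that no rounding is involved.

```python
from fractions import Fraction
from itertools import product

# Enumerate the 4 equally likely outcomes of tossing two fair coins.
outcomes = list(product("HT", repeat=2))

# pmf: Pr(X = x), where X counts the heads in an outcome.
pmf = {}
for outcome in outcomes:
    heads = outcome.count("H")
    pmf[heads] = pmf.get(heads, Fraction(0)) + Fraction(1, len(outcomes))

# cdf at the possible values: F(x) = Pr(X <= x), a running sum of the pmf.
cdf = {}
running_total = Fraction(0)
for x in sorted(pmf):
    running_total += pmf[x]
    cdf[x] = running_total

for x in sorted(pmf):
    print(f"x={x}  Pr(X=x)={pmf[x]}  F(x)={cdf[x]}")
```

Between the possible values the cdf stays flat, which is why it plots as a step function.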

III. Expected Value

A random variable with many different values is hard to describe directly.

We therefore summarize the random variable using location and spread parameters.

Expected value of X: the sum of the products of all possible values with their corresponding probabilities:

$$E(X) = \mu = \sum_{i=1}^{n} x_i \Pr(X = x_i)$$

where $x_1, \dots, x_n$ are the possible values X can take.

Note:

$\mu$ is also known as the mean or the population mean.

The expected value is the analog of the arithmetic mean of a sample, as it represents the average value of the random variable.

IV. Variance

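Variance of X: the sum of the squared deviations $(x_i - \mu)^2$ of all possible values, weighted by their corresponding probabilities:

$$Var(X) = \sigma^2 = \sum_{i=1}^{n} (x_i - \mu)^2 \Pr(X = x_i)$$

$\sigma^2$ is also called the population variance.

Alternative Formula:

$$\sigma^2 = E(X^2) - \mu^2 = \sum_{i=1}^{n} x_i^2 \Pr(X = x_i) - \mu^2$$

where $x_1, \dots, x_n$ are the possible values X can take.

Note:

The variance of a random variable X is the analog of the sample variance ($s^2$) of a sample.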

V. Expected Value and Variance

Example 3 (Continued):

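X = # of heads from tossing 2 fair coins.

What are the expected value and variance of X?

$$\Pr(X = x) = \begin{cases} 1/4, & x = 0 \\ 1/2, & x = 1 \\ 1/4, & x = 2 \end{cases}$$

Solutions:

$$E(X) = \mu = 0(1/4) + 1(1/2) + 2(1/4) = 1$$

$$Var(X) = \sigma^2 = (0-1)^2(1/4) + (1-1)^2(1/2) + (2-1)^2(1/4) = 1/2$$

Intuition: On average, we expect 1 head from tossing 2 fair coins.

Alternative formula:

$$E(X^2) = 0^2(1/4) + 1^2(1/2) + 2^2(1/4) = 3/2, \quad \sigma^2 = E(X^2) - \mu^2 = 3/2 - 1 = 1/2$$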
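The two variance formulas can be compared numerically on Example 3. This is a small illustrative sketch in Python; the function names (`expected_value`, `variance`, `variance_alternative`) are introduced here and do not come from the notes.

```python
from fractions import Fraction

# pmf of X = number of heads in two fair coin tosses (Example 3).
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

def expected_value(pmf):
    """E(X): sum of x * Pr(X = x) over all possible values x."""
    return sum(x * p for x, p in pmf.items())

def variance(pmf):
    """Var(X): sum of (x - mu)^2 * Pr(X = x)."""
    mu = expected_value(pmf)
    return sum((x - mu) ** 2 * p for x, p in pmf.items())

def variance_alternative(pmf):
    """Var(X) via the alternative formula E(X^2) - mu^2."""
    mu = expected_value(pmf)
    second_moment = sum(x ** 2 * p for x, p in pmf.items())
    return second_moment - mu ** 2

print(expected_value(pmf))        # 1
print(variance(pmf))              # 1/2
print(variance_alternative(pmf))  # 1/2
```

Both formulas agree, as they must; the alternative formula is often quicker by hand because it avoids subtracting the mean from every value.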

Example:

Consider a discrete random variable X with probability distribution given by

a) Show that k=0.1

b) Compute $E(X)$, $E(X^2)$ and $Var(1-4X)$

c) Sketch the CDF of X.

Section 3.3 Binomial Distribution

I. Introduction

Binomial distribution: the probability distribution of the number of successes in n independent experiments, each with probability of success p.

Derivation of Binomial Distribution:

1) Permutations (rearrange objects and values)

2) Factorial

3) Combinations (rearrange objects and values, ordering doesn't matter)

4) Binomial Distribution

II. Permutation

Example 4:

Consider a container with 3 balls (Numbered 1,2 and 3).

Two balls are drawn at random without replacement.

Possible pairs: (1,2), (1,3), (2,3) , (2,1), (3,1), (3,2)

Does the order selected matter in terms of winning?

If the order matters, then there are 6 possible outcomes, => permutations

If the order does not matter, then there are 3 possible outcomes, => combinations

Questions:

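Suppose k objects are to be selected out of n objects ($0 \le k \le n$).

How many ways can we select them, if the objects are selected without replacement and the order matters?

Answer:

There are $n \times (n-1) \times \dots \times (n-k+1)$ ways.

If we denote $_nP_k$ to be the number of permutations of n objects taken k at a time, then

$$_nP_k = n(n-1)\cdots(n-k+1)$$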

III. Permutations and Factorial

Define the term n factorial (n!) as a product of n terms:

$$n! = n \times (n-1) \times (n-2) \times \dots \times 1$$

Example 5: 5! = (5)(4)(3)(2)(1) = 120.

Permutation $_nP_k$ and Factorial:

$$_nP_k = \frac{n!}{(n-k)!}$$

IV. Combination

Ordering doesn't matter => Use combinations, not permutations

Denote $_nC_k$ (or $\binom{n}{k}$, pronounced "n choose k") to be the number of combinations of n objects taken k at a time:

$$_nC_k = \binom{n}{k} = \frac{n!}{k!\,(n-k)!}$$

It represents the number of ways of selecting k objects out of n where the order of selection does not matter.

Example 6:

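Evaluate $_3P_2$ and $_3C_2$.

Solutions:

$$_3P_2 = \frac{3!}{(3-2)!} = \frac{6}{1} = 6, \quad _3C_2 = \frac{3!}{2!\,(3-2)!} = \frac{6}{2} = 3$$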

Example 7:

Consider a lottery from a container with 3 balls (numbered 1, 2 and 3).

(a) What is the # of permutations if two balls are drawn at random without replacement?

(b) What is the # of combinations if two balls are drawn at random without replacement?

Solutions:

Let n=3 balls and k=2 balls drawn from the container. Then (a) $_3P_2 = 6$ permutations and (b) $_3C_2 = 3$ combinations.
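The counts in Examples 4, 6 and 7 can be reproduced by listing the draws explicitly. The sketch below uses Python's standard `itertools` and `math` modules; the variable names are illustrative and not part of the notes.

```python
from itertools import combinations, permutations
from math import comb, factorial, perm

balls = [1, 2, 3]
n, k = len(balls), 2

ordered_draws = list(permutations(balls, k))    # order matters
unordered_draws = list(combinations(balls, k))  # order does not matter

print(ordered_draws)    # the 6 ordered pairs
print(unordered_draws)  # the 3 unordered pairs

# Counting formulas: nPk = n!/(n-k)!  and  nCk = n!/(k!(n-k)!)
n_perm = factorial(n) // factorial(n - k)
n_comb = factorial(n) // (factorial(k) * factorial(n - k))
print(n_perm, perm(n, k))  # both give the number of permutations
print(n_comb, comb(n, k))  # both give the number of combinations
```

Each unordered pair corresponds to k! = 2 ordered pairs, which is why the number of combinations is the number of permutations divided by k!.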

V. Setup of the Binomial Distribution

Example 8:

Toss a fair coin 10 times

Let X be the total number of heads observed.

What is the distribution of X?

Answer: Binomial Distribution!

General Setup of the Binomial Distribution:

If

(1) a fixed number n of independent experiments is performed, and

(2) the probability that the event happens in each experiment is constant at p,

and X is the total number of experiments in which the event happens, then

X follows the Binomial Distribution with parameters n and p.

VI. Probability Mass Function of Binomial Distribution

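The probability mass function (pmf) of the Binomial distribution with parameters n and p is given by

$$\Pr(X = k) = \binom{n}{k} p^k (1-p)^{n-k}, \quad k = 0, 1, \dots, n$$

Each particular sequence with k successes and n-k failures has probability $p^k(1-p)^{n-k}$, and the # of possible outcomes with exactly k successes $= \binom{n}{k}$.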
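As a quick illustration of the binomial pmf, the sketch below evaluates it for the coin of Example 8 (n=10, p=0.5). The helper name `binomial_pmf` is an assumption made for this example; only `math.comb` from the standard library is used.

```python
from math import comb

def binomial_pmf(k, n, p):
    """Pr(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Example 8: X = number of heads in 10 tosses of a fair coin.
n, p = 10, 0.5
pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]

for k, prob in enumerate(pmf):
    print(f"Pr(X={k:2d}) = {prob:.4f}")

# The probabilities over k = 0, ..., n add up to 1.
print("total probability:", sum(pmf))
```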

VII. Binomial Distribution

Example 9:

Toss a fair coin twice and let X be the number of heads.

Find the probability of obtaining x=0,1 and 2 heads.

Solutions:

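n=2 and p=0.5. Hence

$$\Pr(X = x) = \binom{2}{x}(0.5)^x(0.5)^{2-x} = \binom{2}{x}(0.25), \quad x = 0, 1, 2$$

Therefore,

Pr(X=0) = 0.25, Pr(X=1) = 0.50, Pr(X=2) = 0.25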

Example 10:

What is the probability of obtaining exactly one success in 10 experiments, if the probability of success is 0.1?

Solutions: n=10 and p=0.1. Therefore

$$\Pr(X = 1) = \binom{10}{1}(0.1)^1(0.9)^9 \approx 0.3874$$

The above probability is fairly large: on average, one would expect to obtain one success out of the 10 experiments (np = 1).

In fact, X=1 is the mode of the distribution, followed by X=0, with $\Pr(X = 0) = (0.9)^{10} \approx 0.3487$.
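The claim about the mode in Example 10 can be checked by computing the whole pmf and ranking the values. This is an illustrative Python sketch; `binomial_pmf`, `mode` and `ranked` are names chosen here, not taken from the notes.

```python
from math import comb

def binomial_pmf(k, n, p):
    """Pr(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Example 10: n = 10 experiments, success probability p = 0.1.
n, p = 10, 0.1
pmf = {k: binomial_pmf(k, n, p) for k in range(n + 1)}

# The mode is the value of k with the largest probability.
mode = max(pmf, key=pmf.get)
ranked = sorted(pmf, key=pmf.get, reverse=True)

print(f"Pr(X=1) = {pmf[1]:.4f}")  # 0.3874
print(f"Pr(X=0) = {pmf[0]:.4f}")  # 0.3487
print("mode:", mode, " two most likely values:", ranked[:2])
```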

VIII. White Blood Cells Count

White blood cells: cells of the immune system that defend the body against both infectious disease and foreign material

Major types of White Blood Cells

Neutrophil: 70% (most abundant)

Eosinophil

Basophil

Monocyte

Lymphocyte

A high neutrophil count: possible bacterial infection or acute viral infection.

A sample of blood is taken => the # of neutrophils follows a Binomial distribution

Example 11:

Assume that 70% of the white blood cells of a healthy person are neutrophils. If 10 white blood cells are examined, what is the probability mass function of the number of neutrophils?

Solutions:

Let X be the number of neutrophils. Then

X ~ Binomial(n=10, p=0.70), with pmf

$$\Pr(X = k) = \binom{10}{k}(0.7)^k(0.3)^{10-k}, \quad k = 0, 1, \dots, 10$$

Note: Pr(X=10) is very small, at 0.028.

If 10 neutrophils are observed in the sample => an indication of bacterial infection (Chapter 7)
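The pmf in Example 11 can be tabulated directly. The following Python sketch assumes the Binomial(n=10, p=0.70) model stated in the example; the helper `binomial_pmf` and the variable `most_likely` are illustrative names.

```python
from math import comb

def binomial_pmf(k, n, p):
    """Pr(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Example 11: 10 white blood cells, each a neutrophil with probability 0.70.
n, p = 10, 0.70
pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]

for k, prob in enumerate(pmf):
    print(f"Pr(X={k:2d}) = {prob:.4f}")

# The most likely count, and the probability that all 10 cells are neutrophils.
most_likely = max(range(n + 1), key=lambda k: pmf[k])
print("most likely count:", most_likely)
print(f"Pr(X=10) = {pmf[10]:.3f}")  # 0.028
```

Observing 10 neutrophils out of 10 has probability below 3% for a healthy person under this model, which is why such a sample is treated as unusual.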

IX. Binomial Distribution --- Shape of the Binomial Distribution

1. Binomial Distribution is Symmetric when p=0.5

Binomial distribution with p=0.5 and various values of n:

Binomial distribution is symmetric when p=0.5. That is,

Pr(X=k) = Pr(X=n-k) for k = 0, 1, 2, ..., n

2. When p<0.5, the distribution is right-skewed.

3. When p>0.5, the distribution is left-skewed.

[Figure: Binomial distributions with n=4 and n=10]
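The three shape statements can be checked numerically. The sketch below measures skewness as the third standardized moment computed from the pmf; that particular measure is an assumption made for this illustration (the notes do not define one), with a positive value meaning right-skewed and a negative value meaning left-skewed.

```python
from math import comb, isclose

def binomial_pmf(k, n, p):
    """Pr(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def skewness(n, p):
    """Third standardized moment of Binomial(n, p), computed from the pmf."""
    pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]
    mu = sum(k * q for k, q in enumerate(pmf))
    var = sum((k - mu) ** 2 * q for k, q in enumerate(pmf))
    third = sum((k - mu) ** 3 * q for k, q in enumerate(pmf))
    return third / var**1.5

n = 10
for p in (0.2, 0.5, 0.8):
    print(f"p={p}: skewness = {skewness(n, p):+.3f}")

# Symmetry when p = 0.5: Pr(X = k) equals Pr(X = n - k) for every k.
symmetric = all(
    isclose(binomial_pmf(k, n, 0.5), binomial_pmf(n - k, n, 0.5))
    for k in range(n + 1)
)
print("symmetric at p=0.5:", symmetric)
```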

X. Properties of the Binomial Distribution

Mean = $\mu$ = E(X) = np

Intuition: The expected number of successes in n trials is the probability of success in one trial (p) multiplied by n

Variance = $\sigma^2$ = np(1-p) = npq, where q = 1-p is the probability of failure.

Note: For a fixed value of n, $\sigma^2$ is maximized when p=0.5

Standard Deviation: $\sigma = \sqrt{np(1-p)} = \sqrt{npq}$

XI. Variance of the Binomial Distribution

$$\sigma^2 = np(1-p) = n\left[\frac{1}{4} - \left(p - \frac{1}{2}\right)^2\right]$$

which is a concave function in p and attains its maximum at p=0.5.

Interpretation:

1) When p=0 or 1: $\sigma^2 = 0$, as there is no uncertainty about the outcome (Why?)

2) When p=1/2: the pmf spreads out widely across x=0 to x=n. Hence variability is the largest.
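A small numerical check of this interpretation: the sketch below evaluates np(1-p) on a grid of p values and confirms that the maximum is at p=0.5 and that the variance vanishes at p=0 and p=1. The grid resolution and the names (`binomial_variance`, `grid`, `best_p`) are arbitrary choices made for illustration.

```python
def binomial_variance(n, p):
    """Variance of Binomial(n, p): np(1 - p)."""
    return n * p * (1 - p)

n = 10
grid = [i / 100 for i in range(101)]  # p = 0.00, 0.01, ..., 1.00

# Value of p on the grid that gives the largest variance.
best_p = max(grid, key=lambda p: binomial_variance(n, p))
print("variance is largest at p =", best_p)
print("maximum variance =", binomial_variance(n, best_p))  # n/4

# No uncertainty at the endpoints: every trial fails (p=0) or succeeds (p=1).
print(binomial_variance(n, 0.0), binomial_variance(n, 1.0))
```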

XII. Why Probability Distribution?

Covered in Chapter 1:

Suppose that there are 1,000 students in a class. Each of them flips the same coin 10 times and records the number of heads observed. Below is the frequency table of the number of heads observed by each student:

Question: Is the coin a fair one? (That is, is p=Pr(Head)=0.5?)

Covered in Chapter 3:

Let X be the number of heads observed by flipping a coin 10 times. Then X ~ Binomial(n=10, p)

Below is the probability mass function for p = 0.1, 0.3, 0.5, 0.7 and 0.9.

We can then compare the histogram of the observed data with the pmf to guess the true value of p.
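The comparison of data against candidate pmfs can be sketched in code. Since the class frequency table is not reproduced here, the example below simulates hypothetical data with an assumed true probability `TRUE_P = 0.7`; that value, the random seed, and the use of the log-likelihood as the measure of fit are all assumptions made for illustration, not the method of the notes.

```python
import random
from math import comb, log

rng = random.Random(0)   # fixed seed so the run is reproducible
TRUE_P = 0.7             # hypothetical true Pr(Head), unknown in practice
N_STUDENTS, N_FLIPS = 1000, 10

# Simulated data: number of heads recorded by each student.
heads_per_student = [
    sum(rng.random() < TRUE_P for _ in range(N_FLIPS))
    for _ in range(N_STUDENTS)
]

def log_likelihood(p, data, n):
    """Log-probability of the observed counts under Binomial(n, p)."""
    return sum(
        log(comb(n, x)) + x * log(p) + (n - x) * log(1 - p) for x in data
    )

# Compare the data against the pmf for each candidate value of p.
candidates = [0.1, 0.3, 0.5, 0.7, 0.9]
best = max(candidates, key=lambda p: log_likelihood(p, heads_per_student, N_FLIPS))
print("candidate p that best matches the data:", best)
```

Choosing the candidate with the highest log-likelihood is a formal version of picking the pmf whose shape looks most like the histogram.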

Covered in Later Chapter:

Chapter 6 (Estimation): Based on the data, try to

(1) Find the best estimate of the unknown probability p,

(2) Find the range of values of p that is likely to have generated the data.

Example: There is a 90% chance that p is between 0.6 and 0.9.

Chapter 7-8 (Hypothesis Testing)

Question: Is the coin fair?

Conclusion: We have 95% confidence that the probability of heads p is larger than 0.50.

Section 3.4 Poisson Distribution

I. Poisson Distribution

The second most frequently used discrete distribution

Poisson distribution is usually associated with rare events.

Example:

Of every 10 m² of paper being produced, we expect to observe an average of one surface defect.

Let X be the number of surface defects per 100 m² of production. What is the distribution of X?

Answer: Poisson Distribution

II. Probability Mass Function of Poisson Distribution

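Assume that events occur independently of each other.

The probability of k events occurring for a Poisson random variable with parameter $\lambda$ is

$$\Pr(X = k) = \frac{e^{-\lambda}\lambda^k}{k!}, \quad k = 0, 1, 2, \dots$$

where $e \approx 2.71828$ is Euler's number, and $\lambda$ is the expected number of events to occur.

Notation: $X \sim \text{Poisson}(\lambda)$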

Example 12:

Assume the # of bacterial colonies is 0.02 per cm². For an area of 100 cm², find the probability distribution of the number of bacterial colonies.

Solutions:

The expected number of bacterial colonies per 100 cm² is given by $\lambda$ = 0.02(100) = 2 (colonies)

Let X be the number of colonies in 100 cm², then X ~ Poisson($\lambda$ = 2)

Probability Mass Function:

$$\Pr(X = k) = \frac{e^{-2}\,2^k}{k!}, \quad k = 0, 1, 2, \dots$$

Example 12 (Continued):

Now, suppose we are interested in a larger area of 200 cm². What's the probability distribution of the number of bacterial colonies?

Solutions: $\lambda$ = 0.02(200) = 4 (colonies). Let Y be the number of colonies in 200 cm², then Y ~ Poisson($\lambda$ = 4)

Probability Mass Function:

$$\Pr(Y = k) = \frac{e^{-4}\,4^k}{k!}, \quad k = 0, 1, 2, \dots$$
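The two Poisson distributions of Example 12 can be tabulated side by side. This is a minimal Python sketch; the helper name `poisson_pmf` is an assumption made for this illustration.

```python
from math import exp, factorial

def poisson_pmf(k, lam):
    """Pr(X = k) for X ~ Poisson(lam)."""
    return exp(-lam) * lam**k / factorial(k)

# X ~ Poisson(2): colonies in 100 cm^2;  Y ~ Poisson(4): colonies in 200 cm^2.
for k in range(9):
    print(f"k={k}  Pr(X=k)={poisson_pmf(k, 2):.4f}  Pr(Y=k)={poisson_pmf(k, 4):.4f}")
```

Doubling the area doubles the parameter and shifts the probability mass towards larger counts, while the chance of seeing no colonies at all drops from about 0.135 to about 0.018.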

III. Binomial Distribution vs Poisson Distribution

Binomial distribution: Number of trials n is finite, and the number of events cannot be larger than n.

Poisson distribution: Number of trials is essentially infinite and the number of events can be indefinitely large.

However, probability of k events becomes very small as k increases.

Examples of Binomial Distribution:

Flip a coin n times

Number of neutrophils out of n White Blood Cells

Examples of Poisson Distribution:

Electrical system: telephone calls arriving in a system.

Astronomy: photons arriving at a telescope.

Biology: the number of mutations on a strand of DNA per unit time.

Management: customers arriving at a counter or call centre.

Civil Engineering: cars arriving at a traffic light.

Finance and Insurance: Number of Losses/Claims occurring in a given period of time.

IV. Shape of the Poisson Distribution

The Poisson Distribution is a right-skewed distribution

When $\lambda$ is small (e.g. $\lambda$ = 0.8), the distribution is heavily skewed to the right.

As $\lambda$ increases (e.g. $\lambda$ = 12), the distribution becomes more symmetric, even though it is still slightly right-skewed
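The change in shape can be quantified with a skewness measure. The sketch below uses the third standardized moment, computed from a truncated pmf; both the choice of measure and the truncation point `k_max=100` are assumptions made for this illustration. For the Poisson distribution this quantity is known to equal $1/\sqrt{\lambda}$, which the numbers reproduce.

```python
from math import exp, factorial, sqrt

def poisson_pmf(k, lam):
    """Pr(X = k) for X ~ Poisson(lam)."""
    return exp(-lam) * lam**k / factorial(k)

def poisson_skewness(lam, k_max=100):
    """Third standardized moment, summing the pmf over k = 0, ..., k_max."""
    probs = [poisson_pmf(k, lam) for k in range(k_max + 1)]
    mu = sum(k * q for k, q in enumerate(probs))
    var = sum((k - mu) ** 2 * q for k, q in enumerate(probs))
    third = sum((k - mu) ** 3 * q for k, q in enumerate(probs))
    return third / var**1.5

for lam in (0.8, 12.0):
    print(f"lambda={lam}: skewness={poisson_skewness(lam):.4f}, "
          f"1/sqrt(lambda)={1 / sqrt(lam):.4f}")
```

The skewness is positive for every $\lambda$ but shrinks as $\lambda$ grows, matching the description of a distribution that becomes more symmetric yet stays slightly right-skewed.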

V. Expected Value and Variance of Poisson Distribution

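Suppose that X ~ Poisson($\lambda$), that is,

$$\Pr(X = k) = \frac{e^{-\lambda}\lambda^k}{k!}, \quad k = 0, 1, 2, \dots$$

Then the expected value and the variance of X are given by

$$E(X) = \lambda, \quad Var(X) = \lambda$$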
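That the mean and the variance of a Poisson random variable both equal its parameter can be verified numerically from the pmf. The sketch below truncates the infinite sum at `K_MAX = 100`, an arbitrary cut-off chosen for this illustration; the terms beyond it are negligible for the parameter values used.

```python
from math import exp, factorial

def poisson_pmf(k, lam):
    """Pr(X = k) for X ~ Poisson(lam)."""
    return exp(-lam) * lam**k / factorial(k)

K_MAX = 100  # truncation point for the infinite sums (an assumption)

def poisson_mean_and_variance(lam):
    """Mean and variance computed from the pmf over k = 0, ..., K_MAX."""
    probs = [poisson_pmf(k, lam) for k in range(K_MAX + 1)]
    mean = sum(k * q for k, q in enumerate(probs))
    second_moment = sum(k**2 * q for k, q in enumerate(probs))
    return mean, second_moment - mean**2

for lam in (0.8, 2.0, 4.0, 12.0):
    mean, var = poisson_mean_and_variance(lam)
    print(f"lambda={lam}: mean={mean:.6f}, variance={var:.6f}")
```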

Section 3.5 Poisson Approximation to the Binomial Distribution

I. Introduction

Binomial distribution: When n is large and p is small, we can think of observing rare events in a large number of trials. In that case, the binomial distribution can be well approximated by the Poisson distribution with $\lambda = np$.

Rule of Thumb:

Let X ~ Binomial(n, p). When $n \ge 20$, p < 0.1 and np < 5, then

$X \approx Y \sim \text{Poisson}(\lambda)$, where $\lambda = np$.

Example 12 (Continued):

Let Y be the number of bacterial colonies per 100 cm², with Y ~ Poisson($\lambda$ = 2.0).

1) You can think of it as a binomial distribution:

Suppose that for each small area (of 0.01 cm²),

Pr(observing a bacterial colony) = p = 2/10,000 = 0.0002

2) If we observe n = 10,000 of those small areas (i.e. 100 cm² in total), let X = total number of bacterial colonies. Then

X ~ Binomial(n=10,000, p=0.0002)

3) Combining 1) and 2), X ~ Binomial(n=10,000, p=0.0002) is well approximated by the Poisson distribution, with

Y ~ Poisson($\lambda$ = 2.0)
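The quality of this approximation can be seen by computing both pmfs side by side. The following is a minimal Python sketch, with helper names (`binomial_pmf`, `poisson_pmf`, `max_diff`) chosen for illustration rather than taken from the notes.

```python
from math import comb, exp, factorial

def binomial_pmf(k, n, p):
    """Pr(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    """Pr(Y = k) for Y ~ Poisson(lam)."""
    return exp(-lam) * lam**k / factorial(k)

# 10,000 small areas, each with probability 0.0002 of holding a colony.
n, p = 10_000, 0.0002
lam = n * p  # expected number of colonies (about 2)

for k in range(8):
    print(f"k={k}  binomial={binomial_pmf(k, n, p):.6f}  poisson={poisson_pmf(k, lam):.6f}")

# Largest gap between the two pmfs over k = 0, ..., 10.
max_diff = max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam)) for k in range(11))
print("largest difference:", max_diff)
```

With n this large and p this small, the two columns agree to several decimal places, which is what the rule of thumb anticipates.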
