
Design and Implementation of ADPCM Based Audio Compression Using Verilog


The International Journal of Engineering & Science is aimed at providing a platform for researchers, engineers, scientists, or educators to publish their original research results, to exchange new ideas, to disseminate information in innovative designs, engineering experiences and technological skills. It is also the Journal's objective to promote engineering and technology education. All papers submitted to the Journal will be blind peer-reviewed. Only original articles will be published.

The papers for publication in The International Journal of Engineering & Science are selected through rigorous peer reviews to ensure originality, timeliness, relevance, and readability.

Theoretical work submitted to the Journal should be original in its motivation or modeling structure. Empirical analysis should be based on a theoretical framework and should be capable of replication. It is expected that all materials required for replication (including computer programs and data sets) should be available upon request to the authors.


The International Journal Of Engineering And Science (IJES)

|| Volume || 3 || Issue || 5 || Pages || 50-55 || 2014 ||

ISSN (e): 2319-1813 ISSN (p): 2319-1805

www.theijes.com The IJES Page 50

Design and Implementation of ADPCM Based Audio Compression Using Verilog

Hemanthkumar M P, Swetha H N

Department of ECE, B.G.S. Institute of Technology, B.G.Nagar, Mandya-571448

Assistant Professor, Department of ECE, B.G.S. Institute of Technology, B.G.Nagar, Mandya-571448

---------------------------------------------------------ABSTRACT-----------------------------------------------------------

For Internet-based voice transmission, digital telephony, intercoms, telephone answering machines, and mass storage, audio signals need to be compressed. ADPCM is one of the techniques used to reduce bandwidth in voice communication. Compressed but lossy formats are the ones most often used to store and transfer audio: their small file sizes allow faster Internet transmission and consume less space on memory media. However, lossy formats trade smaller file size against loss of audio quality, as all such compression algorithms sacrifice some signal detail. This paper discusses the implementation of the ADPCM algorithm for audio compression of a .wav file. It yields a compressed file about one quarter the size of the original file. The sound quality of the audio file is reasonably maintained after compression.

KEYWORDS: ADPCM, audio compression.

--------------------------------------------------------------------------------------------------------------------------------------

Date of Submission: 25 April 2014 Date of Publication: 20 May 2014

--------------------------------------------------------------------------------------------------------------------------------------

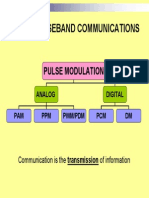

I. INTRODUCTION

Compression techniques for still pictures include horizontal repeated-pixel compression (PCX format), data conversion (GIF format), and fractal-path repeated pixels. For motion video, compression is relatively easy because large portions of the screen do not change from frame to frame; therefore, only the changes between images need to be stored. Text compression is extremely simple compared with video and audio.

One method counts the probability of each character and then assigns shorter bit codes to the most common characters and longer bit codes to the least common ones. Digital audio samples, however, have proven very difficult to compress, because these techniques do not work well for audio data: the data changes often, and no values are common enough to save sufficient space.

The sampling frequencies in use today range from 8 kHz for basic speech to 48 kHz for commercial DAT machines. The number of quantization levels is typically a power of 2, to make full use of a fixed number of bits per audio sample; the typical range is 8 to 16 bits per sample. The data rates associated with uncompressed digital audio are substantial. Audio on a CD, for example, is sampled at 44.1 kHz with 16 bits per channel for two channels, so about 1.4 megabits per second are processed (44,100 × 16 × 2 = 1,411,200 bits per second). A clear need exists for some form of compression to enable more efficient storage and transmission of digital audio data.

Although lossless audio compression is not likely to become a dominating technology, it may become a useful complement to lossy compression algorithms in some applications. This is because lossless compression algorithms rarely obtain a compression ratio larger than 3:1, while lossy compression algorithms allow compression ratios of up to 12:1 and higher [1]. For lossy algorithms, final audio quality decreases as the compression ratio increases. For digital music distribution over the Internet, some consumers will want to acquire the best possible quality of an audio recording for their high-fidelity stereo system, and lossy audio compression technologies may not be acceptable for this application.

The VOC file compression technique simply removes any silence from the entire sample. This method analyzes the whole sample and then codes the silence into the sample using byte codes. Logarithmic compression such as μ-law and A-law compression only loses information which the ear would not hear anyway, and gives good-quality results for both speech and music.

Although its compression ratio is not very high, logarithmic compression requires very little processing power. The method is fast and compresses data to half the size of the original sample. A Linear Predictive Coding (LPC) encoder compares speech to an analytical model of the vocal tract, then discards the speech and stores the parameters of the best-fit model. MPEG compression is lossy, but it can nonetheless achieve transparent, perceptually lossless compression.

Differential encoding schemes take advantage of sources that show a high degree of correlation from sample to sample. These schemes predict each sample based on past source outputs and encode and transmit only the difference between the prediction and the sample value. When a source output does not change greatly from sample to sample, the dynamic range of the differences is smaller than that of the source output itself. This allows a smaller quantization step size for a given number of quantization levels, and hence lower quantization noise.
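The dynamic-range argument can be illustrated with a short software sketch. It is not part of the Verilog design; the test tone (a 200 Hz sine sampled at 8 kHz with a peak of 20000) and the helper names `differences` and `bits_needed` are assumptions chosen only for illustration, and the predictor is the simplest possible one (the previous sample).

```python
import math


def differences(samples):
    """First-order differences, using the previous sample as the prediction."""
    prev = 0  # assumed initial prediction
    out = []
    for s in samples:
        out.append(s - prev)
        prev = s
    return out


def bits_needed(values):
    """Bits required for a signed integer range that covers all values."""
    peak = max(abs(v) for v in values)
    return max(1, peak.bit_length() + 1)  # +1 for the sign bit


# Hypothetical, slowly varying test signal: 200 Hz sine at 8 kHz sampling.
samples = [round(20000 * math.sin(2 * math.pi * 200 * n / 8000)) for n in range(80)]
diffs = differences(samples)

print("bits for samples:    ", bits_needed(samples))
print("bits for differences:", bits_needed(diffs))
```

For a signal that is strongly correlated from sample to sample, the differences need fewer bits than the samples themselves, and the original samples can still be rebuilt exactly by accumulating the differences.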

II. ADPCM ALGORITHM

The DVI ADPCM audio compression algorithm was first described in an IMA recommendation on audio formats and conversion practices. ADPCM is a transformation that encodes 16-bit audio samples as 4-bit codes (a 4:1 compression ratio). To achieve this level of compression, the algorithm maintains an adaptive value predictor, which uses the previous samples to estimate the most likely value of the next sample. The difference between the actual sample and this prediction is then quantized to a 4-bit code using an adaptive step size. The algorithm in [11] suggests using a table to adapt this step size to the analyzed data. ADPCM has become widely used and adapted, and a variant of the algorithm performs voice encoding on cellular phones.
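The 4:1 ratio follows from storing two 4-bit codes in each byte where the original used two bytes per 16-bit sample. A minimal sketch of that packing is given here; the nibble order (first code in the high nibble) and the function names are assumptions, since the text does not specify a storage layout.

```python
def pack_nibbles(codes):
    """Pack 4-bit codes two per byte; first code goes in the high nibble (assumed order)."""
    codes = list(codes)
    if len(codes) % 2:
        codes.append(0)  # pad an odd-length stream with a zero code
    return bytes(((hi & 0xF) << 4) | (lo & 0xF)
                 for hi, lo in zip(codes[0::2], codes[1::2]))


def unpack_nibbles(data):
    """Inverse of pack_nibbles: split every byte into its two 4-bit codes."""
    out = []
    for b in data:
        out.append(b >> 4)
        out.append(b & 0xF)
    return out


codes = list(range(16)) * 3          # 48 hypothetical 4-bit ADPCM codes
packed = pack_nibbles(codes)
pcm_bytes = len(codes) * 2           # the same 48 samples as 16-bit PCM

print(len(packed), "bytes packed,", pcm_bytes, "bytes as 16-bit PCM")
print("ratio:", pcm_bytes / len(packed))
```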

Fig. 1: (a) ADPCM encoding process Algorithm

Fig 2. ADPCM Encoder Block Diagram


Fig. 3: ADPCM encoding process Flowchart

Fig 4. ADPCM Decoder Block Diagram


Figure 1(a) shows a block diagram of the ADPCM encoding process. A linear input sample X(n) is compared to the previous estimate of that input, X(n-1). The difference, d(n), along with the present step size, ss(n), is presented to the encoder logic. This logic, described below, produces an ADPCM output sample. This output sample is also used to update the step size for the next sample, ss(n+1), and is presented to the decoder to compute the linear estimate of the input sample. The encoder accepts the differential value, d(n), from the comparator together with the step size, and calculates a 4-bit ADPCM code. The following is a representation of this calculation in pseudo code:

Let B3 = B2 = B1 = B0 = 0

If (d(n) < 0) then B3 = 1 and d(n) = ABS(d(n))

If (d(n) >= ss(n)) then B2 = 1 and d(n) = d(n) - ss(n)

If (d(n) >= ss(n) / 2) then B1 = 1 and d(n) = d(n) - ss(n) / 2

If (d(n) >= ss(n) / 4) then B0 = 1

L(n) = (1000₂ * B3) + (100₂ * B2) + (10₂ * B1) + B0
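The pseudo code above can be modeled in software as follows. This is a sketch for checking the quantizer logic, not the Verilog implementation; the function name `encode_difference` is hypothetical, and integer (floor) division is assumed for ss(n)/2 and ss(n)/4, as a hardware shift would give.

```python
def encode_difference(d, ss):
    """Quantize the difference d against step size ss into a 4-bit code L(n)."""
    b3 = b2 = b1 = b0 = 0
    if d < 0:            # sign bit, then work on the magnitude
        b3 = 1
        d = -d
    if d >= ss:          # is the magnitude at least one full step?
        b2 = 1
        d -= ss
    if d >= ss // 2:     # at least half a step of what remains? (integer division assumed)
        b1 = 1
        d -= ss // 2
    if d >= ss // 4:     # at least a quarter step of what remains?
        b0 = 1
    # Assemble the bits: B3 is the MSB (sign), B0 the LSB.
    return (b3 << 3) | (b2 << 2) | (b1 << 1) | b0


# A few hand-checkable cases at the minimum step size of 16.
for d in (30, -10, 4, 0):
    print(d, format(encode_difference(d, 16), "04b"))
```

With ss(n) = 16, a difference of 30 sets B2, B1 and B0, while a difference of -10 sets only the sign bit B3 and B1.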

For both the encoding and decoding processes, the ADPCM algorithm adjusts the quantizer step size based on the most recent ADPCM value. The step size for the next sample, n+1, is calculated with the equation

ss(n+1) = ss(n) * 1.1^(M(L(n)))

This equation can be implemented efficiently using two lookup tables [11]. The first table uses the magnitude of the ADPCM code as an index to look up an adjustment factor. The adjustment factor is used to move an index pointer in the second table; the index pointer then points to the new step size. This method of adapting the scale factor to changes in the waveform is optimized for voice signals. When the ADPCM algorithm is reset, the step size ss(n) is set to the minimum value (16) and the estimated waveform value X is set to zero (half scale). Playback of 48 samples (24 bytes) of plus and minus zero (1000₂ and 0000₂) will reset the algorithm. It is necessary to alternate positive and negative zero values because the encoding formula always adds 1/8 of the quantization step size. If all values were positive or all negative, a DC component would be added that would create a false reference level.
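A minimal software sketch of the two-table step-size adaptation and of the reset sequence is given below. Several details are assumptions rather than values taken from the paper: the step table is generated from the 1.1 growth factor instead of being copied from [11], its length of 49 entries is assumed, the adjustment values in `INDEX_ADJUST` are the commonly cited ones, and the reconstruction formula (sum of the selected step fractions plus 1/8 of the step) is inferred from the remark about the 1/8 offset. Clamping of the estimate to the output word length is omitted.

```python
N_STEPS = 49  # assumed table length
# Second table: step sizes growing by about 1.1x per entry, starting at the minimum of 16.
STEP_TABLE = [int(16 * 1.1 ** i) for i in range(N_STEPS)]
# First table: index adjustment looked up by the 3-bit magnitude of the code (assumed values).
INDEX_ADJUST = [-1, -1, -1, -1, 2, 4, 6, 8]


class AdpcmState:
    """Index pointer into STEP_TABLE plus the current waveform estimate."""

    def __init__(self):
        self.reset()

    def reset(self):
        self.index = 0      # minimum step size
        self.estimate = 0   # zero, i.e. half scale

    @property
    def step(self):
        return STEP_TABLE[self.index]


def decode(code, state):
    """Update the estimate from one 4-bit code, then adapt the step size."""
    ss = state.step
    diff = ss // 8                  # the offset that is always added
    if code & 0b0100:
        diff += ss
    if code & 0b0010:
        diff += ss // 2
    if code & 0b0001:
        diff += ss // 4
    if code & 0b1000:               # sign bit
        diff = -diff
    state.estimate += diff
    # Move the index pointer by the adjustment factor and keep it inside the table.
    new_index = state.index + INDEX_ADJUST[code & 0b0111]
    state.index = min(max(new_index, 0), N_STEPS - 1)
    return state.estimate


# Reset sequence: 48 alternating "minus zero" and "plus zero" codes,
# starting from the largest step size.
state = AdpcmState()
state.index = N_STEPS - 1
for code in [0b1000, 0b0000] * 24:
    decode(code, state)
print("index:", state.index, "step:", state.step)
```

Under the assumed table length, every zero-magnitude code moves the index pointer down by one, so 48 such codes are enough to bring the pointer from the largest step back to the minimum, while the alternating signs keep the added 1/8-step offsets from accumulating in one direction.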

III. RESULTS

Figure 6(a) Reset condition

Figure 6(b) Encoder output for arbitrary input

Figure 6(c) Encoder output for arbitrary input

Figure 6(d) Decoder reset condition

Figure 6(e) Decoder output for arbitrary input

IV. CONCLUSION

Implementation of the ADPCM algorithm for audio compression resulted in a compressed file about one quarter the size of the original file. The sound quality of the audio file is reasonably maintained after compression. The compression leads to a better storage scheme, which can be further utilized in many communication applications. In future, the system can be used for real-time streaming of media, such as audio or video files sent via the Internet. Audio and video data can be transmitted from the server more rapidly with this system, as interruptions in playback and temporary modem delays are avoided. The main application intended for such a system is real-time streaming; however, it could be used in various other applications such as voice storage and wireless communication equipment.


REFERENCES

[1] Mat Hans and Ronald W. Schafer, "Lossless compression of digital audio," IEEE Signal Processing Magazine, 1053-5888/01, July 2001, pp. 21-32.

[2] Peilin Liu, Lingzhi Liu, Ning Deng, Xuan Fu, Jiayan Liu, and Qianru Liu, "VLSI Implementation for Portable Application Oriented MPEG-4 Audio Codec," IEEE International Symposium on Circuits and Systems (ISCAS 2007), 2007, pp. 777-780.

[3] B. Bochow and B. Czyrnik, "Multiprocessor implementation of an ATC audio codec," International Conference on Acoustics, Speech, and Signal Processing (ICASSP-89), 1989, vol. 3, pp. 1981-1984.

[4] Jing Chen and Heng-Ming Tai, "Real-time implementation of the MPEG-2 audio codec on a DSP," IEEE Transactions on Consumer Electronics, vol. 44, no. 3, pp. 866-871.

[5] V. Gurkhe, "Optimization of an MP3 decoder on the ARM processor," Conference on Convergent Technologies for Asia-Pacific Region (TENCON 2003), vol. 4, pp. 1475-1478.

[6] Kyungjin Byun, Young-Su Kwon, Bon-Tae Koo, Nak-Woong Eum, Koang-Hui Jeong, and Jae-Eul Koo, "Implementation of digital audio effect SoC," IEEE International Conference on Multimedia and Expo (ICME 2009), 2009, pp. 1194-1197.

[7] Ouyang Kun, Ouyang Qing, Li Zhitang, and Zhou Zhengda, "Optimization and Implementation of Speech Codec Based on Symmetric Multi-processor Platform," International Conference on Multimedia Information Networking and Security (MINES '09), 2009, pp. 234-237.

[8] K. Brandenburg and G. Stoll, "The ISO/MPEG-Audio Codec: A Generic Standard for Coding of High Quality Digital Audio," Preprint 3336, 92nd Audio Engineering Society Convention, Vienna, 1992.

[9] L. Rabiner and R. Schafer, Digital Processing of Speech Signals (Englewood Cliffs, NJ: Prentice-Hall, 1978).

[10] M. Nishiguchi, K. Akagiri, and T. Suzuki, "A New Audio Bit Rate Reduction System for the CD-I Format," Preprint 2375, 81st Audio Engineering Society Convention, Los Angeles, 1986.

[11] IMA Digital Audio Focus and Technical Working Groups, "Recommended Practices for Enhancing Digital Audio Compatibility in Multimedia Systems: Revision 3.00," IMA Compatibility Proceedings, Vol. 2, October 1992.

Biographies and Photographs

Mr. Hemanthkumar M P completed his B.E. in Electronics and Communication at MVJCE, Bangalore, and is pursuing his M.Tech in VLSI and Embedded Systems at B.G.S. Institute of Technology, B.G.Nagar. His areas of interest are embedded system design using microcontroller cores, CPU architecture design, and speech processing.
