SOC6078 Advanced Statistics:
4. Models for Categorical Dependent Variables II

Robert Andersen
Department of Sociology
University of Toronto

Thursday, 24 May 2012

Extending the Logit and Probit Models
We can extend the logit model to dependent variables with more than two categories.
Specific models correspond to specific types of relations between the categories of the variable.
Some examples:
proportional-odds models fit to ordered data
binomial logit models fit to grouped data
multinomial logit and multinomial probit models fit to a variable with several categories that have no order among them
All of these models are part of the more general family of binomial models that fit under the larger umbrella of generalized linear models.

Multinomial Logit Models
The multinomial logit model is a direct extension of the binary logit model to a dependent variable with several unordered categories (i.e., the model does not assume any order).
Note: Although the model assumes no order in the categories, it is still sometimes sensible to fit a multinomial logit model to an ordered variable, especially if the data fail to meet the assumptions for ordered models, in particular the proportional odds assumption.
A multinomial logit model simultaneously fits m-1 logit models, contrasting each of the first m-1 categories of the dependent variable with a reference category, m.
When fit to a dichotomous variable, the multinomial logit model is identical to the binary logit model.
Following directly from the binary logit case, the model is fit using maximum likelihood.

Multinomial Logit Models
The model is given by m-1 equations, so there is one set of parameters, $\beta_{0j}, \beta_{1j}, \dots, \beta_{kj}$, for each category of the dependent variable except the reference category.
Although technically we could simply fit a series of separate binary logit models to find the coefficients, these models would not give us a single overall measure of the deviance.
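For reference, here is a sketch of those m-1 equations in standard notation. The symbols are assumptions: $\pi_{ij}$ is the probability that case i falls in category j, $X_{i1}, \dots, X_{ik}$ are its predictors, and m is the reference category. The second line shows how the category probabilities follow by exponentiating and normalizing.

```latex
\log\frac{\pi_{ij}}{\pi_{im}} = \beta_{0j} + \beta_{1j}X_{i1} + \cdots + \beta_{kj}X_{ik},
\qquad j = 1, \dots, m-1

\pi_{ij} = \frac{\exp(\beta_{0j} + \beta_{1j}X_{i1} + \cdots + \beta_{kj}X_{ik})}
                {1 + \sum_{l=1}^{m-1} \exp(\beta_{0l} + \beta_{1l}X_{i1} + \cdots + \beta_{kl}X_{ik})},
\qquad
\pi_{im} = \frac{1}{1 + \sum_{l=1}^{m-1} \exp(\beta_{0l} + \beta_{1l}X_{i1} + \cdots + \beta_{kl}X_{ik})}
```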

The regression coefficients from multinomial logit models reflect the log odds of membership in category j versus the reference category, m.
Although the coefficients refer to a baseline, it is possible to calculate the log odds of being in any pair of categories j and j′, where $\pi_{ij}$ is the probability that case i is in category j:
$$\log\frac{\pi_{ij}}{\pi_{ij'}} = (\beta_{0j} - \beta_{0j'}) + (\beta_{1j} - \beta_{1j'})X_{i1} + \cdots + (\beta_{kj} - \beta_{kj'})X_{ik}$$
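The pairwise calculation can be sketched in a few lines. This is a Python illustration only (the slides use R), and the coefficients are hypothetical, not the estimates from the Women's Labour-Force example. Each non-reference category gets a linear predictor, the reference category's is fixed at 0, and the log odds for any pair j, j′ is simply the difference of their linear predictors.

```python
import math

# Hypothetical coefficients [b0, b1] for one predictor x (e.g., husband's income);
# illustrative values only, NOT the estimates from the slides.
EXAMPLE_COEFS = {"part-time": [-1.0, 0.02], "full-time": [0.5, -0.10]}
REFERENCE = "not working"

def linear_predictors(coefs, x, reference):
    """Linear predictor for each category; the reference category is fixed at 0."""
    eta = {cat: b[0] + sum(bi * xi for bi, xi in zip(b[1:], x))
           for cat, b in coefs.items()}
    eta[reference] = 0.0
    return eta

def mnl_probabilities(coefs, x, reference):
    """Category probabilities: exp(eta_j) divided by the sum of exp(eta) over all categories."""
    eta = linear_predictors(coefs, x, reference)
    denom = sum(math.exp(e) for e in eta.values())
    return {cat: math.exp(e) / denom for cat, e in eta.items()}

def pairwise_log_odds(coefs, x, reference, j, j_prime):
    """log(pi_j / pi_j') is the difference between the two linear predictors."""
    eta = linear_predictors(coefs, x, reference)
    return eta[j] - eta[j_prime]
```

For x = 20, the full-time versus part-time log odds is (0.5 - 1.0 × 20 × 0.10 + 1.0) - ... more simply -1.5 - (-0.6) = -0.9, and it matches the log of the ratio of the two fitted probabilities.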

Multinomial Logit Models in R
The glm function in R does not handle multinomial logit models. Instead, the multinom function in the nnet library must be used.
The following example is from the Women's Labour-Force data in Fox (1997).
The goal is to predict propensity to work outside the home (full-time or part-time) versus not working, according to husband's income and whether children are at home.

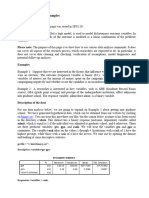

Multinomial Logit Models in R (2)
The odds of working full-time versus not at all for women with children at home are .077 times the odds for those without children.

Multinomial Logit Models in R (3)
Effect Displays
[Figure: fitted probabilities of not working, part-time and full-time work plotted against husband's income (0 to 40), in two panels: Children Absent and Children Present; y-axis: Fitted Probability (0.0 to 1.0)]
R-script for Multinomial Logit Effect Display

Multinomial Logit Models
Choice of Reference Category
In terms of model fit, the choice of the reference category is arbitrary: although the coefficients will differ, the model fit is the same.
As shown earlier, it is also possible to determine odds ratios for the contrasts between any two categories of the dependent variable (though this requires some calculations or refitting the model with a different reference category).
Still, there are two things to consider:
1. To which category do you want to compare? It is sensible to make this category the reference category.
2. There is less chance of a convergence problem if the largest category is the reference.
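That the choice of reference category does not affect model fit can be checked with a short Python sketch (hypothetical coefficients, one predictor). Re-expressing the coefficients relative to a new reference subtracts that category's coefficients from every other category's; the fitted probabilities, and hence the likelihood and deviance, are unchanged.

```python
import math

def category_probabilities(coefs, reference, x):
    """Baseline-category logit probabilities; coefs maps category -> [b0, b1, ...]."""
    eta = {cat: b[0] + sum(bi * xi for bi, xi in zip(b[1:], x))
           for cat, b in coefs.items()}
    eta[reference] = 0.0
    denom = sum(math.exp(e) for e in eta.values())
    return {cat: math.exp(e) / denom for cat, e in eta.items()}

def change_reference(coefs, old_reference, new_reference):
    """Re-express coefficients relative to new_reference.

    The old reference implicitly has all-zero coefficients; each new coefficient
    is the old one minus the new reference category's old coefficient.
    """
    n = len(next(iter(coefs.values())))
    full = dict(coefs)
    full[old_reference] = [0.0] * n
    base = full[new_reference]
    return {cat: [b - b_ref for b, b_ref in zip(bs, base)]
            for cat, bs in full.items() if cat != new_reference}

# Hypothetical coefficients [b0, b1] with "not working" as the reference category
OLD = {"part-time": [-1.0, 0.02], "full-time": [0.5, -0.10]}
NEW = change_reference(OLD, "not working", "full-time")
```

Here NEW contains coefficients for "not working" ([-0.5, 0.10]) and "part-time" ([-1.5, 0.12]) relative to full-time, and both sets give the same probabilities at every x.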

Independence of Irrelevant Alternatives (IIA) Assumption (1)
Relevant with respect to choice models.
Assumes that the odds of choosing one option (say A) over another (B) are the same regardless of the existence of other options.
In short, the errors associated with predicting each category are uncorrelated.
Ex 1: The odds of voting Conservative versus Liberal should be the same regardless of other parties, i.e., the odds should not change if another party enters the race.
If there is strategic voting, the IIA assumption is not met.
Ex 2: If given the choice between Pepsi and Milk but nothing else, the choices are different enough that they are likely independent.
If the choices are Coke, Pepsi and Milk, the IIA assumption will be violated: Coke and Pepsi are similar enough that the odds of choosing Coke over Milk will change drastically if Pepsi is also given as a choice.
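The multinomial logit model builds IIA in: the odds of A over B depend only on A's and B's own linear predictors. A small Python sketch with hypothetical utilities (Pepsi given the same utility as Coke) shows both the property and why it is implausible for near substitutes.

```python
import math

def choice_probabilities(utilities):
    """Multinomial logit choice probabilities from (hypothetical) utilities."""
    denom = sum(math.exp(v) for v in utilities.values())
    return {option: math.exp(v) / denom for option, v in utilities.items()}

# Hypothetical utilities; Pepsi is treated as an exact substitute for Coke
two_options = choice_probabilities({"Coke": 1.0, "Milk": 0.0})
three_options = choice_probabilities({"Coke": 1.0, "Pepsi": 1.0, "Milk": 0.0})

odds_before = two_options["Coke"] / two_options["Milk"]
odds_after = three_options["Coke"] / three_options["Milk"]
```

The Coke/Milk odds stay at e ≈ 2.72 in both cases, as IIA requires, but Milk's share falls from about .27 to about .16: the model assumes Pepsi draws from Coke and Milk proportionally, whereas realistically it should draw mostly from Coke.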

Independence of Irrelevant Alternatives (IIA) Assumption (2)
The multinomial probit model relaxes the IIA assumption by allowing the response errors to be correlated.
As said earlier, however, the probit model is more complicated and thus can have problems converging.
As a result, most statistical software programs do not yet have built-in functions for multinomial probit models.
It can, however, now be done in R using the MNP package. See:
Imai, Kosuke and David van Dyk (2005). MNP: R Package for Fitting the Multinomial Probit Model. Journal of Statistical Software, 14(3): 1-32.
In any event, the IIA assumption is really not of concern as long as all possible options have been included in the model.
In such cases, the multinomial logit model will suffice.

Ordered Logit Models
Often we have ordinal measures for which we cannot necessarily assume that the categories are equally spaced; this means, of course, that OLS is inappropriate.
Likert questionnaire items for opinions, social class (depending on the conceptualization), level of education (rather than years), etc. are all examples of dependent variables for which OLS may not be appropriate.
If we can, we would also like to preserve the ordered character of the data, so a multinomial logit model is not our first choice.
Ordered logit models or ordered probit models can often (but not always) provide a better alternative.
The simplest and most commonly used of these models is the proportional-odds model.

Ordered Logit Models: Proportional-odds Model
Assume a latent continuous variable ξ (e.g., attitudes toward abortion) is a linear function of the Xs plus a random error.
A survey is unable to tap the concept in great detail, so we rely on a 5-point scale. In other words, the latent continuous variable has been divided into 5 categories.
The range of ξ is then divided into m = 5 regions by m-1 = 4 boundaries, $\alpha_1 < \alpha_2 < \cdots < \alpha_{m-1}$.
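The mapping from the latent variable to the observed categories can be sketched with Python's bisect module. The boundary values are hypothetical; the convention assumed here is that category j is observed when $\alpha_{j-1} < \xi \le \alpha_j$.

```python
from bisect import bisect_left

def observed_category(latent, thresholds):
    """Return the ordinal category (1..m) into which a latent value falls.

    thresholds: increasing boundaries alpha_1 < ... < alpha_{m-1}.
    Category j corresponds to alpha_{j-1} < latent <= alpha_j,
    with alpha_0 = -inf and alpha_m = +inf.
    """
    # bisect_left counts how many boundaries lie strictly below `latent`
    return bisect_left(thresholds, latent) + 1

# Hypothetical boundaries for a 5-point scale (m = 5, so m - 1 = 4 boundaries)
BOUNDARIES = [-1.5, -0.5, 0.5, 1.5]
```

For example, a latent value of 0.0 lies above two boundaries and falls in category 3; a value exactly on a boundary is assigned to the lower category.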

Ordered Logit Models: Proportional-odds Model (2)
The cumulative probability distribution of Y is easily found: it gives the probability that Y falls in category j or below, where j is the category of interest.
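One common way to write the latent-variable model and the resulting cumulative probabilities is sketched below. It assumes the parameterization used by R's polr (intercept absorbed into the thresholds, with $\mathbf{x}_i'\boldsymbol{\beta} = \beta_1 X_{i1} + \cdots + \beta_k X_{ik}$) and a logistic error; other software flips the sign convention, which is why the logit can refer to "below versus above" or the reverse. Replacing Λ with the standard normal CDF Φ gives the ordered probit model.

```latex
\xi_i = \mathbf{x}_i'\boldsymbol{\beta} + \varepsilon_i,
\qquad Y_i = j \quad\text{if}\quad \alpha_{j-1} < \xi_i \le \alpha_j,
\qquad \alpha_0 = -\infty,\ \alpha_m = +\infty

\Pr(Y_i \le j) = \Pr(\varepsilon_i \le \alpha_j - \mathbf{x}_i'\boldsymbol{\beta})
               = \Lambda(\alpha_j - \mathbf{x}_i'\boldsymbol{\beta}),
\qquad \Lambda(z) = \frac{1}{1 + e^{-z}}

\operatorname{logit}\Pr(Y_i \le j) = \alpha_j - \mathbf{x}_i'\boldsymbol{\beta}
\quad\Longleftrightarrow\quad
\operatorname{logit}\Pr(Y_i > j) = \mathbf{x}_i'\boldsymbol{\beta} - \alpha_j,
\qquad j = 1, \dots, m-1
```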

Ordered Logit Models: Proportional-odds Model (3)
The ordered logit model can then be written in either of two equivalent forms.
The logits are cumulative logits that contrast the categories above category j with category j and below; fitted values therefore give us cumulative probabilities.
The same slopes appear in every cumulative logit, which means that the regression slopes moving from one category to the next are equal.
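Turning cumulative logits into category probabilities can be sketched in Python under the polr-style convention Pr(Y ≤ j) = Λ(α_j - x′β); the thresholds are hypothetical. The final check in the usage note shows the equal-slopes property: shifting the linear predictor moves every cumulative logit by the same amount.

```python
import math

def logistic(z):
    return 1.0 / (1.0 + math.exp(-z))

def logit(p):
    return math.log(p / (1.0 - p))

def ordered_logit(eta, thresholds):
    """Cumulative and category probabilities under the proportional-odds model.

    eta:        linear predictor x'beta for one case (intercept absorbed in thresholds)
    thresholds: alpha_1 < ... < alpha_{m-1}
    Pr(Y <= j) = logistic(alpha_j - eta); Pr(Y = j) = Pr(Y <= j) - Pr(Y <= j - 1).
    """
    cumulative = [logistic(a - eta) for a in thresholds] + [1.0]
    category = [c - prev for c, prev in zip(cumulative, [0.0] + cumulative[:-1])]
    return cumulative, category

# Hypothetical thresholds for m = 3 categories
ALPHAS_3 = [-1.0, 1.0]
```

Comparing eta = 0.5 with eta = 0.0, each cumulative logit logit(Pr(Y ≤ j)) drops by exactly 0.5, whichever j is used.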

Ordered Logit Models: Proportional-odds Model (4)
The Parallel Slopes Assumption (also known as the proportional odds assumption) requires that the separate equations for each category differ only in their intercepts.
In other words, the slopes are the same when going from each category to the next.

Ordered Logit Models: Proportional-odds Model (5)
The ordered logit model fits only one coefficient, $\beta$, for each X, but a separate intercept for each category except the reference category.
The first category (or last) is set as a reference category to which all the intercepts relate.
Interpretation
We interpret the coefficients in exactly the same way as for the binary logit model, except that rather than referring to a single baseline category, we contrast each category and those below it with all the categories above it.
In other words, the logit tells us the log of the odds that Y is in one category or below versus above that category (or vice versa, depending on the software).

Ordered Logit Models: A Caution
Often the categories for an ordinal variable are chosen arbitrarily.
An important substantive criterion for the ordered logit model is that the results remain consistent regardless of how the dependent variable is cut into categories.
If the categories are redefined (e.g., by joining adjacent categories), the substantive conclusions of the model should remain the same; i.e., the regression coefficients should be the same regardless of how many categories the variable is divided into.
If this condition can't be met, we cannot have confidence in the results, and might instead consider a multinomial logit model.

Proportional Odds models in R
Example: Women's Participation (1)
Proportional odds models in R are fit using the polr function in the MASS package.
We specify logit or probit using the method argument:
Logit: polr(formula, data, method = "logistic")
Probit: polr(formula, data, method = "probit")
Continuing with the Women's Labor-Force Data in Fox (1997), we examine the propensity to work outside the home (measured as full-time, part-time, not working), but this time treating the DV as an ordered factor.

Proportional Odds models in R
Example: Women's Participation (2)
The odds of moving up one category in participation for women with children present are $e^{-1.972} = .139$ times what they are for women who do not have children at home.
An effect display can shed more light on the model.
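The arithmetic behind these odds ratios is easy to sketch. In the Python example below (Python is used only for illustration; the slides use R), a logit coefficient B becomes an odds ratio $e^{B}$, or a percentage change $100(e^{B} - 1)$, and a reported odds ratio can be turned back into the coefficient it implies. The implied coefficient for the rounded .077 from the multinomial example is only approximate.

```python
import math

def odds_ratio(coef):
    """Multiplicative change in the odds for a one-unit increase in X."""
    return math.exp(coef)

def percent_change_in_odds(coef):
    """Percentage change in the odds for a one-unit increase in X."""
    return 100.0 * (math.exp(coef) - 1.0)

def implied_coefficient(ratio):
    """Back out the logit coefficient implied by a reported odds ratio."""
    return math.log(ratio)
```

For instance, odds_ratio(-1.972) is about .139 (roughly an 86% reduction in the odds), and implied_coefficient(.077) is about -2.564.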

R-script for Effect Display


Effect Display for the Ordered Logit Model
[Figure: fitted probabilities of not working, part-time and full-time work plotted against husband's income (0 to 40), in two panels: Children Absent and Children Present; y-axis: Fitted Probability (0.0 to 1.0)]

Effect Display for the Multinomial Logit Model
[Figure: fitted probabilities of not working, part-time and full-time work plotted against husband's income (0 to 40), in two panels: Children Absent and Children Present; y-axis: Fitted Probability (0.0 to 1.0)]

Testing the Proportional Odds Assumption
The fitted probabilities for the ordered logit and multinomial logit models differ somewhat, especially for the part-timers.
It is of interest, then, to assess further which model fits the data best. We can do two things:
1. Compare the Akaike information criterion (AIC) for the two models:
AIC = -2 × log-likelihood + 2p, where p is the number of parameters in the model (smaller is better)
2. The ordered model is nested within the multinomial model, allowing the use of an analysis of deviance to compare the fit of the two models. If the multinomial logit model fits better, the proportional odds assumption has not been met.
There is no function for this test in R (nor does the anova function apply to proportional odds and multinomial logit models), but it is simple to create a function that compares the fit of the two models.
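The comparison can be sketched as a small function. This is a hedged Python illustration, not the author's R function: it takes each model's maximized log-likelihood and number of parameters, computes both AICs, and forms the likelihood-ratio statistic G² = 2(log L_multinomial - log L_ordered), referred to a χ² distribution with degrees of freedom equal to the difference in parameter counts. The χ² tail uses a closed form valid for integer df only. With two predictors and a three-category response, for example, the ordered model has 2 slopes + 2 thresholds = 4 parameters and the multinomial model 2 × 3 = 6, so df = 2.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability Pr(chi-square_df > x) for a positive integer df."""
    y = x / 2.0
    if df % 2 == 0:
        # even df: exp(-y) * sum_{i=0}^{df/2 - 1} y**i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= y / i
            total += term
        return math.exp(-y) * total
    # odd df: erfc(sqrt(y)) + exp(-y) * sum_{i=1}^{(df-1)/2} y**(i - 0.5) / Gamma(i + 0.5)
    total = math.erfc(math.sqrt(y))
    for i in range(1, (df - 1) // 2 + 1):
        total += math.exp(-y) * y ** (i - 0.5) / math.gamma(i + 0.5)
    return total

def compare_fits(loglik_ordered, k_ordered, loglik_multinom, k_multinom):
    """AIC for each model plus the likelihood-ratio test of ordered vs multinomial."""
    g2 = 2.0 * (loglik_multinom - loglik_ordered)
    df = k_multinom - k_ordered
    return {
        "aic_ordered": -2.0 * loglik_ordered + 2 * k_ordered,
        "aic_multinom": -2.0 * loglik_multinom + 2 * k_multinom,
        "G2": g2,
        "df": df,
        "p_value": chi2_sf(g2, df),
    }
```

With hypothetical log-likelihoods of -100 (ordered, 4 parameters) and -95 (multinomial, 6 parameters), compare_fits gives AICs of 208 and 202, G² = 10 on 2 df, and p ≈ .0067, which would favour the multinomial model.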

Testing the Proportional Odds Assumption (2)
R function

Testing the Proportional Odds Assumption (3)
The statistically significant difference between the two models indicates that the proportional odds assumption is not met.
The AIC is also smaller for the multinomial model, indicating that it fits better.
In this case, then, I choose the multinomial logit model.

Ordered Probit Models
These models parallel the proportional odds logit models; that is, all the same assumptions and tests apply.
As with logit models, fitted probabilities can be calculated to help with interpretation.
As mentioned earlier, the polr function will fit these models, but an alternative function, vglm, is included in the VGAM package (from Thomas Yee).
Continuing with the Women's labour force example, the ordered probit model is fit simply.
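The only change from the ordered logit is the error distribution: Pr(Y ≤ j) = Φ(α_j - x′β), with Φ the standard normal CDF. A minimal Python sketch, assuming hypothetical thresholds and the same sign convention as polr:

```python
import math

def std_normal_cdf(z):
    """Standard normal CDF, Phi(z), via the complementary error function."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

def ordered_probit_probs(eta, thresholds):
    """Category probabilities with Pr(Y <= j) = Phi(alpha_j - eta)."""
    cumulative = [std_normal_cdf(a - eta) for a in thresholds] + [1.0]
    return [c - prev for c, prev in zip(cumulative, [0.0] + cumulative[:-1])]
```

With thresholds at -1 and 1 and eta = 0, the middle category receives Φ(1) - Φ(-1) ≈ .683 of the probability.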

Ordered Probit Models (2)


Generalized Linear Models (GLM)
The linear model is usually not suitable for non-continuous dependent variables:
1. Non-normal errors pose a problem for efficiency
2. Heteroskedasticity can cause both efficiency problems and bias in the standard errors
3. Nonlinearity: the assumption of linearity, $E(\varepsilon_i) = 0$, is usually not met
4. Nonsensical predictions can occur
These failures lead us to the Generalized Linear Model, which generalizes and adapts the assumptions of the linear model to types of outcomes other than continuous variables.
A large set of families of models can be accommodated under this framework.

Comparing the GLM to the Linear Model
The linear model finds the conditional mean of Y given the Xs. It assumes, then, that this conditional mean is a linear function of the Xs and that the errors are independent and normally distributed with constant variance.
The GLM parallels this with much more flexible assumptions.

Generalized Linear Model (GLM): Three components
1. As in the general linear model, the influence of the explanatory variables is still linear:
$\eta_i = \alpha + \beta_1 X_{i1} + \beta_2 X_{i2} + \cdots + \beta_k X_{ik}$
where $\eta$ is called the linear predictor.
2. The relationship between $\eta$ and the modelled mean $\mu$ is generalized from $\mu_i = \eta_i$ to $g(\mu_i) = \eta_i$, where g is the link function, which links the response to the linear predictor through a transformation.
3. The assumption that the errors $\varepsilon$ are normally distributed is generalized to the assumption that $\varepsilon$ (and thus the residuals) has a specified exponential family distribution.

Common GLMs
The main requirement is that $g(\mu)$ can take any value (positive or negative), so that linear dependence on the explanatory variables makes sense.
The link must also be monotonic and differentiable.

The table below gives some important examples
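Some common links, each paired with its inverse, can be sketched in Python as follows. Each link is monotonic and maps the range of the mean (the whole line, (0, ∞), or (0, 1)) onto the whole real line, as the requirement above demands. The family pairings in the comments are typical choices, not an exhaustive list.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()   # mean 0, sd 1

# link name -> (g: mean -> linear predictor, g_inverse: linear predictor -> mean)
LINKS = {
    # identity: usual link for the Gaussian family (ordinary linear model)
    "identity": (lambda mu: mu, lambda eta: eta),
    # logit: usual link for binomial data; maps (0, 1) onto the real line
    "logit": (lambda mu: math.log(mu / (1.0 - mu)),
              lambda eta: 1.0 / (1.0 + math.exp(-eta))),
    # probit: alternative binomial link; inverse of the standard normal CDF
    "probit": (_STD_NORMAL.inv_cdf, _STD_NORMAL.cdf),
    # log: usual link for Poisson counts (also common for gamma); maps (0, inf) onto the real line
    "log": (math.log, math.exp),
}
```

Applying a link and then its inverse returns the original mean, e.g. LINKS["logit"][1](LINKS["logit"][0](0.3)) gives 0.3 up to rounding.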

GLMs are fitted using maximum likelihood and Iterative Weighted Least Squares (see McCullagh and Nelder, 1999: Chapter 2).
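To make IWLS concrete, here is a minimal sketch for a Poisson model with a log link and a single predictor. It illustrates the algorithm under those assumptions and is not R's glm implementation. At each step it forms the working response z = η + (y - μ)/μ and weights w = μ, solves the weighted least-squares normal equations, and repeats until the coefficients stop changing. No standard errors are computed.

```python
import math

def poisson_iwls(x, y, tol=1e-10, max_iter=50):
    """Fit log(mu_i) = b0 + b1 * x_i to counts y by iterative weighted least squares."""
    b0, b1 = math.log(sum(y) / len(y)), 0.0    # start from the constant-only fit
    for _ in range(max_iter):
        sw = swx = swxx = swz = swxz = 0.0
        for xi, yi in zip(x, y):
            eta = b0 + b1 * xi
            mu = math.exp(eta)
            z = eta + (yi - mu) / mu           # working response
            w = mu                             # working weight (Poisson, log link)
            sw += w
            swx += w * xi
            swxx += w * xi * xi
            swz += w * z
            swxz += w * xi * z
        det = sw * swxx - swx * swx
        new_b0 = (swxx * swz - swx * swxz) / det   # solve the 2x2 normal equations
        new_b1 = (sw * swxz - swx * swz) / det
        done = abs(new_b0 - b0) < tol and abs(new_b1 - b1) < tol
        b0, b1 = new_b0, new_b1
        if done:
            break
    return b0, b1
```

With a binary predictor the answer is known in closed form (exp(b0) is the mean count when x = 0 and exp(b0 + b1) the mean when x = 1), which makes the sketch easy to check: poisson_iwls([0, 0, 0, 1, 1, 1], [1, 2, 3, 4, 5, 6]) returns approximately (log 2, log 2.5).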

GLMs: Interpretation (1)
Interpretation depends on the link for the model:
1. Linear model
A unit increase in X increases the mean ($\mu$) of Y by $\beta_1$.
2. Logit-linear model (logit model)
A unit increase in X changes the logit by $\beta_1$ and multiplies the odds by $\exp(\beta_1)$; the effects are multiplicative on the odds.
Used for binary dependent variables.

GLMs: Interpretation (2)
3. Poisson model
A unit increase in X increases $\log\mu$ by $\beta_1$, i.e., multiplies $\mu$ by $\exp(\beta_1)$; the effect of explanatory variables on the mean is multiplicative.
Used for count data.
4. Gamma model
A unit increase in X increases $\log\mu$ by $\beta_1$, i.e., multiplies $\mu$ by $\exp(\beta_1)$; the effect of explanatory variables on the mean is multiplicative.
Used for continuous data with a gamma distribution.

GLMs: Interpretation (3)
Interpretation of the individual coefficients for a multiple regression is the same as in the single-predictor case, but in all cases follows the OLS interpretation in that it holds the other variables constant.
Interpretation of the coefficients from GLMs requires care, however:
Aside from the OLS model, these models are nonlinear, so the coefficients alone are difficult to interpret.
This is especially the case with interaction terms.
Usually complementary measures, such as fitted values and graphs, are needed for a good understanding of the results.

Transformations versus GLM link (1)
Using OLS with a transformed dependent variable is often an acceptable alternative to the GLM.
Transformation of Y and using a link function with the same transformation are NOT the same, however.
The OLS model assumes normally distributed errors when using the transformed variable.
If we cannot make this assumption, the GLM is a better alternative.
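The difference is easiest to see in an intercept-only model. OLS on log Y estimates the mean of log Y, so back-transforming gives the geometric mean of Y; a log-link GLM (Poisson or gamma) estimates the log of the mean of Y, so back-transforming gives the arithmetic mean. A Python sketch with hypothetical data:

```python
import math

def arithmetic_mean(values):
    return sum(values) / len(values)

def ols_log_intercept_only(values):
    """OLS on log(y) with only an intercept, back-transformed: the geometric mean."""
    return math.exp(arithmetic_mean([math.log(v) for v in values]))

def log_link_intercept_only(values):
    """Intercept-only Poisson or gamma GLM with a log link: exp(b0) equals the mean of y."""
    return arithmetic_mean(values)

Y = [1.0, 10.0, 100.0]   # hypothetical positive responses
```

For these values the transformed-OLS approach gives 10 while the log-link GLM gives 37, so the two approaches describe different quantities even before any predictors are added.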

Transformations versus GLM link (2)
Here is an example comparing an OLS regression with a log transformation of Y to a Gamma model with a log link.
[Figure: scatterplot comparing the two models; x-axis: OLS with log transformation (0 to 15000); y-axis: Gamma model with log link (0 to 15000)]

Model Fit and Deviance for GLMs
Evaluation of GLMs relies on the deviance.
Recall that the deviance of a model can be equated to the residual sum of squares from OLS regression.
Each family of models requires a different calculation for the deviance, however.
We can compare the deviance of a constant-only model to that of the full model, which means we can also find an R² analogue.
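Two of these family-specific calculations are sketched below in Python with hypothetical inputs. For the Gaussian family the deviance is exactly the residual sum of squares; for the Poisson family it is D = 2Σ[y log(y/μ̂) - (y - μ̂)], where observations with y = 0 contribute only 2μ̂.

```python
import math

def gaussian_deviance(y, mu):
    """For the Gaussian family the deviance is the residual sum of squares."""
    return sum((yi - mi) ** 2 for yi, mi in zip(y, mu))

def poisson_deviance(y, mu):
    """2 * sum[y*log(y/mu) - (y - mu)], with y*log(y/mu) taken as 0 when y == 0."""
    total = 0.0
    for yi, mi in zip(y, mu):
        term = yi * math.log(yi / mi) if yi > 0 else 0.0
        total += term - (yi - mi)
    return 2.0 * total
```

A model whose fitted means equal the observed counts has Poisson deviance 0; poorer fits give larger values, just as a larger residual sum of squares signals a poorer OLS fit.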

Significance Tests (1)
Hypothesis tests and confidence intervals follow the same general procedures as for all MLEs:
Tests for individual slopes, $H_0\colon \beta_j = \beta_j^{(0)}$, are based on the Wald statistic, $Z_0 = (B_j - \beta_j^{(0)}) / \widehat{\mathrm{SE}}(B_j)$.
Tests that several slopes are 0, $H_0\colon \beta_j = \cdots = \beta_q = 0$, are carried out using the now familiar analysis of deviance, which is based on the generalized likelihood-ratio test.
The test is distributed as $\chi^2$ with degrees of freedom equal to the number of parameters removed from the model.
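A minimal Wald test in Python, assuming an estimate and its standard error have already been obtained (the numbers in the usage note are hypothetical): Z₀ is compared with the standard normal distribution, and equivalently Z₀² with χ² on 1 df.

```python
import math

def wald_test(estimate, std_error, null_value=0.0):
    """Wald test of H0: beta = null_value.

    Returns (z, chi_square, two_sided_p); z**2 is the 1-df chi-square form.
    """
    z = (estimate - null_value) / std_error
    p_two_sided = math.erfc(abs(z) / math.sqrt(2.0))   # equals 2 * (1 - Phi(|z|))
    return z, z * z, p_two_sided
```

An estimate of 1.96 with a standard error of 1 gives z = 1.96 and a two-sided p of about .05.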

R² Analogue
An R² analogue can be obtained from the log likelihood.
If the fitted model perfectly predicts Y-values (i.e., $P_i = 1$ when $y_i = 1$, and $P_i = 0$ when $y_i = 0$), then $\log_e L = 0$. In other words, the maximized likelihood is $L = 1$.
If the model predictions are less than perfect, $\log_e L < 0$ and the maximized likelihood is $0 < L < 1$.
The degree to which our model improves predictions over the constant-only model can be assessed by comparing the deviance under the two models.
The R² analogue is then easily calculated from $G_1^2$, the deviance for the fitted model, and $G_0^2$, the deviance for the intercept-only model.
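Assuming the common deviance-ratio form $R^2 = 1 - G_1^2 / G_0^2$, a sketch for ungrouped binary data follows (hypothetical data). With 0/1 outcomes the saturated model has log L = 0, so each deviance is simply -2 log L; this ties the formula to the perfect-prediction case described above, where $G_1^2 = 0$ and the analogue equals 1.

```python
import math

def binary_deviance(y, p):
    """-2 log L for 0/1 outcomes y and fitted probabilities p."""
    loglik = 0.0
    for yi, pi in zip(y, p):
        loglik += math.log(pi) if yi == 1 else math.log(1.0 - pi)
    return -2.0 * loglik

def r2_analogue(y, p_fitted):
    """1 - G1^2 / G0^2, with the intercept-only model predicting the sample proportion."""
    p_null = sum(y) / len(y)
    g2_0 = binary_deviance(y, [p_null] * len(y))
    g2_1 = binary_deviance(y, p_fitted)
    return 1.0 - g2_1 / g2_0
```

For y = [1, 0, 1, 1] and fitted probabilities [.9, .2, .8, .7], the analogue is about .596.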

GLMs in R
GLMs can be fit easily with the glm function (it will not fit multinomial or ordered models, however: multinom in the nnet library fits multinomial models; polr in MASS fits ordered models).
Most of the functions available for the lm function are also available for the glm function (e.g., residuals(), coefficients(), anova(), Anova(), etc.).
It is important that the correct family is specified.
The default is family=gaussian, which means that a regular OLS model will be fit.
For families with more than one possible link function, it may also be necessary to specify the link.

Poisson Regression Models
It is common to have response variables that are counts.
For example, number of associations respondents belong to, number of corporate interlocks, etc.
Count variables typically have a Poisson distribution (i.e., the highest frequency of counts is at 0, and the frequency rapidly decreases through the range of the variable).
By implication, the residuals from a linear model will not be normally distributed, and OLS is no longer the appropriate model.
Poisson regression models assume a Poisson distribution for the random component.
Other possible models for count data include:
Quasi-Poisson
Negative binomial
Zero-inflated Poisson (ZIP)

Poisson Regression Models (2)
The link function for a Poisson model is the log link: $\log\mu_i = \alpha + \beta_1 X_{i1} + \cdots + \beta_k X_{ik}$.
This looks similar to an OLS regression with a logged dependent variable, but differs because it also assumes the Poisson distribution for the residuals, and IWLS is used for estimation.
In other words, we treat the variable as counts rather than as continuous.
For Poisson models, a unit increase in X has a multiplicative impact of $e^{B}$ on $\mu$: the mean of Y at x+1 is equal to the mean of Y at x multiplied by $e^{B}$.
If B = 0, the multiplicative effect is 1: $e^{B} = e^{0} = 1$.
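The multiplicative interpretation can be checked numerically. In the Python sketch below (hypothetical coefficients), the ratio of fitted means at x + 1 and x is exp(B) for every x, and equals 1 when B = 0. The percent_change helper converts B into "% more" phrasing, e.g. exp(B) = 1.2 means 20% more.

```python
import math

def fitted_mean(b0, b1, x):
    """Log link: mu = exp(b0 + b1 * x)."""
    return math.exp(b0 + b1 * x)

def rate_ratio(b0, b1, x):
    """Mean at x + 1 divided by mean at x; equals exp(b1) whatever x is."""
    return fitted_mean(b0, b1, x + 1) / fitted_mean(b0, b1, x)

def percent_change(b1):
    """Percentage change in the mean for a one-unit increase in X."""
    return 100.0 * (math.exp(b1) - 1.0)
```

For example, rate_ratio(0.2, 0.1, x) is about 1.105 at x = 0, 3, or 10, and percent_change(math.log(1.2)) is 20.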

Fitting a Poisson Regression Model:
Example: Voluntary organizations
The data are a subset from the 1981-91 World Values Survey (n=4803). The variables are as follows:
ASSOC: Number of voluntary associations to which the respondent belongs (ranges from 0-15)
SEX, AGE
SES: unskilled, skilled, middle, upper
COUNTRY: Great Britain, Canada, USA
The goal is to assess differences in membership by SES and COUNTRY, and whether SES effects are different across countries (i.e., an interaction between SES and COUNTRY).
We begin by inspecting the distribution of the dependent variable, ASSOC.

Distribution of ASSOC
We see here that the distribution of the number of associations to which respondents belong has many 0s and a positive skew.
A Poisson regression model is thus appropriate.
[Figure: Histogram of ASSOC; x-axis: ASSOC (0 to 15); y-axis: Frequency (0 to 3000)]

Poisson Regression Model
No interaction model (1)
I begin by fitting a model excluding the interaction (notice that I specify family=poisson, and thus the log link is specified by default).

Poisson Regression Model
No interaction model (2)
For effects without interactions, we can determine the multiplicative effect on the fitted value for each 1-unit increase in X by calculating exp(B).
Holding everything else constant, on average, Americans belong to approximately 20% more voluntary organizations than Canadians (the reference category).
This simple interpretation is lost, however, in the presence of interactions.

Poisson Regression Model (2)


Assessing the Interaction
As usual, the first step is to determine whether the interaction is statistically significant using an analysis of deviance.
The Anova function in the car package works here (as it does for all models of glm class).
Assuming the effects are significant, it is helpful to calculate fitted values for the different groups.
The allEffects function in the effects package gives fitted values using the same principles as for the linear and logit models (it does so for all glm objects):
For all Xs not of interest, the means are substituted into the fitted equation.
Fitted values are then found by varying the Xs of interest.
Because we use the log link to map the linear predictor onto Y, exponentiating the fitted linear predictor gives the effect on the Y scale (allEffects does this automatically).
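The fitted-value logic above can be mimicked in a few lines. This is a hedged illustration of the idea, not the effects package's implementation, and every coefficient in it is hypothetical (SEX and the other SES and country levels are omitted for brevity). Covariates not of interest are held at their means, the linear predictor is built for each SES-by-country cell, and exp() maps it back to the count scale.

```python
import math

# Hypothetical coefficients on the log scale (NOT the estimates from the slides)
INTERCEPT = -0.3
SES = {"unskilled": 0.0, "upper": 0.6}          # reference: unskilled
COUNTRY = {"Canada": 0.0, "USA": 0.1}           # reference: Canada
SES_X_COUNTRY = {("upper", "USA"): 0.5}         # interaction terms (others are 0)
COVARIATE_SLOPES = {"AGE": 0.01}
COVARIATE_MEANS = {"AGE": 40.0}                 # covariates held at their means

def fitted_count(ses, country):
    """Fitted mean count for one SES-by-country cell, other Xs at their means."""
    eta = INTERCEPT + SES[ses] + COUNTRY[country]
    eta += SES_X_COUNTRY.get((ses, country), 0.0)
    eta += sum(COVARIATE_SLOPES[v] * COVARIATE_MEANS[v] for v in COVARIATE_SLOPES)
    return math.exp(eta)                        # inverse of the log link

def ses_ratio(country):
    """Upper versus unskilled ratio of fitted counts within one country."""
    return fitted_count("upper", country) / fitted_count("unskilled", country)
```

Because of the interaction term, the upper-versus-unskilled ratio is exp(0.6) in Canada but exp(1.1) in the USA, which is why a single exp(B) no longer summarizes the SES effect.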

Assessing the Interaction (2)
The interaction is statistically significant, so I proceed to find the fitted values.

Assessing the Interaction (3)
The class differences are largest in the US, and smallest in Canada.
Holding all other variables at their means, upper SES Americans belong to 2.85 voluntary organizations on average, while unskilled Americans belong to only .87 organizations on average.

Some other models for count data
An assumption of the Poisson model is that the variance is equal to the mean.
If the variance is larger than the mean (i.e., there is overdispersion), the Poisson model is not appropriate.
In such cases, three alternative models are the quasi-Poisson model, the negative binomial model, and the zero-inflated Poisson model (ZIP).
All three models explicitly model the overdispersion, though they can give slightly different results:
The quasi-Poisson model models the variance as a linear function of the mean.
The negative binomial model models the variance as a quadratic function of the mean.
The zero-inflated Poisson model explicitly takes into consideration a distribution characterized by an abundance of zeros.
A practical way to assess which fits best is to compare the AIC or BIC values (for models with a true likelihood; the quasi-Poisson model has none, so R reports no AIC for it).
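A quick check for overdispersion, and the two variance functions named above, can be sketched in Python (hypothetical counts). The negative binomial form shown is the common "NB2" parameterization with shape θ, which is an assumption here. A variance-to-mean ratio well above 1 suggests the Poisson assumption is too restrictive.

```python
from statistics import mean, variance

def dispersion_ratio(counts):
    """Sample variance divided by sample mean; about 1 for Poisson data."""
    return variance(counts) / mean(counts)

def quasi_poisson_variance(mu, phi):
    """Quasi-Poisson: variance proportional to (linear in) the mean."""
    return phi * mu

def negative_binomial_variance(mu, theta):
    """Negative binomial (NB2): variance quadratic in the mean."""
    return mu + mu ** 2 / theta
```

For counts such as [0, 0, 0, 1, 2, 10] the ratio is about 7, a clear sign of overdispersion.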

Summary and Conclusions
The linear model fails to accommodate categorical dependent variables, but a transformation of π (the probability) allows us to fit more sensible models.
The most commonly used is the logit model:
Binary logit models (dichotomous variable)
Proportional-odds logit models (ordered variable)
Multinomial logit models (several non-ordered categories)
Interpretation of logit models:
$e^{b}$ tells us the odds ratio for an event occurring as X goes up by one; this interpretation is convenient in a simple model without interaction terms.
Models with interaction terms are intrinsically complicated, but their results can be simplified with fitted probabilities.

Summary and Conclusions (2)
The probit model gives nearly identical fitted probabilities to those of the logit model when using binary data (the coefficients differ in scale).
Interpretation of probit models:
The coefficient $B_1$ from a probit model is interpreted as the average increase in Z (the underlying latent variable) for a one-unit increase in $X_1$, holding all other Xs constant.
Simply put, fitted probits are standardized values of Z.
As with logit models, interpretation of probit models can often be improved by calculating fitted probabilities.
If the IIA assumption is violated, multinomial probit models perform better than multinomial logit models.
Since multinomial logit models are typically easier to estimate, however, they are usually preferred.

Summary and Conclusions (3)
Generalized linear models are a generalization of the linear model to outcomes other than quantitative variables.
A link function, chosen according to the distribution of the dependent variable, is used to map the response to a linear predictor.
Logit models and probit models are two frequently used sets of models:
Binary logit models are for dichotomous variables.
Proportional-odds logit models are for ordered data.
Multinomial logit models are for a dependent variable with many categories that do not have a systematic order.
Poisson models typically work well for count data.

Next Week:
Wednesday: Detecting nonnormality, heteroskedasticity and collinearity
Thursday: Detecting outliers
Assignment #2 due on Wednesday


- Work GRP 2 Multinomial Probit and Logit Models ExamplesDocumento5 páginasWork GRP 2 Multinomial Probit and Logit Models ExamplesDeo TuremeAinda não há avaliações

- Alternatives To Logistic Regression (Brief Overview)Documento5 páginasAlternatives To Logistic Regression (Brief Overview)He HAinda não há avaliações

- Business Econometrics Using SAS Tools (BEST) : Class XI and XII - OLS BLUE and Assumption ErrorsDocumento15 páginasBusiness Econometrics Using SAS Tools (BEST) : Class XI and XII - OLS BLUE and Assumption ErrorsPulkit AroraAinda não há avaliações

- Econometrics - Session V-VI - FinalDocumento43 páginasEconometrics - Session V-VI - FinalAparna JRAinda não há avaliações

- Econometric Lec7Documento26 páginasEconometric Lec7nhungAinda não há avaliações

- ListcoefDocumento76 páginasListcoefBrian RogersAinda não há avaliações

- Lecture 4 Intro To ML 27 03 2023 27032023 041559pmDocumento50 páginasLecture 4 Intro To ML 27 03 2023 27032023 041559pmGaylethunder007Ainda não há avaliações

- Fit Indices For SEMDocumento17 páginasFit Indices For SEMRavikanth ReddyAinda não há avaliações

- GLMDocumento26 páginasGLMNirmal RoyAinda não há avaliações

- Logistic RegressionDocumento41 páginasLogistic RegressionSubodh KumarAinda não há avaliações

- Practical - Logistic RegressionDocumento84 páginasPractical - Logistic Regressionwhitenegrogotchicks.619Ainda não há avaliações

- Unit VDocumento27 páginasUnit V05Bala SaatvikAinda não há avaliações

- Econometrics II CH 1Documento48 páginasEconometrics II CH 1nigusu deguAinda não há avaliações

- Assumptions For Regression Analysis: MGMT 230: Introductory StatisticsDocumento3 páginasAssumptions For Regression Analysis: MGMT 230: Introductory StatisticsPoonam NaiduAinda não há avaliações

- Logistic RegressionDocumento30 páginasLogistic RegressionSafaa KahilAinda não há avaliações

- Probit Logit IndianaDocumento62 páginasProbit Logit Indianamb001h2002Ainda não há avaliações

- 09-Limited Dependent Variable ModelsDocumento71 páginas09-Limited Dependent Variable ModelsChristopher WilliamsAinda não há avaliações

- Q. 1 How Is Mode Calculated? Also Discuss Its Merits and Demerits. AnsDocumento13 páginasQ. 1 How Is Mode Calculated? Also Discuss Its Merits and Demerits. AnsGHULAM RABANIAinda não há avaliações

- ADM2304 Multiple Regression Dr. Suren PhansalkerDocumento12 páginasADM2304 Multiple Regression Dr. Suren PhansalkerAndrew WatsonAinda não há avaliações

- Modelos - Sem15 - Logit - Probit - Logistic RegressionDocumento8 páginasModelos - Sem15 - Logit - Probit - Logistic RegressionEmmanuelAinda não há avaliações

- 3 Linear Regression 3Documento10 páginas3 Linear Regression 3neuro.ultragodAinda não há avaliações

- Regression CookbookDocumento11 páginasRegression CookbookPollen1234Ainda não há avaliações

- Lec - SP MethodsDocumento82 páginasLec - SP MethodsverdantoAinda não há avaliações

- A Comparison of Univariate Probit and Logit Models Using SimulationDocumento21 páginasA Comparison of Univariate Probit and Logit Models Using SimulationLotfy LotfyAinda não há avaliações

- Regression PDFDocumento33 páginasRegression PDF波唐Ainda não há avaliações

- Logit & Probit ModelDocumento51 páginasLogit & Probit ModelVinayakaAinda não há avaliações

- Stat 250 Gunderson Lecture Notes Relationships Between Categorical Variables 12: Chi Square AnalysisDocumento17 páginasStat 250 Gunderson Lecture Notes Relationships Between Categorical Variables 12: Chi Square AnalysisDuckieAinda não há avaliações

- Us20 AllisonDocumento10 páginasUs20 Allisonpremium info2222Ainda não há avaliações

- 04 16 Simple RegressionDocumento47 páginas04 16 Simple RegressionWAWAN HERMAWANAinda não há avaliações

- David A. Kenny June 5, 2020Documento9 páginasDavid A. Kenny June 5, 2020Anonymous 85jwqjAinda não há avaliações

- Logit and SpssDocumento37 páginasLogit and SpssSagn MachaAinda não há avaliações

- Introduction To Multi-Level Models: Statistical Background On MlmsDocumento20 páginasIntroduction To Multi-Level Models: Statistical Background On MlmsSabrinamcarlosAinda não há avaliações

- Linear Models BiasDocumento17 páginasLinear Models BiasChathura DewenigurugeAinda não há avaliações

- Multiple Models Approach in Automation: Takagi-Sugeno Fuzzy SystemsNo EverandMultiple Models Approach in Automation: Takagi-Sugeno Fuzzy SystemsAinda não há avaliações

- Common Letter of Recommendation: Schools Using The Common LOR FormDocumento13 páginasCommon Letter of Recommendation: Schools Using The Common LOR FormrahulsukhijaAinda não há avaliações

- 30 Day Plan Magoosh Excel WorksheetDocumento59 páginas30 Day Plan Magoosh Excel WorksheetrahulsukhijaAinda não há avaliações

- Recent DS Collection 1Documento30 páginasRecent DS Collection 1karthykAinda não há avaliações

- Please Click On Individual Worksheets Below To View The Study Plan For SC, CR, RC, and IRDocumento24 páginasPlease Click On Individual Worksheets Below To View The Study Plan For SC, CR, RC, and IRrahulsukhijaAinda não há avaliações

- 3.due To Vs Because ofDocumento5 páginas3.due To Vs Because ofMayank PareekAinda não há avaliações

- Flashcards - Quantitative ReviewDocumento108 páginasFlashcards - Quantitative ReviewEduardo KidoAinda não há avaliações

- Comprehensive Gmat Idiom ListDocumento12 páginasComprehensive Gmat Idiom ListDeepak KumarAinda não há avaliações

- Howto ArgparseDocumento12 páginasHowto ArgparserahulsukhijaAinda não há avaliações

- GMAT ScheduleDocumento4 páginasGMAT ScheduleJitta Raghavender RaoAinda não há avaliações

- Ron Purewal SC Mgmat TipsDocumento12 páginasRon Purewal SC Mgmat TipsTin PhanAinda não há avaliações

- Math Book GMAT ClubDocumento126 páginasMath Book GMAT Clubudhai170819Ainda não há avaliações

- ExtendingDocumento100 páginasExtendingrahulsukhijaAinda não há avaliações

- DistutilsDocumento102 páginasDistutilsrahulsukhijaAinda não há avaliações

- Data Science Hiring Guide PDFDocumento56 páginasData Science Hiring Guide PDFrahulsukhijaAinda não há avaliações

- Duarte SBTemplate DownloadDocumento25 páginasDuarte SBTemplate DownloadrahulsukhijaAinda não há avaliações

- Quandl - Pandas, SciPy, NumPy Cheat Sheet PDFDocumento4 páginasQuandl - Pandas, SciPy, NumPy Cheat Sheet PDFrahulsukhijaAinda não há avaliações

- Python TutorialDocumento87 páginasPython TutorialCristiano PalmaAinda não há avaliações

- PythonForDataScience PDFDocumento1 páginaPythonForDataScience PDFMaisarah Mohd PauziAinda não há avaliações

- ML Performance Improvement CheatsheetDocumento11 páginasML Performance Improvement Cheatsheetrahulsukhija100% (1)

- Descriptive Statistics+probabilityDocumento3 páginasDescriptive Statistics+probabilityrahulsukhija0% (1)

- Principal: Component AnalysisDocumento29 páginasPrincipal: Component AnalysisrahulsukhijaAinda não há avaliações

- Gold CollectionDocumento2 páginasGold CollectionrahulsukhijaAinda não há avaliações

- Statistics ProbabilityDocumento1 páginaStatistics ProbabilityrahulsukhijaAinda não há avaliações

- First Steps To Investing A Beginners Guide Prithvi Haldea PDFDocumento21 páginasFirst Steps To Investing A Beginners Guide Prithvi Haldea PDFnagaravikrishnadiviAinda não há avaliações

- 6 SCMDocumento10 páginas6 SCMrahulsukhijaAinda não há avaliações

- Statistics ProbabilityDocumento1 páginaStatistics Probabilityrahulsukhija50% (2)

- Statistics ProbabilityDocumento1 páginaStatistics ProbabilityrahulsukhijaAinda não há avaliações

- A Purposeful Selection of Variables Macro For Logistic RegressionDocumento5 páginasA Purposeful Selection of Variables Macro For Logistic RegressionrahulsukhijaAinda não há avaliações

- Statnews #78 What Is Survival Analysis?Documento3 páginasStatnews #78 What Is Survival Analysis?rahulsukhijaAinda não há avaliações

- B Boehler-Fox-Cel Se en v1Documento1 páginaB Boehler-Fox-Cel Se en v1tacosanchezbrayanAinda não há avaliações

- Avogadro's NumberDocumento5 páginasAvogadro's NumberM J RhoadesAinda não há avaliações

- 1962 Fallout Shelter DesignDocumento218 páginas1962 Fallout Shelter DesignLouie_popwhatski100% (1)

- 27 Excel Add-InsDocumento10 páginas27 Excel Add-Insgore_11Ainda não há avaliações

- Lecture 1 U & DDocumento33 páginasLecture 1 U & DMr DrayAinda não há avaliações

- Earth and Life Science Copy (Repaired)Documento39 páginasEarth and Life Science Copy (Repaired)Aaron Manuel MunarAinda não há avaliações

- Dry Gas Seal Systems - Part 2Documento3 páginasDry Gas Seal Systems - Part 2Jai-Hong ChungAinda não há avaliações

- Crankshaft Axial Vibration AnalysisDocumento8 páginasCrankshaft Axial Vibration Analysisanmol6237100% (1)

- Astm E2583Documento3 páginasAstm E2583Eliana salamancaAinda não há avaliações

- StiffenerDocumento12 páginasStiffenergholiAinda não há avaliações

- Leaded Receptacle Switch BrochureDocumento18 páginasLeaded Receptacle Switch BrochureArturo De Asis SplushAinda não há avaliações

- Tutorial 5 - Entropy and Gibbs Free Energy - Answers PDFDocumento5 páginasTutorial 5 - Entropy and Gibbs Free Energy - Answers PDFAlfaiz Radea ArbiandaAinda não há avaliações

- Potential Kinetic EnergyDocumento32 páginasPotential Kinetic EnergyKathjoy ParochaAinda não há avaliações

- MathsDocumento2 páginasMathsAditya Singh PatelAinda não há avaliações

- Herschel 400 2 Log BookDocumento29 páginasHerschel 400 2 Log BookEveraldo FaustinoAinda não há avaliações

- Weeemake TrainingDocumento46 páginasWeeemake Trainingdalumpineszoe100% (1)

- Marta Ziemienczuk DissertationDocumento134 páginasMarta Ziemienczuk DissertationLetalis IraAinda não há avaliações

- Acara 4 GranulometriDocumento63 páginasAcara 4 GranulometriHana Riwu KahoAinda não há avaliações

- Nonlinear Systems: Lyapunov Stability Theory - Part 2Documento36 páginasNonlinear Systems: Lyapunov Stability Theory - Part 2giacomoAinda não há avaliações

- GMAT QUANT TOPIC 3 (Inequalities + Absolute Value) SolutionsDocumento46 páginasGMAT QUANT TOPIC 3 (Inequalities + Absolute Value) SolutionsBhagath GottipatiAinda não há avaliações

- Chapter 16. PolymersDocumento4 páginasChapter 16. PolymersAnonAinda não há avaliações

- Electrical Power Plans For Building ConstructionDocumento16 páginasElectrical Power Plans For Building ConstructionKitz DerechoAinda não há avaliações

- Quick Guide To Beam Analysis Using Strand7Documento15 páginasQuick Guide To Beam Analysis Using Strand7Tarek AbulailAinda não há avaliações

- Curriculum I Semester: SL - No Subject Code Subject Name Category L T P CreditsDocumento23 páginasCurriculum I Semester: SL - No Subject Code Subject Name Category L T P CreditsRathinaKumarAinda não há avaliações

- Shore ScleroscopeDocumento6 páginasShore ScleroscopeAaliyahAinda não há avaliações

- Segui 6e ISM Ch08Documento105 páginasSegui 6e ISM Ch08miraj patelAinda não há avaliações

- CFBC DesignDocumento28 páginasCFBC DesignThanga Kalyana Sundaravel100% (2)

- Steam Attemperation Valve and Desuperheater Driven Problems On HRSG'sDocumento25 páginasSteam Attemperation Valve and Desuperheater Driven Problems On HRSG'stetracm100% (1)

- Local Buckling Analysis Based On DNV-OS-F101 2000Documento4 páginasLocal Buckling Analysis Based On DNV-OS-F101 2000shervinyAinda não há avaliações