
Test-Bed Implementation of a Cross-Layer

Framework for Video Streaming over IEEE 802.11

Ad-Hoc Wireless Network

Abstract - Video streaming over bandwidth-constrained and

highly unpredictable wireless environment has prompted

innovative cross-layer design strategies in the literature.

However, most of the existing cross-layer designs lack the

experimental validation of their real-life performance capability.

In this paper, we have developed a test-bed that implements a

recently proposed cross-layer framework for video streaming

applications over IEEE 802.11 based ad-hoc wireless networks.

The test-bed allows joint adaptation of the transcoding rate (at

the application layer) with the number of redundant forward

error correction packets (at the data link layer). When the

proposed framework is invoked, the experimental results show

that the video transcoding rate is adapted according to the

channel, resulting in a reduced number of lost video frames,

which contributes to a better video quality.

Keywords - cross-layer design; IEEE 802.11; experimentation;

video streaming; wireless networks

I. INTRODUCTION

With the advancements and wide deployment of wireless

local area networks (WLANs) and state-of-the-art video

compression tools, wireless multimedia streaming applications

are emerging in homes, campuses, meeting rooms, telemedicine,

training facilities, et cetera. The main technical

issues in designing wireless video streaming applications

include: support of different client requirements, error-control,

adaptive transcoding of the pre-coded video to channel

bandwidth, resource management amongst the different

applications, and timely information delivery without

exceeding the stringent delay requirements. To resolve these

issues, many cross-layer based frameworks have been proposed

in the literature, e.g., [1], [2], [3], which provide efficient

solutions for video streaming applications. However, cross-layer

solutions are sometimes too complex to be implemented over a

real-life network. What is needed is a cross-layer solution that

is not only analytically tractable and improves system

performance, but is also simple to implement.

Recently, we have developed a cross-layer framework [3]

that jointly interfaces with the application and data-link layers

of the TCP/IP protocol stack. The proposed framework

performs joint adaptation of the transcoding rate (at the

application layer) and the number of redundant forward error

correction (FEC) packets (at the data-link layer). In this paper,

we describe the implementation of the proposed framework on

a test-bed and present results from testing and experimentation.

To our knowledge, there exist only a few implementations

of cross-layer solutions over wireless test-beds in the literature.

For example, a cross-layer framework, called XIAN, is

developed and implemented over a test-bed in [4], where the

authors have provided some experimental results on how the

expected transmission count (ETX) metric, implemented within

the XIAN framework through the automated XIAN nano-protocol,

makes efficient QoS routing decisions. Although both the

kernel and user space functions are clearly defined in [4], the

interaction between the lower layers (e.g., data-link layer) and

upper layers (such as application layer) is not given, which is

crucial in video streaming applications. Video streaming

requires the transcoding rate of the incoming bit-stream to

change with the channel conditions, and therefore requires the

physical and data-link layers to interact with the application

layer. The test-bed model presented in this paper differs from

that of [4] in providing access to the application layer from the

data-link layer through the integration of kernel and user space

functions. Similarly, a

buffer-controlled adaptive video streaming test-bed is presented

in [5], where the available buffer sizes at the hand-held devices

drive the rate-control schemes to adapt the transcoding rate to

the channel conditions. Our work differs from the test-bed

model given in [5] because we take real-time transcoding

decisions into consideration, instead of having a limited number

of rate-control schemes. This makes our test-bed model more

flexible and adaptive to the changing wireless conditions. The

authors of [6] have considered a peer-to-peer mobile ad-hoc

network for implementing a cross-layer feedback control

scheme for video communication over unstructured tactical

mobile networks. Our work augments the work presented in

[6] by extending the feedback information to the application

layer, such that the transcoding rate can also be changed, in

conjunction with the data-link layer parameters, e.g., number of

redundant FEC packets.

The motivation for this work is driven by the need to assess

the capabilities of our proposed cross-layer framework in a

real-life setting. Results from experimentation

demonstrate a reduction in frame loss, achieved via the

adaptation of the application layer parameters to the channel

conditions.

The paper is organized as follows. Section II summarizes

the details of the cross-layer framework presented in [3], which

is to be implemented over the test-bed. In section III, we

describe a detailed design of the test-bed and show how the

information is exchanged between the kernel and user spaces.

Experimentation results are given and discussed in section IV,

while section V concludes the paper.

Azfar Moid and Abraham O. Fapojuwo

Department of Electrical and Computer Engineering

The University of Calgary, AB, T2N 1N4, Canada

{amoid@ucalgary.ca, fapojuwo@ucalgary.ca}

978-1-4244-5213-4/09/$26.00 ©2009 IEEE

Authorized licensed use limited to: University of Allahabad. Downloaded on May 18,2010 at 20:59:05 UTC from IEEE Xplore. Restrictions apply.

II. CROSS-LAYER FRAMEWORK FOR VIDEO STREAMING

In our previous work [3], we have developed a cross-layer

framework that interfaces with the application and data-link

layers of the TCP/IP protocol stack, as shown in Fig. 1. The

framework, called cross-layer module (CLM), consists of four

main elements, which are briefly summarized here. The first

element of the CLM is the channel estimator, which is

responsible for estimating the current channel conditions.

These channel conditions are extracted from the information on

the packet transmission attempts, available at the data-link

layer. The estimated channel information is then fed to the

other three elements. The second element of the CLM is the

buffer controller, which uses the channel information from the

channel estimator to control the application layer's buffer

overflow and/or underflow. The transcoding controller is the

third element of the CLM, which calculates the video

transcoding rate in real-time, based on the information

available from the channel estimator and the buffer controller.

The final element of the CLM is the FEC/ARQ controller,

which optimally calculates the number of redundant FEC

packets required for providing the error resilience functionality,

based on the estimated channel information. By combining all

the four elements of the CLM, we have achieved an efficient

and reliable video streaming solution for the IEEE 802.11

wireless networks.
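As a rough illustration of the decision the FEC/ARQ controller has to make, the sketch below sizes the redundancy for a block of k source packets. It is not the optimization derived in [3]. It assumes an ideal erasure code (any k of the n transmitted packets recover the block), independent packet losses with a known probability, and a hypothetical delivery target of 99 percent. The function names and the `max_extra` cap are also illustrative.

```python
from math import comb


def delivery_probability(n: int, k: int, loss_prob: float) -> float:
    """Probability that at least k of n packets arrive (independent losses)."""
    ok = 1.0 - loss_prob
    return sum(comb(n, i) * ok**i * loss_prob ** (n - i) for i in range(k, n + 1))


def redundant_packets(k: int, loss_prob: float,
                      target: float = 0.99, max_extra: int = 64) -> int:
    """Smallest number of extra packets that meets the delivery target.

    Assumption: an ideal erasure code, so any k received packets suffice.
    Returns max_extra if the target cannot be met within the cap.
    """
    for extra in range(max_extra + 1):
        if delivery_probability(k + extra, k, loss_prob) >= target:
            return extra
    return max_extra


if __name__ == "__main__":
    for p in (0.0, 0.05, 0.1, 0.2):
        print(f"loss={p:.2f} -> extra packets: {redundant_packets(10, p)}")
```

Under these assumptions the redundancy grows with the estimated loss probability, which is the qualitative behaviour expected from a controller driven by the channel estimator.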

In this work, we have implemented the CLM on an IEEE

802.11 based wireless network, operating in an ad-hoc mode.

For practical reasons, ad-hoc mode is chosen over

infrastructure mode, due to the unavailability of the proprietary

source code of an access point's (AP) firmware that is required

in an infrastructure mode. On the other hand, open source

drivers of the network interface cards (NIC) are readily

available, making it easier for us to implement the cross-layer

framework in an ad-hoc mode. Fig. 2 illustrates the ad-hoc

based wireless network for video streaming, consisting of a

video server and a video client. The original video files are

stored at the video server, which are transcoded and transmitted

to the video client. The CLM is implemented at the video

server (transmitter), and decoder functionality is implemented

at the client (receiver), where video frames are eventually

received, buffered and rendered to the client's terminal. Also,

throughout the paper, a packet refers to an IEEE 802.11

data-link layer protocol data unit, whereas a frame denotes a video

frame at the application layer.

III. TEST-BED CONSTRUCTION DETAILS

The primary goal of the test-bed is to determine and

evaluate the quality of the streamed video, in the presence of

the proposed cross-layer framework. The gain in video quality,

when the cross-layer framework is invoked, will be compared

against the scenario where no such framework is used. The

test-bed will also provide a scaled-down model of a real-life

video streaming wireless network, over which different

performance evaluation metrics could be monitored.

The test-bed we have developed is restricted by three main

constraints: 1) A maximum of 11 Mbps data-rate is defined for

the IEEE 802.11b wireless devices working in ad-hoc mode [7]

(however, a few proprietary ad-hoc networks can surpass the

limit of 11 Mbps [8]); 2) Operating system dependence: the

wireless driver can only be implemented using an open source

operating system, such as Linux; and 3) Media player

dependence: only the open source media players (e.g.,

VideoLAN Client, VLC) give access to the application layer

coding. Note that these constraints are driven only by

practical considerations, and do not affect the functionality of

the test-bed.

The video server is a Linux based machine, running

Fedora 10 with a custom-built kernel (2.6.27.21-170). The

processor speed of the video server is 3.0GHz, with the RAM

size of 1GB. We have used a Windows XP (SP3) based

machine as the video receiver, with an Intel Pentium M

Processor running at 1.60GHz, and a RAM size of 512MB.

Linux was chosen at the video server because of its ability to

provide access to the source code of different device drivers

and the kernel core. Due to the popularity of Windows based

client devices, the choice of Windows machine as a client

device is also justified. The video server has a built-in Intel

WiFi card 4965-AGN for which we have used the iwlwifi

driver, available at [9]. On the other hand, a Linksys dual-band,

Atheros based external PCMCIA card (model number:

WPC55AG) was used on the Windows machine, installed with

the Linksys driver (ver. 3.3.0.1561). Both cards are configured

on channel 10 for the IEEE 802.11 ad-hoc mode, at a speed of

11 Mb/s. WEP encryption was also enabled on both machines

to avoid any malicious connection.

Figure 1. Augmented TCP/IP stack with the proposed cross-layer module (CLM) [3]

Figure 2. Ad-Hoc mode IEEE 802.11 wireless test-bed for video streaming

The test-bed software architecture is divided into two

levels: kernel space and user space. The kernel space is composed

of basic networking functionalities available under Linux,

which directly interacts with the wireless driver module (i.e.,

iwlwifi in this case) when the driver is loaded. The wireless

driver can also be a part of the Linux kernel, but the highly

modular design of the kernel suggests that the driver be a

loadable module. On the other hand, user space contains all the

application programming interfaces (APIs), with which the

application layer programs interact, such as video encoder and

decoder. The interaction between the kernel space and user

space is made possible through the use of kernel hooks.

To implement the cross-layer framework, the first step is to

access the information on the number of packet transmission

attempts, available at the loadable module level of the Linux

kernel. In order to transport this information to the higher

layers, a kernel hook is created at the iwlwifi driver that copies

the packet transmission count to the /proc file system. The

/proc file system serves as an abstraction layer between the

kernel and user spaces. The video encoder, at the application

layer, accesses this information at the /proc file system to

calculate the new target bit-rate for the next video frame. As

given in [3], the target bit-rate of the next video frame is

adjusted with a rate-adjustment factor of 0.8, 1, and 1.2,

corresponding to three channel states: bad, moderate and good,

respectively. Once the refined target bit-rate is obtained, the

next step is to determine the quantization parameter (QP) for the

next video frame. A large QP (>40) generates a lower bit-rate

video stream, and a small QP (<20) generates a higher bit-rate

video stream. We have used an initial value of QP=40 to avoid

packet drops caused by a high bit-rate.

The QP for the subsequent frames is calculated by [10]:

$$R(y) = \mathrm{MAD}(y)\left[\frac{X_1}{QP(y)} + \frac{X_2}{QP^{2}(y)}\right], \qquad (1)$$

where R is the target bit-rate for the next video frame y, and MAD is the

mean absolute difference of the motion information between a

reference frame and a predicted frame (used to measure the

frame complexity). The constants $X_1$ and $X_2$ are model

parameters, which represent the texture and non-texture bits,

respectively, and are updated after transcoding every frame [10].
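The rate adjustment and QP selection described above can be sketched as follows. The sketch assumes the quadratic rate model of [10] in the form R = MAD (X1/QP + X2/QP^2), solved for its positive root, and clamps the result to the 0 to 51 QP range of H.264. The function names, the clamping and the fallback to the largest QP are assumptions made for illustration, not the code used in the test-bed.

```python
import math

# Rate-adjustment factors for the three channel states, as given in the text.
RATE_FACTORS = {"bad": 0.8, "moderate": 1.0, "good": 1.2}


def adjust_target_bitrate(current_bps: float, channel_state: str) -> float:
    """Scale the target bit-rate of the next frame by the channel-state factor."""
    return current_bps * RATE_FACTORS[channel_state]


def solve_qp(target_bits: float, mad: float, x1: float, x2: float,
             qp_min: float = 0.0, qp_max: float = 51.0) -> float:
    """Solve R = MAD * (X1/QP + X2/QP^2) for QP (assumed form of the model).

    Rearranged: R*QP^2 - MAD*X1*QP - MAD*X2 = 0; the positive root is used.
    """
    if target_bits <= 0 or mad <= 0:
        return qp_max  # nothing sensible to solve: use the coarsest quantizer
    a, b, c = target_bits, -mad * x1, -mad * x2
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return qp_max
    qp = (-b + math.sqrt(disc)) / (2.0 * a)
    return min(max(qp, qp_min), qp_max)


if __name__ == "__main__":
    # Illustrative numbers only; X1 and X2 would be updated after each frame.
    rate = adjust_target_bitrate(268_000 / 30, "bad")
    print(solve_qp(rate, mad=5.0, x1=1000.0, x2=2000.0))
```

With positive model parameters the required rate falls as QP rises, so a "bad" channel state (factor 0.8) yields a larger QP than a "good" one for the same frame complexity.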

For a given maximum number of packet transmission

attempts at the data-link layer, the framework also calculates

the number of redundant FEC packets to be added to the video

stream. In Linux, the iwconfig command at the user space

provides control over the data-link layer parameters, such as

maximum number of packet transmission attempts. We have

configured the wireless driver iwlwifi to utilize the MAC-

802.11 subsystem for the Intel 4965-AGN network connection

adapter. We have also modified the function:

iwl4965_tx_status_reply_tx(.), available at iwl-4965.c, to get

the packet transmission information. This information is then

passed to the application layer through the socket buffer control

block, accessed via IEEE80211_SKB_CB. This control block is used to transport

the packet transmission count to the /proc file system, ready to

be processed at the application layer.
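A minimal user-space sketch of the reading side is given below. The entry name `/proc/clm_tx_count`, its format (a single decimal integer) and the thresholds that map the attempt count to a channel state are all hypothetical; the paper specifies neither the entry name nor the classification rule.

```python
from pathlib import Path

# Hypothetical /proc entry written by the kernel hook; the real name is not
# given in the paper.
PROC_ENTRY = Path("/proc/clm_tx_count")


def read_tx_attempts(path=PROC_ENTRY) -> int:
    """Read the packet transmission count exported by the driver hook.

    Assumption: the entry holds one decimal integer followed by a newline.
    """
    return int(Path(path).read_text().strip())


def classify_channel(avg_attempts: float,
                     good_max: float = 1.5, moderate_max: float = 3.0) -> str:
    """Map average transmission attempts per packet to a channel state.

    The two thresholds are illustrative placeholders, not values from [3].
    """
    if avg_attempts <= good_max:
        return "good"
    if avg_attempts <= moderate_max:
        return "moderate"
    return "bad"


if __name__ == "__main__":
    try:
        print(classify_channel(read_tx_attempts()))
    except (FileNotFoundError, ValueError):
        print("no usable /proc entry on this machine")
```

Passing the path as a parameter keeps the sketch testable on a machine without the modified driver, since any regular file with the same format can stand in for the /proc entry.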

The VideoLAN project has developed an excellent open source

media player, known as VLC media player, which supports

many video and audio codecs and file formats [11]. The VLC

media player is platform-independent, hence we have used it as

both the video streaming server and video client on Linux and

Windows machines, respectively. The video packets are

encoded according to the real-time transport protocol (RTP)

standard and sent to the video client over user datagram

protocol (UDP). The transrate.c file, available at

module/stream_out/transrate, is where each

video frame is transcoded. The transrate_video_process(.)

function is modified such that it reads the channel information

available at /proc file systems and calculates a new QP,

according to (1). The video transcoding rate is thus matched

to the present network conditions. The video frame

is then packetized and VLC opens the /proc file system again,

which is associated with the packet transmission and reception.

The video packets are copied to the transmission buffer, and

they are eventually transmitted. This mechanism, which adapts

the application layer according to the information available at

the data-link layer, serves as the nucleus of the test-bed

implementation. The open source nature of the implementation

provides versatility, allowing the test-bed to be used for testing

the extensions of the cross-layer design proposed in [3].
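To make the RTP-over-UDP packetization mentioned above concrete, the sketch below builds the 12-byte fixed RTP header (version 2, no padding, no extension, no CSRC entries) in front of a payload. It is not VLC's packetizer. The dynamic payload type 96 and the 90 kHz video clock (3000 ticks per frame at 30 frames/sec) are assumptions chosen for illustration.

```python
import struct

RTP_VERSION = 2


def build_rtp_packet(payload: bytes, seq: int, timestamp: int, ssrc: int,
                     payload_type: int = 96, marker: bool = False) -> bytes:
    """Prefix the payload with a fixed 12-byte RTP header."""
    byte0 = RTP_VERSION << 6                      # V=2, P=0, X=0, CC=0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    header = struct.pack("!BBHII", byte0, byte1,
                         seq & 0xFFFF,            # 16-bit sequence number
                         timestamp & 0xFFFFFFFF,  # 32-bit media timestamp
                         ssrc & 0xFFFFFFFF)       # 32-bit source identifier
    return header + payload


if __name__ == "__main__":
    ticks_per_frame = 90_000 // 30  # assumed 90 kHz clock, 30 frames/sec
    pkt = build_rtp_packet(b"frame-data", seq=1,
                           timestamp=ticks_per_frame, ssrc=0x1234, marker=True)
    print(len(pkt), pkt[:12].hex())
```

A packet built this way could be handed to a UDP socket with `socket.sendto`; the receiver can use the sequence number to detect the lost packets that the experiments in section IV count.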

To generate the background traffic over the wireless

network, we have used the open source uftp and uftpd programs

(available at [12]) on Linux and Windows machines,

respectively. As the video packets are sent over UDP, we have

used UDP for the background traffic as well.

IV. EXPERIMENTAL RESULTS AND DISCUSSION

In order to examine the benefits of the proposed cross-layer

framework for video streaming networks, we have performed

several sets of experiments with three video clips having slow,

medium and fast motion content. The clips are recorded at a rate

of 30 frames/sec with a frame size of 320x240 pixels, and encoded

at 268 kbps. Background traffic, in the form of FTP load, is a file

transfer of 700 MB over UDP.

We have used the following two performance evaluation

metrics for this study: 1) number of lost video frames at the

video client and 2) video transcoding rate measured at the

video server. A video frame is considered lost when it does not

arrive in its allowed maximum time or it is corrupted in a way

that the error correction schemes could not recover it. The

direct method of analyzing the objective video quality (e.g.,

peak signal-to-noise ratio, PSNR) requires the availability of

reference frame(s) at the video client, and thus cannot be used on

this test-bed. On the other hand, the fluctuations in the video

transcoding rate show how the application layer is adapting to

the channel conditions. The VLC media player, used at the

application layer, provides a graphical user interface (GUI) to

monitor the video transcoding rate in real-time. On the video

server, we have used an open source screen capture tool,

RecordMyDesktop ver. 0.3.8.1 (available at [13]), to capture

the video transcoding rate in real-time.
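The lost-frame criterion stated above (late arrival, or corruption that error correction cannot repair) can be expressed as a small predicate. The record layout below is a hypothetical one introduced for illustration; the paper does not describe how the client logs frames.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class FrameRecord:
    """Hypothetical per-frame log entry kept at the video client."""
    frame_id: int
    deadline_s: float            # latest acceptable arrival time
    arrival_s: Optional[float]   # None if the frame never arrived
    recovered: bool = True       # False if error correction could not repair it


def is_lost(rec: FrameRecord) -> bool:
    """A frame is lost if it is missing, late, or unrecoverably corrupted."""
    if rec.arrival_s is None or rec.arrival_s > rec.deadline_s:
        return True
    return not rec.recovered


def count_lost(records: Iterable[FrameRecord]) -> int:
    """Number of lost frames in a sequence of records."""
    return sum(1 for rec in records if is_lost(rec))


if __name__ == "__main__":
    log = [
        FrameRecord(0, deadline_s=0.10, arrival_s=0.05),
        FrameRecord(1, deadline_s=0.13, arrival_s=0.20),                   # late
        FrameRecord(2, deadline_s=0.17, arrival_s=None),                   # missing
        FrameRecord(3, deadline_s=0.20, arrival_s=0.18, recovered=False),  # corrupted
    ]
    print(count_lost(log))
```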


The performance of the cross-layer framework is measured

and compared under loading and no-loading conditions. The

network is considered loaded when both the video streaming

and background traffic are present at any given time. On the

other hand, no-loading condition refers to the network state

when only the video streaming packets constitute the wireless

traffic. Four kinds of experiments are performed: 1) no loading

condition, w/o the framework, 2) no loading condition, w/ the

framework, 3) loading condition, w/o the framework, and 4)

loading condition, w/ the framework.
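The four experiment kinds form a 2 x 2 grid of conditions (loading on or off, framework on or off), repeated for each clip. A minimal sketch of enumerating the runs is shown below; the dictionary keys and clip labels are illustrative names, not identifiers from the test-bed.

```python
from itertools import product

CLIPS = ("clip1_slow", "clip2_medium", "clip3_fast")  # illustrative labels


def experiment_matrix():
    """List the four conditions in the order used in the text."""
    return [{"loading": loading, "framework": framework}
            for loading, framework in product((False, True), repeat=2)]


def all_runs(clips=CLIPS):
    """Every clip paired with every condition."""
    return [dict(clip=clip, **cond)
            for clip, cond in product(clips, experiment_matrix())]


if __name__ == "__main__":
    for run in all_runs():
        print(run)
```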

In each experiment, the location of the video server does

not change, while the client machine moves away from the

server as each experiment progresses. All the experiments are

performed at the Engineering Building A Block (a three storey

building), at the University of Calgary. Each experiment starts

at time t=0min, with both the server and client machines located

in room ENA-119 on the first floor. The client machine is taken

to the ENA first floor's lobby at t=1min, creating an

approximate distance of 15m between the server and client

machines. At t=3min, the client machine is taken to the second

floor's lobby, at a distance of 20m from the server. A distance

of approximately 25m is created between the server and client

machines, when the client is moved to the third floor at t=5min.

As the client machine is taken towards the far end of the third

floor at t=7min, the connection between the server and client

drops, and the experiment is terminated. This experimental

detail is also summarized in Table I. Both the video streaming

and background file transfer (under loading condition) start at

t=0min.

A. Number of Lost Frames

Figure 3 shows the number of lost video frames over the

course of experiments. Note that all the three video clips follow

the same pattern as they are encoded at the same bit-rate. When

video traffic is the only traffic present in the network, depicting

no-loading condition, the results are shown in Fig. 3a. It is seen

from Fig. 3a that the number of lost video frames is reduced by up

to 50 percent as the distance between server and client machine

increases, when the cross-layer framework is invoked. With an

increase in the distance between client and server machines, the

VLC application reduces the transcoding rate in order to match

the network conditions, causing smaller video frame sizes to be

transmitted over the network. This allows more video

frames to get through the network without being dropped.

When the network is loaded, a similar pattern is observed in

Fig. 3b. Comparing Figs. 3a and 3b, it is observed that the

number of lost video frames is increased in the presence of

background traffic. Shared bandwidth and increased distance

between the video server and client machine cause more video

frames to get dropped.

B. Video Transcoding Rate

Video transcoding rate, measured in bits per second (bps),

refers to the number of bits used to transcode the video frames

in one second. When the cross-layer framework is not invoked,

it is seen in Fig. 4 that the video transcoding rates do not

change significantly over time, for all the three video clips,

under both loading and no-loading conditions. Without the

cross-layer framework, the VLC application encodes the video

stream according to the available channel bandwidth. When the

cross-layer framework is invoked, it is seen in Figs. 4a and 4b

that if the server and client machines are not very far apart

(e.g., both machines are on the first floor), the transcoding rate

does not change significantly because of the good channel

condition. As the distance between the server and client

machines increases (after t=2min), the cross-layer framework

causes the transcoding rate to decrease such that the frame loss

can be minimized. A continuous drop in the video transcoding

rate, even when the client machine is at a stationary position, is

due to the cross-layer design implemented at the server side,

causing the transcoding rate to drop by 20 percent for each

subsequent frame. This allows a graceful degradation in video

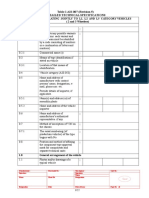

TABLE I

EXPERIMENTS PERFORMED OVER THE TIME

Time

t (min)

Location / Distance

0 Start the experiment at ENA 119 (1m)

1 Start moving to ENG-A, first floor lobby

2 Stay still at ENG-A, first floor lobby (15 m)

3 Start moving to ENG-A, second floor lobby

4 Stay still at ENG-A, second floor lobby (20 m)

5 Start moving to ENG-A, third floor

6 Stay still at ENG-A, third floor (25 m)

7 Start moving to ENG-A, third floor far end

0

200

400

600

800

1000

0 1 2 3 4 5 6 7

Time (min)

N

u

m

b

e

r

o

f

L

o

s

t

F

r

a

m

e

s

Clip 1, w/o fw

Clip 2, w/o fw

Clip 3, w/o fw

Clip 1, w/ fw

Clip 2, w/ fw

Clip 3, w/ fw

0

200

400

600

800

1000

0 1 2 3 4 5 6 7

Time (min)

N

u

m

b

e

r

o

f

L

o

s

t

F

r

a

m

e

s

Clip 1, w/o fw

Clip 2, w/o fw

Clip 3, w/o fw

Clip 1, w/ fw

Clip 2, w/ fw

Clip 3, w/ fw

(a) (b)

Figure 3. Number of lost video frames vs. time (a) no-loading condition, (b) loading condition

2968

Authorized licensed use limited to: University of Allahabad. Downloaded on May 18,2010 at 20:59:05 UTC from IEEE Xplore. Restrictions apply.

quality, unlike a frozen video screen (or a complete blackout)

observed in the absence of the cross-layer framework.

Comparing the loading and no-loading conditions, it is seen in

Fig.4 that the video content are transcoded at a lesser bit-rate,

when the network is loaded with the background traffic. When

the wireless bandwidth is shared by the video streaming

packets and background traffic, the probability of a packet drop

increases, causing a trigger to the application layer to reduce

the transcoding rate.

Clearly, a reduction in the number of lost video frames has

a direct positive impact on the received video quality.

However, the effect of adapting the video transcoding rate on

the received video quality is not straightforward, and instead

presents a paradox. On one hand, a low transcoding rate helps

reduce the number of lost video frames, thereby enhancing the

video quality, as noted above. On the other hand, a low video

transcoding rate deteriorates the video quality as the video

frames are now encoded with fewer bits. To study

this paradox, a detailed qualitative assessment of the received

video quality is required, which constitutes our future work.

V. CONCLUSION

In this paper, we have developed and presented an ad-hoc

wireless test-bed to implement the cross-layer framework for

video streaming. The test-bed design includes both the

hardware and software components along with some network

monitoring tools to record the system's performance. The

performance of the cross-layer framework is evaluated under

both loading and no-loading conditions and compared against

the cases when no such framework is used. It is found that the

dropped video frames are reduced by up to 50 percent when

the cross-layer framework is invoked, resulting in a better

video quality. The variations in the transcoding rate can also

be seen at the transmitter side, which confirms that the

application layer is adapting according to the current channel

conditions, as expected. It is concluded from the results that

the implemented cross-layer framework provides improved

video quality.

ACKNOWLEDGMENT

The authors acknowledge the support of the University of

Calgary, TRLabs and the Natural Sciences and Engineering

Research Council of Canada (NSERC) for this research.

REFERENCES

[1] S. Mohapatra, N. Dutt, A. Nicolau and N. Venkatasubramanian, "DYNAMO: A Cross-Layer Framework for End-to-End QoS and Energy Optimization in Mobile Handheld Devices," IEEE Journal on Selected Areas in Communications, vol. 25, no. 4, May 2007, pp. 722-737.

[2] M. Nikoupour, A. Nikoupour and M. Dehghan, "A Cross-Layer Framework for Video Streaming over Wireless Ad-Hoc Networks," in Proceedings of the IEEE Third International Conference on Digital Information Management, Nov. 13-16, 2008, pp. 340-345.

[3] A. Moid and A. O. Fapojuwo, "A Cross-Layer Framework for Efficient Streaming of H.264 Video over IEEE 802.11 Networks," Journal of Computer Systems, Networks, and Communications, vol. 2009, Article ID 682813, 13 pages, 2009. doi:10.1155/2009/682813.

[4] H. Aïache, V. Conan, L. Lebrun, J. Leguay, S. Rousseau and D. Thoumin, "XIAN Automated Management and Nano-Protocol to Design Cross-Layer Metrics for Ad Hoc Networking," Lecture Notes in Computer Science, Springer Berlin / Heidelberg, vol. 4982/2008, ISBN 978-3-540-79548-3, pp. 1-13.

[5] Y.-S. Hong, J.-H. Kim and Y.-H. Kim, "A Buffer-Controlled Adaptive Video Streaming for Mobile Devices," International Conference on Convergence Information Technology, Nov. 21-23, 2007, pp. 30-35.

[6] H. Gharavi and K. Ban, "Cross-Layer Feedback Control for Video Communications via Mobile Ad-Hoc Networks," IEEE 58th Vehicular Technology Conference, VTC 2003-Fall, vol. 5, Oct. 6-9, 2003, pp. 2941-2945.

[7] P. Roshan and J. Leary, "802.11 Wireless LAN Fundamentals," Cisco Press, ISBN-10: 1-58705-077-3, 2003.

[8] Online Article, available at: http://labs.pcw.co.uk/2005/03/adhoc-wireless-.html, accessed on May 31, 2009.

[9] Online Reference: http://intellinuxwireless.org/?p=iwlwifi, accessed on May 31, 2009.

[10] Z. G. Li, F. Pan, K. P. Lim, G. N. Feng, X. Lin and S. Rahardja, "Adaptive Basic Unit Layer Rate Control for JVT," JVT-G012, 7th Meeting, Pattaya, Thailand, Mar. 7-14, 2003.

[11] Online Reference: http://www.videolan.org, accessed on May 31, 2009.

[12] Online Reference: http://www.tcnj.edu/~bush/uftp.html, accessed on May 31, 2009.

[13] Online Reference: http://recordmydesktop.sourceforge.net/, accessed on May 31, 2009.

Figure 4. Video transcoding rate vs. time (a) no-loading condition, (b) loading condition. Both panels plot the video transcoding rate (axis labelled bps, 0 to 1000) against time (0 to 7 min) for Clips 1 to 3, without (w/o fw) and with (w/ fw) the framework.


- Team of Rivals: The Political Genius of Abraham LincolnNo EverandTeam of Rivals: The Political Genius of Abraham LincolnNota: 4.5 de 5 estrelas4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaNo EverandThe Unwinding: An Inner History of the New AmericaNota: 4 de 5 estrelas4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreNo EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreNota: 4 de 5 estrelas4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)No EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Nota: 4.5 de 5 estrelas4.5/5 (121)

- Fluid MechDocumento2 páginasFluid MechJade Kristine CaleAinda não há avaliações

- AIS - 007 - Rev 5 - Table - 1Documento21 páginasAIS - 007 - Rev 5 - Table - 1Vino Joseph VargheseAinda não há avaliações

- Mempower Busduct - PG - EN - 6 - 2012 PDFDocumento38 páginasMempower Busduct - PG - EN - 6 - 2012 PDFAbelRamadhanAinda não há avaliações

- Building Materials Reuse and RecycleDocumento10 páginasBuilding Materials Reuse and RecyclemymalvernAinda não há avaliações

- GCCE RaptorDocumento4 páginasGCCE RaptorSayidina PanjaitanAinda não há avaliações

- Design of Slab FormsDocumento27 páginasDesign of Slab FormsZevanyaRolandTualaka100% (1)

- Operating Check List For Disel Generator: Date: TimeDocumento2 páginasOperating Check List For Disel Generator: Date: TimeAshfaq BilwarAinda não há avaliações

- En Mirage Classic Installation GuideDocumento4 páginasEn Mirage Classic Installation GuideMykel VelasquezAinda não há avaliações

- Alternator LSA42.3j enDocumento12 páginasAlternator LSA42.3j enArdi Wiranata PermadiAinda não há avaliações

- HRTC RoutesDiverted Via KiratpurManali Four LaneDocumento9 páginasHRTC RoutesDiverted Via KiratpurManali Four Lanepibope6477Ainda não há avaliações

- West Point Partners Project - OverviewDocumento11 páginasWest Point Partners Project - OverviewhudsonvalleyreporterAinda não há avaliações

- 8D ReportDocumento26 páginas8D ReportEbenezer FrancisAinda não há avaliações

- Performance of Trinidad Gas Reservoirs PDFDocumento11 páginasPerformance of Trinidad Gas Reservoirs PDFMarcus ChanAinda não há avaliações

- Fundamentals and History of Cybernetics 2Documento46 páginasFundamentals and History of Cybernetics 2izzul_125z1419Ainda não há avaliações

- TableauDocumento5 páginasTableaudharmendardAinda não há avaliações

- ASTM D1265-11 Muestreo de Gases Método ManualDocumento5 páginasASTM D1265-11 Muestreo de Gases Método ManualDiana Alejandra Castañón IniestraAinda não há avaliações

- Ms For Demin Water Tank Modification Rev 1 Feb. 28 2011lastDocumento9 páginasMs For Demin Water Tank Modification Rev 1 Feb. 28 2011lastsharif339100% (1)

- TWITCH INTERACTIVE, INC. v. JOHN AND JANE DOES 1-100Documento18 páginasTWITCH INTERACTIVE, INC. v. JOHN AND JANE DOES 1-100PolygondotcomAinda não há avaliações

- Classifications of KeysDocumento13 páginasClassifications of KeyssyampnaiduAinda não há avaliações

- 7810-8110-Ca-1540-651-001 - HP FG KodDocumento68 páginas7810-8110-Ca-1540-651-001 - HP FG Kodgopal krishnan0% (1)

- Best Answer For Each Conversation. You Will Hear Each Conversation TwiceDocumento4 páginasBest Answer For Each Conversation. You Will Hear Each Conversation TwiceQuang Nam Ha0% (1)

- Successful Advertising With Google AdWordsDocumento157 páginasSuccessful Advertising With Google AdWordsJerrod Andrews100% (2)

- LabVIEW Project Report Complete HPK Kumar DetailedDocumento62 páginasLabVIEW Project Report Complete HPK Kumar DetailedDanny Vu75% (4)

- Market Intelligence Case Study Sales & MarketingDocumento20 páginasMarket Intelligence Case Study Sales & MarketingBrian ShannyAinda não há avaliações

- 002 SM MP4054Documento2.009 páginas002 SM MP4054tranquangthuanAinda não há avaliações

- SAFMC 2023 CAT B Challenge Booklet - V14novDocumento20 páginasSAFMC 2023 CAT B Challenge Booklet - V14novJarrett LokeAinda não há avaliações

- 575 Tech Specs Placenta Pit FinalDocumento9 páginas575 Tech Specs Placenta Pit FinalJohn Aries Almelor SarzaAinda não há avaliações

- National Convention National Convention On On Quality Concepts Quality ConceptsDocumento9 páginasNational Convention National Convention On On Quality Concepts Quality ConceptsMadhusudana AAinda não há avaliações

- Incident Report: Executive Vice PresidentDocumento1 páginaIncident Report: Executive Vice PresidentEvan MoraledaAinda não há avaliações