Nur Alfi Laela 20400112016 Pbi 1: Test Types

NUR ALFI LAELA

20400112016

PBI 1

CHAPTER 3: DESIGNING CLASSROOM LANGUAGE TESTS

In this chapter we examine test types as a first step in designing new tests or revising existing ones.

TEST TYPES

Determining the purpose of a test is the first task in designing it, so that we as teachers can focus on the specific objectives of the test.

Language Aptitude Tests

A language aptitude test is designed to measure the capacity or general ability to learn a foreign language. Such tests are ostensibly designed to apply to the classroom learning of any language. The Modern Language Aptitude Test (MLAT), for example, consists of five tasks: number learning, phonetic script, spelling clues, words in sentences, and paired associates. However, there is no unequivocal evidence that language aptitude tests predict communicative success in a language. Moreover, any test that claims to predict success in learning a language is undoubtedly flawed.

Proficiency Tests

The purpose of a proficiency test is to test global competence in a language. A proficiency test is not limited to any one course, curriculum, or single skill in the language; rather, it tests overall ability. It typically includes standardized multiple-choice items on grammar, vocabulary, reading comprehension, and aural comprehension. Proficiency tests are almost always summative and norm-referenced. These kinds of tests are usually not equipped to provide diagnostic feedback. One example of a standardized proficiency test is the TOEFL.

Placement Tests

The purpose of a placement test is to place a student into a particular level or section of a language curriculum or school. It usually includes a sampling of the material to be covered in the various courses in a curriculum. In a placement test, a student should find the test material neither too easy nor too difficult but appropriately challenging. Placement tests come in many varieties: assessing comprehension and production, responding through written and oral performance, and using multiple-choice and gap-filling formats. One example is the English as a Second Language Placement Test (ESLPT) at San Francisco State University.

Diagnostic Tests

The purpose of a diagnostic test is to diagnose specific aspects of a language. These tests offer a checklist of features for the teacher to use in discovering difficulties. A diagnostic test should elicit information on what students need to work on in the future; therefore, it will typically offer more detailed, subcategorized information on the learner. For example, a writing diagnostic test would first elicit a writing sample from the students. The teacher would then examine the organization, content, spelling, grammar, and vocabulary of their writing. Based on that analysis, the teacher would know which student needs should receive special focus.

A typical diagnostic test of oral production was created by Clifford Prator (1972) to accompany a manual of English pronunciation. Test-takers are directed to read a 150-word passage while they are tape recorded. The test administrator then refers to an inventory of phonological items for analyzing a learner's production. After multiple listenings, the administrator produces a checklist of errors in five separate categories. The main categories include:

Stress and rhythm,

Intonation,

Vowels,

Consonants, and

Other factors.

Achievement Tests

An achievement test is related directly to classroom lessons, units, or even a total curriculum. The purpose of achievement tests is to determine whether course objectives have been met, and the appropriate skills acquired, by the end of a period of instruction. Achievement tests should be limited to particular material addressed in a curriculum within a particular time frame. Achievement tests are often summative because they are administered at the end of a unit or term of study, but effective achievement tests can also provide useful washback by showing students their errors and helping them analyze their weaknesses and strengths.

The specifications for an achievement test should be determined by:

The objectives of the lesson, unit, or course being assessed

The relative importance (or weight) assigned to each objective

The tasks employed in classroom lessons during the unit of time.

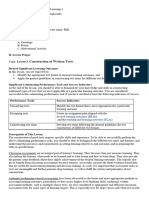

SOME PRACTICAL STEPS TO TEST CONSTRUCTION

Some practical steps in constructing classroom tests:

1) Assessing Clear, Unambiguous Objectives

You should know the purpose of the test you are creating, and what you test should match the curriculum objectives. You cannot possibly test every objective, so choose a feasible subset to evaluate.

2) Drawing Up Test Specifications

In this step, you create a practical outline of your test: which skills you will test and what the items will look like. The objectives must therefore correspond to the skills that are going to be examined.

3) Devising Test Tasks

As you devise your test items, consider such factors as how students will perceive them (face validity), the extent to which authentic language and contexts are present, potential difficulty caused by cultural schemata, the length of the listening stimuli, how well a story line comes across, how things like the cloze testing format will work, and other practicalities.

4) Designing Multiple-Choice Test Items

Hughes (2003, pp. 76-78) cautions against a number of weaknesses of multiple-choice items:

The technique tests only recognition knowledge

Guessing may have a considerable effect on test scores

The technique severely restricts what can be tested

It is very difficult to write successful items

Washback may be harmful

Cheating may be facilitated

If the objective is to design a large-scale standardized test for repeated administrations, then a

multiple choice format does indeed become viable. A primer on terminology:

1. Multiple-choice items are all receptive, or selective, response items in that the test-taker chooses from a set of responses rather than creating a response (commonly called a supply type of response). Other receptive item types include true-false questions and matching lists. (In the discussion here, the guidelines apply primarily to multiple-choice item types and not necessarily to other receptive types.)

2. Every multiple-choice item has a stem, which presents a stimulus, and several (usually

between three and five) options or alternatives to choose from.

3. One of those options, the key, is the correct response, while the others serve as distractors.

Since there will be occasions when multiple-choice items are appropriate, consider the

following four guidelines for designing multiple-choice items for both classroom based and

large-scale situations (adapted from Gronlund, 1998, pp. 60-75 and J.D. Brown, 1996, pp. 54-57)

1. Design each item to measure a specific objective.

2. State both stem and options as simply and directly as possible.

3. Make certain that the intended answer is clearly the only correct one.

4. Use item indices to accept, discard, or revise items.
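Guideline 4 can be illustrated with a small sketch. It assumes the usual definitions of the two most common item indices, which this summary does not spell out: item facility (IF) is the proportion of test-takers who answer an item correctly, and item discrimination (ID) is the difference between the number of correct answers in a high-scoring group and a low-scoring group, divided by the size of one group. The function names and the sample data are hypothetical.

```python
def item_facility(responses):
    """Proportion of test-takers who answered the item correctly.

    responses: list of booleans, one per test-taker (True = correct).
    """
    return sum(responses) / len(responses)


def item_discrimination(high_group, low_group):
    """Difference in correct answers between two equal-sized groups,
    divided by the size of one group. Ranges from -1.0 to 1.0.
    """
    if len(high_group) != len(low_group):
        raise ValueError("comparison groups must be the same size")
    return (sum(high_group) - sum(low_group)) / len(high_group)


# Hypothetical data for one item: the 5 highest and 5 lowest scorers
# on the test as a whole.
high = [True, True, True, True, False]
low = [True, False, False, True, False]

if_value = item_facility(high + low)        # 6 correct out of 10
id_value = item_discrimination(high, low)   # (4 - 2) / 5
print(f"IF = {if_value:.2f}, ID = {id_value:.2f}")
```

As a rough reading, an IF close to 0 or 1 means the item is too hard or too easy to separate test-takers, and an ID near zero or below suggests the item should be revised or discarded.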

SCORING, GRADING, AND GIVING FEEDBACK

Scoring

Your scoring plan reflects the relative weight that you place on each section and on the items in each section.
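As a minimal sketch of such a scoring plan, the following assumes a hypothetical four-section test in which each section's raw score is converted to a proportion and multiplied by the weight the teacher has assigned to that section. The section names, weights, and scores are invented for illustration.

```python
# Hypothetical scoring plan: weights express the relative importance
# of each section and must sum to 1.
WEIGHTS = {"listening": 0.20, "reading": 0.20, "speaking": 0.30, "writing": 0.30}


def weighted_total(raw_scores, max_scores, weights=WEIGHTS):
    """Return a total out of 100 from per-section raw scores."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    total = 0.0
    for section, weight in weights.items():
        total += weight * raw_scores[section] / max_scores[section]
    return 100 * total


raw = {"listening": 16, "reading": 18, "speaking": 24, "writing": 21}
maximum = {"listening": 20, "reading": 20, "speaking": 30, "writing": 30}
print(round(weighted_total(raw, maximum), 1))
```

Changing the weights changes the total without changing any raw score, which is why the weighting should be decided before the test is administered.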

Grading

Grading doesn't mean just giving an A for 90-100 and a B for 80-89. It's not that simple. How you assign letter grades to a test is a product of

the country, culture, and context of the English classroom,

institutional expectations (most of them unwritten),

explicit and implicit definitions of grades that you have set forth,

the relationship you have established with the class, and

student expectations that have been engendered in previous tests and quizzes in the class.

Giving Feedback

Feedback should serve as beneficial washback. Some examples of feedback are:

1. A letter grade

2. A total score

3. Four sub scores (speaking, listening, reading, writing)

4. For the listening and reading sections

An indication of correct/incorrect responses

Marginal comments

5. For the oral interview

Scores for each element being rated

A checklist of areas needing work

A post-interview conference to go over the results

6. On the essay

Scores for each element being rated

A checklist of areas needing work

Marginal and end-of-essay comments and suggestions

A post-test conference to go over work

A self-assessment

7. On all or selected parts of the test, peer checking of results

8. A whole-class discussion of results of the test

9. Individual conferences with each student to review the whole test

In this chapter, guidelines and tools were provided to enable you to address five questions: (1) how to determine the purpose or criterion of a test, (2) how to state objectives, (3) how to design specifications, (4) how to select and arrange test tasks, including evaluating those tasks with item indices, and (5) how to ensure appropriate washback to the student.

CHAPTER 4: STANDARDIZED TESTING

This chapter has two goals: to introduce the process of constructing, validating, administering, and interpreting standardized tests of language; and to acquaint you with a variety of current standardized tests that claim to test overall language proficiency.

WHAT IS STANDARDIZATION?

A standardized test presupposes certain objectives, or criteria, that are held constant from one form of the test to another. Such tests measure a broad band of competencies that are usually not exclusive to one particular curriculum. They are norm-referenced, and their main goal is to place test-takers on a continuum according to their relative ranking. Standardized tests are used to assess progress in schools (a child's academic performance), to assess ability to attend institutions of higher education, and to place students in programs suited to their abilities. Examples are the TOEFL and IELTS. The tests are standardized because they specify a set of competencies for a given domain and, through a process of construct validation, they program a set of tasks.

ADVANTAGES AND DISADVANTAGES OF STANDARDIZED TEST

The advantages of standardized tests are that they are ready-made, previously validated products that free the teacher from having to spend hours creating a test, they can be administered to large groups within a time limit, and they are easy to score (computerized or hole-punched grid scoring). Moreover, for better or worse, such tests tend to have face validity.

Disadvantages center largely on inappropriate use of such tests, for example, using an overall proficiency test as an achievement test simply because of the convenience of the standardization. Standardized tests are ultimately not a very good measure of individual student performance and intelligence, because the format is extremely simplistic. A standardized test can measure whether or not a student knows when Sangkuriang was written, for example, but it cannot determine whether or not the student has absorbed and thought about the larger issues surrounding the work.

DEVELOPING A STANDARDIZED TEST

Knowing how to develop a standardized test can be helpful when you need to revise an existing test, adapt or expand an existing test, or create a smaller-scale standardized test. In the steps outlined below, three different standardized tests will be used to exemplify the process of standardized test design:

(A) The Test of English as a Foreign Language (TOEFL): a test of general language ability or proficiency.

(B) The English as a Second Language Placement Test (ESLPT), San Francisco State University (SFSU): a placement test at a university.

(C) The Graduate Essay Test (GET), SFSU: a gate-keeping essay test.

1. Determine the purpose and objectives of the test

Standardized tests are expected to provide high practicality in administration and scoring without unduly compromising validity.

(A) TOEFL

To evaluate the English proficiency of people whose native language is not English.

Most colleges and universities in the US use TOEFL scores to admit or refuse international applicants.

(B) ESLPT

To place already admitted students at SFSU in an appropriate course in academic writing, oral production, and grammar-editing.

To provide teachers with some diagnostic information about their students.

(C) GET

To determine whether students' writing ability is sufficient to permit them to enter graduate-level courses in their programs. The test is offered at the beginning of each term.

2. Design test specifications

(A) TOEFL

Because the TOEFL is a proficiency test, the first step is to define the construct of language proficiency.

After language competence is broken down into the four skills, each performance mode can be examined on a continuum of linguistic units: pronunciation, spelling, words, grammar, discourse, and pragmatic features of language.

Oral production tests can be tests of overall conversational fluency or of pronunciation of a particular subset of phonology, and can take the form of imitation.

Listening comprehension tests can focus on a particular feature of language or on overall listening for general meaning.

Reading tests aim to test comprehension of long or short passages, single sentences, phrases, and words.

Writing tests can be open-ended (free composition) or can be structured to elicit anything from correct spelling to discourse-level competence.

(B) ESLPT

Designing test specifications for the ESLPT was a simpler task because the purpose is placement, and construct validation of the test consisted of an examination of the content of the ESL courses.

In a recent revision of the ESLPT, content and face validity were the central theoretical issues to be considered. The major issue then centered on designing practical and reliable tasks and item response formats.

The specifications mirrored the reading-based, process writing approach used in the courses.

(C) GET

Specifications for GET are the skills of writing grammatically and rhetorically

acceptable prose on a topic, with clearly produced organization of ideas and

logical development.

The GET is a direct test of writing ability in which test-takers must write an essay

on a given topic in a two-hour time period.

3. Design, select, and arrange test tasks/items

(A) TOEFL

Content coding: items cover the skills and a variety of subject matter without bias (the content must be universal and as neutral as possible).

Statistical characteristics: these include item facility (IF) and item discrimination (ID).

Before items are released into a form of the TOEFL, they are piloted and scientifically selected to meet difficulty specifications within each subsection, each section, and the test overall.

(B) ESLPT

For the written parts, the main problems are

selecting appropriate passages (they must conform to standards of content validity),

providing appropriate prompts (they should fit the passages), and

processing data from pilot testing.

In the multiple-choice editing test, the first (easier) task is to choose an appropriate essay within which to embed errors. The more complicated task is to embed a specified number of errors from a previously determined taxonomy of error categories. (Teachers can derive the categories from students' previous errors in written work, and students' errors can be used as distractors.)

(C) GET

Topics should be appealing and capable of yielding the intended product: an essay that requires an organized, logical argument and conclusion. No pilot testing of prompts is conducted.

Care must be taken about the potential cultural effect on the numerous international students who must take the GET.

4. Make appropriate evaluations of different kinds of items

IF, ID, and distractor analysis may not be necessary for a classroom (one-time) test, but they are a must for a standardized multiple-choice test. For production responses, different forms of evaluation become important, for example practicality, reliability, and facility:

practicality: clarity of directions, timing of the test, ease of administration, and how much time is required to score

reliability: a major factor in instances where more than one scorer is employed, and to a lesser extent when a single scorer has to evaluate tests over long spans of time that could lead to deterioration of standards

facility: key for valid and successful items. Unclear directions, complex language, obscure topics, fuzzy data, and culturally biased information may lead to a higher level of difficulty
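Distractor analysis for a multiple-choice item can be sketched as a simple tally of which option each test-taker chose, split into high-scoring and low-scoring groups. The data and the function name below are hypothetical. The working assumption, which is not stated in the text above, is that a useful distractor attracts more low scorers than high scorers, and that a distractor nobody chooses is doing no work.

```python
from collections import Counter


def distractor_table(high_choices, low_choices, options="ABCD"):
    """Count how often each option was chosen by the high and low groups."""
    high = Counter(high_choices)
    low = Counter(low_choices)
    # A Counter returns 0 for options that nobody selected.
    return {opt: (high[opt], low[opt]) for opt in options}


# Hypothetical item whose key (correct answer) is "C".
high_choices = ["C", "C", "C", "A", "C"]
low_choices = ["A", "C", "B", "A", "B"]

table = distractor_table(high_choices, low_choices)
for option, (n_high, n_low) in table.items():
    print(f"{option}: high={n_high} low={n_low}")
```

In this invented data, option D attracts no one in either group, so it would be a candidate for revision.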

(A) TOEFL

The IF, ID, and efficiency statistics of the multiple-choice items of current forms of the TOEFL are not publicly available.

(B) ESLPT

The multiple-choice editing passage showed the value of statistical findings in determining the usefulness of items and pointing administrators toward revisions.

(C) GET

No data are collected from students on their perceptions, but the scorers have an opportunity to reflect on the validity of a given topic.

5. Specify scoring procedures and reporting formats

(A) TOEFL

Scores are calculated and reported for

- three sections of TOEFL

- a total score

- a separate score

(B) ESLPT

It reports a score for each of the essay sections (each essay is read by two readers).

The editing section is machine scanned.

It provides data for placing students, along with diagnostic information.

Students don't receive their essays back.

(C) GET

Each GET is read by two trained readers, who each give a score between 1 and 4.

A combined score of 6 is the recommended threshold for allowing a student to pursue graduate-level courses.

A student who scores below 6 either repeats the test or takes a remedial course.
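The decision rule described for the GET can be sketched as follows. The sketch assumes that the two readers' scores are added to give a total from 2 to 8, which is how a threshold of 6 can arise from a 1-to-4 scale. The function name and the wording of the outcomes are hypothetical.

```python
def get_decision(reader1, reader2, threshold=6):
    """Combine two readers' 1-4 ratings and apply the pass threshold."""
    for score in (reader1, reader2):
        if not 1 <= score <= 4:
            raise ValueError("each reader's score must be between 1 and 4")
    total = reader1 + reader2
    if total >= threshold:
        return total, "may enter graduate-level courses"
    return total, "repeat the test or take a remedial course"


print(get_decision(3, 3))
print(get_decision(2, 3))
```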

6. Perform ongoing construct validation studies

Any standardized test must be accompanied by systematic, periodic corroboration of its effectiveness and by steps toward its improvement.

(A) TOEFL

The latest study on TOEFL examined the content characteristics of the TOEFL

from a communicative perspective based on current research in applied linguistics

and language proficiency assessment.

(B) ESLPT

The development of the new ESLPT involved a lengthy process of both content and construct validation, along with facing such practical issues as scoring the written sections and designing a machine-scorable multiple-choice answer sheet.

(C) GET

At this time, there is no research to validate the GET itself. Administrators rely on

the research on university level academic writing tests such as TWE.

In recent years, some criticism of the GET has come from international test-takers

who posit that the topics and time limits of the GET work to the disadvantage of

writers whose native language is not English.

STANDARDIZED LANGUAGE PROFICIENCY TESTING

Tests of language proficiency presuppose a comprehensive definition of the specific competencies that comprise overall language ability. Swain (1990) offered a multidimensional view of proficiency assessment by referring to three linguistic traits (grammar, discourse, and sociolinguistics) that can be assessed by means of oral, multiple-choice, and written responses.

The American Council on the Teaching of Foreign Languages (ACTFL) describes proficiency at four levels: superior, advanced, intermediate, and novice. Within each level, descriptions of listening, speaking, reading, and writing are provided as guidelines for assessment.
