Erik Peterson

RAC Development

You bought RAC, now how do you get HA?

HA Architecture

HA Outside the Database

Rolling Upgrades

Best Practices

Oracles Integrated HA Solutions

System

Failures

Data

Failures

System

Changes

Data

Changes

Unplanned

Downtime

Planned

Downtime

Real Application Clusters

ASM

Flashback

RMAN & Oracle Secure Backup

H.A.R.D

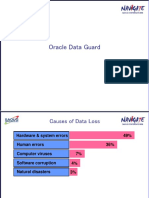

Data Guard

Streams

Online Reconfiguration

Rolling Upgrades

Online Redefinition

O

r

a

c

l

e

M

A

A

B

e

s

t

P

r

a

c

t

i

c

e

s

Data Guard + RAC Configuration

Data Guard + RAC: end-to-end Data Protection and HA

Basis of Maximum Availability Architecture

Managed as a single configuration

[Diagram: a Primary Site (Primary Database on RAC) and a Standby Site (Standby Database on RAC), linked by Data Guard and managed through the Broker]

HA Architecture Components

Redundant middle or application tier

Redundant network & interconnect infrastructure

Redundant storage infrastructure

Real Application Clusters (RAC) to protect from host and instance failures

Data Guard (DG) to protect from human errors and data failures

Sound operational practices

Oracle RAC HA Architecture

[Diagram: Users; Application Servers / Network; Centralized Management Console; Hub or Switch Fabric; High Speed Switch or Interconnect (Low Latency Interconnect, VIA or Proprietary); Clustered Database Instances with Shared Cache; Storage Area Network; Mirrored Disk Subsystem]

No Single Point Of Failure

Drive and Exploit Industry Advances in Clustering

HA Outside the Database

Keeping non-Oracle components HA

Making Applications Resilient

Oracle Clusterware Provides Application HA

Agent Framework

start: Please Start

check: How are you?

stop: Please Stop

An example of an Agent used by the Framework

An Agent to protect an Apache Web Server

The start command

Would invoke the apache command

apachectl -k start

Perhaps with a -f parameter to locate the configuration file on shared disk

The check command

There are a number of things that could be checked

- Is the httpd process running? (cheating)

- Can I request a web page? (mostly programmatic)

The stop command

Would invoke the apache command

apachectl -k stop
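
The start, check, and stop entry points described above could be wired into a single action script along the following lines. This is only a sketch: the configuration path, the URL, and the function names are hypothetical, and the convention that exit status 0 means success is assumed rather than taken from the slides.

```python
import subprocess
import sys
import urllib.request

# Hypothetical values for illustration; adjust for the real installation.
APACHECTL = "apachectl"
CONFIG_FILE = "/shared/apache/conf/httpd.conf"  # assumed location on shared disk
CHECK_URL = "http://localhost:80/"              # assumed page to request


def start(run=subprocess.call):
    """Start Apache, pointing it at the configuration file on shared disk."""
    return run([APACHECTL, "-f", CONFIG_FILE, "-k", "start"])


def stop(run=subprocess.call):
    """Stop Apache."""
    return run([APACHECTL, "-f", CONFIG_FILE, "-k", "stop"])


def check(fetch=urllib.request.urlopen):
    """Return 0 if a web page can be requested, 1 otherwise."""
    try:
        with fetch(CHECK_URL, timeout=5) as response:
            return 0 if response.status == 200 else 1
    except OSError:
        # Covers refused connections, timeouts and HTTP errors.
        return 1


ACTIONS = {"start": start, "stop": stop, "check": check}


def main(argv):
    """Dispatch on the entry point name passed as the first argument."""
    if len(argv) != 2 or argv[1] not in ACTIONS:
        print("usage: apache_agent.py {start|stop|check}", file=sys.stderr)
        return 2
    return ACTIONS[argv[1]]()
```

A real script would finish with `sys.exit(main(sys.argv))` so that the framework sees the return value as the exit status. The check here requests a page rather than looking for the httpd process, which is the stronger of the two options listed on the slide.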

Oracle Clusterware Agents

xclock

Apache

OC4J

SAP Agent

Application VIP

For more details see "Using Oracle Clusterware to Protect 3rd Party Applications" on OTN

Making Applications Resilient

1. Implement Services

2. Use a FAN- and LBA-aware connection pool

3. Add FAN callouts (cleanup, failback)

4. Modify application to retry
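
Step 4 above can be illustrated with a small retry wrapper. This is a minimal sketch, not Oracle's API: `TransientConnectionError`, `run_with_retry`, and the callables passed in are hypothetical names, and the sketch assumes that the unit of work is safe to run again from the start on a fresh connection.

```python
import time


class TransientConnectionError(Exception):
    """Hypothetical error raised when the database connection is lost."""


def run_with_retry(work, get_connection, max_attempts=3, delay_seconds=0.0):
    """Run work(connection); on a lost connection, fetch a new one and retry."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        # Ask the pool for a connection on every attempt, so a retry can
        # land on a surviving instance.
        connection = get_connection()
        try:
            return work(connection)
        except TransientConnectionError as error:
            last_error = error
            if attempt < max_attempts:
                time.sleep(delay_seconds)
    # Every attempt failed: surface the last error to the caller.
    raise last_error
```

The retry asks for a new connection each time instead of reusing the failed one, which is what lets a FAN-aware pool hand back a connection to a healthy instance.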

Database Services for DW

[Diagram: Services by Priority (Standard Queries, Adhoc Queries, ETL) across Node-1, Node-2, and Node-3]

Gains: Manages Priorities, Visibility of Use, Can turn off reporting during aggregation

Making your Application React

Connect: active; tcp_ip_cinterval; VIP

SQL issue: active; tcp_ip_interval; VIP

Blocked in R/W: wait; tcp_ip_keepalive; out of band event (FAN)

Processing last result: wait; -; out of band event (FAN)

VIP Resources

VIP resources have existed since Oracle Database 10gR1

Only used to fail over the VIP to another node so that a client got an instant NAK returned when it tried to connect to the Virtual IP. They still exist and operate in the same way in Release 2.

Application VIPs

New resource in Oracle Database 10gR2

Created as functional VIPs which can be used to connect to an application regardless of the node it is running on

The VIP is a dependent resource of the user-registered application

There can be many VIPs, one per User Application

Fast Connection Failover

FAN & Oracle Connection Pools

[Diagram: a Connection Pool holding connections C1 to C9, spread across Instance 1, Instance 2, and Instance 3]

Fast Connection Failover

Node Leaves

[Diagram: the Connection Pool still holds C1 to C9, but only Instance 1 and Instance 2 remain; a FAN event is sent to the pool]

Fast Connection Failover

End State

[Diagram: the Connection Pool holds C1 to C6, spread across Instance 1 and Instance 2]

Fast Connection Failover

Instance Join

[Diagram: a FAN event reaches the Connection Pool, which again holds C1 to C9 across Instance 1, Instance 2, and Instance 3]

Service across RAC

Currently limited to Oracle JDBC connection pool
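
The behaviour shown on the Fast Connection Failover slides (drop the dead connections when a node leaves, add connections when an instance joins) can be modelled with a toy pool. This is a simplified sketch, not the Oracle JDBC pool: the class, method names, and event strings are hypothetical.

```python
class ConnectionPool:
    """Toy connection pool that reacts to FAN-style up/down events."""

    def __init__(self, instances, size):
        self.size = size
        self.instances = list(instances)
        self.connections = []  # list of (name, instance) pairs
        self._next_id = 1
        self._fill()

    def _fill(self):
        """Open connections until the pool is back at its target size."""
        while len(self.connections) < self.size and self.instances:
            counts = self.per_instance()
            # Pick the available instance with the fewest connections.
            target = min(self.instances, key=lambda name: counts[name])
            self.connections.append(("C%d" % self._next_id, target))
            self._next_id += 1

    def per_instance(self):
        """Return the number of pooled connections for each known instance."""
        counts = {name: 0 for name in self.instances}
        for _, instance in self.connections:
            counts[instance] += 1
        return counts

    def on_event(self, status, instance):
        """Handle an out-of-band event: 'down' or 'up' for one instance."""
        if status == "down":
            self.instances.remove(instance)
            # Drop dead connections right away instead of waiting for TCP timeouts.
            self.connections = [c for c in self.connections if c[1] != instance]
        elif status == "up":
            self.instances.append(instance)
            self._fill()
```

With three instances and a pool size of 9, a "down" event for Instance 3 leaves six connections on the two survivors, and a later "up" event brings the pool back to nine with the new connections opened against the rejoined instance.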

Application Example

[Diagram: a numbered request flow (steps 1 to 11) from Clients through Application Servers and Connection Caches (Pools) to 10g Services on RAC and ASM. Labeled steps: 3, 4 FAN; 5 error; 6 retry; 7, 8 commit; 11 Response-time > SL to DR]

Runtime Connection Load Balancing

Solves the Connection Pool problem!

Easiest way to take advantage of Load Balancing Advisory

No application changes required

No extra charge software to buy

Enabled by parameter on datasource definition

Supported by JDBC and ODP.NET

Runtime Connection Load Balancing

Client connection pool is integrated with RAC load balancing advisory

When application does getConnection, the connection given is the one that will provide the best service.

Policy defined by setting GOAL on Service

Need to have Connection Load Balancing

Load Balancing Advisory Goals

THROUGHPUT: Work requests are directed based on throughput.

Used when the work in a service completes at homogeneous rates. An example is a trading system where work requests are of similar lengths.

SERVICE_TIME: Work requests are directed based on response time.

Used when the work in a service completes at various rates. An example is an internet shopping system where work requests are of various lengths.

NONE: Default setting; turns off the advisory

Runtime Connection Load Balancing with JDBC, ODP.NET

[Diagram: CRM requests a connection from the connection cache. Instance 1, Instance 2, and Instance 3 report "CRM is bored", "CRM is busy", and "CRM is very busy". Percentages shown: 30%, 60%, 10%]
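
One way to picture how a pool might use advisory percentages when handing out connections is weighted random selection. The sketch below is an illustration only: the function name is hypothetical, and pairing 30%, 60%, and 10% with Instance 1, 2, and 3 in that order is an assumption, since the slide text does not make the pairing explicit.

```python
import random


def pick_instance(advisory, rng):
    """Choose an instance with probability proportional to its advisory weight."""
    names = list(advisory)
    weights = [advisory[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


# Assumed pairing of the slide's percentages with instances (illustrative only).
advisory = {"Instance 1": 30, "Instance 2": 60, "Instance 3": 10}

rng = random.Random(42)  # fixed seed so the run is repeatable
handed_out = {name: 0 for name in advisory}
for _ in range(10000):
    handed_out[pick_instance(advisory, rng)] += 1
```

Over many requests the counts in `handed_out` track the advisory weights, so the instance with the most headroom receives most of the new work while busier instances still receive some.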

TAF or FCF

FCF is faster

Applications that retry deal with the transactional case

Rolling Upgrades

Application: Discrete Components

OS, Hardware Changes: Full

Clusterware Changes: Full

ASM, DB: Limited

Storage Upgrades: Full

Services Provide Application Independence

[Diagram: services DW, OLTP 1, OLTP 2, OLTP 3, OLTP 4, and Batch Reporting distributed across Node-1 through Node-6]

One service brought offline for upgrade while others are still available

Rolling Patch Upgrade using RAC

1. Initial RAC Configuration

2. Clients on A, Patch B

3. Clients on B, Patch A

4. Upgrade Complete

Oracle Patch Upgrades, Operating System Upgrades, Hardware Upgrades, Oracle Clusterware Upgrades
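
The rolling patch sequence (move clients off a node, patch it, bring it back, then repeat for the next node) can be sketched as a small simulation. The function and the "unpatched"/"patched" labels are hypothetical; the real procedure would use the Oracle patching tools, which are not modelled here.

```python
def rolling_patch(versions, new_version):
    """Patch every node in turn while the remaining nodes keep serving clients.

    versions maps node name -> patch level and is updated in place.
    Returns a log describing each step.
    """
    serving = set(versions)  # nodes currently accepting clients
    log = []
    # Reverse order so a two-node cluster patches B first, as on the slide.
    for node in sorted(versions, reverse=True):
        others = serving - {node}
        if not others:
            raise RuntimeError("no other node available to serve clients")
        serving = others  # relocate clients away from this node
        log.append("clients on %s, patch %s" % (",".join(sorted(others)), node))
        versions[node] = new_version  # apply the patch while the node is idle
        serving = serving | {node}  # bring the patched node back into service
    return log
```

For a two-node cluster with nodes A and B, the log reads "clients on A, patch B" followed by "clients on B, patch A", and at least one node is serving clients at every step.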

SQL Apply Rolling Database Upgrades

1. Initial SQL Apply Config (A and B at Version X; clients connected, redo shipped)

2. Upgrade node B to X+1 (logs queue during the upgrade)

3. Run in mixed mode to test (Version X and Version X+1, redo shipped)

4. Switchover to B, upgrade A (both at X+1)

Major Release Upgrades, Patch Set Upgrades, Cluster Software & Hardware Upgrades

Storage Migration Without Downtime

Migration from existing storage to new storage can be done by ASM with less complexity and no downtime

Reduce cost of disk as data becomes less active (ILM)

1. New storage is added to the ASM disk group

2. Automatic online rebalance provides online migration to the new storage; dropping the other disks enables the migration to complete

3. Automatic online rebalance completes; the old disks are empty

4. The old disks are removed from the disk group

Best Practices

More Nodes

Common Setup

RAC Setup Recommendations

Verifying Setup

Keeping your System Up

More Nodes = Greater HA

[Diagram: Email, Payroll, OE, and CRM services spread across the cluster nodes]

Node failure has less impact

Go with the Most Common Setup

Source: Oracle RAC Development's Customer Database for 10g RAC implementations

RAC Setup Recommendations

Full Redundancy: Networks, Switch, etc.

Bonding/Teaming your Networks/SAN

Use a Switch

Mirror the OCR

Set up 3 Voting Disks

Verifying Setup

Use Cluster Verification Utility

1. User sets up the hardware, network & storage: -post hwos

2. Sets up OCFS (optional): -pre cfs, -post cfs

3. Installs CRS: -pre crsinst, -post crsinst

4. Installs RAC: -pre dbinst

5. Configures RAC DB: -pre dbcfg
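
The stage checks named on this slide pair up with the installation steps, and the pairing can be turned into a checklist of commands. The sketch below only builds command strings and does not run them. The node names are hypothetical, and the `cluvfy stage ... -n <node list>` form is assumed from general CVU usage; some stages take additional stage-specific options that are omitted here.

```python
# Installation steps paired with the CVU stage checks shown on the slide.
STAGE_CHECKS = [
    ("Set up hardware, network & storage", ["-post hwos"]),
    ("Set up OCFS (optional)", ["-pre cfs", "-post cfs"]),
    ("Install CRS", ["-pre crsinst", "-post crsinst"]),
    ("Install RAC", ["-pre dbinst"]),
    ("Configure RAC DB", ["-pre dbcfg"]),
]


def cluvfy_commands(nodes):
    """Return (step, command) pairs; commands are built but not executed."""
    node_list = ",".join(nodes)
    commands = []
    for step, stages in STAGE_CHECKS:
        for stage in stages:
            commands.append((step, "cluvfy stage %s -n %s" % (stage, node_list)))
    return commands


# Example with two hypothetical node names.
for step, command in cluvfy_commands(["node1", "node2"]):
    print("%-36s %s" % (step, command))
```

Keeping the checks in a table like this makes it easy to confirm that each installation step has its pre and post verification before moving on to the next one.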

Verifying Setup

CVU List of Tests

$> ./cluvfy comp -list

Valid components are:

nodereach : checks reachability between nodes

nodecon : checks node connectivity

cfs : checks CFS integrity

ssa : checks shared storage accessibility

space : checks space availability

sys : checks minimum system requirements

clu : checks cluster integrity

clumgr : checks cluster manager integrity

ocr : checks OCR integrity

crs : checks CRS integrity

nodeapp : checks node applications existence

admprv : checks administrative privileges

peer : compares properties with peers

Verifying Setup: Destructive Testing

[Diagram: Servers, each running a DB instance and an ASM instance, attached to Storage]

Crash of a client connection

Crash of 1 element of the interconnect

Crash of the SAN connection

Crash of the DB instance

Crash of the ASM instance

Crash of a storage system

Crash of each fiber card

Keeping your System Up

Adhere to strong Systems Life Cycle Disciplines

Comprehensive test plans (functional and stress)

Rehearsed production migration plan

Change Control

Separate environments for Dev, Test, QA/UAT, Production

Backup and recovery procedures

Security controls

Support Procedures

Keeping your System Up

Test Clusters

When

Any application, parameter or component change

Why

Doesn't Oracle/OS Vendor/HW Vendor test?

Your Unique Environment

What happens if you don't?

Keeping your System Up

Change Management

Plan changes to minimize downtime and service disruption

May mean overnight or weekend work

Avoid critical periods in application cycles, such as month- or year-end processing

Consider staged changes

One function at a time

One node at a time (if possible)

Include time and resources to back out changes if necessary

Summary

To build a full HA architecture with Real Application Clusters:

Use Services & FAN

Use the full stack: Oracle Clusterware, ASM

Validate your environment with every change

Follow MAA Best Practices

Have a Test Cluster
