CASE HANDOUT:

Conglomerate Inc.'s New PDA: A Segmentation Study

By

Prof. P.V. (Sundar) Balakrishnan

Cluster Analysis

This note has been prepared so that you can replicate the results shown below by following the screenshots placed at the appropriate points.

According to the information in this case, which involves exploratory research, 160 people were surveyed using two questionnaires:

a "needs questionnaire" (segmentation data: the basis variables) and

a "demographic questionnaire" (discrimination data: the classification variables).

The case and the data can be found in the location where you loaded the software:

Go to the folder containing the case and data:

~\CasesandExercises\ConneCtorPDA2001(Segmentation)\

o The case is in a PDF file called:

ConneCtorPDA2001Case(Segmentation).pdf

o The data is in an Excel spreadsheet called:

ConneCtorPDA2001Data(Segmentation).xls

After you have read the case, launch the Excel spreadsheet containing the data.


Question 1

Run only cluster analysis (without Discrimination) on the data to try to identify the

number of distinct segments present in this market. Consider both the distances separating

the segments and the characteristics of the resulting segments. (Note the need to

standardize the data!)
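The note on standardization matters because the basis variables are not on one scale: most take values below 7, while Monthly and Price take values in the tens and hundreds, so unstandardized distances would be dominated by Price. A minimal Python sketch of z-score standardization follows. The three respondents and their values are made up for illustration, not taken from the case data, and the software may standardize in a different way.

```python
import math

def standardize(column):
    """Rescale one variable to mean 0 and sample standard deviation 1."""
    n = len(column)
    mean = sum(column) / n
    variance = sum((x - mean) ** 2 for x in column) / (n - 1)
    std = math.sqrt(variance)
    return [(x - mean) / std for x in column]

# Hypothetical respondents (illustrative values only, not the case data).
innovator = [2.0, 4.0, 6.0]      # a rating-scale variable
price = [200.0, 300.0, 400.0]    # a variable measured in the hundreds

z_innovator = standardize(innovator)
z_price = standardize(price)

# After standardizing, both variables contribute on the same scale.
print(z_innovator)
print(z_price)
```

Once both columns are expressed in standard deviations, a one-unit difference in Innovator and a one-unit difference in Price weigh equally in any distance computed from them.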

Solution:

Within the Excel toolbar, select ME->XL; then select

SEGMENTATION AND CLASSIFICATION

RUN SEGMENTATION

This should bring up a dialog box as follows. Make the appropriate selections.

Among the major decisions that you will have to make is whether or not to standardize the data.

Running the default hierarchical clustering (Ward's method) for the default nine clusters (i.e., segments), we get the dendrogram shown next:


Dendrogram:

Dendrograms provide graphical representations of the loss of information generated by grouping different clusters (or customers) together.

[Figure: dendrogram. Vertical axis: Distance, marked at 0.25, 0.26, 0.27, 0.28, 0.34, 1.11, 1.45 and 3.13. Horizontal axis: Cluster ID.]

Look at the vertical axis of the dendrogram, which shows the distance measure (Error Sum of Squares, ESS). It shows that the following clusters are quite close together and can be combined with only a small loss in consumer grouping information:

A) i) clusters 4 and 9 at 0.25, ii) clusters 1 and 8 at 0.26, iii) clusters 7 and 5 at 0.27.

B) i) fused cluster 1-8 and cluster 6 at 0.28, ii) fused cluster 7-5 and cluster 2 at 0.34.

However, note that when going from a four-cluster solution to a three-cluster solution, the distance to be bridged is much larger (1.11); thus, the four-cluster solution is indicated by the ESS.
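The reasoning above, stopping just before the first large jump in fusion distance, can be sketched in a few lines of Python. The distances are the ones read off the dendrogram; the rule used here (largest ratio between successive fusion distances) is one simple heuristic chosen for illustration, not necessarily how the software or the author would formalize the choice.

```python
# Fusion distances from the dendrogram, in the order the merges happen,
# starting from the nine-cluster solution.
merge_distances = [0.25, 0.26, 0.27, 0.28, 0.34, 1.11, 1.45, 3.13]
start_clusters = 9

def suggest_cluster_count(distances, start):
    """Return (k, ratio): the cluster count held just before the merge
    whose distance grows most relative to the previous merge."""
    best_k, best_ratio = None, 0.0
    for i in range(1, len(distances)):
        clusters_before_merge = start - i
        ratio = distances[i] / distances[i - 1]
        if ratio > best_ratio:
            best_k, best_ratio = clusters_before_merge, ratio
    return best_k, best_ratio

k, ratio = suggest_cluster_count(merge_distances, start_clusters)
print(k, round(ratio, 2))
```

With these distances the largest relative jump is from 0.34 to 1.11, which is the merge that would reduce four clusters to three, so the sketch points to four clusters. A rule based on absolute differences would instead favor the final merge (1.45 to 3.13), which is why the characteristics of the segments should be considered alongside the distances.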

Diagnostics for the cluster analysis

Cluster Members

The following table lists the cluster number to which each observation belongs for varying cluster solutions. For example, the column "With 2 clusters" gives the cluster number of each observation in a 2-cluster solution. The cluster solution you have selected is in bold with a yellow background.

Observation /      With 2    With 3    With 4    With 5    With 6    With 7    With 8    With 9
Cluster solution   clusters  clusters  clusters  clusters  clusters  clusters  clusters  clusters
1                  1         1         1         1         1         1         1         1
2                  1         1         1         1         1         1         8         8
3                  1         1         1         1         1         1         8         8
4                  1         1         1         1         1         1         8         8
5                  1         1         1         1         1         1         8         8
6                  1         1         1         1         1         1         8         8
7                  1         1         1         1         6         6         6         6
8                  1         1         1         1         1         1         8         8
9                  1         1         1         1         1         1         8         8

ROW NOS  CL2  CL3  CL4  CL5  CL6  CL7  CL8  CL9
-------  ---  ---  ---  ---  ---  ---  ---  ---
<SNIP>
105      1    1    4    4    4    4    4    4
106      1    1    4    4    4    4    4    9
107      1    1    1    1    1    1    8    8
108      1    1    4    4    4    4    4    4
109      1    1    1    1    1    1    8    8
110      1    1    4    4    4    4    4    9
111      1    1    4    4    4    4    4    9
112      1    1    4    4    4    4    4    9
113      1    1    4    4    4    4    4    4
114      1    1    1    1    1    1    8    8
115      1    1    4    4    4    4    4    9
<SNIP>
158      2    3    3    3    3    3    3    3
159      2    3    3    3    3    3    3    3
160      2    3    3    3    3    3    3    3

If we run the analysis again, now set to four segments, the program will perform the agglomeration for us. The first part of the output below reports cluster membership.

NOTE: Another cluster analysis option is the K-Means procedure, which attempts to identify relatively homogeneous groups of cases based on selected characteristics, using an algorithm that can handle large numbers of cases better than hierarchical methods.

If the K-Means procedure is chosen, its four-cluster solution is very similar to the four-cluster solution based on Ward's hierarchical clustering procedure (see Appendix I and II at the end of the document for the K-Means results).
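For readers who want to see what a K-Means procedure does, the following is a minimal Python sketch of the textbook version (Lloyd's algorithm): assign each case to its nearest centroid, recompute the centroids, and repeat until nothing changes. The six two-dimensional points and the starting centroids are invented for illustration; this is not the software's implementation and it does not use the case data.

```python
import math

def kmeans(points, centroids, max_iterations=20):
    """Lloyd's algorithm: alternate assignment and centroid update."""
    for _ in range(max_iterations):
        # Assignment step: group points by nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: each centroid becomes the mean of its members.
        new_centroids = []
        for members, old in zip(clusters, centroids):
            if members:
                new_centroids.append(
                    tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    labels = [min(range(len(centroids)),
                  key=lambda c: math.dist(p, centroids[c])) for p in points]
    return centroids, labels

# Hypothetical data: two visibly separate groups of three points each.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.2),
          (5.0, 5.0), (5.2, 4.8), (4.8, 5.2)]
start = [(0.0, 0.0), (6.0, 6.0)]  # hypothetical starting centroids

final_centroids, labels = kmeans(points, start)
print(labels)
```

Each pass touches every case once, without the pairwise distance bookkeeping that a hierarchical method needs, which is the reason K-Means scales better to large numbers of cases. The result depends on the starting centroids, so in practice several starts are usually compared.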


Question 2

Identify and profile (name) the clusters that you select. Given the attributes of

ConneCtor, which cluster would you target for your marketing campaign?

Solution

Cluster Sizes

The following table lists the size of the population and of each segment, in both absolute and

relative terms.

Size / Cluster           Overall  Cluster 1  Cluster 2  Cluster 3  Cluster 4
Number of observations   160      56         51         16         37
Proportion               1        0.35       0.319      0.1        0.231

From the Results, we get the mean for each variable in each cluster:

Segmentation Variables

Means of each segmentation variable for each segment.

Segmentation
variable / Cluster   Overall  Cluster 1  Cluster 2  Cluster 3  Cluster 4
Innovator            3.44     3.62       2.43       2.19       5.11
Use Message          5.2      6.52       5.63       3.19       3.49
Use Cell             5.62     6.02       5.43       4.31       5.84
Use PIM              3.99     5.84       2.33       3.06       3.86
Inf Passive          4.45     5.02       3.88       6.12       3.65
Inf Active           4.47     5.11       3.9        6.25       3.51
Remote Access        4        3.89       5.04       5.31       2.16
Share Inf            3.75     3.5        3.73       6.12       3.14
Monitor              4.79     4.29       5.55       5          4.43
Email                4.73     5.98       3.31       2.88       5.59
Web                  4.46     5.62       3.04       1.44       5.97
Mmedia               3.98     5.11       2.45       1.94       5.27
Ergonomic            4.63     3.95       4.16       5.5        5.95
Monthly              28.7     24.5       25.3       45.3       32.6
Price                331      285        273        488        411

To characterize the clusters (obtained with the default Ward's method), we look for means that are either well above or well below the Overall mean.
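As a rough illustration of "well above or well below", the sketch below flags the variables on which Cluster 4 differs from the Overall mean by at least 25% in either direction. The means are copied from the table above; the 25% cutoff and the use of a relative gap are assumptions made for this example, not part of the handout.

```python
# Means from the Ward's-method table above (Overall and Cluster 4).
overall = {
    "Innovator": 3.44, "Use Message": 5.2, "Use Cell": 5.62, "Use PIM": 3.99,
    "Inf Passive": 4.45, "Inf Active": 4.47, "Remote Access": 4.0,
    "Share Inf": 3.75, "Monitor": 4.79, "Email": 4.73, "Web": 4.46,
    "Mmedia": 3.98, "Ergonomic": 4.63, "Monthly": 28.7, "Price": 331.0,
}
cluster4 = {
    "Innovator": 5.11, "Use Message": 3.49, "Use Cell": 5.84, "Use PIM": 3.86,
    "Inf Passive": 3.65, "Inf Active": 3.51, "Remote Access": 2.16,
    "Share Inf": 3.14, "Monitor": 4.43, "Email": 5.59, "Web": 5.97,
    "Mmedia": 5.27, "Ergonomic": 5.95, "Monthly": 32.6, "Price": 411.0,
}

def distinctive(cluster_means, overall_means, threshold=0.25):
    """Return variables whose relative gap from the overall mean is at
    least `threshold` (a hypothetical cutoff), with the signed gap."""
    flagged = {}
    for name, overall_value in overall_means.items():
        gap = (cluster_means[name] - overall_value) / overall_value
        if abs(gap) >= threshold:
            flagged[name] = round(gap, 2)
    return flagged

flags = distinctive(cluster4, overall)
for name, gap in flags.items():
    print(f"{name}: {gap:+.2f}")
```

Under this cutoff Cluster 4 stands out as high on Innovator, Web, Mmedia and Ergonomic and low on Use Message and Remote Access. Repeating the call for the other clusters gives a quick first profile of each segment.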

To see the results using the K-Means option, please turn to Appendix I.

It is difficult to decide which segment(s) to target based on the above information alone. We need some way to discriminate among these segments. To this end, we employ the classification data collected from these respondents.


Question 3

Go back to MENU, check Enable Discrimination and rerun the analysis. How

would you go about targeting the segment(s) you picked in question 2?

Solution

By checking the Discrimination option in the Setup, for the 4 cluster solution, we

get:


Confusion Matrix

Comparison of cluster membership predictions based on discriminant data, and actual cluster memberships. High values in the diagonal of the confusion matrix (in bold) indicate that the discriminant data is good at predicting cluster membership.

Actual / Predicted cluster   Cluster 1  Cluster 2  Cluster 3  Cluster 4
Cluster 1                    34         11         4          7
Cluster 2                    10         35         5          1
Cluster 3                    1          3          12         0
Cluster 4                    3          0          0          34

Actual / Predicted cluster   Cluster 1  Cluster 2  Cluster 3  Cluster 4
Cluster 1                    60.70%     19.60%     07.10%     12.50%
Cluster 2                    19.60%     68.60%     09.80%     02.00%
Cluster 3                    06.20%     18.80%     75.00%     00.00%
Cluster 4                    08.10%     00.00%     00.00%     91.90%

Hit Rate (percent of total cases correctly classified)

71.88%
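The hit rate can be checked directly from the counts in the confusion matrix: it is the sum of the diagonal divided by the total number of cases. The short Python sketch below does this, taking rows as actual clusters and columns as predicted clusters, which is the orientation under which the row totals match the cluster sizes reported earlier (56, 51, 16, 37).

```python
# Confusion matrix counts for the Ward's four-cluster solution.
# Rows: actual cluster 1-4. Columns: predicted cluster 1-4.
confusion = [
    [34, 11, 4, 7],
    [10, 35, 5, 1],
    [1, 3, 12, 0],
    [3, 0, 0, 34],
]

correct = sum(confusion[i][i] for i in range(len(confusion)))
total = sum(sum(row) for row in confusion)
hit_rate = correct / total

# Share of each actual cluster that was classified correctly.
per_cluster = [row[i] / sum(row) for i, row in enumerate(confusion)]

print(f"hit rate: {hit_rate:.2%}")
print([f"{share:.1%}" for share in per_cluster])
```

The per-cluster shares reproduce the diagonal of the percentage table and show that Cluster 4 is the easiest segment to identify from the classification data, while Cluster 1 is the hardest.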

Other Diagnostics for Discriminant Analysis

Discriminant Function

Correlation of variables with each significant discriminant function

(significance level < 0.05).

Discriminant variable / Function   Function 1  Function 2  Function 3
Away                               -0.705      -0.132      0.116
Education                          0.704       0.098       -0.035
PDA                                0.669       0.219       0.114
Income                             0.629       0.138       -0.266
Business Week                      0.405       -0.062      0.055
Mgourmet                           0.276       0.15        -0.164
PC                                 0.28        -0.549      -0.073
Construction                       -0.197      0.37        0.036
Emergency                          -0.161      0.363       0.027
Cell                               0.156       -0.348      -0.011
Computers                          0.211       0.297       -0.061
Sales                              -0.014      -0.386      0.652
Service                            -0.308      -0.308      -0.468
Age                                -0.002      0.069       0.409
Field & Stream                     -0.347      0.103       -0.379
PC Magazine                        0.048       0.075       -0.354
Professional                       0.327       0.014       -0.338
Variance explained                 50.48       31.68       17.84
Cumulative variance explained      50.48       82.16       100


Next, we examine how the classification variables (such as demographics) describe the different segments, so that we can better target them.

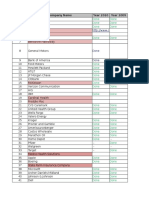

Means for Discrimination Variables in each Cluster

Results Using Ward's (default) Method

Discriminant Variables

Means of each discriminant variable for each segment.

Discriminant variable /
Cluster                   Overall  Cluster 1  Cluster 2  Cluster 3  Cluster 4
Away                      4.21     4.36       4.84       5.38       2.60
Education                 2.51     2.48       2.20       1.94       3.22
PDA                       0.44     0.45       0.18       0.19       0.89
Income                    66.89    62.59      60.53      52.44      88.43
Business Week             0.28     0.30       0.18       0.00       0.49
Mgourmet                  0.02     0.00       0.00       0.00       0.08
PC                        0.98     1.00       1.00       0.81       1.00
Construction              0.08     0.05       0.06       0.31       0.05
Emergency                 0.04     0.02       0.02       0.19       0.03
Cell                      0.88     0.91       0.90       0.63       0.89
Computers                 0.23     0.18       0.14       0.31       0.41
Sales                     0.30     0.54       0.24       0.06       0.14
Service                   0.18     0.11       0.39       0.13       0.03
Age                       40.01    43.07      36.77      42.19      38.89
Field & Stream            0.13     0.04       0.24       0.31       0.03
PC Magazine               0.24     0.14       0.29       0.25       0.32
Professional              0.16     0.09       0.16       0.00       0.35

To help us characterize clusters we look for variable means that are either well

above or well below the Overall mean.

Question 4

How has this analysis helped you to segment the market for ConneCtor?

Question 5

What other data and analysis would you do to develop a targeted marketing

program for ConneCtor?


APPENDIX I :

Diagnostics for the K-means Cluster Analysis

Cluster Sizes

The following table lists the size of the population and of each segment, in both

absolute and relative terms.

Size / Cluster           Overall  Cluster 1  Cluster 2  Cluster 3  Cluster 4
Number of observations   160      58         48         16         38
Proportion               1        0.363      0.3        0.1        0.237

Means for each basis variable in each cluster:

Segmentation Variables

Means of each segmentation variable for each segment.

Segmentation
variable / Cluster   Overall  Cluster 1  Cluster 2  Cluster 3  Cluster 4
Innovator            3.44     3.55       2.4        2.19       5.13
Use Message          5.2      6.52       5.58       3.19       3.55
Use Cell             5.62     5.98       5.42       4.31       5.87
Use PIM              3.99     5.78       2.21       3.06       3.89
Inf Passive          4.45     5.02       3.85       6.12       3.63
Inf Active           4.47     5.14       3.81       6.25       3.53
Remote Access        4        3.78       5.21       5.31       2.26
Share Inf            3.75     3.5        3.71       6.12       3.18
Monitor              4.79     4.29       5.62       5          4.42
Email                4.73     6          3.17       2.88       5.55
Web                  4.46     5.47       3.04       1.44       6
Mmedia               3.98     5.1        2.31       1.94       5.24
Ergonomic            4.63     4.03       4.06       5.5        5.89
Monthly              28.7     24.5       25.1       45.3       32.6
Price                331      285        269        488        411


Cluster Members

The following table lists the probability of each observation belonging to each of the 4 clusters. The probabilities are based on the inverse of the distance between an observation and each cluster centroid. The last column lists the cluster with the highest probability (discrete cluster membership).
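One plausible reading of "based on the inverse of the distance" is that each cluster's weight is 1/distance and the weights are rescaled to sum to 1. The Python sketch below implements that reading. The exact formula used by the software is not given in this handout, and the four distances are invented for illustration.

```python
def membership_probabilities(distances):
    """Turn distances to each centroid into probabilities that sum to 1,
    weighting each cluster by the inverse of its distance.
    Assumes every distance is strictly positive."""
    inverse = [1.0 / d for d in distances]
    total = sum(inverse)
    return [weight / total for weight in inverse]

# Hypothetical distances from one observation to the four centroids.
distances = [1.0, 2.0, 4.0, 4.0]

probs = membership_probabilities(distances)
assigned = probs.index(max(probs)) + 1  # clusters are numbered from 1

print(probs)
print("cluster membership:", assigned)
```

The closest centroid always receives the highest probability, so the discrete membership in the last column of the table is simply the nearest cluster. The size of that probability indicates how clear-cut the assignment is.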

Observation /      Probability   Probability   Probability   Probability   Cluster
Cluster solution   to belong to  to belong to  to belong to  to belong to  membership
                   cluster 1     cluster 2     cluster 3     cluster 4
1                  0.511         0.148         0.095         0.247         1
2                  0.457         0.216         0.127         0.200         1
3                  0.316         0.283         0.188         0.212         1
4                  0.482         0.145         0.126         0.247         1
5                  0.469         0.138         0.152         0.242         1
6                  0.434         0.160         0.143         0.264         1
7                  0.578         0.117         0.064         0.241         1
8                  0.499         0.184         0.118         0.199         1
9                  0.448         0.174         0.110         0.269         1
10                 0.446         0.239         0.112         0.203         1
<SNIP>
150                0.142         0.230         0.495         0.133         3
151                0.147         0.153         0.566         0.135         3
152                0.114         0.153         0.629         0.105         3
153                0.083         0.109         0.734         0.073         3
154                0.136         0.193         0.528         0.142         3
155                0.148         0.205         0.509         0.139         3
156                0.116         0.150         0.613         0.122         3
157                0.159         0.187         0.494         0.160         3
158                0.113         0.164         0.609         0.114         3
159                0.078         0.107         0.752         0.063         3
160                0.060         0.087         0.805         0.048         3


APPENDIX II :

Discrimination Means of the Classification Variables

Results Using K-Means Method

Discriminant Variables

Means of each discriminant variable for each segment.

Discriminant variable /
Cluster                   Overall  Cluster 1  Cluster 2  Cluster 3  Cluster 4
Away                      4.21     4.31       4.98       5.38       2.58
Education                 2.51     2.50       2.15       1.94       3.21
PDA                       0.44     0.45       0.15       0.19       0.90
Income                    66.89    62.81      59.65      52.44      88.37
Business Week             0.28     0.29       0.17       0.00       0.50
Field & Stream            0.13     0.03       0.25       0.31       0.03
Mgourmet                  0.02     0.00       0.00       0.00       0.08
PC                        0.98     1.00       1.00       0.81       1.00
Construction              0.08     0.05       0.06       0.31       0.05
Emergency                 0.04     0.02       0.02       0.19       0.03
Cell                      0.88     0.90       0.92       0.63       0.90
Computers                 0.23     0.19       0.13       0.31       0.40
Sales                     0.30     0.55       0.21       0.06       0.13
Age                       40.01    43.72      36.08      42.19      38.37
Professional              0.16     0.07       0.17       0.00       0.37
Service                   0.18     0.10       0.42       0.13       0.03
PC Magazine               0.24     0.16       0.29       0.25       0.32


Confusion Matrix

Comparison of cluster membership predictions based on discriminant data, and actual cluster memberships. High values in the diagonal of the confusion matrix (in bold) indicate that the discriminant data is good at predicting cluster membership.

Actual / Predicted cluster   Cluster 1  Cluster 2  Cluster 3  Cluster 4
Cluster 1                    37         10         4          7
Cluster 2                    10         33         5          0
Cluster 3                    2          2          12         0
Cluster 4                    3          0          0          35

Actual / Predicted cluster   Cluster 1  Cluster 2  Cluster 3  Cluster 4
Cluster 1                    63.80%     17.20%     06.90%     12.10%
Cluster 2                    20.80%     68.80%     10.40%     00.00%
Cluster 3                    12.50%     12.50%     75.00%     00.00%
Cluster 4                    07.90%     00.00%     00.00%     92.10%

Hit Rate (percent of total cases correctly classified)

73.12%
