
2013 International Conference on Communication Systems and Network Technologies

Seed Block Algorithm: A Remote Smart Data Back-up Technique for Cloud Computing

Ms. Kruti Sharma
Department of Computer Technology, YCCE, Nagpur (M.S), 441 110, India
kruti.sharma1989@gmail.com

Prof. Kavita R Singh
Department of Computer Technology, YCCE, Nagpur (M.S), 441 110, India
singhkavita19@yahoo.co.in

978-0-7695-4958-3/13 $26.00 © 2013 IEEE. DOI 10.1109/CSNT.2013.85

Abstract: In cloud computing, data is generated in electronic form in large amounts. Maintaining this data efficiently requires data recovery services. To address this need, in this paper we propose a smart remote data backup algorithm, the Seed Block Algorithm (SBA). The objective of the proposed algorithm is twofold: first, it helps users collect information from any remote location in the absence of network connectivity, and second, it recovers files in case of file deletion or if the cloud is destroyed for any reason. The proposed SBA also addresses time-related issues so that the recovery process takes minimum time. The proposed SBA also focuses on security for the back-up files stored at the remote server, without using any of the existing encryption techniques.

Keywords: Central Repository; Remote Repository; Parity Cloud Service; Seed Block

I. INTRODUCTION

The National Institute of Standards and Technology (NIST) defines cloud computing as a model for enabling convenient, on-demand network access to a shared pool of configurable computing resources (for example, networks, servers, storage, applications and services) that can be provisioned rapidly and released with minimal management effort or service provider interaction [1]. Today, cloud computing is itself a gigantic technology that is surpassing all previous computing technologies (such as cluster, grid and distributed computing) in this competitive and challenging IT world. The need for cloud computing is increasing day by day as its advantages overcome the disadvantages of various earlier computing techniques. Cloud storage provides online storage where data is stored in the form of a virtualized pool that is usually hosted by third parties. The hosting company operates large data centers and, according to the requirements of the customer, these data centers virtualize the resources and expose them as storage pools in which users store files or data objects.

Because a number of users share the storage and other resources, it is possible that other customers can access your data. Human error, faulty equipment, network connectivity, a bug or criminal intent may put cloud storage at risk. Changes in the cloud are also made very frequently; we term this data dynamics. Data dynamics is supported by operations such as insertion, deletion and block modification. Since services are not limited to archiving and taking back-ups of data, remote data integrity is also needed. Data integrity focuses on the validity and fidelity of the complete state of the server, which takes care of the heavily generated data so that it remains unchanged during storage at the main cloud remote server and during transmission. Integrity plays an important role in back-up and recovery services.

Many techniques have been proposed in the literature, such as HSDRT [1], PCS [2], ERGOT [4], Linux Box [5] and the Cold/Hot backup strategy [6], that discuss the data recovery process. However, even the successful techniques still fall short on critical issues such as implementation complexity, cost, security and time. To address these issues, in this paper we propose a smart remote data backup algorithm, the Seed Block Algorithm (SBA). The contribution of the proposed SBA is twofold: first, SBA helps users collect information from any remote location in the absence of network connectivity, and second, it recovers files in case of file deletion or if the cloud is destroyed for any reason.

This paper is organized as follows: Section II reviews the related literature on existing methods that are successful to some extent in the cloud computing domain. Section III discusses the remote data backup server. Section IV describes the proposed seed block algorithm (SBA) in detail, and Section V presents the experimentation and result analysis of the proposed SBA. Finally, Section VI gives the conclusions.

II. RELATED LITERATURE

In the literature, we study most of the recent back-up and recovery techniques that have been developed in the cloud computing domain, such as HSDRT [1], PCS [2], ERGOT [4], Linux Box [5] and the Cold/Hot backup strategy [6]. A detailed review shows that none of these techniques provides the best performance on all criteria at once, namely cost, security, low implementation complexity, redundancy and recovery in a short span of time.

Among all the techniques reviewed, PCS is comparatively reliable, simple, easy to use and more convenient for data recovery, and it is based entirely on a parity recovery service. It can recover data with very high probability. For data recovery, it generates a virtual disk in the user system for data backup, makes parity groups across virtual disks, and stores the parity data of each parity group in the cloud. It uses the Exclusive-OR ($\oplus$) operation for creating parity information. However, it is unable to control the implementation complexities.
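The parity idea behind PCS can be illustrated with a minimal sketch. It assumes equal-sized blocks and a single lost block per parity group; the function names `xor_blocks`, `make_parity` and `recover_block` are hypothetical and are not taken from the PCS paper [2].

```python
from functools import reduce


def xor_blocks(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length blocks."""
    if len(a) != len(b):
        raise ValueError("blocks in a parity group must have equal length")
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(blocks):
    """Parity block of a group: XOR of all member blocks."""
    return reduce(xor_blocks, blocks)


def recover_block(surviving_blocks, parity):
    """Rebuild the single missing block from the survivors and the parity."""
    return reduce(xor_blocks, surviving_blocks, parity)


# Illustrative parity group of three 8-byte blocks (made-up data).
group = [b"node-one", b"node-two", b"node-333"]
parity = make_parity(group)

# Pretend the second block was lost and rebuild it from the rest.
recovered = recover_block([group[0], group[2]], parity)
```

Only the parity block has to be kept in the cloud for this kind of recovery, which is consistent with the description above of storing the parity data of each parity group in the cloud.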

On the contrary, HSDRT has come out an efficient

technique for the movable clients such as laptop, smart

phones etc. nevertheless it fails to manage the low cost for

the implementation of the recovery and also unable to

376

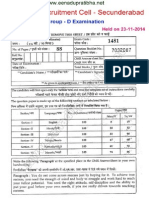

Table-I Comparison between various techniques of Back-up and

recovery [20]

control the data duplication. It an innovative file back-up

concept, which makes use of an effective ultra-widely

distributed data transfer mechanism and a high-speed

encryption technology

The HS-DRT [1] is an innovative file back-up concept,

which makes use of an effective ultra-widely distributed

data transfer mechanism and a high-speed encryption

technology. This proposed system follows two sequences

one is Backup sequence and second is Recovery sequence.

In Backup sequence, it receives the data to be backed-up

and in Recovery Sequence, when some disasters occurs or

periodically, the Supervisory Server (one of the components

of the HSDRT) starts the recovery sequence. However there

are some limitation in this model and therefore, this model

is somehow unable to declare as perfect solution for back-up

and recovery.

In contrast, Efficient Routing Grounded on Taxonomy (ERGOT) [4] is based entirely on semantic analysis and does not address time and implementation complexity. It is a semantic-based system for service discovery in cloud computing. We include this technique because, although it is not a back-up technique, it offers a unique and efficient way of retrieving data that is based entirely on the semantic similarity between service descriptions and service requests. ERGOT is built upon three components: 1) a DHT (Distributed Hash Table) protocol, 2) a SON (Semantic Overlay Network), and 3) a measure of semantic similarity among service descriptions [4]. Hence, ERGOT combines both of these network concepts. By building a SON over a DHT, ERGOT proposes semantic-driven query answering in DHT-based systems. However, it does not go well with semantic similarity search models.

In addition, the Linux Box model [5] has a very simple concept of data back-up and recovery at very low cost. However, the protection level in this model is very low. It also makes migration from one cloud service provider to another very easy. It is affordable to all consumers and Small and Medium Businesses (SMBs). This solution eliminates the consumer's dependency on the ISP and its associated backup cost. It does all this at little cost using a simple Linux box that syncs the data at block/file level from the cloud service provider to the consumer. It incorporates an application on the Linux box that performs backup of the cloud onto local drives. The data transmission is secure and encrypted. The limitation we found is that a consumer can back up not only the data but also sync the entire virtual machine [5], which wastes bandwidth because every backup then copies the entire virtual machine.
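To see why block-level synchronization saves bandwidth compared with copying an entire virtual machine image on every backup, consider the following minimal sketch. It is not the Linux Box implementation from [5]: the block size, the use of SHA-256 digests and the helper names `block_hashes` and `changed_blocks` are all assumptions made for illustration.

```python
import hashlib

BLOCK_SIZE = 4  # assumed, deliberately tiny so the example is easy to follow


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list:
    """Split data into fixed-size blocks and hash each block."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> list:
    """Indices of blocks in `new` that differ from `old` or did not exist before.

    Blocks removed from the end of `old` are not reported in this sketch.
    """
    old_h = block_hashes(old, block_size)
    new_h = block_hashes(new, block_size)
    return [i for i, h in enumerate(new_h) if i >= len(old_h) or old_h[i] != h]


previous = b"AAAABBBBCCCCDDDD"    # state at the last backup
current = b"AAAAXXXXCCCCDDDDEE"   # block 1 modified, block 4 appended
to_sync = changed_blocks(previous, current)  # -> [1, 4]
```

Only the two listed blocks would need to be transferred here, whereas a whole-image backup would resend all five.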

Similarly, we found one technique that focuses on significant cost reduction and the router failure scenario, namely the Shared Backup Router Resources (SBRR) architecture [10]. It is concerned with IP logical connectivity, which remains unchanged even after a router failure, and, most importantly, it provides the network management system via multi-layer signaling. Additionally, [10] shows how service-imposed maximum outage requirements have a direct effect on the setting of the SBRR architecture (e.g. imposing a minimum number of network-wide shared router resource locations). However, it does not combine an optimization concept with cost reduction.

Another technique we found in the field of data backup is the REN (Research Education Network) cloud. With an entirely new concept of virtualization, the REN cloud focuses on low-cost infrastructure, at the price of complex implementation and a low security level. From the lowest-cost point of view, we found the model "Rent Out the Rented Resources" [17]. Its goal is to reduce the monetary cost of cloud services. It proposes a three-phase model for cross-cloud federation, the phases being discovery, matchmaking and authentication. The model is based on the concept of a cloud vendor that rents resources from venture(s) and, after virtualization, rents them to clients in the form of cloud services.

All these techniques try to cover different issues while keeping the cost of implementation as low as possible. However, there is also a technique in which cost increases gradually as data increases, namely the Cold and Hot back-up strategy [6], which performs backup and recovery triggered by failure detection. In the Cold Backup Service Replacement Strategy (CBSRS), the recovery process is triggered upon detection of a service failure and is not triggered while the service is available. In the Hot Backup Service Replacement Strategy (HBSRS), a transcendental recovery strategy for service composition in dynamic networks is applied [6]. During the implementation of a service, the backup services always remain in the activated state, and the first returned result among the services is adopted to ensure the successful implementation of the service composition.
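The difference between the two strategies can be sketched as follows. This is an illustrative model only, not the mechanism of [6]: services are represented as plain Python callables, a failure is modelled as a raised exception, and the names `cold_backup`, `hot_backup`, `primary_service` and `backup_service` are hypothetical.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def primary_service():
    # Stand-in for a service that has failed.
    raise RuntimeError("primary service failed")


def backup_service():
    return "result from backup"


def cold_backup(primary, backup):
    """Cold strategy: the backup is invoked only after a failure is detected."""
    try:
        return primary()
    except Exception:
        return backup()


def hot_backup(services):
    """Hot strategy: all services run at once; the first successful result wins."""
    with ThreadPoolExecutor(max_workers=len(services)) as pool:
        pending = {pool.submit(service) for service in services}
        while pending:
            done, pending = wait(pending, return_when=FIRST_COMPLETED)
            for future in done:
                if future.exception() is None:
                    # Leaving the `with` block still waits for the other
                    # workers to finish; acceptable for a sketch.
                    return future.result()
    raise RuntimeError("all services failed")


cold_result = cold_backup(primary_service, backup_service)
hot_result = hot_backup([primary_service, backup_service])
```

In the hot variant every backup consumes resources on every call, which matches the observation above that cost grows as the workload grows.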

However, none of these backup solutions in cloud computing is able to address all the issues of a remote data back-up server. The advantages and disadvantages of all the aforesaid techniques are described in Table-I. Due to the high applicability of the backup process in companies, the role of a remote data back-up server is crucial and a hot research topic.

III. REMOTE DATA BACKUP SERVER

When we talk about a backup server of the main cloud, we usually think only of a copy of the main cloud. When this backup server is at a remote location (i.e. far away from the main server) and holds the complete state of the main cloud, it is termed a Remote Data Backup Server. The main cloud is termed the central repository and the remote backup cloud is termed the remote repository.

Fig.1 Remote data Backup Server and its Architecture

If the central repository loses its data under any circumstances, whether through a natural calamity (for example, earthquake, flood or fire), human attack or mistaken deletion, it uses the information from the remote repository. The main objective of the remote backup facility is to help users collect information from any remote location even if network connectivity is not available or if the data is not found on the main cloud. As shown in Fig-1, clients are allowed to access files from the remote repository (i.e. indirectly) if the data is not found on the central repository.

The remote backup services should cover the following issues:

1) Data Integrity

Data integrity is concerned with the complete state and the whole structure of the server. It verifies that data remains unaltered during transmission and reception. It is the measure of the validity and fidelity of the data present in the server.

2) Data Security

Giving full protection to the client's data is also the utmost priority for the remote server. Whether intentionally or unintentionally, the data should not be accessible to third parties or to any other users/clients.

3) Data Confidentiality

Sometimes a client's data files should be kept confidential, such that if a number of users access the cloud simultaneously, data files that are personal to a particular client are hidden from other clients on the cloud while the files are being accessed.

4) Trustworthiness

The remote cloud must possess the trustworthiness characteristic. Because users/clients store their private data, the cloud and the remote backup cloud must play a trustworthy role.

5) Cost Efficiency

The cost of the data recovery process should be efficient so that the maximum number of companies/clients can take advantage of the back-up and recovery service.

There are many techniques that have focused on these issues. In the forthcoming section, we discuss a technique for back-up and recovery in the cloud computing domain that covers the aforesaid issues.

IV. DESIGN OF THE PROPOSED SEED BLOCK ALGORITHM

As discussed in the literature, many techniques have been proposed for recovery and backup, such as HSDRT [1], PCS [2], ERGOT [4], Linux Box [5] and the Cold/Hot backup strategy [6]. As discussed above, low implementation complexity, low cost, security and time-related issues are still challenging in the field of cloud computing. To tackle these issues we propose the SBA algorithm, and in the following subsections we discuss the design of the proposed SBA in detail.

A. Seed Block Algorithm (SBA) Architecture

This algorithm focuses on the simplicity of the back-up and recovery process. It basically uses the concept of the Exclusive-OR (XOR) operation. For example, suppose there are two data files, A and B. When we XOR A and B, it produces X, i.e. $X = A \oplus B$. If data file A is destroyed and we want it back, it is very easy to recover it with the help of data files B and X, i.e. $A = X \oplus B$.

Similarly, the Seed Block Algorithm works to provide a simple back-up and recovery process. Its architecture, shown in Fig-2, consists of the Main Cloud and its clients and the Remote Server. First, we set a random number in the cloud and a unique client id for every client. Second, whenever a client id is registered in the main cloud, the client id and the random number are EXORed ($\oplus$) with each other to generate the seed block for that particular client. The generated seed block corresponding to each client is stored at the remote server.

Whenever a client creates a file in the cloud for the first time, it is stored at the main cloud. When it is stored in the main server, the main file of the client is EXORed with the seed block of that client, and the EXORed file is stored at the remote server in the form of file′ (pronounced as File dash). If the file in the main cloud crashes or is damaged, or the file is deleted by mistake, the user gets the original file back by EXORing file′ with the seed block of the corresponding client; the resulting file, i.e. the original file, is returned to the requesting client. The architectural representation of the Seed Block Algorithm is shown in Fig.2.
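The back-up and recovery flow described in this subsection can be sketched in a few lines. The paper does not state how wide the client id, random number and seed block are, nor how a short seed block is applied to a longer file, so this sketch assumes a 4-byte seed block that is repeated cyclically over the file contents. The helper names `make_seed_block` and `xor_with_seed`, and the sample client id, are hypothetical.

```python
import secrets
from itertools import cycle

SEED_BYTES = 4  # assumed width of client id, random number and seed block


def make_seed_block(client_id: int, random_number: int) -> bytes:
    """Seed block = client id XOR random number, kept at the remote server."""
    return (client_id ^ random_number).to_bytes(SEED_BYTES, "big")


def xor_with_seed(data: bytes, seed_block: bytes) -> bytes:
    """XOR file contents with the seed block, repeating the seed as needed."""
    return bytes(b ^ s for b, s in zip(data, cycle(seed_block)))


# Set-up: one random number for the cloud, one unique id per client.
random_number = secrets.randbits(8 * SEED_BYTES)
client_id = 1001
seed_block = make_seed_block(client_id, random_number)

# Back-up: the main cloud keeps `original`, the remote server keeps `file_dash`.
original = b"contents of the client's file"
file_dash = xor_with_seed(original, seed_block)

# Recovery after the original is lost: XOR file' with the same seed block.
recovered = xor_with_seed(file_dash, seed_block)
```

Because XOR is its own inverse, the same function serves for both back-up and recovery, and the back-up copy is always the same size as the original.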

Fig.2 Seed Block Algorithm Architecture

B. SBA Algorithm

The proposed SBA algorithm is as follows:

Algorithm 1:

Initialization: Main Cloud; Remote Server; Clients of Main Cloud; Files: file and file′; Seed block; Random Number; Client's ID.

Input: file created by a client; random number generated at the Main Cloud.

Output: Recovered file after deletion at the Main Cloud.

Given: Authenticated clients are allowed to upload, download and modify only their own files.

Step 1: Generate a random number (an integer).

Step 2: Create a seed block for each client and store it at the Remote Server: $\text{seed block} = \text{random number} \oplus \text{client ID}$. (Repeat step 2 for all clients.)

Step 3: If a client creates/modifies a file and stores it at the Main Cloud, then create file′ as: $\text{file}' = \text{file} \oplus \text{seed block}$.

Step 4: Store file′ at the Remote Server.

Step 5: If the server crashes or the file is deleted from the Main Cloud, then we do EXOR to retrieve the original file as: $\text{file} = \text{file}' \oplus \text{seed block}$.

Step 6: Return the file to the client.

Step 7: END.

V. EXPERIMENTATION AND RESULT ANALYSIS

In this section, we discuss the experimentation and result analysis of the SBA algorithm. For experimentation we focused on different minimal system requirements for the main cloud's server and the remote server, as depicted in Table-II. From Table-II, the memory requirement is kept at 8GB and 12GB for the main cloud's server and the remote server respectively, which can be extended as necessary. From Table-II, it is observed that the memory requirement is higher at the remote server than at the main cloud's server because additional information is placed on the remote server (for example, the different seed blocks of the corresponding clients, shown in Fig-2).

Table-II System Environment

During experimentation, we found that the size of the original data file stored at the main cloud is exactly the same as the size of the back-up file stored at the remote server, as depicted in Table-III. To substantiate this, we performed the experiment for different types of files. The results tabulated in Table-III show that the proposed SBA is robust in keeping the size of the recovered file the same as that of the original data file. From this we conclude that the proposed SBA recovers the data file without any data loss.

Table-III: Performance analysis for different types of files

Processing time means the time taken by the process when a client uploads a file to the main cloud. It includes assembling the data, i.e. the random number from the main cloud and the seed block of the corresponding client from the remote server, for the EXOR operation; then performing the EXOR operation on the contents of the uploaded file with the seed block; and finally storing the EXORed file on the remote server. The results of this experiment are tabulated in Table-IV. We observed that as the data size increases, the processing time increases. On the other hand, we also found that the performance, measured in megabytes per second (MB/sec), stays constant at some level even as the data size increases, as shown in Table-IV.

Table-IV Effect of data size on processing time

Fig-3 shows the CPU utilization at the Main Cloud and the Remote Server. As shown in Fig-3, the Main Cloud's CPU utilization starts at 0% and increases as the client uploads a file onto it, because it has to check whether the client is authenticated and, at the same time, send a request to the Remote Server for the corresponding seed block. When the request reaches the Remote Server, it starts collecting the details as well as the seed block and responds with the seed block; during this period, the load at the Main Cloud decreases, which in turn causes a gradual decrease in CPU utilization at the main cloud. After receiving the requested data, CPU utilization at the main cloud increases as it has to perform the EXOR operation. The final EXORed file is then sent to the Remote Server. The processing time given in Table-IV can be compared with the time shown in Fig-3.

Fig.3 Graph Showing Processor Utilization

Fig-4 shows the experimentation result of the proposed SBA. Fig-4 (a) shows the original file, which is uploaded by the client to the main cloud. Fig-4 (b) shows the EXORed file, which is stored on the remote server. This file contains the secured EXORed content of the original file and the seed block content of the corresponding client. Fig-4 (c) shows the recovered file, which is sent indirectly to the client in the absence of network connectivity, in case of file deletion, or if the cloud is destroyed for any reason.

Fig.4 Sample output image of SBA Algorithm

VI. CONCLUSION

In this paper, we presented the detailed design of the proposed SBA algorithm. The proposed SBA is robust in helping users collect information from any remote location in the absence of network connectivity and also in recovering files in case of file deletion or if the cloud is destroyed for any reason. Experimentation and result analysis show that the proposed SBA also focuses on security for the back-up files stored at the remote server, without using any of the existing encryption techniques. The proposed SBA also addresses time-related issues so that the recovery process takes minimum time.

REFERENCES

[1] Yoichiro Ueno, Noriharu Miyaho, Shuichi Suzuki, Kazuo Ichihara, 2010, "Performance Evaluation of a Disaster Recovery System and Practical Network System Applications," Fifth International Conference on Systems and Networks Communications, pp. 256-259.

[2] Chi-won Song, Sungmin Park, Dong-wook Kim, Sooyong Kang, 2011, "Parity Cloud Service: A Privacy-Protected Personal Data Recovery Service," International Joint Conference of IEEE TrustCom-11/IEEE ICESS-11/FCST-11.

[3] Y. Ueno, N. Miyaho, and S. Suzuki, 2009, "Disaster Recovery Mechanism using Widely Distributed Networking and Secure Metadata Handling Technology," Proceedings of the 4th edition of the UPGRADE-CN workshop, pp. 45-48.

[4] Giuseppe Pirro, Paolo Trunfio, Domenico Talia, Paolo Missier and Carole Goble, 2010, "ERGOT: A Semantic-based System for Service Discovery in Distributed Infrastructures," 10th IEEE/ACM International Conference on Cluster, Cloud and Grid Computing.

[5] Vijaykumar Javaraiah (Brocade), 2011, "Backup for Cloud and Disaster Recovery for Consumers and SMBs," IEEE 5th International Conference on Advanced Networks and Telecommunication Systems (ANTS).

[6] Lili Sun, Jianwei An, Yang Yang, Ming Zeng, 2011, "Recovery Strategies for Service Composition in Dynamic Network," International Conference on Cloud and Service Computing.

[7] Xi Zhou, Junshuai Shi, Yingxiao Xu, Yinsheng Li and Weiwei Sun, 2008, "A backup restoration algorithm of service composition in MANETs," Communication Technology ICCT 11th IEEE International Conference, pp. 588-591.

[8] M. Armbrust et al., "Above the clouds: A Berkeley view of cloud computing," http://www.eecs.berkeley.edu/Pubs/TechRpts/2009//EECS-2009-28.pdf.

[9] F. B. Kashani, C. Chen, C. Shahabi, 2004, "WSPDS: Web Services Peer-to-Peer Discovery Service," ICOMP.

[10] Eleni Palkopoulou, Dominic A. Schupke, Thomas Bauschert, 2011, "Recovery Time Analysis for the Shared Backup Router Resources (SBRR) Architecture," IEEE ICC.

[11] Lili Sun, Jianwei An, Yang Yang, Ming Zeng, 2011, "Recovery Strategies for Service Composition in Dynamic Network," International Conference on Cloud and Service Computing, pp. 221-226.

[12] P. Demeester et al., 1999, "Resilience in Multilayer Networks," IEEE Communications Magazine, Vol. 37, No. 8, pp. 70-76.

[13] S. Zhang, X. Chen, and X. Huo, 2010, "Cloud Computing Research and Development Trend," IEEE Second International Conference on Future Networks, pp. 93-97.

[14] T. M. Coughlin and S. L. Linfoot, 2010, "A Novel Taxonomy for Consumer Metadata," IEEE ICCE Conference.

[15] K. Keahey, M. Tsugawa, A. Matsunaga, J. Fortes, 2009, "Sky Computing," IEEE Journal of Internet Computing, vol. 13, pp. 43-51.

[16] M. D. Assuncao, A. Costanzo and R. Buyya, 2009, "Evaluating the Cost-Benefit of Using Cloud Computing to Extend the Capacity of Clusters," Proceedings of the 18th International Symposium on High Performance Distributed Computing (HPDC 2009), Germany.

[17] Sheheryar Malik, Fabrice Huet, December 2011, "Virtual Cloud: Rent Out the Rented Resources," 6th International Conference on Internet Technology and Secure Transactions, 11-14, Abu Dhabi, United Arab Emirates.

[18] Wayne A. Jansen, 2011, "Cloud Hooks: Security and Privacy Issues in Cloud Computing," 44th Hawaii International Conference on System Sciences, Hawaii.

[19] Jinpeng et al., 2009, "Managing Security of Virtual Machine Images in a Cloud Environment," CCSW, Chicago, USA.

[20] Ms. Kruti Sharma, Prof. K. R. Singh, 2012, "Online Data Backup and Disaster Recovery Techniques in Cloud Computing: A Review," IJEIT, Vol. 2, Issue 5.


- A Secure Data Self Destructing Scheme in Cloud ComputingDocumento5 páginasA Secure Data Self Destructing Scheme in Cloud ComputingIJSTEAinda não há avaliações

- IJSRST - Paper - Survey Ppaer B-15Documento4 páginasIJSRST - Paper - Survey Ppaer B-15cyan whiteAinda não há avaliações

- M Phil Computer Science Cloud Computing ProjectsDocumento15 páginasM Phil Computer Science Cloud Computing ProjectskasanproAinda não há avaliações

- M.Phil Computer Science Cloud Computing ProjectsDocumento15 páginasM.Phil Computer Science Cloud Computing ProjectskasanproAinda não há avaliações

- Cloud Computing Security and PrivacyDocumento57 páginasCloud Computing Security and PrivacyMahesh PalaniAinda não há avaliações

- Enhanced Secure Data Sharing Over Cloud Using ABE AlgorithmDocumento4 páginasEnhanced Secure Data Sharing Over Cloud Using ABE Algorithmcyan whiteAinda não há avaliações

- Deduplication of Data in The Cloud-Based Server For Chat/File Transfer ApplicationDocumento8 páginasDeduplication of Data in The Cloud-Based Server For Chat/File Transfer ApplicationZYFERAinda não há avaliações

- A Proposed Work On Adaptive Resource Provisioning For Read Intensive Multi-Tier Applications in The CloudDocumento6 páginasA Proposed Work On Adaptive Resource Provisioning For Read Intensive Multi-Tier Applications in The CloudInternational Journal of Application or Innovation in Engineering & ManagementAinda não há avaliações

- A Study On Application-Aware Local-Global Source Deduplication For Cloud Backup Services of Personal StorageDocumento3 páginasA Study On Application-Aware Local-Global Source Deduplication For Cloud Backup Services of Personal StorageInternational Journal of Application or Innovation in Engineering & ManagementAinda não há avaliações

- System Requirement SpecificationDocumento38 páginasSystem Requirement SpecificationBala SudhakarAinda não há avaliações

- Secure Distributed DataDocumento33 páginasSecure Distributed Databhargavi100% (1)

- Role Based Access Control Model (RBACM) With Efficient Genetic Algorithm (GA) For Cloud Data Encoding, Encrypting and ForwardingDocumento8 páginasRole Based Access Control Model (RBACM) With Efficient Genetic Algorithm (GA) For Cloud Data Encoding, Encrypting and ForwardingdbpublicationsAinda não há avaliações

- A Study On Secure Data Storage in Public Clouds: T. Dhanur Bavidhira C. SelviDocumento5 páginasA Study On Secure Data Storage in Public Clouds: T. Dhanur Bavidhira C. SelviEditor IJRITCCAinda não há avaliações
