
IEEE TRANSACTIONS ON EDUCATION, VOL. 43, NO. 2, MAY 2000

Data Warehousing: A Tool for the Outcomes Assessment Process

Joanne Ingham

Abstract: To meet EC-2000 assessment criteria, information from all university constituents needs to be routinely collected and tracked longitudinally. Typically, one finds that even when the information exists, it is located across several decentralized data bases run by different units. Additionally, many universities are either running older mainframe legacy systems or are in the process of making the transition to newer systems. These conditions make it difficult to collect information regarding students and their performance throughout their undergraduate career and after. Data warehousing is a system of organizing institutional data that can support the assessment process. This paper describes one university's experience with the development and management of a data warehouse through the support of the National Science Foundation Gateway Coalition.

Index Terms: Assessment, outcomes assessment.

Manuscript received August 1999; revised November 29, 1999. The author is with Polytechnic University, Brooklyn, NY 11201 USA (e-mail: jingham@poly.edu). Publisher Item Identifier S 0018-9359(00)04310-7.

I. INTRODUCTION

Polytechnic University is a small, private institution and a member of the National Science Foundation (NSF) Gateway Coalition. Programs are offered in engineering (chemical, civil, computer, electrical, mechanical), applied sciences (chemistry, environmental science, information management, math, physics, computer science), management, liberal studies, humanities, and social science. Three campuses serve approximately 1700 undergraduates and 1300 graduate students.

The university has been using the Integrated Student Information System (ISIS) as a main source of data, as well as the record-keeping system. Departmental records are typically maintained in local data bases, spreadsheets, and computer and paper files. Additional information is stored on university UNIX servers, including e-mail, course information, and course and departmental distribution lists. Currently, the university is converting its system into an integrated enterprise system (Peoplesoft). Given the diversity of the existing systems and the relative difficulty of retrieving information to respond to the anticipated expectations of EC-2000 and the outcomes assessment process, establishing a data warehouse was crucial. Table I describes the comparative benefits of data warehousing.

A data warehouse literally warehouses information about an organization in a secured computing systems environment to allow multiple users to extract meaningful, consistent, and accurate data for decision making [1]. A well-designed data warehouse supports an assessment environment in which human energy is focused on the continuous improvement process and not the mechanical collection of data.

A review of the literature reveals that data warehousing is becoming an increasingly popular way to store and retrieve data, primarily in business settings, and more recently in colleges and universities [2]. Information about this new application has been reported in conference proceedings [3]-[5] and in journals [6]-[8]. In addition, several data warehouse organizations provide information and white papers on their Web sites.¹

This case study will provide a description of the process used to build and maintain a data warehouse within the Office of Institutional Assessment. As regional and professional accreditation bodies and state education departments adopt requirements for documentation of continuous improvement, the benefits of the data warehouse will become evident over time.

II. THE DEVELOPMENT PROCESS: CYCLE 1

With support from the NSF Gateway Coalition, the process of

building the data warehouse began in the summer of 1997. The

goal of this project was to store institutional data from all appropriate areas of the university in a relational data-base format to

enable timely, accurate analysis for tracking and analyzing patterns of change. The first cycle of development entailed building

the data warehouse. The data were drawn from the university

legacy system, departmental data bases, and external university

data, as depicted in Fig. 1. There were four distinct steps, which,

when completed, resulted in the birth of the data warehouse.

Step 1. Recognition and Support for a Centralized

Process: In response to the assessment expectations of

the NSF Gateway Coalition, as well as EC-2000, the effort

was made to establish the Office of Institutional Assessment

and to support the creation of a longitudinal tracking system

to follow students' academic careers. In August 1997, a

director and project leader were hired. All necessary computer equipment and software (Access, SQL, Visual Basic,

ASP, COBOL, C-shell Script) were purchased, installed, and

configured by October. In this instance, the data warehouse

was developed and operational within a six-month period.

The warehouse was populated with ten years of institutional

data from the legacy system. For larger institutions or those

whose systems' complexities differ, the time required for this

phase may vary.

¹See the Data Warehousing Knowledge Center at http://www.datawarehousing.org and The Business Intelligence and Data Warehousing Glossary at http://www.sdgcomputing.com/glossary.htm.

0018-9359/00$10.00 © 2000 IEEE

INGHAM: DATA WAREHOUSING

TABLE I

COMPARATIVE BENEFITS OF USING A DATA WAREHOUSE VERSUS A LEGACY SYSTEM

Fig. 1. Building a data warehouse.

Step 2. Identify Available University Data: The second

step involved determining which other institutional data existed, who managed it, and how the data were formatted. To

accomplish this, meetings were held with each department

director to identify the nature and format of data they routinely

collected and stored. While the array of data available were

substantial, it seemed that each department stored data in

different formats. Integrating the potpourri of data files into

a consistent format presented a real challenge for the project

leader. For example, separate relational data-base management

systems existed for offices whose format was determined by

outside funding agencies, the alumni functions and data were

outsourced, and the academic departments used a variety of

software and, often, paper reports. Data from state reports and

the Cooperative Institutional Research Program and the results

of national assessments were processed by researchers at other

institutions.

As a result of requesting data from each department, a number

of critical issues became apparent. These issues arose, in part,

due to a lack of awareness about the revised accreditation expectations. First, there was a recognized need to make public, and institutionalize, the outcomes assessment process at the institution.

The benefits of a university statement on assessment were recognized. Such a statement was prepared and is now included in

the new university catalog. Assessment activities had to become

formally recognized as a routine part of university practice.

Second, several directors were reluctant to share their files

with others. They expressed concern about maintaining the confidentiality of information collected for federally funded programs. To address this concern, the vice president and dean of

engineering and applied sciences sent formal release letters clarifying the fundamental purpose of the request and ensuring that

only aggregate data would be reported. Last, meetings were conducted with the directors and data-base managers to determine

the scope of the current data and, most important, to identify the

quality or purity of the data available. Unless meticulous care is

taken to import accurate and clean data, the fundamental value

of the warehouse is compromised.

Step 3. Data Integration: After all available data had been

identified and examined, they then had to be integrated into the

warehouse. For research purposes and longitudinal tracking for

the Gateway Coalition, a decision was made to include ten years

of history data. The history data were migrated from the university legacy system. These data included the 21-day census data

files, the freshmen cohort files, graduation files, and registrar

files containing grades. Files for the alumni were requested by

program and years out as needed.

The actual collection, examination, and storage of information in the warehouse involved several activities. All files had

to be checked for data quality and to verify that the data were

valid. All duplicate and/or dead data needed to be eliminated

before migration could take place. In addition, data consistency

was checked repeatedly by cross-referencing data from different

sources.
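The cleaning and cross-referencing described in Step 3 can be sketched as follows. This is a minimal illustration in Python, not the tooling the project used (the paper lists Access, SQL, Visual Basic, ASP, COBOL, and C-shell scripts). The field names `student_id`, `term`, and `major`, and the sample rows, are hypothetical.

```python
def clean_records(records):
    """Drop records without an ID and exact repeats of (student_id, term)."""
    seen = set()
    cleaned = []
    for rec in records:
        student_id = (rec.get("student_id") or "").strip()
        if not student_id:
            continue  # "dead" record: cannot be tied to any student
        key = (student_id, rec.get("term"))
        if key in seen:
            continue  # duplicate of a record already kept
        seen.add(key)
        cleaned.append({**rec, "student_id": student_id})
    return cleaned


def cross_check(source_a, source_b, field):
    """Return IDs whose value for `field` disagrees between two sources."""
    b_by_id = {rec["student_id"]: rec for rec in source_b}
    mismatches = []
    for rec in source_a:
        other = b_by_id.get(rec["student_id"])
        if other is not None and other.get(field) != rec.get(field):
            mismatches.append(rec["student_id"])
    return mismatches


# Hypothetical sample data standing in for census and registrar files.
census = [
    {"student_id": "A001", "term": "1997F", "major": "CE"},
    {"student_id": "A001", "term": "1997F", "major": "CE"},  # duplicate
    {"student_id": "", "term": "1997F", "major": "EE"},      # no ID
    {"student_id": "A002", "term": "1997F", "major": "ME"},
]
registrar = [
    {"student_id": "A001", "term": "1997F", "major": "CE"},
    {"student_id": "A002", "term": "1997F", "major": "EE"},
]

cleaned = clean_records(census)
conflicts = cross_check(cleaned, registrar, "major")
print(len(cleaned), conflicts)
```

Records flagged by `cross_check` would need to be resolved with the owning department before migration; the sketch only reports them.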

Step 4. Developing a Process to Feed the Data Warehouse: Ideally, the data warehouse should be built with the

capacity to accommodate assessment data as they are collected.

A decision was made to move, when possible, away from

paper and pencil data collection strategies and move toward

electronic, Web-based methods. Testing the usefulness of

several different strategies was accomplished by piloting three

Gateway Coalition assessment tools on a small scale. Table II

describes the comparative benefits of a Web-based process.


TABLE II

COMPARATIVE BENEFITS OF PAPER VERSUS WEB-BASED ASSESSMENT

Among the first Gateway assessment surveys prepared was a

faculty survey to assess the degree to which faculty participation

in the coalition's activities fostered instructional innovation in

the classroom. The completed paper forms were collected and

the data were entered manually. This process was very labor

intensive and took three weeks to distribute, collect, and enter.

The first pilot effort with a Web-based evaluation involved

the freshman design course. The survey data were collected and

analyzed electronically. Given that the evaluation form existed,

populating the survey was the most time-consuming part. The

Web evaluation form was available to students over a period of

three weeks, and the results were available immediately.

The next pilot involved the mechanical engineering alumni

survey. This survey was prepared and made available as both a

Web-based and paper version. A letter with the Web address and

a paper survey were mailed home, and the alumni could select

which method to use. Both approaches were employed to better

determine the relative response rates with an alumni population.

A Web-based civil engineering course-level assessment tool

was tested with nine sophomore- through senior-level courses.

We were, at this point, very confident in the mechanics and security of the technology. Based on further review of the results

of the pilot experiences, an institutional decision was made to

go ahead with a Web-based assessment approach and to pilot

several more assessment tools.

III. EVALUATION OF CYCLE 1

After several months, a number of substantial accomplishments were achieved. An Office of Institutional Assessment was established and the staff was selected. The space was prepared and the equipment was set up and functional. Institutional data for a ten-year period were collected, cleaned, and integrated into the warehouse. Having run several tests to confirm the accuracy of information generated and the integrity of the system, the technology was considered operational. The first reports on cohort persistence and graduation rates for gender and ethnicity were run. Last, a series of beta-tests confirmed that the Web-based survey process was functional and secure.

The startup phase required personnel and equipment. Cycle 1 activities were completed over the course of six months by one full-time professional working exclusively on this project. A total of 960 hours were involved. As Cycle 2 was planned, a decision was made to add a student assistant at 20 hours per week to handle the Web-based survey activities. As the warehouse became functional, requests for data were, as expected, numerous. The total spent on new computers, software and peripherals, and eventually a dedicated server came to $15 000. An additional $35 000 in salaries brought the project costs to $50 000.

Along with these accomplishments, however, several problems were encountered that necessitated modifications during Cycle 2. Specifically, as the data warehouse grew, we found that the data-base software performed slowly. In fact, the functionality and security of the software products were inadequate for our expanding needs. As Web-based assessment procedures were applied, the Web connection and speed were not sufficient for our needs. Parallel to this, the university Web team made a decision to return to a UNIX system utilizing Oracle for data-base activities.

IV. CYCLE 2: SOLVING PROBLEMS

Experience with the warehouse capabilities and a full appreciation of the scope of the project prompted the decision to move

into a UNIX environment for speed, security, and availability

of institutional support and consistency. Having also made the

decision to adopt a Web-based assessment process, additional

issues had to be considered.

First, additional hardware was purchased. To support the Web activities, a dedicated server was added to our system. A Sun Solaris 2.6 Ultra 10 server and Sun DDS2 backup tape system were purchased. The software selected included Apache Web Server, Perl 5, and a MySQL relational data base with built-in security features. The MySQL data base will allow for a table up to 6 GB, which we estimate to be adequate for a few decades of institutional data. Sufficient room should therefore not be a concern for some time to come.
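The kind of estimate behind the capacity claim can be sketched as a back-of-envelope calculation. The 6 GB table limit and the record counts (100 000 student records and 30 000 course records per ten-year span, as reported in the Conclusion) come from the paper. The average record size is an assumption introduced here purely for illustration, and lumping both record types into one table overstates the load on any single table.

```python
# Figures taken from the paper.
TABLE_LIMIT_BYTES = 6 * 1024**3        # 6 GB per-table limit
RECORDS_PER_DECADE = 100_000 + 30_000  # student + course records in ten years

# Assumption (not from the paper): average stored size of one record.
ASSUMED_BYTES_PER_RECORD = 2_000

bytes_per_decade = RECORDS_PER_DECADE * ASSUMED_BYTES_PER_RECORD
decades_of_headroom = TABLE_LIMIT_BYTES / bytes_per_decade

print(f"{bytes_per_decade / 1e6:.0f} MB per decade")
print(f"roughly {decades_of_headroom:.0f} decades of headroom")
```

The result is only as good as the assumed record size, and it ignores indexes and any growth in the number of records collected per year.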

With this setup, the Apache server could handle up to 50 concurrent users. This capacity is more than adequate for an organization with an anticipated enrollment of 5000 graduate and undergraduate students. Based on the first cycle results, new features

such as data integrity checking were also introduced. Results

are now processed before they are submitted or inserted into the

data warehouse. As the warehouse continued to grow, other features were introduced to allow for searches by two, three, or four

letters of the last name to minimize typing.
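A minimal sketch of the two features just described, checking a record before it is inserted and searching by the first few letters of a last name, is given below. It uses Python's built-in `sqlite3` module as a stand-in for the MySQL and Perl setup named in the text. The `students` table, its columns, and the sample names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE students (student_id TEXT PRIMARY KEY, last_name TEXT NOT NULL)"
)


def insert_student(conn, student_id, last_name):
    """Check a record before it is inserted into the warehouse table."""
    student_id = student_id.strip()
    last_name = last_name.strip()
    if not student_id or not last_name:
        raise ValueError("student_id and last_name are required")
    conn.execute("INSERT INTO students VALUES (?, ?)", (student_id, last_name))


def search_by_prefix(conn, prefix):
    """Find last names that start with a two- to four-letter prefix."""
    if not (2 <= len(prefix) <= 4) or not prefix.isalpha():
        raise ValueError("prefix must be two to four letters")
    rows = conn.execute(
        "SELECT last_name FROM students WHERE last_name LIKE ? ORDER BY last_name",
        (prefix + "%",),
    )
    return [row[0] for row in rows]


# Hypothetical sample rows.
for sid, name in [("A001", "Ingram"), ("A002", "Ingham"), ("A003", "Jones")]:
    insert_student(conn, sid, name)

matches = search_by_prefix(conn, "ing")
print(matches)
```

The prefix is passed as a bound parameter rather than pasted into the SQL string, so user input cannot alter the query.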

V. WEB-BASED PROCESS MODIFICATIONS

When the preparation began for university-wide Web-based

course evaluations, a number of critical steps were taken. It was

at this juncture that the Gateway Coalition assessment activities

became integral to the technology.

Working with the dean, department heads, faculty and the

Strategic Planning Team, the university's mission statement,

program- and course-level goals, and objectives were prepared.

For each undergraduate course, the goals and objectives were

linked with the competencies identified by the Gateway Coalition and EC-2000. A course evaluation form was developed incorporating two component parts. The first part, developed by

the Gateway Coalition, was used to assess specific competencies and skills linked to EC-2000 Criterion 3. The second part

was a locally prepared course evaluation form developed by the

faculty.

Based on lessons learned through beta-tests, a course evaluation process was established that allows each undergraduate

student to complete course evaluations on a Web site² only for

courses they were registered for that semester. We learned that

the Web site should be available approximately three weeks

prior to the final exam period. Second, the Web address should

be easy to remember and accessible from off-campus locations.

The design of the system ensured that students were limited

to evaluating only courses they were registered in, and only

one time. The evaluations were easy to complete and could be

completed at one sitting or several, as desired. Students were

also able to submit written comments. The abundance and

richness of written commentary provided in pilots were delightfully surprising. A few sentences, as well as paragraphs of

thoughtful commentary, were submitted. The comments ranged

from positive to negative. The feedback was often filled with

praise for specific aspects of the course and instructor. Critical

comments were, for the most part, constructive. The student

responses were analyzed and prepared for dissemination using

Access. This feedback then became part of the data warehouse.

The final report was made available to the campus community

on the university intranet.
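The two access rules described above (a student may evaluate only courses he or she is registered in, and only once) can be sketched with a registration lookup and a uniqueness constraint. This is an illustrative Python and `sqlite3` sketch, not the system the university deployed. The table layouts and identifiers are hypothetical, and completing an evaluation over several sittings is not modeled.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE registrations (
    student_id TEXT, course_id TEXT, term TEXT,
    PRIMARY KEY (student_id, course_id, term)
);
CREATE TABLE evaluations (
    student_id TEXT, course_id TEXT, term TEXT,
    rating INTEGER, comments TEXT,
    PRIMARY KEY (student_id, course_id, term)  -- one evaluation per course
);
""")
conn.execute("INSERT INTO registrations VALUES ('A001', 'CE201', '1999S')")


def submit_evaluation(conn, student_id, course_id, term, rating, comments=""):
    """Accept an evaluation only from a registered student, and only once."""
    registered = conn.execute(
        "SELECT 1 FROM registrations "
        "WHERE student_id = ? AND course_id = ? AND term = ?",
        (student_id, course_id, term),
    ).fetchone()
    if registered is None:
        return "rejected: not registered"
    try:
        conn.execute(
            "INSERT INTO evaluations VALUES (?, ?, ?, ?, ?)",
            (student_id, course_id, term, rating, comments),
        )
    except sqlite3.IntegrityError:
        # The primary key on evaluations blocks a second submission.
        return "rejected: already submitted"
    return "accepted"


results = [
    submit_evaluation(conn, "A001", "CE201", "1999S", 4, "Clear lectures."),
    submit_evaluation(conn, "A001", "CE201", "1999S", 5),  # second attempt
    submit_evaluation(conn, "A001", "ME101", "1999S", 3),  # not registered
]
print(results)
```

Enforcing the one-time rule in the data base itself, rather than only in the Web form, keeps the rule in force even if two submissions arrive at nearly the same time.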

²See www2.poly.edu.

VI. WAREHOUSE AS A RESOURCE

As faculty and administrators learned more about the new

expectations of the NSF Gateway Coalition, ABET, CSAB, and

Middle States and the importance of outcomes assessment, the

volume of requests increased. Additionally, as information on

retention and graduation rates was disseminated, the value of the

assessment process and capabilities of the longitudinal tracking

system were slowly recognized.

The benefits to the faculty, department heads, and administrators were several. Access to timely, consistent, and thorough

data as needed was faster and more convenient. The administration of alumni surveys by the assessment office allowed the faculty to look at the process and results and not spend a lot of energy on the administration and collection of information. The establishment of routine procedures for user-friendly course-level

assessment meant that the course evaluation process would be

handled on an institutional rather than department level. The

warehouse is also a resource to support the development and implementation of assessment plans for grants. Last, the university

now has the ability to support institutional research using the

longitudinal tracking system.

VII. CONCLUSION

The data warehouse currently manages 100 000 student

records covering a ten-year span, as well as 30 000 course

records over a similar period of time. Data on freshman cohort

retention across any number of variables, as well as similar

graduation history, are available from 1988. Additional data

have been integrated into the system including alumni data,

student placement test scores, and co-op information. Further,

compared to statically processed information, the new data

warehouse has the capability of on-line dynamic results report

generation.
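A report of the kind mentioned here, graduation rates for each freshman cohort broken down by a chosen variable, can be sketched as a grouped query. The example uses Python's `sqlite3` module with a hypothetical `cohort` table and made-up rows. It illustrates the idea and does not reproduce the warehouse's actual schema.

```python
import sqlite3

# Columns a report may be grouped by (hypothetical schema).
ALLOWED_GROUPINGS = {"gender", "major"}


def build_demo_warehouse():
    """Create an in-memory table with a few made-up cohort records."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE cohort (student_id TEXT PRIMARY KEY, entry_year INTEGER, "
        "gender TEXT, major TEXT, graduated INTEGER)"
    )
    conn.executemany(
        "INSERT INTO cohort VALUES (?, ?, ?, ?, ?)",
        [
            ("S1", 1988, "F", "CE", 1),
            ("S2", 1988, "F", "EE", 0),
            ("S3", 1988, "M", "CE", 1),
            ("S4", 1988, "M", "EE", 1),
            ("S5", 1989, "F", "CE", 1),
        ],
    )
    return conn


def graduation_rates(conn, group_by):
    """Return (entry_year, group, cohort size, graduation rate) rows."""
    if group_by not in ALLOWED_GROUPINGS:
        raise ValueError(f"cannot group by {group_by!r}")
    # group_by is restricted to the whitelist above, so it is safe to
    # place it in the query text.
    query = (
        f"SELECT entry_year, {group_by}, COUNT(*), AVG(graduated) "
        f"FROM cohort GROUP BY entry_year, {group_by} "
        f"ORDER BY entry_year, {group_by}"
    )
    return conn.execute(query).fetchall()


conn = build_demo_warehouse()
rates = graduation_rates(conn, "gender")
for entry_year, group, size, rate in rates:
    print(f"{entry_year} {group}: {size} students, {rate:.0%} graduated")
```

Because the grouping column is a parameter, the same function serves any variable added to the whitelist, which is the sense in which results are generated dynamically rather than from static reports.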

As benchmark data are gathered and the outcomes assessment process is refined, the data warehouse will expand exponentially. The end result is the availability of superior means of

documenting and analyzing continuous improvement on an institutional, program, and course level.

ACKNOWLEDGMENT

The author wishes to thank the members of the NSF Gateway

Coalition for their support with this project. She also wishes to

thank Polytechnic University, Dr. W. R. McShane, Dr. R. Roess,

and A. Polevoy.

REFERENCES

[1] R. Barquin and H. Edelstein, Building, Using, and Managing the Data Warehouse. Upper Saddle River, NJ: Prentice-Hall, 1997.

[2] J. Frost, M. Dalyrmple, and M. Wang, "Focus for institutional researchers: Developing and using a student decision support system," in Proc. 1998 AIR Annu. Forum, Minneapolis, MN, 1998, pp. 1-15.

[3] L. J. Mignerey, "A data warehouse: The best buy for the money," in Proc. CAUSE Annu. Conf., Orlando, FL, 1994, p. VI-5-1.

[4] J. D. Porter and J. J. Rome, "A data warehouse: Two years later . . . lessons learned," in Proc. CAUSE Annu. Conf., Orlando, FL, 1994, p. VI-6-1.

[5] A. Polevoy and J. Ingham, "Data warehousing: A tool for facilitating assessment," in Proc. 29th ASEE/IEEE Frontiers in Education Conf., San Juan, Puerto Rico, 1999, p. 11b1-7.

[6] C. R. Thomas, "Information architecture: The data warehouse foundation," CAUSE/EFFECT, vol. 60, no. 2, pp. 31-33, 38-40, Summer 1997.

[7] M. Singleton, "Developing and marketing a client/server-based data warehouse," CAUSE/EFFECT, vol. 16, no. 3, pp. 47-52, Fall 1993.

[8] M. Bosworth, "Rolling out a data warehouse at UMass: A simple start to a complex task," CAUSE/EFFECT, vol. 18, no. 1, pp. 40-45, Spring 1995.


Joanne M. Ingham was born in Rome, NY, on January 20, 1952. She received

the B.S. degree in biology, secondary education, from the State University of

New York, Oswego, the M.S. degree in counseling from Long Island University,

Brookville Center, NY, and the Ed.D. degree in curriculum and instruction from

St. John's University, Jamaica, NY.

She has been an Administrator at Polytechnic University, Brooklyn, NY, since

1994 and is currently the Director of Institutional Assessment and Retention in

the Office of Academic Affairs. She has been active in research in the fields

of outcomes assessment and learning styles. She recently completed a research

project with a Fulbright scholar from Mexico comparing the learning styles and

measures of creative performance of American and Mexican engineering students. She taught undergraduate and graduate courses in education at St. John's

University; Queens College, Queens, NY; and Adelphi University, Garden City,

NY. She is an international Consultant in learning styles.

Você também pode gostar

- Client Application DetailsDocumento6 páginasClient Application DetailsSaran Kumar RamarAinda não há avaliações

- Setrite X - (Solutions) - (09 06 2022)Documento84 páginasSetrite X - (Solutions) - (09 06 2022)Deepti Walia100% (1)

- A Statement of Purpose by A Computer Science and Electrical Engineering Student From IndiaDocumento2 páginasA Statement of Purpose by A Computer Science and Electrical Engineering Student From IndiaMOHAMED MANSUR SAinda não há avaliações

- (Core Textbook) Teaching ESL Composition-Purpose, Process, PracticeDocumento446 páginas(Core Textbook) Teaching ESL Composition-Purpose, Process, PracticeDanh Vu100% (2)

- A Focus On VocabularyDocumento24 páginasA Focus On VocabularyLuizArthurAlmeidaAinda não há avaliações

- APPLICATION Letter for Summer JobDocumento3 páginasAPPLICATION Letter for Summer Jobsel lancionAinda não há avaliações

- Fun Way 2 Teacher's BookDocumento76 páginasFun Way 2 Teacher's BookAgustina MerloAinda não há avaliações

- Georgia SOP + PSDocumento6 páginasGeorgia SOP + PSSan Jay SAinda não há avaliações

- SopDocumento2 páginasSopAkhand RanaAinda não há avaliações

- Compose Clear and Coherent Sentences Using Appropriate Grammatical Structures (Modals) EN5G-Ic-3.6Documento17 páginasCompose Clear and Coherent Sentences Using Appropriate Grammatical Structures (Modals) EN5G-Ic-3.6Freshie Pasco67% (21)

- Doctors at Noida ExtensionDocumento2 páginasDoctors at Noida Extensionamit62198880% (5)

- Bear Hunt ReflectionDocumento4 páginasBear Hunt Reflectionapi-478596695Ainda não há avaliações

- Statement of Purpose for Mechanical Engineering MastersDocumento2 páginasStatement of Purpose for Mechanical Engineering MastersHariharan MokkaralaAinda não há avaliações

- SOP Guidelines IrelandDocumento1 páginaSOP Guidelines Irelandxb1f30Ainda não há avaliações

- Cot - DLP - Mathematics q1 6 by EdgarDocumento3 páginasCot - DLP - Mathematics q1 6 by EdgarEDGAR AZAGRA100% (2)

- Sample Sop 1Documento2 páginasSample Sop 1Manan AhujaAinda não há avaliações

- University of NorthamptonDocumento4 páginasUniversity of NorthamptonBibek BastolaAinda não há avaliações

- Bachelor of Science in IT - SJUTDocumento201 páginasBachelor of Science in IT - SJUTOmmy OmarAinda não há avaliações

- Statement of Purpose-NTUDocumento3 páginasStatement of Purpose-NTUsiddhi dixitAinda não há avaliações

- 2009 UCSD Statement of PurposeDocumento3 páginas2009 UCSD Statement of Purposeelizfink100% (2)

- Sop On Computer ScienceDocumento3 páginasSop On Computer Sciencegurpreet kalsi100% (1)

- Acoounting SOP UpdatedDocumento3 páginasAcoounting SOP UpdatedromanAinda não há avaliações

- SOP For ImperialDocumento4 páginasSOP For ImperialRileena SanyalAinda não há avaliações

- Sop Commerce MsDocumento1 páginaSop Commerce MsNeeraj SharmaAinda não há avaliações

- BarveDocumento2 páginasBarveRamzu FiverrAinda não há avaliações

- Electrical and Electronics Engineering SOPDocumento2 páginasElectrical and Electronics Engineering SOPSaurabh KumarAinda não há avaliações

- ShishirDocumento2 páginasShishirSiddharth SinghAinda não há avaliações

- Taiwan ScholarshipDocumento7 páginasTaiwan ScholarshiptammttgAinda não há avaliações

- A Sample of A Personal Statement For Chinese UniversitiesDocumento2 páginasA Sample of A Personal Statement For Chinese UniversitiesTommasoAinda não há avaliações

- Design Engineer Seeks PhD in Renewable EnergyDocumento2 páginasDesign Engineer Seeks PhD in Renewable EnergyTinku GopalAinda não há avaliações

- Statement of Purpose SampleDocumento1 páginaStatement of Purpose SampleArslan KhanAinda não há avaliações

- Genius is made through grit and knowledgeDocumento2 páginasGenius is made through grit and knowledgeVinit DaveAinda não há avaliações

- Statement of Purpose for MS in Computer ScienceDocumento2 páginasStatement of Purpose for MS in Computer ScienceMohammadRahemanAinda não há avaliações

- SOPDocumento2 páginasSOPgamidi67Ainda não há avaliações

- Statement of PurposeDocumento2 páginasStatement of PurposeGauri GanganiAinda não há avaliações

- Statement of Purpose Final DraftDocumento2 páginasStatement of Purpose Final Draftapi-420664028Ainda não há avaliações

- EssayDocumento3 páginasEssayARPIT GUPTA100% (2)

- 60 Super Tips To Write Statement of Purpose For Graduate SchoolDocumento14 páginas60 Super Tips To Write Statement of Purpose For Graduate SchoolMurali AnirudhAinda não há avaliações

- Sop PDFDocumento2 páginasSop PDFMANISH RAJ -Ainda não há avaliações

- Study Plan PDFDocumento3 páginasStudy Plan PDFAmieAinda não há avaliações

- SopDocumento7 páginasSopstoppopAinda não há avaliações

- Statement of PurposeDocumento2 páginasStatement of PurposeAmbika AzadAinda não há avaliações

- Statement of Purpose for Aerospace EngineeringDocumento2 páginasStatement of Purpose for Aerospace EngineeringHarsh ShethAinda não há avaliações

- Sop Cognitive Science IitkDocumento2 páginasSop Cognitive Science IitkAMITAinda não há avaliações

- Cloud ComputingDocumento12 páginasCloud ComputingcasanovakkAinda não há avaliações

- MS in SE Statement of Purpose by Vani MadhavaramDocumento3 páginasMS in SE Statement of Purpose by Vani MadhavaramNikhitha ReddyAinda não há avaliações

- Data Sop LeedsDocumento2 páginasData Sop LeedsTCR InnovationAinda não há avaliações

- Erasmus Mundus - Food For ThoughtDocumento12 páginasErasmus Mundus - Food For ThoughtwayaAinda não há avaliações

- Mba Sop SampleDocumento2 páginasMba Sop SampleRajshreyash AdhavAinda não há avaliações

- SopDocumento1 páginaSopdaneAinda não há avaliações

- Statement of Purpose CwruDocumento3 páginasStatement of Purpose CwruSuvraChakrabortyAinda não há avaliações

- Statement of Purpose: Name: Course OptedDocumento2 páginasStatement of Purpose: Name: Course Optedsoniya messalaAinda não há avaliações

- Statement of Purpose: Email Phone AddressDocumento2 páginasStatement of Purpose: Email Phone AddressmtechAinda não há avaliações

- Statement of Purpose Letter3 PDFDocumento2 páginasStatement of Purpose Letter3 PDFNitesh ShahAinda não há avaliações

- Statement of PurposeDocumento2 páginasStatement of PurposeVinayakh OmanakuttanAinda não há avaliações

- Sample SOPDocumento2 páginasSample SOPtushar3010@gmail.comAinda não há avaliações

- Dodda It Sop FinalDocumento2 páginasDodda It Sop FinalJammula Tej Mahanth AP19110020056Ainda não há avaliações

- VISVODAYA ENGINEERING COLLEGE, Affiliated To JNTU University, Anantapur, IndiaDocumento2 páginasVISVODAYA ENGINEERING COLLEGE, Affiliated To JNTU University, Anantapur, IndiapriyankaAinda não há avaliações

- Cyber Security Statement of PurposeDocumento3 páginasCyber Security Statement of PurposeRobin JamesAinda não há avaliações

- SopDocumento2 páginasSopNiroop Reddy0% (1)

- ResumeDocumento1 páginaResumeChristine XuAinda não há avaliações

- Vineeth NWMDocumento2 páginasVineeth NWMHinder StoneAinda não há avaliações

- General Arts and ScienceDocumento2 páginasGeneral Arts and Scienceapi-250601176Ainda não há avaliações

- SOPDocumento4 páginasSOPBhanukiran ParavastuAinda não há avaliações

- Sop MS CS 2Documento2 páginasSop MS CS 2Subhajit MondalAinda não há avaliações

- Edw Phase I Final Report1 12-16-05Documento50 páginasEdw Phase I Final Report1 12-16-05Mahfoz KazolAinda não há avaliações

- Student information systems literatureDocumento20 páginasStudent information systems literatureSherwin GipitAinda não há avaliações

- Development of An Alumni Database For A UniversityDocumento21 páginasDevelopment of An Alumni Database For A Universityvikkash arunAinda não há avaliações

- Computerized Student Management for St. Joseph Technical InstituteDocumento23 páginasComputerized Student Management for St. Joseph Technical InstitutetubenaweambroseAinda não há avaliações

- Educational Data Mining Techniques and Algorithms SurveyDocumento5 páginasEducational Data Mining Techniques and Algorithms SurveypriyaAinda não há avaliações

- Unit-3 MHD Part-2Documento12 páginasUnit-3 MHD Part-2amit621988Ainda não há avaliações

- Unit-3 MHD Part-1Documento12 páginasUnit-3 MHD Part-1amit621988Ainda não há avaliações

- Renewable Energy Fuel CellsDocumento14 páginasRenewable Energy Fuel Cellsamit621988100% (1)

- Zkad 003Documento16 páginasZkad 003amit621988Ainda não há avaliações

- Assignment 2Documento1 páginaAssignment 2amit621988Ainda não há avaliações

- Infosys TrainingDocumento5 páginasInfosys Trainingamit621988Ainda não há avaliações

- First Year FormatDocumento28 páginasFirst Year Formatamit621988Ainda não há avaliações

- Subject:: 2019-20 (EVEN) Subject Code: Roe-086 Section: Ee-ADocumento1 páginaSubject:: 2019-20 (EVEN) Subject Code: Roe-086 Section: Ee-Aamit621988Ainda não há avaliações

- SYLLABUSDocumento1 páginaSYLLABUSAnkit SrivastavaAinda não há avaliações

- APQRC Brochure - Jul17 PDFDocumento4 páginasAPQRC Brochure - Jul17 PDFamit621988Ainda não há avaliações

- First Year FormatDocumento28 páginasFirst Year Formatamit621988Ainda não há avaliações

- Assignment 2Documento1 páginaAssignment 2amit621988100% (1)

- CIA-III 2018-19 Even Kee 201Documento2 páginasCIA-III 2018-19 Even Kee 201amit621988Ainda não há avaliações

- JSS Academy Electrical Engineering AssignmentDocumento2 páginasJSS Academy Electrical Engineering Assignmentamit621988Ainda não há avaliações

- Are Smart Cities SmartDocumento7 páginasAre Smart Cities Smartamit621988Ainda não há avaliações

- Notice: Jss MahavidhyapeethaDocumento1 páginaNotice: Jss Mahavidhyapeethaamit621988Ainda não há avaliações

- J B Gupta-Unit-1 ProblemsDocumento4 páginasJ B Gupta-Unit-1 Problemsamit621988Ainda não há avaliações

- Gazet3 d09 NewDocumento254 páginasGazet3 d09 Newamit621988Ainda não há avaliações

- West Bengal Civil Service Exe. Etc. Exam 2018 NotificationDocumento7 páginasWest Bengal Civil Service Exe. Etc. Exam 2018 Notificationamit621988Ainda não há avaliações

- Electronics 08 00102 PDFDocumento21 páginasElectronics 08 00102 PDFamit621988Ainda não há avaliações

- Completed Done Developed Done Setupistobedone 80%: Research Area: Tentative TitleDocumento1 páginaCompleted Done Developed Done Setupistobedone 80%: Research Area: Tentative Titleamit621988Ainda não há avaliações

- Final Call IICPE2016Documento1 páginaFinal Call IICPE2016amit621988Ainda não há avaliações

- Duty Anti Ragging17-1Documento32 páginasDuty Anti Ragging17-1amit621988Ainda não há avaliações

- CO-po-pso NASDocumento3 páginasCO-po-pso NASamit621988Ainda não há avaliações

- IPC, NEE 453 Lab Co Po Pso MappingDocumento1 páginaIPC, NEE 453 Lab Co Po Pso Mappingamit621988Ainda não há avaliações

- JSS MAHAVIDYHAPEETHA Fluid Machinery CIA 1 Results and AnalysisDocumento18 páginasJSS MAHAVIDYHAPEETHA Fluid Machinery CIA 1 Results and Analysisamit621988Ainda não há avaliações

- CS - Enabling The Smart Grid in India-Puducherry Pilot ProjectDocumento3 páginasCS - Enabling The Smart Grid in India-Puducherry Pilot Projectdorababu2007Ainda não há avaliações

- AwardList EEE 409Documento12 páginasAwardList EEE 409amit621988Ainda não há avaliações

- The Peripatetic Observer 2003Documento10 páginasThe Peripatetic Observer 2003SUNY Geneseo Department of EnglishAinda não há avaliações

- Role of Audio Visual Aids in Developing Mathematical Skills at Secondary Level in District KohatDocumento11 páginasRole of Audio Visual Aids in Developing Mathematical Skills at Secondary Level in District KohatSampreety Gogoi100% (1)

- My Friend Narrative-180131055603Documento96 páginasMy Friend Narrative-180131055603เกลอ็ร์สัน ลาปร่าAinda não há avaliações

- Writing A Thesis StatementDocumento1 páginaWriting A Thesis Statementapi-228538378Ainda não há avaliações

- Topic OF M.ED. Dissertations (20210 - 11) : Department OF Education Aligarh Muslim University, AligarhDocumento3 páginasTopic OF M.ED. Dissertations (20210 - 11) : Department OF Education Aligarh Muslim University, AligarhAmrit VallahAinda não há avaliações

- Group IV - Activity 1 and 2Documento5 páginasGroup IV - Activity 1 and 2Morgan MangunayAinda não há avaliações

- Origami Is The Japanese Art of Paper FoldingDocumento3 páginasOrigami Is The Japanese Art of Paper FoldingAnonymous Ru61VbFjAinda não há avaliações

- WITS SRC Voters Guide PDFDocumento60 páginasWITS SRC Voters Guide PDFZaheer Ismail RanderaAinda não há avaliações

- November 2020 (v3) MS - Paper 2 CIE Biology IGCSEDocumento3 páginasNovember 2020 (v3) MS - Paper 2 CIE Biology IGCSEiman hafizAinda não há avaliações

- Recognition of Fuquay-Varina Middle School Heroes From December 8th IncidentDocumento3 páginasRecognition of Fuquay-Varina Middle School Heroes From December 8th IncidentKeung HuiAinda não há avaliações

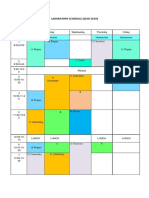

- Laboratory ScheduleDocumento3 páginasLaboratory ScheduleAyu ChristiAinda não há avaliações

- The Merciad, April 30, 2008Documento48 páginasThe Merciad, April 30, 2008TheMerciadAinda não há avaliações

- KSSR - Listening and SpeakingDocumento1 páginaKSSR - Listening and SpeakingNak Tukar Nama BlehAinda não há avaliações

- ARF Nursing School 05Documento7 páginasARF Nursing School 05Fakhar Batool0% (1)

- Sponsors of Literacy Essay #3Documento7 páginasSponsors of Literacy Essay #3Jenna RolfAinda não há avaliações

- Report On Learner'S Observed Values 1 2 3 4Documento2 páginasReport On Learner'S Observed Values 1 2 3 4jussan roaringAinda não há avaliações

- Mar 19-23 Lesson Plan 4 5th GradeDocumento3 páginasMar 19-23 Lesson Plan 4 5th Gradeapi-394190807Ainda não há avaliações

- MIT Department InfoDocumento20 páginasMIT Department InfonaitikkapadiaAinda não há avaliações

- The Dignity of Liberty: Pioneer Institute's 2016 Annual ReportDocumento48 páginasThe Dignity of Liberty: Pioneer Institute's 2016 Annual ReportPioneer InstituteAinda não há avaliações

- Background of The StudyDocumento22 páginasBackground of The Studythe girl in blackAinda não há avaliações

- Position Description FormDocumento3 páginasPosition Description FormGlen Mark MacarioAinda não há avaliações