
International Journal of Advanced and Innovative Research (2278-7844) / # 193 / Volume 4 Issue 4

Performance Comparison of Classification Algorithms Using WEKA

Sreenath M.¹, D. Sumathi²

¹PG Scholar, ²Assistant Professor, Department of Computer Science and Engineering, PPG Institute of Technology, Coimbatore, India

¹sreenath.m.91@gmail.com, ²sumicse.sai@gmail.com

Abstract: Data mining is the process of discovering knowledge by analysing large volumes of data from various perspectives and summarizing them into useful information. It is used to find hidden patterns in large data sets. Classification is one of the most important applications of data mining: classification techniques use supervised learning to assign data items to predefined class labels. The classifier builds a classification model from an input data set, and this model is then used to predict future data trends. Many classification algorithms are available, such as decision trees, k-nearest neighbour, Naive Bayes, neural networks (including back-propagation networks and multilayer perceptrons), multi-class classifiers and support vector machines. In this paper, four classification algorithms (OneR, Hoeffding Tree, Decision Stump and Alternating Decision Tree) are applied to the NSL-KDD data set and compared on accuracy, detection rate and false alarm rate using the Waikato Environment for Knowledge Analysis (WEKA).

Keywords: classifier algorithms, data mining, NSL-KDD, OneR, Hoeffding tree, decision stump, alternating decision tree, WEKA.

I. INTRODUCTION

Data mining is the technique of automated data analysis used to reveal previously undetected dependencies among data. Three of the major data mining techniques are classification, regression and clustering. This paper focuses on classification because it is among the most important of these processes when a very large database has to be analysed. The Weka tool is used for classification. Classification [1] is one of the most important data mining techniques; it builds classification models from an input data set, and these models are used to predict future data trends [2, 3]. Once classification is complete, our knowledge about the data becomes richer and easier to use: logic can be deduced from the classified data, data retrieval becomes faster and gives better results, and new data can be sorted easily.

There are many data mining tools available [4, 5]. In this paper we use the Weka data mining tool, an open-source tool developed in Java [6]. It contains tools for data preprocessing, clustering, classification, visualization, association rules and regression. It supports not only data mining algorithms but also meta-learners such as bagging and boosting, as well as data preparation. Compared with Orange, Tanagra and KNIME, the Weka toolkit achieved the highest applicability [4]. When Weka is used for classification, performance is tested with the cross-validation test mode rather than the percentage-split test mode [7].

II. RELATED WORKS

Data mining is the method of extracting information from vast data sets. Its techniques apply advanced computation to uncover unknown relations and summarize the results of analysing the data set, so that these relations become clear and comprehensible. Hu et al. [8] conducted an experimental comparison of C4.5, LibSVM, AdaBoost C4.5, Bagging C4.5 and Random Forest on seven microarray cancer data sets. They concluded that C4.5 performed best among all the algorithms, and also found that data preprocessing and cleansing improve the effectiveness of classification algorithms. Shin et al. [9] compared C4.5 and Naive Bayes and concluded that C4.5 outperforms Naive Bayes. Sharma [10] conducted experiments in the Weka environment comparing four algorithms, namely ID3, J48, Simple CART and Alternating Decision Tree (ADTree), on a spam email data set in terms of classification accuracy. According to his simulation results, the J48 classifier performs better than ID3, CART and ADTree in terms of classification accuracy. Abdul Hamid M. Ragab et al. [11] compared classification algorithms for students' college enrolment approval using data mining. They found that C4.5 gives the best performance, with the highest accuracy and the lowest absolute errors, followed by PART, Random Forest, Multilayer Perceptron and Naive Bayes, respectively.

Table I outlines a few recent works on the performance of classification algorithms and the application areas of the experimental data sets used. It illustrates many data mining algorithms that can be applied to quite different application areas.

2015 IJAIR. All Rights Reserved


TABLE I
A SUMMARY OF RELATED DATA MINING ALGORITHMS AND THE APPLICATION DATA SETS USED

| Year | Authors | Data mining algorithms | Data set |
|---|---|---|---|
| 2010 | Nesreen K. Ahmed, Amir F. Atiya, Neamat El Gayar, Hisham El-Shishiny [12] | MLP, BNN, RBF, K-Nearest Neighbor Regression | M3 competition data |
| 2011 | S. Aruna, Dr S.P. Rajagopalan and L.V. Nandakishore [13] | RBF networks, Naive Bayes, J48, CART, SVM-RBF kernel | WBC, WDBC, Pima Indians Diabetes database |
| 2011 | R. Kishore Kumar, G. Poonkuzhali, P. Sudhakar [14] | ID3, J48, Simple CART and Alternating Decision Tree (ADTree) | Spam data |
| 2012 | Abdullah H. Wahbeh, Mohammed Al-Kabi [15] | C4.5, SVM, Naive Bayes | Arabic text |
| 2012 | Rohit Arora, Suman [16] | C4.5, MLP | Diabetes and Glass |
| 2013 | S. Vijayarani, M. Muthulakshmi [17] | Attribute Selected Classifier, Filtered Classifier, LogitBoost | Classifying computer files |
| 2013 | Murat Koklu and Yavuz Unal [18] | MLP, J48 and Naive Bayes | Classifying computer files |
| 2014 | Devendra Kumar Tiwary [19] | Decision Tree (DT), Naive Bayes (NB), Artificial Neural Networks (ANN), Support Vector Machine (SVM) | Credit Card |

III. METHODOLOGY

We used an Intel Core i3 processor platform with 4 GB of memory, the Windows 7 Ultimate operating system and 500 GB of secondary storage. In all the experiments, we used Weka 3.7.11 to find the performance characteristics on the input data set.

A. Weka interface

Weka (Waikato Environment for Knowledge Analysis) is widely used machine learning software written in Java, originally developed at the University of Waikato, New Zealand. The Weka suite contains a collection of algorithms and visualization tools for data analysis, with graphical user interfaces for easy access to this functionality.

Weka is employed in many different application areas, particularly for academic purposes and research. Weka has numerous benefits:

- It is freely available under the GNU General Public License.
- It is portable, since it is implemented entirely in the Java programming language and therefore runs on almost any platform.
- It provides a large collection of data preprocessing and modelling techniques.
- It is easy to use because of its graphical user interfaces.

Weka supports several data mining tasks, namely data preprocessing, clustering, classification, regression, feature selection and visualization. All of Weka's techniques are based on the assumption that the data is available as a single flat file or relation, where each data point is described by a fixed number of attributes.
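Weka's native input format, ARFF, makes this single-relation assumption explicit: a header declares every attribute once, and each data line supplies exactly one value per attribute. The sketch below uses a hypothetical helper, `to_arff`, which is not part of Weka, to write such a file from Python rows. It assumes Python 3.9+ and attribute names that need no quoting (no spaces or special characters).

```python
from typing import Sequence


def to_arff(relation: str,
            attributes: Sequence[tuple[str, str]],
            rows: Sequence[Sequence[object]]) -> str:
    """Render one relation as ARFF text (hypothetical helper, not a Weka API).

    attributes: (name, type) pairs, where type is "numeric" or a nominal
    spec such as "{normal,anomaly}". Names are assumed to need no quoting.
    """
    lines = [f"@relation {relation}", ""]
    for name, kind in attributes:
        lines.append(f"@attribute {name} {kind}")
    lines += ["", "@data"]
    for row in rows:
        # The relational assumption: a fixed number of attributes per instance.
        if len(row) != len(attributes):
            raise ValueError("each instance needs exactly one value per attribute")
        lines.append(",".join(str(value) for value in row))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Two made-up instances; the attribute names are borrowed from NSL-KDD.
    print(to_arff(
        "toy_nsl_kdd",
        [("duration", "numeric"),
         ("protocol_type", "{tcp,udp,icmp}"),
         ("class", "{normal,anomaly}")],
        [[0, "tcp", "normal"], [2, "udp", "anomaly"]],
    ))
```

Rejecting rows of the wrong length mirrors the fixed-attribute requirement: a record that does not fit the declared relation cannot be expressed in this format at all.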

B. Data set

The NSL-KDD data set is used for evaluation. It was proposed to resolve some of the inherent problems of the KDD CUP'99 data set, which is the most widely used data set for anomaly detection. Tavallaee et al. conducted a statistical analysis of KDD CUP'99 and found two important issues that greatly affect the performance of evaluated systems and result in a very poor evaluation of anomaly detection approaches. To solve these problems, they proposed a new data set, NSL-KDD, which consists of selected records of the complete KDD data set [20].

NSL-KDD has the following advantages over the original KDD data set. First, it does not include redundant records in the training set, so classifiers are not biased towards more frequent records. Second, the number of records selected from each difficulty level group is inversely proportional to the percentage of records in the original KDD data set. As a result, the classification rates of different machine learning methods vary over a wider range, which makes it easier to evaluate different learning techniques accurately. Third, the numbers of records in the training and test sets are reasonable, which makes it affordable to run the experiments on the complete set without having to randomly select a small portion. Consequently, the evaluation results of different research works will be consistent and comparable.
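The first advantage, removing redundant records so that frequent records cannot dominate training, can be illustrated with a short sketch. It keeps the first occurrence of every exact duplicate and compares class counts before and after. The function name `drop_redundant` and the toy records are illustrative assumptions; this is not the procedure Tavallaee et al. used to build NSL-KDD, which also involves the difficulty-level sampling described above.

```python
from collections import Counter
from collections.abc import Hashable
from typing import Iterable, TypeVar

R = TypeVar("R", bound=Hashable)


def drop_redundant(records: Iterable[R]) -> list[R]:
    """Return records with exact duplicates removed, preserving first-seen order."""
    seen: set[R] = set()
    unique: list[R] = []
    for record in records:
        if record not in seen:
            seen.add(record)
            unique.append(record)
    return unique


if __name__ == "__main__":
    # Made-up (protocol, service, label) records; the anomaly row is repeated.
    train = [("icmp", "ecr_i", "anomaly")] * 3 + [("tcp", "http", "normal")]
    print(Counter(label for *_, label in train))
    print(Counter(label for *_, label in drop_redundant(train)))
```

Before deduplication the repeated record outweighs the other class three to one; afterwards each distinct record counts once, which is the bias the NSL-KDD authors set out to remove.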

C. Classification algorithms

The following classifier algorithms are used for the performance comparison on the NSL-KDD data set.

(a) OneR

OneR [21], short for "One Rule", is a simple yet accurate classification algorithm that generates one rule for each predictor in the data and then selects the rule with the smallest total error as its "one rule". To make a rule for a predictor, a frequency table is constructed for that predictor against the target. OneR has been shown to produce rules only slightly less accurate than state-of-the-art classification algorithms, while producing rules that are easy for humans to interpret.

(b) Hoeffding Tree

A Hoeffding tree [22] is an incremental, anytime decision tree induction algorithm capable of learning from data streams, assuming that the distribution generating the examples does not change over time. Hoeffding trees exploit the fact that a small sample is often enough to choose the optimal splitting attribute. This is justified mathematically by the Hoeffding bound, which quantifies the number of observations needed to estimate a statistic within a prescribed precision: for a real-valued random variable with range $R$, after $n$ independent observations the true mean lies within $\epsilon = \sqrt{R^2 \ln(1/\delta) / (2n)}$ of the observed mean with probability $1 - \delta$. One feature of Hoeffding trees not shared by other incremental decision tree learners is that they have sound guarantees of performance.

(c) Decision Stump

A decision stump [23] is a machine learning model consisting of a one-level decision tree, that is, a decision tree with one internal node (the root) that is immediately connected to its leaves. The predictions made by a decision stump are based on just one input feature, which is why decision stumps are also called 1-rules. Decision stumps are often used as base learners in machine learning ensemble techniques such as boosting and bagging. For example, the Viola-Jones face detection algorithm employs AdaBoost with decision stumps as weak learners.

(d) Alternating Decision Tree

An alternating decision tree (ADTree) [24] combines the simplicity of a single decision tree with the effectiveness of boosting. Its knowledge representation combines tree stumps, a common model deployed in boosting, into a decision-tree-like structure. The different branches are no longer mutually exclusive. The root node is a prediction node and holds just a numeric score. The next layer consists of decision nodes, which are essentially a collection of decision tree stumps. The following layer then consists of prediction nodes, and so on, alternating between prediction nodes and decision nodes.

A model is deployed by identifying the possibly multiple paths from the root node to the leaves through the alternating decision tree that correspond to the values of the variables of the observation to be classified. The observation's classification score is the sum of the prediction values along the corresponding paths.

IV. CLASSIFIER PERFORMANCE MEASURES

A confusion matrix contains information about the actual and predicted classifications made by a classification system. The performance of such systems is commonly evaluated using the data in the matrix. Fig. 1 shows the confusion matrix.

Fig. 1 Confusion matrix

The entries in the confusion matrix have the following meaning in the context of our study:

- a is the number of correct predictions that an instance is negative,
- b is the number of incorrect predictions that an instance is positive,
- c is the number of incorrect predictions that an instance is negative, and
- d is the number of correct predictions that an instance is positive.

The following metrics are used for the evaluation on the data set.

Accuracy: the proportion of the total number of predictions that were correct, determined using the equation:

$$\text{Accuracy} = \frac{a + d}{a + b + c + d}$$

Detection Rate: the proportion of the predicted positive cases that were correct, calculated using the equation:

$$\text{Detection Rate} = \frac{d}{b + d}$$

False Alarm Rate: the proportion of negative cases that were incorrectly classified as positive, calculated using the equation:

$$\text{False Alarm Rate} = \frac{b}{a + b}$$
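The three measures can be computed directly from the cells a, b, c and d. The sketch below is illustrative; the class and method names are our own, not WEKA's. Note that the detection rate as defined here, the share of predicted positives that are correct, is the quantity usually called precision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Binary confusion matrix using the a, b, c, d cells defined above."""
    a: int  # correct predictions that an instance is negative (true negatives)
    b: int  # incorrect predictions that an instance is positive (false positives)
    c: int  # incorrect predictions that an instance is negative (false negatives)
    d: int  # correct predictions that an instance is positive (true positives)

    def accuracy(self) -> float:
        # (a + d) / (a + b + c + d)
        return (self.a + self.d) / (self.a + self.b + self.c + self.d)

    def detection_rate(self) -> float:
        # d / (b + d): correct share of all predicted positives.
        return self.d / (self.b + self.d)

    def false_alarm_rate(self) -> float:
        # b / (a + b): share of actual negatives flagged as positive.
        return self.b / (self.a + self.b)


if __name__ == "__main__":
    cm = ConfusionMatrix(a=90, b=10, c=5, d=95)  # made-up counts
    print(cm.accuracy(), cm.detection_rate(), cm.false_alarm_rate())
```

With these made-up counts the accuracy is 185/200 = 0.925 and the false alarm rate is 10/100 = 0.1. Each method raises `ZeroDivisionError` when its denominator is zero, for example when no instance is predicted positive.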

V. EXPERIMENTAL RESULTS AND COMPARATIVE ANALYSIS

We investigated the performance of the selected classification algorithms. The classifications are done using 10-fold cross-validation. In WEKA, all data is considered as instances, and the features within the data are referred to as attributes. The simulation results are presented in separate bar charts for easier analysis and evaluation. Table II shows the performance of the classifier algorithms on the NSL-KDD data set.
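The 10-fold cross-validation protocol can be sketched in a few lines: the instances are shuffled once and dealt into k folds, each fold is classified by a model trained on the other k - 1 folds, and accuracy is pooled over all held-out predictions. This is a simplified illustration with plain random folds (no stratification). The function names, the seed and the majority-class trainer are assumptions for demonstration, not WEKA's implementation.

```python
import random
from collections import Counter
from typing import Callable, Sequence

Model = Callable[[object], object]
Trainer = Callable[[list, list], Model]


def k_fold_indices(n: int, k: int = 10, seed: int = 1) -> list[list[int]]:
    """Shuffle indices 0..n-1 and deal them into k folds of near-equal size."""
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    order = list(range(n))
    random.Random(seed).shuffle(order)
    return [order[i::k] for i in range(k)]


def cross_validate(xs: Sequence, ys: Sequence, train: Trainer,
                   k: int = 10, seed: int = 1) -> float:
    """Pooled accuracy of k-fold cross-validation."""
    correct = 0
    for fold in k_fold_indices(len(xs), k, seed):
        held_out = set(fold)
        train_x = [x for i, x in enumerate(xs) if i not in held_out]
        train_y = [y for i, y in enumerate(ys) if i not in held_out]
        model = train(train_x, train_y)  # fit only on the other k - 1 folds
        correct += sum(model(xs[i]) == ys[i] for i in fold)
    return correct / len(xs)


def train_majority(xs: list, ys: list) -> Model:
    """Toy trainer: always predict the most frequent training label."""
    label = Counter(ys).most_common(1)[0][0]
    return lambda x: label


if __name__ == "__main__":
    ys = ["normal"] * 7 + ["anomaly"] * 3
    print(cross_validate(list(range(10)), ys, train_majority))  # prints 0.7
```

Because every instance is held out exactly once, each one contributes one test prediction, which is why cross-validation is usually preferred over a single percentage split.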

TABLE II
PERFORMANCE OF CLASSIFIER ALGORITHMS

| Classifier | Accuracy | Detection rate | False alarm rate |
|---|---|---|---|
| oneR | 0.94615 | 0.94954 | 0.06714 |
| Decision Stump | 0.81733 | 0.94964 | 0.05025 |
| Hoeffding Tree | 0.95120 | 0.95515 | 0.05952 |
| ADTree | 0.95094 | 0.94592 | 0.07321 |

Fig. 2 shows the accuracy of the classifiers on the NSL-KDD data set. The results show that Hoeffding Tree is the best classifier, followed by ADTree, oneR and Decision Stump.

Fig. 2 Accuracy of classifiers

Fig. 3 shows the detection rate of the classifiers on the NSL-KDD data set. The experimental results show that Hoeffding Tree is the best classifier, followed by Decision Stump, oneR and ADTree.

Fig. 3 Detection Rate of classifiers

Fig. 4 shows the false alarm rate of the classifiers on the NSL-KDD data set. The results show that Decision Stump is the best classifier (lowest false alarm rate), followed by Hoeffding Tree, oneR and ADTree.

Fig. 4 False Alarm Rate of classifiers
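These rankings follow from Table II once each measure is read in the right direction: higher is better for accuracy and detection rate, lower is better for false alarm rate. A small sketch that re-derives them from the table values (the dictionary layout and the function name `rank` are our own):

```python
# Values copied from Table II: (accuracy, detection rate, false alarm rate).
TABLE_II = {
    "oneR": (0.94615, 0.94954, 0.06714),
    "Decision Stump": (0.81733, 0.94964, 0.05025),
    "Hoeffding Tree": (0.95120, 0.95515, 0.05952),
    "ADTree": (0.95094, 0.94592, 0.07321),
}
# metric name -> (column in TABLE_II, whether a higher value is better)
METRICS = {
    "accuracy": (0, True),
    "detection rate": (1, True),
    "false alarm rate": (2, False),
}


def rank(metric: str) -> list[str]:
    """Classifiers ordered best-first for the given metric."""
    column, higher_is_better = METRICS[metric]
    return sorted(TABLE_II, key=lambda name: TABLE_II[name][column],
                  reverse=higher_is_better)


if __name__ == "__main__":
    for metric in METRICS:
        print(f"{metric}: {' > '.join(rank(metric))}")
```

Laying the numbers out this way also shows how close some placings are: Decision Stump and oneR differ by only 0.0001 in detection rate, and Hoeffding Tree leads ADTree in accuracy by 0.00026.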

VI. CONCLUSION

Four classification algorithms are investigated in this paper with NSL-KDD as the data set: Hoeffding Tree, ADTree, oneR and Decision Stump. A comparative study and analysis of the classification measures, namely accuracy, detection rate and false alarm rate, was carried out by simulation using the Weka toolkit. The experimental results show that Hoeffding Tree gives the best performance in terms of accuracy and detection rate. In terms of false alarm rate, however, Decision Stump is the best performer.


REFERENCES

[1] O. Serhat and A. C. Yilamaz, "Classification and Prediction in a Data Mining Application," Journal of Marmara for Pure and Applied Sciences, Vol. 18, pp. 159-174.

[2] H. Kaushik and B. G. Raviya, "Performance Evaluation of Different Data Mining Classification Algorithm Using WEKA," Indian Journal of Research (PARIPEX), Vol. 2, 2013.

[3] W.-H. Au, K. C. C. Chan and X. Yao, "A Novel Evolutionary Data Mining Algorithm with Applications to Churn Prediction," IEEE Transactions on Evolutionary Computation, Vol. 7, pp. 532-545, 2003.

[4] G. Karina, S. M. Miquel and S. Beatriz, "Tools for Environmental Data Mining and Intelligent Decision Support," International Congress on Environmental Modelling and Software, 2012.

[5] C. Giraud-Carrier and O. Povel, "Characterising Data Mining Software," Intelligent Data Analysis, pp. 181-192, 2003.

[6] H. Mark, F. Eibe, H. Geoffrey, P. Bernhard, R. Peter and H. W. Ian, "The WEKA Data Mining Software: An Update," SIGKDD Explorations, Vol. 11, 2009.

[7] H. W. Abdullah, A. A. Qasem, N. A. Mohammed and M. A. Emad, "A Comparison Study between Data Mining Tools over some Classification Methods," International Journal of Advanced Computer Science and Applications, pp. 18-26, 2012.

[8] H. Hong, L. Jiuyong, P. Ashley, W. Hua and D. Grant, "A Comparative Study of Classification Methods for Microarray Data Analysis," in Australasian Data Mining Conference, 2006.

[9] C. T. My, S. Dongil and S. Dongkyoo, "A Comparative Study of Medical Data Classification Methods Based on Decision Tree and Bagging Algorithms," in Eighth IEEE International Conference on Dependable, Autonomic and Secure Computing, 2009.

[10] K. S. Aman, "A Comparative Study of Classification Algorithms for Spam Email Data Analysis," International Journal on Computer Science and Engineering, Vol. 3, pp. 1890-1895, 2011.

[11] A. H. M. Ragab et al., "A Comparative Analysis of Classification Algorithms for Students College Enrollment Approval Using Data Mining," Proceedings of the 2014 Workshop on Interaction Design in Educational Environments, 2014.

[12] N. K. Ahmed et al., "An Empirical Comparison of Machine Learning Models for Time Series Forecasting," Econometric Reviews, Vol. 29, pp. 594-621, 2010.

[13] S. Aruna, S. P. Rajagopalan and L. V. Nandakishore, "An Empirical Comparison of Supervised Learning Algorithms in Disease Detection," International Journal of Information Technology Convergence and Services, Vol. 1, pp. 81-92, 2011.

[14] R. Kishore Kumar, G. Poonkuzhali and P. Sudhakar, "Comparative Study on Email Spam Classifier using Data Mining Techniques," Proceedings of the International MultiConference of Engineers and Computer Scientists, Vol. 1, 2012.

[15] H. W. Abdullah and A. K. Mohamedi, "Comparative Assessment of the Performance of Three," Basic Sci. & Eng., Vol. 21, pp. 15-28, 2012.

[16] A. Rohit and Suman, "Comparative Analysis of Classification Algorithms on Different Datasets using WEKA," International Journal of Computer Applications, Vol. 54, pp. 21-25, 2012.

[17] S. Vijayarani and M. Muthulakshmi, "Comparative Study on Classification Meta Algorithms," International Journal of Innovative Research in Computer and Communication Engineering, Vol. 1, pp. 1768-1774, 2013.

[18] K. Murat and U. Yavuz, "Analysis of a Population of Diabetic Patients Databases with Classifiers," International Journal of Medical, Pharmaceutical Science and Engineering, Vol. 7, pp. 176-178, 2013.

[19] K. T. Devendra, "A Comparative Study of Classification Algorithms for Credit Card Approval using WEKA," GALAXY International Interdisciplinary Research Journal, Vol. 2, pp. 165-174, 2014.

[20] M. Tavallaee, E. Bagheri, W. Lu and A. Ghorbani, "A Detailed Analysis of the KDD CUP 99 Data Set," IEEE International Conference on Computational Intelligence in Security and Defence Applications, pp. 53-58, 2009.

[21] R. C. Holte, "Very Simple Classification Rules Perform Well on Most Commonly Used Datasets," Machine Learning, Vol. 11, pp. 63-90, 1993.

[22] G. Hulten, S. Laurie and D. Pedro, "Mining Time-Changing Data Streams," Proceedings of the Seventh ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2001.

[23] W. Iba and P. Langley, "Induction of One-Level Decision Trees," in Proceedings of the Ninth International Conference on Machine Learning, pp. 233-240, 1992.

[24] Y. Freund and L. Mason, "The Alternating Decision Tree Learning Algorithm," in Proceedings of the Sixteenth International Conference on Machine Learning, pp. 124-133, 1999.

Você também pode gostar

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeNo EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeNota: 4 de 5 estrelas4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreNo EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreNota: 4 de 5 estrelas4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItNo EverandNever Split the Difference: Negotiating As If Your Life Depended On ItNota: 4.5 de 5 estrelas4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceNo EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceNota: 4 de 5 estrelas4/5 (895)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersNo EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersNota: 4.5 de 5 estrelas4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureNo EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureNota: 4.5 de 5 estrelas4.5/5 (474)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)No EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Nota: 4.5 de 5 estrelas4.5/5 (121)

- The Emperor of All Maladies: A Biography of CancerNo EverandThe Emperor of All Maladies: A Biography of CancerNota: 4.5 de 5 estrelas4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingNo EverandThe Little Book of Hygge: Danish Secrets to Happy LivingNota: 3.5 de 5 estrelas3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyNo EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyNota: 3.5 de 5 estrelas3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)No EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Nota: 4 de 5 estrelas4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaNo EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaNota: 4.5 de 5 estrelas4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryNo EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryNota: 3.5 de 5 estrelas3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnNo EverandTeam of Rivals: The Political Genius of Abraham LincolnNota: 4.5 de 5 estrelas4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaNo EverandThe Unwinding: An Inner History of the New AmericaNota: 4 de 5 estrelas4/5 (45)

- DLL Earth and Life Science Feb 27-Mar 3Documento4 páginasDLL Earth and Life Science Feb 27-Mar 3Jonas Miranda Cabusbusan100% (2)

- 10th Prelims 1st Stage 2022 Question Paper With Answer Key 1Documento17 páginas10th Prelims 1st Stage 2022 Question Paper With Answer Key 1Sreenath MuraliAinda não há avaliações

- 10th Prelims Phase 4 Question Paper With Answer KeyDocumento15 páginas10th Prelims Phase 4 Question Paper With Answer KeySreenath MuraliAinda não há avaliações

- 10th Prelims Stage 5 Question Paper & Answer KeyDocumento15 páginas10th Prelims Stage 5 Question Paper & Answer KeySreenath MuraliAinda não há avaliações

- 10th Level Preliminary Phase 3 Question & Ans MarkedDocumento17 páginas10th Level Preliminary Phase 3 Question & Ans MarkedSreenath MuraliAinda não há avaliações

- 10th Prelims Phase 2 Question Paper With Answer MarkedDocumento15 páginas10th Prelims Phase 2 Question Paper With Answer MarkedSreenath MuraliAinda não há avaliações

- A Comprehensive Review On Intrusion Detection SystemsDocumento4 páginasA Comprehensive Review On Intrusion Detection SystemsSreenath MuraliAinda não há avaliações

- Contextualizing Language Lessons - Ecrif & ContextDocumento21 páginasContextualizing Language Lessons - Ecrif & ContextmonikgtrAinda não há avaliações

- Veer Surendra Sai University of Technology: Burla NoticeDocumento3 páginasVeer Surendra Sai University of Technology: Burla NoticeTathagat TripathyAinda não há avaliações

- Tugas PAI Salsabila MaharaniDocumento9 páginasTugas PAI Salsabila MaharanislsblraniAinda não há avaliações

- CAPM® Certification: Lesson 1-IntroductionDocumento8 páginasCAPM® Certification: Lesson 1-IntroductionK RamanadhanAinda não há avaliações

- AMDPG01 Exemption Fees For Research StudentsDocumento1 páginaAMDPG01 Exemption Fees For Research StudentsMuizzudin AzaliAinda não há avaliações

- Grade 8 - June 4 Unlocking of DifficultiesDocumento4 páginasGrade 8 - June 4 Unlocking of DifficultiesRej Panganiban100% (1)

- Teaching Music in Elementary Grades: Preliminary Examination SY: 2021-2022 Test 1Documento3 páginasTeaching Music in Elementary Grades: Preliminary Examination SY: 2021-2022 Test 1Saturnino Jr Morales FerolinoAinda não há avaliações

- Online Learning: A Boon or A Bane?Documento4 páginasOnline Learning: A Boon or A Bane?Roshena sai Dela torreAinda não há avaliações

- General Bulletin 38-2022 - Teachers' Salary Scale 2021-2022Documento3 páginasGeneral Bulletin 38-2022 - Teachers' Salary Scale 2021-2022team TSOTAREAinda não há avaliações

- Social Science Teacher ResumeDocumento2 páginasSocial Science Teacher Resumeapi-708711422Ainda não há avaliações

- Interpersonal IntelligenceDocumento3 páginasInterpersonal Intelligenceangelica padilloAinda não há avaliações

- Learning Plan: (Mapeh - Music)Documento11 páginasLearning Plan: (Mapeh - Music)Shiela Mea BlancheAinda não há avaliações

- Xxxxx-Case BDocumento1 páginaXxxxx-Case BCharlene DalisAinda não há avaliações

- 8607 - Field Notes Fazi KhanDocumento15 páginas8607 - Field Notes Fazi KhanDaud KhanAinda não há avaliações

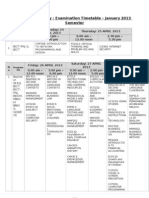

- Examination Timetable - January 2013 SemesterDocumento4 páginasExamination Timetable - January 2013 SemesterWhitney CantuAinda não há avaliações

- Syllabus Uapp697 WhiteDocumento6 páginasSyllabus Uapp697 Whiteapi-431984277Ainda não há avaliações

- 20 Rules of Formulating Knowledge in LearningDocumento14 páginas20 Rules of Formulating Knowledge in LearningSipi SomOfAinda não há avaliações

- Teaching Strategies GOLD Assessment Touring Guide WEBDocumento36 páginasTeaching Strategies GOLD Assessment Touring Guide WEBdroyland8110% (1)

- Group 4 - BSNED - 3 (Portfolio Assessment)Documento67 páginasGroup 4 - BSNED - 3 (Portfolio Assessment)Magdaong, Louise AnneAinda não há avaliações

- Teacher & Aide Evaluation 06-CtcDocumento4 páginasTeacher & Aide Evaluation 06-CtccirclestretchAinda não há avaliações

- Homeroom Guidance: Quarter 1 - Module 1: Decoding The Secrets of Better Study HabitsDocumento10 páginasHomeroom Guidance: Quarter 1 - Module 1: Decoding The Secrets of Better Study HabitsMA TEODORA CABEZADA100% (1)

- He - Keeping Our Classroom CleanDocumento19 páginasHe - Keeping Our Classroom CleanNoumaan Ul Haq SyedAinda não há avaliações

- Science 4-Q4-SLM16Documento15 páginasScience 4-Q4-SLM16Ana ConseAinda não há avaliações

- IJRR74Documento8 páginasIJRR74LIRA LYN T. REBANGCOSAinda não há avaliações

- Tesol Portfolio - Teaching Philosophy FinalDocumento6 páginasTesol Portfolio - Teaching Philosophy Finalapi-361319051Ainda não há avaliações

- Pry 1 Week 1Documento1 páginaPry 1 Week 1Segun MajekodunmiAinda não há avaliações

- Toaz - Info Grade 3 3rd Quarter DLP in English Final PDF PRDocumento330 páginasToaz - Info Grade 3 3rd Quarter DLP in English Final PDF PRhazel atacadorAinda não há avaliações

- Name:: Year 7 Performing Arts News Report Project EvaluationDocumento2 páginasName:: Year 7 Performing Arts News Report Project EvaluationajpAinda não há avaliações

- QRT4 WEEK 8 TG Lesson 101Documento9 páginasQRT4 WEEK 8 TG Lesson 101Madonna Arit Matulac67% (3)