Journal of Information, Control and Management Systems, Vol. 4, (2006), No. 2

LEARNING FUZZY RULES FROM FUZZY DECISION TREES

Karol MATIAŠKO, Ján BOHÁČIK, Vitaly LEVASHENKO, Štefan KOVALÍK

University of Žilina, Faculty of Management Science and Informatics, Slovak Republic

e-mail: Karol.Matiasko@fri.utc.sk, Jan.Bohacik@fri.utc.sk, Vitaly.Levashenko@fri.utc.sk, Stefan.Kovalik@fri.utc.sk

Abstract

Classification rules are an important tool for discovering knowledge from databases. Integrating fuzzy logic algorithms into databases allows us to reduce the uncertainty connected with the data and to increase the accuracy of the discovered knowledge. In this paper, we analyze some possible variants of making classification rules from a given fuzzy decision tree based on cumulative information. We compare their classification accuracy with the accuracy reached by statistical methods and by other fuzzy classification rules.

Keywords: fuzzy rules, fuzzy decision trees, classification, fuzzy logic, decision making, machine learning

1 INTRODUCTION

Data repositories are now growing quickly and contain huge amounts of data from commercial, scientific, and other domains. According to some estimates, the amount of data in the world doubles every twenty months [7]. Because of this, information and database systems are widely used. The current generation of database systems is based mainly on a small number of primitives of the Structured Query Language (SQL). Database systems usually use relational databases, which consist of relations (tables) [11]. The tables have attributes in columns and instances in rows. As the data grow, these tables contain more and more hidden information that can no longer be found and transformed into knowledge by classical SQL queries. One approach to extracting knowledge from the tables is to search for dependences in the data.

There are many ways to describe the dependences among data: statistical methods (Naïve Bayes Classifier, k-Nearest Neighbor Classifier, etc.), neural networks, decision tables, decision trees, and classification rules.


The Naïve Bayes Classifier (NBC) represents each instance as an M-dimensional vector of attribute values $[a_1, a_2, \ldots, a_M]$. Given that there are $m_c$ classes $c_1, \ldots, c_k, \ldots, c_{m_c}$, the classifier predicts that a new instance (an unknown vector $X = [a_1, a_2, \ldots, a_M]$) belongs to the class $c_k$ having the highest posterior probability conditioned on $X$. In other words, $X$ is assigned to class $c_k$ if and only if $P(c_k \mid X) > P(c_j \mid X)$ for $1 \le j \le m_c$ and $j \ne k$. NBC is very simple and requires only a single scan of the data, thereby providing high accuracy and speed for large databases. Its performance is comparable to that of decision trees and neural networks. However, inaccuracies arise due to a) the simplifying assumptions involved and b) a lack of available probability data or knowledge about the underlying probability distribution [13, 20].
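The decision rule above can be illustrated with a minimal sketch. It assumes categorical attributes, estimates the probabilities by simple counting in one pass over the data, and applies no smoothing; the function names `train_nbc` and `classify_nbc` and the toy data are hypothetical and are not taken from the paper.

```python
from collections import Counter, defaultdict

def train_nbc(instances, labels):
    """Count class frequencies and per-attribute value frequencies in one pass."""
    class_counts = Counter(labels)
    # value_counts[(attribute index, value, class)] = number of matching instances
    value_counts = defaultdict(int)
    for x, c in zip(instances, labels):
        for i, a in enumerate(x):
            value_counts[(i, a, c)] += 1
    return class_counts, value_counts

def classify_nbc(x, class_counts, value_counts):
    """Return the class c_k with the highest unnormalized posterior P(c_k | X)."""
    total = sum(class_counts.values())
    scores = {}
    for c, n_c in class_counts.items():
        score = n_c / total  # prior P(c_k)
        for i, a in enumerate(x):
            # Conditional P(a_i | c_k), estimated by counting (no smoothing).
            score *= value_counts[(i, a, c)] / n_c
        scores[c] = score
    return max(scores, key=scores.get)

# Hypothetical toy data: (Outlook, Temp) -> Plan
instances = [("sunny", "hot"), ("sunny", "mild"), ("rain", "cool"), ("rain", "mild")]
labels = ["swimming", "swimming", "weight lifting", "weight lifting"]
class_counts, value_counts = train_nbc(instances, labels)
print(classify_nbc(("sunny", "mild"), class_counts, value_counts))
```

The product over attributes is where the "naïve" independence assumption enters; it is also the source of the inaccuracies mentioned in a).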

The k-Nearest Neighbor Classifier (k-NN) assumes that all instances correspond to points in the n-dimensional space $R^n$. During learning, all points with known classes are remembered. When a new point is classified, the k nearest points to the new point are found and used, each with a weight, to determine the new point's class. To increase classification accuracy, greater weights are given to closer points [12]. For large k, the algorithm is computationally more expensive than a decision tree or a neural network and requires efficient indexing. When there are many instances with known classes, their number needs to be reduced.
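A minimal sketch of distance-weighted k-NN follows. The text only says that closer points receive greater weights; the inverse squared Euclidean distance used here is one common choice and is an assumption, as are the function name `knn_classify` and the toy points.

```python
import math
from collections import defaultdict

def knn_classify(train_points, train_labels, query, k=3):
    """Classify `query` by a distance-weighted vote of its k nearest training points."""
    # Sort all remembered points by Euclidean distance to the query point.
    neighbours = sorted(
        (math.dist(p, query), label) for p, label in zip(train_points, train_labels)
    )[:k]
    votes = defaultdict(float)
    for distance, label in neighbours:
        # Closer points get larger weights; the small constant avoids division by zero.
        votes[label] += 1.0 / (distance ** 2 + 1e-9)
    return max(votes, key=votes.get)

# Hypothetical toy data in R^2
points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (6.0, 5.0)]
labels = ["a", "a", "b", "b"]
print(knn_classify(points, labels, (5.5, 5.0), k=3))
```

The full sort over all remembered points is what makes the method expensive on large databases and motivates the indexing and instance reduction mentioned above.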

A neural network consists of a system of interconnected neurons. A neuron, in a simplified way, takes positive and negative stimuli (numerical values) from other neurons and activates itself when the weighted sum of the stimuli is greater than a given threshold value. The neuron's output value is usually a non-linear transformation of the sum of stimuli. In more complex models, the non-linear transformation is adapted by some continuous functions. Neural networks are good for classification. They are an alternative to decision trees or rules when understandable knowledge is not required. A disadvantage is that it is sometimes difficult to determine the optimal number of neurons [14].

A decision table is the simplest, most rudimentary way of representing knowledge. It has instances in rows and attributes in columns. The attributes describe some properties of the instances. One of the attributes (or more, if needed) is considered the class attribute; it is usually the attribute in the last column. The class attribute classifies the instances into a class, i.e., an admissible value of the class attribute. A decision table is easy to create and use, e.g., as a matrix. On the other hand, such a table may become extremely large for complex situations, and it is usually hard to decide which attributes to leave out without affecting the final decision [15].

A group of classification rules is also a popular way of representing knowledge. Each rule in the group usually has the following form: IF Condition THEN Conclusion. Both Condition and Conclusion contain one or more terms of the form "attribute is attribute's value", connected by operators such as OR and AND. All classification rules in a group have the same class attributes in their conclusions. A group of rules is used for classifying instances into classes [2].


Another knowledge representation is a (crisp) decision tree. Such a decision tree consists of several nodes of two types: a) the root and internal nodes, and b) leaves. The root and each internal node are associated with a non-class attribute. Leaves are associated with a class. Basically, each non-leaf node has an outgoing branch for each possible value of its attribute. To classify a new instance using a decision tree, beginning with the root, successive internal nodes are visited until a leaf is reached. At the root and at each internal node, the test for the node is applied to the instance. The outcome of the test determines the branch traversed and the next node visited. The class for the instance is simply the class of the final leaf node [5]. Thus, the conjunction of all the conditions for the branches from the root to a leaf constitutes one of the conditions for the class associated with the leaf. This implies that a decision tree can be represented by classification rules which use the AND operator.

The paper is organized as follows. Section 2 explains why fuzzy logic needs to be integrated into decision trees and rules, which leads to fuzzy decision trees (FDTs) and fuzzy rules. It also offers a survey of FDT induction methods. Section 3 describes how to make fuzzy classification rules from FDTs based on cumulative information and how to use them for classification. Section 4 presents the experimental results of our study on several databases. Section 5 concludes this work.

2 DATA IMPERFECTION AND FUZZY DECISION TREES

To be able to work with real data, cognitive uncertainties need to be considered. Because of them, data are often inaccurate and contain some uncertainty. These uncertainties have various causes, such as a) there is no reliable way to measure something, e.g., a company's market value or a person's value; b) it can be too expensive to measure something exactly; c) vagueness, which is associated with the human difficulty of drawing sharp boundaries, e.g., it is strange for people to say it is hot when it is 30 °C and not hot when it is 29.9 °C; d) ambiguity, which is associated with one-to-many relations, i.e., situations with two or more alternatives such that the choice between them is left unspecified. These problems with real data have been solved successfully for several years thanks to fuzzy sets and fuzzy logic. Fuzzy sets are a generalization of (crisp) sets. In the case of a crisp set, an element x either is a member of the set or is not. In the case of a fuzzy set, an element x is a member of the set with a given membership degree, which is denoted by the membership function $\mu(x)$. $\mu(x)$ takes a value in the continuous interval from 0 to 1 [23]. Fuzzy sets are one of the main ideas of fuzzy logic, which is considered an extension of Boolean logic as well as of multi-valued logic [21].
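The temperature example in c) can be made concrete with a small sketch that contrasts a crisp set with a fuzzy set. The linear ramp and its breakpoints (20 °C and 30 °C) are hypothetical choices made only for illustration; the paper does not specify a membership function for "hot".

```python
def mu_hot(temperature_c, lower=20.0, upper=30.0):
    """Membership degree of a temperature in the fuzzy set "hot" (hypothetical breakpoints)."""
    if temperature_c <= lower:
        return 0.0
    if temperature_c >= upper:
        return 1.0
    # Linear ramp between the two breakpoints.
    return (temperature_c - lower) / (upper - lower)

def crisp_hot(temperature_c, threshold=30.0):
    """Crisp counterpart: an element either belongs to the set or does not."""
    return 1.0 if temperature_c >= threshold else 0.0

for t in (29.9, 30.0):
    print(t, crisp_hot(t), round(mu_hot(t), 2))
```

With the crisp set, 29.9 °C and 30 °C fall on opposite sides of a sharp boundary, whereas their fuzzy membership degrees are almost identical.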

Decision trees that make use of fuzzy sets and fuzzy logic to handle the uncertainties introduced above are called fuzzy decision trees (FDTs). Several algorithms for making FDTs have been developed so far. They usually build on the principles of the ID3 algorithm, which was initially proposed by Quinlan [16] for making crisp decision trees. ID3 is a top-down greedy heuristic, which means that it makes locally optimal decisions at each node. The algorithm uses minimal entropy as a criterion at each node. The so-called Fuzzy ID3 is a direct generalization of ID3 to the fuzzy case. Such an algorithm uses minimal fuzzy entropy as a criterion, e.g., [19, 17]. Note that there are several definitions of fuzzy entropy [1]. A variant of Fuzzy ID3 is [22], which uses minimal classification ambiguity instead of minimal fuzzy entropy. An interesting extension of ID3-based algorithms, which uses cumulative information estimations, was proposed in [10]. These estimations allow us to build FDTs with different properties (unordered, ordered, stable, etc.). There are also some algorithms which are quite different from ID3. For example, Wang et al. [18] present optimization principles of fuzzy decision trees based on minimizing the total number and average depth of leaves. The algorithm minimizes fuzzy entropy and classification ambiguity at node level and uses fuzzy clustering to merge branches. Another algorithm that is not based on Fuzzy ID3 is discussed in [4]. It uses a look-ahead method whose goal is to evaluate the so-called classifiability of instances that are split along the branches of a given node.
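The greedy, minimal-entropy step of crisp ID3 can be sketched as follows. This is the classical Shannon-entropy criterion only; it is not the fuzzy entropy, classification ambiguity, or cumulative information criterion of the cited FDT algorithms. The function names and the toy data are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())

def split_entropy(instances, labels, attribute):
    """Weighted average entropy of the subsets produced by splitting on `attribute`."""
    subsets = {}
    for x, c in zip(instances, labels):
        subsets.setdefault(x[attribute], []).append(c)
    total = len(labels)
    return sum(len(s) / total * entropy(s) for s in subsets.values())

def best_attribute(instances, labels, attributes):
    """Greedy ID3 step: pick the attribute with minimal entropy after the split."""
    return min(attributes, key=lambda a: split_entropy(instances, labels, a))

# Hypothetical toy data
instances = [
    {"Outlook": "sunny", "Wind": "windy"},
    {"Outlook": "sunny", "Wind": "not windy"},
    {"Outlook": "rain", "Wind": "windy"},
    {"Outlook": "rain", "Wind": "not windy"},
]
labels = ["swimming", "swimming", "weight lifting", "weight lifting"]
print(best_attribute(instances, labels, ["Outlook", "Wind"]))
```

The choice is locally optimal at the current node only, which is what makes the heuristic greedy.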

One way to make fuzzy rules is to transform fuzzy decision trees. In the crisp case, each leaf of the tree corresponds to one rule. The condition of the rule is a group of terms "attribute is attribute's value" connected with the operator AND. The attributes in the condition are the attributes associated with the nodes on the path from the root to the considered leaf. The attributes' values are the values associated with the outgoing branches of the respective attributes' nodes on the path. The conclusion of the rule is "class attribute is class". The conclusion is the output for the condition; in other words, it is the classification of an instance whose values correspond to the condition. This notion is based on rules made from a crisp decision tree [16]. In Section 3 of this paper, we describe a generalization to the fuzzy case. In the fuzzy case, the class values for a new instance (or, more exactly, the values of the membership functions for particular classes) are described by several rules which belong to one or more leaves.

3 FUZZY CLASSIFICATION RULES INDUCTION

The main goal of a group of classification rules is to determine the value of the class attribute from the attribute values of a new instance. In this section, we explain the mechanism of making fuzzy rules from FDTs and the mechanism of their use for classification. To explain these mechanisms, an FDT made on the basis of cumulative information [10] is considered.

Let the FDT be made from a database whose instances are described by N attributes $A = \{A_1, \ldots, A_i, \ldots, A_N\}$. Let an attribute $A_i$ take $m_i$ possible values $a_{i,1}, a_{i,2}, \ldots, a_{i,m_i}$, which is denoted by $A_i = \{a_{i,1}, \ldots, a_{i,j}, \ldots, a_{i,m_i}\}$. In the FDT, each non-leaf node is associated with an attribute from $A$. When $A_i$ is associated with a non-leaf node, the node has $m_i$ outgoing branches. The j-th branch of the node is associated with the value $a_{i,j} \in A_i$. In general, the database can contain several class attributes which depend on non-class attributes. For simplicity, only one class attribute $C$ is considered here. The class attribute $C$ has $m_c$ possible values $c_1, \ldots, c_k, \ldots, c_{m_c}$, which is denoted by $C = \{c_1, \ldots, c_k, \ldots, c_{m_c}\}$. Let the FDT have R leaves $L = \{l_1, \ldots, l_r, \ldots, l_R\}$. It is also assumed that there is a value $F_k^r$ for each r-th leaf $l_r$ and each k-th class $c_k$. This value $F_k^r$ is the certainty degree of the class $c_k$ attached to the leaf node $l_r$.

Now, the transformation of this FDT into fuzzy rules is described. In the fuzzy case, a new instance e may be classified into different classes with different degrees. In other words, the instance e is classified into each possible class $c_k$ with some degree. For that reason, a separate group of classification rules can be considered for each class $c_k$, $1 \le k \le m_c$. Let us consider the group of classification rules for $c_k$. Each leaf $l_r \in L$ corresponds to one (the r-th) classification rule. The condition of the r-th classification rule is a group of terms "attribute is attribute's value" connected with the operator AND. The attributes in the condition of the r-th rule are the attributes associated with the nodes on the path from the root to the r-th leaf. The attributes' values are the values associated with the respective outgoing branches of the nodes on the path. The conclusion of the r-th rule is "C is $c_k$". Let us assume that on the path from the root to the r-th leaf there are q nodes associated with attributes $A_{i_1}, A_{i_2}, \ldots, A_{i_q}$ and, respectively, their q outgoing branches associated with the values $a_{i_1,j_1}, a_{i_2,j_2}, \ldots, a_{i_q,j_q}$. Then the r-th rule has the following form:

IF $A_{i_1}$ is $a_{i_1,j_1}$ AND $A_{i_2}$ is $a_{i_2,j_2}$ AND ... AND $A_{i_q}$ is $a_{i_q,j_q}$ THEN C is $c_k$ (with truthfulness $F_k^r$).
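The transformation of leaves into rules can be sketched as a traversal of all root-to-leaf paths. The nested-dictionary tree representation, the function names, and the certainty degrees in the toy tree below are hypothetical and serve only to illustrate the rule form given above.

```python
def tree_to_rules(node, class_label, path=()):
    """Yield one (condition, class_label, truthfulness) rule per leaf of the tree."""
    if "certainty" in node:  # leaf: certainty degrees F_k^r, one per class
        yield list(path), class_label, node["certainty"][class_label]
        return
    for value, child in node["branches"].items():
        # Extend the AND-condition with "attribute is value" for this branch.
        yield from tree_to_rules(child, class_label, path + ((node["attribute"], value),))

def format_rule(condition, class_label, truthfulness, class_attribute="Plan"):
    """Render a rule in the IF ... THEN ... form used in the text."""
    body = " AND ".join(f"{attribute} is {value}" for attribute, value in condition)
    return f"IF {body} THEN {class_attribute} is {class_label} (truthfulness {truthfulness})"

# Hypothetical two-level tree with illustrative certainty degrees
tree = {
    "attribute": "Temp",
    "branches": {
        "hot": {
            "attribute": "Wind",
            "branches": {
                "windy": {"certainty": {"volleyball": 0.2, "swimming": 0.8}},
                "not windy": {"certainty": {"volleyball": 0.6, "swimming": 0.4}},
            },
        },
        "cool": {"certainty": {"volleyball": 0.1, "swimming": 0.9}},
    },
}
rules = list(tree_to_rules(tree, "volleyball"))
for rule in rules:
    print(format_rule(*rule))
```

Calling `tree_to_rules` once per class yields the separate group of rules for each $c_k$; the conditions are identical across groups and only the truthfulness values differ.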

In the two following paragraphs, two ways of using these fuzzy classification rules to classify a new instance e into a class $c_k$, $1 \le k \le m_c$, are described. The first way uses only one classification rule, whereas the second uses one or more rules. Classification here means determining the degree with which the instance e is classified into the class $c_k$; this degree is denoted by the membership function $\mu_{c_k}(e)$, which takes a value in the continuous interval from 0 to 1. It is also assumed that the membership function values of all attribute values of the new instance e, $\mu_{a_{i,j}}(e)$, $a_{i,j} \in A_i$, $A_i \in A$, are known.

The first way is based on [16] and was initially meant for classification in the crisp case. In the crisp case, a possible value of an attribute either is the attribute's value or is not. In other words, either $\mu_{a_{i,j}}(e) = 1$ or $\mu_{a_{i,j}}(e) = 0$ for $a_{i,j} \in A_i$, $A_i \in A$. Moreover, exactly one $\mu_{a_{i,j}}(e)$ is equal to 1 for $a_{i,j} \in A_i$; all the others are equal to 0. In the fuzzy case, there can be more than one $\mu_{a_{i,j}}(e) > 0$ for $a_{i,j} \in A_i$. To be able to use this first way, the $\mu_{a_{i,j}}(e)$ with the maximal value among all $a_{i,j} \in A_i$ is set equal to 1 and all the others to 0. An instance with such membership function values is called a rounded instance. Then one rule is chosen from the group of rules: the r-th rule whose attribute values $a_{i_1,j_1}, a_{i_2,j_2}, \ldots, a_{i_q,j_q}$ all match the values $a_{i,j}$ with $\mu_{a_{i,j}}(e) = 1$ of the rounded instance. After that, $\mu_{c_k}(e)$ is set equal to $F_k^r$.


The second way uses one or more classification rules from the group for $c_k$ to classify e into the class $c_k$. The reason is that there may be several $\mu_{a_{i,j}}(e) > 0$ for a node in the FDT. Therefore, there may be several paths all of whose branches are associated with values $a_{i,j}$ for which $\mu_{a_{i,j}}(e) > 0$. Each such path corresponds to one classification rule. Because the respective $\mu_{a_{i,j}}(e)$ are not all equal to 1, each such rule should be included in the final value of $\mu_{c_k}(e)$ with a certain weight. For the instance e and the r-th rule in the group for $c_k$, the weight is given by

$$W_r(e) = \prod_{a \in \mathit{path}_r} \mu_a(e),$$

where $\mu_a(e)$ is the value of the membership function of an attribute's value a and $\mathit{path}_r$ is the set of all attribute values in the condition of the r-th classification rule. The weight $W_r(e)$ is equal to 0 if the condition of the r-th rule contains an attribute's value a with $\mu_a(e) = 0$. The value of the membership function for the new instance e and the class $c_k$ is

$$\mu_{c_k}(e) = \sum_{r=1}^{R} F_k^r \cdot W_r(e),$$

where $F_k^r$ is the truthfulness of the r-th rule or, in other words, the certainty degree of the class $c_k$ attached to the leaf node $l_r$.
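As a consistency check that is not part of the original text, the second way can be seen to reduce to the first one for a rounded (crisp) instance. If every membership degree is 0 or 1 and exactly one value of each attribute has degree 1, then exactly one root-to-leaf path, denoted here by the hypothetical index $r^{*}$, consists only of values with degree 1, and the weighted sum collapses to a single term:

```latex
\begin{align*}
W_r(e) &= \prod_{a \in \mathit{path}_r} \mu_a(e)
        = \begin{cases} 1 & \text{if } r = r^{*}, \\ 0 & \text{otherwise,} \end{cases}
        \qquad \mu_a(e) \in \{0, 1\}, \\
\mu_{c_k}(e) &= \sum_{r=1}^{R} F_k^{r} \cdot W_r(e) = F_k^{r^{*}}.
\end{align*}
```

This is the value that the first way assigns, so the two ways agree whenever the instance is already crisp.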

If, in the first or the second way, classification into only one class is needed, the instance e is classified into the class $c_k$ whose $\mu_{c_k}(e)$, $k = 1, 2, \ldots, m_c$, is maximal.

Let us describe the process of transformation of the FDT into fuzzy rules and the

two mentioned ways of their use for classification with the following examples.

Example 1. An instance is described by N = 4 attributes $A = \{A_1, A_2, A_3, A_4\}$ = {Outlook, Temp(erature), Humidity, Wind}, and the instances are classified with one class attribute C. Each attribute $A_i = \{a_{i,1}, \ldots, a_{i,j}, \ldots, a_{i,m_i}\}$ is defined as follows: $A_1 = \{a_{1,1}, a_{1,2}, a_{1,3}\}$ = {sunny, cloudy, rain}, $A_2 = \{a_{2,1}, a_{2,2}, a_{2,3}\}$ = {hot, mild, cool}, $A_3 = \{a_{3,1}, a_{3,2}\}$ = {humid, normal}, $A_4 = \{a_{4,1}, a_{4,2}\}$ = {windy, not windy}. The class attribute is C = Plan = $\{c_1, c_2, c_3\}$ = {volleyball, swimming, weight lifting}. Fig. 1 shows an FDT that was made from a database by using cumulative information. The database and the mechanism for building the FDT are described in [9]. The FDT has R = 9 leaves. The leaves contain the values of $F_k^r$ for the respective $c_k$, k = 1, 2, 3. Our goal is to determine $\mu_{c_k}(e)$, k = 1, 2, 3, on the basis of the FDT for a new instance e, which is described in Tab. 1.


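[Figure 1: fuzzy decision tree. The root tests Temp (A2) with branches hot (a2,1), mild (a2,2) and cool (a2,3). The hot and mild branches each lead to an Outlook (A1) node with branches sunny (a1,1), cloudy (a1,2) and rain (a1,3); one branch of each Outlook node leads to a Wind (A4) node with branches windy (a4,1) and not windy (a4,2). In the hot subtree, sunny leads to the Wind node with leaves l1 and l2, cloudy leads to leaf l3, and rain leads to leaf l4. Leaves l5 to l8 belong to the mild subtree, with l6 and l7 below its Wind node. The cool branch leads directly to leaf l9.]

Certainty degrees in the leaves of the FDT:

Leaf   F_1^r (volleyball)   F_2^r (swimming)   F_3^r (weight lifting)
l1     14.2%                67.8%              18.0%
l2     33.2%                62.3%               4.5%
l3     37.6%                50.4%              12.0%
l4     11.0%                16.3%              72.7%
l5     32.9%                23.9%              43.2%
l6     25.1%                 6.7%              68.2%
l7     55.1%                14.2%              30.7%
l8     30.7%                10.6%              58.7%
l9     16.3%                 4.9%              78.8%

Figure 1 An example of a FDT adopted from [9].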

Table 1 A new instance e with unknown $\mu_{c_k}(e)$ = ?, k = 1, 2, 3.

Attribute   Value   $\mu_{a_{i,j}}(e)$
A1          a1,1    0.9
A1          a1,2    0.1
A1          a1,3    0.0
A2          a2,1    1.0
A2          a2,2    0.0
A2          a2,3    0.0
A3          a3,1    0.8
A3          a3,2    0.2
A4          a4,1    0.4
A4          a4,2    0.6

The classification rules for class $c_1$ (the $c_1$ group of rules) made from the FDT in Fig. 1 have the following form:

r=1: IF Temp is hot AND Outlook is sunny AND Wind is windy THEN Plan is volleyball (truthfulness $F_1^1$ = 0.142)

r=2: IF Temp is hot AND Outlook is sunny AND Wind is not windy THEN Plan is volleyball (truthfulness $F_1^2$ = 0.332)

r=3: IF Temp is hot AND Outlook is cloudy THEN Plan is volleyball (truthfulness $F_1^3$ = 0.376)

...

r=9: IF Temp is cool THEN Plan is volleyball (truthfulness $F_1^9$ = 0.163)

Similarly, the $c_2$ group of 9 rules and the $c_3$ group of 9 rules can be determined.

The following Examples 2, 3 show how to use the fuzzy classification rules.

Example 2 (classification with one fuzzy rule). First, instance e in Tab. 1 is transformed into the rounded instance e in Tab. 2.

Table 2 The rounded instance e.

Attribute   Value   $\mu_{a_{i,j}}(e)$
A1          a1,1    1
A1          a1,2    0
A1          a1,3    0
A2          a2,1    1
A2          a2,2    0
A2          a2,3    0
A3          a3,1    1
A3          a3,2    0
A4          a4,1    0
A4          a4,2    1

This means that, for each $A_i$ (i = 1, 2, 3, 4), the $\mu_{a_{i,j}}(e)$ (j = 1, ..., $m_i$) with the maximal value among all $a_{i,j} \in A_i$ is set equal to 1 and all the others to 0. When the values $a_{i,j}$ with $\mu_{a_{i,j}}(e) = 1$ are chosen, the set $\{a_{1,1}, a_{2,1}, a_{3,1}, a_{4,2}\}$ = {sunny, hot, humid, not windy} is obtained. The elements of this set match the attribute values of the fuzzy classification rule r=2 in Example 1 (Humidity does not occur in that rule's condition). Therefore, $\mu_{c_1}(e) = F_1^2 = 0.332$. Similarly, $\mu_{c_2}(e) = F_2^2 = 0.623$ on the basis of the $c_2$ group of rules, and $\mu_{c_3}(e) = F_3^2 = 0.045$ on the basis of the $c_3$ group of rules. The maximum value is $\mu_{c_2}(e)$. Therefore, if classification into only one class is needed, instance e is classified into class $c_2$.
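The steps of Example 2 can be retraced with a short sketch. The membership degrees come from Tab. 1 and the certainty degrees from Fig. 1; only the four leaves whose conditions are written out in Example 1 are included, and the dictionary layout and function names are hypothetical.

```python
# Membership degrees of instance e from Tab. 1.
e = {
    "Outlook": {"sunny": 0.9, "cloudy": 0.1, "rain": 0.0},
    "Temp": {"hot": 1.0, "mild": 0.0, "cool": 0.0},
    "Humidity": {"humid": 0.8, "normal": 0.2},
    "Wind": {"windy": 0.4, "not windy": 0.6},
}
classes = ("volleyball", "swimming", "weight lifting")

# Leaves whose conditions are spelled out in Example 1, with F_k^r read from Fig. 1
# in the order (volleyball, swimming, weight lifting).
rules = {
    1: ({"Temp": "hot", "Outlook": "sunny", "Wind": "windy"}, (0.142, 0.678, 0.180)),
    2: ({"Temp": "hot", "Outlook": "sunny", "Wind": "not windy"}, (0.332, 0.623, 0.045)),
    3: ({"Temp": "hot", "Outlook": "cloudy"}, (0.376, 0.504, 0.120)),
    9: ({"Temp": "cool"}, (0.163, 0.049, 0.788)),
}

def round_instance(instance):
    """Keep, for each attribute, only the value with the maximal membership degree."""
    return {attr: max(values, key=values.get) for attr, values in instance.items()}

def classify_with_one_rule(instance, rules):
    """First way: find the single rule matched by the rounded instance."""
    rounded = round_instance(instance)
    for r, (condition, certainty) in rules.items():
        if all(rounded[attr] == value for attr, value in condition.items()):
            return r, certainty
    return None

r, memberships = classify_with_one_rule(e, rules)
print(r, dict(zip(classes, memberships)))
print(max(zip(memberships, classes))[1])
```

The sketch selects rule r=2 and the class swimming ($c_2$), in agreement with the example.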

Example 3 (classification with several fuzzy rules). The paths all of whose branches are associated with values $a_{i,j}$ for which $\mu_{a_{i,j}}(e) > 0$ are shown as bold branches in Fig. 1. In this situation, the rules which correspond to leaves $l_1$, $l_2$, $l_3$ need to be used. The respective classification rules r = 1, 2, 3 for class $c_1$ are given in Example 1. For the rule r=1, $\mathit{path}_1 = \{a_{2,1}, a_{1,1}, a_{4,1}\}$. And so, for the instance e described in Tab. 1, $W_1(e) = 0.9 \cdot 1.0 \cdot 0.4 = 0.36$. Similarly, $W_2(e) = 0.9 \cdot 1.0 \cdot 0.6 = 0.54$ and $W_3(e) = 0.1 \cdot 1.0 = 0.1$. All the other $W_r(e)$, r = 4, 5, ..., 9, are equal to 0.

Then, $\mu_{c_1}(e) = 0.142 \cdot 0.36 + 0.332 \cdot 0.54 + 0.376 \cdot 0.1 + 0.110 \cdot 0 + \ldots + 0.163 \cdot 0 = 0.268$. When the same is done for $c_2$ and $c_3$, $\mu_{c_2}(e) = 0.631$ and $\mu_{c_3}(e) = 0.101$ are obtained. The maximum value is $\mu_{c_2}(e)$. And so, if classification into only one class is needed, instance e is classified into class $c_2$.
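The arithmetic of Example 3 can be checked with a minimal sketch of the second way. The data are taken from Tab. 1 and Fig. 1; leaves 4 to 8 are left out because the example states that their weights are 0 for this instance. The data layout and function names are hypothetical.

```python
from math import prod

# Membership degrees of instance e from Tab. 1 (Humidity is not tested on the used paths).
e = {
    "Outlook": {"sunny": 0.9, "cloudy": 0.1, "rain": 0.0},
    "Temp": {"hot": 1.0, "mild": 0.0, "cool": 0.0},
    "Humidity": {"humid": 0.8, "normal": 0.2},
    "Wind": {"windy": 0.4, "not windy": 0.6},
}

# Rule conditions from Example 1 with F_k^r from Fig. 1 in the order (c1, c2, c3).
# Leaves 4 to 8 are omitted: the text states that their weights are 0 for e.
rules = {
    1: ({"Temp": "hot", "Outlook": "sunny", "Wind": "windy"}, (0.142, 0.678, 0.180)),
    2: ({"Temp": "hot", "Outlook": "sunny", "Wind": "not windy"}, (0.332, 0.623, 0.045)),
    3: ({"Temp": "hot", "Outlook": "cloudy"}, (0.376, 0.504, 0.120)),
    9: ({"Temp": "cool"}, (0.163, 0.049, 0.788)),
}

def rule_weight(instance, condition):
    """W_r(e): product of the membership degrees of all attribute values in path_r."""
    return prod(instance[attr][value] for attr, value in condition.items())

def class_memberships(instance, rules, n_classes=3):
    """Second way: mu_{c_k}(e) is the sum over all rules of F_k^r * W_r(e)."""
    totals = [0.0] * n_classes
    for condition, certainty in rules.values():
        w = rule_weight(instance, condition)
        for k in range(n_classes):
            totals[k] += certainty[k] * w
    return totals

weights = {r: rule_weight(e, condition) for r, (condition, _) in rules.items()}
mu = class_memberships(e, rules)
print({r: round(w, 2) for r, w in weights.items()})
print([round(m, 3) for m in mu])
```

Rounded to three decimals, the sketch gives 0.268, 0.631 and 0.101 for $c_1$, $c_2$ and $c_3$, which are the values stated in the example.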


4 EXPERIMENTAL RESULTS

The main purpose of our experimental study is to compare the two ways, described above, of using fuzzy classification rules made from an FDT based on cumulative information. Their classification accuracy is also compared with that of other methods. All methods have been coded in Java, and the experimental results were obtained on an AMD Turion64 1.6 GHz with 1024 MB of RAM.

The experiments were carried out on selected Machine Learning databases [6]. First, where needed, the databases were fuzzified with the algorithm introduced in [8]. Then we separated each database into two parts. We used the first part (70% of the database) for building classification models. The second part (30% of the initial database) was used for verifying these models. This process of separation and verification was repeated 100 times to obtain each model's error rate for the respective databases. The error rate is calculated as the ratio of the number of misclassified combinations to the total number of combinations.
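The evaluation protocol can be sketched as a repeated random 70/30 holdout. The sketch assumes that "combinations" refers to the instances of the verification part and that the splits are drawn uniformly at random; `build_model` is a hypothetical callback standing in for any of the compared methods, and the sketch is written in Python rather than the Java used by the authors.

```python
import random

def repeated_holdout_error(instances, labels, build_model, train_fraction=0.7,
                           repetitions=100, seed=0):
    """Average error rate over repeated random train/test splits."""
    rng = random.Random(seed)
    indices = list(range(len(instances)))
    n_train = int(round(train_fraction * len(indices)))
    error_rates = []
    for _ in range(repetitions):
        rng.shuffle(indices)
        train, test = indices[:n_train], indices[n_train:]
        # build_model is a hypothetical callback: it returns a function instance -> class.
        model = build_model([instances[i] for i in train], [labels[i] for i in train])
        errors = sum(model(instances[i]) != labels[i] for i in test)
        error_rates.append(errors / len(test))
    return sum(error_rates) / len(error_rates)

def majority_class(train_instances, train_labels):
    """Trivial baseline model: always predict the most frequent training class."""
    most_common = max(set(train_labels), key=train_labels.count)
    return lambda instance: most_common

# Hypothetical toy data with a single class, so the baseline makes no errors.
print(repeated_holdout_error(list(range(10)), ["a"] * 10, majority_class))
```

Averaging over many random splits reduces the dependence of the reported error rate on any single partition of the database.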

The results of our experiments are in Tab. 3, where CA-MM denotes the FDT-based fuzzy classification rules algorithm proposed in [22]; CA-SP denotes a modification of CA-MM introduced in [3]; CI-RM-M denotes the algorithm [10] for making an FDT with cumulative information combined with the second way of using fuzzy classification rules from Section 3; CI-RM-O uses the first way from Section 3; NBC denotes the Naïve Bayes Classifier; and k-NN denotes the k-Nearest Neighbour Classifier. The numbers in round brackets denote the rank of the method for the respective database; rank 1 denotes the lowest, and thus the best, error rate. The last row contains the average error rate of the respective methods over all databases.

Table 3 The error rates comparison of respective methods.

Error rate for given database and classification method.

Database   CA-MM        CA-SP        CI-RM-M      CI-RM-O      NBC          k-NN
BUPA       0.4253(4)    0.4174(2)    0.4205(3)    0.4416(6)    0.3910(1)    0.4253(4)
Ecoli      0.3112(6)    0.3053(5)    0.2022(2)    0.2654(4)    0.1547(1)    0.2046(3)
Glass      0.4294(3)    0.3988(2)    0.4647(5)    0.5394(6)    0.3335(1)    0.4430(4)
Haberman   0.2615(3)    0.2624(5)    0.2609(2)    0.2453(1)    0.3471(6)    0.2615(3)
Iris       0.0400(2)    0.04067(4)   0.02956(1)   0.04556(5)   0.05044(6)   0.0400(2)
Pima       0.2460(2)    0.2436(1)    0.2563(5)    0.2483(3)    0.3091(6)    0.2509(4)
Wine       0.06094(4)   0.04566(2)   0.06509(5)   0.02697(1)   0.05094(3)   0.08170(6)
Average    0.2951(6)    0.2526(5)    0.2301(1)    0.2518(4)    0.2431(3)    0.2409(2)


Figure 2 The normalized average error rates comparison.

Figure 2 shows the normalized average error rate of the respective methods. It is computed as the respective average error rate divided by the highest average error rate and multiplied by 100. It can be seen that CI-RM-M, which uses several classification rules for classification, is almost 10% better than CI-RM-O and is the best of all the methods compared here.
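The normalization can be reproduced with a few lines. The sketch assumes that the values in the last row of Tab. 3 follow the column order CA-MM, CA-SP, CI-RM-M, CI-RM-O, NBC, k-NN; the variable and function names are hypothetical.

```python
# Average error rates from the last row of Tab. 3 (assumed column order).
average_error = {
    "CA-MM": 0.2951, "CA-SP": 0.2526, "CI-RM-M": 0.2301,
    "CI-RM-O": 0.2518, "NBC": 0.2431, "k-NN": 0.2409,
}

def normalize(rates):
    """Express each average error rate as a percentage of the highest one."""
    highest = max(rates.values())
    return {method: rate / highest * 100 for method, rate in rates.items()}

normalized = normalize(average_error)
# Print the methods from the lowest to the highest normalized error rate.
for method, value in sorted(normalized.items(), key=lambda item: item[1]):
    print(f"{method:8s} {value:6.2f}")
```

By construction the method with the highest average error rate is mapped to 100, and lower values indicate better methods.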

5 CONCLUSION

This paper investigated the mechanism of making fuzzy rules from FDTs based on cumulative information and two ways of using them to classify a new instance into a class. The first way is a direct fuzzy analogy of the crisp case, in which one classification rule is used for the new instance's classification. The second way is a generalization of the first: it uses several classification rules, and each rule is included in the classification's result with a certain weight. The two ways were compared with each other. On the selected databases, the generalized way has almost 10% better results than the other one and is also the best of all the compared methods.

ACKNOWLEDGEMENTS

This research was supported by the grants of the Scientific Grant Agency of the Ministry of Education of the Slovak Republic and the Slovak Academy of Sciences (grant ZU/05 VV_MVTS13). We would like to thank Dr. Irina Davidovskaja (Belarusian State Economic University) for constructive comments.


REFERENCES

[1] AL-SHARHAN, S., KARRAY, F., GUEAIEB, W., BASIR, O.: Fuzzy Entropy: a Brief Survey. IEEE International Fuzzy Systems Conference (2001).

[2] BERKA, P.: Knowledge discovery in databases. Academia, Praha, 2003 (Czech).

[3] BOHÁČIK, J.: An Analysis of algorithms for making fuzzy rules. Master Thesis, The University of Žilina, 2006 (Slovak).

[4] DONG, M., KOTHARI, R.: Look-ahead based fuzzy decision tree induction. IEEE Transactions on Fuzzy Systems 3, Vol. 9 (2001), 461-468.

[5] GAROFALAKIS, M., HYUN, D., RASTOGI, R., SHIM, K.: Building Decision Trees with Constraints. Data Mining and Knowledge Discovery 7 (2003), 187-214.

[6] HETTICH, S., BLAKE, C. L., MERZ, C. J.: UCI Repository of Machine Learning Databases [http://www.ics.uci.edu/~mlearn/MLRepository.html]. Irvine, CA, University of California, Dept. of Information and Computer Science (1998).

[7] JOHN, G. H.: Enhancements to the Data Mining Process. PhD Thesis, Dept. of Computer Science, Stanford University (1997).

[8] LEE, H.-M., CHEN, C.-M., CHEN, J.-M., JOU, Y.-L.: An efficient fuzzy classifier with feature selection based on fuzzy entropy. IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics 3, Vol. 31 (2001), 426-432.

[9] LEVASHENKO, V., KOVALÍK, Š., DAVIDOVSKAJA, I.: Stable fuzzy decision tree induction. Annual Database Conference DATAKON 2005, Brno (2005), 273-280.

[10] LEVASHENKO, V., ZAITSEVA, E.: Usage of New Information Estimations for Induction of Fuzzy Decision Trees. Proc. of the 3rd IEEE Int. Conf. on Intelligent Data Eng. and Automated Learning, Kluwer Publisher (2002), 493-499.

[11] MATIAŠKO, K.: Database Systems. EDIS Print, Žilina, 2002 (Slovak).

[12] MITCHELL, T. M.: Machine Learning. McGraw-Hill Companies, USA, 1997.

[13] MITRA, S., ACHARYA, T.: Data mining: multimedia, soft computing, and bioinformatics. Wiley, 2003.

[14] NILSSON, N. J.: Introduction to machine learning. An early draft of a proposed textbook, nilsson@cs.stanford.edu, 1996.

[15] POOCH, W.: Translation of decision tables. Computing Surveys 2, Vol. 6 (1974).

[16] QUINLAN, J. R.: Induction of decision trees. Machine Learning 1 (1986), 81-106.

[17] UMANO, M., OKAMOTO, H., HATONO, I., TAMURA, H., KAWACHI, F., UMEDZU, U., KINOSHITA, J.: Fuzzy decision trees by fuzzy ID3 algorithm and its application to diagnosis system. IEEE Int. Conf. on Fuzzy Systems (1994), 2113-2118.

[18] WANG, X. Z., CHEN, B., QIAN, G., YE, F.: On the optimization of fuzzy decision trees. Fuzzy Sets and Systems 2, Vol. 112 (2000), 117-125.

[19] WEBER, R.: Fuzzy ID3: A class of methods for automatic knowledge acquisition. 2nd Int. Conf. on Fuzzy Logic and Neural Networks (1992), 265-268.

[20] WITTEN, I. H., FRANK, E.: Data Mining: Practical Machine Learning Tools and Techniques with Java Implementations. Morgan Kaufmann, 1999.

[21] YEN, J.: Fuzzy Logic - A modern perspective. IEEE Transactions on Knowledge and Data Engineering 1, Vol. 11 (1999), 153-165.

[22] YUAN, Y., SHAW, M. J.: Induction of fuzzy decision trees. Fuzzy Sets and Systems 69 (1995), 125-139.

[23] ZADEH, L. A.: Fuzzy sets. Information and Control 8 (1965), 338-353.


- Perspex ManualDocumento40 páginasPerspex ManualGarry TaylorAinda não há avaliações

- Geodesign With Little Time and Small DataDocumento13 páginasGeodesign With Little Time and Small DataqpramukantoAinda não há avaliações

- Aurora Cidr03Documento12 páginasAurora Cidr03CobWangAinda não há avaliações

- Book-Data CommunicationDocumento57 páginasBook-Data CommunicationBindu Devender MahajanAinda não há avaliações

- Appendix 3 Cable Management and Route PlanningDocumento5 páginasAppendix 3 Cable Management and Route Planningkaushikray06Ainda não há avaliações

- Stochastic Analysis of Benue River Flow Using Moving Average (Ma) ModelDocumento6 páginasStochastic Analysis of Benue River Flow Using Moving Average (Ma) ModelAJER JOURNALAinda não há avaliações

- Theory of TidesDocumento13 páginasTheory of TidesbaktinusantaraAinda não há avaliações

- Mousetrap Car Lab Report FormatDocumento1 páginaMousetrap Car Lab Report FormatblueberryAinda não há avaliações

- An Improved LOD Specification For 3D Building ModelsDocumento13 páginasAn Improved LOD Specification For 3D Building Modelsminh duongAinda não há avaliações

- Analytic SurfacesDocumento109 páginasAnalytic SurfacesGabriela DogoterAinda não há avaliações

- Shoreline Change Analysis and Its Application To Prediction-A Remote Sensing and Statistics Based ApproachDocumento13 páginasShoreline Change Analysis and Its Application To Prediction-A Remote Sensing and Statistics Based ApproachFirman Tomo SewangAinda não há avaliações

- Lab Manual Geo InformaticsDocumento53 páginasLab Manual Geo InformaticsSami ullah khan BabarAinda não há avaliações

- Fekadu MoredaDocumento226 páginasFekadu MoredaSudharsananPRSAinda não há avaliações

- PTDF Research Proposal RGUDocumento6 páginasPTDF Research Proposal RGUmayo ABIOYEAinda não há avaliações

- Cadastral Survey RequirementsDocumento220 páginasCadastral Survey Requirementssudhakarraj01100% (2)

- Drainage Morpho Me TryDocumento6 páginasDrainage Morpho Me TryaashiqAinda não há avaliações

- Graduate MSC Research Proposal TemplateDocumento1 páginaGraduate MSC Research Proposal TemplateszarAinda não há avaliações

- Spatial Data Infrastructure (SDI) in China Some Potentials and Shortcomings PDFDocumento36 páginasSpatial Data Infrastructure (SDI) in China Some Potentials and Shortcomings PDFchokbingAinda não há avaliações

- IMCORRDocumento247 páginasIMCORRSebastián Esteban Cisternas GuzmánAinda não há avaliações

- Classification Algorithm in Data Mining: AnDocumento6 páginasClassification Algorithm in Data Mining: AnMosaddek HossainAinda não há avaliações

- Decision Trees For Handling Uncertain Data To Identify Bank FraudsDocumento4 páginasDecision Trees For Handling Uncertain Data To Identify Bank FraudsWARSE JournalsAinda não há avaliações

- Paper IJRITCCDocumento5 páginasPaper IJRITCCTakshakDesaiAinda não há avaliações

- Green Mode Power Switch For Valley Switching Converter - Low EMI and High Efficiency FSQ0365, FSQ0265, FSQ0165, FSQ321Documento22 páginasGreen Mode Power Switch For Valley Switching Converter - Low EMI and High Efficiency FSQ0365, FSQ0265, FSQ0165, FSQ321Mekkati MekkatiAinda não há avaliações

- Nonlinear Behavior of Piezoelectric AccelerometersDocumento2 páginasNonlinear Behavior of Piezoelectric AccelerometersscouttypeAinda não há avaliações

- Electromach EX Poster2016 PDFDocumento1 páginaElectromach EX Poster2016 PDFphilippe69Ainda não há avaliações

- BCMT Module 5 - Monitoring and Evaluating Tech4ED CentersDocumento30 páginasBCMT Module 5 - Monitoring and Evaluating Tech4ED CentersCabaluay NHS0% (1)

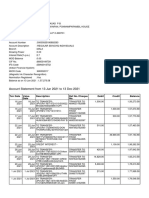
