Escolar Documentos

Profissional Documentos

Cultura Documentos

A Newton Raphson Method For The Solution of System of Equations

Enviado por

sujith reddyDescrição original:

Título original

Direitos autorais

Formatos disponíveis

Compartilhar este documento

Compartilhar ou incorporar documento

Você considera este documento útil?

Este conteúdo é inapropriado?

Denunciar este documentoDireitos autorais:

Formatos disponíveis

A Newton Raphson Method For The Solution of System of Equations

Enviado por

sujith reddyDireitos autorais:

Formatos disponíveis

JOURNAL

OF MATHEMATICAL

ANALYSIS

AND

15, 243-252 (1966)

APPLICATIONS

A Newton-Raphson

Method for the Solution

Systems of Equations

ADI

Tech&n-Israel

Institute

of

BEN-ISRAEL

of Technology and Northwestern

University*

Submitted by Richard Bellman

INTRODUCTION

The Newton-Raphson

method for solving an equation

f(x) =o

is based upon the convergence,

sequence

X la+1

x,

--

under

(1)

suitable

conditions

[l,

(p=O,1,2;**)

;g$

21, of the

(2)

to a solution of (l), where x,, is an approximate solution. A detailed discussion

of the method, together with many applications, can be found in [3].

Extensions to systems of equations

f&l, ***,x7&>

=0

or

f(x) = 0

(3)

f*(% 3**-,x78)= 0

are immediate

in case: m = n, [l], where the analog of (2) is?

X

p+1 = x, -

Hx,)

f(x,)

(P = 0, 1, -*).

(4)

Extensions and applications in Banach spaces were given by Hildebrandt

and Graves [4], Kantorovic [5-71, Altman [8], Stein [9], Bartle [lo], Schroder

[ll] and others. In these works the Frechet derivative replaces J(x) in (4),

yet nonsingularity

is assumed throughout the iterations.

* Part of the research underlying this report was undertaken for the Office of Naval

Research, Contract Nonr-1228(10),

Project NR 047-021, and for the U.S. Army

Research Office-Durham,

Contract No. DA-31-124-ARO-D-322

at Northwestern

University.

Reproduction

of this paper in whole or in part is permitted for any purpose of the United States Government.

* See section on notations below.

243

244

BEN-ISRAEL

The modified Newton-Raphson method

X 9+1 = % - J-Yxo> f(x,)

(P = 0, 1, -)

(5)

was extended in [17] to the case of singular J(x,), and conditions were given

for the sequence

X p+1 = xp - J(xrJ> fb,)

(P = 0, 1, --)

(6)

to converge to a solution of

J*(x& f(x) = 0.

(7)

In this paper the method (4) IS

lk

1 ewise extended to the case of singular

J(xJ, and the resulting sequence (12) is shown to converge to a stationary

point of Z&fiz(x).

NOTATIONS

Let Ek denote the k-dimensional (complex) vector space of vectors x,

with the Euclidean norm /I x I/ = (x, x)l12. Let EmXR denote the space of

m x n complex matrices with the norm

Ij A jl = max (~6: h an eigenvalue of A*A},

A* being the conjugate transpose of A.

These norms satisfy [12]:

IIAx II G IIA II IIx II

for every

XEE~,

A E Emx.

Let R(A), N(A) denote the range resp. null spaceof A, and A+ the generalized

inverse of A, [13].

For u E Ek and r > 0 let

S(u, Y) = {x E Ek : II x -u

II < r}

denote the open ball of radius Y around u.

The components of a function f : En + Em are denoted by J(x), (a = 1,

. . ., m). The Jacobian off at x E En is the m x n matrix

J(x)= (F),

~-\;:::yJ.

For an open set S C I?, the function f : En --+ E* is in the class C(S) if the

mapping En -+ Elxn given by x ---f J(x) is continuous for every x E S [4].

A NEWTON-RAPHSON

245

METHOD

RESULTS

THEOREM 1. Let f : En -+ Em be a function, x0 a point in E, and r > 0

be such that f E c(S(x, , Y)).

Let M, N be positive constants such that for all u, v in S(x, , Y) with

11- v E q]*(v)):

IIJ(v) (u - v) - f(u) + f(v) II < M IIu - v II

03)

IIU+(v) - J+(u)>f(u) II < iv IIu - v I/

(9)

and

for all

M II J+(x) II + N = k < 1

x E WJ , r>

II JkJ II IIfb,) II < (1 - 4 r*

(10)

(11)

Then the sequence

X D+l =

XXI -

(P

J+(%)fw

(12)

0, 1, .**)

convergesto a solution of

J*(x) f(x) = 0

(13)

which lies in S(x,, , t).

PROOF. Let the mapping g : En + En be defined by

g(x) = x - J(x) f (4.

(14)

Equation (12) now becomes:

%+1

gw

(p = 0, 1, ***)

(15)

(p = 1, 2, *em).

(16)

We prove now that

x, E S(x, , T)

For p = 1, (16) is guaranteed by (11). Assuming (16) is true for all subscripts < p, we prove it for: p + 1. Indeed,

%+1

-x,

XP -x,-1

J+(%-l)

J(%-1)

J"b,-1)

ukl-1)

J'(x,)f(x,)

(x,

+

-

(x,

X,-l)

J+(%-l>

-

-x9-1)

We-J

J+G%J

-

fbb)

f&J

+

J'(XD-1)

fh-1)

f(XD-Jl

+ (J+(%-l) - J+(%N fed

(17)

J&-l)

(18)

where

x,

-x,-1

J+bd

(XD -x,-J

follows from

x,

x,-l~w++(x,-l))

w*(x,-d

w-9

246

BEN-ISRAEL

and A+A being the perpendicular projection on R(A*) [14]. Setting u = x, ,

v = xp-1 in (8) and (9), we conclude from (17), (19), and the induction

hypothesis that

-% II < w II I+(%) II + w I/%

l/%+1

II

-x,-1

(20)

and from (10)

II%+1 - x, 81< k IIqJ -

XD-1

II ,

(21)

which implies

-

II%+1

and, finally,

x0 /I <

j=l

kj

I/ x1 -

x0 I/ =

4';

IIXl

;;'

x0

(22)

II

with (11)

IIx9+1

- x0 11< k(1 - kP) r < r,

which proves (16).

Equation (21) proves indeed that the mappingg

IIdx,) - &,-d

(23)

is a contraction

II G k IIx, - xg--1II < IIx, - x9-1 II

in the sense:

(p = 1, 2, ***).

(24)

The sequence {x,},

(p = 0, 1, .*e), converges

therefore

to a vector x*

in

qxo , y).

x* is a solution of (13). Indeed,

IIx* - &*) II < IIx* -

II + IId%> - dx*> II

%+1

G Itx* - xa+l II + k IIx, -x*

where the right-hand

side of (25) tends to zero as p +

(26)

by (14), to

which is equivalent

since

(25)

co. But

x* = !dx*)

is equivalent,

II5

Jb*)

f(x*)

= 0,

(27)

J*(x*)

f(x*)

= 0

(28)

to

N(A+) = N(A*)

for every

A EE~X~ [14].

Q.E.D.

REMARKS.

(a)

If m = n and the matrices 1(x,) are nonsingular,

(p = 0, 1, **a), then (12) reduces to (4), which converges to a solution of (3).

In this case (13) and (3) are indeed equivalent because N(J*(x,))

= (0).

(b)

From

J*(x)f(x)= + grad(z$f~2(4)

(29)

A NEWTON-WHSON

247

METHOD

it follows that the limit x* of the sequence (12) is a stationary point of

~~ifi2(x),

which by Theorem 1 exists in S(x, , Y), where (8)-(11) are satisfied. Even when (3) has a solution in S(x, , r), the sequence (12) does not

necessarily converge to it, but to a stationary point of Cz=,fi2(x) which may

be a least squares solution of (3), or a saddle point of the function CErfi2(x),

etc.

DEFINITION.

A point x is an isolated point for the function f in the linear

manifold L if there is a neighborhood U of x such that

YEUr-IL,

# x - f(Y) # f(x).

THEOREM 2. Let the function f : En--j Em be in the class c(S(u, Y)). Then

u is an isolated point for f in the linear manifold {u + R( J*(u))}.

PROOF.

Suppose the theorem is false. Then there is a sequence

xk E s(u, Y) n {u + R(]*(u))}

(k = 1, 2, )

(30)

such that

and

xk-+u

f(xk) = f(u)

(A = 1, 2, *.)

(31)

From f E C(S(u, r)) it follows that

11 f(u

zk>

f(u)

JW

Zk

11 d

s(il

zk

II)

11 zk

11

(32)

where,

xk = u + !&

(k = 1, 2, a**)

(33)

t -+ 0.

(34)

and

8(t) +O

as

Combining (31) and (32) it follows that

(35)

The sequence{z,}/11 zI, /I, (k = 1, 2, ***), consists of unit vectors which by (30)

and (33) lie in R( J*(u)), and by (35) and (34) converge to a vector in N( J(u)),

a contradiction.

Q.E.D.

REMARKS

(a) In case the linear manifold {u + R(J*(u))} is the whole

space En, (i.e., N(J(u)) = (O}, i.e., the columns of J(u) are linearly independent), Theorem 2 is due to Rodnyanskii [ 151and was extended to Banach

spaces by S. Kurepa [16], whose idea is used in our proof.

(b) Using Theorem 2, the following can be said about the solution x*

of (13), obtained by (12):

248

BEN-ISRAEL

COROLLARY. The limit X* of the sequence (12) is an isolated zero for the

function 1*(x.+) f( x ) zn

th e Iinear manifold {xx + R(J*(x,))},

unless 1(x*) = 0

the zero matrix.

PROOF. x* is in the interior of S(x, , r), therefore f E C'(S(x, , Y,)) for

some Y* > 0. Assuming the corollary to be false it follows, as in (35), that

where uk = x* + zk , (K = 1,2, *a*), is a sequence in {x* + R(J*(x,))}

converging to x* and such that

0 = J*(x*) f(x*) = 1*(x*) f(uk)*

Excluding the trivial case: J(x.+.) = 0 (see remark below), it follows that

(zk/jl zk: I/}, (K = 1,2, a-.), is a sequence of unit vectors in R(J*(x,)), which

by (36) converges to a vector in N(J*(x,) 1(x*)) = N(J(x,)), a contradicQ.E.D.

tion.

REMARK: The definition of an isolated point is vacuous if the linear

manifold L is zero-dimensional. Thus if J(x*) = 0, then R(j*(x,)) = (0)

and every x E En is a zero of J*(x*) f (x).

EXAMPLES

The following examples were solved by the iterative method:

Xwx+k+l

%ol+k

J+(x,,)

f(Xzw+kh

(37)

where 01> 0 is an integer

and

p = 0, 1, **a

For a = 1: (37) reduces to (12), (Y= 0 yields the modified Newton method

of [17], and for (Y> 2 : 01is the number of iterations with the modified method

[17], (with the Jacobian J(xsa), p = 0, 1, a**), between successive computations of the Jacobian J(xPd) and its generalized inverse. In all the examples

worked out, convergence (up to the desired accuracy) required the smallest

number of iterations for (Y= 1; but often for higher values of (Yless computations (and time) were required on account of computing 1 and /+ only

A NEWTON-RAPHSON

249

METHOD

once in 01iterations. The computations were carried out on Philco 2000. The

method of [18] was used in the subroutine of computing the generalized

inverse J+.

EXAMPLE

1. The system of equations is

Equation (13) is

whose solutions are (0,O) a saddle point of Cfi2(x) and (1, l), (- 1, - 1)

the solutions of f(x) = 0. In applying (37), derivatives were replaced by

differences with Ax = 0.001.

Some results are

a=3

1

1.578143

1.355469

1.287151

1.199107

1.155602

1.118148

2.327834

0.222674

1.139125

1.094615

0.088044

0.543432

0.585672

0.037454

0.292134

6.766002

1.501252

0.429757

1.008390

1.008365

1.000981

1.000980

1.000118

1.000118

1.oooooo

1.oOOooo

fh,+J

0.033649

0.000025

0.016825

0.003924

o.oooooo

0.001962

0.000472

0.000000

0.000236

o.ooooO0

o.oooooo

o.oooooO

Cfi

0.001415

0.000019

o.oooooo

0.000000

~0: + k

%,tk

f(xm+J

0

3.oOOoOo

2.OOOOoO

11 .oooOO

1.ooOOo

5.00000

147.0000

pa + k

%u+!i

Note the sharp improvement for each change of Jacobian (iterations:

1, 4, and 7).

250

BEN-ISRAEL

CY=5

1.050657

1.04343 1

1.001078

3.00107*~

1.000002

1.000002

0.192630

0.007226

0.096289

0.004315

0.000000

0.002157

0.000009

0.000000

0.000004

0.000000

1 0.000000

I 0.000000

~ 0.000023

0.000000

0.000000

--I

3.000000

2.000000

11 .OOooo

1.ooooo

5.00000

147.0000

0.046430

i

I

1.000000

1.oooooo

The Jacobian and its generalized inverse were twice calculated (iterations

6), whereas for 01= 3 they were calculated 3 times.

I,

a = 10

T

pa + k

I_

Cfi"

10

11

12

3.0

2.0

1.003686

1.003559

1.OOOOOS

1.000008

1.oooooo

1.oooooo

11.0

1.0

5.0

0.014516

0.000128

0.007258

0.000032

0.000000

0.000016

0.000000

o.oooooo

o.oooooo

147.0

0.000263

0.000000

0.000000

EXAMPLE

2.

f(x) =

f&q

f&

i f&l

, x2) = x12 + x2 - 2 = 0

) x2) = (X1 - 2)s + $2 - 2 = 0

7x2) = (x1 - 1)s + $2 - 9 = 0.

This is an inconsistent system of equations, whose least squares solutions are

(l.OOOOOO, 1.914854) and (1.000000, - 1.914854). Applying (37) with 01= 1

and exact derivatives, resulted in the sequence:

A NEWTON-RAPHSON

METHOD

251

.10.0ooo0

20.00000

1.oooooo

12.116667

498.0000

462.0000

472.0000

145.8136

145.8136

137.8136

684232.0

61515.80

fkJ

Ef Ax,)

EXAMPLE

1.oooooo

6.209640

3.400059

1.oooooo

37.55963

37.55963

29.55963

3695.223

.P

_-

10.56041

10.56041

25.60407

.-

229.60009

1.oooooo

2.239236

1.oooooo

1.938349

1.oooooo

1.914996

1.oooooo

1.914854

4.014178

4.014178

-3.985822

2.757199

2.757199

-5.242801

2.667212

2.667212

-5.332788

2.666667

2.666667

-5.333333

42.691255

42.666667

48.114030

42.666667

3.

1 x1 , x2) = x1 + x2 - 10 = 0

f(x) = 1;2;xl , x2) = x1x2 - 16 = 0.

The solutions of this system are (2,s) and (8,2). However, applying (12) with

an initial x0 on the line: x1 = xa , results in the whole sequence being on the

same line. Indeed,

so that for

J+(xo) =

and consequently x1 is also on the line:

the sequence (12) will, depending on

(4.057646,4.057646)

or (- 3.313982,

squares solutions on the line: xi = xa

409/rglz-6

41

x,, =

1

+

0

a

la

a2)

( 1 a>

xi = xa . Thus confined to: x1 = xa ,

the choice of x,, , converge to either

- 3.313982). These are the 2 least

.

252

BEN-ISRAEL

ACKNOWLEDGMENT

The author gratefully acknowledges

by the SWOPE Foundation.

the computing

facilities

made available to him

REFERENCES

of Numerical Analysis, McGraw-Hill,

I. A. S. HOUSEHOLDER. Principles

2. A. M. OSTROWSKI. Solution

of Equations and Systems of Equations,

1953.

Academic

Press, New York, 1960.

and Nonlinear Boundary-Value

3. R. BELLMAN AND R. KALABA. Quasilinearization

Problems. American Elsevier, New York ,1965.

4. T. H. HILDEBRANDT

AND L. M. GRAVES. Implicit functions and their differentials

in general analysis. Trans. Amer. Muth. Sot. 29 (1927), 127- 153.

5. L. V. KANTOROVIE.

On Newtons method for functional equations. Dokl. Akad.

Nuuk SSSR (N.S.) 59 (1948) 1237-1240; (Math. Rev. 9-537), 1948.

6. L. V. KANTOROVI?.

Functional analysis and applied mathematics. Uspehi Mat.

Nauk (N.S.) 3, No. 6 (28), (1948) 89-185 (Muth. Rev. lo-380), 1949.

7. L. V. KANTOROVIC.

On Newtons method. Trudy Mat. Inst. Steklov. 28 (1949),

104-144 (Math. Rev. 12-419), 1951.

8. M. ALTMAN.

A generalization

of Newtons method. Bull. Acud. Polon. Sci.

(1955), 189-193.

9. M. L. STEIN. Sufficient conditions for the convergence of Newtons method in

complex Banach spaces. Proc. Amer. Math. Sot. 3 (1952), 8.58-863.

10. R. G. BARTLE. Newtons

method in Banach spaces. Proc. Amer. Muth. Sot. 6

(1955), 827-831.

11. J. SCHRGDER. Uber das Newtonsche Verfahren. Arch. Rut. Mech. Anal. 1 (1957),

154- 180.

12. A. S. HOUSEHOLDER. Theory of Matrices in Numerical Analysis. Blaisdell, 1964.

13. R. PENROSE. A generalized inverse for matrices. PYOC. Combridge Phil. Sot. 51

(1955), 406-413.

14. A. BEN-ISRAEL AND A. CHARNES. Contributions

to the theory of generalized inverses. J. Sot. Indust. Appl. Math. 11 (1963), 667-699.

15. A. M. RODNYANSKII.

On continuous and differentiable

mappings of open sets of

Euclidean space. Mat. Sb. (N.S.) 42 (84), (1957), 179-196.

16. S. KUREPA. Remark on the (F)-differentiable

functions in Banach spaces Glusnik

Mat.-Fiz.

Astronom. Ser. II 14 (1959), 213-217; (Math. Rev. 24-A1030), 1962.

17. A. BEN-ISRAEL. A modified Newton-Raphson

method for the solution of systems

of equations. Israel J. Math. 3 (1965), 94-98.

18. A. BEN-ISRAEL AND S. J. WERSAN. An elimination method for computing the generalized inverse of an arbitrary complex matrix. J. Assoc. Comp. Much. 10 (1963),

532-537.

Você também pode gostar

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeNo EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeNota: 4 de 5 estrelas4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreNo EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreNota: 4 de 5 estrelas4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItNo EverandNever Split the Difference: Negotiating As If Your Life Depended On ItNota: 4.5 de 5 estrelas4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceNo EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceNota: 4 de 5 estrelas4/5 (895)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersNo EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersNota: 4.5 de 5 estrelas4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureNo EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureNota: 4.5 de 5 estrelas4.5/5 (474)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)No EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Nota: 4.5 de 5 estrelas4.5/5 (121)

- The Emperor of All Maladies: A Biography of CancerNo EverandThe Emperor of All Maladies: A Biography of CancerNota: 4.5 de 5 estrelas4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingNo EverandThe Little Book of Hygge: Danish Secrets to Happy LivingNota: 3.5 de 5 estrelas3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyNo EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyNota: 3.5 de 5 estrelas3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)No EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Nota: 4 de 5 estrelas4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaNo EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaNota: 4.5 de 5 estrelas4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryNo EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryNota: 3.5 de 5 estrelas3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnNo EverandTeam of Rivals: The Political Genius of Abraham LincolnNota: 4.5 de 5 estrelas4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaNo EverandThe Unwinding: An Inner History of the New AmericaNota: 4 de 5 estrelas4/5 (45)

- Theory of Mechanisms and Machines Ghosh and Mallik PDFDocumento5 páginasTheory of Mechanisms and Machines Ghosh and Mallik PDFsujith reddy16% (31)

- Ondokuz Mayis University International Student ExamDocumento20 páginasOndokuz Mayis University International Student Examhyacinthe charles hlannonAinda não há avaliações

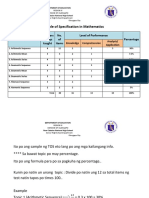

- TOS SampleDocumento3 páginasTOS SampleSusan T. Laxamana100% (1)

- M Olympiad SampleDocumento16 páginasM Olympiad Samplepinkyvennela100% (1)

- A. G. Kurosh - Theory of Groups, Volume Two-Chelsea Pub. Co. (1960) PDFDocumento309 páginasA. G. Kurosh - Theory of Groups, Volume Two-Chelsea Pub. Co. (1960) PDFJames100% (2)

- Redesigned Refrigeration2020 YearDocumento30 páginasRedesigned Refrigeration2020 Yearsujith reddyAinda não há avaliações

- Displacement Analysis of The RCRCR FiveDocumento2 páginasDisplacement Analysis of The RCRCR Fivesujith reddyAinda não há avaliações

- Kinematic Displacement Analysis of A Double Cardon Joint DrivelineDocumento9 páginasKinematic Displacement Analysis of A Double Cardon Joint Drivelinesujith reddyAinda não há avaliações

- Lecture 1 of 5Documento30 páginasLecture 1 of 5awangpaker xxAinda não há avaliações

- Convergence Tests PDFDocumento35 páginasConvergence Tests PDFPraeMaiSamartAinda não há avaliações

- Unit 1 - ANALYTIC GEOMETRY PDFDocumento11 páginasUnit 1 - ANALYTIC GEOMETRY PDFjoshua padsAinda não há avaliações

- Class Xii Maths Marking Scheme KV NeristDocumento13 páginasClass Xii Maths Marking Scheme KV NeristBot1234Ainda não há avaliações

- Form2 MathematicsDocumento4 páginasForm2 MathematicsMichael HarrilalAinda não há avaliações

- Similar Triangle PatternsDocumento11 páginasSimilar Triangle PatternsChanuAinda não há avaliações

- Graduate Functional Analysis Problem Solutions WDocumento17 páginasGraduate Functional Analysis Problem Solutions WLuis ZanxexAinda não há avaliações

- 1802 07880Documento12 páginas1802 07880Jack Ignacio NahmíasAinda não há avaliações

- Derivation of Normal DistributionDocumento5 páginasDerivation of Normal DistributionStevenAinda não há avaliações

- Chapter 1Documento80 páginasChapter 1eshwariAinda não há avaliações

- Herstein: Topics in Algebra - Normal Subgroups and Quotient SubgroupsDocumento2 páginasHerstein: Topics in Algebra - Normal Subgroups and Quotient SubgroupsYukiAinda não há avaliações

- KutascatterplotsDocumento4 páginasKutascatterplotsapi-327041524Ainda não há avaliações

- Differential Equations Tutorial Sheet 1Documento2 páginasDifferential Equations Tutorial Sheet 1AV YogeshAinda não há avaliações

- GRADE 10 SESSION 5 Coordinate Plane Geometry 1Documento59 páginasGRADE 10 SESSION 5 Coordinate Plane Geometry 1Gilbert MadinoAinda não há avaliações

- 2a Notes Permutations CombinationsDocumento12 páginas2a Notes Permutations CombinationsRajAinda não há avaliações

- GOY AL Brothers Prakashan: Important Terms, Definitions and ResultsDocumento7 páginasGOY AL Brothers Prakashan: Important Terms, Definitions and ResultsVijay Vikram SinghAinda não há avaliações

- S5 09-10 Paper I Mock Exam SolutionDocumento16 páginasS5 09-10 Paper I Mock Exam Solutionym5c2324Ainda não há avaliações

- Maa HL Exercises 5.1-5.4 Solutions Derivatives Compiled By: Christos Nikolaidis Calculation of Derivatives A. Practice Questions 1Documento12 páginasMaa HL Exercises 5.1-5.4 Solutions Derivatives Compiled By: Christos Nikolaidis Calculation of Derivatives A. Practice Questions 1gulia grogersAinda não há avaliações

- Reteach: Solving Inequalities by Adding or SubtractingDocumento4 páginasReteach: Solving Inequalities by Adding or SubtractingHala EidAinda não há avaliações

- Part-A: Note: in This Worksheet Symbols Used PDocumento6 páginasPart-A: Note: in This Worksheet Symbols Used PKritikaAinda não há avaliações

- Math (Safal)Documento3 páginasMath (Safal)AdowAinda não há avaliações

- Finite Element Method Chapter 4 - The DSMDocumento17 páginasFinite Element Method Chapter 4 - The DSMsteven_gogAinda não há avaliações

- Hermite DeltaDocumento43 páginasHermite Deltalevi_santosAinda não há avaliações

- (Applications of Mathematics,) Mariano Giaquinta, Stefan Hildebrandt - Calculus of Variations II. The Hamilton Formalism - The Hamiltonian Formalism - v. 2 (2006, Springer)Documento684 páginas(Applications of Mathematics,) Mariano Giaquinta, Stefan Hildebrandt - Calculus of Variations II. The Hamilton Formalism - The Hamiltonian Formalism - v. 2 (2006, Springer)Franklin Silva Yaranga50% (2)

- Nonlinear Dynamical Systems and Control - A Lyapunov Based ApproachDocumento4 páginasNonlinear Dynamical Systems and Control - A Lyapunov Based Approachosnarf706Ainda não há avaliações

- Math9 - q1 - Mod2 - Solving Quadratic Inequality - v3-1Documento43 páginasMath9 - q1 - Mod2 - Solving Quadratic Inequality - v3-1Regina Minguez Sabanal75% (4)