Proceedings of the 34th Hawaii International Conference on System Sciences - 2001

An Intelligent Clustering Forecasting System based on

Change-Point Detection and Artificial Neural Networks:

Application to Financial Economics

Kyong Joo Oh* and Ingoo Han*

*Graduate School of Management,

Korea Advanced Institute of Science and Technology

Abstract

This article proposes a new clustering forecasting system that integrates change-point detection and artificial neural networks. The basic concept of the proposed model is to obtain intervals divided by change points, to identify them as change-point groups, and to involve them in the forecasting model. The proposed models consist of two stages. The first stage, the clustering neural network modeling stage, detects successive change points in the dataset and forecasts the change-point group with backpropagation neural networks (BPN). In this stage, three change-point detection methods are applied and compared: (1) the parametric method, (2) the nonparametric approach, and (3) the model-based approach. The second stage forecasts the final output with BPN. Through an application to financial economics, we compare the proposed models with a neural network model alone and, in addition, determine which of the three change-point detection methods performs better. The article thus examines the predictability of the integrated neural network model based on change-point detection.

1. Introduction

The prediction of structural changes in financial and economic time series is one of the most important forecasting problems for economists. No factory is built and no inventory acquired without some prediction that a serious depression is not going to begin within the next few months. Policies based on the prediction of change points in business cycles can have stabilizing effects, while ill-timed fiscal and monetary policies may have unintended destabilizing ones. For instance, government expenditures are likely to contribute more to excess demand and inflationary pressures during a business expansion than during a business contraction. Therefore, the detection and estimation of a structural or parametric change point in forecasting is an important and difficult problem.

There have been many exciting developments in the theory of change-point detection. New promising directions of research have emerged, and traditional trends have flourished anew. Furthermore, there is a large variety of change-point detection problems in time series and dynamical systems. The literature on this topic is growing rapidly, mainly due to applications in engineering, financial mathematics and econometrics. In these applications, the problem is known under different headings, such as quality control, failure detection and shock detection. These problems have given rise to various change-point models, which are classified as likelihood ratio tests [6, 21, 12, 40, 41, 42, 17, 48, 49, 50], nonparametric approaches [4, 30, 31, 32, 33, 35, 36], and linear model approaches [38, 39, 1, 9, 43, 18, 19, 7, 15, 16]. Csorgo and Horvath [11] provide a concise overview and rigorous mathematical treatment of methods for change-point detection and use a number of datasets to illustrate the effectiveness of the various techniques.

Most previous research on change-point detection focuses on finding unknown change points in the past, not on forecasting the future [47, 26, 51]. We propose a neural network forecasting model that uses structural change on the basis of this theoretical background, and compare a neural network model alone with the proposed models. In the proposed model, change-point detection becomes the basis of classification and plays a core role in model building based on time series segmentation.

The performance of the proposed model is evaluated in the context of an integrated neural network model which consists of two stages. The first stage obtains intervals divided by change points, identifies them as change-point groups, and forecasts the group output with backpropagation neural networks (BPN). In this stage, the various change-point detection methods are applied and compared: (1) the parametric method, (2) the nonparametric approach, and (3) the linear model approach. The second stage forecasts the output with BPN. The study then examines the predictability of the proposed models. To explore the

0-7695-0981-9/01 $10.00 (c) 2001 IEEE 1


predictability, we divided the sample data into training data over one period and testing data over the next period. The predictability is examined using the root mean squared error (RMSE), the mean absolute error (MAE) and the mean absolute percentage error (MAPE) in the final stage.

In Section 2, we review the various change-point detection methods. Section 3 describes the proposed model in detail. Section 4 reports the results of the application to interest rate forecasting. Finally, concluding remarks are presented in Section 5.

2. Change-Point Detection Methods

Following the classification by Csorgo and Horvath [11], three major change-point detection methods are used and compared: (1) the likelihood ratio test, a parametric method based on the likelihood approach, (2) the Pettitt test, a nonparametric approach based on a Mann-Whitney type statistic, and (3) the Chow test, a linear model approach. The Pettitt test and the Chow test are selected for the nonparametric approach and the linear model approach respectively, since they are frequently covered in textbooks and offered by statistical packages.

2.1. Likelihood Ratio Test (LRT): A Parametric Method

We assume that $X_1, X_2, \ldots, X_n$ are independent normal observations with parameters $(\mu_1, \sigma^2), (\mu_2, \sigma^2), \ldots, (\mu_n, \sigma^2)$. Under $H_0$, $\mu_1 = \mu_2 = \cdots = \mu_n$, and under the alternative hypothesis there is an integer $k$ such that $\mu_1 = \mu_2 = \cdots = \mu_k \neq \mu_{k+1} = \cdots = \mu_n$. The variance is unknown. If it is further assumed that the variance as well as the mean may change at an unknown time, then the maximally selected likelihood ratio test is $\max_{1 \le k \le n} L_k$ such that

$$L_k = -2 \log \Lambda_k = \{\, n \log \hat{\sigma}_n^2 - k \log \hat{\sigma}_k^2 - (n-k) \log \hat{\sigma}_{n-k}^2 \,\} \qquad (1)$$

where

$$\hat{\sigma}_n^2 = \frac{1}{n} \sum_{i=1}^{n} (X_i - \bar{X}_n)^2,$$

$$\hat{\sigma}_k^2 = \frac{1}{n} \left\{ \sum_{i=1}^{k} (X_i - \bar{X}_k)^2 + \sum_{i=k+1}^{n} (X_i - \tilde{X}_{n-k})^2 \right\},$$

$$\hat{\sigma}_{n-k}^2 = \frac{1}{n-k} \sum_{i=k+1}^{n} (X_i - \tilde{X}_{n-k})^2,$$

$$\bar{X}_n = \frac{1}{n} \sum_{i=1}^{n} X_i, \qquad \bar{X}_k = \frac{1}{k} \sum_{i=1}^{k} X_i, \qquad \tilde{X}_{n-k} = \frac{1}{n-k} \sum_{i=k+1}^{n} X_i.$$

The distribution of $\max_{1 \le k \le n} L_k$ is approximated by the chi-square distribution for large sample sizes [11].

2.2. The Pettitt Test: A Nonparametric Approach

Consider a sequence of random variables $X_1, X_2, \ldots, X_T$. The sequence is said to have a change point at $\tau$ if $X_t$ for $t = 1, 2, \ldots, \tau$ have a common distribution function $F_1(x)$, $X_t$ for $t = \tau+1, \tau+2, \ldots, T$ have a common distribution function $F_2(x)$, and $F_1(x) \neq F_2(x)$. We consider the problem of testing the null hypothesis of no change, $H_0: \tau = T$, against the alternative hypothesis of change, $H_A: 1 \le \tau < T$, using a nonparametric statistic.

An appealing nonparametric test to detect a change is a version of the Mann-Whitney two-sample test. A Mann-Whitney type statistic has a remarkably stable distribution and provides a robust test of the change point that is resistant to outliers [37]. Let

$$D_{ij} = \mathrm{sgn}(X_i - X_j) \qquad (2)$$

where $\mathrm{sgn}(x) = 1$ if $x > 0$, $0$ if $x = 0$, and $-1$ if $x < 0$, and consider

$$U_{t,T} = \sum_{i=1}^{t} \sum_{j=t+1}^{T} D_{ij}. \qquad (3)$$

The statistic $U_{t,T}$ is equivalent to a Mann-Whitney statistic for testing that the two samples $X_1, \ldots, X_t$ and $X_{t+1}, \ldots, X_T$ come from the same population. The statistic $U_{t,T}$ is then considered for values of $t$ with $1 \le t < T$. For the test of $H_0$: no change against $H_A$: change, we propose the use of the statistic

$$K_T = \max_{1 \le t < T} |U_{t,T}|. \qquad (4)$$

For $T \to \infty$, the significance probability associated with an observed value $k$ of $K_T$ is approximated by $2 \exp\{-6k^2/(T^2 + T^3)\}$.

2.3. The Chow Test: A Linear Model Approach

Chow [7] suggested a test for structural breaks that applies to stationary variables and a single break. Consider the linear regression model with $k$ variables:

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_k X_k + \varepsilon \qquad (5)$$

where $Y$ is the value of the response variable in the $i$th observation; $\beta_0, \beta_1, \beta_2, \ldots, \beta_k$ are parameters; $X_1, X_2, \ldots, X_k$ are the values of the independent variables in


the $i$th observation; and $\varepsilon$ is an independent and identically distributed random error term with mean $E(\varepsilon) = 0$ and variance $\mathrm{Var}(\varepsilon) = \sigma^2$ under the normal distribution, for sample size $n$.

Now consider the two linear regressions for two subsets of the data modeled separately,

$$Y = \beta_{10} + \beta_{11} X_1 + \beta_{12} X_2 + \cdots + \beta_{1k} X_k + \varepsilon_1 \qquad (6)$$

$$Y = \beta_{20} + \beta_{21} X_1 + \beta_{22} X_2 + \cdots + \beta_{2k} X_k + \varepsilon_2 \qquad (7)$$

where the number of observations in the first set is $n_1$ and the number of observations in the second set is $n_2$. The Chow test statistic is used to test the null hypothesis $H_0: \beta_{10} = \beta_{20}, \beta_{11} = \beta_{21}, \beta_{12} = \beta_{22}, \ldots, \beta_{1k} = \beta_{2k}$, conditional on the same error variance $\mathrm{Var}(\varepsilon_1) = \mathrm{Var}(\varepsilon_2)$.

The Chow test statistic is computed using three residual sums of squares:

$$F_{chow} = \frac{\left( \sum_{i=1}^{n} \hat{\varepsilon}_i^2 - \sum_{i=1}^{n_1} \hat{\varepsilon}_{1i}^2 - \sum_{i=1}^{n_2} \hat{\varepsilon}_{2i}^2 \right) / k}{\left( \sum_{i=1}^{n_1} \hat{\varepsilon}_{1i}^2 + \sum_{i=1}^{n_2} \hat{\varepsilon}_{2i}^2 \right) / (n_1 + n_2 - 2k)} \qquad (8)$$

where the mark ^ denotes the predicted value obtained from the model. Under the null hypothesis, the Chow test statistic has an $F$-distribution with $k$ and $(n_1 + n_2 - 2k)$ degrees of freedom.

The above-mentioned tests detect a possible change point in a time-ordered dataset. Once a change point is detected, the dataset is divided into two intervals. The intervals before and after the change point form groups that are homogeneous internally and heterogeneous with respect to each other. This process is a fundamental part of the binary segmentation method [45] explained in Section 3.

3. Model Specification

In order to build a forecasting model based on structural change, we need to integrate change-point detection methods and artificial neural networks (ANN). The advantages of combining multiple techniques to yield synergism for discovery and prediction have been widely recognized [14, 22, 23]. The proposed model consists of two stages: (1) the change-point-assisted clustering (CPC) stage and (2) the final output forecasting (FOF) stage. ANN is used as a classification tool in CPC and as a forecasting tool in FOF.

The CPC stage

In this stage, we build the change-point-assisted neural network model for the intervals obtained with the various change-point detection methods.

Step 1: Perform the change-point detection

Let $x_{it}$ be the $t$th time series observation of the $i$th input variable, where $i = 1, \ldots, m$, and let $y_t$ be the $t$th time series observation of the output variable, where $t = 1, \ldots, n$. Multiple change points are obtained with the binary segmentation method [45]. With $H_0$ as in Section 2, under the alternative hypothesis we now assume that there are $R$ changes in the parameters, where $R$ is a known integer. The alternative can be formulated as

$H_A^{(R)}$: there are integers $1 < k_1 < k_2 < \cdots < k_R < n$ such that $\theta_1 = \cdots = \theta_{k_1} \neq \theta_{k_1+1} = \cdots = \theta_{k_2} \neq \theta_{k_2+1} = \cdots = \theta_{k_R} \neq \theta_{k_R+1} = \cdots = \theta_n$ for the parameters $\theta$.

We note that the test statistics remain consistent against $H_A^{(R)}$ as well, despite the fact that they were derived under the assumption that $R = 1$. Without loss of generality, the tests of Section 2 therefore extend to testing no change against the $R$-changes alternative $H_A^{(R)}$.

Vostrikova [45] suggested a binary segmentation method as follows. First, apply the change-point detection test. If $H_0$ is rejected, then find $k_1$, the time at which the maximum of Equation (1), (4) or (8) is reached. Next, divide the random sample into two subsamples $\{x_t : 1 \le t \le k_1\}$ and $\{x_t : k_1 < t \le n\}$, where $x$ should be selected among all input variables such that it is highly correlated with the desired output, and test both subsamples for further changes. The number of change points, $R$, should be determined in the view of field experts. Thus, one continues this segmentation procedure until the expert opinion is satisfied.

This process acts as a clustering that constructs groups while maintaining the time sequence. In this respect, Step 1 is distinguished from other clustering methods, such as the k-means nearest neighbor method and the hierarchical clustering method, that classify data samples by the Euclidean distance between cases without considering the time sequence. Therefore, this step may be considered a time-based clustering model.

Step 2: Train the groups with BPN

The proposed model is based on ANN, where the neuron input path ($i$) has a signal on it ($x_{it}$) and the strength of the path is characterized by a weight ($w_i$). The neuron is modeled as summing the path weight times


the input signal over all paths and adding the node bias ($\theta$). The output ($y_t$) is usually a sigmoid-shaped logistic function, expressed as follows:

$$y_t = f(x_t) = \frac{1}{1 + \exp\left\{ -\left( \sum_{i=1}^{m} w_i x_{it} + \theta \right) \right\}} \quad \text{for } t = 1, 2, \ldots, n \qquad (9)$$

Note that this S-shaped function reduces the effect of extreme input values on the performance of the network. Let

$$D_t = \{ x_{it} : k_{h-1} < t \le k_h \} = h, \quad h = 1, \ldots, R+1,$$

where $k_0 = 1$ and $k_{R+1} = n$. Once the ANN relating $x_{it}$ and $D_t$ has been established, we consider predictors of the future evolution of $D_t$. A predictor is simply a rule for obtaining an estimate of $D_{t+1}$ for the next observation. The prediction $\hat{D}_{t+1}$ of $D_{t+1}$ can be obtained by some extrapolation of the observations of the input variables $x_{1t}, x_{2t}, \ldots, x_{mt}$ through the chosen ANN, that is to say:

$$\hat{D}_{t+1} = f(x_{1t}, x_{2t}, \ldots, x_{mt}) \qquad (10)$$

Then $x_{1t}, x_{2t}, \ldots, x_{mt}$ are adjusted to $x_{1t}^a, x_{2t}^a, \ldots, x_{mt}^a$ according to $\hat{D}_{t+1}$. Learning occurs through the adjustment of the path weights and node biases. The most common method used for the adjustment is BPN, which is known to be a useful tool in many applications such as classification, forecasting, and pattern recognition [34].

The significant intervals found in Step 1 are grouped to detect the regularities hidden in the desired output. Such groups represent a set of meaningful trends in interest rates. Since those trends help to find regularity among the related output values more clearly, the neural network model can generalize better to unknown data. After Step 1 detects the appropriate groups hidden in the time series data, BPN is applied to the input data samples at time $t$ with the group outputs for $t+1$ given by Step 1. In this sense, the CPC stage is a neural network model that is trained to find an appropriate group for each given sample.

The FOF stage

When generating ANN predictions, locally adjusted nonlinear predictions are usually employed for each group $h$:

$$\hat{y}_{t+1} = f_h(x_{1t}^a, x_{2t}^a, \ldots, x_{mt}^a), \quad h = 1, \ldots, R+1 \qquad (11)$$

Consider a relationship between $y_t$ and $x_{it}$ in which the function $E(y \mid x) = f(x; \theta)$ is obtained by classifying together different responses over different intervals based on BPN (the $h$th row applies to samples in group $h$):

$$y_{t+1} = \begin{cases} f_1(x_{1t}^a, \ldots, x_{mt}^a; \theta_1), & D_1 = 1 \\ f_2(x_{1t}^a, \ldots, x_{mt}^a; \theta_2), & D_2 = 2 \\ \quad \vdots \\ f_{R+1}(x_{1t}^a, \ldots, x_{mt}^a; \theta_{R+1}), & D_{R+1} = R+1 \end{cases} \qquad (12)$$

The variances of the estimated means are denoted by $V_{pure}$ and $V_{prop}$, which indicate the variances produced by the pure ANN alone and by the proposed model respectively. Then the following holds:

$$V_{pure} = \frac{1}{n-1} \sum_{h=1}^{R+1} \sum_{i=1}^{n_h} (y_{hi} - \bar{y})^2 \approx \sum_{h=1}^{R+1} \frac{n_h}{n} S_h^2 + \sum_{h=1}^{R+1} \frac{n_h}{n} (\bar{y}_h - \bar{y})^2 = V_{prop} + \sum_{h=1}^{R+1} \frac{n_h}{n} (\bar{y}_h - \bar{y})^2 \qquad (13)$$

where

$$S_h^2 = \frac{1}{n_h - 1} \sum_{i=1}^{n_h} (y_{hi} - \bar{y}_h)^2, \qquad \bar{y}_h = \frac{1}{n_h} \sum_{i=1}^{n_h} y_{hi} \quad \text{for } h = 1, \ldots, R+1,$$

$$\bar{y} = \frac{1}{n} \sum_{h=1}^{R+1} n_h \bar{y}_h, \qquad n = \sum_{h=1}^{R+1} n_h,$$

and $n_h$ is the number of samples in the $h$th group. The approximation in (13) holds with equality if terms in $1/n_h$ are negligible, and hence also terms in $1/n$. Since the last term in (13) is nonnegative,

$$V_{pure} \ge V_{prop}. \qquad (14)$$

This is a very useful property for reducing the prediction error. In the model, the number of change-point groups is an important factor in improving the performance. Inequality (14) is always guaranteed if the time series segmentation is optimally established. The forecasting error may be reduced by making the subsampling units within groups homogeneous and the variation between groups heterogeneous [10]. Thus, the number of change-point groups should be optimally determined. To do this, we perform a heuristic experiment in which we vary the number of groups. Subsequently, we demonstrate that inequality (14) holds for the prediction of chaotic financial data in this article.

FOF is built by applying BPN to each group. FOF is a mapping function between the input sample and the corresponding desired output. Once FOF is built, the sample can be used to forecast the target value. This stage is needed to evaluate and compare the predictability of the proposed integrated models across the various change-point detection methods.
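The binary segmentation of Step 1, combined with the Pettitt statistic of Section 2.2, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the significance threshold `alpha`, the minimum segment length `min_size`, and the cap `max_groups` (standing in for the number of groups chosen by field experts) are all introduced here for illustration, as is the greedy rule of splitting the most significant segment first.

```python
import math


def sgn(v):
    """Sign function of Eq. (2): 1, 0 or -1."""
    return (v > 0) - (v < 0)


def pettitt_test(x):
    """Return (t_hat, K_T, p) for the Pettitt test on a sequence x.

    t_hat is the size of the first segment at which |U_{t,T}| peaks.
    """
    x = list(x)
    T = len(x)
    if T < 2:
        raise ValueError("need at least two observations")
    u, K, t_hat = 0, 0, 1
    for t in range(1, T):
        # U_{t,T} = U_{t-1,T} + sum_j sgn(x_t - x_j), equivalent to Eq. (3)
        # because the terms sgn(x_i - x_j) with i, j <= t cancel in pairs.
        u += sum(sgn(x[t - 1] - xj) for xj in x)
        if abs(u) > K:
            K, t_hat = abs(u), t
    # Approximate significance probability quoted in Section 2.2.
    p = min(1.0, 2.0 * math.exp(-6.0 * K ** 2 / (T ** 3 + T ** 2)))
    return t_hat, K, p


def binary_segmentation(x, alpha=0.05, min_size=10, max_groups=4):
    """Greedy binary segmentation; returns the interior change points.

    alpha, min_size and max_groups are illustrative stopping rules
    (assumptions), replacing the expert judgment described in the text.
    """
    x = list(x)
    segments = [(0, len(x))]  # half-open intervals [start, end)
    while len(segments) < max_groups:
        best = None
        for s, e in segments:
            if e - s < 2 * min_size:
                continue  # too short to split into two valid groups
            t, _, p = pettitt_test(x[s:e])
            if p < alpha and min_size <= t <= (e - s) - min_size:
                if best is None or p < best[0]:
                    best = (p, s, e, s + t)
        if best is None:
            break  # no remaining segment shows a significant change
        _, s, e, cut = best
        segments.remove((s, e))
        segments += [(s, cut), (cut, e)]
        segments.sort()
    return [e for _, e in segments[:-1]]
```

On a toy series with level shifts after observations 30 and 60, for example `[0.0] * 30 + [5.0] * 30 + [10.0] * 30`, calling `binary_segmentation(series, max_groups=3)` returns the interior change points `[30, 60]`. The resulting groups keep the time order of the observations, which is the property that distinguishes Step 1 from distance-based clustering.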


4. Application to the U.S. Interest Rates clustering group based on change-point detection while the

Financial analysts and econometricians have frequently next learning is occurred in the FOF stage that forecasts

used piecewise-linear models which also include change- the final output. The models, labeled LRT_NN, PET_NN

point detection. They are known as models with structural and CHOW_NN, indicate that the LRT, the Pettitt test and

breaks in economic literature. In these models, the the Chow test are used respectively as a change-point

parameters are assumed to shift typically once detection method. For validation, four learning models are

during a given sample period and the goal is to estimate also compared.

the two sets of parameters as well as the change point or

structural break. However, this study has a focus on the 16

forecast for the future, not on the finding of unknown 14

changes points for the past, with the assumption that the 12

multiple change points may have occurred. 10

In this study, the proposed model is applied to interest 8

rates forecasting which is one of the most closely watched 6

variables in the financial economy. In general, the 4

movement of interest rates has a series of change points

which occur because of the monetary policy of the

2

government [13, 44, 28, 8, 25, 3]. The case study is 0

Jan-61

Jan-62

Jan-63

Jan-64

Jan-65

Jan-66

Jan-67

Jan-68

Jan-69

Jan-70

Jan-71

Jan-72

Jan-73

Jan-74

Jan-75

Jan-76

Jan-77

Jan-78

Jan-79

Jan-80

Jan-81

Jan-82

Jan-83

Jan-84

Jan-85

Jan-86

Jan-87

Jan-88

Jan-89

Jan-90

Jan-91

Jan-92

Jan-93

Jan-94

Jan-95

Jan-96

Jan-97

Jan-98

Jan-99

conducted for monthly yields on the Treasury bill rate of 1

years maturity in the U.S. Figure 1. Yield of US Treasury bills with a

The input variables used in this study are M2, consumer maturity of 1 year from Jan. 1960 to May 1999

price index, expected real inflation rates and industrial

production index. They are used in both the CPC stage and The change-point detection methods are applied to the

the FOF stage. The lists of variables used in this study are interest rate dataset in the training phase. It is known that

summarized in Table 1. By the previous study, input interest rates at time t are more important than

variables in Table 1 are those which were significant in fundamental economic variables in determining interest

interest rates forecasting [29]. To obtain stationary and rates at time t + 1 [24]. Thus, we apply LRT, the Pettitt

thereby facilitate forecast, the input data were transformed test, and the Chow test to interest rates at time t in the

by a logarithm and a difference operation. Moreover, the training phase. The heuristic experiment is performed as

resulting variables were standardized to eliminate the the number of group is changed. The number of group is

effects of units. varied until the minimum error rate is found for the

prediction values, which is based on MAPE. This metric is

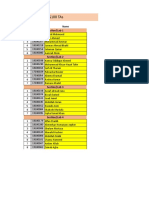

Table 1. Description of Variables. chosen since it is commonly used [5] and is highly robust

Variable Description Attribute [2, 27]. The performance results are observed by the

Name change of the number of group. Table 2 indicates these

TBILL Treasury Bill with 1 years Output results. Four groups are selected as an optimal number of

maturity groups. Furthermore, the Pettitt test shows the best

M2 Money Stock Input

performance. The Pettitt test is recommended in the

CPI Consumer Price Index Input

forecast of chaotic time series since its error rates in Table

ERIR Expected Real Interest Rates Input

IPI Industrial Production Index Input

2 have more stable results than other tests. Nonparametric

statistical property of the Pettitt test is suitable match for a

neural network model that is a kind of nonparametric

The training phase included observations from January

method [46]. On the average, the Chow test has lower

1961 to August 1991 while the testing phase runs from

September 1991 to May 1999. The interest rate data are performance since it has strong constraints that the

observations are required to constitute the linear regression,

presented in Figure 1. Figure 1 shows that the movement

in addition that they are random samples with the normal

of interest rates is highly fluctuated during the last forty

distribution, which is also assumption of LRT. If the given

years.

dataset is not suitable for the regression model, it is natural

The study employed four neural network models. One

that the application of the Chow test may bring about low

model, labeled Pure_NN, include input variables at time

performance.

t to generate a forecast for t + 1 . The input variables are

To highlight the performance due to various models, the

also M2, CPI, ERIR and IPI. The second type has the two-

actual values of Treasury bill rates and their predicted

staged learning model mentioned in section 3. The first

values are shown in Figure 2 when the dataset is

learning is occurred in the CPC stage that forecasts the

segmented into four groups. The predicted values of the


pure BPN model (i.e. Pure_NN) deviate from the actual values in some intervals. Numerical values of the performance metrics for each predictive model are given in Table 3. Figure 3 presents histograms of the RMSE, MAE and MAPE of the predictions for each learning model. According to RMSE, MAE and MAPE, LRT_NN, PET_NN and CHOW_NN are superior to Pure_NN.

Table 2. Performance results (MAPE) according to the number of groups.

Number of groups   Chow Test   LRT     Pettitt Test   Average
Two groups         5.443       3.765   3.820          4.342
Four groups        4.392       3.811   3.746          3.983
Eight groups       3.882       6.695   3.985          4.854

Figure 2. Actual vs predicted values due to various models for TBILL (series: Actual, Pure_NN, LRT_NN, PET_NN, CHOW_NN; horizontal axis: Sep-91 to Mar-99; vertical axis: 2.5 to 7.5).

Table 3. Performance results in the case of the U.S. Treasury bill rate forecasting based on RMSE, MAE and MAPE.

Model      RMSE     MAE      MAPE (%)
Pure_NN    0.3119   0.2506   5.969
LRT_NN     0.2224   0.1738   3.811
PET_NN     0.2416   0.1745   3.746
CHOW_NN    0.2557   0.1980   4.392

For the dataset segmented into four groups, we use the pairwise t-test to examine whether the predicted values of the models differ according to the absolute percentage error (APE). Since the forecasts are not statistically independent and not always normally distributed, we compare the APEs of the forecasts using the pairwise t-test. Where sample sizes are reasonably large, this test is robust to the distribution of the data, to nonhomogeneity of variances, and to statistical dependence [20]. Table 4 shows the t-values and p-values obtained when the prediction accuracy of the method in each row is compared with that of the method in each column. In most comparisons, the proposed models using change-point detection perform significantly better than the pure BPN model at the 1% or 5% significance level. This means that the change-point-assisted clustering model plays a major role in improving the performance.

Figure 3. Histogram of RMSE, MAE and MAPE resulting from forecasts of TBILL (series: Pure_NN, LRT_NN, PET_NN, CHOW_NN; vertical axis: 0 to 0.35).

Table 4. Pairwise t-tests for the differences in residuals for US interest rate prediction based on the absolute percentage error (APE), with the significance level in parentheses.

Model      LRT_NN          CHOW_NN           Pure_NN
PET_NN     0.31 (0.756)    2.60 (0.010) *    3.43 (0.000) **
LRT_NN                     2.58 (0.011) *    3.58 (0.000) **
CHOW_NN                                      2.55 (0.012) *

** Significant at 1%; * Significant at 5%

In summary, the proposed models turn out to have a high potential in interest rate forecasting. This is attributable to the fact that they categorize the input data samples into homogeneous groups and extract regularities from each homogeneous group. Therefore, the proposed models cope with noise and irregularities more efficiently than the pure BPN model. Furthermore, for chaotic time series data, the Pettitt test is recommended since it is free from distributional assumptions and constraints.

5. Concluding Remarks

The basic concept of the proposed model is to obtain significant intervals by change-point detection, to identify them as change-point groups, and to include them in the final output forecasting. In order to validate its performance, we propose an integrated neural network model which consists of two stages. In the first stage, we


find change points to construct homogeneous groups and then build the change-point-assisted clustering model to predict the group outputs. In the next stage, we apply BPN to forecast the final output.

Experimental results show that the proposed models perform significantly better than the pure BPN model at the 1% or 5% significance level, which implies the high potential of involving change-point detection in the model. The proposed models are demonstrated to be useful intelligent data analysis methods based on the concept of change-point detection. Finally, in the case study, we have shown that the proposed models improve the predictability of interest rates significantly.

If further studies focus on optimizing the number of change points in terms of performance, the proposed models have promising potential for further improvement. In the final stage of the model, intelligent approaches other than BPN can be used to forecast the final output. In addition, the proposed models may be applied to other application fields. By extending these points, future research is expected to provide improved models with superior performance.

References

[1] D. Andrews, Heteroskedasticity and autocorrelation consistent covariance matrix estimation, Econometrica, 59, pp.817-858, 1991.
[2] J.S. Armstrong and F. Collopy, Error measures for generalizing about forecasting methods: Empirical comparisons, International Journal of Forecasting, 8, pp.69-80, 1992.
[3] F.C. Bagliano and C.A. Favero, Information from financial markets and VAR measures of monetary policy, European Economic Review, 43, pp.825-837, 1999.
[4] B.E. Brodsky and B.S. Darkhovsky, Nonparametric Methods in Change-point Problems, Dordrecht: Kluwer, 1993.
[5] R. Carbone and J.S. Armstrong, Evaluation of extrapolative forecasting methods: Results of academicians and practitioners, Journal of Forecasting, 1, pp.215-217, 1982.
[6] H. Chernoff and S. Zacks, Estimating the current mean of a normal distribution which is subjected to changes in time, Ann Math Statist, 35, pp.999-1018, 1964.
[7] G.C. Chow, Tests of equality between sets of coefficients in two linear regressions, Econometrica, 28, pp.591-605, 1960.
[8] L.J. Christiano, M. Eichenbaum, and C.L. Evans, The effects of monetary policy shocks: Evidence from the flow of funds, Review of Economics and Statistics, 78, pp.16-34, 1996.
[9] C.S.J. Chu and H. White, A direct test for changing trend, J Business Economic Statist, 10, pp.289-299, 1992.
[10] W.G. Cochran, Sampling techniques, New York: John Wiley & Sons, 1977.
[11] M. Csorgo and L. Horvath, Limit Theorems in Change-Point Analysis, New York: John Wiley & Sons, 1997.
[12] L.A. Gardner, Jr., On detecting changes in the mean of normal variates, Ann Math Statist, 40, pp.116-126, 1969.
[13] D. Gordon and E.M. Leeper, The dynamic impacts of monetary policy: An exercise in tentative identification, Journal of Political Economy, 102, pp.1228-1247, 1994.
[14] J.M. Gottman, Time series analysis, New York: Cambridge University Press, 1981.
[15] D.L. Hawkins, A test for a change-point in a parametric model based on a maximal Wald-type statistic, Sankhya Ser (A), 49, pp.368-376, 1987.
[16] D.L. Hawkins, An U-I approach to retrospective testing for shift parameters in a linear model, Comm Statist - Theory Method, 18, pp.3117-3134, 1989.
[17] D.M. Hawkins, Testing a sequence of observations for a shift in location, J Amer Statist Assoc, 72, pp.180-186, 1977.
[18] D.V. Hinkley, Inference about the intersection in two-phase regression, Biometrika, 56, pp.495-504, 1969.
[19] D.V. Hinkley, Inference in two-phase regression, J Amer Statist Assoc, 66, pp.736-743, 1971.
[20] R. Iman and W.J. Conover, Modern business statistics, New York: Wiley, 1983.
[21] Z. Kander and S. Zacks, Test procedures for possible changes in parameters of statistical distributions occurring at unknown time points, Ann Math Statist, 37, pp.1196-1210, 1966.
[22] K.A. Kaufman, R.S. Michalski, and L. Kerschberg, Mining for knowledge in databases: Goals and general description of the INLEN system, in: G. Piatetsky-Shapiro and W.J. Frawley (Eds.), Knowledge discovery in databases (pp.449-462), Cambridge, MA: AAAI / MIT Press, 1991.
[23] S.H. Kim and N.H. Noh, Predictability of interest rates using data mining tools: A comparative analysis of Korea and the U.S, Expert Systems with Applications, 15(1), pp.85-95, 1997.
[24] M. Larrain, Empirical tests of chaotic behavior in a nonlinear interest rate model, Financial Analysts Journal, 47, pp.51-62, 1991.
[25] E.M. Leeper, Narrative and VAR approaches to monetary policy: Common identification problems, Journal of Monetary Economics, 40, pp.641-657, 1997.
[26] H.L. Li and J.R. Yu, A piecewise regression analysis with automatic change-point detection, Intelligent Data Analysis, 3, pp.75-85, 1999.
[27] S. Makridakis, Accuracy measures: Theoretical and practical concerns, International Journal of Forecasting, 9, pp.527-529, 1993.
[28] F.S. Mishkin, The economics of money, banking, and financial markets, New York: Harper Collins, 1995.
[29] K.J. Oh and I. Han, Using change-point detection to support artificial neural networks for interest rates forecasting, Expert Systems with Applications, 19(2), pp.105-115, 2000.
[30] E.S. Page, Continuous inspection schemes, Biometrika, 41, pp.100-105, 1954.

0-7695-0981-9/01 $10.00 (c) 2001 IEEE 7

Proceedings of the 34th Hawaii International Conference on System Sciences - 2001

[31] E.S. Page, A test for a change in a parameter occurring at [43] D. Siegmund, Sequential Analysis: Tests and Confidence

an unknown point, Biometrika, 42, pp.523-526, 1955. Intervals, New York: Springer-Verlag, 1985.

[32] E. Parzen, Nonparametric Statistics and Related Topics, [44] S. Strongin, The identification of monetary policy

Amsterdam: Elsevier. disturbances. Explaining the liquidity puzzle, Journal of

[33] E. Parzen, Comparison change analysis approach to Monetary Economics, 35, pp.463-497, 1995.

change-point estimation, J Appl Statist Sci, 4, pp.379-402, [45] L.J. Vostrikova, Detecting disorder in multidimensional

1994. random process, Sov. Math. Dokl., 24, pp.55-59, 1981.

[34] D.W. Patterson, Artificial neural networks, New York: [46] H. White, Connectionist nonparametric regression:

Prentice Hall, 1996. Multilayer feedforward networks can learn arbitrary

[35] A.N. Pettitt, A non-parametric approach to the change- mappings, in: H. White (Ed.). Artificial Neural Networks:

point problem, Applied Statistics, 28(2), pp.126-135, 1979. Approximations and Learning Theory, Oxford, UK:

[36] A.N. Pettitt, A simple cumulative sum type statistic for Blackwell, 1992.

the change-point problem with zero-one observations, [47] O. Wolkenhauer and J.M. Edmunds, Possibilistic testing

Biometrika, 67, pp.79-84, 1980a. of distribution functions for change detection, Intelligent

[37] A.N. Pettitt, Some results on estimating a change-point Data Analysis, 1, pp.119-127, 1997.

using nonparametric type statistics, Journal of Statistical [48] K.J. Worsley, On the likelihood ratio test for a shift in

Computation and Simulation, 11, pp.261-272, 1980b. location of normal populations, J Amer Statist Assoc, 74,

[38] R.E. Quandt, The estimation of the parameters of a linear pp.365-367, 1979.

regression system obeying Go separate regimes, J Amer [49] Y.C. Yao, Estimating the number of change-points via

Stat Assoc, 53, pp.873-880, 1958. Schwarzs criterion, Statist Probab Lett, 6, pp.181-189,

[39] R.E. Quandt, Tests of the hypothesis that a linear 1988.

regression system obeys two separate regimes, J Amer [50] Y.C. Yao and R.A. Davis, The asymptotic behavior of the

Statist Assoc, 55, pp.324-330, 1960. likelihood ratio statistic for testing a shift in mean in a

[40] A.K. Sen and M.S. Srivastava, On tests for detecting sequence of independent normal variates, Sanihya Ser (A),

change in mean, Ann Statist, 3, pp.98-108, 1975a. 48, pp.339-353, 1984.

[41] A.K. Sen and M.S. Srivastava, On tests for detecting [51] J.R. Yu, G.H. Tzeng, and H.L. Li, A general piecewise

change in mean when variance is unknown, Ann Inst necessity regression analysis based on linear

Statist Math, 27, pp.479-486, 1975b. programming, Fuzzy Sets and Systems, 105, pp.429-436,

[42] A.K. Sen and M.S. Srivastava, Some one-sided tests for 1999.

change in level, Technometrics, 17, pp.61-64, 1975c.

0-7695-0981-9/01 $10.00 (c) 2001 IEEE 8
