Escolar Documentos

Profissional Documentos

Cultura Documentos

Machine Learning Exam Sample Questions

Enviado por

David LeTítulo original

Direitos autorais

Formatos disponíveis

Compartilhar este documento

Compartilhar ou incorporar documento

Você considera este documento útil?

Este conteúdo é inapropriado?

Denunciar este documentoDireitos autorais:

Formatos disponíveis

Machine Learning Exam Sample Questions

Enviado por

David LeDireitos autorais:

Formatos disponíveis

Name of Candidate: .....................................................

Student number: .....................................................

Signature: .....................................................

COMP9417 Machine Learning and Data Mining

Final Examination: SAMPLE QUESTIONS

Here are seven questions which are somewhat representative of the type

that will be in the final exam. Each question is of equal value, and should not

take longer than 30 minutes to complete. In the actual exam there will be

some degree of choice as to which questions you can answer. Candidates may

bring authorised calculators to the examination, but no other materials will

be permitted.

1 Please see over

This page intentionally left blank.

2 Please see over

Question 1 [20 marks]

Comparing Lazy and Eager Learning

The following truth table gives an “m–of–n function” for three Boolean variables, where “1”

denotes true and “0” denotes false. In this case the target function is: “exactly two out of three

variables are true”.

X Y Z Class

0 0 0 false

0 0 1 false

0 1 0 false

0 1 1 true

1 0 0 false

1 0 1 true

1 1 0 true

1 1 1 false

A) [4 marks]

Construct a decision tree which is complete and correct for the examples in the table. [Hint:

draw a diagram.]

B) [4 marks]

Construct a set of classification rules from the tree which is complete and correct for the

examples in the table. [Hint: use an if–then–else representation.]

C) [10 marks]

Suppose we define a simple measure of distance between two equal length strings of Boolean

values, as follows. The distance between two such strings B1 and B2 is:

X X

distance(B1 , B2 ) = |( B1 ) − ( B2 )|

P

where Bi is simply the number of variables with value 1 in string Bi . For example:

distance(h0, 0, 0i, h1, 1, 1i) = |0 − 3| = 3

and

distance(h1, 0, 0i, h0, 1, 0i) = |1 − 1| = 0

What is the LOOCV (“Leave–one–out cross-validation”) error of 2–Nearest Neighbour

using our distance function on the examples in the table ? [Show your working.]

D) [2 marks]

Compare your three models. Which do you conclude provides a better representation for

this particular problem ? Give your reasoning (one sentence).

3 Please see over

Question 2 [20 marks]

Bayesian Learning

A) [2 marks]

Explain the difference between the maximum a posteriori hypothesis hMAP and the max-

imum likelihood hypothesis hML .

B) [4 marks]

Would the Naive Bayes classifier be described as a generative or as a discriminative proba-

bilistic model ? Explain your reasoning informally in terms of the conditional probabilities

used in the Naive Bayes classifier.

C) [10 marks]

Using the multivariate Bernoulli distribution to model the probability of some type of

weather occurring or not on a given day, from the following data calculate the probabilities

required for a Naive Bayes classifier to be able to decide whether to play or not.

Use pseudo-counts (Laplace correction) to smooth the probability estimates.

Day Cloudy Windy Play tennis

1 1 1 no

2 0 1 no

3 1 1 no

4 0 1 no

5 0 1 yes

6 1 0 yes

D) [4 marks]

To which class would your Naive Bayes classifier assign each of the following days ?

Day Cloudy Windy Play tennis

7 0 0 ?

8 0 1 ?

4 Please see over

Question 3 [20 marks]

Neural Networks

A) [4 marks]

A linear unit from neural networks is a linear model for numeric prediction that is fitted by

gradient descent. Explain the differences between the batch and incremental (or stochastic)

versions of gradient descent.

B) [4 marks]

Stochastic gradient descent would be expected to deal better with local minima during

learning than batch gradient descent – true or false ? Explain your reasoning.

A) [12 marks]

Suppose a single unit has output o the form:

o = w0 + w1 x1 + w1 x21 + w2 x2 + w2 x22 + · · · + wn xn + wn x2n

The problem is to learn a set of weights wi that minimize squared error. Derive a batch

gradient descent training rule for this unit.

5 Please see over

Question 4 [20 marks]

Ensemble Learning

A) [8 marks]

As model complexity increases from low to high, what effect does this have on:

1) Bias ?

2) Variance ?

3) Predictive accuracy on training data ?

4) Predictive accuracy on test data ?

B) [3 marks]

Is decision tree learning relatively stable ? Describe decision tree learning in terms of bias

and variance in no more than two sentences.

C) [3 marks]

Is nearest neighbour relatively stable ? Describe nearest neighbour in terms of bias and

variance in no more than two sentences.

D) [3 marks]

Bagging reduces bias. True or false ? Give a one sentence explanation of your answer.

E) [3 marks]

Boosting reduces variance. True or false ? Give a one sentence explanation of your answer.

6 Please see over

Question 5 [20 Marks]

Computational Learning Theory

A) [8 marks]

An instance space X is defined using m Boolean attributes. Let the hypothesis space H

be the set of decision trees defined on X (you can assume two classes). What is the largest

set of instances in this setting which is shattered by H ? [Show your reasoning.]

B) [10 marks]

Suppose we have a consistent learner with a hypothesis space restricted to conjunctions

of exactly 8 attributes, each with values {true, false, don’t care}. What is the size of this

learner’s hypothesis space ? Give the formula for the number of examples sufficient to learn

with probability at least 95% an approximation of any hypothesis in this space with error

of at most 10%. [Note: you are not required to compute the solution.]

C) [2 marks]

Informally, which of the following are consequences of the No Free Lunch theorem:

a) averaged over all possible training sets, the variance of a learning algorithm dominates

its bias

b) averaged over all possible training sets, no learning algorithm has a better off-training

set error than any other

c) averaged over all possible target concepts, the bias of a learning algorithm dominates

its variance

d) averaged over all possible target concepts, no learning algorithm has a better off-

training set error than any other

e) averaged over all possible target concepts and training sets, no learning algorithm is

independent of the choice of representation in terms of its classification error

7 Please see over

Question 6 [20 Marks]

Mistake Bounds

Consider the following learning problem on an instance space which has only one feature, i.e.,

each instance is a single integer. Suppose instances are always in the range [1, 5]. The hypothesis

space is one in which each hypothesis is an interval over the integers. More precisely, each

hypothesis h in the hypothesis space H is an interval of the form a ≤ x ≤ b, where a and b are

integer constants and x refers to the instance. For example, the hypothesis 3 ≤ x ≤ 5 classifies

the integers 3, 4 and 5 as positive and all others as negative.

Instance Class

1 Negative

2 Positive

3 Positive

4 Positive

5 Negative

A) [15 marks]

Apply the Halving Algorithm to the five examples in the order in which they appear

in the table above. Show each class prediction and whether or not it is a mistake, plus the

initial hypothesis set and the hypothesis set at the end of each iteration.

B) [5 marks]

What is the worst-case mistake bound for the Halving Algorithm given the hypothesis

space described above ? Give an informal derivation of your bound.

8 Please see over

END OF PAPER

Você também pode gostar

- Mid Term TestDocumento6 páginasMid Term Testsilence123444488Ainda não há avaliações

- HW 1 Eeowh 3Documento6 páginasHW 1 Eeowh 3정하윤Ainda não há avaliações

- FinalSpring2021 MergedDocumento12 páginasFinalSpring2021 MergedShlok SethiaAinda não há avaliações

- Final 2002Documento18 páginasFinal 2002Muhammad MurtazaAinda não há avaliações

- Caltech Online Homework Learning DataDocumento6 páginasCaltech Online Homework Learning Datataulau1234Ainda não há avaliações

- CSC 5825 Intro. To Machine Learning and Applications Midterm ExamDocumento13 páginasCSC 5825 Intro. To Machine Learning and Applications Midterm ExamranaAinda não há avaliações

- Practice Final CS61cDocumento19 páginasPractice Final CS61cEdward Yixin GuoAinda não há avaliações

- Homework 1Documento7 páginasHomework 1Muhammad MurtazaAinda não há avaliações

- Solutions: 10-601 Machine Learning, Midterm Exam: Spring 2008 SolutionsDocumento8 páginasSolutions: 10-601 Machine Learning, Midterm Exam: Spring 2008 Solutionsreshma khemchandaniAinda não há avaliações

- Midterm SolutionsDocumento8 páginasMidterm SolutionsMuhammad MurtazaAinda não há avaliações

- Final Assignment: 1 InstructionsDocumento5 páginasFinal Assignment: 1 InstructionsLanjun ShaoAinda não há avaliações

- Workshop 7Documento8 páginasWorkshop 7Steven AndersonAinda não há avaliações

- Adam Smith Business School Subject of Economics Degree of MSC Degree Exam Basic Econometrics, Econ5002Documento6 páginasAdam Smith Business School Subject of Economics Degree of MSC Degree Exam Basic Econometrics, Econ5002Junjing MaoAinda não há avaliações

- IIT Kanpur Machine Learning End Sem PaperDocumento10 páginasIIT Kanpur Machine Learning End Sem PaperJivnesh SandhanAinda não há avaliações

- Math 156 Practice - Midterm - ExamDocumento80 páginasMath 156 Practice - Midterm - ExamLei AquinoAinda não há avaliações

- 6390 Fall 2022 MidtermDocumento20 páginas6390 Fall 2022 MidtermDaphne LIUAinda não há avaliações

- hw1 PDFDocumento6 páginashw1 PDFrightheartedAinda não há avaliações

- STPM 2014 Maths Paper 3 SolutionsDocumento7 páginasSTPM 2014 Maths Paper 3 SolutionsPeng Peng KekAinda não há avaliações

- 2019 - Introduction To Data Analytics Using RDocumento5 páginas2019 - Introduction To Data Analytics Using RYeickson Mendoza MartinezAinda não há avaliações

- Learning Data Machine Learning Caltech edXDocumento5 páginasLearning Data Machine Learning Caltech edX정하윤Ainda não há avaliações

- Learning From Data HW 6Documento5 páginasLearning From Data HW 6Pratik SachdevaAinda não há avaliações

- Do Not Open Until Instructed To Do So: Math 2131 Final Exam Thursday, April 12th 180 MinutesDocumento11 páginasDo Not Open Until Instructed To Do So: Math 2131 Final Exam Thursday, April 12th 180 MinutesexamkillerAinda não há avaliações

- Final Exam: CS 189 Spring 2020 Introduction To Machine LearningDocumento19 páginasFinal Exam: CS 189 Spring 2020 Introduction To Machine LearningTuba ImranAinda não há avaliações

- Sabah Smkbandaraya Ii Mathst P3 2014 Qa Section A (45 Marks)Documento6 páginasSabah Smkbandaraya Ii Mathst P3 2014 Qa Section A (45 Marks)Peng Peng KekAinda não há avaliações

- Final Fall00Documento9 páginasFinal Fall00chemchemhaAinda não há avaliações

- EXAM 1 : EECS 203 Spring 2015Documento11 páginasEXAM 1 : EECS 203 Spring 2015DmdhineshAinda não há avaliações

- Math 243 Exam 1 Chapter 1 and 4 KeyDocumento8 páginasMath 243 Exam 1 Chapter 1 and 4 KeyAlice KrodeAinda não há avaliações

- Activity 7Documento5 páginasActivity 7Sam Sat PatAinda não há avaliações

- Paradox and Infinity problem set explores higher infiniteDocumento2 páginasParadox and Infinity problem set explores higher infiniteJoe BergeronAinda não há avaliações

- Important Instructions To The Candidates:: Part BDocumento7 páginasImportant Instructions To The Candidates:: Part BNuwan ChathurangaAinda não há avaliações

- Homework 1: Background Test: Due 12 A.M. Tuesday, September 06, 2020Documento4 páginasHomework 1: Background Test: Due 12 A.M. Tuesday, September 06, 2020anwer fadelAinda não há avaliações

- Homework #5: MA 402 Mathematics of Scientific Computing Due: Monday, September 27Documento6 páginasHomework #5: MA 402 Mathematics of Scientific Computing Due: Monday, September 27ra100% (1)

- SLA Mid-termV2 SolnDocumento5 páginasSLA Mid-termV2 Solncadi0761Ainda não há avaliações

- August - 2018-R16 M3Documento2 páginasAugust - 2018-R16 M3nagarajAinda não há avaliações

- Machine Learning Model Predicts House PricesDocumento9 páginasMachine Learning Model Predicts House PricesEducation VietCoAinda não há avaliações

- Homework 4Documento3 páginasHomework 4João Paulo MilaneziAinda não há avaliações

- Econ301 Final spr22Documento12 páginasEcon301 Final spr22Rana AhemdAinda não há avaliações

- Assignment1 PDFDocumento2 páginasAssignment1 PDFrineeth mAinda não há avaliações

- Statistics - BS 4rthDocumento4 páginasStatistics - BS 4rthUzair AhmadAinda não há avaliações

- ST130: Basic Statistics: Duration of Exam: 3 Hours + 10 MinutesDocumento12 páginasST130: Basic Statistics: Duration of Exam: 3 Hours + 10 MinutesChand DivneshAinda não há avaliações

- MidtermSpring 2019Documento6 páginasMidtermSpring 2019Natasha Elena TarunadjajaAinda não há avaliações

- CS 114: Introduction To Computer Science II Final Exam: John Tsai May 16, 2019Documento11 páginasCS 114: Introduction To Computer Science II Final Exam: John Tsai May 16, 2019sula miranAinda não há avaliações

- 5/6/2011: Final Exam: Your NameDocumento4 páginas5/6/2011: Final Exam: Your NameThahir ShahAinda não há avaliações

- Ell784 17 AqDocumento8 páginasEll784 17 Aqlovlesh royAinda não há avaliações

- Test Your Knowledge - Study Session 10Documento8 páginasTest Your Knowledge - Study Session 10Henry CrowterAinda não há avaliações

- Mock Exam - End of Course 2023Documento11 páginasMock Exam - End of Course 2023Gart DesaAinda não há avaliações

- ExamDocumento10 páginasExamapi-304236873Ainda não há avaliações

- RecSys - Final (Solution)Documento6 páginasRecSys - Final (Solution)K201873 Shayan hassanAinda não há avaliações

- CS 540-1: Introduction To Artificial Intelligence: Closed Book (Two Sheets of Notes and Calculators Allowed)Documento10 páginasCS 540-1: Introduction To Artificial Intelligence: Closed Book (Two Sheets of Notes and Calculators Allowed)chemchemhaAinda não há avaliações

- MAM1000W_tutorials-3 - CopyDocumento75 páginasMAM1000W_tutorials-3 - CopyKhanimamba ManyikeAinda não há avaliações

- Statistics and Econometrics I: Midterm ExamDocumento10 páginasStatistics and Econometrics I: Midterm ExamІгор АлейникAinda não há avaliações

- Midterm Solutions PDFDocumento17 páginasMidterm Solutions PDFShayan Ali ShahAinda não há avaliações

- Econometricstutorials Exam QuestionsSelectedAnswersDocumento11 páginasEconometricstutorials Exam QuestionsSelectedAnswersFiliz Ertekin100% (1)

- Stat 401B Exam 1 F16-KeyDocumento7 páginasStat 401B Exam 1 F16-KeyjuanEs2374pAinda não há avaliações

- CS 540: Introduction To Artificial Intelligence: Final Exam: 10:05am-12:05pm, December 19, 2011 Room 168 NolandDocumento10 páginasCS 540: Introduction To Artificial Intelligence: Final Exam: 10:05am-12:05pm, December 19, 2011 Room 168 NolandchemchemhaAinda não há avaliações

- EE 559 Midterm From S11Documento12 páginasEE 559 Midterm From S11chrisc885597Ainda não há avaliações

- 2015 MidtermDocumento8 páginas2015 MidtermThapelo SebolaiAinda não há avaliações

- Let's Practise: Maths Workbook Coursebook 7No EverandLet's Practise: Maths Workbook Coursebook 7Ainda não há avaliações

- Pharmacology in Mind: Xem: T : SangDocumento1 páginaPharmacology in Mind: Xem: T : SangDavid LeAinda não há avaliações

- 2020 NSBHS 7-10 Assessment PolicyDocumento14 páginas2020 NSBHS 7-10 Assessment PolicyDavid LeAinda não há avaliações

- How to write a top medical school applicationDocumento1 páginaHow to write a top medical school applicationDavid LeAinda não há avaliações

- Constructing Critical Literacy Practices Through Technology Tools and InquiryDocumento12 páginasConstructing Critical Literacy Practices Through Technology Tools and InquiryDavid LeAinda não há avaliações

- 2021 CAT and AMC NOTE TO STUDENTSDocumento2 páginas2021 CAT and AMC NOTE TO STUDENTSDavid LeAinda não há avaliações

- Pobble 2Documento5 páginasPobble 2David LeAinda não há avaliações

- Amc Junior 2015Documento7 páginasAmc Junior 2015David Le50% (2)

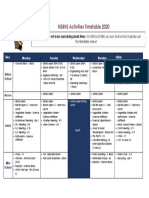

- NSBHS Activities Timetable 2020: Time Monday Tuesday Wednesday Thursday FridayDocumento1 páginaNSBHS Activities Timetable 2020: Time Monday Tuesday Wednesday Thursday FridayDavid LeAinda não há avaliações

- Reading Practice Year 9Documento7 páginasReading Practice Year 9David LeAinda não há avaliações

- Step 4 - Select The Most Appropriate Format - ICanMedDocumento1 páginaStep 4 - Select The Most Appropriate Format - ICanMedDavid LeAinda não há avaliações

- Test AnswersDocumento1 páginaTest AnswersKaren ThereseAinda não há avaliações

- Polya Problem-Solving Seminar Week 1: Induction and PigeonholeDocumento2 páginasPolya Problem-Solving Seminar Week 1: Induction and PigeonholeDavid LeAinda não há avaliações

- t3 Y9 Science Theorybook 2017 SampleDocumento7 páginast3 Y9 Science Theorybook 2017 SampleDavid LeAinda não há avaliações

- Allsop Jake.-Very Short Stories (With Exercises)Documento57 páginasAllsop Jake.-Very Short Stories (With Exercises)David LeAinda não há avaliações

- Zeter Jeff.-Extensive Reading For Academic Success. Advanced C (Answer and Key)Documento12 páginasZeter Jeff.-Extensive Reading For Academic Success. Advanced C (Answer and Key)David LeAinda não há avaliações

- Allsop Jake. - Penguin English Tests - Book 3 - IntermediateDocumento90 páginasAllsop Jake. - Penguin English Tests - Book 3 - IntermediateDavid LeAinda não há avaliações

- ANSWER KEY SECTIONDocumento14 páginasANSWER KEY SECTIONDavid LeAinda não há avaliações

- DNA PaperDocumento18 páginasDNA PaperDavid LeAinda não há avaliações

- VNDefSecinRussia DiplomatDocumento1 páginaVNDefSecinRussia DiplomatDavid LeAinda não há avaliações

- Trading BookDocumento5 páginasTrading BookDavid LeAinda não há avaliações

- A Voice For NatureDocumento15 páginasA Voice For NatureDavid LeAinda não há avaliações

- SSRN Id2150876 PDFDocumento40 páginasSSRN Id2150876 PDFDavid LeAinda não há avaliações

- Trading 3Documento15 páginasTrading 3David LeAinda não há avaliações

- SpecDocumento19 páginasSpecSayed Moin AhmedAinda não há avaliações

- Topic08. Simple Linear RegDocumento29 páginasTopic08. Simple Linear RegDavid LeAinda não há avaliações

- Trading Book 2Documento11 páginasTrading Book 2David LeAinda não há avaliações

- Analysis of Covariance (ANCOVA) ExplainedDocumento16 páginasAnalysis of Covariance (ANCOVA) ExplainedDavid LeAinda não há avaliações

- Reference RosiglitazonevamyocardialinfarctionDocumento8 páginasReference RosiglitazonevamyocardialinfarctionDavid LeAinda não há avaliações

- Budgetary Control NumericalDocumento8 páginasBudgetary Control NumericalPuja AgarwalAinda não há avaliações

- Sarina Dimaggio Teaching Resume 5Documento1 páginaSarina Dimaggio Teaching Resume 5api-660205517Ainda não há avaliações

- Sosai Masutatsu Oyama - Founder of Kyokushin KarateDocumento9 páginasSosai Masutatsu Oyama - Founder of Kyokushin KarateAdriano HernandezAinda não há avaliações

- (Coffeemaker) Tas5542uc - Instructions - For - UseDocumento74 páginas(Coffeemaker) Tas5542uc - Instructions - For - UsePolina BikoulovAinda não há avaliações

- Fright ForwordersDocumento6 páginasFright ForworderskrishnadaskotaAinda não há avaliações

- Gender and DelinquencyDocumento26 páginasGender and DelinquencyCompis Jenny-annAinda não há avaliações

- Ecological Modernization Theory: Taking Stock, Moving ForwardDocumento19 páginasEcological Modernization Theory: Taking Stock, Moving ForwardFritzner PIERREAinda não há avaliações

- OutliningDocumento17 páginasOutliningJohn Mark TabbadAinda não há avaliações

- Midterm Exam ADM3350 Summer 2022 PDFDocumento7 páginasMidterm Exam ADM3350 Summer 2022 PDFHan ZhongAinda não há avaliações

- Bracketing MethodsDocumento13 páginasBracketing Methodsasd dsa100% (1)

- Destiny by T.D. JakesDocumento17 páginasDestiny by T.D. JakesHBG Nashville89% (9)

- HRM ........Documento12 páginasHRM ........Beenish AbbasAinda não há avaliações

- Issues and Concerns Related To Assessment in MalaysianDocumento22 páginasIssues and Concerns Related To Assessment in MalaysianHarrish ZainurinAinda não há avaliações

- इंटरनेट मानक का उपयोगDocumento16 páginasइंटरनेट मानक का उपयोगUdit Kumar SarkarAinda não há avaliações

- Hays Grading System: Group Activity 3 Groups KH PS Acct TS JG PGDocumento1 páginaHays Grading System: Group Activity 3 Groups KH PS Acct TS JG PGkuku129Ainda não há avaliações

- Ecomix RevolutionDocumento4 páginasEcomix RevolutionkrissAinda não há avaliações

- Polystylism in The Context of Postmodern Music. Alfred Schnittke's Concerti GrossiDocumento17 páginasPolystylism in The Context of Postmodern Music. Alfred Schnittke's Concerti Grossiwei wuAinda não há avaliações

- Fitting A Logistic Curve To DataDocumento12 páginasFitting A Logistic Curve To DataXiaoyan ZouAinda não há avaliações

- Lean Foundation TrainingDocumento9 páginasLean Foundation TrainingSaja Unķnøwñ ĞirłAinda não há avaliações

- Symphonological Bioethical Theory: Gladys L. Husted and James H. HustedDocumento13 páginasSymphonological Bioethical Theory: Gladys L. Husted and James H. HustedYuvi Rociandel Luardo100% (1)

- 2005 Australian Secondary Schools Rugby League ChampionshipsDocumento14 páginas2005 Australian Secondary Schools Rugby League ChampionshipsDaisy HuntlyAinda não há avaliações

- Diagram Illustrating The Globalization Concept and ProcessDocumento1 páginaDiagram Illustrating The Globalization Concept and ProcessAnonymous hWHYwX6Ainda não há avaliações

- Public Relations & Communication Theory. J.C. Skinner-1Documento195 páginasPublic Relations & Communication Theory. J.C. Skinner-1Μάτζικα ντε Σπελ50% (2)

- Bach Invention No9 in F Minor - pdf845725625Documento2 páginasBach Invention No9 in F Minor - pdf845725625ArocatrumpetAinda não há avaliações

- C4 - Learning Theories and Program DesignDocumento43 páginasC4 - Learning Theories and Program DesignNeach GaoilAinda não há avaliações

- PCC ConfigDocumento345 páginasPCC ConfigVamsi SuriAinda não há avaliações

- AIA Layer Standards PDFDocumento47 páginasAIA Layer Standards PDFdanielAinda não há avaliações

- StoreFront 3.11Documento162 páginasStoreFront 3.11AnonimovAinda não há avaliações

- 19-Year-Old Female With Hypokalemia EvaluatedDocumento5 páginas19-Year-Old Female With Hypokalemia EvaluatedMohammed AhmedAinda não há avaliações

- Eng9 - Q3 - M4 - W4 - Interpret The Message Conveyed in A Poster - V5Documento19 páginasEng9 - Q3 - M4 - W4 - Interpret The Message Conveyed in A Poster - V5FITZ100% (1)