Oracle Human Capital Management Cloud

Data Loading and Data Extraction Best Practices

ORACLE WHITE PAPER | AUGUST 2015

Disclaimer

The following is intended to outline our general product direction. It is intended for information purposes only, and may not be incorporated into any contract. It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions. The development, release, and timing of any features or functionality described for Oracle’s products remains at the sole discretion of Oracle.

2 | DATA LOADING AND DATA EXTRACTION BEST PRACTICES

Table of Contents

Disclaimer
Overview
Data Loading
Co-Existence Deployment
Full HR Deployment
Recommended Tool for Data Loading: HCM Data Loader
HCM Data Loader Features
Recommended Best Practices for Data Loading
References
Data Extraction
Build Your Own: Custom Extracts
Recommended Tool for Data: HCM Extract
Recommended Architecture for Out-Bound Integrations
Recommended Best Practices and Design Considerations
References
Guidance on Use of BI Publisher (BIP) for Data Extraction

Overview

As organizations plan their transition to cloud-based HR systems, data loading and data extraction are two of the most complex, and most critical, aspects of planning a switch from a traditional HR management system (HRMS) to a software-as-a-service application such as Oracle HCM Cloud.

During implementation, you must make the right choices about the conversion and storage of historical HR data. Companies often struggle with decisions about how much and what type of data to convert, and where to store it: internally in Oracle HCM Cloud, externally in a data warehouse, by retaining reporting access to the old system, or through a combination of these or other options. These choices require strategic thinking, as they involve a number of trade-offs that can greatly impact project effort, cost, and risk. The best way to make the right decisions for your organization is to understand the data conversion process, as well as your organization’s specific reporting needs and requirements.

Once the system is live, data extraction from HCM Cloud for integration with downstream systems is equally crucial. It requires planning around the number of data elements, the frequency of the extracts, and the timing of the extracts, because these affect system performance and the integrity of the data.

This white paper focuses on best practices, considerations, and guidance for both data loading and data extraction, and is intended for implementation consultants and system integrators.

Data Loading

Depending on how customers implement HCM Cloud, there are two deployment patterns: co-existence deployment or full HR deployment. The data loading use case differs for each pattern.

Co-Existence Deployment

For a Talent co-existence deployment, you keep part of your existing HCM system and move your talent modules to HCM Cloud as the first step in your full HCM transition. In this deployment mode, the existing Core HR system continues to be the system of record for HR data.

In this case, the two HCM systems are kept in sync using bulk data import:

o One-time data load of employee data to HCM Cloud for initialization.
o Migration of Talent Profile information to HCM Cloud.
o Ongoing data loads to sync employee changes from the Core HR system of record.
o HCM Cloud is used for Talent Review, Goals and Performance Management, Workforce Compensation, and Analytics.
o Profile and compensation changes are fed from HCM Cloud to other systems.

Full HR Deployment

In this deployment mode, you transition your Core HR systems from on premise to HCM Cloud.

In this case, data is migrated from your on-premise HCM system to the cloud via bulk data import. This allows you to move large amounts of history into the new cloud-based HCM system.

o Data migration/initial conversion: includes loading setups, work structures, and employee data from legacy HR systems.
o HCM Cloud becomes the system of record for HR data.
o Feeds to downstream systems with new hires, employee updates, transfers, promotions, terminations, and work structures and updates.
o Interfaces to downstream Payroll, Benefits, and Time and Labor systems.
o Feeds from the recruiting system to HCM Cloud.
o Ongoing data load runs: if an external system needs to feed HCM Cloud on a regular basis, even when HCM Cloud is the master HCM system, the data loaders need to run on a periodic basis. Example: Kronos feeding time information to HCM Cloud Payroll.

Recommended Tool for Data Loading: HCM Data Loader

HCM Data Loader (HDL) is a powerful tool for bulk loading data from any source to Oracle Fusion Human Capital Management. It is the primary and the ONLY mechanism that should be used when moving and synchronizing large volumes of data between systems.

HCM Data Loader Features

o Supports both co-existence and full deployment modes of HCM Cloud.
o Supports loading complex hierarchical data, loading large volumes of records, handling iterative loads, and managing records that have date effectivity.
o Supports synchronizing incremental updates, including large volumes of incremental changes; for example, capturing every change that happens on the employee record.
o Supports PGP-based encryption for data at rest.
o Customers are required to deliver a compressed file of data in a prescribed format to the Oracle WebCenter Content server.
o End-to-end automation can be achieved. Once the file is uploaded, the data loading processes can be initiated either manually from the UI or, if automation is desired, via a web service invocation (LoaderIntegrationService). Note that while the web service-based invocation facility is provided to enable automation of the process from source systems, the Data Loader is and will remain a file-based bulk load utility.
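
As a rough illustration of the "compressed file" step described above, the following sketch packages a set of data files into a single zip archive using only the Python standard library. The function name, file names, and file contents are placeholders chosen for this example; they do not represent the prescribed HDL format, which is defined in the HCM Data Loader User Guide. The upload to WebCenter Content, PGP encryption, and the LoaderIntegrationService call are deliberately left out.

```python
import zipfile
from pathlib import Path


def package_data_files(data_files: dict[str, str], zip_path: Path) -> Path:
    """Write each named data file into one compressed archive.

    `data_files` maps a file name to its text content. Both the names
    and the contents are placeholders for illustration only.
    """
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        # Sort the names so the archive layout is deterministic.
        for name in sorted(data_files):
            archive.writestr(name, data_files[name])
    return zip_path
```

A source-system job could call `package_data_files` at the end of its export step and hand the resulting archive to whatever transfer mechanism the implementation uses.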

Recommended Best Practices for Data Loading

Design time considerations:

o When running multiple loads in parallel, no single object’s record should be split across multiple loads. For example, employees can be split into parallel files for loading, but no individual employee’s record should be split across multiple files.
o During the planning phase, consider special transaction types such as global transfers, global temporary assignments, and multiple assignments.
o Work relationship and salary are two different objects. You can load 3 years of history for work relationship along with 10 years of history for salary if that is what is needed to run the compensation module. Also, salary is not a date-effective object, so you cannot make date corrections once the data is loaded.
o If you are loading actual email addresses into the stage instance, remember to turn off email notifications during the conversion process.

Considerations for running multiple loads:

Customers can achieve multiple loads with the following approaches:

a) Split the loads by complete person record. For example, a load of 10,000 people can be split into multiple files that are run as parallel loads, such as 5,000 people in each file, with each file containing a mutually exclusive set of people. This approach helps achieve parallelism in data loads.

b) Split the loads by entity within an object. That is, load the mandatory worker data first and then add the additional entities; for example, load person name and legislative information in one data file, and then load person email and person phone in another data file.

Customers should never split the data files into multiple files based on the date-effective records of a worker.
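
The person-based split in approach (a) can be sketched as follows. This is a minimal illustration, not part of HCM Data Loader: the `person_number` and `effective_start` field names and the round-robin assignment are assumptions made for the example. The point it shows is that whole persons, never individual date-effective rows, are distributed across files.

```python
from collections import defaultdict


def split_by_person(records: list[dict], num_files: int) -> list[list[dict]]:
    """Distribute records across files without splitting any person.

    Every record carries a hypothetical "person_number" key. All rows
    for one person (including all date-effective rows) go to one file.
    """
    if num_files < 1:
        raise ValueError("num_files must be at least 1")

    rows_by_person: dict[str, list[dict]] = defaultdict(list)
    for record in records:
        rows_by_person[record["person_number"]].append(record)

    files: list[list[dict]] = [[] for _ in range(num_files)]
    # Round-robin whole persons, not individual rows, across the files.
    for index, person in enumerate(sorted(rows_by_person)):
        files[index % num_files].extend(rows_by_person[person])
    return files
```

Because the grouping key is the person, the resulting files are mutually exclusive sets of people, and a worker’s date-effective history always stays together in one file.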

Considerations for Talent co-existence:

o We recommend data loads from the Core HR system to Fusion Talent and Compensation.
o Further, if there are specific changes in a recruiting system, those are best funneled through the Core HR system.

Considerations for full history data load vs. partial data load:

o The cost/benefit of converting historical data should be evaluated at an early stage in the implementation.
o Historical data is typically dirtier, and therefore more costly to convert, the older it is.
o Customers need to design (technically and process-wise) for historical conversion to be done offline. For example, it can be done before a critical cutover window or after go-live.
o As part of the implementation design, customers have to identify the volumes of data loads for each object and evaluate the impact of historical data in their source instance.
o Additionally, customers should review the process flows in Fusion to determine the importance of historical data. For example, a Fusion Financials customer might prefer historical data to be loaded after a production cutover.
o If a Full HR customer does not want to go back to the old legacy system for any reason, then historical data has to be loaded before production cutover.

Considerations for source keys:

o During the initial implementation design, customers need to consider the type of implementation and the source of truth for each data object.
o For example, if a customer is implementing the co-existence mode, where the source of truth is a legacy system for HR record keeping, then source system keys make the integration easy to manage.
o If a customer is implementing the full HCM deployment and has third-party integrations to load the data, it would be easier to use user keys (such as person number).

Considerations for ongoing data synchronization:

o Avoid two-way masters of data.
o It is important to have only one source of truth (master data) for a particular object during integrations.
o Data should always be created first in the identified source of truth and then synchronized to other systems. For example, although work structures are needed by all integrating systems in a co-existence implementation, it is important to design the integration so that work structures are created only in the master environment. All other instances that require work structure data only synchronize it, and business users do not create work structures there.

Considerations when dealing with multiple source systems:

o Determine the master owner of data across the source systems. Otherwise, there is a risk of data corruption/entropy.
o When multiple source systems are feeding data into Fusion HCM, it is important to ensure the base records are created first in Fusion HCM before any updates are performed, to avoid referential integrity issues.
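
One way to picture the "base records first" rule is a pre-load ordering step in the integration layer. The sketch below is an assumption-laden illustration rather than HDL behavior: the `action` and `key` fields, and the idea of holding back orphaned updates, are invented for this example.

```python
def order_changes(
    changes: list[dict], existing_keys: set[str]
) -> tuple[list[dict], list[dict]]:
    """Put creates before updates and hold back updates with no base record.

    Each change is a dict with hypothetical "action" ("create" or
    "update") and "key" fields. Returns (load_order, held_back).
    """
    creates = [c for c in changes if c["action"] == "create"]
    updates = [c for c in changes if c["action"] == "update"]

    # A base record exists if it is already in the target system
    # or is being created earlier in this same batch.
    known_keys = set(existing_keys) | {c["key"] for c in creates}

    loadable = [u for u in updates if u["key"] in known_keys]
    held_back = [u for u in updates if u["key"] not in known_keys]
    return creates + loadable, held_back
```

Updates that arrive from a secondary source before the master system has created the base record end up in `held_back`, where they can be retried after the next load from the master.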

Runtime considerations: performance and sizing

o Specific or additional requirements that are not met by the default pod configuration may require a change to the sizing of the pod. These cases and requirements can be discussed with Oracle, which can provide elastic cloud capability to meet increased sizing needs.

Instance management: P2T refreshes

o It is important to keep the test instances in sync with the production instances at regular intervals. This enables customers to test new features and data loads in the stage pod before rolling them out in production, which helps avoid issues.
o For co-existence customers, we recommend that the source HR system of record is also refreshed along with the HCM Cloud P2T (production-to-test) refresh, and that only one instance of the source HR system of record is synchronized with an HCM Cloud pod.

o In a multiple-phase implementation, P2T refreshes should be planned in advance to keep test data close to production data.

Implementation considerations:

o It is good practice to identify integration requirements before the initial design of the implementation for initial loads. This helps customers foresee the data impacts of integrations ahead of time.

Testing considerations:

o Use the Data File Validator Tool to verify the quality of the data. Any new changes to source system data files should be verified in the stage pod before going to production.
o The Data File Validator Tool can be downloaded from My Oracle Support (Doc ID 2022617.1). You can use this tool to validate the format of the data file extracted from your source system, including flexfield configuration. It allows some custom rules to be added for more precision in your extracted data.

Post-data conversion processing:

o Validate and reconcile converted data before making any transaction, such as running payroll, a compensation cycle, performance reviews, or user creation. If you later need to do a complete purge of the data, it is easier to clean up in Core HR alone than to clean up data from multiple modules.
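
A simple form of the reconciliation step above is a per-object record count comparison between the source system and the converted data. The sketch below assumes the counts have already been gathered by some other means (for example, a source-system query and a report on the loaded data); the function name and the object names in the usage example are illustrative only.

```python
def reconcile_counts(
    source_counts: dict[str, int], loaded_counts: dict[str, int]
) -> dict[str, tuple[int, int]]:
    """Return {object_name: (source_count, loaded_count)} for every mismatch.

    An object missing from either side is treated as having a count of 0.
    """
    mismatches = {}
    for name in sorted(set(source_counts) | set(loaded_counts)):
        expected = source_counts.get(name, 0)
        actual = loaded_counts.get(name, 0)
        if expected != actual:
            mismatches[name] = (expected, actual)
    return mismatches
```

For example, `reconcile_counts({"Worker": 10000, "Salary": 9500}, {"Worker": 10000, "Salary": 9480})` reports only the `Salary` object, signalling that 20 salary records need investigation before any payroll or compensation transaction is run. Count checks are only a first pass; they do not replace field-level validation.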

References

HCM Data Loader (HDL)

o HCM Data Loader: Notice of General Availability (Doc ID 2022629.1). This note provides the announcement of availability, a brief overview, and some basic guidance for new and existing customers.
o HCM Data Loader Frequently Asked Questions (FAQ) (Doc ID 2022630.1). This note answers common questions, including the advantages of HDL and object-related information.
o Oracle HCM Data Loader: User Guide (Doc ID 1664133.1). This note contains the User Guides for both Release 9 and 10.
o HCM Data Loader: Business Object Documentation (Doc ID 2020600.1). This note contains detailed information about the objects supported.
o Migrating from File-Based Loader to HCM Data Loader (Doc ID 2022628.1). This note contains key information that existing customers should consider when planning to migrate from File-Based Loader (FBL) to HDL.
o Integration Guide for Oracle Fusion HCM and Taleo Recruiting (Doc ID 1947417.1).

Data Extraction

Customers need to extract data out of HCM Cloud to integrate with downstream on-premise systems and third-party providers. If HCM Cloud is the master HCM system, all information is held in Fusion HCM and then fed to all of the downstream systems. These downstream systems fall into two buckets:

1. Payroll and Benefits Systems
2. Build Your Own: Custom Extracts

Payroll and Benefits Systems: these systems require specialized feeds and files sent regularly. Typical feeds and types are listed below. They use HCM Extract and the specific Payroll Interface variant for the third-party payroll provider.

Category: Payroll
Name: ADP US Payforce (ADP Connections for Payforce)
Data Elements: Extracts Employee, Work structure, Salary, Payroll/tax data, and Gross Earning Calculation as per the third-party specification. May require SI configuration for customer-specific ADP Data definition and HCM Cloud configuration.
Configuration Effort Needed: Depends on the customer scenario
Pre-seeded: Yes

Category: Payroll
Name: North Gate Arinso Payroll
Data Elements: Extracts Employee, Work structure, Salary, Payroll/tax data, and Gross Earning Calculation as per the third-party specification. May require SI configuration for customer-specific ADP Data definition and HCM Cloud configuration.
Configuration Effort Needed: Depends on the customer scenario
Pre-seeded: Roadmap

Category: Payroll
Name: Interface to PeopleSoft Payroll and EBS Payroll
Data Elements: Extracts Employee, Work structure, Salary, and Payroll/tax data.
Configuration Effort Needed: Depends on the customer scenario
Pre-seeded: Yes (Limited Availability)

Category: Payroll
Name: Global Generic Payroll Interface
Data Elements: Consists of Payroll/tax data, Gross Earning Calculation, etc. Used to extract Common HR and Regional data from an LDG. SI adds local data as specified by regional payroll vendors.
Configuration Effort Needed: Depends on the customer scenario
Pre-seeded: Roadmap

Category: Benefits
Name: Benefits XML Integration
Data Elements: HR XML-based extracts with Employee Benefit Enrollments data that is transformed by Benefits XML to various Benefits carrier specifications. Incremental info on Health/Savings/FSA/Disability/Life AD&D info, Enrollment, Life Events info, and so forth.
Configuration Effort Needed: Depends on the customer scenario
Pre-seeded: Yes

Build Your Own: Custom Extracts

These extracts are created using HCM Extract, the toolset that Oracle provides. You create an extract to meet specialized needs, such as feeding downstream CRM, ERP, financial, legacy, and homegrown systems that require people data.

Typically, customers have downstream integrations for ERP, CRM, and so forth. All of these require organization structures and employee information.

In this case, a master extract gets the data required across the systems. This file is sent to on-premise middleware, which manipulates and transforms the data and sends it to the downstream systems. Typically, the data elements used here are work structures, employee assignment changes, and so on. These extracts tend to have fewer data elements and are simple. After the initial sync, they are generally changes-only extracts, and hence have fewer rows of data.

Specific HCM applications that are not in the cloud landscape, such as LMS and Time and Labor, have specific object needs. It is recommended to have dedicated HCM Extracts for these use cases.

Recommended Tool for Data: HCM Extract

The HCM Extracts feature in HCM Cloud is a flexible outbound integration tool for generating data files and reports. This tool lets you choose the HCM data, gathers it from the database, and archives it. It can then convert this archived data to a format of your choice and deliver it to recipient systems.

o HCM Extract has a dedicated UI for specifying the records and attributes to be extracted. The set of records to be extracted can be controlled using complex selection criteria.
o Data elements in an HCM Extract are defined using fast formula database items and rules.
o HCM Extract is designed and tuned to handle large volumes. There are over 3000 objects covered.
o Using HCM Extract protects your integrations during upgrades, and performance is owned and optimized by Oracle.
o The output of an extract can optionally be generated in the following formats by leveraging the built-in integration with BI Publisher: CSV, XML, Excel, HTML, RTF, and PDF.
o HCM Extracts can be invoked from the UI in the Data Exchange work area or from the Checklists UI in the Payroll work area. Alternatively, they can be invoked from outside HCM Cloud using the FlowActionsService web service, allowing the outbound extract to be automated as part of an overall integration flow.
o HCM Extracts is the ONLY tool that should be used for all bulk retrieval of data and to create integrations to downstream systems.

Recommended Architecture for Out-Bound Integrations

There are broadly three types of interfaces out of Fusion HCM:

o Extracts that feed work structures and employee data to downstream ERP and other systems. In this case, Fusion HCM is the master for the data, and the downstream systems (procurement, ERP, and others) need the work structures and employee data. This is the most common extract required for most downstream systems.
o Specialized extracts for Payroll and Benefits systems. Here, the interface needs several attributes of data, most often in a prescribed format.
o Interfaces to other systems in the HCM landscape, such as Kronos T&L and other LMS systems. These interfaces also carry other attributes, such as assignments, locations, and skills.

In addition to the type of interface, organization size and the number of downstream interfaces add further complexity.

Scenario: small organization that needs 5-10 extracts from HCM Cloud

In this case, you can define and run the extracts and transport the output files to target systems directly from HCM Cloud.

Scenario: medium/large enterprise that needs HR data for a large number of downstream systems

In this case, it is recommended that you take only the fewest necessary extracts from HCM Cloud. Use on-premise middleware to transform the extracts into the shapes required by downstream systems, and push the data into the downstream systems and databases directly from this middleware layer.

[Figure: HCM Extracts -> Middleware on Premise (File Transformation, Load, Transport) -> Downstream systems / 3rd Party Systems or service providers]
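
To make the middleware role in this scenario concrete, the sketch below takes one master extract and reshapes it for two downstream consumers. Everything specific here is hypothetical: the XML element names, the sample data, and the two target shapes (a CSV for an ERP system and JSON for a CRM system) are invented for illustration and do not reflect the actual HCM Extracts output schema.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical master extract; real extract XML will look different.
SAMPLE_EXTRACT = """<Extract>
  <Employee><PersonNumber>1001</PersonNumber><Name>Ana Silva</Name><Department>Finance</Department></Employee>
  <Employee><PersonNumber>1002</PersonNumber><Name>Raj Patel</Name><Department>Sales</Department></Employee>
</Extract>"""


def parse_extract(xml_text: str) -> list[dict]:
    """Parse the master extract once into plain dictionaries."""
    root = ET.fromstring(xml_text)
    return [{child.tag: child.text for child in emp} for emp in root.findall("Employee")]


def to_erp_csv(employees: list[dict]) -> str:
    """Shape for a hypothetical ERP feed: person number and department."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["person_number", "department"])
    for emp in employees:
        writer.writerow([emp["PersonNumber"], emp["Department"]])
    return buffer.getvalue()


def to_crm_json(employees: list[dict]) -> str:
    """Shape for a hypothetical CRM feed: id and name only."""
    return json.dumps([{"id": emp["PersonNumber"], "name": emp["Name"]} for emp in employees])
```

The design point is that the extract is parsed once and each downstream shape is derived from the same parsed data, rather than running a separate extract from HCM Cloud per target system.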

Recommended Best Practices and Design Considerations

For customers with a large number of downstream integration requirements, the recommended best practice is to take the fewest necessary extracts from HCM Cloud. These extracts should:

o Have non-overlapping entities and attributes (for example, the same entity does not exist in multiple extracts).
o Have a clear relationship to one another in terms of the relative time at which the extracts were run.
o Be transformed into the shapes required by downstream systems using a customer’s on-premise middleware, and pushed into the downstream systems and databases directly from this middleware layer.

Customers should avoid running multiple extracts with overlapping entities and attributes and then attempting to merge data downstream across these extracts. They should also avoid attempting transaction-level integrity across multiple extracts run at different times of the day or week.
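
The non-overlap guideline can be checked at design time with a small script over the planned extract inventory. The sketch below assumes the plan is available as a mapping from extract name to the set of entities it contains; the extract and entity names are examples, not a prescribed naming scheme.

```python
from itertools import combinations


def find_overlaps(extracts: dict[str, set[str]]) -> dict[tuple[str, str], set[str]]:
    """Return the entities shared by each pair of extracts.

    `extracts` maps an extract name to the set of entity names it
    contains. Only pairs that share at least one entity are reported.
    """
    overlaps = {}
    for left, right in combinations(sorted(extracts), 2):
        shared = extracts[left] & extracts[right]
        if shared:
            overlaps[(left, right)] = shared
    return overlaps
```

An empty result means every entity is sourced from exactly one extract, so the middleware never has to merge the same entity across extracts that were run at different times.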

While smaller output files from HCM Extracts can be manipulated directly in the middleware layer, customers should consider using an ETL tool such as ODI when processing large files (for example, if the output files from HCM Extracts are over 100 MB) or when there are requirements to synchronize the data from the HCM Extract output with data from other on-premise systems.

When using data persistence, for example an operational data store (ODS), in the on-premise middleware layer, take care not to use the ODS as a source of truth for HR data, since it will always be out of sync with HCM Cloud data.

Customers should not attempt to duplicate HCM Cloud’s security rules on the ODS dataset. HCM Cloud security rules are very complex and cannot be de-normalized.

Additional best practices for extracts can be categorized into 3 sections:

1. Design Considerations

The HCM Extracts functionality makes use of database items to retrieve data from the application tables. It uses a User Entity/Route to define the query for obtaining data for a block. By way of a reference to a User Entity, the block essentially defines the FROM and general WHERE clauses of the SQL query/cursor, along with the columns that can be part of the SELECT clause per the Database Items defined for that User Entity. It is therefore crucial for end users to identify and use the right User Entity/DBI Group for extracting the attributes they require.

Block links are used to form a block hierarchy or sequence, with records in each block defining the actual sequencing of block hierarchy traversal relative to other records in the block. Block links are based on a DBI (and hence a DBI group) defined for each of the respective block User Entities, specifying an attribute from each block with which to form the join criteria. Users need to join the blocks using appropriate and efficient joins to retrieve the necessary data in a hierarchical format.

Database Items (DBIs) and Database Item Groups are created for these User Entities, and may be used as the basis for Data Elements within a record and/or for setting the values of relevant contexts for the block.

It is therefore critical to design the extract structure with appropriate blocks, based on the requirements, from the outset. Any change to the structure of the extract changes the XML data structure and has a significant impact on downstream processing code, and thereby on the integration as well. A change to the extract data structure may also trigger a full extract when the extract is run in Changes Only mode.

2. High Volume Extract Considerations

When dealing with a large volume of data to be exported, leverage the multi-threading capabilities in extracts and opt for Changes Only mode for data extraction. Changes Only mode requires the extract to be designed with threading options. This helps the required data to be archived in parallel.

3. Maintenance: Purging Archive Tables

An extract run archives the data into the Pay Action Information table. The archive table might grow to a large extent depending on the number of extracts being run. This could result in performance degradation as the data volume in this table increases. It is therefore recommended to roll back previous extract runs, and errored extract runs if any.

References

HCM Extracts:

o Oracle Fusion HCM Extracts Guide

o Extracts delivery options

o Defining Simple Outbound Interface Using HCM Extracts

o How to use DBIs for formulas and Extracts in Fusion HCM

Guidance on Use of BI Publisher (BIP) for Data Extraction

The use of BIP for data extraction is not a recommended best practice, for the following reasons:

o Customers using BI Publisher for data extracts write their own extracts by directly accessing database tables in HCM Cloud.
o BI Publisher extracts are not protected through upgrades. BI Publisher custom extracts can break each time there is a change in the schema.
o Unlike HCM Extracts, BI Publisher custom extract performance is not owned and optimized by Oracle.

Oracle Corporation, World Headquarters
500 Oracle Parkway
Redwood Shores, CA 94065, USA

Worldwide Inquiries
Phone: +1.650.506.7000
Fax: +1.650.506.7200

Connect with us: blogs.oracle.com/oracle, facebook.com/oracle, twitter.com/oracle, oracle.com

Copyright © 2015, Oracle and/or its affiliates. All rights reserved. This document is provided for information purposes only, and the contents hereof are subject to change without notice. This document is not warranted to be error-free, nor subject to any other warranties or conditions, whether expressed orally or implied in law, including implied warranties and conditions of merchantability or fitness for a particular purpose. We specifically disclaim any liability with respect to this document, and no contractual obligations are formed either directly or indirectly by this document. This document may not be reproduced or transmitted in any form or by any means, electronic or mechanical, for any purpose, without our prior written permission.

Oracle and Java are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners.

Intel and Intel Xeon are trademarks or registered trademarks of Intel Corporation. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. AMD, Opteron, the AMD logo, and the AMD Opteron logo are trademarks or registered trademarks of Advanced Micro Devices. UNIX is a registered trademark of The Open Group. 0115


- HCM Data Loader - Loading Payroll Interface Inbound Records (Doc ID 2141697.1)Documento1 páginaHCM Data Loader - Loading Payroll Interface Inbound Records (Doc ID 2141697.1)iceyrosesAinda não há avaliações

- Workforce Deployment ImplementationDocumento16 páginasWorkforce Deployment ImplementationsailushaAinda não há avaliações

- 05.oracle HCM Cloud R11 Loading WorkersDocumento28 páginas05.oracle HCM Cloud R11 Loading WorkersAshive MohunAinda não há avaliações

- Oracle HRMS Functional Document: Jobs and Positions Setup's (SIT's & EIT's)Documento20 páginasOracle HRMS Functional Document: Jobs and Positions Setup's (SIT's & EIT's)Dhina KaranAinda não há avaliações

- WFM Ter Compare WRKR Holiday To Reported Hours ApDocumento5 páginasWFM Ter Compare WRKR Holiday To Reported Hours ApYonny Isidro RendonAinda não há avaliações

- Create Functiong PartDocumento11 páginasCreate Functiong Partprasad_431Ainda não há avaliações

- Lesson1-HDL Introduction NotesDocumento38 páginasLesson1-HDL Introduction NotesShravanUdayAinda não há avaliações

- HCM Using Person Synchronization ESS JobDocumento13 páginasHCM Using Person Synchronization ESS JobAadii GoyalAinda não há avaliações

- Create Work Schedule in Fusion HCMDocumento4 páginasCreate Work Schedule in Fusion HCMRavish Kumar SinghAinda não há avaliações

- HCM Data Loader Support Diagnostic PDFDocumento9 páginasHCM Data Loader Support Diagnostic PDFsjawadAinda não há avaliações

- Delete Entity in FusionDocumento4 páginasDelete Entity in FusionBick KyyAinda não há avaliações

- Oracle Fusion HRMS SA Payroll BalancesDocumento58 páginasOracle Fusion HRMS SA Payroll BalancesFeras AlswairkyAinda não há avaliações

- Oracle Payroll India User ManualDocumento100 páginasOracle Payroll India User Manualnikhilburbure100% (1)

- SIT and EITDocumento8 páginasSIT and EITJoshua MeyerAinda não há avaliações

- HCM Extract Development in Fusion HCM: Shweta BapatDocumento42 páginasHCM Extract Development in Fusion HCM: Shweta Bapatanushasidagonde100% (1)

- Online Payslip For International LocalizationDocumento19 páginasOnline Payslip For International LocalizationPradeepRaghavaAinda não há avaliações

- Payroll InternationalDocumento20 páginasPayroll InternationalSridhar YerramAinda não há avaliações

- Oracle - Oracle HCM Cloud: Configure Enterprise and Workforce StructuresDocumento5 páginasOracle - Oracle HCM Cloud: Configure Enterprise and Workforce StructuresVishalAinda não há avaliações

- Oracle 1Z0-337 PDF Questions - 1Z0-337 Latest Questions 2018Documento5 páginasOracle 1Z0-337 PDF Questions - 1Z0-337 Latest Questions 2018Pass4 Leads0% (1)

- Applies To:: Apr 10, 2015 HowtoDocumento8 páginasApplies To:: Apr 10, 2015 HowtokottamramreddyAinda não há avaliações

- Fusion HCMDocumento39 páginasFusion HCMPrabhu SubramaniamAinda não há avaliações

- Loading Element Entries Using HCM Data Loader PDFDocumento11 páginasLoading Element Entries Using HCM Data Loader PDFYousef AsadiAinda não há avaliações

- HCM Setup For Non HCM CustomersDocumento6 páginasHCM Setup For Non HCM CustomersMark JaneAinda não há avaliações

- HDL Error Report: (BI Publisher)Documento10 páginasHDL Error Report: (BI Publisher)Gopinath NatAinda não há avaliações

- A Informative Study On Big Data in Present Day WorldDocumento8 páginasA Informative Study On Big Data in Present Day WorldInternational Journal of Innovative Science and Research TechnologyAinda não há avaliações

- 0bqj3ybp4 - What Is Project ManagementDocumento24 páginas0bqj3ybp4 - What Is Project ManagementMughni SamaonAinda não há avaliações

- Digital Base1 - HandbookDocumento23 páginasDigital Base1 - HandbookKeerthi SenthilAinda não há avaliações

- Curriculum Vitae - I Gusti Agung Kartika ShantiDocumento1 páginaCurriculum Vitae - I Gusti Agung Kartika ShantiI Gusti Agung Kartika ShantiAinda não há avaliações

- EXT Read Me For Customers Service Bureau SWIFT CSP 2021 For Kyriba CusDocumento2 páginasEXT Read Me For Customers Service Bureau SWIFT CSP 2021 For Kyriba Cusgautam_86Ainda não há avaliações

- Ccna 1 Module 3 v4.0Documento4 páginasCcna 1 Module 3 v4.0ccnaexploration4Ainda não há avaliações

- Reset The Password of The Admin User On A Cisco Firepower SystemDocumento7 páginasReset The Password of The Admin User On A Cisco Firepower SystemWafikAinda não há avaliações

- Wait EventsDocumento5 páginasWait EventsfaisalwasimAinda não há avaliações

- Web Publishing Test CasesDocumento3 páginasWeb Publishing Test CasesJafar BhattiAinda não há avaliações

- The Ultimate C - C - THR86 - 2011 - SAP Certified Application Associate - SAP SuccessFactors Compensation 2H2020Documento2 páginasThe Ultimate C - C - THR86 - 2011 - SAP Certified Application Associate - SAP SuccessFactors Compensation 2H2020KirstingAinda não há avaliações

- Netapp MAX DataDocumento4 páginasNetapp MAX Datamanasonline11Ainda não há avaliações

- Assembler Module 1-1Documento23 páginasAssembler Module 1-1arunlaldsAinda não há avaliações

- Module 2Documento21 páginasModule 2efrenAinda não há avaliações

- LogDocumento109 páginasLogMerechell MisterioAinda não há avaliações

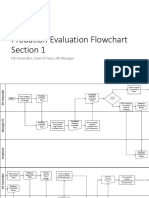

- Probation Evaluation FlowchartDocumento8 páginasProbation Evaluation FlowchartAdid RachmadiansyahAinda não há avaliações

- Unit 8 - Week 6: Assignment 6Documento3 páginasUnit 8 - Week 6: Assignment 6Manju Sk17Ainda não há avaliações

- Multitenant Database ArchitectureDocumento70 páginasMultitenant Database ArchitectureSaifur RahmanAinda não há avaliações

- Gsmme Admin Guide: G Suite Migration For Microsoft ExchangeDocumento54 páginasGsmme Admin Guide: G Suite Migration For Microsoft ExchangeadminakAinda não há avaliações

- Webp - Case Study - 601Documento15 páginasWebp - Case Study - 601Prateek KumarAinda não há avaliações

- 4 A Study of Encryption AlgorithmsDocumento9 páginas4 A Study of Encryption AlgorithmsVivekAinda não há avaliações

- Soa Interview1Documento18 páginasSoa Interview1anilandhraAinda não há avaliações

- Overview of Sap ModulesDocumento10 páginasOverview of Sap ModulesRAJLAXMI THENGDIAinda não há avaliações

- Getting Started With PdiDocumento38 páginasGetting Started With Pdider_teufelAinda não há avaliações

- SRM Mod 3 SRM PlanDocumento23 páginasSRM Mod 3 SRM PlanKedar Vishnu LadAinda não há avaliações