Chapter Three: Item and Test Development Processes

CHAPTER THREE: ITEM AND TEST DEVELOPMENT PROCESSES

GENERAL KEYSTONE TEST DEVELOPMENT PROCESSES

The core portion of the Spring 2013 Keystone Exams operational administration was made up of items that were

field tested in the Spring 2011 Keystone Exams embedded field test administration. Therefore, the activities that

led to the Spring 2013 Keystone Exams operational administration began with the development of the test items

that appeared in the 2011 administration. In turn, items that appeared on the field test portion of the 2011

administration were developed starting in 2010. In addition, a portion of the core in 2013 will be used for a

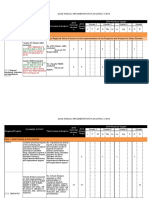

biennial overlap to the 2015 core. Table 3–1 below is a graphic representation of the basic process flow and

overlap of the development cycles.

Table 3–1. General Development and Usage Cycle of the Algebra I, Biology, and Literature Keystone Exams

Events occurring in calendar years 2009, 2010, 2011, 2012, 2013, 2014*, and 2015*, by administration year:

| Admin Year | Events |
|---|---|
| 2010–2011 | Initial item development for Fall 2010 FT; Fall 2010 stand-alone FT; data review of Fall 2010 FT; new item development for Spring 2011 FT; Spring 2011 operational & embedded FT; data review of Spring 2011 FT; biennial core-to-core overlap deferred due to 2012 hiatus; biennial core-to-core overlap (2011 core included as a portion of the 2014 core) |
| 2011–2012 | No operational administrations in 2011–2012 (program in hiatus) |
| 2012–2013* | New item development for Spring 2013 FT; Winter 2012/13 administration; Spring 2013 operational & embedded FT; data review of Spring 2013 FT; Summer 2013 administration; biennial core-to-core overlap (2013 core included as a portion of the 2015 core) |
| 2013–2014* | New item development for Spring 2014 FT; Winter 2013/14 administration; Spring 2014 operational & embedded FT; data review of Spring 2014 FT; Summer 2014 administration |
| 2014–2015* | New item development for Spring 2015 FT; Winter 2014/15 administration; Spring 2015 operational & embedded FT; data review of Spring 2015 FT; Summer 2015 administration |

*Projected/scheduled tasks and activities

2013 Keystone Exams Technical Report Page 17

GENERAL TEST DEFINITION

The plan for the Keystone Exam was developed through the collaborative efforts of the Pennsylvania Department of Education (PDE) and Data Recognition Corporation (DRC). The exams are presented either online or on paper. The paper version uses two printed testing materials: a test book and a separate answer book. The test book contains multiple-choice (MC) items. The answer book contains scannable pages for multiple-choice responses, constructed-response (CR) items with response spaces, and demographic data collection areas. All MC items are worth 1 point. Algebra I CR items receive a maximum of 4 points (on a scale of 0–4), and all Biology and Literature CR items receive a maximum of 3 points (on a scale of 0–3). In spring 2013, each test form contained common items (identical on all forms) along with embedded field test items.

FUTURE PLANS FOR CORE-TO-CORE OVERLAP ITEMS

The common items consist of a set of core items taken by all students. In future administrations (starting in 2014), these core items may also include core-to-core overlapping items, which are items that also appeared on the core form of the administration two years before. The overlap connects the spring and summer administrations of year x and the winter administration in year x+1, with the year x+2 spring and summer and year x+3 winter administrations. The first biennial core-to-core overlap from the spring 2011 and winter 2011–2012 core was scheduled to begin with the spring 2013 administration. However, when the program was placed on hiatus during the 2011–2012 school year, the overlap was moved to the spring 2014 administration.
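The year x to year x+2 pairing described above can be sketched in a few lines of Python. This is only an illustration of the stated rule; the function names `core_cycle` and `overlap_cycle` are hypothetical and do not come from the report.

```python
from typing import List, Tuple

Administration = Tuple[str, int]  # (season, calendar year)


def core_cycle(x: int) -> List[Administration]:
    """Administrations that share the core built for spring of year x.

    Per the text: spring and summer of year x, plus winter of year x+1.
    """
    return [("spring", x), ("summer", x), ("winter", x + 1)]


def overlap_cycle(x: int) -> List[Administration]:
    """Administrations two years later that may reuse part of that core.

    Per the text: spring and summer of year x+2, plus winter of year x+3.
    """
    return core_cycle(x + 2)


# Pair each administration in the 2013 cycle with its overlap partner.
pairs = list(zip(core_cycle(2013), overlap_cycle(2013)))
for source, target in pairs:
    print(source, "->", target)
```

For x = 2013 this pairs spring 2013 with spring 2015, summer 2013 with summer 2015, and the winter administration in 2014 with the winter administration in 2016.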

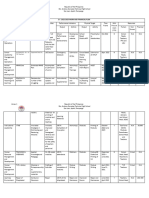

ALGEBRA I TEST DEFINITIONS

The Spring 2013 Algebra I Keystone Exam was composed of 20 forms. Every form contained the common items, which were identical for all students, along with a set of generally unique items. The following two tables display the design for Algebra I for forms 1 through 20. The column entries for these tables denote the following:

- Number of unique common, or core, MC items
- Number of unique common, or core, CR items
- Number of embedded MC field test items
- Number of embedded CR field test items
- Total number of MC and CR items in the form

Table 3–2. Algebra I Test Plan (Spring 2013) per Operational Form

| Algebra I Module | Core MC Items | Core CR Items | Field Test MC Items | Field Test CR Items | Total MC Items (Core & FT) | Total CR Items (Core & FT) |
|---|---|---|---|---|---|---|
| 1 | 18 | 3 | 5 | 1 | 23 | 4 |
| 2 | 18 | 3 | 5 | 1 | 23 | 4 |
| Total | 36 | 6 | 10 | 2 | 46 | 8 |

Table 3–3. Algebra I Test Plan (Spring 2013) per 20 Operational Forms

| Algebra I Module | Core MC Items | Core CR Items | Field Test MC Items | Field Test CR Items | Total MC Items (Core & FT) | Total CR Items (Core & FT) |
|---|---|---|---|---|---|---|
| 1 | 18 | 3 | 100 | 20 | | |
| 2 | 18 | 3 | 100 | 20 | | |
| Total | 36 | 6 | 200 | 40 | 236 | 46 |
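The per-20-forms figures follow from the per-form plan by simple arithmetic, which the short Python sketch below reproduces. It assumes that the core items are counted once (they are identical on every form) and that each form contributes its own field test items; the function name `totals_across_forms` is hypothetical.

```python
def totals_across_forms(core_mc, core_cr, ft_mc_per_form, ft_cr_per_form, n_forms):
    """Item counts across all forms: core counted once, field test per form."""
    ft_mc = ft_mc_per_form * n_forms
    ft_cr = ft_cr_per_form * n_forms
    return {
        "ft_mc": ft_mc,
        "ft_cr": ft_cr,
        "total_mc": core_mc + ft_mc,
        "total_cr": core_cr + ft_cr,
    }


# Algebra I, Spring 2013: 36 core MC and 6 core CR items in total,
# plus 10 field test MC and 2 field test CR items per form, on 20 forms.
algebra = totals_across_forms(
    core_mc=36, core_cr=6, ft_mc_per_form=10, ft_cr_per_form=2, n_forms=20
)
print(algebra)
# {'ft_mc': 200, 'ft_cr': 40, 'total_mc': 236, 'total_cr': 46}
```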

The core portions of the winter 2012/2013 administration were made up of items that were field tested in fall 2010 and/or spring 2011. Although each spring administration includes embedded field test items, the summer, winter, and breach forms do not include any embedded field test items due to the lower n-counts for these administrations. However, summer, winter, and breach forms still include the same number of items that appear in the spring administration. Instead of field test items, the slots in these exams are filled by placeholder (PH) items. The following table displays the design for the Algebra I Summer, Winter, and Breach operational forms.

Table 3–4. Algebra I Test Plan (2013 Summer, Winter, and Breach) per Operational Form

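| Algebra I Module | Core MC Items | Core CR Items | Placeholder MC Items | Placeholder CR Items | Total MC Items (Core & PH) | Total CR Items (Core & PH) | Number of Forms: Master Core | Number of Forms: Scrambles |
|---|---|---|---|---|---|---|---|---|
| 1 | 18 | 3 | 5 | 1 | 23 | 4 | | |
| 2 | 18 | 3 | 5 | 1 | 23 | 4 | | |
| Total | 36 | 6 | 10 | 2 | 46 | 8 | 1 | 3 |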

Since an individual student’s score is based solely on the common (or core) items, the total number of operational points is 60 for Algebra I. The total score is obtained by combining the points from the core MC (1 point each) and core CR (up to 4 points each) portions of the exam as follows:

Table 3–5. Algebra I Core Points

| Algebra I | Weight | Core MC Items | Core CR Items | Core MC Points | Core CR Points | Total Points |
|---|---|---|---|---|---|---|
| Module 1 | 50% | 18 | 3 | 18 | 12 | 30 |
| Module 2 | 50% | 18 | 3 | 18 | 12 | 30 |
| Algebra I Total | 100% | 36 | 6 | 36 | 24 | 60 |
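As a quick check on the core point totals, the calculation can be written out in Python. This is a minimal sketch using only the item counts and point values stated above; `core_points` is a hypothetical helper name.

```python
def core_points(mc_items, cr_items, cr_max_points, mc_points=1):
    """Maximum operational points from core MC and core CR items."""
    return mc_items * mc_points + cr_items * cr_max_points


# Algebra I: each module has 18 core MC items (1 point each)
# and 3 core CR items (up to 4 points each).
algebra_module = core_points(mc_items=18, cr_items=3, cr_max_points=4)
algebra_total = 2 * algebra_module

print(algebra_module, algebra_total)  # 30 60
```

The same function applies to any exam that follows this scoring structure, given its core item counts and the maximum CR score.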

The Algebra I Exam results will be reported in two categories based on the two modules of the Algebra I Exam. The two reporting categories, numbered by module, are:

1. Operations and Linear Equations & Inequalities

2. Linear Functions and Data Organization

The distribution of Algebra I items into these two categories is shown in the following table.

Table 3–6. Algebra I Module and Anchor Distribution

| Algebra I Module | Raw Points | Module Weight | Number of Anchors | Number of Eligible Content |
|---|---|---|---|---|
| 1 | 30 | 50% | 3 | 18 |
| 2 | 30 | 50% | 3 | 15 |

The reporting categories are further subdivided for specificity and Eligible Content (limits). Each subdivision is coded by adding an additional character to the framework of the labeling system. These subdivisions are called Assessment Anchors and Eligible Content. More information about Assessment Anchors and Eligible Content is in Chapter Two.

For more information concerning the process used to convert the Algebra I operational test plan into forms (i.e., form construction), see Chapter Six.

For more information concerning the test sessions, timing, and layout for the Algebra I operational exam, see Chapter Seven.

BIOLOGY TEST DEFINITIONS

The Spring 2013 Biology Keystone Exam was composed of 20 forms. Every form contained the common items, which were identical for all students, along with a set of generally unique items. The following two tables display the design for Biology for forms 1 through 20. The column entries for these tables denote the following:

- Number of unique common, or core, MC items
- Number of unique common, or core, CR items
- Number of embedded MC field test items
- Number of embedded CR field test items
- Total number of MC and CR items in the form

Table 3–7. Biology Test Plan (Spring 2013) per Operational Form

| Biology Module | Core MC Items | Core CR Items | Field Test MC Items | Field Test CR Items | Total MC Items (Core & FT) | Total CR Items (Core & FT) |
|---|---|---|---|---|---|---|
| 1 | 24 | 3 | 8 | 1 | 32 | 4 |
| 2 | 24 | 3 | 8 | 1 | 32 | 4 |
| Total | 48 | 6 | 16 | 2 | 64 | 8 |

Table 3–8. Biology Test Plan (Spring 2013) per 20 Operational Forms

| Biology Module | Core MC Items | Core CR Items | Field Test MC Items | Field Test CR Items | Total MC Items (Core & FT) | Total CR Items (Core & FT) |
|---|---|---|---|---|---|---|
| 1 | 24 | 3 | 160 | 20 | | |
| 2 | 24 | 3 | 160 | 20 | | |
| Total | 48 | 6 | 320 | 40 | 368 | 46 |

The core portions of the winter 2012/2013 administration were made up of items that were field tested in fall 2010 and/or spring 2011. Although each spring administration includes embedded field test items, the summer, winter, and breach forms do not include any embedded field test items due to the lower n-counts for these administrations. However, summer, winter, and breach forms still include the same number of items that appear in the spring administration. Instead of field test items, the slots in these exams are filled by placeholder (PH) items. The following table displays the design for the Biology Summer, Winter, and Breach operational forms.

Table 3–9. Biology Test Plan (2013 Summer, Winter, and Breach) per Operational Form

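| Biology Module | Core MC Items | Core CR Items | Placeholder MC Items | Placeholder CR Items | Total MC Items (Core & PH) | Total CR Items (Core & PH) | Number of Forms: Master Core | Number of Forms: Scrambles |
|---|---|---|---|---|---|---|---|---|
| 1 | 24 | 3 | 8 | 1 | 32 | 4 | | |
| 2 | 24 | 3 | 8 | 1 | 32 | 4 | | |
| Total | 48 | 6 | 16 | 2 | 64 | 8 | 1 | 3 |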

Since an individual student’s score is based solely on the common (or core) items, the total number of operational points is 66 for Biology. The total score is obtained by combining the points from the core MC (1 point each) and core CR (up to 3 points each) portions of the exam as follows:

Table 3–10. Biology Core Points

| Biology | Weight | Core MC Items | Core CR Items | Core MC Points | Core CR Points | Total Points |
|---|---|---|---|---|---|---|
| Module 1 | 50% | 24 | 3 | 24 | 9 | 33 |
| Module 2 | 50% | 24 | 3 | 24 | 9 | 33 |
| Biology Total | 100% | 48 | 6 | 48 | 18 | 66 |

The Biology Exam results will be reported in two categories based on the two modules of the Biology Exam.

1. Cells and Cell Processes

2. Continuity and Unity of Life

The distribution of Biology items into these two categories is shown in the following table.

Table 3–11. Biology Module and Anchor Distribution

| Biology Module | Raw Points | Module Weight | Number of Anchors | Number of Eligible Content |
|---|---|---|---|---|
| 1 | 33 | 50% | 4 | 16 |
| 2 | 33 | 50% | 4 | 22 |

The reporting categories are further subdivided for specificity and Eligible Content (limits). Each subdivision is coded by adding an additional character to the framework of the labeling system. These subdivisions are called Assessment Anchors and Eligible Content. More information about Assessment Anchors and Eligible Content is in Chapter Two.

For more information concerning the process used to convert the Biology operational test plan into forms (i.e., form construction), see Chapter Six.

For more information concerning the test sessions, timing, and layout for the Biology operational exam, see Chapter Seven.

LITERATURE TEST DEFINITIONS

The Spring 2013 Literature Keystone Exam was composed of 20 forms. Every form contained the common items, which were identical for all students, along with a set of generally unique items. The following two tables display the design for Literature for forms 1 through 20. The column entries for these tables denote the following:

- Number of unique common, or core, passages
- Number of unique common, or core, MC items
- Number of unique common, or core, CR items
- Number of embedded field test passages
- Number of embedded MC field test items
- Number of embedded CR field test items
- Total number of passages, MC items, and CR items in the form

Table 3–12. Literature Test Plan (Spring 2013) per Operational Form

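| Literature Module | Core Passages | Core MC Items | Core CR Items | Field Test Passages | Field Test MC Items | Field Test CR Items | Total Passages (Core & FT) | Total MC Items (Core & FT) | Total CR Items (Core & FT) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 17 | *3 | 1 | 6 | 1 | 3 | 23 | 4 |
| 2 | 2 | 17 | *3 | 1 | 6 | 1 | 3 | 23 | 4 |
| Total | 4 | 34 | 6 | 2 | 12 | 2 | 6 | 46 | 8 |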

*For each module, one core passage has two CRs and one core passage has one CR.

Table 3–13. Literature Test Plan (Spring 2013) per 20 Operational Forms

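| Literature Module | Core Passages | Core MC Items | Core CR Items | Field Test Passages | Field Test MC Items | Field Test CR Items | Total Passages (Core & FT) | Total MC Items (Core & FT) | Total CR Items (Core & FT) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 17 | *3 | 10 | 120 | 20 | | | |
| 2 | 2 | 17 | *3 | 10 | 120 | 20 | | | |
| Total | 4 | 34 | 6 | 20 | 240 | 40 | 24 | 274 | 46 |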

*For each module, one core passage has two CRs and one core passage has one CR.

The core portions of the winter 2012/2013 administration were made up of items that were field tested in fall 2010 and/or spring 2011. Although each spring administration includes embedded field test items, the summer, winter, and breach forms do not include any embedded field test items due to the lower n-counts for these administrations. However, summer, winter, and breach forms still include the same number of items that appear in the spring administration. Instead of field test items, the slots in these exams are filled by placeholder items. The following table displays the design for the Literature Summer, Winter, and Breach operational forms.

Table 3–14. Literature Test Plan (2013 Summer, Winter, and Breach) per Operational Form

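| Literature Module | Core Passages | Core MC Items | Core CR Items | Placeholder Passages | Placeholder MC Items | Placeholder CR Items | Total MC Items (Core & PH) | Total CR Items (Core & PH) | Number of Forms: Master Core | Number of Forms: Scrambles |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 17 | *3 | 1 | 6 | 1 | | | | |
| 2 | 2 | 17 | *3 | 1 | 6 | 1 | | | | |
| Total | 4 | 34 | 6 | 2 | 12 | 2 | | | 1 | 3 |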

*For each module, one core passage has two CRs and one core passage has one CR.

Since an individual student’s score is based solely on the common (or core) items, the total number of operational points is 52 for Literature. The total score is obtained by combining the points from the core MC (1 point each) and core CR (up to 3 points each) portions of the exam as follows:

Table 3–15. Literature Core Points

| Literature | Weight | Core Passages | Core MC Items | Core CR Items | Core MC Points | Core CR Points | Total Points |
|---|---|---|---|---|---|---|---|
| Module 1 | 50% | 2 | 17 | 3 | 17 | 9 | 26 |
| Module 2 | 50% | 2 | 17 | 3 | 17 | 9 | 26 |
| Literature Total | 100% | 4 | 34 | 6 | 34 | 18 | 52 |

The Literature Exam results will be reported in two broad categories based on the two modules of the Literature Exam.

1. Fiction Literature

2. Nonfiction Literature

The distribution of Literature items into these two categories is shown in the following table.

Table 3–16. Literature Module and Anchor Distribution

| Literature Module | Raw Points | Module Weight | Number of Anchors | Number of Eligible Content |
|---|---|---|---|---|
| 1 | 26 | 50% | 2 | 25 |
| 2 | 26 | 50% | 2 | 31 |

The reporting categories are further subdivided for specificity and Eligible Content (limits). Each subdivision is coded by adding an additional character to the framework of the labeling system. These subdivisions are called Assessment Anchors and Eligible Content. More information about Assessment Anchors and Eligible Content is in Chapter Two.

For more information concerning the process used to convert the Literature operational test plan into forms (i.e., form construction), see Chapter Six.

For more information concerning the test sessions, timing, and layout for the Literature operational exam, see Chapter Seven.

ITEM DEVELOPMENT CONSIDERATIONS

Alignment to the Keystone Assessment Anchors and Eligible Content, course-level appropriateness (as specified by PDE), depth of knowledge (DOK), item/task level of complexity, estimated difficulty level, relevancy of context, rationale for distractors, style, accuracy, and correct terminology were major considerations in the item development process. The Standards for Educational and Psychological Testing (American Educational Research Association, American Psychological Association, and National Council on Measurement in Education, 1999) and Universal Design (Thompson, Johnstone, & Thurlow, 2002) guided the development process. In addition, Fairness in Testing: Training Manual for Issues of Bias, Fairness, and Sensitivity (DRC, 2010) was used for developing items. All items were reviewed for fairness by bias and sensitivity committees and for content by Pennsylvania educators and field specialists.

BIAS, FAIRNESS, AND SENSITIVITY OVERVIEW

At every stage of the item and test development process, DRC employs procedures that are designed to ensure that items and tests meet Standard 7.4 of the Standards for Educational and Psychological Testing (AERA, APA, & NCME, 1999).

Standard 7.4: Test developers should strive to identify and eliminate language, symbols, words, phrases, and content that are generally regarded as offensive by members of racial, ethnic, gender, or other groups, except when judged to be necessary for adequate representation of the domain.

To meet Standard 7.4, DRC uses a series of internal quality steps. DRC provides specific training for test developers, item writers, and reviewers on how to write, review, revise, and edit items with respect to bias, fairness, and sensitivity (as well as technical quality). Training also includes an awareness of and sensitivity to issues of cultural diversity. In addition to providing internal training in reviewing items in order to eliminate potential bias, DRC also provides external training to the review panels of minority experts, teachers, and other stakeholders.

DRC’s guidelines for bias, fairness, and sensitivity include instruction concerning how to eliminate language, symbols, words, phrases, and content that might be considered offensive by members of racial, ethnic, gender, or other groups. Areas of bias that are specifically targeted include but are not limited to stereotyping, gender, region/geography, ethnic/cultural, socioeconomics/class, religion, experience, and biases against a particular age group (ageism) or persons with disabilities. DRC catalogues topics that should be avoided and maintains balance in gender and ethnic emphasis within the pool of available items and passages.

See the sections below in this chapter for more information about the Bias, Fairness, and Sensitivity Review meetings conducted for the Keystone Exams.

UNIVERSAL DESIGN OVERVIEW

The principles of universal design were incorporated throughout the item development process to allow participation of the widest possible range of students in the Keystone Exams. The following checklist was used as a guideline:

- Items measure what they are intended to measure.
- Items respect the diversity of the assessment population.
- Items have a clear format for text.
- Stimuli and items have clear pictures and graphics.
- Items have concise and readable text.
- Items allow changes to other formats, such as Braille, without changing meaning or difficulty.
- The arrangement of the items on the test has an overall appearance that is clean and well organized.

A more extensive description of the application of the principles of universal design is provided in Chapter Four.

DEPTH-OF-KNOWLEDGE OVERVIEW

An important element in statewide graduation exams is the alignment between the overall assessment system and the state’s standards. A methodology developed by Norman Webb (1999, 2006) offers a comprehensive model that can be applied to a wide variety of contexts. With regard to the alignment between standards statements and the assessment instruments, Webb’s criteria include five categories, one of which deals with content. Within the content category is a useful set of levels for evaluating DOK. According to Webb (1999), “depth-of-knowledge consistency between standards and assessments indicates alignment if what is elicited from students on the assessment is as demanding cognitively as what students are expected to know and do as stated in the standards” (pp. 7–8). The four levels of cognitive complexity (i.e., DOK) are as follows:

- Level 1: Recall
- Level 2: Application of Skill/Concept
- Level 3: Strategic Thinking
- Level 4: Extended Thinking

DOK levels were incorporated into the item writing and review process, and items were coded with respect to the level they represented. The default DOK for the Keystone Exams is Level 3. The DOK level for CR items must be Level 3. The DOK level for MC items must also be Level 3; however, in some specific cases, Level 2 is allowed when the cognitive intent of an Eligible Content is Level 2. DOK Level 1 and DOK Level 4 are not included on the Keystone Exams. For more information on DOK (and a comparison of DOK to Bloom’s Taxonomy), see Appendix A.
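The DOK rules stated above can be expressed as a small check. The Python sketch below is illustrative only and is not a description of DRC's actual tooling; the function name `dok_allowed` and the parameter `eligible_content_dok` (the cognitive intent of the Eligible Content an item is aligned to) are hypothetical.

```python
def dok_allowed(item_type, dok_level, eligible_content_dok=3):
    """Return True if an item's DOK level is permitted under the stated rules.

    item_type: "MC" or "CR".
    dok_level: DOK level coded for the item (1 through 4).
    eligible_content_dok: assumed cognitive intent of the Eligible Content.
    """
    if dok_level not in (1, 2, 3, 4):
        raise ValueError("DOK level must be 1, 2, 3, or 4")
    if item_type not in ("MC", "CR"):
        raise ValueError("item_type must be 'MC' or 'CR'")
    if dok_level in (1, 4):
        return False  # Levels 1 and 4 are not included on the exams
    if item_type == "CR":
        return dok_level == 3  # CR items must be Level 3
    # MC items: Level 3 by default, Level 2 only when the
    # Eligible Content itself has a Level 2 cognitive intent.
    return dok_level == 3 or (dok_level == 2 and eligible_content_dok == 2)


print(dok_allowed("MC", 2, eligible_content_dok=2))  # True
print(dok_allowed("CR", 2, eligible_content_dok=2))  # False
```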

PASSAGE READABILITY OVERVIEW

Evaluating the readability of a passage is essentially a judgment by individuals familiar with the classroom context and what is linguistically appropriate (PDE recommends that the Literature Keystone Exam be administered at grade 10). Although various readability indices were computed and reviewed, it is recognized that such methods measure different aspects of readability and are often fraught with particular interpretive liabilities. Thus, the commonly available readability formulas were not used in a rigid way but more informally to provide for several snapshots of a passage that senior test development staff considered along with experience-based judgments in guiding the passage-selection process. In addition, passages were reviewed by committees of Pennsylvania educators who evaluated each passage for readability and grade-level appropriateness. For more information on Literature passages, see Chapter Two and the literature passage-selection process described below.

TEST ITEM READABILITY OVERVIEW

Careful attention was given to the readability of the items to make certain that the assessment focus of the item did not shift based on the difficulty of reading the item. Subject/course areas such as Algebra I or Biology contain many content-specific vocabulary terms. As a result, readability formulas were not used. However, wherever it was practicable and reasonable, every effort was made to keep the vocabulary at or one level below the course level for non-Literature exams. There was a conscious effort made to ensure that each question was evaluating a student’s ability to build toward mastery of the course standards rather than evaluating the student’s reading ability. Resources used to verify the vocabulary level were the EDL Core Vocabularies and the Children’s Writer’s Word Book.

In addition, every test question is brought before several different committees composed of Pennsylvania educators who are course-level/grade-level experts in the content field in question. They review each question from the perspective of the students they teach, determine the validity of the vocabulary used, and work to minimize the level of reading required.

Vocabulary was also addressed at the Bias, Fairness, and Sensitivity Review, although the focus was on how certain words or phrases may represent possible sources of bias or issues of fairness or sensitivity. See the sections that follow in this chapter for more information about the Bias, Fairness, and Sensitivity Review meetings conducted for the Keystone Exams.

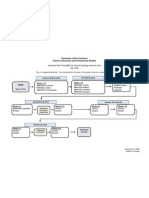

ITEM AND TEST DEVELOPMENT CYCLE

The item development process followed a logical cycle and time line, which are outlined in the table and figure below. On the front end of the schedule, tasks were generally completed with the goal of presenting field test candidate items to committees of Pennsylvania educators. On the back end of the schedule, all tasks led to the field test data review and operational test construction. This process represents a typical life cycle for an embedded Keystone field test event, not a stand-alone field test event or an accelerated development cycle. The process flowchart, also shown below, illustrates the interrelationship among the steps in the primary cycle that occurs in a normal process of development (i.e., when the items for field testing are primarily from new development, as opposed to being selected from an existing item bank). In addition, a detailed process table describing the item and test development processes also appears in Appendix C.


- Small Talk: Practice: Teachingenglish - Lesson PlansDocumento2 páginasSmall Talk: Practice: Teachingenglish - Lesson PlansLuxubu HeheAinda não há avaliações

- Meetings (1) : Getting Down To Business Topic: Meetings and Getting Down To Business AimsDocumento2 páginasMeetings (1) : Getting Down To Business Topic: Meetings and Getting Down To Business AimsLuxubu HeheAinda não há avaliações

- Aca WRT - Key FeaturesDocumento2 páginasAca WRT - Key FeaturesLuxubu HeheAinda não há avaliações

- Links For ListeningDocumento1 páginaLinks For ListeningLuxubu HeheAinda não há avaliações

- AssessYourself 2 KeyDocumento1 páginaAssessYourself 2 KeyLuxubu HeheAinda não há avaliações

- Test Your Progress: Describing A Process: Writing An EssayDocumento2 páginasTest Your Progress: Describing A Process: Writing An EssayLuxubu HeheAinda não há avaliações

- AssessYourself 1Documento3 páginasAssessYourself 1Luxubu HeheAinda não há avaliações

- AssessYourself 2 KeyDocumento1 páginaAssessYourself 2 KeyLuxubu HeheAinda não há avaliações

- AssessYourself 1Documento3 páginasAssessYourself 1Luxubu HeheAinda não há avaliações

- Hedging Language For Aca WriitngDocumento1 páginaHedging Language For Aca WriitngLuxubu HeheAinda não há avaliações

- Listening Pra - LinksDocumento1 páginaListening Pra - LinksLuxubu HeheAinda não há avaliações

- Voc DressDocumento1 páginaVoc DressLuxubu HeheAinda não há avaliações

- Cohesion (Part 1) : Improve Writing & Speaking: What Does That Mean?Documento3 páginasCohesion (Part 1) : Improve Writing & Speaking: What Does That Mean?Luxubu HeheAinda não há avaliações

- Cohesion (Part 1) : Improve Writing & Speaking: What Does That Mean?Documento3 páginasCohesion (Part 1) : Improve Writing & Speaking: What Does That Mean?Luxubu HeheAinda não há avaliações

- Hanks in Advance? No Thank You: Author: Jacob Funnell Posted: 04 / 08 / 16Documento5 páginasHanks in Advance? No Thank You: Author: Jacob Funnell Posted: 04 / 08 / 16Luxubu HeheAinda não há avaliações

- The Writing Process: Acknowledgements Introduction For Teachers Introduction For Students Academic Writing QuizDocumento7 páginasThe Writing Process: Acknowledgements Introduction For Teachers Introduction For Students Academic Writing QuizLuxubu HeheAinda não há avaliações

- The Writing Process: Acknowledgements Introduction For Teachers Introduction For Students Academic Writing QuizDocumento7 páginasThe Writing Process: Acknowledgements Introduction For Teachers Introduction For Students Academic Writing QuizLuxubu HeheAinda não há avaliações

- Coherence & Cohesion: in SummaryDocumento1 páginaCoherence & Cohesion: in SummaryLuxubu HeheAinda não há avaliações

- Reading Endangered KeyDocumento1 páginaReading Endangered KeyLuxubu HeheAinda não há avaliações

- Error RecognitionDocumento1 páginaError RecognitionLuxubu HeheAinda não há avaliações

- Medical Studies Have Shown That Smoking Not Only Leads To Health Problems For The SmokerDocumento1 páginaMedical Studies Have Shown That Smoking Not Only Leads To Health Problems For The SmokerLuxubu HeheAinda não há avaliações

- gr4 wk11 Endangered SpeciesDocumento2 páginasgr4 wk11 Endangered Speciesapi-314389226100% (2)

- WRT 1Documento2 páginasWRT 1Luxubu HeheAinda não há avaliações

- R1 KeyDocumento1 páginaR1 KeyLuxubu HeheAinda não há avaliações

- Using Public Transport Has Become Familiar To Everyone in Vietnam. Millions of People Today Are Travelling by Lots of Kinds of Public TransportDocumento1 páginaUsing Public Transport Has Become Familiar To Everyone in Vietnam. Millions of People Today Are Travelling by Lots of Kinds of Public TransportLuxubu Hehe100% (1)

- O Comp$vision LTD: M / Ra/rwrDocumento5 páginasO Comp$vision LTD: M / Ra/rwrLuxubu HeheAinda não há avaliações

- gr4 wk11 Endangered SpeciesDocumento2 páginasgr4 wk11 Endangered Speciesapi-314389226100% (2)

- WRT 1Documento2 páginasWRT 1Luxubu HeheAinda não há avaliações

- Businesswriting 5Documento4 páginasBusinesswriting 5Luxubu HeheAinda não há avaliações

- Q " I E Elx: Asking For An EstimateDocumento3 páginasQ " I E Elx: Asking For An EstimateLuxubu HeheAinda não há avaliações

- CHC33015-Work in Health and Community services-LG-F-v1.2Documento69 páginasCHC33015-Work in Health and Community services-LG-F-v1.2ஆர்த்தி ஆதிAinda não há avaliações

- AI CriminalCourt Brief V15Documento11 páginasAI CriminalCourt Brief V15Thục AnhAinda não há avaliações

- Code of Judicial Conduct: Basic Legal Ethics Section ADocumento18 páginasCode of Judicial Conduct: Basic Legal Ethics Section AVincent TombigaAinda não há avaliações

- How To Conduct A Diversity & Inclusion Survey: A Step-By-Step GuideDocumento29 páginasHow To Conduct A Diversity & Inclusion Survey: A Step-By-Step Guideheng9224Ainda não há avaliações

- The Trouble With Algorithmic Decisions: An Analytic Road Map To Examine Efficiency and Fairness in Automated and Opaque Decision MakingDocumento15 páginasThe Trouble With Algorithmic Decisions: An Analytic Road Map To Examine Efficiency and Fairness in Automated and Opaque Decision MakingdanielindpAinda não há avaliações

- Module 4 (B) - Exceptions To The Principles of Natural JusticeDocumento10 páginasModule 4 (B) - Exceptions To The Principles of Natural JusticeA y u s hAinda não há avaliações

- "Busal" by Howie Severino: By: Dexter F. Acopiado Neil Geofer Peñaranda Jam Angeline D.C. SilvestreDocumento5 páginas"Busal" by Howie Severino: By: Dexter F. Acopiado Neil Geofer Peñaranda Jam Angeline D.C. SilvestredexAinda não há avaliações

- 5 Final English Grade 6 Week 5 Second QuarterDocumento10 páginas5 Final English Grade 6 Week 5 Second QuarterShirley Padolina100% (1)

- Development of A Scale To Measure Professional SkepticismDocumento24 páginasDevelopment of A Scale To Measure Professional SkepticismagastyacorleoneAinda não há avaliações

- Pre-Test On Identifying BiiasDocumento2 páginasPre-Test On Identifying BiiasVee Jay GarciaAinda não há avaliações

- Jurnal Tugas Kompensasi - Pay EquityDocumento15 páginasJurnal Tugas Kompensasi - Pay Equityolla guritnoAinda não há avaliações

- Judge's Abuse of Disabled Pro Per Results in Landmark Appeal: Disability in Bias California Courts - Disabled Litigant Sacramento County Superior Court - Judicial Council of California Chair Tani Cantil-Sakauye – Americans with Disabilities Act – ADA – California Supreme Court - California Rules of Court Rule 1.100 Requests for Accommodations by Persons with Disabilities – California Civil Code §51 Unruh Civil Rights Act – California Code of Judicial Ethics – Commission on Judicial Performance Victoria B. Henley Director – Bias-Prejudice Against Disabled Court UsersDocumento37 páginasJudge's Abuse of Disabled Pro Per Results in Landmark Appeal: Disability in Bias California Courts - Disabled Litigant Sacramento County Superior Court - Judicial Council of California Chair Tani Cantil-Sakauye – Americans with Disabilities Act – ADA – California Supreme Court - California Rules of Court Rule 1.100 Requests for Accommodations by Persons with Disabilities – California Civil Code §51 Unruh Civil Rights Act – California Code of Judicial Ethics – Commission on Judicial Performance Victoria B. Henley Director – Bias-Prejudice Against Disabled Court UsersCalifornia Judicial Branch News Service - Investigative Reporting Source Material & Story IdeasAinda não há avaliações

- Case Study - Albert v. CursodDocumento8 páginasCase Study - Albert v. CursodAlbert Ventero CursodAinda não há avaliações

- The Bias Beneath: Uncovering Juror Bias in Sexual Assault CasesDocumento7 páginasThe Bias Beneath: Uncovering Juror Bias in Sexual Assault CasesRudolph Trial ConsultingAinda não há avaliações

- CRW - Dallas HuntDocumento2 páginasCRW - Dallas HuntPaul RegushAinda não há avaliações

- Mental Models ChecklistDocumento1 páginaMental Models ChecklistAyush Aggarwal80% (5)

- Brewer - 1979 - In-Group Bias in The Minimal Intergroup SituationDocumento18 páginasBrewer - 1979 - In-Group Bias in The Minimal Intergroup SituationcarlosadmgoAinda não há avaliações

- How Do We Engage With Others While Staying True To Ourselves?Documento4 páginasHow Do We Engage With Others While Staying True To Ourselves?Maria J. VillalpandoAinda não há avaliações

- English 8 3rd Quarter ExaminationDocumento5 páginasEnglish 8 3rd Quarter ExaminationChristian Jay PresiidenteAinda não há avaliações

- 18 Cognitive Bias ExamplesDocumento55 páginas18 Cognitive Bias Examplesbogzi100% (1)

- Strategies of Literary TranslationDocumento7 páginasStrategies of Literary TranslationMuhammad J H AbdullatiefAinda não há avaliações

- Critical Appraisal (Descriptive)Documento14 páginasCritical Appraisal (Descriptive)hanifahrafa100% (1)

- FSOA Study Guide 2012 PDFDocumento64 páginasFSOA Study Guide 2012 PDFKhalid AhmedAinda não há avaliações

- Val Ed SyllabusDocumento25 páginasVal Ed Syllabusroy piamonteAinda não há avaliações

- STS Midterm Exam Score 49 50Documento15 páginasSTS Midterm Exam Score 49 50johnAinda não há avaliações

- Readings in Philippine History Lesson 1 Introduction and Historical ResourcesDocumento7 páginasReadings in Philippine History Lesson 1 Introduction and Historical ResourcesJulie Ann NavarroAinda não há avaliações

- Lecture Notes - 18CS71 - Machine Learning - Module 1: IntroductionDocumento16 páginasLecture Notes - 18CS71 - Machine Learning - Module 1: IntroductionNiroop KAinda não há avaliações

- LECTUREETHICS Reason Impartiality and Moral CourageDocumento3 páginasLECTUREETHICS Reason Impartiality and Moral CourageAngelo PayawalAinda não há avaliações

- Annotated Works CitedDocumento4 páginasAnnotated Works Citedapi-357603868Ainda não há avaliações

- Human Factors in Dam Failure and SafetyDocumento75 páginasHuman Factors in Dam Failure and SafetyIrfan Alvi100% (1)