
Lecture11 Standard Backpropagation Matlab Examples

Uploaded by Waqas Cheema

Back propagation in neural networks

Copyright: © All Rights Reserved


CS532 Neural Networks

By Dr. Anwar M. Mirza

Lecture No. 11

Week 4, February 28, 2007
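Both programs in this lecture perform standard per-pattern backpropagation for a network with one hidden layer. The summary below uses notation chosen here, not the lecture's: V stands for hidWts, W for outWts, z for yHid, y for yOut, and the two delta vectors for outDelta and hidDelta. The input x carries the bias input x_{N+1} = 1, and the hidden bias unit is clamped to z_{P+1} = 1. One update for a randomly drawn pattern with target t should read:

```latex
\begin{aligned}
\mathbf{z}_{\mathrm{in}} &= \mathbf{x}V, & z_j &= f(z_{\mathrm{in},j}) \ (j \le P), \quad z_{P+1} = 1,\\
\mathbf{y}_{\mathrm{in}} &= \mathbf{z}W, & y_k &= f(y_{\mathrm{in},k}),\\
\delta^{\mathrm{out}}_k &= (t_k - y_k)\, f'(y_{\mathrm{in},k}), &
\delta^{\mathrm{hid}}_j &= \Big(\textstyle\sum_{k=1}^{M} w_{jk}\,\delta^{\mathrm{out}}_k\Big)\, f'(z_{\mathrm{in},j}),\\
W &\leftarrow W + \alpha\,\mathbf{z}^{\top}\boldsymbol{\delta}^{\mathrm{out}}, &
V &\leftarrow V + \alpha\,\mathbf{x}^{\top}\boldsymbol{\delta}^{\mathrm{hid}},\\
f(a) &= \frac{1}{1+e^{-a}}, & f'(a) &= f(a)\bigl(1-f(a)\bigr) \quad \text{(binary sigmoid, Program 1)},\\
f(a) &= \frac{1-e^{-a}}{1+e^{-a}}, & f'(a) &= \tfrac12\bigl(1+f(a)\bigr)\bigl(1-f(a)\bigr) \quad \text{(bipolar sigmoid, Program 2)}.
\end{aligned}
```

These are gradient-descent steps on E = ½ Σ_k (t_k − y_k)². Each hidden delta is a per-unit (elementwise) product, so there is one delta for every hidden unit.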

Program No. 1
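Program 1 solves the TC problem: classifying 3x3 images of the letters T (target 0.1) and C (target 0.9). Its eight training patterns appear to be the four 90-degree rotations of one T template and one C template. The following standalone sketch rebuilds them that way, assuming this rotation structure. The names T, C, sRot and tRot are introduced here for illustration and do not appear in the lecture.

```matlab
% Sketch: rebuild the eight TC training patterns of Program 1 from two
% 3x3 templates. Assumption: patterns 1-4 are a T and patterns 5-8 a C,
% each rotated clockwise by 0, 90, 180 and 270 degrees.
T = [0.9 0.9 0.9; 0.1 0.9 0.1; 0.1 0.9 0.1];   % letter T
C = [0.9 0.9 0.9; 0.9 0.1 0.1; 0.9 0.9 0.9];   % letter C
sRot = zeros(8, 9);      % one flattened 3x3 image per row
tRot = zeros(8, 1);      % target: 0.1 for T, 0.9 for C
for r = 0:3
    % rot90(A, -r) rotates A clockwise by r*90 degrees;
    % reshape(A.', 1, 9) flattens row by row, as Program 1 does
    sRot(r+1, :) = reshape(rot90(T, -r).', 1, 9);
    tRot(r+1) = 0.1;
    sRot(r+5, :) = reshape(rot90(C, -r).', 1, 9);
    tRot(r+5) = 0.9;
end
disp(sRot)
```

Each row of sRot should equal the corresponding s(p,:) in the listing. Building the set from templates keeps the rotated copies consistent if a template is edited.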

% Back Propagation Neural Network
% by Dr. Anwar M. Mirza
% Solution of the TC Problem using Standard BPN
% Date: Tuesday, March 20, 2001
% Last Modified: Tuesday, September 07, 2004
clear all
close all
clc

% define patterns (3x3 images flattened row by row)
% patterns 1-4: the letter T in four 90-degree rotations, target 0.1
% patterns 5-8: the letter C in four 90-degree rotations, target 0.9
% pattern 1
s(1,:)=[0.9 0.9 0.9 0.1 0.9 0.1 0.1 0.9 0.1];
t(1,1)=0.1;
% pattern 2
s(2,:)=[0.1 0.1 0.9 0.9 0.9 0.9 0.1 0.1 0.9];
t(2,1)=0.1;
% pattern 3
s(3,:)=[0.1 0.9 0.1 0.1 0.9 0.1 0.9 0.9 0.9];
t(3,1)=0.1;
% pattern 4
s(4,:)=[0.9 0.1 0.1 0.9 0.9 0.9 0.9 0.1 0.1];
t(4,1)=0.1;
% pattern 5
s(5,:)=[0.9 0.9 0.9 0.9 0.1 0.1 0.9 0.9 0.9];
t(5,1)=0.9;
% pattern 6
s(6,:)=[0.9 0.9 0.9 0.9 0.1 0.9 0.9 0.1 0.9];
t(6,1)=0.9;
% pattern 7
s(7,:)=[0.9 0.9 0.9 0.1 0.1 0.9 0.9 0.9 0.9];
t(7,1)=0.9;
% pattern 8
s(8,:)=[0.9 0.1 0.9 0.9 0.1 0.9 0.9 0.9 0.9];
t(8,1)=0.9;

% display all the input training patterns
figure(1)
for p = 1:8
    for k = 1:9
        % map the flat index k to row i and column j of the 3x3 grid
        if k <= 3
            i = 1;
            j = k;
        elseif k <= 6
            i = 2;
            j = k-3;
        else
            i = 3;
            j = k-6;
        end
        pattern(i,j) = s(p,k);
    end
    subplot(3,3,p), image(255*pattern),
    % [ ] keeps the space; strcat would strip it ("Pattern1")
    text(1,0,['Pattern ' num2str(p)]), axis off
end

% Initialize some parameters
alpha = 0.01; % learning rate
N = 9; % no. of input units
P = 3; % no. of hidden units
M = 1; % no. of output units
noOfHidWeights = (N+1)*P; % hidden layer weights
noOfOutWeights = (P+1)*M; % output layer weights
% Initialize the hidden and output layer weights
% with small random numbers in [-0.01, 0.01].
% Column P+1 of hidWts has no effect on the output,
% because the bias unit yHid(P+1) is always set to 1.
for j = 1:P+1
    for i = 1:N+1
        hidWts(i,j) = -0.01+0.02*rand;
    end
end
for k = 1:M
    for j = 1:P+1
        outWts(j,k) = -0.01+0.02*rand;
    end
end

for k=1:5000
    % randomly select an input : target pair from
    % the given patterns; randi(8) can return any of the 8
    p = randi(8);
    x = s(p,:);
    x(N+1) = 1;                      % bias input
    outDesired = t(p,:);
    % output of the hidden layer units (binary sigmoid)
    yInHid = x*hidWts;
    yHid = 1./(1.+exp(-yInHid));
    yHid(P+1) = 1;                   % bias unit
    % output of the output layer units
    yInOut = yHid*outWts;
    yOut = 1./(1.+exp(-yInOut));
    %%%%%% End of forward propagation %%%%%%
    % calculate errors and the deltas
    outErrors = outDesired - yOut;
    outDelta = outErrors.*(yOut.*(1-yOut));
    abc = outWts*outDelta';
    hidDelta = abc'.*(yHid.*(1-yHid));   % elementwise: one delta per hidden unit
    % update weights on the output layer
    outWts = outWts + alpha*yHid'*outDelta;
    % update weights on the hidden layer
    hidWts = hidWts + alpha*x'*hidDelta;
    % squared error
    err(k) = outErrors*outErrors';
end

figure
plot(err);
xlabel('No. of iterations')
ylabel('Squared error')
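The hidden-layer delta is where dimension mistakes slip in most easily. For example, abc' * (1-yHid)' * yHid multiplies a row by a column first, so every hidden unit receives the same scalar factor. The following standalone sketch checks the elementwise form hidDelta = abc' .* yHid .* (1-yHid) against central finite differences of E = 0.5*(t - y)^2 for a 9-3-1 binary-sigmoid network. The random input, target 0.9, random weights and helper names (f, hid, E, gradV, numV, ...) are assumptions made for illustration. The script reuses names such as N, P and x, so run it in a fresh workspace.

```matlab
% Sketch (standalone): finite-difference check of Program 1's deltas for a
% 9-3-1 network with binary sigmoid units and error E = 0.5*(t - y)^2.
% Assumptions: random input, target 0.9 and random weights for illustration.
N = 9; P = 3; M = 1;
f = @(a) 1 ./ (1 + exp(-a));               % binary sigmoid, elementwise
x = [rand(1, N), 1];                       % input pattern plus bias input
tgt = 0.9;                                 % desired output
V = rand(N+1, P+1) - 0.5;                  % hidden weights (role of hidWts)
W = rand(P+1, M) - 0.5;                    % output weights (role of outWts)

% forward pass with the bias unit clamped to 1, and the error it produces
hid = @(V) [f(x * V(:, 1:P)), 1];
E = @(V, W) 0.5 * sum((tgt - f(hid(V) * W)).^2);

% analytic deltas, elementwise as in the update rule
z = hid(V);
y = f(z * W);
outDelta = (tgt - y) .* y .* (1 - y);             % 1 x M
hidDelta = (W * outDelta.').' .* z .* (1 - z);    % 1 x (P+1), elementwise
gradW = -z.' * outDelta;                   % dE/dW (update is W - alpha*gradW)
gradV = -x.' * hidDelta;                   % dE/dV

% central finite differences for every weight
h = 1e-6;
numV = zeros(size(V));
for q = 1:numel(V)
    Vp = V; Vp(q) = Vp(q) + h;
    Vm = V; Vm(q) = Vm(q) - h;
    numV(q) = (E(Vp, W) - E(Vm, W)) / (2*h);
end
numW = zeros(size(W));
for q = 1:numel(W)
    Wp = W; Wp(q) = Wp(q) + h;
    Wm = W; Wm(q) = Wm(q) - h;
    numW(q) = (E(V, Wp) - E(V, Wm)) / (2*h);
end
fprintf('max |gradV - numV| = %g\n', max(abs(gradV(:) - numV(:))));
fprintf('max |gradW - numW| = %g\n', max(abs(gradW(:) - numW(:))));

% the row-times-column form gives every hidden unit the same scalar factor
a = (W * outDelta.').';
scalarDelta = a * (1 - z).' * z;
fprintf('max |scalarDelta - hidDelta| = %g\n', max(abs(scalarDelta - hidDelta)));
```

Both gradient differences should be tiny, around 1e-10 or smaller. The last difference is generally not small. Because z(P+1) = 1, the bias unit's delta is exactly zero, which matches the fact that its incoming weights never affect the output.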

Program No. 2

% Back Propagation Neural Network
% by Dr. Anwar M. Mirza
% Solution of a simple face recognition problem using Standard BPN
% Date: Tuesday, September 07, 2004
clear all
close all
clc

% define patterns
% each pattern: the top-left 3x3 block of the 2-D DCT of an image,
% flattened column by column and divided by the DC coefficient k(1,1)
% g1.jpg and b1.jpg are assumed to be grayscale (dct2 expects a 2-D matrix)
% patterns 1-4: image g1 rotated by 0, 90, 180, 270 degrees, target [0.9 0.1]
% patterns 5-8: image b1 rotated by 0, 90, 180, 270 degrees, target [0.1 0.9]
% pattern 1
i = imread('g1','jpeg');
l = i;
j = dct2(l);
k = j(1:3,1:3);
k1 = k(1,1);
s(1,:)=reshape(k, [1, 3*3])/k1;
t(1,:)=[0.9 0.1];
figure, imshow(l)
% pattern 2
l = imrotate(i,90);
j = dct2(l);
k = j(1:3,1:3);
k1 = k(1,1);
s(2,:)=reshape(k, [1, 3*3])/k1;
t(2,:)=[0.9 0.1];
figure, imshow(l)
% pattern 3
l = imrotate(i,180);
j = dct2(l);
k = j(1:3,1:3);
k1 = k(1,1);
s(3,:)=reshape(k, [1, 3*3])/k1;
t(3,:)=[0.9 0.1];
figure, imshow(l)
% pattern 4
l = imrotate(i,270);
j = dct2(l);
k = j(1:3,1:3);
k1 = k(1,1);
s(4,:)=reshape(k, [1, 3*3])/k1;
t(4,:)=[0.9 0.1];
figure, imshow(l)
% pattern 5
i = imread('b1','jpeg');
l = i;
j = dct2(l);
k = j(1:3,1:3);
k1 = k(1,1);
s(5,:)=reshape(k, [1, 3*3])/k1;
t(5,:)=[0.1 0.9];
figure, imshow(l)
% pattern 6
l = imrotate(i,90);
j = dct2(l);
k = j(1:3,1:3);
k1 = k(1,1);
s(6,:)=reshape(k, [1, 3*3])/k1;
t(6,:)=[0.1 0.9];
figure, imshow(l)
% pattern 7
l = imrotate(i,180);
j = dct2(l);
k = j(1:3,1:3);
k1 = k(1,1);
s(7,:)=reshape(k, [1, 3*3])/k1;
t(7,:)=[0.1 0.9];
figure, imshow(l)
% pattern 8
l = imrotate(i,270);
j = dct2(l);
k = j(1:3,1:3);
k1 = k(1,1);
s(8,:)=reshape(k, [1, 3*3])/k1;
t(8,:)=[0.1 0.9];
figure, imshow(l)

% display all the input training patterns
figure
for p = 1:8
    for k = 1:9
        % map the flat index k to row i and column j of the 3x3 grid
        if k <= 3
            i = 1;
            j = k;
        elseif k <= 6
            i = 2;
            j = k-3;
        else
            i = 3;
            j = k-6;
        end
        pattern(i,j) = s(p,k);
    end
    subplot(3,3,p), image(255*pattern),
    % [ ] keeps the space; strcat would strip it ("Pattern1")
    text(1,0,['Pattern ' num2str(p)]), axis off
end

% Initialize some parameters
alpha = 0.6; % learning rate
N = 9; % no. of input units
P = 3; % no. of hidden units
M = 2; % no. of output units
noOfHidWeights = (N+1)*P; % hidden layer weights
noOfOutWeights = (P+1)*M; % output layer weights
% Initialize the hidden and output layer weights
% small random numbers
for j = 1:P+1
    for i = 1:N+1
        hidWts(i,j) = -0.01+0.02*rand;
    end
end
for k = 1:M
    for j = 1:P+1
        outWts(j,k) = -0.01+0.02*rand;
    end
end

for k=1:20000
    % randomly select an input : target pair from
    % the given patterns; randi(8) can return any of the 8
    p = randi(8);
    x = s(p,:);
    x(N+1) = 1;                      % bias input
    outDesired = t(p,:);
    % output of the hidden layer units (bipolar sigmoid, elementwise ./)
    yInHid = x*hidWts;
    yHid = (1.-exp(-yInHid))./(1.+exp(-yInHid));
    yHid(P+1) = 1;                   % bias unit
    % output of the output layer units
    yInOut = yHid*outWts;
    yOut = (1. - exp(-yInOut))./(1. + exp(-yInOut));
    %%%%%% End of forward propagation %%%%%%
    % calculate errors and the deltas
    outErrors = outDesired - yOut;
    outDelta = outErrors.*(0.5*(1+yOut).*(1-yOut));
    abc = outWts*outDelta';
    hidDelta = abc'.*(0.5*(1-yHid).*(1+yHid));
    % update weights on the output layer
    outWts = outWts + alpha*yHid'*outDelta;
    % update weights on the hidden layer
    hidWts = hidWts + alpha*x'*hidDelta;
    % squared error
    err(k) = outErrors*outErrors';
end

figure
plot(err);
xlabel('No. of iterations')
ylabel('Squared error')

%
% Testing of the net
%
p = randi(8)
x = s(p,:);
x(N+1) = 1;
outDesired = t(p,:)
% output of the hidden layer units
yInHid = x*hidWts;
yHid = (1.-exp(-yInHid))./(1.+exp(-yInHid));
yHid(P+1) = 1;
% output of the output layer units
yInOut = yHid*outWts;
yOut = (1. - exp(-yInOut))./(1. + exp(-yInOut))
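Program 2 uses the bipolar sigmoid f(a) = (1 - e^(-a)) / (1 + e^(-a)), which equals tanh(a/2), together with the derivative identity f'(a) = 0.5(1 + f(a))(1 - f(a)) in outDelta and hidDelta. The following standalone sketch checks that identity numerically. It also shows why the division must be elementwise: with `/` (mrdivide) instead of `./`, two row vectors produce a single least-squares scalar rather than one activation per unit. The net inputs in `a` and the names fb, yb and wrong are made up for illustration.

```matlab
% Sketch (standalone): the bipolar sigmoid used in Program 2 and its derivative.
% Assumption: a few made-up net inputs stand in for yInHid / yInOut.
fb = @(a) (1 - exp(-a)) ./ (1 + exp(-a));     % elementwise, note ./
a = [-2 -0.5 0 0.5 2];                        % example net inputs
yb = fb(a);                                   % one activation per unit

% With mrdivide (/) two 1x5 rows yield one least-squares scalar
wrong = (1 - exp(-a)) / (1 + exp(-a));

% derivative identity used for outDelta and hidDelta,
% checked against central differences
h = 1e-6;
numDeriv = (fb(a + h) - fb(a - h)) / (2*h);
formula = 0.5 * (1 + yb) .* (1 - yb);
disp([yb; formula; numDeriv])
fprintf('size with ./ : %dx%d, size with / : %dx%d\n', size(yb), size(wrong));
```

The second and third rows printed by disp should agree to many digits. The fprintf line should report 1x5 for `./` and 1x1 for `/`.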

Figure: images “g1.jpg” and “b1.jpg” as used in Program 2
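Program 2 turns each image into nine numbers: the top-left 3x3 block of dct2, divided by its DC coefficient. The following standalone sketch computes the same kind of feature vector with an explicit orthonormal DCT-II matrix, so it needs no toolbox. It assumes that dct2 uses this orthonormal DCT-II, so D*img*D.' should match dct2(img) for a square image up to rounding. A random 16x16 grayscale matrix stands in for g1.jpg and b1.jpg. The sketch also checks a property relevant to the rotated training patterns: for a square image, a 180-degree rotation only flips the signs of coefficients with odd index sum, and a 90-degree counterclockwise rotation (as rot90 and imrotate(...,90) perform) transposes the block and flips the signs of odd rows.

```matlab
% Sketch (standalone): Program 2's DCT feature vector with an explicit
% orthonormal DCT-II matrix instead of dct2.
% Assumption: a random 16x16 grayscale "image" stands in for g1.jpg/b1.jpg.
n = 16;
[kk, nn] = ndgrid(0:n-1, 0:n-1);           % kk: frequency index, nn: sample index
D = sqrt(2/n) * cos(pi * (2*nn + 1) .* kk / (2*n));
D(1, :) = D(1, :) / sqrt(2);               % DC row uses weight 1/sqrt(n)

img = 255 * rand(n);                       % stand-in grayscale image
coef = D * img * D.';                      % 2-D DCT (dct2 for a square image)
k = coef(1:3, 1:3);                        % low-frequency 3x3 block
feat = reshape(k, [1, 3*3]) / k(1,1);      % normalized features, as in Program 2

% Rotating the image only transposes and/or re-signs the DCT coefficients
S = diag((-1).^(0:n-1));                   % +1 for even, -1 for odd frequencies
c180 = D * rot90(img, 2) * D.';            % 180-degree rotation
c90  = D * rot90(img) * D.';               % 90 degrees counterclockwise
err180 = max(max(abs(c180 - S*coef*S)));
err90  = max(max(abs(c90 - S*coef.')));
fprintf('max deviation: 180 deg %g, 90 deg %g\n', err180, err90);
disp(feat)
```

The first feature is always exactly 1 after normalization, so only eight of the nine inputs carry information. Under this assumption, the four rotated copies of an image share the same coefficient magnitudes, arranged differently and with different signs.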

Você também pode gostar

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeNo EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeNota: 4 de 5 estrelas4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreNo EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreNota: 4 de 5 estrelas4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItNo EverandNever Split the Difference: Negotiating As If Your Life Depended On ItNota: 4.5 de 5 estrelas4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceNo EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceNota: 4 de 5 estrelas4/5 (895)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersNo EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersNota: 4.5 de 5 estrelas4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureNo EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureNota: 4.5 de 5 estrelas4.5/5 (474)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)No EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Nota: 4.5 de 5 estrelas4.5/5 (121)

- The Emperor of All Maladies: A Biography of CancerNo EverandThe Emperor of All Maladies: A Biography of CancerNota: 4.5 de 5 estrelas4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingNo EverandThe Little Book of Hygge: Danish Secrets to Happy LivingNota: 3.5 de 5 estrelas3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyNo EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyNota: 3.5 de 5 estrelas3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)No EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Nota: 4 de 5 estrelas4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaNo EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaNota: 4.5 de 5 estrelas4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryNo EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryNota: 3.5 de 5 estrelas3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnNo EverandTeam of Rivals: The Political Genius of Abraham LincolnNota: 4.5 de 5 estrelas4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaNo EverandThe Unwinding: An Inner History of the New AmericaNota: 4 de 5 estrelas4/5 (45)

- SolidWorks Surface ModelingDocumento1 páginaSolidWorks Surface ModelingCAD MicroSolutionsAinda não há avaliações

- Ensemble Based Reservoir ModelingDocumento2 páginasEnsemble Based Reservoir ModelingWan Norain Awang LongAinda não há avaliações

- ADMS 2018 Chapter OneDocumento51 páginasADMS 2018 Chapter Oneabi adamuAinda não há avaliações

- Cs403-Finalterm Solved Mcqs With References by MoaazDocumento39 páginasCs403-Finalterm Solved Mcqs With References by Moaazbilaltarar90% (10)

- BSMAC 2019 FlowchartDocumento1 páginaBSMAC 2019 FlowchartMikaela SamonteAinda não há avaliações

- MCA (Revised) R) 1Documento3 páginasMCA (Revised) R) 1nishantgaurav23Ainda não há avaliações

- Cad DrawingsDocumento51 páginasCad DrawingspramodAinda não há avaliações

- Sad - Data Modeling - Data Modeling - WattpadDocumento11 páginasSad - Data Modeling - Data Modeling - WattpadkamalshrishAinda não há avaliações

- 2.160 Identification, Estimation, and Learning Lecture Notes No. 1Documento7 páginas2.160 Identification, Estimation, and Learning Lecture Notes No. 1Cristóbal Eduardo Carreño MosqueiraAinda não há avaliações

- Conversion From DSM To DTMDocumento2 páginasConversion From DSM To DTMBakti NusantaraAinda não há avaliações

- Leapfrog Geo File TypesDocumento5 páginasLeapfrog Geo File TypesELARDK100% (1)

- Model Answers For Chapter 7: CLASSIFICATION AND REGRESSION TREESDocumento3 páginasModel Answers For Chapter 7: CLASSIFICATION AND REGRESSION TREESTest TestAinda não há avaliações

- Unit 2 Ooad 2020 PptcompleteDocumento96 páginasUnit 2 Ooad 2020 PptcompleteLAVANYA KARTHIKEYANAinda não há avaliações

- This Question Paper Consists of 40 Questions. Answer All Questions. Circle Your BEST AnswersDocumento5 páginasThis Question Paper Consists of 40 Questions. Answer All Questions. Circle Your BEST AnswersawaludinAinda não há avaliações

- Operations Management, 10e: (Heizer/Render) Chapter 4 ForecastingDocumento22 páginasOperations Management, 10e: (Heizer/Render) Chapter 4 ForecastingEnas El-AmlehAinda não há avaliações

- SCJA Scja - de Complete EbookDocumento141 páginasSCJA Scja - de Complete Ebooksaurabh.simpyAinda não há avaliações

- Contrast StretchingDocumento9 páginasContrast StretchingKaustav MitraAinda não há avaliações

- Image Enhancement-Spatial Filtering From: Digital Image Processing, Chapter 3Documento56 páginasImage Enhancement-Spatial Filtering From: Digital Image Processing, Chapter 3Jaunty_UAinda não há avaliações

- A Unified Multi-Corner Multi-Mode Static Timing Analysis EngineDocumento30 páginasA Unified Multi-Corner Multi-Mode Static Timing Analysis EnginesumanAinda não há avaliações

- ch3 PDFDocumento23 páginasch3 PDFArdi SujiartaAinda não há avaliações

- IT Project RYTHAMDocumento16 páginasIT Project RYTHAMRYTHAMAinda não há avaliações

- SQLDocumento26 páginasSQLSonal JadhavAinda não há avaliações

- A 3 Forecasting FinalDocumento16 páginasA 3 Forecasting FinalFaiaze IkramahAinda não há avaliações

- Sub Modeling in ANSYS WorkbenchDocumento3 páginasSub Modeling in ANSYS Workbenchchandru20Ainda não há avaliações

- Exploring Random VariablesDocumento13 páginasExploring Random VariablesRex FajardoAinda não há avaliações

- Chapter 02-1 Entities, Attributes, and UIDDocumento36 páginasChapter 02-1 Entities, Attributes, and UIDPradeepthi IntiAinda não há avaliações

- Lab7 ImageResamplingDocumento1 páginaLab7 ImageResamplingBhaskar BelavadiAinda não há avaliações

- SGD For Linear RegressionDocumento4 páginasSGD For Linear RegressionRahul YadavAinda não há avaliações

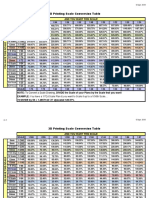

- 3D Printing Conversion v4Documento2 páginas3D Printing Conversion v4Henrique Maximiano Barbosa de SousaAinda não há avaliações