Grid and Cloud Computing

Uploaded by Wesley Mutai


Grid computing is a term referring to the combination of computer resources from multiple administrative domains to reach a common goal.

The grid can be thought of as a distributed system with non-interactive workloads that involve a large number of files. What distinguishes grid computing from conventional high-performance computing systems such as cluster computing is that grids tend to be more loosely coupled, heterogeneous, and geographically dispersed. Although a grid can be dedicated to a specialized application, it is more common for a single grid to be used for a variety of purposes. Grids are often constructed with the aid of general-purpose grid software libraries known as middleware. Grid size can vary considerably.

Grids are a form of distributed computing whereby a "super virtual computer" is composed of many networked, loosely coupled computers acting together to perform very large tasks. Furthermore, distributed or grid computing in general is a special type of parallel computing that relies on complete computers (with onboard CPUs, storage, power supplies, network interfaces, etc.) connected to a network (private, public or the Internet) by a conventional network interface, such as Ethernet. This is in contrast to the traditional notion of a supercomputer, which has many processors connected by a local high-speed computer bus.

Contents

1 Overview
2 Comparison of grids and conventional supercomputers
3 Design considerations and variations
4 Market segmentation of the grid computing market
    4.1 The provider side
    4.2 The user side
5 CPU scavenging
6 History
7 Fastest virtual supercomputers
8 Current projects and applications
9 Definitions
10 See also
    10.1 Concepts and related technology
    10.2 Alliances and organizations
    10.3 Production grids
    10.4 International projects
    10.5 National projects
    10.6 Standards and APIs
    10.7 Software implementations and middleware
    10.8 Visualization frameworks
11 References
    11.1 Notes
    11.2 Bibliography
12 External links

Overview

Grid computing combines computers from multiple administrative domains to reach a common goal,[1] often to solve a single task, after which the grid may disappear just as quickly as it was formed. One of the main strategies of grid computing is to use middleware to divide and apportion pieces of a program among several computers, sometimes up to many thousands. Grid computing involves computation in a distributed fashion, which may also involve the aggregation of large-scale cluster-computing-based systems.

The size of a grid may vary from small (confined to a network of computer workstations within a corporation, for example) to large, public collaborations across many companies and networks. "The notion of a confined grid may also be known as an intra-nodes cooperation whilst the notion of a larger, wider grid may thus refer to an inter-nodes cooperation".[2]

Grids are a form of distributed computing whereby a "super virtual computer" is composed of many networked, loosely coupled computers acting together to perform very large tasks. This technology has been applied to computationally intensive scientific, mathematical, and academic problems through volunteer computing, and it is used in commercial enterprises for such diverse applications as drug discovery, economic forecasting, seismic analysis, and back-office data processing in support of e-commerce and Web services.

Comparison of grids and conventional supercomputers

Distributed or grid computing in general is a special type of parallel computing that relies on complete computers (with onboard CPUs, storage, power supplies, network interfaces, etc.) connected to a network (private, public or the Internet) by a conventional network interface, such as Ethernet. This is in contrast to the traditional notion of a supercomputer, which has many processors connected by a local high-speed computer bus.[citation needed]

The primary advantage of distributed computing is that each node can be purchased as commodity hardware, and that these nodes, when combined, can provide a computing resource similar to a multiprocessor supercomputer, but at a lower cost. This is due to the economies of scale of producing commodity hardware, compared to the lower efficiency of designing and constructing a small number of custom supercomputers. The primary performance disadvantage is that the various processors and local storage areas do not have high-speed connections. This arrangement is thus well suited to applications in which multiple parallel computations can take place independently, without the need to communicate intermediate results between processors.[citation needed] The high-end scalability of geographically dispersed grids is generally favorable, due to the low need for connectivity between nodes relative to the capacity of the public Internet.[citation needed]

There are also some differences in programming and deployment. It can be costly and difficult to write programs that can run in the environment of a supercomputer, which may have a custom operating system, or require the program to address concurrency issues. If a problem can be adequately parallelized, a thin layer of grid infrastructure can allow conventional, standalone programs, given a different part of the same problem, to run on multiple machines. This makes it possible to write and debug on a single conventional machine, and eliminates complications due to multiple instances of the same program running in the same shared memory and storage space at the same time.[citation needed]
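The idea of handing a conventional, standalone program a different part of the same problem can be sketched as follows. This is a minimal illustration, not how any particular grid middleware works: the function names (`count_primes`, `split_range`, `run_on_grid`) are hypothetical, and a local thread pool stands in for remote grid nodes. The point is only that each work unit is computed independently and the partial results are combined at the end.

```python
from concurrent.futures import ThreadPoolExecutor


def count_primes(start: int, stop: int) -> int:
    """Standalone worker: count primes in [start, stop). Needs no shared state."""
    def is_prime(n: int) -> bool:
        if n < 2:
            return False
        i = 2
        while i * i <= n:
            if n % i == 0:
                return False
            i += 1
        return True

    return sum(1 for n in range(start, stop) if is_prime(n))


def split_range(start: int, stop: int, parts: int) -> list:
    """Cut [start, stop) into `parts` contiguous work units of near-equal size."""
    step, extra = divmod(stop - start, parts)
    units, lo = [], start
    for i in range(parts):
        hi = lo + step + (1 if i < extra else 0)
        units.append((lo, hi))
        lo = hi
    return units


def run_on_grid(start: int, stop: int, parts: int = 4) -> int:
    """Assumption: the thread pool is a stand-in for independent grid nodes."""
    units = split_range(start, stop, parts)
    with ThreadPoolExecutor(max_workers=parts) as pool:
        partials = pool.map(lambda unit: count_primes(*unit), units)
        return sum(partials)


if __name__ == "__main__":
    print(run_on_grid(0, 100_000))
```

Because `count_primes` can be written and debugged on a single machine, only the thin splitting and combining layer has to know that the work is distributed.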

Design considerations and variations

One feature of distributed grids is that they can be formed from computing resources belonging to multiple individuals or organizations (known as multiple administrative domains). This can facilitate commercial transactions, as in utility computing, or make it easier to assemble volunteer computing networks.[citation needed]

One disadvantage of this feature is that the computers which are actually performing the calculations might not be entirely trustworthy. The designers of the system must thus introduce measures to prevent malfunctions or malicious participants from producing false, misleading, or erroneous results, and from using the system as an attack vector. This often involves assigning work randomly to different nodes (presumably with different owners) and checking that at least two different nodes report the same answer for a given work unit. Discrepancies would identify malfunctioning and malicious nodes.[citation needed]

Due to the lack of central control over the hardware, there is no way to guarantee that nodes will not drop out of the network at random times. Some nodes (like laptops or dial-up Internet customers) may also be available for computation but not network communications for unpredictable periods. These variations can be accommodated by assigning large work units (thus reducing the need for continuous network connectivity) and reassigning work units when a given node fails to report its results in the expected time.[citation needed]

The impacts of trust and availability on performance and development difficulty can influence the choice of whether to deploy onto a dedicated computer cluster, to idle machines internal to the developing organization, or to an open external network of volunteers or contractors.[citation needed]

In many cases, the participating nodes must trust the central system not to abuse the access that is being granted, by interfering with the operation of other programs, mangling stored information, transmitting private data, or creating new security holes. Other systems employ measures to reduce the amount of trust client nodes must place in the central system, such as placing applications in virtual machines.[citation needed]

Public systems or those crossing administrative domains (including different departments in the same organization) often result in the need to run on heterogeneous systems, using different operating systems and hardware architectures. With many languages, there is a trade-off between investment in software development and the number of platforms that can be supported (and thus the size of the resulting network). Cross-platform languages can reduce the need to make this trade-off, though potentially at the expense of high performance on any given node (due to run-time interpretation or lack of optimization for the particular platform).[citation needed]
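The redundancy check described above (send the same work unit to randomly chosen nodes and accept an answer only when at least two of them agree) can be sketched as below. This is an illustrative sketch under stated assumptions, not the validation scheme of any specific middleware: `assign_replicas`, `validate`, and the `quorum` parameter are hypothetical names, and answers are assumed to be directly comparable values.

```python
import random
from collections import Counter


def assign_replicas(work_units, nodes, replicas=2, rng=None):
    """Give each work unit to `replicas` distinct, randomly chosen nodes."""
    rng = rng or random.Random()
    return {unit: rng.sample(nodes, replicas) for unit in work_units}


def validate(results, quorum=2):
    """Check the answers reported for ONE work unit.

    results: {node_name: answer}
    Returns (accepted_answer, suspect_nodes). accepted_answer is None when
    no single answer was reported by at least `quorum` nodes.
    """
    if not results:
        return None, []
    answer, votes = Counter(results.values()).most_common(1)[0]
    if votes < quorum:
        # No agreement yet: every reporter stays suspect until more replicas report.
        return None, sorted(results)
    suspects = sorted(node for node, a in results.items() if a != answer)
    return answer, suspects


if __name__ == "__main__":
    plan = assign_replicas(["unit-1", "unit-2"], ["a", "b", "c", "d"])
    print(plan)
    print(validate({"a": 42, "b": 42, "c": 7}))
```

A node that repeatedly lands in the suspect list is a candidate for exclusion. When no quorum is reached, a scheduler would typically issue the work unit to an additional node rather than guess.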

Various middleware projects have created generic infrastructure to allow diverse scientific and commercial projects to harness a particular associated grid or for the purpose of setting up new grids. BOINC is a common one for various academic projects seeking public volunteers;[citation needed] more are listed at the end of the article. In fact, the middleware can be seen as a layer between the hardware and the software. On top of the middleware, a number of technical areas have to be considered, and these may or may not be middleware independent. Example areas include SLA management, Trust and Security, Virtual organization management, License Management, Portals and Data Management. These technical areas may be taken care of in a commercial solution, though the cutting edge of each area is often found within specific research projects examining the field.[citation needed]

Market segmentation of the grid computing market

According to IT-Tude.com, two perspectives need to be considered when segmenting the grid computing market: the provider side and the user side.

The provider side

The overall grid market comprises several specific markets. These are the grid middleware market, the market for grid-enabled applications, the utility computing market, and the software-as-a-service (SaaS) market.

Grid middleware is a specific software product that enables the sharing of heterogeneous resources and supports Virtual Organizations (VOs). It is installed and integrated into the existing infrastructure of the involved company or companies, and provides a special layer placed between the heterogeneous infrastructure and the specific user applications. Major grid middlewares are Globus Toolkit, gLite, and UNICORE.

Utility computing refers to the provision of grid computing and applications as a service, either as an open grid utility or as a hosting solution for one organization or a VO. Major players in the utility computing market are Sun Microsystems, IBM, and HP.

Grid-enabled applications are specific software applications that can utilize grid infrastructure. This is made possible by the use of grid middleware, as pointed out above.

Software as a service (SaaS) is "software that is owned, delivered and managed remotely by one or more providers" (Gartner 2007). Additionally, SaaS applications are based on a single set of common code and data definitions. They are consumed in a one-to-many model, and SaaS uses a Pay As You Go (PAYG) model or a subscription model that is based on usage. Providers of SaaS do not necessarily own the computing resources required to run their SaaS. Therefore, SaaS providers may draw upon the utility computing market, which provides computing resources for SaaS providers.

The user side

For companies on the demand or user side of the grid computing market, the different segments have significant implications for their IT deployment strategy. The IT deployment strategy as well as the type of IT investments made are relevant aspects for potential grid users and play an important role for grid adoption.

CPU scavenging

CPU-scavenging, cycle-scavenging, cycle stealing, or shared computing creates a grid from the unused resources in a network of participants (whether worldwide or internal to an organization). Typically this technique uses desktop computer instruction cycles that would otherwise be wasted at night, during lunch, or even in the scattered seconds throughout the day when the computer is waiting for user input or slow devices. Many volunteer computing projects, such as BOINC, use the CPU scavenging model. In practice, participating computers also donate some supporting amount of disk storage space, RAM, and network bandwidth, in addition to raw CPU power. Heat produced by CPU power in rooms with many computers can be used for heating the premises.[3] Since nodes are likely to go "offline" from time to time, as their owners use their resources for their primary purpose, this model must be designed to handle such contingencies.
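One common way to handle nodes that go offline is to hand out each work unit with a deadline and put it back in the queue if no result arrives in time. The sketch below illustrates that idea only. The `Scheduler` class, its method names, and the one-hour default deadline are assumptions made for this example, not the behaviour of BOINC or any other named system. Time is passed in explicitly (`now`) so the logic is easy to follow and test.

```python
from dataclasses import dataclass, field


@dataclass
class Scheduler:
    """Hands out work units with a deadline and reclaims overdue ones."""
    pending: list                                  # units not yet handed out
    deadline: float = 3600.0                       # seconds a node has to report back
    leases: dict = field(default_factory=dict)     # unit -> (node, due_time)
    done: dict = field(default_factory=dict)       # unit -> accepted result

    def request_work(self, node, now):
        """Called by an idle node; returns a work unit or None."""
        self.reclaim_expired(now)
        if not self.pending:
            return None
        unit = self.pending.pop(0)
        self.leases[unit] = (node, now + self.deadline)
        return unit

    def report(self, node, unit, result):
        """Accept a result only from the node that currently holds the unit."""
        holder = self.leases.get(unit, (None, 0.0))[0]
        if holder != node:
            return False
        del self.leases[unit]
        self.done[unit] = result
        return True

    def reclaim_expired(self, now):
        """Return overdue units to the queue so another node can take them."""
        for unit, (_, due) in list(self.leases.items()):
            if now >= due:
                del self.leases[unit]
                self.pending.append(unit)


if __name__ == "__main__":
    s = Scheduler(pending=["unit-1"], deadline=10)
    print(s.request_work("laptop", now=0))     # laptop takes the unit, then goes offline
    print(s.request_work("desktop", now=10))   # deadline passed, unit is reassigned
```

Choosing the deadline is a trade-off: a long deadline tolerates nodes that are only intermittently connected, while a short one limits how long a lost work unit delays the overall job.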

History

The term grid computing originated in the early 1990s as a metaphor for making computer power as easy to access as an electric power grid in Ian Foster's and Carl Kesselman's seminal work, "The Grid: Blueprint for a new computing infrastructure" (2004).

CPU scavenging and volunteer computing were popularized beginning in 1997 by distributed.net and later in 1999 by SETI@home to harness the power of networked PCs worldwide, in order to solve CPU-intensive research problems.[citation needed]

The ideas of the grid (including those from distributed computing, object-oriented programming, and Web services) were brought together by Ian Foster, Carl Kesselman, and Steve Tuecke, widely regarded as the "fathers of the grid".[4] They led the effort to create the Globus Toolkit, incorporating not just computation management but also storage management, security provisioning, data movement, monitoring, and a toolkit for developing additional services based on the same infrastructure, including agreement negotiation, notification mechanisms, trigger services, and information aggregation. While the Globus Toolkit remains the de facto standard for building grid solutions, a number of other tools have been built that answer some subset of services needed to create an enterprise or global grid.

In 2007 the term cloud computing came into popularity, which is conceptually similar to the canonical Foster definition of grid computing (in terms of computing resources being consumed as electricity is from the power grid). Indeed, grid computing is often (but not always) associated with the delivery of cloud computing systems, as exemplified by the AppLogic system from 3tera.[citation needed]

Fastest virtual supercomputers

- BOINC: 5.128 PFLOPS as of April 24, 2010.[5]
- Folding@Home: 5 PFLOPS as of March 17, 2009.[6]
- MilkyWay@Home: over 1.6 PFLOPS as of April 2010, with a large amount of this work coming from GPUs.[7]
- SETI@Home: an average of more than 730 TFLOPS as of April 2010.[8]
- Einstein@Home: more than 210 TFLOPS as of April 2010.[9]
- GIMPS: 44 TFLOPS sustained as of April 2010.[10]

Current projects and applications

Grid computing offers a way to solve Grand Challenge problems such as protein folding, financial modeling, earthquake simulation, and climate/weather modeling. Grids offer a way of using the information technology resources optimally inside an organization. They also provide a means for offering information technology as a utility for commercial and noncommercial clients, with those clients paying only for what they use, as with electricity or water.

Grid computing is being applied by the National Science Foundation's National Technology Grid, NASA's Information Power Grid, Pratt & Whitney, Bristol-Myers Squibb Co., and American Express.[citation needed]

One of the most famous cycle-scavenging networks is SETI@home, which was using more than 3 million computers to achieve 23.37 sustained teraflops (979 lifetime teraflops) as of September 2001.[11]

As of August 2009 Folding@home achieves more than 4 petaflops on over 350,000 machines.

The European Union has been a major proponent of grid computing. Many projects have been funded through the framework programme of the European Commission. Many of the projects are highlighted below, but two deserve special mention: BEinGRID and Enabling Grids for E-sciencE.[citation needed]

BEinGRID (Business Experiments in Grid) is a research project partly funded by the European Commission[citation needed] as an Integrated Project under the Sixth Framework Programme (FP6) sponsorship program. Started on June 1, 2006, the project will run 42 months, until November 2009. The project is coordinated by Atos Origin. According to the project fact sheet, their mission is "to establish effective routes to foster the adoption of grid computing across the EU and to stimulate research into innovative business models using Grid technologies". To extract best practice and common themes from the experimental implementations, two groups of consultants are analyzing a series of pilots, one technical, one business. The results of these cross analyses are provided by the website IT-Tude.com. The project is significant not only for its long duration, but also for its budget, which, at 24.8 million euros, is the largest of any FP6 integrated project. Of this, 15.7 million is provided by the European Commission and the remainder by its 98 contributing partner companies.

The Enabling Grids for E-sciencE project, which is based in the European Union and includes sites in Asia and the United States, is a follow-up project to the European DataGrid (EDG) and is arguably the largest computing grid on the planet. This, along with the LHC Computing Grid[12] (LCG), has been developed to support the experiments using the CERN Large Hadron Collider. The LCG project is driven by CERN's need to handle huge amounts of data, where storage rates of several gigabytes per second (10 petabytes per year) are required. A list of active sites participating within LCG can be found online,[13] as can real-time monitoring of the EGEE infrastructure.[14] The relevant software and documentation is also publicly accessible.[15] There is speculation that dedicated fiber optic links, such as those installed by CERN to address the LCG's data-intensive needs, may one day be available to home users, thereby providing internet services at speeds up to 10,000 times faster than a traditional broadband connection.[16]

Another well-known project is distributed.net, which was started in 1997 and has run a number of successful projects in its history.

The NASA Advanced Supercomputing facility (NAS) has run genetic algorithms using the Condor cycle scavenger running on about 350 Sun and SGI workstations.

Until April 27, 2007, United Devices operated the United Devices Cancer Research Project based on its Grid MP product, which cycle-scavenges on volunteer PCs connected to the Internet. As of June 2005, the Grid MP ran on about 3.1 million machines.[17]

Another well-known project is the World Community Grid. The World Community Grid's mission is to create the largest public computing grid that benefits humanity. This work is built on the belief that technological innovation combined with visionary scientific research and large-scale volunteerism can change our world for the better. IBM Corporation has donated the hardware, software, technical services, and expertise to build the infrastructure for World Community Grid and provides free hosting, maintenance, and support.[citation needed]

Definitions

Today there are many definitions of grid computing:

- In his article "What is the Grid? A Three Point Checklist",[1] Ian Foster lists these primary attributes:
    o Computing resources are not administered centrally.
    o Open standards are used.
    o Nontrivial quality of service is achieved.
- Plaszczak/Wellner[18] define grid technology as "the technology that enables resource virtualization, on-demand provisioning, and service (resource) sharing between organizations."
- IBM defines grid computing as "the ability, using a set of open standards and protocols, to gain access to applications and data, processing power, storage capacity and a vast array of other computing resources over the Internet. A grid is a type of parallel and distributed system that enables the sharing, selection, and aggregation of resources distributed across 'multiple' administrative domains based on their (resources) availability, capacity, performance, cost and users' quality-of-service requirements".[19]
- An earlier example of the notion of computing as a utility was in 1965 by MIT's Fernando Corbató. Corbató and the other designers of the Multics operating system envisioned a computer facility operating "like a power company or water company". http://www.multicians.org/fjcc3.html
- Buyya/Venugopal[20] define grid as "a type of parallel and distributed system that enables the sharing, selection, and aggregation of geographically distributed autonomous resources dynamically at runtime depending on their availability, capability, performance, cost, and users' quality-of-service requirements".
- CERN, one of the largest users of grid technology, talks of The Grid: "a service for sharing computer power and data storage capacity over the Internet."[21]

Grids can be categorized with a three-stage model of departmental grids, enterprise grids and global grids. These correspond to a firm initially utilising resources within a single group, for example an engineering department connecting desktop machines, clusters and equipment. This progresses to enterprise grids, where nontechnical staff's computing resources can be used for cycle-stealing and storage. A global grid is a connection of enterprise and departmental grids that can be used in a commercial or collaborative manner.

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXx

Cloud computing is not new; in fact, it has been around for well over 10 years. Wikipedia defines cloud computing as "location-independent computing, whereby shared servers provide resources, software, and data to computers and other devices on demand". Sound familiar? Some common examples of cloud computing are services like Hotmail and Windows Live, Gmail, Messenger, Salesforce.com, Amazon EC2, Azure, Office365; the list goes on. But what is right for your situation? What is a private cloud, a public cloud, a hybrid cloud? Let's take a look at cloud computing, what the differences are and where they can fit into your organization's needs. Should you consider adopting a cloud solution?

There are three main types of cloud-based services:

- PaaS: Platform as a Service is defined as a solution that provides a computing platform. Windows Azure is an example of PaaS in that a developer can write an application to run on the Azure platform, or stack, without having to purchase the hardware and software required to host and run that application.
- IaaS: Infrastructure as a Service is defined as a solution that provides a computing infrastructure. Our host, Rackforce.com, is an IaaS provider. They provide all the hardware, networking, connectivity and datacenter requirements. IaaS has been around for a very long time; webhosts and co-location space in a datacenter are two examples of IaaS.
- SaaS: Software as a Service is defined as a solution that provides software over the Internet. This is the most common cloud service when it comes to usage. Outlook Web Access, Hotmail, Gmail, Messenger and Office365 are all examples of SaaS. You are already using a few of these services.

That is the easy part to define, but when it comes to cloud it gets, well, cloudy pretty fast. Each of these solutions can be delivered in a few ways. Most of the examples so far have been examples of what is called Public Cloud. Take Office365 for example. With Office365, Microsoft hosts the infrastructure and software, provides the majority of management and technical support for the service and makes it available publicly to anyone who wants to pay the monthly charge. Windows Azure and RackForce.com are also examples of public cloud services.

With that said, there is nothing stopping you from providing these services to your organization alone. Do you run Exchange server and have OWA enabled? Hey, guess what, you are a private cloud provider! Sort of. Private cloud isn't something new; in fact, Microsoft has been talking about it for years. Remember the Dynamic Systems Initiative (DSI) from 8 years ago? It was all about providing a dynamic infrastructure using monitoring and automation to provide computing services to your organization. A few years later, when virtualization became prevalent, came the Dynamic Datacenter Kit. Guess what, this was a refresh of DSI with virtualization brought into the mix. Today, with SCVMM and SSP 2.0, it has been refreshed again, but now it is called Private Cloud.

So now that you know what a public cloud is and what a private cloud is (essentially the same but different) you might wonder what a hybrid cloud is. The third option is a hybrid cloud, which is a mix of a public and private cloud. One of the challenges with public clouds is security and privacy of data. Many people have concerns over issues like the PATRIOT Act, PIPEDA, HIPAA, SarbOx and other regulatory requirements. There may be an instance where, for one reason or another, the data has to reside in your datacenter but you want to take advantage of the processing power of the cloud. This is where a hybrid cloud can be a benefit.

With a hybrid cloud you could keep your data in house on a local SQL server but perform the data analysis in the cloud. Take for example a business application that stores data in a SQL database, and once a day, week or month you have to run a report that requires massive compute power. You could build the infrastructure to host the application that does the computing, but what does that system do the rest of the time besides heat the datacenter and consume electricity? The other option is a hybrid cloud where the application is hosted in the cloud, let's say Windows Azure, but the data is still on your local SQL server. When the application is being run, the Azure service provides the scalable compute power and SQL provides the data to be processed.

There are plenty of benefits to cloud computing, but it can be hard to navigate due to the marketing hype surrounding it. The same thing happened when virtualization started to take off and all of a sudden redirected profiles became profile virtualization. Nothing changed but the name. The same goes for cloud computing: while new features and technologies are being introduced, the essence is the same. At the end of the day the infrastructure may look a little different and may be hosted elsewhere, but it will still be your job to manage it. The nice thing is that the tools you are currently using are evolving to take these changes into account, so you will continue to use the same tools to do so.

Look past the hype, find the value in cloud computing and don't worry about losing your job because the datacenter is now in the cloud. Someone will still have to manage it all!


- s5 Notes CCDocumento2 páginass5 Notes CCganeshAinda não há avaliações

- Seminar ReportDocumento17 páginasSeminar ReportJp Sahoo0% (1)

- Cloud Computing Is Changing How We Communicate: By-Ravi Ranjan (1NH06CS084)Documento32 páginasCloud Computing Is Changing How We Communicate: By-Ravi Ranjan (1NH06CS084)ranjan_ravi04Ainda não há avaliações

- CC DocumentDocumento13 páginasCC Documentgopikrishnan1234Ainda não há avaliações

- Cloud Computing OverviewDocumento13 páginasCloud Computing OverviewRobert ValeAinda não há avaliações

- Cloud Computing: From Wikipedia, The Free EncyclopediaDocumento14 páginasCloud Computing: From Wikipedia, The Free EncyclopediabalaofindiaAinda não há avaliações

- Reliability of Cloud Computing Services: Anju Mishra & Dr. Viresh Sharma & Dr. Ashish PandeyDocumento10 páginasReliability of Cloud Computing Services: Anju Mishra & Dr. Viresh Sharma & Dr. Ashish PandeyIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalAinda não há avaliações

- Cloud Computing in Distributed System IJERTV1IS10199Documento8 páginasCloud Computing in Distributed System IJERTV1IS10199Nebula OriomAinda não há avaliações

- Cloud Computing Research PaperDocumento11 páginasCloud Computing Research PaperPracheta BansalAinda não há avaliações

- MIS Final Solved Exam 2024Documento16 páginasMIS Final Solved Exam 2024walid.shahat.24d491Ainda não há avaliações

- Important Questions Mba-Ii Sem Organisational BehaviourDocumento24 páginasImportant Questions Mba-Ii Sem Organisational Behaviourvikas__ccAinda não há avaliações

- Digirig Mobile 1 - 9 SchematicDocumento1 páginaDigirig Mobile 1 - 9 SchematicKiki SolihinAinda não há avaliações

- Burndown Sample ClayDocumento64 páginasBurndown Sample ClaybluemaxAinda não há avaliações

- Section 12-22, Art. 3, 1987 Philippine ConstitutionDocumento3 páginasSection 12-22, Art. 3, 1987 Philippine ConstitutionKaren LabogAinda não há avaliações

- The PILOT: July 2023Documento16 páginasThe PILOT: July 2023RSCA Redwood ShoresAinda não há avaliações

- Ara FormDocumento2 páginasAra Formjerish estemAinda não há avaliações

- Instrumentation and Control Important Questions and AnswersDocumento72 páginasInstrumentation and Control Important Questions and AnswersAjay67% (6)

- Statement of Facts:: State of Adawa Vs Republic of RasasaDocumento10 páginasStatement of Facts:: State of Adawa Vs Republic of RasasaChristine Gel MadrilejoAinda não há avaliações

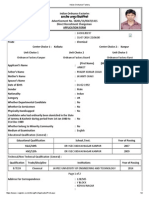

- Indian Ordnance FactoryDocumento2 páginasIndian Ordnance FactoryAniket ChakiAinda não há avaliações

- 13) Api 510 Day 5Documento50 páginas13) Api 510 Day 5hamed100% (1)

- Form Ticketing Latihan ContohDocumento29 páginasForm Ticketing Latihan ContohASPIN SURYONOAinda não há avaliações

- Universal Marine: Welcome To Our One Stop Marine ServicesDocumento8 páginasUniversal Marine: Welcome To Our One Stop Marine Serviceshoangtruongson1111Ainda não há avaliações

- Business Works Student User GuideDocumento14 páginasBusiness Works Student User GuideAkram UddinAinda não há avaliações

- COST v. MMWD Complaint 8.20.19Documento64 páginasCOST v. MMWD Complaint 8.20.19Will HoustonAinda não há avaliações

- 11 - Savulescu Et Al (2020) - Equality or Utility. Ethics and Law of Rationing VentilatorsDocumento6 páginas11 - Savulescu Et Al (2020) - Equality or Utility. Ethics and Law of Rationing VentilatorsCorrado BisottoAinda não há avaliações

- 20151201-Baltic Sea Regional SecurityDocumento38 páginas20151201-Baltic Sea Regional SecurityKebede MichaelAinda não há avaliações

- Pivacare Preventive-ServiceDocumento1 páginaPivacare Preventive-ServiceSadeq NeiroukhAinda não há avaliações

- Mine Gases (Part 1)Documento15 páginasMine Gases (Part 1)Melford LapnawanAinda não há avaliações

- Evaporator EfficiencyDocumento15 páginasEvaporator EfficiencySanjaySinghAdhikariAinda não há avaliações

- Nature Hill Middle School Wants To Raise Money For A NewDocumento1 páginaNature Hill Middle School Wants To Raise Money For A NewAmit PandeyAinda não há avaliações

- Lab ManualDocumento15 páginasLab ManualsamyukthabaswaAinda não há avaliações

- Hydraulic Breakers in Mining ApplicationDocumento28 páginasHydraulic Breakers in Mining ApplicationdrmassterAinda não há avaliações

- D.E.I Technical College, Dayalbagh Agra 5 III Semester Electrical Engg. Electrical Circuits and Measurements Question Bank Unit 1Documento5 páginasD.E.I Technical College, Dayalbagh Agra 5 III Semester Electrical Engg. Electrical Circuits and Measurements Question Bank Unit 1Pritam Kumar Singh100% (1)

- News/procurement-News: WWW - Sbi.co - inDocumento15 páginasNews/procurement-News: WWW - Sbi.co - inssat111Ainda não há avaliações

- 2B. Glicerina - USP-NF-FCC Glycerin Nutritional Statement USP GlycerinDocumento1 página2B. Glicerina - USP-NF-FCC Glycerin Nutritional Statement USP Glycerinchristian muñozAinda não há avaliações

- Peace Corps Guatemala Welcome Book - June 2009Documento42 páginasPeace Corps Guatemala Welcome Book - June 2009Accessible Journal Media: Peace Corps DocumentsAinda não há avaliações