Parallel Monte Carlo with an Intel i7 Quad Core

By darrenjw

I've recently acquired a new laptop with an Intel i7 Quad Core CPU (an i7-940XM, to be precise), and I'm interested in the possibility of running parallel MCMC codes on this machine (a Dell Precision M4500) in order to speed things up a bit. I'm running the AMD64 version of Ubuntu Linux 10.10 on it, as it has 8GB of (1333MHz DDR2 dual channel) RAM. It also contains an NVIDIA 1GB Quadro FX graphics card, which I could probably also use for GPU-style speed-up using CUDA, but I don't really want that kind of hassle if I can avoid it. In a previous post I gave an Introduction to parallel MCMC, which explains how to set up an MPI-based parallel computing environment with Ubuntu. In this post I'm just going to look at a very simple embarrassingly parallel Monte Carlo code, based on the code monte-carlo.c from the previous post. The slightly modified version of the code is given below.

    #include <math.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <mpi.h>
    #include <gsl/gsl_rng.h>
    #include "gsl-sprng.h"

    int main(int argc,char *argv[])
    {
      int k,N; long i,Iters; double u,ksum,Nsum; gsl_rng *r;
      MPI_Init(&argc,&argv);
      MPI_Comm_size(MPI_COMM_WORLD,&N);  /* N = number of processes */
      MPI_Comm_rank(MPI_COMM_WORLD,&k);  /* k = rank of this process */
      Iters=1e9;
      ksum=0.0; Nsum=0.0;                /* initialise the accumulators */
      r=gsl_rng_alloc(gsl_rng_sprng20);  /* SPRNG generator via gsl-sprng.h */
      /* each process does an equal share of the iterations */
      for (i=0;i<(Iters/N);i++) {
        u = gsl_rng_uniform(r);
        ksum += exp(-u*u);
      }
      /* add up the partial sums on process 0 */
      MPI_Reduce(&ksum,&Nsum,1,MPI_DOUBLE,MPI_SUM,0,MPI_COMM_WORLD);
      if (k == 0) {
        printf("Monte carlo estimate is %f\n", Nsum/Iters );
      }
      MPI_Finalize();
      exit(EXIT_SUCCESS);
    }

This code does 10^9 iterations of a Monte Carlo integral, dividing them equally among the available processes. This code can be compiled with something like:

    mpicc -I/usr/local/src/sprng2.0/include -L/usr/local/src/sprng2.0/lib -o monte-carlo monte-carlo.c -lsprng -lgmp -lgsl -lgslcblas

and run with (say) 4 processes with a command like:

    time mpirun -np 4 monte-carlo

How many processes should one run on this machine? This is an interesting question. There is only one CPU in this laptop, but as the name suggests, it has 4 cores. Furthermore, each of those cores is hyper-threaded, so the Linux kernel presents it to the user as 8 processors (4 cores, 8 siblings), as a quick cat /proc/cpuinfo will confirm. Does this really mean that it is the equivalent of 8 independent CPUs? The short answer is "no", but running 8 processes of an MPI (or OpenMP) job could still be optimal. I investigated this issue empirically by varying the number of processes for the MPI job from 1 to 10, doing 3 repeats for each to get some kind of idea of variability (I've been working in a lab recently, so I know that all good biologists always do 3 repeats of everything!). The conditions were by no means optimal, but probably quite typical: I was running the Ubuntu window manager and several terminal windows, had Firefox open with several tabs in another workspace, Xemacs open with several buffers, etc. The raw timings (in seconds) are given below.

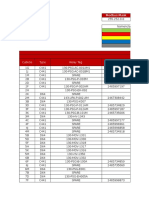

NP 1 2 3 4 5 6 7 8 9 10

T1

T2

T3

62.046 62.042 61.900 35.652 34.737 36.116 29.048 28.238 28.567 23.273 24.184 22.207 24.418 24.735 24.580 21.279 21.184 22.379 20.072 19.758 19.836 17.858 17.836 18.330 20.392 21.290 21.279 22.342 19.685 19.309

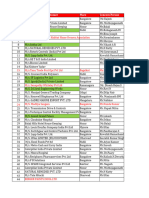

A quick scan of the numbers in the table makes sense the time taken decreases steadily as the number of processes increases up to 8 processes, and then increases again as the number goes above 8. This is exactly the kind of qualitative pattern one would hope to see, but the quantitative pattern is a bit more interesting. First lets look at a plot of the timings.

You will probably need to click on the image to be able to read the axes. The black line gives the average time, and the grey envelope covers all 3 timings. Again, this is broadly what I would have expected time decreasing steadily to 4 processes, then diminishing returns from 4 to 8 processes, and a penalty for attempting to run more than 8 processes in parallel. Now lets look at the speed up (speed relative to the average time of the 1 processor version).

Here again, the qualitative pattern is as expected. However, inspection of the numbers on the y-axis is a little disappointing. Remember that this is not some cleverly parallelised MCMC chain with lots of inter-process communication. This is an embarrassingly parallel Monte Carlo job, and one would expect to see close to 8x speedup on 8 independent CPUs. Here we see that for 4 processes, we get a little over 2.5x speedup, and if we crank things all the way up to 8 processes, we get nearly 3.5x speedup. This isn't mind-blowing, but it is a lot better than nothing, and this is a fairly powerful processor, so even the 1 processor code is pretty quick.

So, in answer to the question of how many processes to run, the answer seems to be that if the code is very parallel, running 8 processes will probably be quickest, but running 4 processes probably won't be much slower, and will leave some spare capacity for web browsing, editing, etc., while waiting for the MPI job to run. As ever, YMMV.
