
Graph Indexing - A Review


Title: Graph Indexing

Seminar Thesis in the context of the seminar "Mining Graph Data" at the Chair for Information Systems and Information Management

Supervisor: Prof. Dr. Ulrich Müller-Funk
Tutor: Dipl.-Wirt. Inform. Kay Hildebrand
Presented by: Dominic Steffen, Jägerstr. 18, 48153 Münster, +49 (0251) 2766762, d.steffen@uni-muenster.de

Date of Submission: 2012-01-03


Contents

Figures
Formulas
1 Introduction
1.1 Motivation
1.2 Research Methodology
1.3 Structure of this paper
2 Preliminaries on graph databases and queries
2.1 Graph databases
2.2 Graph Queries
2.3 Graph and Subgraph Isomorphism
3 Graph Indexing Algorithms
3.1 Introduction
3.2 Common Structure of Graph Indexing Algorithms
3.3 Feature-Based Indices
3.3.1 Features
3.3.2 Data-Mining vs. Non-Data-Mining
3.3.3 Indexing Units
3.3.4 Graph Index Keys
3.3.5 Feature-Based Reverse substructure search
3.3.6 Feature-based similarity search
3.4 Hierarchical Indexing
3.4.1 Hierarchical Indices
3.4.2 Graph Closures
3.4.3 Induced Subgraphs
3.5 Spectral Graph Coding
3.5.1 Spectral Signatures
3.5.2 Polynomial Characterizations
3.5.3 Eigenvalue Characterizations
4 Advanced Implementation Considerations
4.1 Response Time and Efficiency Considerations
4.1.1 Query Response Time
4.1.2 Optimizing search and retrieval
4.1.3 Verification Avoidance and Verification-free Techniques
4.2 Parallel and Distributed Execution
5 Conclusion
References


Figures

Figure 1 Filter and Verification Paradigm
Figure 2 Edge-Feature Matrix


Formulas

Formula 1 Frequency Difference
Formula 2 Summed Frequency Difference
Formula 3 Second immanantal polynomial
Formula 4 Eigenvectors
Formula 5 Query Response Time

1 Introduction

1.1 Motivation

The retrieval of graphs from a graph database is not trivial. The mathematical hardness of the graph¹ and subgraph² isomorphism problems makes a naïve approach to finding the graphs that match a query in a large graph database infeasible, as it would be computationally prohibitively expensive. Yet graphs are used as data structures in many different domains and enjoy rising popularity (Aggarwal, Wang (eds.) 2010). Examples include object recognition (Horaud and Sossa 1995; Macrini et al. 2002), medical applications (Petrakis and Faloutsos 1997), management of business process models (Jin et al. 2010), malware detection (Hu et al. 2009), chemical compounds (Daylight Chemical Information Systems Inc. 2008; Klopman 1992) and many more (Aggarwal, Wang (eds.) 2010; Sakr and Al-Naymat 2010). Usable and useful tools to exploit a repository of graphs are therefore important. Typical types of queries, however, require computationally expensive graph or subgraph isomorphism tests at some point (Sakr and Al-Naymat 2010; Yan and Han 2010). The computational cost of these queries can be reduced by efficient indexing techniques (Sakr and Al-Naymat 2010). Some recent publications summarize indexing and querying techniques for graph databases: (Sakr and Al-Naymat 2010) presented different techniques and classified them according to their target graph query types and their indexing strategy, while (Yan and Han 2010) discussed graph indexing techniques in the context of graph management and mining. This seminar thesis builds on this work by identifying essential elements of indexing algorithms. In the course of this work, different implementation approaches are presented and compared.

1.2 Research Methodology

It is regarded as best practice for literature reviews to document the research process as completely as necessary to demonstrate rigour and to allow other researchers to repeat the source discovery process (Brocke et al. 2009; Webster and Watson 2002). Led by this guiding principle, the research process underlying this thesis is disclosed here. Sources were discovered using the academic search engine Google Scholar, searching for matches of the search query in title, abstract, keywords and full text, but considering only documents published within a defined time period. Two searches were performed with the search terms "graph indexing" and "graph indexing algorithm" for the time span from 1990 to 2011 (the current year). The first 100 documents of each search were assessed for relevance to this paper's topic. Relevance was assessed by analysing the title and abstract for a reference to indexing methodologies for graph databases for certain graph queries (exact and approximate structure and substructure search). In cases where the assessment of relevance from the title and abstract was inconclusive, relevance was assessed by analysing the full text. Irrelevant articles, i.e. those without any contribution to the problem of indexing graph databases for graph retrieval based on the described queries, were discarded. The time span from 1990 to 2011 was chosen to allow a thorough analysis of the field of graph indexing during the last two decades. To provide fairly current content, a third query with the search term "graph indexing algorithm" was performed for the time span from 2007 to 2011. The first 80 documents were assessed for relevance and included in the research body if deemed relevant. The cut-off points (100 for the first two searches, 80 for the third search) were based on the declining occurrence of relevant papers in the search results. The papers cited above in this section also argue for the need to disclose whether backward or forward searches were performed. For all sources in this paper, iterative and selective backward searches were performed. A backward search examines all the references cited by a given paper. The searches were iterative in the sense that once a discovered paper was deemed relevant, backward search was also applied to it. They were selective in that papers deemed irrelevant were discarded, so that no backward or forward searches were applied to them. Not all discovered sources were used and cited in this work, as this would have introduced too much redundant information. Instead, representative algorithms or approaches were selected and their relevant aspects are presented. This literature review is framed by other reviews such as (Bunke 2000; Sakr and Al-Naymat 2010; Yan and Han 2010), and commonalities or differing classifications are pointed out.

¹ (Fortin 1996)
² (Johnson and Garey 1979)

1.3 Structure of this paper

In section 2, the reader is introduced to the concepts necessary to understand graph indexing. Section 3 presents indexing algorithms. After the basic structure of indexing algorithms has been presented, section 4 takes up some considerations that are relevant for implementations of indexing algorithms. Section 5 concludes this paper.

2 Preliminaries on graph databases and queries

2.1 Graph databases

A repository of graphs is called a graph database³; it contains the graphs that the user deposited and provides access methods to them. A graph database with multiple graphs can be referred to as a graph database in the graph-transaction setting (Zhao and Han 2010). Definition⁴: A graph database is a collection of graphs $D = \{g_1, g_2, \ldots, g_n\}$.

The graphs contained in a graph database are called member graphs of the database, and they are typically denoted as a sextuple $g = (V, E, L_V, L_E, F_V, F_E)$, with $V$ being the set of vertices of the graph; $E \subseteq V \times V$ being the set of edges connecting two vertices; $L_V$ and $L_E$ being the sets of labels for vertices and edges respectively; and $F_V: V \to L_V$ and $F_E: E \to L_E$ being two functions assigning labels to vertices and edges respectively (Sakr and Al-Naymat 2010). Labeled graphs can be classified into two distinct classes according to whether their edges carry directionality information: directed-labeled and undirected-labeled graphs (Sakr and Al-Naymat 2010). Examples of the former are XML, the Resource Description Framework (RDF) and traffic networks, while social networks and chemical compounds are examples of the latter (Sakr and Al-Naymat 2010).

2.2 Graph Queries

A search on a graph database is called a graph query (Yan and Han 2010). When a query is posed to a database, the database evaluates it and returns the (sub-)set of its member graphs that satisfy the query condition. This is the answer set to the query. Formally, the answer set can be defined as follows: Definition⁵: Given a graph database $D = \{g_1, g_2, \ldots, g_n\}$, a graph query $q$ and a Boolean function $m: G \times G \to \{0, 1\}$, the answer set is $A_q = \{g \in D \mid m(q, g) = 1\}$.

In the above definition, $G$ denotes the set of all possible graphs. The function $m$ is an appropriate function which determines whether or not a given member graph satisfies the query conditions and should be included in the answer set.

³ Single-graph databases exist as well. They use a single graph as the data structure to store the data contained in the database; an XML document is an example of such a database. This is referred to as the single-graph setting (Zhao and Han 2010).
⁴ According to (Sakr and Al-Naymat 2010; Yan and Han 2010).
⁵ Based on (Yan and Han 2010).

There are four different types of graph queries: substructure search, reverse substructure search, substructure similarity search and reverse substructure similarity search (Sakr and Al-Naymat 2010; Yan and Han 2010)⁶.

Exact matching

Full structure search and substructure search queries search the database for exact matches of the query pattern. A substructure search query searches the database for all graphs that contain the query pattern, which can either be a small graph or a graph in which some parts are designated as wildcards. Definition⁷: Given a graph database $D = \{g_1, g_2, \ldots, g_n\}$, a graph query $q$ and a Boolean function $m: G \times G \to \{0, 1\}$ with $m(q, g) = 1 \Leftrightarrow q \subseteq g$, the answer set is $A_q = \{g \in D \mid q \subseteq g\}$, where $q \subseteq g$ denotes that $q$ is contained in (subgraph isomorphic to) $g$.

A reverse substructure search query returns all member graphs of the database which are contained in the query graph. It can also be called a supergraph query (Sakr and Al-Naymat 2010). Its answer set can accordingly be defined as: Definition⁸: Given a graph database $D = \{g_1, g_2, \ldots, g_n\}$, a graph query $q$ and a Boolean function $m: G \times G \to \{0, 1\}$ with $m(q, g) = 1 \Leftrightarrow g \subseteq q$, the answer set is $A_q = \{g \in D \mid g \subseteq q\}$.
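To make Definitions 7 and 8 concrete, the following Python sketch evaluates both exact query types by a sequential scan over a toy database, using a brute-force backtracking subgraph isomorphism test as the Boolean function $m$. The `Graph` class, the function names and the example data are hypothetical and chosen only for illustration; graphs are assumed to be undirected, and only vertex labels are compared (edge labels are ignored).

```python
from typing import Callable, Dict, FrozenSet, List, Set, Tuple


class Graph:
    """Hypothetical undirected, vertex-labelled graph: vertex -> label plus an edge set."""

    def __init__(self, labels: Dict[int, str], edges: List[Tuple[int, int]]):
        self.labels = labels
        self.edges: Set[FrozenSet[int]] = {frozenset(e) for e in edges}

    def adjacent(self, u: int, v: int) -> bool:
        return frozenset((u, v)) in self.edges


def is_subgraph_isomorphic(q: Graph, g: Graph) -> bool:
    """True if an injective, label-preserving map sends every edge of q to an edge of g."""
    q_nodes = list(q.labels)
    mapping: Dict[int, int] = {}

    def extend(i: int) -> bool:
        if i == len(q_nodes):
            return True  # every vertex of q has been mapped consistently
        u = q_nodes[i]
        for v in g.labels:
            if v in mapping.values() or g.labels[v] != q.labels[u]:
                continue  # keep the map injective and label-preserving
            # every already-mapped neighbour w of u must map to a neighbour of v
            if all(g.adjacent(mapping[w], v) for w in mapping if q.adjacent(w, u)):
                mapping[u] = v
                if extend(i + 1):
                    return True
                del mapping[u]  # backtrack
        return False

    return extend(0)


Match = Callable[[Graph, Graph], bool]


def answer_set(db: Dict[str, Graph], q: Graph, m: Match) -> List[str]:
    """A_q = {g in D | m(q, g) = 1}, evaluated by a sequential scan."""
    return [gid for gid, g in db.items() if m(q, g)]


def substructure(q: Graph, g: Graph) -> bool:  # Definition 7: q is contained in g
    return is_subgraph_isomorphic(q, g)


def supergraph(q: Graph, g: Graph) -> bool:  # Definition 8: g is contained in q
    return is_subgraph_isomorphic(g, q)


db = {
    "g1": Graph({0: "C", 1: "C", 2: "O"}, [(0, 1), (1, 2), (0, 2)]),
    "g2": Graph({0: "C", 1: "O"}, [(0, 1)]),
    "g3": Graph({0: "N"}, []),
}
q = Graph({0: "C", 1: "O"}, [(0, 1)])

print(answer_set(db, q, substructure))  # ['g1', 'g2']
print(answer_set(db, q, supergraph))    # ['g2']
```

Swapping the arguments of the same containment test is all that distinguishes the two query types. The backtracking search is exponential in the worst case, which is what makes a sequential scan over a large database impractical.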

Approximate matching

Approximate match searches, or similarity searches, find graphs in the database which are not exact matches of the query graph but approximations of it, i.e. graphs which bear some similarity to it (Sakr and Al-Naymat 2010). A major motivation for these kinds of queries is that in some applications the search for an exact match may yield none or very few matches (Yan and Han 2010). This may be because the query graph is distorted by noise, occlusion or accidental alignments, which are common problems in object recognition (Bunke 2000; Sossa and Horaud 1992). Approximate match searches allow some variance in the query, which is much more efficient than manual query refinement (Yan and Han 2010). The two types of approximate match searches can be described analogously to the exact match searches:

A substructure similarity search of a query pattern in a graph database returns all graphs that approximately contain the query graph pattern. Definition⁹: Given a graph database $D = \{g_1, g_2, \ldots, g_n\}$, a graph query $q$ and a Boolean function $m: G \times G \to \{0, 1\}$ with $m(q, g) = 1 \Leftrightarrow q \subseteq_{\approx} g$, the answer set is $A_q = \{g \in D \mid q \subseteq_{\approx} g\}$, where $q \subseteq_{\approx} g$ denotes that $q$ is approximately contained in $g$.

A reverse substructure similarity search returns all graphs that are approximately contained in the query graph pattern. Definition¹⁰: Given a graph database $D = \{g_1, g_2, \ldots, g_n\}$, a graph query $q$ and a Boolean function $m: G \times G \to \{0, 1\}$ with $m(q, g) = 1 \Leftrightarrow g \subseteq_{\approx} q$, the answer set is $A_q = \{g \in D \mid g \subseteq_{\approx} q\}$.

⁶ There does not seem to be a consensus on the appropriate naming. "Search" and "query" seem to be interchangeable. Reverse substructure search may also be called full structure search (Yan and Han 2010) or supergraph query (Sakr and Al-Naymat 2010).
⁷ Based on (Sakr and Al-Naymat 2010; Yan and Han 2010).
⁸ Based on (Sakr and Al-Naymat 2010; Yan and Han 2010).

There are two common approaches to selecting the graphs that will be members of the answer set to an approximate match search query: the K-Nearest-Neighbor (K-NN) approach and the range query approach (Sakr and Al-Naymat 2010). In both approaches, the similarity between the target member graphs of the database and the query graph is computed using a similarity measure, see for example (Hu et al. 2009; Korn et al. 1996). The K-NN approach then returns the k target graphs nearest to the query graph, i.e. the answer set has a fixed cardinality of k and contains the k most similar graphs (Sakr and Al-Naymat 2010). The range query approach includes in the answer set all target graphs whose similarity score lies within a user-defined threshold (Sakr and Al-Naymat 2010). There are trade-offs between the two techniques which have to be taken into account: while the K-NN approach returns a fixed number of graphs, the cut-off point may be set too low and omit good candidates (Shokoufandeh et al. 2005), or too high, so that the answer set includes weak matches and the quality of the result degrades. Range queries allow better control over the quality of the result set, as all members lie within the defined range, but they produce sets of varying and potentially large size (Shokoufandeh et al. 2005).
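The following Python sketch contrasts the two selection strategies. To stay self-contained, each graph is reduced to a hypothetical set of labelled-edge features and compared with the Jaccard coefficient; this similarity is a stand-in chosen for illustration, not one of the measures discussed in the cited sources, and any similarity function could be passed instead.

```python
from typing import Callable, Dict, FrozenSet, List

Features = FrozenSet[str]
Similarity = Callable[[Features, Features], float]


def jaccard(a: Features, b: Features) -> float:
    """Placeholder similarity in [0, 1]; stands in for e.g. an MCS- or edit-distance-based measure."""
    union = a | b
    return 1.0 if not union else len(a & b) / len(union)


def knn_query(db: Dict[str, Features], q: Features, k: int,
              sim: Similarity = jaccard) -> List[str]:
    """K-NN: the k graphs most similar to q (ties broken by graph id)."""
    ranked = sorted(db, key=lambda gid: (-sim(q, db[gid]), gid))
    return ranked[:k]


def range_query(db: Dict[str, Features], q: Features, threshold: float,
                sim: Similarity = jaccard) -> List[str]:
    """Range query: every graph whose similarity to q is at least the threshold."""
    return [gid for gid, g in db.items() if sim(q, g) >= threshold]


db = {
    "g1": frozenset({"C-C", "C-O", "C-N"}),
    "g2": frozenset({"C-C", "C-O"}),
    "g3": frozenset({"N-N"}),
    "g4": frozenset({"C-C"}),
}
q = frozenset({"C-C", "C-O"})

print(knn_query(db, q, k=2))              # ['g2', 'g1']
print(range_query(db, q, threshold=0.5))  # ['g1', 'g2', 'g4']
```

Raising k to 4 would also return g3, whose similarity is 0, whereas the size of the range query's result depends entirely on the threshold; this mirrors the trade-off described above.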

⁹ Based on (Sakr and Al-Naymat 2010; Yan and Han 2010).
¹⁰ Based on (Sakr and Al-Naymat 2010; Yan and Han 2010).

An essential problem of this family of queries is how similarity between a member graph and the query graph can be measured. No precise definition has been established yet, although a number of possible similarity metrics have been proposed (Sakr and Al-Naymat 2010). Frequently used similarity measures are the maximum common subgraph measure and the graph edit distance measure (Bunke 2000; Yan and Han 2010). The maximum common subgraph $mcs(g_1, g_2)$ of two graphs $g_1$ and $g_2$ is defined as the graph, among all graphs contained in both $g_1$ and $g_2$, that has the highest number of nodes¹¹ (Bunke 2000). The similarity between the two graphs can then be measured as $\frac{|V(mcs(q, g))|}{|V(q)|}$, with $V(\cdot)$ being the set of nodes, $q$ a query graph, $mcs(q, g)$ the maximum common subgraph between the query graph and a target graph $g$, and $|\cdot|$ the set cardinality operator¹². Graph edit distance quantifies the edit operations necessary to adapt a target graph so that it exactly matches the query graph. Edit operations are, for example, deletions, insertions or substitutions of nodes and edges (Bunke 2000). The higher the original similarity between the graphs, the fewer edit operations are necessary (Bunke 2000). The graph edit distance can therefore be defined as the length of the shortest sequence of edit operations required to transform graph $g_1$ into graph $g_2$ (Bunke 2000). For a detailed discussion of graph similarity measures and approximate graph matching, the interested reader is referred to (Aggarwal, Wang (eds.) 2010; Bunke 2000; Gao et al. 2010).

2.3 Graph and Subgraph Isomorphism

The graph isomorphism problem can be easily stated: "check to see if two graphs that look differently are actually the same" (Fortin 1996, p.1). It asks for an adjacency-preserving, one-to-one mapping between the vertices of two graphs. Definition¹³: Two graphs $g_1 = (V_1, E_1)$ and $g_2 = (V_2, E_2)$ are said to be isomorphic to each other iff¹⁴ there exists a bijective mapping $f: V_1 \to V_2$ so that $\forall u, v \in V_1: (u, v) \in E_1 \Leftrightarrow (f(u), f(v)) \in E_2$.

¹¹ Alternatively: number of edges (Yan and Han 2010).
¹² Based on (Yan and Han 2010), using nodes instead of edges.
¹³ Based on (Fortin 1996; Sakr and Al-Naymat 2010).
¹⁴ If and only if.

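This can be extended to labelled graphs in the following way: Definition¹⁵: Two labelled graphs $g_1 = (V_1, E_1, L_V, L_E, F_{V_1}, F_{E_1})$ and $g_2 = (V_2, E_2, L_V, L_E, F_{V_2}, F_{E_2})$ are said to be isomorphic to each other iff there exists a bijective mapping $f: V_1 \to V_2$ so that $\forall u, v \in V_1: (u, v) \in E_1 \Leftrightarrow (f(u), f(v)) \in E_2$, and $\forall v \in V_1: F_{V_1}(v) = F_{V_2}(f(v))$, and $\forall (u, v) \in E_1: F_{E_1}((u, v)) = F_{E_2}((f(u), f(v)))$.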
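Definition 15 can be checked literally by enumerating all bijections, as in the following Python sketch. It assumes directed edges stored as `(u, v)` pairs (an undirected edge would be stored in both directions); the function name and the example data are hypothetical. Enumerating all $|V|!$ bijections is only feasible for very small graphs, which illustrates why practical systems avoid isomorphism tests wherever possible.

```python
from itertools import permutations
from typing import Dict, Tuple

Edge = Tuple[int, int]


def labelled_isomorphic(v1: Dict[int, str], e1: Dict[Edge, str],
                        v2: Dict[int, str], e2: Dict[Edge, str]) -> bool:
    """Brute-force test of Definition 15 for graphs given as
    vertex -> label (F_V) and directed edge (u, v) -> label (F_E) maps."""
    if len(v1) != len(v2) or len(e1) != len(e2):
        return False  # isomorphic graphs have identical size
    nodes1 = list(v1)
    for image in permutations(v2):  # every bijection f: V1 -> V2
        f = dict(zip(nodes1, image))
        if any(v1[u] != v2[f[u]] for u in nodes1):
            continue  # vertex labels not preserved
        # f is injective and |E1| == |E2|, so mapping every edge of E1 onto an
        # equally labelled edge of E2 already gives (u, v) in E1 <=> (f(u), f(v)) in E2
        if all(e2.get((f[u], f[v])) == lab for (u, v), lab in e1.items()):
            return True
    return False


v_a = {0: "C", 1: "O", 2: "C"}
e_a = {(0, 1): "double", (1, 2): "single"}
v_b = {10: "O", 11: "C", 12: "C"}
e_b = {(11, 10): "double", (10, 12): "single"}
e_c = {(11, 10): "single", (10, 12): "double"}  # same structure, edge labels swapped

print(labelled_isomorphic(v_a, e_a, v_b, e_b))  # True
print(labelled_isomorphic(v_a, e_a, v_b, e_c))  # False
```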

A graph isomorphism is therefore a bijective mapping between the nodes of two graphs of identical size (same number of nodes and edges), with identical labels and an identical edge structure (Bunke 2000). The subgraph isomorphism problem is a harder variant of the graph isomorphism problem: it asks for a subgraph of a larger graph that is isomorphic to another graph (Bunke 2000; Cook 1971; Klein et al. 2011). Definition: A graph $q$ is subgraph isomorphic to a graph $g$ if there exists a graph $c$ with $c \subseteq g$ (i.e. $c$ is a subgraph of $g$) which is isomorphic to $q$.

Both problems are computationally expensive to solve. No polynomial-time algorithm is known for graph isomorphism in general, although polynomial-time algorithms exist for some special classes of graphs (Fortin 1996). The subgraph isomorphism problem is at least as hard: it has been proven to be NP-complete (Cook 1971; Johnson and Garey 1979).

¹⁵ Based on (Sakr and Al-Naymat 2010).

3 Graph Indexing Algorithms

3.1 Introduction

Given a particular query, the naïve approach to finding all database members that match the query would be to go through the database members one by one and decide for each whether or not it matches the query. This is called a sequential scan (Sakr and Al-Naymat 2010), and it is a very inefficient method of computing the answer set, especially considering the computational complexity of the subgraph and graph isomorphism problems. This motivates research into indexing techniques which reduce the computational cost of a graph query by filtering out members of the database that cannot match the query.

3.2 Common Structure of Graph Indexing Algorithms

Indexing techniques that facilitate efficient graph retrieval commonly follow a paradigm called Filter-and-Verification (Klein et al. 2011; Sakr and Al-Naymat 2010). The objective of the paradigm is to reduce computational cost through early elimination of impossible matches (Sakr and Al-Naymat 2010). To avoid incurring the huge computational cost of a sequential scan of the complete member set of the database, the paradigm calls for an intermediate step that generates a candidate set. Members of the candidate set are more likely to be a valid match to a given query than a member of the general population of the database, because in a first filtering step all those member graphs of the database that cannot match the given query are eliminated as candidates by a heuristic (Klein et al. 2011). This filtering step uses the database index to build the candidate set and to eliminate impossible matches (Sakr and Al-Naymat 2010). In a second step, called verification, every graph of the candidate set is verified against the query to establish whether or not it is a valid match (Sakr and Al-Naymat 2010). All candidates that satisfy the conditions of the query comprise the answer set, which is the set of all graphs in the database that satisfy the conditions of a given query (Sakr and Al-Naymat 2010). The operation of these algorithms can be divided into offline and online processing (Klein et al. 2011; Sakr and Al-Naymat 2010; Zou, Chen, Yu, et al. 2008; Zou, Chen, Zhang, et al. 2008). In the first phase, the offline phase, the index over the database members is constructed. After the index has been constructed, the online phase begins and queries can be processed based on the index.
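The paradigm can be sketched in Python as follows. The index here is a hypothetical, deliberately weak one (per-graph counts of vertex labels), chosen only because it is a valid necessary condition for substructure containment; the algorithms discussed in the following sections use richer features. Verification is a brute-force containment test, and all names and data are illustrative.

```python
from collections import Counter
from dataclasses import dataclass, field
from itertools import permutations
from typing import Dict, List, Set, Tuple


@dataclass
class Graph:
    labels: Dict[int, str]                                    # vertex -> label
    edges: Set[Tuple[int, int]] = field(default_factory=set)  # undirected edges

    def has_edge(self, u: int, v: int) -> bool:
        return (u, v) in self.edges or (v, u) in self.edges


# Offline phase: build the index once over all member graphs.
def build_index(db: Dict[str, Graph]) -> Dict[str, Counter]:
    # Hypothetical index: the multiset of vertex labels of each graph.
    return {gid: Counter(g.labels.values()) for gid, g in db.items()}


# Online phase, step 1 (filtering): a cheap necessary condition for q ⊆ g.
def filter_candidates(index: Dict[str, Counter], q: Graph) -> List[str]:
    need = Counter(q.labels.values())
    return [gid for gid, have in index.items()
            if all(have[label] >= n for label, n in need.items())]


# Online phase, step 2 (verification): exact but expensive containment test.
def verify(q: Graph, g: Graph) -> bool:
    q_nodes = list(q.labels)
    for image in permutations(g.labels, len(q_nodes)):  # all injective maps
        f = dict(zip(q_nodes, image))
        labels_ok = all(q.labels[u] == g.labels[f[u]] for u in q_nodes)
        if labels_ok and all(g.has_edge(f[u], f[v]) for u, v in q.edges):
            return True
    return False


def substructure_query(db: Dict[str, Graph], index: Dict[str, Counter], q: Graph):
    candidates = filter_candidates(index, q)
    answer = [gid for gid in candidates if verify(q, db[gid])]
    return candidates, answer


db = {
    "g1": Graph({0: "C", 1: "C", 2: "O"}, {(0, 1), (1, 2)}),
    "g2": Graph({0: "C", 1: "O", 2: "C"}, {(0, 2)}),
    "g3": Graph({0: "N", 1: "N"}, {(0, 1)}),
}
index = build_index(db)                                # offline phase
q = Graph({0: "C", 1: "O"}, {(0, 1)})                  # query: a C-O edge
candidates, answer = substructure_query(db, index, q)  # online phase
print(candidates)  # ['g1', 'g2']  (g3 pruned without any isomorphism test)
print(answer)      # ['g1']        (g2 is a false positive removed by verification)
```

The weaker the filter, the more false positives such as g2 reach the expensive verification step; the choice of index features therefore determines how much work the filter saves.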

[Figure: the filter-and-verification paradigm. Offline phase: graph database → index construction → graph index. Online phase: a graph query is passed to the query processor, which probes the graph index (filtering) to obtain a candidate set; verification of the candidate set yields the answer set.]
Figure 1 Filter and Verification Paradigm¹⁶

In the encountered literature, indexing algorithms are often classified by the type of query they support (Sakr and Al-Naymat 2010; Yan and Han 2010), or by whether or not they employ data mining techniques (Sakr and Al-Naymat 2010; Zou, Chen, Yu, et al. 2008). This approach to classification, however, seems to be inconsistent, as some algorithms support multiple types of queries, e.g. (He and Singh 2006; Tian and Patel 2008) both support exact and approximate substructure queries. In addition, some algorithms that do not use data mining techniques nevertheless base their index on graph features, just as data-mining-based algorithms do, whereas other non-data-mining algorithms take a holistic approach to indexing graph databases. Classifying both groups as non-data-mining ignores this difference. Therefore, in this work the presented algorithms are grouped by three distinct characterizing features. This is done to present the algorithms concisely and without any claim to general applicability as a classification scheme.

¹⁶ Based on (Sakr and Al-Naymat 2010).


3.3 Feature-Based Indices

3.3.1 Features

Indices can be constructed from a collection of characteristic and distinctive substructures contained in the database graphs. These are referred to as features of the graphs (Klein et al. 2011). This approach is based on the simple idea that for two graphs to match each other, both need to contain the same features (inclusion logic (Chen et al. 2007)). A necessary condition for inclusion in the candidate set is therefore that a member graph and a query graph share the same features (Klein et al. 2011; Sakr and Al-Naymat 2010). In a subgraph query, all member graphs which do not contain all features of the query pattern are not considered as candidates, as they cannot contain the query pattern without containing all of its features. The decision which features to use to construct the index is important, as it influences the filtering strength and the efficiency of index construction (Klein et al. 2011). To achieve a high filtering strength, or pruning power, features should be discriminative (Klein et al. 2011), i.e. they should be selected such that a large part of the database can be excluded from consideration based on a small number of features. Inverted indices are commonly used to map features to member graphs (Cheng et al. 2007; Sakr and Al-Naymat 2010; Xie and Yu 2011; Zou, Chen, Zhang, et al. 2008). These indices are called inverted because the indexing process first compiles, for every graph, a list of the features it contains. This list is then inverted into an index which uses the feature as the key and points to all member graphs which contain that feature (Sakr and Al-Naymat 2010).

3.3.2 Data-Mining vs. Non-Data-Mining

According to Zou et al. (Zou, Chen, Yu, et al. 2008), feature-based indices can be classified by the method used to build them, specifically by whether or not data mining is used. If the index is constructed without data mining, the method can be classified as non-data-mining-based filtering (Zou, Chen, Yu, et al. 2008). Non-mining techniques index whole constructs of the databases, using full enumeration of index features to construct the index (Sakr and Al-Naymat 2010). Their major drawbacks are that they may have reduced filtering strength, as they lose structural information when using simple structures such as paths as features, or that they need to rely on computationally expensive structure comparisons in the filtering step, which degrades its efficiency (Zou, Chen, Yu, et al. 2008). Zou et al. cite GraphGrep (Giugno and Shasha 2002) as an example of the former and Closure-Tree (He and Singh 2006) as an example of the latter. Another problem of techniques which rely on the indiscriminate enumeration of simple features is that the number of indexable features grows quickly in large and diverse databases (Sakr and Al-Naymat 2010; Yan and Han 2010; Yan et al. 2004; Zhao et al. 2007). To manage this complexity, the selection of a subset of these indexable features using data mining methods has been proposed (Yan and Han 2010; Yan et al. 2004). Data-mining-based filtering refers to approaches that apply data mining methods to extract a subset of indexable features from the member graphs in the database (Yan, Yu, and Han 2005a; Zhang et al. 2007; Zhao et al. 2007; Zou, Chen, Yu, et al. 2008). The selection occurs after a data mining operation performed over the database members has collected additional information on the indexable features (Sakr and Al-Naymat 2010; Yan and Han 2010; Yan et al. 2004). The features are then selected based on their frequency of occurrence and their discriminative power. Definition¹⁷: A graph structure $f$ which is contained in the member graphs of a graph database $D = \{g_1, g_2, \ldots, g_n\}$ is said to be frequent if its support $sup(f)$ reaches or exceeds a predefined minimum threshold $\sigma$, i.e. $sup(f) \ge \sigma$. Its support is defined as the number of graphs which contain the graph structure: $sup(f) = |D_f|$ with $D_f = \{g \in D \mid f \subseteq g\}$. Frequent structures can then be used as index features (Yan and Han 2010). Presented with a subgraph query, the database can directly return all graphs that contain the query pattern if the query pattern is an indexed feature. If not, it can decompose the pattern into indexed features and construct the candidate set as the intersection of the sets of graphs which contain an indexed feature that is also included in the query graph (Yan and Han 2010). The candidate set can formally be defined as $C_q = \bigcap_{i=1}^{k} D_{f_i}$, where $k$ is the number of indexed features contained in the query pattern, i.e. $\{f_1, \ldots, f_k\} = \{f \in F \mid f \subseteq q\}$ ($F$ is the set of indexed features).
Since the candidate set is an intersection, its size is bounded from above by the size of the smallest set $D_{f_i}$, i.e. by the support of the indexed feature with the lowest support contained in the query pattern. Therefore a low minimum support threshold will reduce the size of candidate sets, which will decrease the cost of verifying them (Yan and Han 2010). However, a low minimum support threshold will yield a large number of indexed features, increasing the size of the index. A strategy to mitigate this complexity is to avoid a uniform support threshold for all structures and to use different support thresholds for structures of different sizes: for small structures a low minimum support threshold is appropriate, for large structures a high threshold (Yan and Han 2010). This approach allows an efficient yet compact index which has fewer frequent structures than an index using a low uniform support, while larger structures with low support can be indexed by their smaller subgraphs (Yan and Han 2010). One can model the size-increasing support threshold as a monotonically increasing function $\psi(l)$ of the structure size $l$, so that a structure $f$ of size $l$ is frequent if $sup(f) \ge \psi(l)$ (Yan and Han 2010). Linear or exponential functions are typically used (Yan and Han 2010). To further reduce the number of indexed features, the concept of discriminative structures can be used. Given two structures $f$ and $f'$, where one is contained in the other ($f \subseteq f'$) but both have approximately the same support ($sup(f) \approx sup(f')$), it is often sufficient to index only the smaller substructure $f$, as indexing both preserves little additional information (Yan and Han 2010).

Frequently changing databases are an environment in which data-mining-based filtering techniques fare worse than non-data-mining approaches (Sakr and Al-Naymat 2010; Zou, Chen, Yu, et al. 2008). This is caused by the need to rebuild the index, as the quality of the selected features declines with the number of updates to the database (Sakr and Al-Naymat 2010), which requires another data mining operation to be performed on the database. Non-data-mining filtering techniques do not have this drawback, as the index can be updated along with the database at no additional cost compared to the case in which the added graphs had been part of the database during the original index construction (Sakr and Al-Naymat 2010).

¹⁷ See (Yan and Han 2010).

3.3.3 Indexing Units

There are a number of types of substructures which can be used to construct indices. In order of increasing complexity, these are simple elements such as nodes and edges, paths, trees and subgraphs. Nodes and edges are the basic constituent elements of graphs, so simple indexing schemes can be built by collecting statistical information on them, for example their frequency of occurrence. In GraphREL (Sakr 2009), Sakr uses a relational framework to store and query graphs, which allows it to be implemented in conventional SQL databases. The algorithm decomposes graphs into a set of vertices and a set of edges represented as vertex-to-vertex relations, and hence uses two tables. Both vertices and edges can have labels. GraphREL collects statistical information about less frequently occurring nodes and edges and uses it to identify those nodes and edges in the query pattern which occur rarely in the members of the database. These rare elements offer the highest pruning power. However, indexing structures based on labels of vertices and edges are usually not selective enough to distinguish complicated and interconnected structures (Zhao et al. 2007).
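The idea of storing graphs in two relational tables and using label statistics to find the most selective query element can be sketched with Python's built-in `sqlite3` module. The schema, table and column names and helper functions below are hypothetical and only loosely inspired by the description above; they are not GraphREL's actual schema or its query translation. Only vertex-label statistics are used here; edge-label statistics could be gathered from the `edges` table in the same way.

```python
import sqlite3
from typing import Dict, Iterable, List, Tuple


def open_store() -> sqlite3.Connection:
    """Hypothetical two-table schema: one row per vertex and one per edge."""
    con = sqlite3.connect(":memory:")
    con.executescript("""
        CREATE TABLE vertices (graph_id TEXT, vertex_id INTEGER, label TEXT);
        CREATE TABLE edges    (graph_id TEXT, source INTEGER, target INTEGER, label TEXT);
    """)
    return con


def insert_graph(con: sqlite3.Connection, gid: str, vertex_labels: Dict[int, str],
                 edges: Iterable[Tuple[int, int, str]]) -> None:
    con.executemany("INSERT INTO vertices VALUES (?, ?, ?)",
                    [(gid, v, label) for v, label in vertex_labels.items()])
    con.executemany("INSERT INTO edges VALUES (?, ?, ?, ?)",
                    [(gid, s, t, label) for s, t, label in edges])


def rarest_vertex_label(con: sqlite3.Connection, query_labels: Iterable[str]) -> str:
    """Use database-wide label frequencies to pick the most selective query label."""
    counts = {label: con.execute("SELECT COUNT(*) FROM vertices WHERE label = ?",
                                 (label,)).fetchone()[0]
              for label in set(query_labels)}
    return min(counts, key=lambda label: (counts[label], label))


def graphs_with_vertex_label(con: sqlite3.Connection, label: str) -> List[str]:
    rows = con.execute("SELECT DISTINCT graph_id FROM vertices WHERE label = ? "
                       "ORDER BY graph_id", (label,))
    return [row[0] for row in rows]


con = open_store()
insert_graph(con, "g1", {0: "C", 1: "C", 2: "O"}, [(0, 1, "single"), (1, 2, "double")])
insert_graph(con, "g2", {0: "C", 1: "C", 2: "C"}, [(0, 1, "single"), (1, 2, "single")])
insert_graph(con, "g3", {0: "C", 1: "S"}, [(0, 1, "single")])

query_labels = ["C", "S"]                         # vertex labels of a query pattern
rare = rarest_vertex_label(con, query_labels)
print(rare, graphs_with_vertex_label(con, rare))  # S ['g3']
```

Starting from the rarest label (S occurs once, C six times) restricts further matching to a single graph, which is the intuition behind using statistics on rarely occurring elements.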


Paths are slightly more complex features of graphs than nodes and edges, but are still considered simple (Klein et al. 2011). A path of length $k$ is a sequence of vertices $(v_1, v_2, \ldots, v_{k+1})$ in which consecutive vertices are connected by edges $(v_i, v_{i+1})$ (Yan et al. 2004). Paths have the advantage that they are easier to manipulate than other data structures (Sakr and Al-Naymat 2010; Yan et al. 2004), but this comes with the weakness that paths, as simple data structures, are not very discriminative, since a lot of structural information is lost, especially in short paths (Yan et al. 2004; Zhao et al. 2007). Another disadvantage of path-based approaches is that large and diverse databases typically contain a huge number of paths, which increases the index size (Sakr and Al-Naymat 2010; Yan et al. 2004; Zhao et al. 2007). An often cited18 example of a path-based indexing algorithm is GraphGrep (Giugno and Shasha 2002). GraphGrep enumerates all paths of the database members up to a user-defined maximum length. For each unique path, it stores (a) in which member graphs it occurs and (b) how often. It uses this information to prune unpromising candidates in the filtering phase by discarding all members which contain a path of the query pattern fewer times than the query pattern itself does.

Another class of indexing features are trees. Trees have a number of beneficial properties: they are more complex patterns than paths, yet simpler than graphs, and can preserve almost as much structural information as arbitrary subgraph patterns (Zhang et al. 2007; Zhao et al. 2007). It is also possible to calculate the canonical form of any tree in polynomial time, which yields a comparable representation of any tree (Zhang et al. 2007). If trees are used in combination with a mining approach19, another benefit is that frequent subtree mining is easier than frequent subgraph mining (Zhang et al. 2007; Zhao et al. 2007). In addition, Zhao et al. maintain in (Zhao et al. 2007) that a large number of the frequent graphs in many real-world datasets are trees in nature. This suggests that frequent graph mining incurs additional computational costs with limited benefit in terms of filtering strength (Zhao et al. 2007). An example of an algorithm which uses trees as indexing features is TreePI (Zhang et al. 2007), which discovers frequent trees in database members through frequent-tree mining and selects a subset of the frequent trees as index patterns. Subtrees in query patterns are matched to index patterns, and the candidate set is generated from the matched index patterns.

Graphs are the most complex class of index features. They preserve more structural information than simpler classes such as paths (Yan et al. 2004) or trees, although only slightly more than trees (Zhang et al. 2007; Zhao et al. 2007). Therefore

18 See (Klein et al. 2011; Sakr and Al-Naymat 2010; Yan et al. 2004; Zhao et al. 2007)
19 Mining approaches are discussed in 3.3.2


graphs as index features outperform paths in filtering strength (Yan et al. 2004) but, as said before, are only slightly better than trees while incurring higher computational costs. Subgraphs are even more numerous in a graph database than paths (Yan et al. 2004). Therefore, arbitrary subgraphs should only be used as index features in combination with a mining approach which selects a subset of the possible subgraphs, in order to reduce this complexity (Yan et al. 2004). An example of an algorithm which uses graphs as index features in combination with a mining approach is GIndex (Yan et al. 2004). GIndex mitigates the complexity arising from the large number of subgraphs contained in the database members, any of which could serve as an index feature, by selecting only frequently occurring subgraphs. It further requires that, for a particular subgraph to become an index feature, its support has to exceed a minimum support threshold which rises progressively with the size of the subgraph (Sakr and Al-Naymat 2010). When using arbitrary substructures (such as paths, trees and graphs) as index features, it is usually necessary to exclude some substructures from consideration by defining an upper bound on their size, e.g., a maximum path length (Giugno and Shasha 2002) or a maximum number of nodes or edges in a graph (Yan et al. 2004). This curbs the potentially exponential growth in the number of indexed structures. Another way to reduce the index size is to use non-arbitrary substructures. These can be simple structures such as cycles. The algorithm GString (Jiang et al. 2007) is an example of such an approach: it uses lines, cycles and stars20 as index features. The main motivation of the authors is that, in the application field of chemical compound databases, meaningful structural information can be lost by indexing member graphs using arbitrary substructures.
They propose the use of substructures which are semantically meaningful in the application context to improve search efficiency. This approach is an example of how domain knowledge can shape the design of an index to adapt it to specific needs. An obvious drawback is that extending this approach to other application domains is not trivial (Sakr and Al-Naymat 2010). Combining different classes of index features is a possible way to improve the filtering strength. Tree+Δ (Zhao et al. 2007) uses frequent trees as index features and supplements this index with a small number of discriminative graphs which improve the pruning power. The graph features are selected on demand and without a graph-mining step, by estimating the pruning power of a graph feature

20

Lines are series of vertices connected end-to-end; cycles are series of vertices that form a closed loop; stars are structures in which a core vertex connects to several other vertices (Jiang et al. 2007)


based on its subtrees. CT-Index (Klein et al. 2011) uses trees and cycles as index features by enumerating all trees and cycles in database member graphs up to a certain size. The combination of trees and cycles is advantageous, as trees capture structural information well, and cycles are a distinctive characteristic of graphs which carries additional semantic meaning in a number of application areas. If only trees were used as index features, cycles would be neglected. Another advantage of trees and cycles over general graphs is that their certificates, i.e., canonical forms which unambiguously encode their structure, can be computed in linear time (Klein et al. 2011).

3.3.4 Graph Index Keys

Structural keys are an index type described in (Daylight Chemical Information Systems Inc. 2008). This index type can be used with feature-based indexing techniques. Structural keys are Boolean arrays, usually represented as a bitmap. Each element of such an array is a bit which can assume either the value 1 (TRUE) or 0 (FALSE). Features are mapped to bit positions in the array, and the value at a position represents the presence (TRUE) or absence (FALSE) of that feature in a database member graph. A structural key is assigned to every member graph and thus represents the graph's structure. If an exemplary indexing scheme uses five features, then a structural key has a length of five bits, and a possible structural key might be 01100, indicating that the graph for which this key has been computed contains two of the indexed features, namely patterns two and three (as their bits have the value TRUE), but not patterns one, four and five (as their bits have the value FALSE). In retrieval, a structural key is generated for the query pattern and compared to the structural keys computed for the database member graphs. For a member to match the query, its structural key has to indicate that every feature contained in the query pattern is also contained in the graph: every bit position that has the value TRUE in the structural key of the query has to be TRUE in the structural key of the database member, while all other bit positions may assume any value. More formally:

Definition21: A graph $g$ does not contain a graph $q$ if the bitwise AND ($\wedge$) of both structural keys is not equal to the structural key of $q$, i.e.:

$$\mathrm{sk}(F(g)) \wedge \mathrm{sk}(F(q)) \neq \mathrm{sk}(F(q)) \;\Rightarrow\; q \not\subseteq g,$$

with $F(g)$ being the set of features included in a graph $g$ and $\mathrm{sk}(F)$ being a structural key computed over a feature set $F$.
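The structural-key filter can be sketched with Python integers as bitmaps. The feature names `f1` to `f5` are hypothetical placeholders for the five indexed features of the example; bit order follows the text, with the leftmost bit standing for feature one.

```python
# Hypothetical feature vocabulary; position i (from the left, as in the text's
# examples) holds the bit for feature number i + 1.
FEATURES = ["f1", "f2", "f3", "f4", "f5"]

def structural_key(contained):
    """Build a structural key, stored as an int bitmap, from the set of contained features."""
    key = 0
    for feature in FEATURES:
        key = (key << 1) | (1 if feature in contained else 0)
    return key

def may_contain(member_key, query_key):
    """Filter test: every bit set in the query key must also be set in the member key."""
    return (member_key & query_key) == query_key

def as_bits(key):
    """Render a key as a bit string, left-padded to the vocabulary size."""
    return format(key, f"0{len(FEATURES)}b")

g = structural_key({"f2", "f3"})    # member graph with features two and three
q1 = structural_key({"f2"})         # query key 01000
q2 = structural_key({"f2", "f5"})   # query key 01001
print(as_bits(g), may_contain(g, q1), may_contain(g, q2))  # 01100 True False
```

The whole containment check is a single AND and comparison per member, which is why candidate sets can be computed quickly.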

21

Based on (Klein et al. 2011)


The exemplary graph with the structural key 01100 would therefore match a query with the key 01000, as it contains the second feature. It would not match the query 01001, as it does not contain the fifth feature. A member graph with the structural key 00110 would match neither query, as it shares no features with either of them. Databases using structural keys as indices can compute the candidate set quickly, as the necessary Boolean operations are simple and fast to compute. To create a structural key mapping scheme, the features have to be known before index construction, and changes to the scheme require the index to be rebuilt. Structural keys may vary widely in size depending on the selection of features, but within a single database they all have the same size, as each bit position must carry the same meaning for every graph. The choice of the size is a trade-off between specificity and space: including more features increases the size of the structural key but improves the filtering strength. If keys grow large, they may become sparse, in the sense that the number of bit positions with the value TRUE is much smaller than the number of bit positions with the value FALSE. Sparse structural keys have a low information density; they contain only a small amount of information compared to their potential. A variant of structural keys is the hash-key fingerprint (Daylight Chemical Information Systems Inc. 2008; Klein et al. 2011), which solves a number of problems associated with the use of structural keys. Hash-key fingerprints are also Boolean arrays, but they use a hash function to map features to bit positions (Daylight Chemical Information Systems Inc. 2008; Klein et al. 2011). Hash functions are pseudo-random functions which take a long argument as input and produce a significantly shorter output (Anderson 2010).
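A hash-key fingerprint can be sketched in the same way, assuming SHA-256 as the hash function and a 64-bit fingerprint (both illustrative choices, not Daylight's actual parameters). The `fold` helper anticipates the folding step described in the following paragraphs; the feature strings are hypothetical.

```python
import hashlib

def feature_bit(feature, size):
    """Map a feature's string form to a bit position via a hash function (SHA-256 here)."""
    digest = hashlib.sha256(feature.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % size

def fingerprint(features, size=64):
    """Hash-key fingerprint: OR together one hashed bit per feature; collisions are allowed."""
    fp = 0
    for feature in features:
        fp |= 1 << feature_bit(feature, size)
    return fp

def fold(fp, size):
    """Fold once: split into two halves and combine them with bitwise OR."""
    half = size // 2
    return (fp >> half) | (fp & ((1 << half) - 1))

def passes_screen(member_fp, query_fp):
    """Subset test: every bit set for the query must be set for the member."""
    return (member_fp & query_fp) == query_fp

# Hypothetical features written as label strings (e.g. enumerated paths).
member = fingerprint({"C", "O", "C-C", "C-O", "C-C-O"})
query = fingerprint({"O", "C-O"})
print(passes_screen(member, query))                      # True
print(passes_screen(fold(member, 64), fold(query, 64)))  # True: folding never clears bits
```

No feature vocabulary has to be fixed in advance; any string can be hashed. Collisions can only set extra bits, so they may add false positives but never remove a true match.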
As the output space is smaller than the input space, different features may be mapped to the same bit position; this is referred to as a collision (Anderson 2010; Daylight Chemical Information Systems Inc. 2008). In contrast to structural keys, fingerprints have no predefined semantic meaning (Daylight Chemical Information Systems Inc. 2008). One cannot directly identify from a fingerprint which features are contained in the graph it describes, but every fingerprint is still characteristic of that graph (Daylight Chemical Information Systems Inc. 2008). The absence of a predefined set of features makes fingerprints usable in a number of domains (Daylight Chemical Information Systems Inc. 2008). As fingerprints are the result of a mapping from a larger bit space to a smaller one, a large number of index features can be used without the drawback of a large index size. In fact, the Daylight (Daylight Chemical Information Systems Inc. 2008) system uses


folding to solve the problem of sparse structural keys with low information density: a large fingerprint is reduced in size by dividing it into two halves, which are then combined by bitwise OR. The result has a higher information density. This process is repeated until the desired minimum information density is reached or surpassed. Without a predefined meaning, features do not have to be known in advance and can be generated directly from the graphs (Klein et al. 2011). If folding is used, fingerprints computed from query patterns have to be folded as well, so that they are comparable with the stored fingerprints of the database members (Daylight Chemical Information Systems Inc. 2008). Folding may, however, increase the number of false positives, as fingerprints become more ambiguous with each fold due to collisions. Therefore an appropriate size has to be found to achieve a desired filtering strength (e.g., one may decide that a fingerprint must be large enough to screen out 95% of false positives) (Daylight Chemical Information Systems Inc. 2008). Folding does not, however, introduce false negatives, as bit positions with the value TRUE are never set to FALSE (Daylight Chemical Information Systems Inc. 2008). Folding also allows fingerprint sizes to vary across database members depending on their complexity: if fingerprints are folded until a predefined minimum information density is reached, then complex members will have larger fingerprints than simpler members. This concept is known as variable-sized fingerprints (Daylight Chemical Information Systems Inc. 2008; Klein et al. 2011). Query fingerprints can be compared to variable-sized fingerprints by folding them until they reach the same size (Daylight Chemical Information Systems Inc. 2008).

3.3.5 Feature-Based Reverse substructure search

The feature-based approach can be adapted to perform reverse substructure searches. As reverse substructure searches look for all database graphs that are contained in the query pattern, the pruning strategy is the mirror image of ordinary feature-based substructure search: instead of pruning database graphs that do not contain an index feature which the query pattern contains, it prunes database graphs that contain an index feature which the query pattern does not contain (Yan and Han 2010). Formally, this exclusion logic can be expressed as $f \subseteq g \wedge f \not\subseteq q \Rightarrow g \not\subseteq q$. Valuable index features are now those which are frequently contained in member graphs of the database but are unlikely to be contained in a query graph. These features are called contrast features (Yan and Han 2010). Chen et al. discovered that subgraphs occurring with an intermediate frequency in the database members have the highest pruning power, which decreases monotonically as the frequency approaches the lower and higher ends, i.e., 0 and 1


(Chen et al. 2007). This can be grasped intuitively: substructures that are highly frequent in database members will also frequently be present in query graphs, which makes for poor pruning power under exclusion logic. Substructures with a low frequency also have very low pruning power, as they can only exclude the few graphs in which they occur. As the set of index features collectively determines the pruning power of the index, typically a number of features are selected from the middle range of the frequency spectrum (Yan and Han 2010). Feature selection is guided by the goal of creating a set that collectively performs well for the expected queries (Chen et al. 2007; Yan and Han 2010). Besides frequency, redundancy is again a consideration, and it has been proposed to avoid indexing features which do not add new information to the index (Chen et al. 2007). The feature selection process can be classified by the method used, either as probabilistic or exact (Chen et al. 2007). Probabilistic methods derive the feature set using probabilistic models of the expected queries, while exact methods derive the feature set from a representative training set of expected queries (Chen et al. 2007). Probabilistic models are problematic, as the joint probability that a query contains multiple features is hard to derive accurately, yet it is needed to determine redundancy accurately (Chen et al. 2007). In any case, as feature set selection is based on data mining, the index may have to be reconstructed frequently as query logs change, which degrades the efficiency of the index (Sakr and Al-Naymat 2010). In (Chen et al. 2007), a theoretical framework for feature selection under these conditions is presented, which is then used to build a greedy22 algorithm that approximates the optimal feature set under an exact model.
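The exclusion logic and a log-based ("exact model") greedy selection can be sketched together. This is a minimal illustration in the spirit of the approach, not Chen et al.'s exact formulation: graphs and queries are represented as sets of hypothetical feature names (containment is assumed to be precomputed), and a feature's gain is the number of not-yet-pruned (query, graph) pairs it would exclude.

```python
def pruned_pairs(feature, graphs, queries):
    """(query, graph) pairs excluded by one feature: f in g and f not in q
    implies that g cannot be contained in q."""
    return {
        (qi, gi)
        for qi, q in enumerate(queries) if feature not in q
        for gi, g in enumerate(graphs) if feature in g
    }

def greedy_contrast_features(candidates, graphs, queries, k):
    """Greedily choose up to k features, each time taking the largest new pruning gain."""
    chosen, covered = [], set()
    for _ in range(k):
        gains = {f: pruned_pairs(f, graphs, queries) - covered for f in candidates}
        best = max(candidates, key=lambda f: len(gains[f]))
        if not gains[best]:
            break  # no remaining feature prunes anything new
        chosen.append(best)
        covered |= gains[best]
    return chosen

def candidate_set(query, graphs, features):
    """Reverse search filter: keep graphs whose indexed features all occur in the query."""
    return [gi for gi, g in enumerate(graphs)
            if all(f in query for f in features if f in g)]

# Hypothetical data: each graph/query is the set of candidate features it contains.
graphs = [{"a", "b"}, {"a", "c"}, {"b", "c"}, {"a"}]
query_log = [{"a"}, {"a", "b"}, {"a", "c"}]
features = greedy_contrast_features(["a", "b", "c"], graphs, query_log, k=2)
print(features)                                     # ['b', 'c']
print(candidate_set({"a", "c"}, graphs, features))  # [1, 3]
```

Feature `a` occurs in every logged query, so it never prunes anything and is not selected, which matches the intuition that features frequent in queries make poor contrast features.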
The simple cIndex algorithm uses an inverted index: a flat matrix with features as rows, database members as columns, and binary cells indicating whether a particular feature is included in a database member. This is improved upon in the variants cIndex-BottomUp and cIndex-TopDown, which both use a hierarchical, tree-structured index. The hierarchical model is based on the idea that pruning can be more efficient if the sequence in which the features are checked can be ordered, although different orders may be efficient for different queries. Therefore a tree structure, in which the non-terminal nodes represent features and the leaf nodes represent member graphs, is traversed from the root to the leaves. At each node, the branch is chosen depending on whether or not the feature represented by that node is contained in the query. The first variant builds the index iteratively from the bottom up by starting with the flat index and generating the next level of the tree by indexing it with the cIndex

22 Optimal model selection is also NP-complete; however, approximation appears to yield good results (Chen et al. 2007).

algorithm. This is repeated until the pruning gain becomes zero. This, however, puts features with high pruning power at the bottom of the tree. cIndex-TopDown solves this problem by selecting different features for different groups of sample queries and arranging the features in a tree structure to identify the appropriate feature set for a given query. The algorithm first divides the sample query log into groups by the most discriminative feature (the feature which partitions the query log most efficiently). This is repeated until the groups reach a specified minimum size. The partitioning features found in this way are arranged in a tree structure. Once a group reaches the minimum size, the simple cIndex algorithm builds the index feature set for this group. This allows indices to be built that are more efficient for different types of queries, as different index features can be used.

3.3.6 Feature-based similarity search

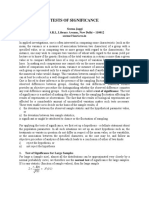

There are a number of algorithms which use features to perform similarity queries. Grafil (Yan, Yu, and Han 2005b) uses frequent structures, SAGA (Tian et al. 2007) indexes sets of nodes, and TALE (Tian and Patel 2008) considers neighborhood structures. Grafil uses the concept of relaxed query graphs to capture the idea that the target graphs may differ from the query. Whereas a normal substructure search processes a query by enumerating the index features contained in the query graph and, in the filtering step, prunes all database graphs that do not contain all features of the query pattern, a similarity search must allow for some missing features. In Grafil, the query can be relaxed by deleting outer edges. This destroys some features, which are hence missing from the query. The deletions are not actually performed but only hypothetical: for a given query $Q$, the algorithm enumerates all index features that are embedded in the query and creates an edge-feature matrix. The edge-feature matrix is a binary matrix which has a column for every embedding23 of a feature and a row for every edge of the query graph that can be deleted. A cell has the value 1 if the corresponding feature embedding covers the edge.
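The bound derived from the edge-feature matrix can be sketched by brute force. The matrix below is a hypothetical example, not the one from Grafil. Choosing the $k$ edges that destroy the most embeddings is a maximum-coverage problem, which is NP-hard in general, so this exhaustive search is only meant to make the definition concrete for very small queries.

```python
from itertools import combinations

def max_feature_misses(matrix, k):
    """Upper bound on feature misses: the most columns (feature embeddings)
    that deleting any k rows (query edges) can destroy.

    matrix[i][j] == 1 means that embedding j covers query edge i. This brute
    force tries every k-subset of edges, so it only suits small queries.
    """
    n_rows, n_cols = len(matrix), len(matrix[0])
    best = 0
    for rows in combinations(range(n_rows), k):
        destroyed = sum(1 for j in range(n_cols) if any(matrix[i][j] for i in rows))
        best = max(best, destroyed)
    return best

# Hypothetical 3-edge query with 4 feature embeddings.
matrix = [
    [1, 1, 0, 0],  # edge e1
    [1, 0, 1, 0],  # edge e2
    [0, 0, 1, 1],  # edge e3
]
print([max_feature_misses(matrix, k) for k in range(4)])  # [0, 2, 4, 4]
```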

23

As a feature may occur many times in a graph, the term embedding is used to describe the multiple instances of a feature in a graph, to avoid confusion with occurrence.


Figure 2 Edge-Feature Matrix24 (a binary matrix with one row per deletable query edge and one column per feature embedding). In the matrix, $e$ denotes an edge, $f$ a feature, and $f^{(j)}$ the $j$th occurrence of the feature $f$.

Based on this matrix, the maximum number of columns affected by the deletion of $k$ rows can be computed, which is an upper bound on the allowed feature misses. Once this upper bound has been computed, an index which contains the number of embeddings of each feature in a particular graph is used to select the candidate set by pruning all graphs which exceed the bound. This is done by calculating the frequency difference between the features of the query graph and those of the target database graph. The frequency difference is defined as

Formula 1 Frequency Difference

$$r(u_i, v_i) = \begin{cases} v_i - u_i & \text{if } u_i < v_i \\ 0 & \text{otherwise} \end{cases}$$

where $u_i$ is the number of embeddings of feature $f_i$ in the target graph $G$ and $v_i$ is the corresponding number in the query graph $Q$. The differences can then be summed up as

Formula 2 Summed Frequency Difference

$$d(G, Q) = \sum_{i} r(u_i, v_i)$$
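Formulas 1 and 2 translate directly into a filter. In this sketch, each graph is summarized by a hypothetical dictionary mapping feature names to embedding counts (the index described above), and `d_max` stands for the upper bound derived from the edge-feature matrix; all data is invented for illustration.

```python
def miss(u, v):
    """Formula 1: r(u, v) = v - u if the target has fewer embeddings (u) than the query (v), else 0."""
    return v - u if u < v else 0

def frequency_difference(target_counts, query_counts):
    """Formula 2: d(G, Q) = sum over the query's features of r(u_i, v_i)."""
    return sum(miss(target_counts.get(f, 0), v) for f, v in query_counts.items())

def grafil_filter(database, query_counts, d_max):
    """Keep graphs whose summed frequency difference does not exceed d_max."""
    return [gid for gid, counts in database.items()
            if frequency_difference(counts, query_counts) <= d_max]

# Hypothetical embedding counts per feature.
query_counts = {"fa": 3, "fb": 2}
database = {
    "G1": {"fa": 3, "fb": 2, "fc": 5},  # d = 0
    "G2": {"fa": 1, "fb": 2},           # d = 2
    "G3": {"fb": 1},                    # d = 3 + 1 = 4
}
print(grafil_filter(database, query_counts, d_max=2))  # ['G1', 'G2']
```

Features that occur only in the target graph (such as `fc`) do not count against it, since Formula 1 only penalizes embeddings that the query has and the target lacks.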

All graphs which exceed the computed upper bound on the differences are then pruned. For all members of the candidate set, a structural comparison is performed in a verification step to determine the exact similarity. Instead of connected substructures, fragmented elements of graphs can be used, as in SAGA (Tian et al. 2007) or TALE (Tian and Patel 2008). SAGA indexes all sets of nodes of a user-defined size k. To avoid an exponential growth in the number of sets, SAGA only indexes sets of nodes that lie within a specific distance of each other; this distance is given by an upper bound on the diameter of the sets. The use of fragments allows structures with gaps in them to be indexed. TALE is especially built for large

24

From (Yan, Yu, and Han 2005b)


graphs, both database and query. It indexes only the neighborhoods of all vertices of a graph. This encodes the local structure while avoiding the need to index the large number of node sets that SAGA indexes.

3.4 Hierarchical Indexing

3.4.1 Hierarchical Indices

Instead of decomposing the member graphs into characteristic features, a number of algorithms structure graphs in a hierarchical way (Yan and Han 2010). This is usually done by ordering parts of the graph (such as nodes or subgraphs) in a hierarchy and storing it in a hierarchical index structure such as a tree or a directed acyclic graph (DAG) (He and Singh 2006; Williams et al. 2007; Zou, Chen, Zhang, et al. 2008). Examples of hierarchical approaches cited in (Yan and Han 2010) are ClosureTree (He and Singh 2006) and GDIndex (Williams et al. 2007).

3.4.2 Graph Closures

In ClosureTree (He and Singh 2006), graph closures are the basis of the hierarchical ordering. The graph closure of two graphs $G_1$ and $G_2$ under a mapping $\phi$ between them is obtained by computing the closures of their vertices and edges. A vertex closure (edge closure) is a generalized vertex (generalized edge) whose attribute is the union of the attribute values of the set of vertices (edges) for which the closure is computed (He and Singh 2006). A graph closure of two graphs under a mapping is therefore a generalized graph $(V, E)$, where $V$ is the set of vertex closures of the vertices of the two graphs and $E$ the corresponding set of edge closures (He and Singh 2006). A closure of multiple graphs is computed incrementally by first computing the closure of a pair of graphs and then, for each additional graph, computing the closure of it and the previously computed closure, i.e., $\mathrm{closure}(G_1, \ldots, G_n) = \mathrm{closure}(\mathrm{closure}(G_1, \ldots, G_{n-1}), G_n)$ (He and Singh 2006). The graph closure can be interpreted as the bounding box of the underlying graphs and is therefore similar to the Minimum Bounding Rectangle (MBR) concept used in traditional index structures (He and Singh 2006; Yan and Han 2010). The index structure of ClosureTree is similar to the R-tree indexing mechanism (Sakr and Al-Naymat 2010): the closure tree index structure, or C-tree, is a tree in which each node is the graph closure of its children, where the children of leaf nodes are database graphs and the children of internal nodes are other C-tree nodes (He and Singh 2006). C-tree supports both exact and approximate substructure search following the filter-and-verification paradigm (He and Singh 2006). Queries are answered by traversing the C-tree and pruning index nodes based on a fast pseudo subgraph isomorphism test for


exact substructure search, after which an exact subgraph isomorphism test verifies the members of the candidate set (He and Singh 2006; Yan and Han 2010). Similarity queries are based on edit distance measures, and the similarity between the query and index nodes is computed with heuristic graph mapping methods (He and Singh 2006; Sakr and Al-Naymat 2010).

3.4.3 Induced Subgraphs

GDIndex (Williams et al. 2007), an algorithm by Williams et al., uses the unique induced subgraphs of the database members as the indexing unit and organizes them in a directed acyclic graph (DAG) for each member graph. Each unique induced subgraph is represented by a node in the DAG, and all nodes in the DAG are cross-indexed in a hash table for fast isomorphic lookup. A global database index, the Graph Decomposition Index (GDI), is then a DAG merged from the DAGs of the member graphs. Subgraphs are unique with respect to isomorphism, and for each automorphism25 group only the canonical instance is kept in the DAG. For a given database member of size $n$, the DAG is constructed by full enumeration of the induced subgraphs from size 0 (the null graph) to size $n$ (the member graph itself), in a process Williams et al. refer to as decomposition. A graph is therefore decomposed into at most $2^n$ subgraphs, but this upper bound is only reached if all labels are unique; otherwise, isomorphic induced subgraphs coincide and the number is lower (Williams et al. 2007). Nodes in the DAG are organized into tiers according to size, where the $m$th tier contains all nodes of size $m$. The lowest and highest tiers contain only one node each: the lowest tier contains the root node, the null graph, and the highest tier contains the node representing the full member graph. Links between the nodes are directed and exist only from nodes in the $i$th tier to nodes in the $(i+1)$th tier: a node in the $i$th tier representing a subgraph $S$ links to a node in the $(i+1)$th tier representing a subgraph $S'$ which contains $S$. For every enumerated subgraph, an entry is added to a hash table. The hash key is computed by feeding the adjacency matrix of the graph in canonical form into a hash function.
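The decomposition and hash lookup can be sketched for tiny graphs. Several assumptions apply: the brute-force canonical form (smallest label sequence plus upper-triangle adjacency bits over all vertex orderings) stands in for a real canonical labeling algorithm, SHA-256 of the code's text form is an illustrative hash function, and the DAG tiers and links are omitted.

```python
import hashlib
from itertools import combinations, permutations

def canonical_code(vertices, labels, edges):
    """Brute-force canonical form: the smallest (label sequence, upper-triangle
    adjacency bits) over all vertex orderings. Exponential; tiny graphs only."""
    best = None
    for order in permutations(vertices):
        lab = tuple(labels[v] for v in order)
        adj = tuple(int(frozenset((order[i], order[j])) in edges)
                    for i in range(len(order)) for j in range(i + 1, len(order)))
        if best is None or (lab, adj) < best:
            best = (lab, adj)
    return best

def hash_key(code):
    """Hash key of a canonical code (SHA-256 of its text form, an illustrative choice)."""
    return hashlib.sha256(repr(code).encode("utf-8")).hexdigest()

def decompose(labels, edges):
    """Enumerate all induced subgraphs (sizes 0..n) and keep each isomorphism class once."""
    table = {}  # hash key -> distinct canonical codes stored under that key
    for size in range(len(labels) + 1):
        for vertices in combinations(range(len(labels)), size):
            code = canonical_code(vertices, labels, edges)
            bucket = table.setdefault(hash_key(code), [])
            if code not in bucket:  # compare full codes, since keys could collide
                bucket.append(code)
    return table

def count_classes(table):
    return sum(len(bucket) for bucket in table.values())

def lookup(table, code):
    """Hash lookup followed by the verification step on the full canonical code."""
    return code in table.get(hash_key(code), [])

triangle = {frozenset(e) for e in [(0, 1), (1, 2), (0, 2)]}
print(count_classes(decompose(["C", "C", "C"], triangle)))  # 4 (one class per size)
print(count_classes(decompose(["A", "B", "C"], triangle)))  # 8 (= 2**3, unique labels)
```

The two calls show the bound from the text: with unique labels all $2^n$ induced subgraphs are distinct, while identical labels collapse isomorphic subgraphs into far fewer classes.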
Hashing the canonical form ensures that all isomorphic graphs produce the same hash key, but as hash keys may not be unique to a particular canonical code (due to collisions), any given entry in the table may reference multiple graphs. Queries are processed by computing the hash key of the query's canonical code, which is then used to look up matches in the hash table. For every member of the candidate set, its canonical code is compared with the query's canonical code in a second verification step. If the codes are equal, the graph is an isomorphic match to the query. This second step is necessary because of the ambiguity of the hash key. The answer set of a subgraph isomorphism query would then

25

Automorphisms are isomorphisms of a graph onto itself (Fortin 1996)


be, for every node in the GDI that represents a graph isomorphic to the query pattern, the set of its descendants which correspond to database member graphs. The index structure also allows similarity queries to be processed: the query pattern is decomposed into subgraphs, and nodes in the GDI are identified which are of the same size as the query graph and lie within a specified similarity range. The benefit of this approach is that subgraph queries require no computationally costly verification (Sakr and Al-Naymat 2010). For similarity queries, however, the technique may lead to computationally prohibitive searches if the query range is not small (Williams et al. 2007). The index is also not suited to databases with large and diverse graphs (Sakr and Al-Naymat 2010; Williams et al. 2007), as the number of induced subgraphs, and with it the index size, may grow exponentially with the size of the graphs (Yan and Han 2010).

3.5 Spectral Graph Coding

3.5.1 Spectral Signatures

Another approach to computing useful indices for graphs is spectral graph coding. In spectral graph coding, the topological structure of a graph is captured in numerical space by drawing on methods from spectral graph theory (Zou, Chen, Yu, et al. 2008). That is, mathematical properties of the matrices associated with a graph are exploited to derive a characteristic description of the graph. Spectral methods can only capture the topology of a graph and therefore have to be augmented by other methods to handle labels (Zou, Chen, Yu, et al. 2008). The characterization of a graph derived from spectral methods is referred to as its topological or spectral signature (Shokoufandeh et al. 2005; Zou, Chen, Yu, et al. 2008). Spectral signatures based on polynomial characterizations (Horaud and Sossa 1995; Sossa and Horaud 1992) and on eigenvalues (Demirci et al. 2008; Sengupta and Boyer 1996; Shokoufandeh et al. 2005; Zou, Chen, Yu, et al. 2008) have been proposed.

3.5.2 Polynomial Characterizations

Horaud and Sossa suggest in (Horaud and Sossa 1995) that, instead of finding an isomorphic mapping between two graphs, the isomorphism decision problem could be solved using an algebraic characterization of the adjacency matrix that is invariant under similarity transformation. They further suggest using polynomials associated with the adjacency matrix of a graph as such an algebraic characterization, as a number of polynomials are known which characterize a graph unambiguously up to isomorphism. Two polynomials are said to be equal if they are equal in (1) degree and


(2) coefficients. Using such a polynomial, the decision problem of whether two graphs are isomorphic could then be answered by deciding whether their polynomials are equal. In their work with binary graphs, they suggest the second immanantal polynomial associated with the Laplacian matrix26 of a graph. The polynomial is depicted in Formula 3, with $L(G)$ denoting the Laplacian matrix and $c_k$ the coefficients. The coefficients can be computed in polynomial time for a graph with $n$ vertices. The index for a given graph is then its sequence of coefficients, which can be compared with those of other graphs of the same size (same number of vertices). It has to be said that the characterization in this particular case becomes more ambiguous with increasing size of the graph, which makes equality of polynomials a necessary, but no longer sufficient, condition (Sossa and Horaud 1992).

Formula 3 Second immanantal polynomial27

$$d_2(xI - L(G)) = \sum_{k=0}^{n} (-1)^k \, c_k(L(G)) \, x^{n-k}$$
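The coefficient-sequence index can be illustrated with a simpler relative of Formula 3. The sketch builds the Laplacian as defined in footnote 26 and computes the coefficients of the characteristic polynomial $\det(xI - L(G))$ with the Faddeev-LeVerrier algorithm in exact rational arithmetic. This is a swapped-in technique: the characteristic polynomial is the immanantal polynomial for the sign character, not the second immanantal polynomial used by Horaud and Sossa. Its coefficients are likewise only a necessary condition for isomorphism, since non-isomorphic graphs with equal Laplacian spectra exist.

```python
from fractions import Fraction

def laplacian(n, edges):
    """Laplacian as in footnote 26: vertex degrees on the diagonal, -1 for each edge."""
    L = [[0] * n for _ in range(n)]
    for u, v in edges:
        L[u][v] = L[v][u] = -1
        L[u][u] += 1
        L[v][v] += 1
    return L

def char_poly(A):
    """Coefficients of det(xI - A), highest power first (Faddeev-LeVerrier, exact)."""
    n = len(A)
    coeffs = [Fraction(1)]                       # leading coefficient c_n = 1
    M = [[Fraction(0)] * n for _ in range(n)]    # M_0 = 0
    for k in range(1, n + 1):
        # M_k = A M_{k-1} + c_{n-k+1} I
        AM = [[sum(A[i][t] * M[t][j] for t in range(n)) for j in range(n)]
              for i in range(n)]
        M = [[AM[i][j] + (coeffs[-1] if i == j else 0) for j in range(n)]
             for i in range(n)]
        # c_{n-k} = -trace(A M_k) / k
        trace = sum(A[i][t] * M[t][i] for i in range(n) for t in range(n))
        coeffs.append(-trace / k)
    return [int(c) for c in coeffs]   # integer matrices give integer coefficients

path = laplacian(3, [(0, 1), (1, 2)])         # path 0-1-2
relabelled = laplacian(3, [(1, 0), (0, 2)])   # same path, middle vertex renamed to 0
print(char_poly(path), char_poly(relabelled))  # [1, -4, 3, 0] [1, -4, 3, 0]
```

Relabelling the vertices leaves the coefficient sequence unchanged, which is what makes such a sequence usable as an index key for graphs with the same number of vertices.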

3.5.3 Eigenvalue Characterizations

The use of eigenvalues to characterize and index a graph is also a well-known technique (Demirci et al. 2008; Sengupta and Boyer 1996; Shokoufandeh et al. 2005; Zou, Chen, Yu, et al. 2008). An eigenvector $x$ and its eigenvalue $\lambda$ of a symmetric $n \times n$ matrix $A$ are defined as follows (Zou, Chen, Yu, et al. 2008):

Formula 4 Eigenvectors28

$$A x = \lambda x, \qquad \langle x, x \rangle = 1$$

Under the second condition, the eigenvector is referred to as normalized. Normalization is necessary because infinitely many eigenvectors can be created through scaling, all of which belong to the same eigenvalue (Zou, Chen, Yu, et al. 2008). For a symmetric $n \times n$ matrix, $n$ eigenvector-eigenvalue pairs exist, whose eigenvalues can be written as an ordered, non-ascending sequence $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_n$

26

A Laplacian matrix is similar to the adjacency matrix in that it is also an $n \times n$ square matrix (where the graph has $n$ vertices). The element at the intersection of row $i$ and column $j$ ($i \neq j$) assumes the value -1 if there is an edge between vertices $i$ and $j$, and 0 otherwise. The diagonal element at row $i$ and column $i$ assumes the degree of vertex $i$ (Horaud and Sossa 1995).
27 Based on (Horaud and Sossa 1995)
28 Where $A$ is a symmetric $n \times n$ matrix; $x$ is the eigenvector of length $n$; $\lambda$ is a scalar; and $\langle \cdot, \cdot \rangle$ is the inner product of two vectors, so that $\langle x, x \rangle = 1$; based on (Zou, Chen, Yu, et al. 2008)

25

(Zou, Chen, Yu, et al. 2008). The topology of a graph can therefore be encoded in a sequence of eigenvalues computed from its adjacency matrix (Sengupta and Boyer 1996; Shokoufandeh et al. 1999; Zou, Chen, Yu, et al. 2008). This has been done for simple symmetric { } adjacency matrices of non-directed graphs (Shokoufandeh et al. } adjacency matrices 1999; Zou, Chen, Yu, et al. 2008), for simple asymmetric { of directed graphs (Macrini et al. 2002), for Laplacian matrices and for adjacency matrices of trees (Shokoufandeh et al. 1999; Zou, Chen, Yu, et al. 2008). It has also has been shown that the spectral code derived from eigenvalues is quite stable under minor topological perturbation (Shokoufandeh et al. 2005). This means that similar graphs will have a similar spectral code, making eigenvalues interesting for graph similarity queries. The sorted vector of the eigenvalues of a graph could be used as a simple index, however this index would grow to an inconvenient size with large graphs (Shokoufandeh et al. 2005). Possible solutions would be truncation at an arbitrary point. This approach is disadvantageous, as it concentrates the index on the rather weaker global aspects of the structure (Shokoufandeh et al. 2005). Shokoufadeh et. al in (Shokoufandeh et al. 2005) create a signature for DAGs by combining local and global signatures under summation: Given a DAG with maximum branching factor of ( ) they sum the ( ) largest absolute eigenvalues for every subgraph and assign this ( )-dimensional vector to the root of the DAG ( ( ) represents the degree of the subgraph). This is done recursively for each non-terminal node in the DAG, assigning a vector to each node. Should a nodes degree be lower than ( ), then the remaining values will be padded with zeros. These computed eigenvalue sums remain still invariant to any isomorphic transformation of the graph (Shokoufandeh et al. 2005). Shokoufadeh et al. 
then store each node signature with a pointer to all member graphs in which it occurs (node signatures of simple subgraphs contained in several members will conceivably occur more than once, hence the need to point to different members). For a given query pattern, its node signatures are computed. Subgraph searches can then build a candidate set by comparing the query's root signature with the stored signatures. Similarity searches can exploit the similarity of eigenvalues (similar graphs have similar eigenvalues) or match substructure signatures.

Zou et al. also use eigenvalues to create signatures of local structures, which they then store in a hierarchical index (Zou, Chen, Yu, et al. 2008). They enumerate all level-n path trees: the level-n path tree of a vertex $v$ is constructed by attaching all paths of length up to $n$ that start at $v$ to a root node representing $v$. This local structure is generated for every vertex of a member graph. A signature of this tree structure is computed from the eigenvalues of its adjacency matrix; in the given algorithm, only the two largest eigenvalues are used to represent the topology of a vertex's local structure. An index code is then constructed for each vertex $v$ as a quadruple $(L(v), N(v), \lambda_1(v), \lambda_2(v))$, where $L(v)$ is a hash of the vertex label, $N(v)$ is a hash of the labels of its neighbours, and $\lambda_1(v)$ and $\lambda_2(v)$ form the eigenvalue signature (largest and second-largest eigenvalue). Graph index codes are then formed as a quadruple $(L, N, \Lambda_1, \Lambda_2)$ by adding the binary hash codes element-wise to build $L$ and $N$, by retaining the $t$ largest $\lambda_1$ values as $\Lambda_1$ and, analogously, the $t$ largest $\lambda_2$ values as $\Lambda_2$. For a database member to enter the candidate set of a subgraph query, its code must be element-wise greater than or equal to the code computed for the query pattern. In addition to storing each graph code with a pointer to its member graph, a dictionary of the computed vertex codes and information on the number of embeddings of each vertex structure in a particular member graph is stored. A binary tree data structure analogous to an S-tree, called GCode-Tree, orders the graph codes hierarchically: the member graphs form the leaves, and each non-terminal node holds the element-wise sum of the hash codes below it. Since a non-terminal node's hash codes dominate those of all its descendants, a branch whose code does not dominate the query code element-wise cannot contain any candidate, so a query can traverse the tree and cut off all such branches. This allows for a more efficient search than a sequential scan of the codes.
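The filtering side of this scheme can be sketched in Python with NumPy. The sketch is a simplification under stated assumptions rather than Zou et al.'s implementation: label hashes are hypothetical one-hot vectors whose bucket is chosen by CRC32 over a hypothetical `HASH_BITS`-wide code, path trees are built as prefix trees over simple paths only, the GCode-Tree is omitted (members are checked one by one), and all function names are hypothetical. Missing second eigenvalues and missing top-$t$ entries are padded with $-\infty$ so that padding never causes a false dismissal. The dominance test is sound because an embedding of the query maps each query path tree onto a subtree of the corresponding member path tree, and by Cauchy interlacing the eigenvalues of a subtree's adjacency matrix cannot exceed those of the full tree.

```python
import zlib
from itertools import count

import numpy as np

HASH_BITS = 16  # hypothetical width of the label hash codes


def label_code(label):
    """Hypothetical label hash: a one-hot vector whose bucket is chosen by CRC32."""
    code = np.zeros(HASH_BITS, dtype=int)
    code[zlib.crc32(label.encode()) % HASH_BITS] = 1
    return code


def path_tree_top2(adj, v, level):
    """Two largest adjacency eigenvalues of the level-`level` path tree of v."""
    edges, ids = [], count(1)            # tree node 0 is the root, i.e. vertex v
    stack = [(v, 0, (v,))]               # (graph vertex, tree node, path so far)
    while stack:
        u, node, path = stack.pop()
        if len(path) - 1 == level:       # path already has `level` edges
            continue
        for w in adj[u]:
            if w not in path:            # assumption: only simple paths are used
                child = next(ids)
                edges.append((node, child))
                stack.append((w, child, path + (w,)))
    size = len(edges) + 1
    a = np.zeros((size, size))
    for p, c in edges:
        a[p, c] = a[c, p] = 1.0
    eig = np.linalg.eigvalsh(a)[::-1]    # eigvalsh sorts ascending; reverse it
    second = eig[1] if size > 1 else -np.inf  # a one-node tree has no second value
    return eig[0], second


def top_t(values, t):
    """The t largest values, padded with -inf so padding never blocks a match."""
    vals = sorted(values, reverse=True)[:t]
    return np.array(vals + [-np.inf] * (t - len(vals)))


def graph_code(labels, adj, level=2, t=2):
    """Graph code (L, N, Lambda1, Lambda2) built from per-vertex signatures."""
    L = np.zeros(HASH_BITS, dtype=int)
    N = np.zeros(HASH_BITS, dtype=int)
    lam1, lam2 = [], []
    for v in adj:
        L += label_code(labels[v])
        for w in adj[v]:
            N += label_code(labels[w])   # element-wise sum of neighbour label hashes
        first, second = path_tree_top2(adj, v, level)
        lam1.append(first)
        lam2.append(second)
    return L, N, top_t(lam1, t), top_t(lam2, t)


def may_contain(member_code, query_code, tol=1e-9):
    """Filter step: the member must dominate the query code element-wise."""
    return all(np.all(m >= q - tol) for m, q in zip(member_code, query_code))
```

With a triangle labelled a, b, c as member and a single edge a-b as query, `may_contain` returns True. A four-vertex query can never pass against that member, because the sum of its label code $L$ exceeds that of a three-vertex member, so element-wise dominance is impossible. The small tolerance guards against floating-point differences between eigenvalues of isomorphic trees built in different orders.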


4 Advanced Implementation Considerations

This chapter looks at some more advanced considerations that can inform and guide implementation decisions. First, it discusses how the overall running time of an algorithm depends on the implementation of its individual steps. Then, architectures and technologies that allow parallel and distributed execution of indexing and filtering algorithms are presented.

4.1 Response Time and Efficiency Considerations

4.1.1 Query Response Time

The primary concern when implementing an indexing scheme is an acceptable query response time (Yan and Han 2010), i.e. a given query needs to be processed within a timeframe that the user finds acceptable. As query processing is divided into steps, the overall query response time can be expressed as the sum of the processing times of these steps (Yan, Yu, and Han 2005a). To recall, these steps are: (1) Search, which enumerates all index features in the query graph and computes the candidate set29, (2) Retrieval of the candidate graphs from storage (i.e. the disk) and (3) Verification, which removes false positives from the candidate set and outputs the query answer set (Yan and Han 2010). The cost model of the query response time can therefore be expressed as:

Formula 5 Query Response Time30
$$T_{\mathrm{search}} + |C_q| \cdot \left(T_{\mathrm{io}} + T_{\mathrm{iso\_test}}\right)$$

In the formula, $T_{\mathrm{search}}$ denotes the processing time of the search step (1), $T_{\mathrm{io}}$ and $T_{\mathrm{iso\_test}}$ denote the average time needed to retrieve (2) and to verify (3) a single candidate graph, and $|C_q|$ is the size of the candidate set of query $q$. This formula can guide the implementation process. The time spent on verifying a candidate, $T_{\mathrm{iso\_test}}$, is roughly fixed for a given query (Yan, Yu, and Han 2005a); therefore, the next best options to reduce the overall query time are to reduce $T_{\mathrm{search}}$ and $T_{\mathrm{io}}$. Both depend heavily on the efficiency of I/O operations: the search step needs to access the index efficiently to compute the candidate set, while the retrieval step needs to fetch the graphs from storage efficiently (Yan, Yu, and Han 2005a). Put succinctly: the faster data can be accessed, the faster the overall query will be. Another important observation is that the two parts of the sum are interdependent: the number of times retrieval and verification operations have to be performed is directly determined by the size of the candidate set (Yan, Yu, and Han 2005a). Improving the filtering strength therefore improves the overall query time, as fewer false positives enter the candidate set (Yan and Han 2010; Yan, Yu, and Han 2005a). The candidate set will, however, always be at least as large as the answer set, so even a perfect filter leaves one verification per answer. This motivates research into indexing techniques that try to avoid verification (Sakr 2009; Yan et al. 2004) or allow verification-free queries (Cheng et al. 2007; Williams et al. 2007).

29 In the cited source, the authors call this set the query answer set; under the definitions established in this thesis, however, it is the candidate set, as it may still contain false positives because no verification has been performed yet.
30 Based on (Yan, Yu, and Han 2005a)

4.1.2 Optimizing search and retrieval

As stated before, both $T_{\mathrm{search}}$ and $T_{\mathrm{io}}$ depend heavily on the efficiency of the I/O process. The retrieval time depends on the efficiency of fetching a member graph from storage (Yan, Yu, and Han 2005a). As this concerns storage and its organization, it is outside the scope of this thesis; therefore, only the efficiency of index access is discussed. Since the efficiency of the search step depends on how efficiently the index can be accessed, index size and storage are essential. As noted in (Yan, Yu, and Han 2005a), accessing an index stored in memory is faster than accessing index files on disk. Therefore, the index should be small enough to fit in memory (Yan, Yu, and Han 2005a). Index size can be reduced through hashing techniques. These techniques were introduced in 3.3 under fingerprints; exemplary uses are (Daylight Chemical Information Systems Inc. 2008; Klein et al. 2011; Williams et al. 2007; Zou, Chen, Yu, et al. 2008). If they are employed, one has to keep in mind that the reduction in size comes at the expense of increased ambiguity of the index entries due to collisions. Despite index-size reduction techniques, it may not be possible to keep the full index of a large database in memory; in this case, disk-based indices cannot be avoided (Tian and Patel 2008). This cost can be mitigated by hybrid approaches that use a memory-based index to reduce the number of accesses to a disk-based index (Cheng et al. 2007). Another way to improve search efficiency is to avoid naïve filter use and instead devise a strategy that optimizes filter selection. Obvious ideas include applying inexpensive heuristics first, such as pruning all candidates that are smaller than the query graph (in substructure search) or all candidates that do not contain the labels occurring in the query pattern (Klein et al. 2011).
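The idea of running inexpensive checks before costly index filters can be sketched in Python. In this sketch the dictionary-based graph representation, the function names and the fixed filter order are hypothetical. The label check is a multiset variant (each query label must occur in the member at least as often as in the query), which is still a necessary condition for substructure containment. Any costlier index-based filter would be appended to the chain.

```python
from collections import Counter


def make_graph(labels, edges):
    """Hypothetical minimal representation: a label per vertex plus an edge set."""
    return {"labels": list(labels), "edges": {frozenset(e) for e in edges}}


def size_filter(member, query):
    # a member with fewer vertices or edges than the query cannot contain it
    return (len(member["labels"]) >= len(query["labels"])
            and len(member["edges"]) >= len(query["edges"]))


def label_filter(member, query):
    # each query label must occur in the member at least as often as in the query
    have, need = Counter(member["labels"]), Counter(query["labels"])
    return all(have[label] >= n for label, n in need.items())


# hypothetical ordering: cheapest checks first, costlier index filters appended later
FILTER_CHAIN = (size_filter, label_filter)


def candidate_set(db, query, extra_filters=()):
    chain = FILTER_CHAIN + tuple(extra_filters)
    # all() stops at the first failing filter, so later (costlier) filters
    # only run on members that survived the cheaper ones
    return [gid for gid, g in db.items() if all(f(g, query) for f in chain)]
```

Because `all()` stops at the first filter that fails, members rejected by the size check never reach the label check or any appended index filter, which is exactly the saving the cheap-first ordering aims for.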
More advanced techniques optimize the composition of the filters used: instead of sequentially testing whether a query pattern contains each index feature, Chen et al. (Chen et al. 2007) impose a hierarchical order on the indexed features according to their ability to quickly prune database members. The hierarchy is stored in a tree structure, and the sequence of features against which the query pattern is tested is determined by the path through the tree. In (Yan, Yu, and Han 2005b), multiple filters are created by clustering features into groups.

4.1.3 Verification Avoidance and Verification-free Techniques

As verification is computationally costly, there is a natural inclination to avoid it. Some indexing techniques therefore try to skip the verification of some candidates by ascertaining, from their index structure or from prior knowledge, that these are indeed valid matches. A number of feature-based algorithms, for example (Cheng et al. 2009, 2007; Yan et al. 2004), do not need to perform any verification if the query pattern is itself an indexed feature, since the list of members stored for that feature is already the exact answer set. GraphREL (Sakr 2009) also performs verification only under special conditions. FG*-Index (Cheng et al. 2009) uses prior knowledge to avoid the verification of some queries: it extends the indexing framework developed in FG-Index (Cheng et al. 2007) with an index of frequently queried non-frequent patterns and their answer sets, called the FAQ-index, thus eliminating the need to verify frequently asked queries. GDIndex (Williams et al. 2007)31 is a technique that does not use verification at all. It exhaustively enumerates all connected, induced subgraphs, derives a canonical code for each of them and stores it. A query pattern is processed in the same way and its canonical code is computed. Matching this canonical code against an indexed entry is sufficient to ascertain isomorphism, which makes an explicit isomorphism test unnecessary. As noted before, this indexing technique is not suited to large graphs because it would create impractically large indices (Sakr and Al-Naymat 2010).

4.2 Parallel and Distributed Execution

As query processing is very workload-intensive, its implementation can be adapted to the environment in which it is employed. For example, a query could be processed by a number of processors (either threads on a single machine or multiple machines), either sequentially, forming a pipeline, or in parallel. Grafil's filtering process (Yan, Yu, and Han 2005b), for example, can be executed either in pipeline mode or in parallel mode. In pipeline mode, the candidate set $C_i$ produced by a filter is fed as input to the next filter in the sequence, which produces the next, potentially smaller, candidate set $C_{i+1} \subseteq C_i$. In parallel mode, all filters are executed at the same time, each producing a candidate set. All candidate sets are then intersected to produce the final candidate set. In pipeline mode, intermediate results can inform the filtering strategy of the following filtering steps; this is impossible in parallel

31 See 3.4 Hierarchical Indexing
