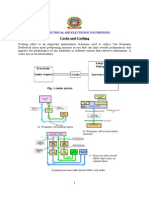

Universitatea Politehnica din Timisoara, Facultatea de Automatica şi Calculatoare

Project for Computer Engineering Fundamentals

Caches in multicore systems

Rotar Calin Viorel, academic year 2010-2011

Introduction

In computer engineering, a cache is a component that transparently stores data so that future requests for that data can be served faster. The data stored in a cache might be values computed earlier, or duplicates of original values stored elsewhere. If the requested data is in the cache (a cache hit), the request can be served simply by reading the cache, which is comparatively fast. Otherwise (a cache miss), the data has to be recomputed or fetched from its original storage location, which is comparatively slow. Hence, the more requests the cache can serve, the faster the overall system performs.

As opposed to a buffer, which is managed explicitly by a client, a cache stores data transparently. A client requesting data from a system is not aware that the cache exists, and this is the origin of the name cache (from the French "cacher", to conceal).

To be cost-efficient and to allow efficient use of data, caches are relatively small. Nevertheless, caches have proven themselves in many areas of computing because access patterns in typical computer applications exhibit locality of reference. References exhibit temporal locality if data that was requested recently is requested again. References exhibit spatial locality if the requested data is stored physically close to data that has already been requested.

Hardware implements a cache as a block of memory for temporary storage of data likely to be used again. CPUs and hard drives frequently use a cache, as do web browsers and web servers. A cache is made up of a pool of entries. Each entry holds a datum (a piece of data), which is a copy of the same datum in some backing store. Each entry also has a tag, which identifies the datum in the backing store of which the entry is a copy.
When the cache client (a CPU, web browser or operating system) needs to access a datum presumed to exist in the backing store, it first checks the cache. If an entry can be found with a tag matching that of the desired datum, the datum in the entry is used instead. This situation is known as a cache hit. For example, a web browser might check its local cache on disk to see whether it has a local copy of the contents of a web page at a particular URL. In this example, the URL is the tag and the contents of the web page are the datum. The percentage of accesses that result in cache hits is known as the hit rate or hit ratio of the cache.

The alternative situation is called a cache miss. In that case the cache is consulted and found not to contain a datum with the desired tag. The previously uncached datum fetched from the backing store during miss handling is usually copied into the cache, ready for the next access. During a cache miss, the cache usually evicts some other entry to make room for the new datum. The heuristic used to select the entry to evict is known as the replacement policy. One popular replacement policy, "least recently used" (LRU), replaces the entry that was used least recently. More elaborate caches weigh use frequency against the size of the stored contents, as well as the latencies and throughputs of both the cache and the backing store. This works well for large amounts of data with long latencies and slow throughputs, such as a hard drive or the Internet. It is not efficient for a CPU cache.

When a system writes a datum to the cache, it must at some point write that datum to the backing store as well. The timing of this write is controlled by the write policy. In a write-through cache, every write to the cache causes a synchronous write to the backing store.
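The lookup, LRU replacement and write-through behaviour described above can be sketched in a few lines of Python. This is a minimal illustration, not a hardware design, and it rests on several assumptions. The cache is fully associative, and its tags are simply the backing-store keys. A Python dict stands in for the backing store. Writes allocate a line in the cache (write-allocate). The class name `LRUCache` is hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Fully associative cache with LRU replacement and a write-through,
    write-allocate policy (hypothetical illustration)."""

    def __init__(self, capacity, backing_store):
        self.capacity = capacity
        self.store = backing_store      # slower backing store, modelled as a dict
        self.entries = OrderedDict()    # tag -> datum, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, tag):
        if tag in self.entries:         # cache hit: serve the datum from the cache
            self.hits += 1
            self.entries.move_to_end(tag)  # now the most recently used entry
            return self.entries[tag]
        self.misses += 1                # cache miss: fetch from the backing store
        datum = self.store[tag]
        self._fill(tag, datum)          # copy it into the cache for the next access
        return datum

    def write(self, tag, datum):
        self.store[tag] = datum         # write-through: update the backing store now
        self._fill(tag, datum)

    def _fill(self, tag, datum):
        if tag not in self.entries and len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        self.entries[tag] = datum
        self.entries.move_to_end(tag)

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


# Usage: a two-entry cache in front of an eight-entry backing store.
store = {addr: addr * 10 for addr in range(8)}
cache = LRUCache(2, store)
for addr in [0, 1, 0, 2, 1]:
    cache.read(addr)
print(cache.hits, cache.misses, cache.hit_ratio())  # 1 4 0.2
```

Reading 2 evicts tag 1, which is the least recently used entry because 0 was just re-read. The final read of 1 therefore misses again.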

Alternatively, in a write-back (or write-behind) cache, writes are not immediately mirrored to the store. Instead, the cache tracks which of its locations have been written over and marks these locations as dirty. The data in these locations is written back to the backing store when it is evicted from the cache, an effect referred to as a lazy write. For this reason, a read miss in a write-back cache (which requires a block to be replaced by another) will often require two memory accesses to service. One access retrieves the needed datum, and the other writes the replaced dirty data from the cache to the store. Other policies may also trigger data write-back. For example, the client may make many changes to a datum in the cache and then explicitly notify the cache to write the datum back.

No-write allocation (also called write-no-allocate) is a cache policy that caches only processor reads, i.e. on a write miss:
* the data is written directly to memory;
* the data at the missed-write location is not added to the cache.
This avoids the need for write-back or write-through when the old value of the datum was absent from the cache prior to the write.

Entities other than the cache may change the data in the backing store, in which case the copy in the cache may become out of date, or stale. Likewise, when the client updates the data in one cache, copies of that data in other caches become stale. Communication protocols between the cache managers that keep the data consistent are known as coherency protocols.

Structure

Cache row entries usually have the following structure:

tag | data blocks | valid bit

The data blocks (the cache line) contain the actual data fetched from main memory. The valid bit denotes that this entry holds valid data. Write-back caches additionally keep a dirty bit per entry, as described above.

An effective memory address is split (from MSB to LSB) into the tag, the index and the displacement (offset):

tag | index | displacement

The index length is $\lceil \log_2(\text{cache\_rows}) \rceil$ bits and describes which row the data has been put in.
The displacement length is $\lceil \log_2(\text{data\_blocks}) \rceil$ bits, where data_blocks is the number of blocks stored in a cache line. It specifies which of the stored blocks we need. The tag length is

$$\text{tag\_length} = \text{address\_length} - \text{index\_length} - \text{displacement\_length}$$

The tag contains the most significant bits of the address. They are checked against the tag of the selected row (the row is found using the index). This shows whether the row holds the location we need or another, irrelevant memory location that happens to have the same index bits.

Associativity

Associativity is a trade-off. Suppose there are ten places to which the replacement policy could have mapped a memory location. Then ten cache entries must be searched to check whether that location is in the cache. Checking more places takes more power, chip area and potentially time. On the other hand, caches with more associativity suffer fewer conflict misses, so the CPU wastes less time reading from slow main memory. The rule of thumb is that doubling the associativity, from direct mapped to 2-way or from 2-way to 4-way, has about the same effect on hit rate as doubling the cache size. Increasing associativity beyond 4-way has much less effect on the hit rate and is generally done for other reasons.

In order of increasing (worse) hit times and decreasing (better) miss rates:
* direct mapped cache: the best (fastest) hit times, and so the best tradeoff for "large" caches
* 2-way set associative cache
* 2-way skewed associative cache: "the best tradeoff for .... caches whose sizes are in the range 4K-8K bytes" (André Seznec)
* 4-way set associative cache
* fully associative cache: the best (lowest) miss rates, and so the best tradeoff when the miss penalty is very high

One of the advantages of a direct mapped cache is that it allows simple and fast speculation. Once the address has been computed, the one cache index that might hold a copy of the datum is known. That cache entry can be read, and the processor can continue working with that data before it finishes checking that the tag actually matches the requested address.

The idea of having the processor use the cached data before the tag match completes can be applied to associative caches as well. A subset of the tag, called a hint, can be used to pick just one of the possible cache entries mapping to the requested address. This datum can then be used in parallel with checking the full tag. The hint technique works best when used in the context of address translation.

Other schemes have been suggested, such as the skewed cache, where the index for way 0 is direct, as above, but the index for way 1 is formed with a hash function. With a good hash function, addresses that conflict under the direct mapping tend not to conflict when mapped with the hash function. A program is therefore less likely to suffer an unexpectedly large number of conflict misses due to a pathological access pattern. The downside is extra latency from computing the hash function.
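Here is a small Python sketch of the tag/index/displacement split from the Structure subsection and the two indexing functions of a 2-way skewed cache. It makes these assumptions:
* row counts and line sizes are powers of two;
* addresses are 32 bits wide;
* lines are byte-addressed, so the displacement selects a byte;
* the XOR-based hash for way 1 is a hypothetical stand-in chosen for simplicity, not the skewing function of any particular published design.

All function names are illustrative.

```python
ADDRESS_BITS = 32  # assumed address width


def field_widths(num_rows, line_bytes, address_bits=ADDRESS_BITS):
    """Return (tag_bits, index_bits, displacement_bits); sizes must be powers of two."""
    index_bits = (num_rows - 1).bit_length()           # log2(num_rows)
    displacement_bits = (line_bytes - 1).bit_length()  # log2(line_bytes)
    return address_bits - index_bits - displacement_bits, index_bits, displacement_bits


def split_address(addr, num_rows, line_bytes):
    """Split addr (MSB to LSB) into (tag, index, displacement)."""
    _, index_bits, displacement_bits = field_widths(num_rows, line_bytes)
    displacement = addr & (line_bytes - 1)
    index = (addr >> displacement_bits) & (num_rows - 1)
    tag = addr >> (displacement_bits + index_bits)
    return tag, index, displacement


def skewed_indices(addr, num_rows, line_bytes):
    """Row index used in way 0 (direct) and way 1 (hashed) of a 2-way skewed cache."""
    tag, index, _ = split_address(addr, num_rows, line_bytes)
    way0 = index                           # conventional direct-mapped index
    way1 = index ^ (tag & (num_rows - 1))  # hypothetical hash: XOR in low tag bits
    return way0, way1


# 64 rows of 64-byte lines: addresses 4 KiB apart collide in way 0 but not in way 1.
print(field_widths(64, 64))               # (20, 6, 6)
print(split_address(0x12345678, 64, 64))  # (74565, 25, 56)
print(skewed_indices(0x0000, 64, 64), skewed_indices(0x1000, 64, 64))  # (0, 0) (0, 1)
```

Any hash that spreads conflicting direct-mapped addresses over different rows would illustrate the same point. The price is the extra latency of computing it on every access.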
A further drawback of the skewed cache appears when it is time to load a new line and evict an old one. It may be difficult to determine which existing line was least recently used, because the new line conflicts with data at different indexes in each way. LRU tracking for non-skewed caches is usually done on a per-set basis. Nevertheless, skewed-associative caches have major advantages over conventional set-associative ones, mainly fewer conflict misses under pathological access patterns.

Multi-level caches

Another issue is the fundamental tradeoff between cache latency and hit rate. Larger caches have better hit rates but longer latency. To address this tradeoff, many computers use multiple levels of cache, with small, fast caches backed up by larger, slower caches. Multi-level caches generally operate by checking the smallest, Level 1 (L1) cache first. If it hits, the processor proceeds at high speed. If the smaller cache misses, the next larger cache (L2) is checked, and so on, before external memory is checked.

As the latency difference between main memory and the fastest cache has grown, some processors have begun to use as many as three levels of cache, increasingly placed on-chip. For example:
* the Alpha 21164 (1995) had a 1 to 64 MB off-chip L3 cache;
* the IBM POWER4 (2001) had a 256 MB off-chip L3 cache shared among several processors;
* the Itanium 2 (2003) had a 6 MB unified on-die L3 cache;
* the Itanium 2 (2003) MX 2 module incorporates two Itanium 2 processors along with a shared 64 MB L4 cache on an MCM that was pin-compatible with a Madison processor;
* Intel's Xeon MP code-named "Tulsa" (2006) features 16 MB of on-die L3 cache shared between two processor cores;
* the AMD Phenom II (2008) has up to 6 MB of on-die unified L3 cache;
* the Intel Core i7 (2008) has an 8 MB on-die unified L3 cache that is inclusive and shared by all cores.
The benefits of an L3 cache depend on the application's access patterns.

Finally, at the other end of the memory hierarchy, the CPU register file itself can be considered the smallest, fastest cache in the system. Its special characteristic is that it is scheduled in software, typically by a compiler as it allocates registers to hold values retrieved from main memory. Register files sometimes also have a hierarchy. The Cray-1 (circa 1976) had 8 address "A" and 8 scalar data "S" registers that were generally usable. There was also a set of 64 address "B" and 64 scalar data "T" registers that took longer to access but were faster than main memory. The "B" and "T" registers were provided because the Cray-1 did not have a data cache. (The Cray-1 did, however, have an instruction cache.)

The use of large multilevel caches can substantially reduce the memory bandwidth demands of a processor. This has made it possible for several (micro)processors to share the same memory through a shared bus. Caching supports both private and shared data. Private data, once cached, is treated exactly as in a uniprocessor. Shared data, in contrast, may be replicated in many caches. Replication has several advantages:
* reduced latency and memory bandwidth requirements;
* reduced contention for data items that are read by multiple processors simultaneously.
However, replication also introduces a problem: cache coherence.

Cache coherence

With multiple caches, one CPU can modify memory at locations that other CPUs have cached. For example:
* CPU A reads location x, getting the value N.
* Later, CPU B reads the same location, getting the value N.
* Next, CPU A writes location x with the value N - 1.
* At this point, any reads from CPU B will get the value N, while reads from CPU A will get the value N - 1.
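The four-step scenario above can be reproduced with two private caches that follow no coherence protocol at all. This sketch assumes write-back caches that hold one value per address, with the concrete value N = 5. The class name `PrivateCache` is hypothetical.

```python
class PrivateCache:
    """A private write-back cache with no coherence protocol (illustration only)."""

    def __init__(self, memory):
        self.memory = memory  # shared main memory, modelled as a dict
        self.lines = {}       # address -> cached value
        self.dirty = set()    # addresses written but not yet written back

    def read(self, addr):
        if addr not in self.lines:            # miss: fetch from shared memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]               # hit: use the private copy

    def write(self, addr, value):
        self.lines[addr] = value              # write-back: memory is not updated yet
        self.dirty.add(addr)


memory = {"x": 5}                             # N = 5
cpu_a, cpu_b = PrivateCache(memory), PrivateCache(memory)
cpu_a.read("x")                               # CPU A reads N
cpu_b.read("x")                               # CPU B reads N
cpu_a.write("x", cpu_a.read("x") - 1)         # CPU A writes N - 1
print(cpu_a.read("x"), cpu_b.read("x"), memory["x"])  # 4 5 5
```

CPU B keeps returning its stale copy. Main memory is also stale until CPU A's dirty line is eventually written back.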
This problem occurs both with write-through caches and (more seriously) with write-back caches. Coherence defines the behavior of reads and writes to the same memory location. Caches are coherent if the following conditions are met:

1. Suppose a processor P reads a location X after writing to X, and no other processor writes to X between P's write and read. Then the read must always return the value written by P. This condition concerns the preservation of program order, and it must hold even in uniprocessor architectures.
2. Suppose a processor P1 reads location X after another processor P2 has written to X, and no other processor writes to X between the two accesses. Then the read must return the value written by P2. This condition defines a coherent view of memory. If processors can still read the old value after the write made by P2, the memory is incoherent.
3. Writes to the same location must be serialized. In other words, suppose location X receives two different values A and B, in this order, from any two processors. Then no processor can ever read location X as B and then read it as A. Location X must be seen with values A and B in that order.

These conditions assume that read and write operations take effect instantaneously. This does not happen in real hardware, because of memory latency and other aspects of the architecture. A write by processor P1 may not be seen by a read from processor P2 if the read is made very shortly after the write. The memory consistency model defines when a written value must be seen by a subsequent read made by the other processors.

Coherence protocols:
* Directory-based coherence: in a directory-based system, the data being shared is placed in a common directory that maintains coherence between caches. The directory acts as a filter through which a processor must ask permission to load an entry from primary memory into its cache. When an entry is changed, the directory either updates or invalidates the other caches holding that entry.
* Snooping: the individual caches monitor the address lines for accesses to memory locations that they have cached. When a cache observes a write to a location of which it holds a copy, the cache controller invalidates its own copy of the snooped memory location.

Write invalidate. This is the most common protocol, both for snooping and for directory schemes. The basic idea is that a write to a location invalidates other caches' copies of the block. Reads by other processors of the invalidated data then cause cache misses. If two processors write at the same time, one wins and obtains exclusive access.

Write broadcast (write update). An alternative is to update all cached copies of a data item when it is written. To reduce bandwidth requirements, this protocol keeps track of whether or not a word in the cache is shared. If it is not, no broadcast is necessary.
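A write-invalidate snooping protocol can be sketched with the three states of the textbook MSI protocol (Modified, Shared, Invalid). The text does not name MSI, but MSI implements exactly the invalidate-on-write idea described above. This is a minimal sketch under simplifying assumptions:
* the bus serializes transactions one at a time, so when two processors write "at the same time", whichever call runs first wins;
* each cache line holds a single word;
* the names `Bus` and `SnoopingCache` are hypothetical.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"  # only valid copy, dirty with respect to memory
    SHARED = "S"    # clean copy; other caches may hold it too
    INVALID = "I"   # no usable copy


class Bus:
    """Shared bus that broadcasts every transaction to all other caches."""

    def __init__(self, memory):
        self.memory = memory
        self.caches = []
        self.transactions = 0

    def read_miss(self, requester, addr):
        self.transactions += 1
        for cache in self.caches:
            if cache is not requester:
                cache.snoop_read(addr)
        return self.memory[addr]  # memory is up to date after any flush

    def invalidate(self, requester, addr):
        self.transactions += 1
        for cache in self.caches:
            if cache is not requester:
                cache.snoop_invalidate(addr)


class SnoopingCache:
    def __init__(self, bus):
        self.bus = bus
        self.lines = {}  # addr -> [State, value]; one word per line
        bus.caches.append(self)

    def state(self, addr):
        return self.lines[addr][0] if addr in self.lines else State.INVALID

    def read(self, addr):
        if self.state(addr) is State.INVALID:  # read miss goes to the bus
            self.lines[addr] = [State.SHARED, self.bus.read_miss(self, addr)]
        return self.lines[addr][1]

    def write(self, addr, value):
        if self.state(addr) is not State.MODIFIED:
            self.bus.invalidate(self, addr)  # obtain exclusive access first
        self.lines[addr] = [State.MODIFIED, value]

    def snoop_read(self, addr):
        if self.state(addr) is State.MODIFIED:  # another cache wants our dirty data
            self.bus.memory[addr] = self.lines[addr][1]  # flush to memory
            self.lines[addr][0] = State.SHARED

    def snoop_invalidate(self, addr):
        if self.state(addr) is State.MODIFIED:
            self.bus.memory[addr] = self.lines[addr][1]  # flush before giving it up
        if addr in self.lines:
            self.lines[addr][0] = State.INVALID


memory = {"x": 5}
bus = Bus(memory)
cpu_a, cpu_b = SnoopingCache(bus), SnoopingCache(bus)
cpu_a.read("x")
cpu_b.read("x")
cpu_a.write("x", 4)      # invalidates CPU B's copy
print(cpu_b.read("x"))   # 4: the miss forces CPU A to flush its dirty line
```

While a line stays in state M, further writes by its owner generate no bus traffic. Only the first write to a shared line costs an invalidation.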
Performance Differences between Bus Snooping Protocols

Write invalidate is much more popular, primarily because of performance differences:
* Under write broadcast, multiple writes to the same word with no intervening reads require multiple broadcasts. Write invalidate needs only one initial invalidation.
* With multiword cache blocks, write broadcast requires a broadcast for each word written. Under write invalidate, only the first write to any word in the block causes an invalidation. In other words, write invalidate works on blocks, while write broadcast must work on individual words or bytes.
* The delay between a write by one processor and a read by another is lower in the write broadcast scheme, because under write invalidate the read causes a miss.
Bus and memory bandwidth are the resources most in demand in a bus-based multiprocessor, so write invalidate generally performs better.

* Snarfing: a cache controller watches both address and data in an attempt to update its own copy of a memory location when a second master modifies that location in main memory. When a cache observes a write to a location of which it holds a copy, the cache controller updates its own copy of the snarfed memory location with the new data.

Conclusion

Cache memory is a vital element in allowing programs to run faster, including as parallel threads of execution on multicore processors. The key condition for success is that the cores sharing data keep a coherent view of it, so that operations on shared locations are performed correctly.

