International Scientific Conference Computer Science 2011

A Novel Approach of Implementing an Optimal k-Means Plus Plus Algorithm for Scalar Data

Angel Sinigersky¹, Vladimir Daskalov¹, Chavdar Georgiev¹, Dr. Stanislav Dimov²

¹ FE-DESIGN, G. Bradistilov 6, 1700 Sofia, Bulgaria, angel.sinigersky@fe-design.de

² Technical University of Sofia, Sofia, Bulgaria, stani@tu-sofia.bg

Abstract: Cluster analysis is a popular statistical technique for finding groups of similar items within an unknown data set. Based on the popular iterative algorithm k-means for clustering n-dimensional data, we introduce a specialization of k-means for scalar data sets. The general k-means algorithm typically uses the Euclidean distance to define similarity between data points. Industrial implementations of that general algorithm use kd-trees to navigate efficiently through the data. In comparison, scalar data can be sorted, which makes considerably more efficient storage and processing possible. In this paper we propose a novel method for initializing the k-means iterative process when clustering scalar data. Similar in spirit to k-means++, our new initial seeding technique leads to better convergence and improved quality of the clustering results.

Keywords: k-means, improvement, initial seeding, first centroids selection, clustering, scalar data.

1. INTRODUCTION

Clustering of data is widely used in many application fields, including machine learning, data mining, pattern recognition, and image analysis. In engineering, clustering can be the first step in reducing data sets. The need to improve the run-time of engineering calculation software required the refinement of an existing data reduction algorithm for scalar data. This challenge motivated the development of the novel method presented in this paper.

Clustering involves dividing a set of data points into non-overlapping groups, called clusters, such that points in a cluster are more similar to one another than to points in other clusters. The term "more similar", when applied to clustered data, usually means closer by some measure of proximity. When a data set is clustered, every point is assigned to some cluster, and every cluster can be characterized by a single reference point (centroid), usually the average of the points in the cluster. When clustering is used for data reduction, the coordinates of each point in a cluster are replaced with the coordinates of that cluster's centroid. The value of a particular clustering method depends on how well the centroids represent the data as well as on how fast the program runs.

The standard measure of the spread of a group of points about its centroid is the deviation, i.e. the sum of the squared distances between each point and its cluster's centroid. A generalization of the deviation is used in cluster analysis to indicate the overall quality of a partitioning, and it can be used to compare the accuracy of the centroids calculated by different algorithms. Specifically, the error measure $E$ is the sum of all deviations (one for each of the $k$ clusters):

$$E = \sum_{j=1}^{k} \sum_{i=1}^{n_j} \lVert x_{ij} - c_j \rVert^2$$

where $x_{ij}$ is the $i$-th original data point in the $j$-th cluster, $c_j$ is the proposed centroid of the $j$-th cluster, and $n_j$ is the number of points in that cluster. The notation $\lVert x_{ij} - c_j \rVert$ stands for the distance between $x_{ij}$ and $c_j$. The error measure $E$ indicates the overall spread of the data points about their assumed centroids. To achieve a high-quality clustering, $E$ should be as small as possible.

In this paper we discuss only the clustering of one-dimensional data sets, similar to the test data sets described at the end of this paper. In the method proposed here, the initial centroids are objects of the data set which have the minimal distance to the other objects in the same cluster.

2. THE ALGORITHM K-MEANS

The clustering algorithm k-means [2] is an iterative method for partitioning data points into $k$ clusters, where each point belongs to the cluster with the nearest mean. The algorithm begins with an initialization step, in which $k$ initial cluster centroids are chosen. Each point is then assigned to the nearest centroid, and each centroid is recomputed as the arithmetic mean of all points assigned to it. The goal is to choose the centroids such that $E$, the sum of all deviations, is minimized. These two steps, assignment and centroid recalculation, are repeated until the process converges. Convergence is indicated by a clustering that no longer changes from one iteration to the next. The main algorithm is subdivided into the following steps:

A. Choose $k$ data points from the data set being clustered. These points represent the initial group of centroids.

B. Assign each remaining data point to the cluster with the nearest centroid.

C. When all objects have been assigned, recalculate the positions of the centroids.

D. Repeat steps B and C until the process converges.

The total error $E$ decreases monotonically, which ensures that no clustering is repeated during the iterations of the algorithm. In practice, very few iterations are usually required, which makes the algorithm much faster than most of its competitors.
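For one-dimensional data, steps B to D and the error measure $E$ can be sketched as below. This is a minimal Python illustration under our own assumptions, not the implementation used in this study: the function names `lloyd_scalar` and `error_measure` are hypothetical, step A is left to the caller, and a cluster that becomes empty simply keeps its previous centroid. Because the data are scalar, step B reduces to a binary search over the midpoints between neighbouring sorted centroids.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple


def error_measure(points: Sequence[float], centroids: Sequence[float],
                  labels: Sequence[int]) -> float:
    """Error measure E: sum of squared distances to the assigned centroids."""
    return sum((x - centroids[j]) ** 2 for x, j in zip(points, labels))


def lloyd_scalar(points: Sequence[float], initial: Sequence[float],
                 max_iter: int = 1000) -> Tuple[List[float], List[int], int]:
    """Steps B to D of k-means for scalar data (hypothetical helper).

    Returns (sorted centroids, cluster index of each point, update rounds).
    """
    centroids = sorted(initial)  # step A (seeding) is done by the caller
    labels: List[int] = []
    rounds = 0
    while True:
        # Step B: in 1-D the boundary between two neighbouring centroids is
        # their midpoint, so the nearest centroid is found by binary search.
        mids = [(a + b) / 2.0 for a, b in zip(centroids, centroids[1:])]
        new_labels = [bisect_left(mids, x) for x in points]
        # Step D: stop once the clustering no longer changes (or at the cap).
        if new_labels == labels or rounds == max_iter:
            return centroids, new_labels, rounds
        labels = new_labels
        # Step C: recompute each centroid as the mean of its points;
        # an empty cluster keeps its previous centroid (our assumption).
        sums = [0.0] * len(centroids)
        counts = [0] * len(centroids)
        for x, j in zip(points, labels):
            sums[j] += x
            counts[j] += 1
        centroids = sorted(s / n if n else c
                           for s, n, c in zip(sums, counts, centroids))
        rounds += 1
```

For example, `lloyd_scalar([1.0, 2.0, 3.0, 10.0, 11.0, 12.0], [1.0, 2.0])` converges after two update rounds to the centroids 2.0 and 11.0, for which `error_measure` gives $E = 4$.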
Unfortunately, the k-means algorithm has at least two major shortcomings. First, it has been shown that its worst-case running time is super-polynomial in the input size. Second, the approximation found can be arbitrarily bad with respect to the objective function, compared to the optimal clustering. An improved version of the k-means algorithm, called k-means++ [1], addresses these disadvantages by initializing the cluster centroids in a special way before proceeding with the standard k-means iterations. With the k-means++ initialization, the algorithm is guaranteed to find a solution that is, in expectation, $O(\log k)$-competitive with the optimal k-means solution. The simplicity and speed of the modified method are very appealing in practice. Specifically, k-means++ chooses the first centroid uniformly at random and then chooses each further centroid as a point $x$ with probability proportional to $x$'s contribution to the overall potential, i.e. to $D(x)^2$, the squared distance from $x$ to the nearest centroid already chosen. The clustering then proceeds exactly as in the original k-means.
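The k-means++ seeding described above can be sketched for scalar data as follows. This is a minimal Python illustration, not the reference implementation of [1]: the function name `kmeanspp_seed` is hypothetical, and stopping early when every point already coincides with a chosen centroid (fewer distinct values than $k$) is our own assumption. Each new centroid requires a full pass over the data to update $D(x)^2$, so this sketch costs $O(nk)$ time for $n$ points and $k$ centroids, which may help explain why the k-means++ seeding time grows quickly with the number of requested clusters.

```python
import random
from typing import List, Optional, Sequence


def kmeanspp_seed(points: Sequence[float], k: int,
                  rng: Optional[random.Random] = None) -> List[float]:
    """k-means++ seeding (D^2 sampling) for scalar data (hypothetical helper)."""
    rng = rng or random.Random()
    centroids = [rng.choice(points)]  # first centroid: uniformly at random
    # d2[i] = squared distance from points[i] to its nearest chosen centroid
    d2 = [(x - centroids[0]) ** 2 for x in points]
    while len(centroids) < k:
        if sum(d2) == 0.0:  # every point already coincides with a centroid
            break
        # next centroid: chosen with probability proportional to D(x)^2
        c = rng.choices(points, weights=d2, k=1)[0]
        centroids.append(c)
        # one pass over the data per new centroid to refresh D(x)^2
        d2 = [min(d, (x - c) ** 2) for d, x in zip(d2, points)]
    return centroids
```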

3. NEW METHOD FOR IMPROVED FIRST SEEDING

Our study addresses possible performance gains through the specialization of k-means++ for scalar-valued data sets. Such data sets can easily be ordered, and thus an efficient clustering algorithm can be derived. In the following description it is assumed that the data points are represented by a list of values $x_1, x_2, \ldots, x_n$, sorted in ascending order.

The basic idea of our method is to choose the initial centroids among the input data points so that their initial positions are as close as possible to their final positions. The refined starting condition allows the iterative algorithm to converge to a better local minimum. Another effect is improved convergence: the number of iterations of the main algorithm is decreased significantly. The new algorithm for initial seeding consists of the following steps:

A. Choose $k$ initial centroids. Exclude all duplicate values from the clustering. Check whether the number of requested clusters is greater than the number of data points; if so, return all points as centroids. Otherwise, divide the data set into $k$ preliminary clusters, each defined by a lower and an upper bound, for instance as follows. The lower bound of the first cluster is the first data point $x_1$. The upper bound of the last cluster is the last data point $x_n$. The upper bound of the $j$-th cluster is calculated as follows:

The lower bound of each subsequent cluster is the first data point found after the upper bound of the preceding cluster. Within each preliminary cluster, every data point is considered a centroid candidate. For each candidate, the sum of squared distances between it and all other points within the same cluster is calculated. The data point associated with the minimal sum is taken as the best initial centroid for the cluster. The algorithm then proceeds with steps B, C and D of the original k-means algorithm:

B. Assign each remaining data point to the cluster with the nearest centroid.

C. When all objects have been assigned, recalculate the positions of the centroids.

D. Repeat steps B and C until the process converges.

The scalar property of the data points is exploited in several ways. First, the distance calculation simplifies to a subtraction of floating-point numbers. Second, a preliminary clustering like the one presented above can easily be implemented. It relies entirely on the order of the data points and would not be possible in an efficient way with vector-valued data points.

It should be pointed out that the initial subdivision into preliminary clusters can also be based not on bounds within the domain of the data values, but on ranges of the index (the position in the sorted list). This approach requires an additional algorithm for creating preliminary clusters of similar size. Such a variant delivers preliminary clusters which do not depend on the data itself. A version of the novel initialization like this was implemented and tested in our study, but its details are omitted due to space limitations.
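Step A of the new seeding can be sketched in Python as follows. This is a minimal, hedged illustration rather than the authors' implementation: the function names `seed_scalar` and `best_candidate` are hypothetical, and as a hypothetical choice for the upper-bound formula the sketch assumes that the preliminary clusters split the value range $[x_1, x_n]$ into $k$ intervals of equal width. Intervals that contain no data point are skipped, so fewer than $k$ centroids may be returned. The candidate search uses the identity $\sum_{i} (x_i - c)^2 = m\,(c - \bar{x})^2 + \sum_{i} (x_i - \bar{x})^2$ for a preliminary cluster of $m$ points with mean $\bar{x}$: the candidate with the minimal sum of squared distances is the one closest to the cluster mean, so each candidate is evaluated in constant time.

```python
from bisect import bisect_right
from typing import List, Sequence


def best_candidate(seg: Sequence[float]) -> float:
    """Point of seg with the minimal sum of squared distances to all of seg.

    Since sum((x - c)**2) = m*(c - mean)**2 + const, minimizing the sum over
    the candidates c is the same as picking the candidate closest to the mean.
    """
    mean = sum(seg) / len(seg)
    return min(seg, key=lambda c: (c - mean) ** 2)


def seed_scalar(values: Sequence[float], k: int) -> List[float]:
    """Initial centroids from preliminary clusters (hypothetical helper, k >= 1)."""
    xs = sorted(set(values))  # ascending order, duplicate values excluded
    n = len(xs)
    if k >= n:  # at least as many clusters as distinct points: return all
        return xs
    # ASSUMPTION: equal-width preliminary clusters between xs[0] and xs[-1].
    width = (xs[-1] - xs[0]) / k
    centroids: List[float] = []
    lo = 0  # index of the lower bound of the current preliminary cluster
    for j in range(1, k + 1):
        # index just past the upper bound; the last cluster ends at xs[-1]
        hi = n if j == k else bisect_right(xs, xs[0] + j * width, lo)
        if hi > lo:  # skip preliminary clusters that contain no data point
            centroids.append(best_candidate(xs[lo:hi]))
        lo = hi  # next lower bound: first data point after the upper bound
    return centroids
```

For example, `seed_scalar([1.0, 2.0, 3.0, 10.0, 11.0, 12.0, 3.0], 2)` returns `[2.0, 11.0]`, which for this small example already coincides with the final centroids of steps B to D.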

4. EVALUATION AND RESULTS

The proposed seeding method was tested and compared to the conventional k-means algorithm as well as to the k-means++ variant. The test data sets contain 100,000 uniformly distributed random floating-point values each, of which between 31,199 and 31,308 are distinct, depending on the case. The three algorithms are compared in eight cases corresponding to different data reduction ratios. Three algorithm characteristics are compared:

- Run-time: the time consumed by the step of choosing the initial centroids out of the points in the data set.
- Deviation: the achieved error measure $E$, calculated as the sum of all deviations, i.e. the sum of the squared distances between each point and its cluster's centroid.
- Iterations: the number of iterations needed by the core k-means algorithm to converge.

The comparison results are listed in Tab. 1 at the end of this section. A discussion of the graphical representation of these results follows.

4.1. Run-time

The run-time required for seeding is plotted against the number of requested clusters in Fig. 1. The standard k-means algorithm exhibits a moderate increase of the seeding run-time as the number of clusters grows. In comparison, the seeding effort of k-means++ grows much faster, which makes that method unsuitable for our needs. Our new algorithm performs the initial seeding so efficiently that the delay of the initialization process becomes practically negligible for any number of clusters.

Fig. 1: Run-time of the seeding versus number of requested clusters (run-time in ms; 1000 to 30000 requested clusters; curves: k-means, k-means++, improved)

Among the three methods under test, k-means++ exhibits the slowest seeding. Since Fig. 1 documents only the run-time of the seeding, the figure does not show how the total run-time of the clustering behaves. k-means++ does pay off when used for vector-valued data and when the number of requested clusters is small compared to the size of the original data set. For scalar data, however, it can hardly compete with the novel method presented in this paper in terms of run-time.

4.2. Deviation

The error measures achieved by the three algorithms are compared in Fig. 2. The error measure is calculated as the sum of all deviations of the resulting clustering. A property common to all algorithms is that the error measure increases as the number of requested clusters decreases. When extensive data reduction is required (cases 1 to 3 in Tab. 1, i.e. 1000 to 5000 requested clusters), the new algorithm delivers a seeding that leads to the most accurate clustering among the three.

Fig. 2: Error measure (deviation) versus number of requested clusters (1000 to 30000 requested clusters; curves: k-means, k-means++, improved)

4.3. Number of iterations

Fig. 3 compares the number of k-means iterations for the three algorithms under consideration. The figure shows that our new algorithm consistently leads to the lowest number of core k-means iterations (tied with k-means++ only in case 8). As the iterative process usually consumes most of the time within the entire clustering procedure, this property is the most valuable one for our application case of scalar data. This positive result can be explained as follows: the points chosen as initial centroids by the new algorithm are closer to the final positions of the centroids of every cluster, so fewer iterations are required for the k-means iterative process to converge.

Fig. 3: Number of k-means iterations versus number of requested clusters (1000 to 30000 requested clusters; curves: k-means, k-means++, improved)

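Tab. 1: Data values for each case (k-means, k-means++, and our new seeding algorithm)

| Case | Num. distinct | Num. centroids | k-means run-time (s) | k-means deviation | k-means iterations | k-means++ run-time (s) | k-means++ deviation | k-means++ iterations | New seeding run-time (s) | New seeding deviation | New seeding iterations |
|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|
| 1 | 31307 | 1000 | 0.001 | 26.71531 | 69 | 0.699 | 24.8535 | 26 | 0.057 | 24.50479 | 17 |
| 2 | 31199 | 2000 | 0.003 | 13.37371 | 35 | 1.447 | 12.2650 | 17 | 0.035 | 12.1512 | 11 |
| 3 | 31229 | 5000 | 0.018 | 5.36059 | 21 | 3.502 | 4.7460 | 8 | 0.023 | 4.71363 | 7 |
| 4 | 31210 | 10000 | 0.071 | 2.58015 | 11 | 6.995 | 2.2234 | 5 | 0.02 | 2.24168 | 4 |
| 5 | 31308 | 15000 | 0.153 | 1.54658 | 7 | 10.492 | 1.3801 | 4 | 0.021 | 1.44284 | 3 |
| 6 | 31259 | 20000 | 0.26 | 0.91778 | 4 | 13.895 | 0.8355 | 3 | 0.02 | 0.96107 | 1 |
| 7 | 31217 | 25000 | 0.391 | 0.4349 | 4 | 17.501 | 0.38203 | 2 | 0.022 | 0.537 | 1 |
| 8 | 31214 | 30000 | 0.544 | 0.0646 | 2 | 21.224 | 0.0549 | 1 | 0.022 | 0.1072 | 1 |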

5. SUMMARY

In this paper, a new initialization technique is presented for the common k-means clustering algorithm. The new method is a specialization for scalar-valued data sets. It exploits the scalar property to efficiently seed the initial centroids that are passed to the k-means iterative process. The novel seeding technique itself has a low run-time compared to the standard k-means and to k-means++. The resulting seeding significantly improves the convergence of the iterative clustering process, and the quality of the clustering result improves as well.

6. REFERENCES

[1] Arthur, D., Vassilvitskii, S. (2007) k-means++: the advantages of careful seeding. Proceedings of the eighteenth annual ACM-SIAM symposium on Discrete algorithms, 1027-1035.

[2] Lloyd, S. P. (1982) Least Squares Quantization in PCM. IEEE Trans. Information Theory 28, 129-137.

57

Você também pode gostar

- Koine GreekDocumento226 páginasKoine GreekΜάριος Αθανασίου100% (6)

- Solution Manual For Modern Quantum Mechanics 2nd Edition by SakuraiDocumento13 páginasSolution Manual For Modern Quantum Mechanics 2nd Edition by Sakuraia440706299Ainda não há avaliações

- Heat & Mass Transfer PDFDocumento2 páginasHeat & Mass Transfer PDFabyabraham_nytro50% (6)

- Cisco Ccna Icnd PPT 2.0 OspfDocumento15 páginasCisco Ccna Icnd PPT 2.0 OspfAMIT RAJ KAUSHIKAinda não há avaliações

- Math 11-CORE Gen Math-Q2-Week 1Documento26 páginasMath 11-CORE Gen Math-Q2-Week 1Christian GebañaAinda não há avaliações

- Power Window Four Windows: Modul ControlsDocumento2 páginasPower Window Four Windows: Modul ControlsHery IswantoAinda não há avaliações

- Performance Evaluation of K-Means Clustering Algorithm With Various Distance MetricsDocumento5 páginasPerformance Evaluation of K-Means Clustering Algorithm With Various Distance MetricsNkiru EdithAinda não há avaliações

- Compressor Anti-Surge ValveDocumento2 páginasCompressor Anti-Surge ValveMoralba SeijasAinda não há avaliações

- Seafloor Spreading TheoryDocumento16 páginasSeafloor Spreading TheoryMark Anthony Evangelista Cabrieto100% (1)

- Analysis&Comparisonof Efficient TechniquesofDocumento5 páginasAnalysis&Comparisonof Efficient TechniquesofasthaAinda não há avaliações

- An Initial Seed Selection AlgorithmDocumento11 páginasAn Initial Seed Selection Algorithmhamzarash090Ainda não há avaliações

- Dynamic Approach To K-Means Clustering Algorithm-2Documento16 páginasDynamic Approach To K-Means Clustering Algorithm-2IAEME PublicationAinda não há avaliações

- PkmeansDocumento6 páginasPkmeansRubén Bresler CampsAinda não há avaliações

- KNN VS KmeansDocumento3 páginasKNN VS KmeansSoubhagya Kumar SahooAinda não há avaliações

- Ijcet: International Journal of Computer Engineering & Technology (Ijcet)Documento11 páginasIjcet: International Journal of Computer Engineering & Technology (Ijcet)IAEME PublicationAinda não há avaliações

- Clustering Techniques and Their Applications in EngineeringDocumento16 páginasClustering Techniques and Their Applications in EngineeringIgor Demetrio100% (1)

- A Novel Approach For Data Clustering Using Improved K-Means Algorithm PDFDocumento6 páginasA Novel Approach For Data Clustering Using Improved K-Means Algorithm PDFNinad SamelAinda não há avaliações

- Comparative Analysis of K-Means and Fuzzy C-Means AlgorithmsDocumento5 páginasComparative Analysis of K-Means and Fuzzy C-Means AlgorithmsFormat Seorang LegendaAinda não há avaliações

- K-Means ClusteringDocumento8 páginasK-Means ClusteringAbeer PareekAinda não há avaliações

- K Means ClusteringDocumento6 páginasK Means ClusteringAlina Corina BalaAinda não há avaliações

- Discovering Knowledge in Data: Lecture Review ofDocumento20 páginasDiscovering Knowledge in Data: Lecture Review ofmofoelAinda não há avaliações

- An Effective Evolutionary Clustering Algorithm: Hepatitis C Case StudyDocumento6 páginasAn Effective Evolutionary Clustering Algorithm: Hepatitis C Case StudyAhmed Ibrahim TalobaAinda não há avaliações

- An Improved K-Means Clustering AlgorithmDocumento16 páginasAn Improved K-Means Clustering AlgorithmDavid MorenoAinda não há avaliações

- Ijert Ijert: Enhanced Clustering Algorithm For Classification of DatasetsDocumento8 páginasIjert Ijert: Enhanced Clustering Algorithm For Classification of DatasetsPrianca KayasthaAinda não há avaliações

- Create List Using RangeDocumento6 páginasCreate List Using RangeYUKTA JOSHIAinda não há avaliações

- Unit 3 & 4 (p18)Documento18 páginasUnit 3 & 4 (p18)Kashif BaigAinda não há avaliações

- K-Means Clustering Clustering Algorithms Implementation and ComparisonDocumento4 páginasK-Means Clustering Clustering Algorithms Implementation and ComparisonFrankySaputraAinda não há avaliações

- Efficient K-Means Clustering Algorithm Using Feature Weight and Min-Max NormalizationDocumento4 páginasEfficient K-Means Clustering Algorithm Using Feature Weight and Min-Max NormalizationRoopamAinda não há avaliações

- KMEANSDocumento9 páginasKMEANSjohnzenbano120Ainda não há avaliações

- Assignment 5Documento3 páginasAssignment 5Pujan PatelAinda não há avaliações

- SQLDM - Implementing K-Means Clustering Using SQL: Jay B.SimhaDocumento5 páginasSQLDM - Implementing K-Means Clustering Using SQL: Jay B.SimhaMoh Ali MAinda não há avaliações

- A New Method For Dimensionality Reduction Using K-Means Clustering Algorithm For High Dimensional Data SetDocumento6 páginasA New Method For Dimensionality Reduction Using K-Means Clustering Algorithm For High Dimensional Data SetM MediaAinda não há avaliações

- The International Journal of Engineering and Science (The IJES)Documento4 páginasThe International Journal of Engineering and Science (The IJES)theijesAinda não há avaliações

- 4 ClusteringDocumento9 páginas4 ClusteringBibek NeupaneAinda não há avaliações

- Data Clustering Using Kernel BasedDocumento6 páginasData Clustering Using Kernel BasedijitcajournalAinda não há avaliações

- Ijret 110306027Documento4 páginasIjret 110306027International Journal of Research in Engineering and TechnologyAinda não há avaliações

- Normalization Based K Means Clustering AlgorithmDocumento5 páginasNormalization Based K Means Clustering AlgorithmAntonio D'agataAinda não há avaliações

- I Jsa It 04132012Documento4 páginasI Jsa It 04132012WARSE JournalsAinda não há avaliações

- Research Papers On K Means ClusteringDocumento5 páginasResearch Papers On K Means Clusteringtutozew1h1g2100% (3)

- ZaraDocumento47 páginasZaraDavin MaloreAinda não há avaliações

- A Fast K-Means Implementation Using CoresetsDocumento10 páginasA Fast K-Means Implementation Using CoresetsHiinoAinda não há avaliações

- AK-means: An Automatic Clustering Algorithm Based On K-MeansDocumento6 páginasAK-means: An Automatic Clustering Algorithm Based On K-MeansMoorthi vAinda não há avaliações

- Storage Technologies: Digital Assignment 1Documento16 páginasStorage Technologies: Digital Assignment 1Yash PawarAinda não há avaliações

- A Dynamic K-Means Clustering For Data Mining-DikonversiDocumento6 páginasA Dynamic K-Means Clustering For Data Mining-DikonversiIntanSetiawatiAbdullahAinda não há avaliações

- Zhong - 2005 - Efficient Online Spherical K-Means ClusteringDocumento6 páginasZhong - 2005 - Efficient Online Spherical K-Means ClusteringswarmbeesAinda não há avaliações

- TCSOM Clustering Transactions Using Self-Organizing MapDocumento13 páginasTCSOM Clustering Transactions Using Self-Organizing MapAxo ZhangAinda não há avaliações

- Simple K MeansDocumento3 páginasSimple K MeansSrisai KrishnaAinda não há avaliações

- Cluster Center Initialization Algorithm For K-Means ClusteringDocumento10 páginasCluster Center Initialization Algorithm For K-Means ClusteringmauricetappaAinda não há avaliações

- OPTICS: Ordering Points To Identify The Clustering StructureDocumento12 páginasOPTICS: Ordering Points To Identify The Clustering StructureqoberifAinda não há avaliações

- Assignment ClusteringDocumento22 páginasAssignment ClusteringNetra RainaAinda não há avaliações

- Lecture14 NotesDocumento9 páginasLecture14 NoteschelseaAinda não há avaliações

- A Comparative Study of K-Means, K-Medoid and Enhanced K-Medoid AlgorithmsDocumento4 páginasA Comparative Study of K-Means, K-Medoid and Enhanced K-Medoid AlgorithmsIJAFRCAinda não há avaliações

- Parallel MS-Kmeans Clustering Algorithm Based On MDocumento18 páginasParallel MS-Kmeans Clustering Algorithm Based On MjefferyleclercAinda não há avaliações

- Experiment No 7Documento9 páginasExperiment No 7Aman JainAinda não há avaliações

- Data Mining and Machine Learning PDFDocumento10 páginasData Mining and Machine Learning PDFBidof VicAinda não há avaliações

- An Efficient Incremental Clustering AlgorithmDocumento3 páginasAn Efficient Incremental Clustering AlgorithmWorld of Computer Science and Information Technology JournalAinda não há avaliações

- An Introduction To Clustering MethodsDocumento8 páginasAn Introduction To Clustering MethodsmagargieAinda não há avaliações

- Sine Cosine Based Algorithm For Data ClusteringDocumento5 páginasSine Cosine Based Algorithm For Data ClusteringAnonymous lPvvgiQjRAinda não há avaliações

- A Two Step Clustering Method For Mixed Categorical and Numerical DataDocumento9 páginasA Two Step Clustering Method For Mixed Categorical and Numerical Dataaparna_yedlaAinda não há avaliações

- Attack Detection by Clustering and Classification Approach: Ms. Priyanka J. Pathak, Asst. Prof. Snehlata S. DongreDocumento4 páginasAttack Detection by Clustering and Classification Approach: Ms. Priyanka J. Pathak, Asst. Prof. Snehlata S. DongreIjarcsee JournalAinda não há avaliações

- A Dynamic K-Means Clustering For Data MiningDocumento6 páginasA Dynamic K-Means Clustering For Data MiningelymolkoAinda não há avaliações

- Efficient Data Clustering With Link ApproachDocumento8 páginasEfficient Data Clustering With Link ApproachseventhsensegroupAinda não há avaliações

- Journal of Computer Applications - WWW - Jcaksrce.org - Volume 4 Issue 2Documento5 páginasJournal of Computer Applications - WWW - Jcaksrce.org - Volume 4 Issue 2Journal of Computer ApplicationsAinda não há avaliações

- JETIR1503025Documento4 páginasJETIR1503025EdwardAinda não há avaliações

- A Genetic K-Means Clustering Algorithm Based On The Optimized Initial CentersDocumento7 páginasA Genetic K-Means Clustering Algorithm Based On The Optimized Initial CentersArief YuliansyahAinda não há avaliações

- DynamicclusteringDocumento6 páginasDynamicclusteringkasun prabhathAinda não há avaliações

- Project 2 Clustering Algorithms: Team Members Chaitanya Vedurupaka (50205782) Anirudh Yellapragada (50206970)Documento15 páginasProject 2 Clustering Algorithms: Team Members Chaitanya Vedurupaka (50205782) Anirudh Yellapragada (50206970)vedurupakaAinda não há avaliações

- Python Machine Learning for Beginners: Unsupervised Learning, Clustering, and Dimensionality Reduction. Part 1No EverandPython Machine Learning for Beginners: Unsupervised Learning, Clustering, and Dimensionality Reduction. Part 1Ainda não há avaliações

- Open Mapping Theorem (Functional Analysis)Documento3 páginasOpen Mapping Theorem (Functional Analysis)Silambu SilambarasanAinda não há avaliações

- HKV-8 Valve Catalog SPLRDocumento128 páginasHKV-8 Valve Catalog SPLRCabrera RodriguezAinda não há avaliações

- Reading Report Student's Name: Leonel Lipa Cusi Teacher's Name: Maria Del Pilar, Quintana EspinalDocumento2 páginasReading Report Student's Name: Leonel Lipa Cusi Teacher's Name: Maria Del Pilar, Quintana EspinalleonellipaAinda não há avaliações

- Fiitjee Fiitjee Fiitjee Fiitjee: Fortnightly Assessment QuizDocumento8 páginasFiitjee Fiitjee Fiitjee Fiitjee: Fortnightly Assessment QuizIshaan BagaiAinda não há avaliações

- ECON4150 - Introductory Econometrics Lecture 2: Review of StatisticsDocumento41 páginasECON4150 - Introductory Econometrics Lecture 2: Review of StatisticsSaul DuranAinda não há avaliações

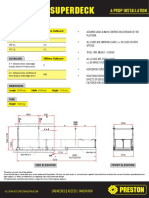

- SuperDeck All ModelsDocumento12 páginasSuperDeck All Modelsarthur chungAinda não há avaliações

- Contoh Pembentangan Poster Di ConferenceDocumento1 páginaContoh Pembentangan Poster Di ConferenceIka 1521Ainda não há avaliações

- Assignments CHSSCDocumento7 páginasAssignments CHSSCphani12_chem5672Ainda não há avaliações

- Prob AnswersDocumento4 páginasProb AnswersDaniel KirovAinda não há avaliações

- Topic: Partnership: Do Not Distribute - Highly Confidential 1Documento7 páginasTopic: Partnership: Do Not Distribute - Highly Confidential 1Tharun NaniAinda não há avaliações

- A Generic Circular BufferDocumento3 páginasA Generic Circular BufferSatish KumarAinda não há avaliações

- Cell Biology: Science Explorer - Cells and HeredityDocumento242 páginasCell Biology: Science Explorer - Cells and HeredityZeinab ElkholyAinda não há avaliações

- Flexible Perovskite Solar CellsDocumento31 páginasFlexible Perovskite Solar CellsPEDRO MIGUEL SOLORZANO PICONAinda não há avaliações

- Decompiled With CFR ControllerDocumento3 páginasDecompiled With CFR ControllerJon EricAinda não há avaliações

- 2017 Alcon Catalogue NewDocumento131 páginas2017 Alcon Catalogue NewJai BhandariAinda não há avaliações

- SampleDocumento43 páginasSampleSri E.Maheswar Reddy Assistant ProfessorAinda não há avaliações

- Fixed Frequency, 99% Duty Cycle Peak Current Mode Notebook System Power ControllerDocumento44 páginasFixed Frequency, 99% Duty Cycle Peak Current Mode Notebook System Power ControllerAualasAinda não há avaliações

- Concept Note For The Conversion of 75 TPHDocumento2 páginasConcept Note For The Conversion of 75 TPHMeera MishraAinda não há avaliações

- TractionDocumento26 páginasTractionYogesh GurjarAinda não há avaliações

- Mechanical Damage and Fatigue Assessment of Dented Pipelines Using FeaDocumento10 páginasMechanical Damage and Fatigue Assessment of Dented Pipelines Using FeaVitor lopesAinda não há avaliações

- Sodium Borohydride Reduction of CyclohexanoneDocumento6 páginasSodium Borohydride Reduction of CyclohexanoneIqmal HakimiAinda não há avaliações

- Ali Math Competition 3 English Reference SolutionsDocumento11 páginasAli Math Competition 3 English Reference SolutionsJEREMIAH ITCHAGBEAinda não há avaliações