TCP Veno and Veno II

Enviado por

Manju ManjuDescrição original:

Direitos autorais

Formatos disponíveis

Compartilhar este documento

Compartilhar ou incorporar documento

Você considera este documento útil?

Este conteúdo é inapropriado?

Denunciar este documentoDireitos autorais:

Formatos disponíveis

TCP Veno and Veno II

Enviado por

Manju ManjuDireitos autorais:

Formatos disponíveis

TCP Veno and Veno II

Thanks to C. P. Fu:

This report gave me a comprehensive review and appreciation of Veno.

Prepared By: Nie Zheng

November 6, 2006


Abstract

Wireless access networks are widely used in everyday life, but they suffer from random packet loss caused by bit errors. VoIP, the routing of voice conversations over the Internet, is likewise challenged by packet loss and jitter. As IPv4 gives way to IPv6, what will the next generation of today's transport layer (SCTP, TCP and UDP) look like? We argue for Veno and Veno II. In this report we review Veno, compare it with Reno and Vegas, and show how it significantly improves the TCP layer; we also examine its role in streaming congestion control and its contribution to DCCP; finally, we look ahead to the next generation of Veno, Veno II, which we consider an excellent choice to enhance both IPv4 and IPv6.

Introduction and fundamentals of TCP Veno

TCP Veno is a novel end-to-end congestion control scheme proposed by Franklin Fu of The Chinese University of Hong Kong in 1998. It improves TCP performance significantly over heterogeneous networks and is a simple, effective way to deal with random packet loss. Its key idea is that it not only monitors the congestion level of the network but also uses that information to distinguish between the two causes of packet loss: congestion and random bit errors. Veno then reacts differently to each kind of loss. Whereas Reno applies the same multiplicative decrease regardless of the cause of a loss, Veno adjusts the slow-start threshold according to the estimated congestion level rather than by a fixed drop factor. It also refines Reno's additive increase algorithm so that network bandwidth can be fully utilized. Another advantage is deployability and compatibility: Veno needs only a simple modification at the sender side of the Reno protocol stack. Veno can achieve significant throughput improvements without adversely affecting other concurrent TCP connections in the same network; it effectively taps unused bandwidth without hogging bandwidth at the expense of other TCP connections.
Veno handles wireless networks very well, and its throughput improvement can reach 80%. No change to the receiver-side protocol stack is needed, only a modification of Reno at the sender, so Veno can be deployed in server applications over the current Internet, coexisting with Reno. The rest of this report is organized as follows. "TCP Veno algorithm and mechanism" explains the mechanism and techniques in detail. "Veno's evaluation and contribution" compares Reno, Vegas and Veno based on testbed experiments and live Internet measurements. "Streaming congestion control in DCCP" briefly introduces the techniques and purpose of DCCP. "Veno application in DCCP" describes how Veno can be applied to DCCP and the advantages of doing so. "TCP Veno in future communication" discusses the trend toward Veno II, summarized as Veno II = TCP Veno + DCCP Veno. The last part looks ahead to the future of communication supported by a Veno II layer.

TCP Veno algorithm and mechanism

A fundamental problem that remains unsolved for TCP is how to distinguish random loss from congestion loss. TCP Veno uses a state-distinguishing scheme and integrates it into the congestion window evolution of TCP Reno. Part of the method is borrowed from Vegas: the sender measures the so-called Expected and Actual rates,

$$\text{Expected} = \frac{\text{cwnd}}{\text{BaseRTT}}, \qquad \text{Actual} = \frac{\text{cwnd}}{\text{RTT}},$$

where cwnd is the current congestion window size, BaseRTT is the minimum measured round-trip time, and RTT is the smoothed measured round-trip time. Denoting the backlog at the bottleneck queue by N, we have

$$\text{RTT} = \text{BaseRTT} + \frac{N}{\text{Actual}},$$

so N can be estimated as

$$N = \text{Actual} \times (\text{RTT} - \text{BaseRTT}) = \text{Diff} \times \text{BaseRTT}, \qquad \text{Diff} = \text{Expected} - \text{Actual}.$$

Vegas tries to keep N within a small range of values; this proactive adjustment avoids packet loss but also makes Vegas less aggressive than Reno. Veno instead uses N only as an indication of the network's congestion state, and keeps Reno's behaviour of progressively increasing the window as long as no packet loss occurs. If N is greater than or equal to a threshold β, the network is considered congestive; otherwise it is considered non-congestive.

The other issue Veno addresses is how to react to loss. Veno refines only Reno's additive increase (AI) and multiplicative decrease (MD) phases; initial slow start, fast retransmit, fast recovery, the computation of the retransmission timeout, and the backoff algorithm are unaffected. The resulting congestion window (cwnd) evolution is more efficient.

Additive Increase Algorithm: Reno maintains a window threshold parameter, ssthresh. When cwnd is below ssthresh, slow start is used to adjust cwnd. When cwnd is above ssthresh, cwnd increases by one packet per RTT rather than per ACK; in practice cwnd is set to cwnd + 1/cwnd on every ACK received, which yields this linear growth.
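The backlog estimate N and the congestive-state test defined above can be sketched in a few lines of Python. This is a minimal illustration, not Veno's actual implementation: the function names are hypothetical, RTTs are in seconds and windows in packets, and the threshold value BETA = 3 packets is an assumption (the text above does not state a value).

```python
# Minimal sketch of Veno's Vegas-style backlog estimate (hypothetical helper names).
# Units: cwnd in packets, RTTs in seconds.

BETA = 3.0  # assumed congestion threshold, in packets


def estimate_backlog(cwnd: float, base_rtt: float, rtt: float) -> float:
    """Estimate N, the number of this flow's packets queued at the bottleneck."""
    expected = cwnd / base_rtt   # Expected rate: throughput if nothing were queued
    actual = cwnd / rtt          # Actual rate: throughput at the measured RTT
    diff = expected - actual
    return diff * base_rtt       # equals Actual * (RTT - BaseRTT) = cwnd * (1 - BaseRTT/RTT)


def is_congestive(n: float, beta: float = BETA) -> bool:
    """Veno's state test: congestive if N >= beta, non-congestive otherwise."""
    return n >= beta


if __name__ == "__main__":
    # Hypothetical path: true BaseRTT = 100 ms, smoothed RTT = 125 ms, cwnd = 20 packets.
    n_true = estimate_backlog(20, 0.100, 0.125)
    # Same path, but BaseRTT overestimated as 120 ms because the queue never drained.
    n_over = estimate_backlog(20, 0.120, 0.125)
    print(f"N = {n_true:.2f}, congestive = {is_congestive(n_true)}")
    print(f"N = {n_over:.2f}, congestive = {is_congestive(n_over)}")
```

With the correct BaseRTT the estimate is 4 packets (congestive); with an overestimated BaseRTT the same path yields about 0.8 packets and is classified as non-congestive, which is the underestimation effect discussed in the evaluation.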
Veno refines this further, using the backlog threshold β. In the AI phase:

IF (N < β) THEN set cwnd = cwnd + 1/cwnd for every new ACK
ELSE IF (N ≥ β) THEN set cwnd = cwnd + 1/cwnd for every other new ACK

Multiplicative Decrease Algorithm: Reno uses an algorithm called fast retransmit to detect packet loss. When the receiver receives an out-of-order packet, it keeps sending duplicate ACKs for the last in-order packet, asking the sender to retransmit the missing one; after three duplicate ACKs the sender treats that packet as lost.

The algorithm adopted by Reno is:

1) Retransmit the missing packet; set ssthresh = cwnd / 2 and cwnd = ssthresh + 3.
2) Each time another duplicate ACK arrives, increment cwnd by one packet.
3) When the next ACK acknowledging new data arrives, set cwnd to ssthresh (the value from step 1).

Veno refines it as follows. In the MD phase (again with threshold β):

1) Retransmit the missing packet, and
   IF (N < β) THEN set ssthresh = cwnd × 4/5
   ELSE IF (N ≥ β) THEN set ssthresh = cwnd × 1/2;
   then set cwnd = ssthresh + 3.
2) Each time another duplicate ACK arrives, increment cwnd by one packet.
3) When the next ACK acknowledging new data arrives, set cwnd to ssthresh (the value from step 1).

When the connection is not in a congestive state, Veno assumes the loss is random and reduces ssthresh only to 4/5 of cwnd (a reduction of 1/5). When the connection is in a congestive state, Veno treats the loss as a congestion loss and halves ssthresh, as Reno does.

Veno's evaluation and contribution

Here we focus on the experiments conducted by C. P. Fu and examine Veno's advantages over Reno. The topology used by C. P. Fu is shown below.

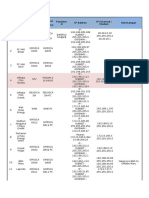

In the simulation, Veno and Reno TCP connections share the bottleneck link between R1 and R2 with cross traffic. The bottleneck link uses a FIFO queuing discipline, and the links between R2 and all TCP sinks act as wireless links. Aggregated Pareto cross-traffic sources with shape parameter 1.7 (< 2) produce long-range-dependent (LRD) traffic [11]. The setup is parameterized by the buffer size of the bottleneck link between R1 and R2, the one-way propagation delay of the bottleneck link (excluding transmission time and queuing delay), and the bottleneck capacity B. Each parameter applies to both the forward and backward directions. Let Cl denote the cross-traffic load (i.e., the approximate fraction of bottleneck capacity used by cross traffic). Cl and the random loss rate over the wireless links are varied to evaluate Veno under different network conditions. Each experiment lasts 500 seconds.

In this scenario, the packet size of both the Veno and the Reno connection is 1460 bytes. The bottleneck one-way propagation delay, capacity B and buffer size are 60 ms, 4 Mbps and 28, respectively, and the buffer size of all other links is 50 by default. The links between the TCP sources and R1 have 10 Mbps capacity and 0.1 ms one-way propagation delay in both directions. The wireless links between R2 and the TCP sinks have 2 Mbps capacity and 0.01 ms one-way propagation delay in both directions.

Let $P_{N \ge \beta \mid C}$ denote the fraction of congestion losses that Veno classifies correctly (N ≥ β when the loss is detected), and $P_{N < \beta \mid W}$ the fraction of random wireless losses it classifies correctly (N < β). Table 1, Table 2 and Table 3 summarize the results for Cl of 30%, 50% and 70%, respectively. We make three observations:

1) $P_{N \ge \beta \mid C}$ decreases and $P_{N < \beta \mid W}$ increases as Cl increases.
2) With Cl held constant, $P_{N < \beta \mid W}$ increases and $P_{N \ge \beta \mid C}$ fluctuates as the random loss rate increases.
3) When the network is heavily congested (Cl = 70%), the ability of the Vegas-derived scheme to distinguish congestion losses ($P_{N \ge \beta \mid C}$) is seriously weakened.
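The two accuracy measures used in these observations can be computed from a simulation trace in which each loss is labelled with its true cause. The sketch below is illustrative only: `LossEvent` and `classification_rates` are hypothetical names, BETA = 3 packets is an assumed threshold, and the sample trace is made up rather than taken from the tables.

```python
# Sketch: estimating Veno's loss-classification accuracy from labelled loss events.
# LossEvent and classification_rates are hypothetical names for illustration.
from dataclasses import dataclass
from typing import Iterable, Tuple

BETA = 3.0  # assumed backlog threshold, in packets


@dataclass
class LossEvent:
    backlog: float     # Veno's estimate of N when the loss was detected
    congestion: bool   # ground truth: True = buffer overflow, False = wireless bit error


def classification_rates(events: Iterable[LossEvent], beta: float = BETA) -> Tuple[float, float]:
    """Return (P(N >= beta | C), P(N < beta | W)); NaN if a class has no events."""
    events = list(events)
    cong = [e for e in events if e.congestion]
    wire = [e for e in events if not e.congestion]
    # Fraction of congestion losses seen while Veno believed the path was congestive.
    p_c = sum(e.backlog >= beta for e in cong) / len(cong) if cong else float("nan")
    # Fraction of wireless losses seen while Veno believed the path was non-congestive.
    p_w = sum(e.backlog < beta for e in wire) / len(wire) if wire else float("nan")
    return p_c, p_w


if __name__ == "__main__":
    # Toy, made-up trace: two congestion losses and two wireless losses.
    trace = [LossEvent(4.2, True), LossEvent(1.0, True),
             LossEvent(0.5, False), LossEvent(3.5, False)]
    print(classification_rates(trace))
```

Read this way, a value of 0.15 for the first measure means roughly one correctly classified congestion loss in every 1/0.15 ≈ 6.7 congestion losses.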

1. Referring to the definition in [3], the backlog can be written as cwnd × (1 − BaseRTT/RTT). When a TCP Veno connection is established while the network is already heavily loaded by cross traffic, BaseRTT may be overestimated, so the term (1 − BaseRTT/RTT) decreases. Consequently the backlog, and hence the congestion state of the network, is underestimated. Moreover, since the Veno connection occupies only a small fraction of the network capacity (about 15% of the bottleneck capacity), its impact on the congestion level is insignificant. This implies that BaseRTT may remain overestimated even after Veno reduces its congestion window to lower its rate, because the network still cannot be driven out of the congestive state. The increasing trend of $P_{N < \beta \mid W}$ can be explained as follows. A random loss that occurs while the network is in a congestive state (at least β packets are queued in the bottleneck buffer and the buffer has not overflowed) will be regarded as a congestion loss. As Cl increases, underestimation of the network congestion state worsens the accuracy of congestion loss distinguishing; by the same mechanism, fewer random losses are regarded as congestion losses, so $P_{N < \beta \mid W}$ rises.

2. With Cl held constant, $P_{N < \beta \mid W}$ increases and $P_{N \ge \beta \mid C}$ fluctuates as the random loss rate increases. More frequent random losses, to which Veno must react, give Veno less chance to raise its sending rate and drive the network into a congestive state, and thus reduce the chance of identifying a random loss as a congestion loss. This trend is more pronounced under relatively small Cl (30%, 50%) than under extremely large Cl (70%), because, as discussed above, a TCP flow that uses a very small fraction of network capacity has minimal influence on the network state. Heavy cross-traffic load together with random loss results in a small $P_{N \ge \beta \mid C}$ and a large $P_{N < \beta \mid W}$.

3. $P_{N \ge \beta \mid C}$ decreases as Cl increases. In the extreme case where Cl is 70% and the random loss rate is 0, $P_{N \ge \beta \mid C}$ is 0.15. This implies that of roughly every 7 congestion losses, only one is accurately classified, while the other 6 are misdiagnosed as random losses; correspondingly, the congestion window is reduced by only 1/5. In such a case, one might expect a Veno connection to be very aggressive because of its low accuracy in distinguishing congestion losses, and to grab much bandwidth from the co-existing Reno connection.
However, we found that even in this extreme case Veno keeps its compatibility: it continues to work friendly with Reno without stealing bandwidth from it. Under extremely heavy load, Veno's congestion window is already heavily restricted by frequent congestion losses, so the difference between reducing the window by 1/2 and by 1/5 is insignificant. For example, in Figure 3, when Cl is 70%, the average congestion window of the Veno connection is only about 5.1, while that of the Reno connection is 4.9. The simulation results show that packet loss distinguishing in Veno performs well in networks with light and moderate cross traffic; in environments with heavy cross traffic, however, Veno should not rely on the congestion detection scheme borrowed from Vegas and should find a better replacement if possible. Nonetheless, Veno's overall performance remains good when it competes with other co-existing connections. This is attributable to the complete set of signals Veno monitors, namely packet loss (detected by duplicate ACKs) plus the supplementary congestion-state estimate, and to window reduction decisions based on these combined signals.
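To make the window arithmetic concrete, the following toy model implements Veno's AI and MD rules as described in the algorithm section. It is a sketch under stated assumptions, not the Linux or ns-2 Veno code: `VenoSender` and its method names are hypothetical, BETA = 3 packets is an assumed threshold, and the RTT values in the usage example are invented; only the window of 5.1 packets comes from the Figure 3 example.

```python
# Toy model of Veno's sender-side window rules (AI and MD refinements).
# Hypothetical class; units are packets and seconds.
from dataclasses import dataclass

BETA = 3.0  # assumed backlog threshold, in packets


@dataclass
class VenoSender:
    cwnd: float
    ssthresh: float
    base_rtt: float = float("inf")  # minimum RTT observed so far
    skip_next: bool = False         # toggles "every other ACK" growth in congestive state

    def backlog(self, rtt: float) -> float:
        """Update BaseRTT and return N = cwnd * (1 - BaseRTT / RTT)."""
        self.base_rtt = min(self.base_rtt, rtt)
        return self.cwnd * (1.0 - self.base_rtt / rtt)

    def on_new_ack(self, rtt: float) -> None:
        n = self.backlog(rtt)
        if self.cwnd < self.ssthresh:
            self.cwnd += 1.0                  # slow start: unchanged from Reno
        elif n < BETA:
            self.cwnd += 1.0 / self.cwnd      # non-congestive: Reno AI on every new ACK
        else:
            if not self.skip_next:            # congestive: grow on every other new ACK
                self.cwnd += 1.0 / self.cwnd
            self.skip_next = not self.skip_next

    def on_third_dup_ack(self, rtt: float) -> None:
        """MD step 1: choose ssthresh by congestion state, then inflate as Reno does."""
        factor = 4 / 5 if self.backlog(rtt) < BETA else 1 / 2
        self.ssthresh = self.cwnd * factor
        self.cwnd = self.ssthresh + 3

    def on_extra_dup_ack(self) -> None:
        self.cwnd += 1.0                      # MD step 2: unchanged from Reno

    def on_recovery_ack(self) -> None:
        self.cwnd = self.ssthresh             # MD step 3: unchanged from Reno


if __name__ == "__main__":
    # Window from the Cl = 70% example; RTT values are hypothetical.
    random_loss = VenoSender(cwnd=5.1, ssthresh=64.0, base_rtt=0.1)
    random_loss.on_third_dup_ack(rtt=0.1)     # N = 0 < BETA: treated as random loss
    congestion = VenoSender(cwnd=5.1, ssthresh=64.0, base_rtt=0.1)
    congestion.on_third_dup_ack(rtt=0.5)      # N = 4.08 >= BETA: treated as congestion loss
    print(round(random_loss.ssthresh, 2), round(congestion.ssthresh, 2))
```

With a window of about 5.1 packets, the two reductions leave ssthresh at roughly 4.08 and 2.55 packets, a gap of only about 1.5 packets, which is consistent with the argument above that misclassification costs little when the window is already small.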

Streaming congestion control (TFRC) in DCCP

DCCP, the Datagram Congestion Control Protocol, provides a congestion-controlled flow of unreliable datagrams. Delay-sensitive applications such as streaming media have historically used UDP and implemented their own congestion control mechanisms, which is a difficult task. DCCP aims to make such applications easy to deploy. Its designers set out to provide a simple, minimal congestion control protocol on which other higher-level protocols could be built, and called it the Datagram Congestion Control Protocol. They argued that a new transport protocol was needed, one that combines unreliable datagram delivery with built-in congestion control. Such a protocol acts as an enabling technology: new and existing applications can use it to transfer timely data easily without destabilizing the Internet.

Choice of congestion control mechanism. While these applications are usually able to adjust their transmission rate based on congestion feedback, they have constraints on how this adaptation can be performed so as to minimize the effect on quality. Thus they tend to need some control over the short-term dynamics of the congestion control algorithm, while remaining fair to other traffic on medium timescales. This control includes influence over which congestion control algorithm is used; for example, TFRC [8] rather than strict TCP-like congestion control. It would also be possible to modify TCP or SCTP to provide unreliable semantics, or to modify RTP to provide congestion control. TCP seems particularly inappropriate, given its byte-stream semantics and reliance on cumulative acknowledgements; changing its semantics to this extent would significantly complicate TCP implementations and cause serious confusion at firewalls or monitoring systems. SCTP [18] is a better match, as it was originally designed with packet-based semantics and out-of-order delivery in mind.

However, the overlap with DCCP's requirements is partial at best: SCTP is hardly minimal, provides functionality that is unnecessary for many of the applications that might use DCCP, and does not currently allow the negotiation of different congestion control semantics. Adding congestion control to RTP [21] seems a reasonable option for audio/video applications. However, the issues with UDP mostly apply to RTP as well, and RTP carries too many application semantics to form a general-purpose building block for the future. Carrying RTP over DCCP is a cleaner separation of functionality.

We may also require that the adopted scheme be a TCP-Friendly Rate Control protocol. It must satisfy the following requirements:

1) Do not aggressively seek out available bandwidth; that is, increase the sending rate slowly in response to a decrease in the loss event rate.
2) Do not halve the sending rate in response to a single loss event, but do halve it in response to several successive loss events.
3) The receiver should report feedback to the sender at least once per round-trip time if it has received any packets in that interval.
4) If the sender has not received feedback after several round-trip times, it should reduce its sending rate, and ultimately stop sending altogether.

We can see that Veno can serve this purpose well, so it should be suitable for streaming congestion control applications.
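TFRC meets the smoothness requirements above by computing its allowed sending rate from a TCP throughput equation instead of halving its rate on every loss. The minimal sketch below evaluates the equation as specified for TFRC in RFC 3448 (later RFC 5348); the defaults t_RTO = 4·RTT and b = 1 follow our reading of that specification and should be checked against it. It only illustrates the kind of rate formula TFRC uses; it is not part of Veno, and `tfrc_rate` is a hypothetical function name.

```python
# Sketch of the TCP throughput equation that TFRC uses to set its sending rate.
import math
from typing import Optional


def tfrc_rate(s: float, rtt: float, p: float, b: int = 1,
              t_rto: Optional[float] = None) -> float:
    """Allowed sending rate X in bytes per second.

    s: packet size in bytes; rtt: round-trip time in seconds;
    p: loss event rate in (0, 1]; b: packets acknowledged per ACK (assumed default 1);
    t_rto: retransmission timeout in seconds (assumed default 4 * rtt).
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("loss event rate p must be in (0, 1]")
    if t_rto is None:
        t_rto = 4.0 * rtt
    denom = (rtt * math.sqrt(2.0 * b * p / 3.0)
             + t_rto * (3.0 * math.sqrt(3.0 * b * p / 8.0)) * p * (1.0 + 32.0 * p ** 2))
    return s / denom


if __name__ == "__main__":
    # Hypothetical path: 1460-byte packets, 100 ms RTT.
    for p in (0.001, 0.01, 0.05):
        print(f"p = {p:<5}  X = {tfrc_rate(1460, 0.1, p) * 8 / 1e6:.2f} Mbit/s")
```

Because the loss event rate p is an average over recent loss intervals, the computed rate falls gradually as losses accumulate rather than being cut in half by a single loss.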

TCP Veno in future communication

Veno has three big advantages for future networks.

Deployability. For any improved TCP to be worthwhile, it must be easy to deploy over the existing Internet. Therefore, before modifying the legacy TCP design, we must ask: is this modification amenable to easy deployment in real networks? Ideally, little or preferably no change should be required at intermediate routers. Of the two communicating end hosts, it is preferable that only one side needs changes, so that the party interested in the enhanced performance can adopt the new algorithm without requiring or forcing all its peers to do so. Generally, it is preferable to modify the sender that sends large volumes of data to many receivers (e.g., a Web server) rather than the receivers. Because Veno only requires a simple modification to the TCP sender stack, it is easily deployable in real networks: any party interested in the enhanced performance can adopt it single-handedly by installing the Veno stack.

Compatibility. Compatibility refers to whether a newly introduced TCP is compatible with legacy TCP, in the sense that it causes no detrimental effects to legacy TCP, and vice versa, when both run concurrently in the same network. Veno coexists harmoniously with Reno without stealing bandwidth from it; its improvement comes from more efficient use of the available bandwidth.

Flexibility. Flexibility refers to whether a TCP variant can deal effectively with a range of different environments. It is difficult to declare categorically that one TCP version is flexible or not. We can say, however, that Veno is more flexible than Reno: it deals better with random loss in wireless networks, alleviates the suffering in asymmetric networks [1], and has comparable performance in wired networks.
To date, much work has focused on accurately identifying which packet losses are due to congestion and which are due to bit errors or other non-congestion causes. Because of the many uncertain factors in real networks (e.g., changing background traffic along the connection path), progress on this kind of judgment appears very slow. It may be more meaningful and practical for a TCP connection to differentiate between congestive drops (occurring in a congestive state) and non-congestive drops (occurring in a non-congestive state). Veno adopts this differentiation to sidestep the problem of identifying the type of each individual packet loss. This issue will be studied further in future work.

Veno II = TCP Veno + DCCP Veno. Veno's strong performance at both the TCP layer and the DCCP layer suggests that it could be developed further into Veno II, which could provide excellent support for the next-generation Internet. We believe Veno II has the potential to become an independent platform supporting Internet services in the near future.

Reference list:

1. C. P. Fu, S. C. Liew, "TCP Veno: TCP Enhancement for Transmission over Wireless Access Networks," IEEE Journal on Selected Areas in Communications (JSAC), Feb. 2003.
2. C. L. Zhang, C. P. Fu, et al., "Dynamics Comparison of TCP Reno and Veno," IEEE Globecom, 2004.
3. Z. X. Zou, B. S. Lee, C. P. Fu, "Packet Loss and Congestion State in TCP Veno," IEEE ICON, 2004.
4. C. B. Fu, J. L. Wang, C. P. Fu, K. Zhang, "Performance Study of TCP Veno in Wireless/Asymmetric Links," International Conference on Cyberworlds, 2004.
5. C. P. Fu, W. Lu, B. S. Lee, "TCP Veno Revisited," IEEE Globecom, 2003.
6. Q. X. Pan, S. C. Liew, C. P. Fu, W. Wang, "Performance Study of TCP Veno over Wireless LAN and RED Router," IEEE Globecom, 2003.
7. L. Chung, C. P. Fu, S. C. Liew, "Improvements Achieved by SACK Employing TCP Veno Equilibrium-Oriented Mechanism over Lossy Networks," IEEE EUROCON, 2001.
8. Y. Liu, C. P. Fu, et al., "An Enhancement of Multicast Congestion Control over Hybrid Wired/Wireless Networks," IEEE WCNC, 2004.
9. B. Zhou, C. P. Fu, "A Simple Throughput Model for TCP Veno," IEEE ICC, 2006.
10. Dr. Fu's thesis defense slides.
11. Z. X. Zhou's presentation at IEEE ICON, 2004.
