Large-scale Parallel Computing of Cloud Resolving Storm Simulator

Kazuhisa Tsuboki$^1$ and Atsushi Sakakibara$^2$

1 Hydrospheric Atmospheric Research Center, Nagoya University

Furo-cho, Chikusa-ku, Nagoya, 464-8601 JAPAN

phone: +81-52-789-3493, fax: +81-52-789-3436

e-mail: tsuboki@ihas.nagoya-u.ac.jp

2 Research Organization for Information Science and Technology

Furo-cho, Chikusa-ku, Nagoya, 464-8601 JAPAN

phone: +81-52-789-3493, fax: +81-52-789-3436

e-mail: atsusi@ihas.nagoya-u.ac.jp

Abstract. A severe thunderstorm is composed of strong convective clouds. Simulating this type of storm requires a very fine grid system that resolves individual convective clouds within a large domain. Because convective clouds are highly complicated systems governed by cloud dynamics and microphysics, detailed cloud physical processes must be formulated along with the fluid dynamics. The computation therefore needs a huge memory and large-scale parallel computing. For this type of computation, we have developed a cloud resolving numerical model named the Cloud Resolving Storm Simulator (CReSS). In this paper, we describe the basic formulations and characteristics of CReSS in detail. We also show some results of numerical experiments of storms obtained by large-scale parallel computation using CReSS.

1 Introduction

A numerical cloud model is indispensable both for understanding clouds and precipitation and for forecasting them. Convective clouds and their organized storms are highly complicated systems determined by fluid dynamics and cloud microphysics. To simulate the evolution of a convective cloud storm, the calculation should be performed in a large domain with a very high resolution grid that resolves individual clouds. Cloud physical processes must also be formulated accurately, as must the fluid dynamic and thermodynamic processes. A detailed formulation of cloud physics requires many prognostic variables even in a bulk method, such as cloud, rain, ice, snow, and hail. Consequently, large-scale parallel computing with a huge memory is necessary for this type of simulation.

Cloud models have been developed and used for the study of cloud dynamics and cloud microphysics since the 1970s (e.g., Klemp and Wilhelmson, 1978 [1]; Ikawa, 1988 [2]; Ikawa and Saito, 1991 [3]; Xue et al., 1995 [4]; Grell et al., 1994 [5]). These models employed non-hydrostatic and compressible equation systems with a fine-grid system. Since the computational cost of cloud models was very large, they have been used for research with a limited domain.

Recent progress in high performance computers, especially parallel computers, is widely extending the potential of cloud models. It enables us to perform a simulation of a mesoscale storm using a cloud model. For the past four years, we have been developing a cloud resolving numerical model designed for parallel computers, including the Earth Simulator.

The purposes of this study are to develop the cloud resolving model and its parallel computing in order to simulate convective clouds and their organized storms. Thunderstorms, which are organizations of convective clouds, produce many types of severe weather: heavy rain, hailstorms, downbursts, tornadoes, and so on. The simulation of thunderstorms will clarify the characteristics of their dynamics and evolution and will contribute to mesoscale storm prediction.

The cloud resolving model that we are now developing is named the Cloud Resolving Storm Simulator (CReSS). In this paper, we describe the basic formulation and characteristics of CReSS in detail. Some results of numerical experiments using CReSS are also presented.

2 Description of CReSS

2.1 Basic Equations and Characteristics

The coordinate system of CReSS is Cartesian in the horizontal ($x$, $y$) and a terrain-following curvilinear coordinate in the vertical, to include the effect of orography. Using the height of the model surface $z_s(x, y)$ and the top height $z_t$, the vertical coordinate $\zeta(x, y, z)$ is defined as

$$\zeta(x, y, z) = \frac{z_t\,[z - z_s(x, y)]}{z_t - z_s(x, y)}. \qquad (1)$$

If we use a vertically stretched grid, the effect is included in (1). Computation in CReSS is performed in the rectangular linear coordinate transformed from the curvilinear coordinate. The transformed velocity vector is

$$U = u, \qquad (2)$$

$$V = v, \qquad (3)$$

$$W = \frac{u J_{31} + v J_{32} + w}{G^{1/2}}, \qquad (4)$$

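where the variable components of the transform matrix are defined as

$$J_{31} = -\frac{\partial z}{\partial x} = \left(\frac{\zeta}{z_t} - 1\right)\frac{\partial z_s(x, y)}{\partial x}, \qquad (5)$$

$$J_{32} = -\frac{\partial z}{\partial y} = \left(\frac{\zeta}{z_t} - 1\right)\frac{\partial z_s(x, y)}{\partial y}, \qquad (6)$$

$$J_d = \frac{\partial z}{\partial \zeta} = 1 - \frac{z_s(x, y)}{z_t}, \qquad (7)$$

and the Jacobian of the transformation is

$$G^{1/2} = |J_d| = J_d. \qquad (8)$$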
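As a minimal sketch of Eqs. (1) and (4)-(8), the Python functions below evaluate the terrain-following coordinate and its metric terms at a single point. The function names and the sample values (a 500 m hill under a 15 km model top, a 5 % slope) are illustrative assumptions and are not taken from CReSS.

```python
def zeta_from_z(z, z_s, z_t):
    """Terrain-following coordinate of Eq. (1)."""
    return z_t * (z - z_s) / (z_t - z_s)


def z_from_zeta(zeta, z_s, z_t):
    """Inverse of Eq. (1): physical height of a zeta level."""
    return z_s + zeta * (z_t - z_s) / z_t


def metric_terms(zeta, z_s, dzs_dx, dzs_dy, z_t):
    """Return (J31, J32, Jd) following Eqs. (5)-(7)."""
    j31 = (zeta / z_t - 1.0) * dzs_dx
    j32 = (zeta / z_t - 1.0) * dzs_dy
    j_d = 1.0 - z_s / z_t  # equals G^(1/2) by Eq. (8)
    return j31, j32, j_d


def transformed_w(u, v, w, j31, j32, j_d):
    """Vertical velocity W in the transformed coordinate, Eq. (4)."""
    return (u * j31 + v * j32 + w) / j_d


# Hypothetical sample values: 15 km model top, 500 m terrain, 5 % slope in x.
z_t, z_s, slope_x = 15000.0, 500.0, 0.05
j31, j32, j_d = metric_terms(0.0, z_s, slope_x, 0.0, z_t)

# Flow that follows the terrain at the surface (w = u * slope) gives W = 0.
u = 10.0
W_surface = transformed_w(u, 0.0, u * slope_x, j31, j32, j_d)
```

The last two lines illustrate why the transformed velocity is convenient: air that moves parallel to the sloping ground has no velocity component across the lowest coordinate surface.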

In this coordinate system, the governing equations of dynamics in CReSS are formulated as follows. The dependent variables of dynamics are the three-dimensional velocity components $u$, $v$ and $w$, the perturbation pressure $p'$ and the perturbation of potential temperature $\theta'$. For convenience, we use the following variables to express the equations:

$$\rho^* = G^{1/2}\bar{\rho}, \quad u^* = \rho^* u, \quad v^* = \rho^* v, \quad w^* = \rho^* w, \quad W^* = \rho^* W, \quad \theta^* = \rho^* \theta',$$

where $\bar{\rho}$ is the density of the basic field, which is in hydrostatic balance.

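Using these variables, the momentum equations are

$$\frac{\partial u^*}{\partial t} = \underbrace{-\left(u^*\frac{\partial u}{\partial x} + v^*\frac{\partial u}{\partial y} + W^*\frac{\partial u}{\partial \zeta}\right)}_{[\mathrm{rm}]} \underbrace{-\left[\frac{\partial}{\partial x}\left\{J_d\,(p' - \alpha\mathrm{Div}^*)\right\} + \frac{\partial}{\partial \zeta}\left\{J_{31}\,(p' - \alpha\mathrm{Div}^*)\right\}\right]}_{[\mathrm{am}]} + \underbrace{(f_s v^* - f_c w^*)}_{[\mathrm{rm}]} + \underbrace{G^{1/2}\,\mathrm{Turb.}u}_{[\mathrm{physics}]}, \qquad (9)$$

$$\frac{\partial v^*}{\partial t} = \underbrace{-\left(u^*\frac{\partial v}{\partial x} + v^*\frac{\partial v}{\partial y} + W^*\frac{\partial v}{\partial \zeta}\right)}_{[\mathrm{rm}]} \underbrace{-\left[\frac{\partial}{\partial y}\left\{J_d\,(p' - \alpha\mathrm{Div}^*)\right\} + \frac{\partial}{\partial \zeta}\left\{J_{32}\,(p' - \alpha\mathrm{Div}^*)\right\}\right]}_{[\mathrm{am}]} \underbrace{-\,f_s u^*}_{[\mathrm{rm}]} + \underbrace{G^{1/2}\,\mathrm{Turb.}v}_{[\mathrm{physics}]}, \qquad (10)$$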

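$$\frac{\partial w^*}{\partial t} = \underbrace{-\left(u^*\frac{\partial w}{\partial x} + v^*\frac{\partial w}{\partial y} + W^*\frac{\partial w}{\partial \zeta}\right)}_{[\mathrm{rm}]} \underbrace{-\,\frac{\partial}{\partial \zeta}\,(p' - \alpha\mathrm{Div}^*)}_{[\mathrm{am}]} + \rho^* g\left(\underbrace{\frac{\theta'}{\bar{\theta}}}_{[\mathrm{gm}]} \underbrace{-\,\frac{p'}{\bar{\rho}\,c_s^2}}_{[\mathrm{am}]} + \underbrace{\frac{q_v'}{\epsilon + \bar{q}_v} - \frac{q_v' + \sum q_x}{1 + \bar{q}_v}}_{[\mathrm{physics}]}\right) + \underbrace{f_c u^*}_{[\mathrm{rm}]} + \underbrace{G^{1/2}\,\mathrm{Turb.}w}_{[\mathrm{physics}]}, \qquad (11)$$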

where $\alpha\mathrm{Div}^*$ is an artificial divergence damping term to suppress acoustic waves, $f_s$ and $f_c$ are Coriolis terms, $c_s^2$ is the square of the acoustic wave speed, and $q_v$ and $q_x$ are the mixing ratios of water vapor and hydrometeors, respectively. The equation of the potential temperature is

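$$\frac{\partial \theta^*}{\partial t} = \underbrace{-\left(u^*\frac{\partial \theta'}{\partial x} + v^*\frac{\partial \theta'}{\partial y} + W^*\frac{\partial \theta'}{\partial \zeta}\right)}_{[\mathrm{rm}]} \underbrace{-\,\bar{\rho}\,w\,\frac{\partial \bar{\theta}}{\partial \zeta}}_{[\mathrm{gm}]} + \underbrace{G^{1/2}\,\mathrm{Turb.}\theta}_{[\mathrm{physics}]} + \underbrace{\rho^*\,\mathrm{Src.}\theta}_{[\mathrm{physics}]}, \qquad (12)$$

and the pressure equation is

$$G^{1/2}\frac{\partial p'}{\partial t} = \underbrace{-\left(J_3 u\frac{\partial p'}{\partial x} + J_3 v\frac{\partial p'}{\partial y} + J_3 W\frac{\partial p'}{\partial \zeta}\right)}_{[\mathrm{rm}]} + \underbrace{G^{1/2}\bar{\rho}\,g\,w}_{[\mathrm{am}]} \underbrace{-\,\bar{\rho}\,c_s^2\left(\frac{\partial J_3 u}{\partial x} + \frac{\partial J_3 v}{\partial y} + \frac{\partial J_3 W}{\partial \zeta}\right)}_{[\mathrm{am}]} + \underbrace{G^{1/2}\bar{\rho}\,c_s^2\left(\frac{1}{\theta}\frac{d\theta}{dt} - \frac{1}{Q}\frac{dQ}{dt}\right)}_{[\mathrm{am}]}, \qquad (13)$$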

where $Q$ is the diabatic heating, the terms "Turb." represent the physical process of turbulent mixing, and the term "Src." is the source term of potential temperature.

Since the governing equations have no approximations, they express all types of waves, including acoustic waves, gravity waves and Rossby waves. These waves have a very wide range of phase speeds. The fastest is the acoustic wave. Although it is unimportant in meteorology, its speed is very large in comparison with that of the other waves, and it limits the time increment of integration. We therefore integrate the terms related to the acoustic waves and the other terms with different time increments. In equations (9)-(13), [rm] indicates terms related to the rotational mode (the Rossby wave mode), [gm] the divergence mode (the gravity wave mode), and [am] the acoustic wave mode. Terms of physical processes are indicated by [physics].
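To illustrate why the acoustic terms are given their own time increment, the short Python sketch below compares one-dimensional CFL-type bounds on the time step for a fast and a slow signal. The grid spacing, sound speed and wind speed are assumed round numbers chosen for illustration, and the actual stability limit of a model depends on its discretization.

```python
def max_stable_step(grid_spacing_m, signal_speed_ms, courant_number=1.0):
    """Largest time step allowed by a one-dimensional CFL-type bound."""
    return courant_number * grid_spacing_m / signal_speed_ms


# Hypothetical values: 100 m grid, 340 m/s sound speed, 50 m/s wind speed.
dx = 100.0
dt_acoustic = max_stable_step(dx, 340.0)   # bound set by acoustic waves
dt_advective = max_stable_step(dx, 50.0)   # bound set by advection
ratio = dt_advective / dt_acoustic         # how much larger the slow step may be

print(f"acoustic limit  : {dt_acoustic:.3f} s")
print(f"advective limit : {dt_advective:.3f} s")
print(f"ratio           : {ratio:.1f}")
```

Under these assumptions the slow terms tolerate a step several times larger than the acoustic terms, which is the saving that separate time increments exploit.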

2.2 Computational Scheme and Parallel Processing Strategy

In the numerical computation, a finite difference method is used for the spatial discretization. The coordinates are rectangular and the dependent variables are set on a staggered grid: the Arakawa-C grid in the horizontal and the Lorenz grid in the vertical (Fig. 1). The coordinates $x$, $y$ and $\zeta$ are defined at the faces of the grid boxes. The velocity components $u$, $v$ and $w$ are defined at the same points as the coordinates $x$, $y$ and $\zeta$, respectively. The metric tensor $J_{31}$ is evaluated at a half interval below the $u$ point and $J_{32}$ at a half interval below the $v$ point. All scalar variables $p'$, $q_v$ and $q_x$, the metric tensor $J_d$ and the transform Jacobian are defined at the center of the grid boxes. In the computation, an averaging operator is used to evaluate dependent variables at the same points. All output variables are obtained at the scalar points.
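The averaging between staggered points can be pictured in one dimension as follows. This is only a sketch of a two-point average from box faces to box centers; the function name and sample values are hypothetical, and the paper does not specify the exact operator used in CReSS.

```python
def faces_to_centers(face_values):
    """Average values stored on n + 1 box faces onto the n box centers."""
    return [0.5 * (left + right)
            for left, right in zip(face_values[:-1], face_values[1:])]


# Hypothetical u values on the four faces of three grid boxes.
u_faces = [0.0, 2.0, 4.0, 10.0]
u_centers = faces_to_centers(u_faces)  # one value per scalar point
```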

Fig. 1. Structure of the staggered grid and setting of dependent variables.

As mentioned in the previous subsection, the governing equations include all types of waves, and the acoustic waves severely limit the time increment. To avoid this difficulty, CReSS adopts the mode-splitting technique (Klemp and Wilhelmson, 1978 [1]) for time integration. In this technique, the terms related to the acoustic waves in (9)-(13) are integrated with a small time increment $\Delta\tau$ and all other terms with a large time increment $\Delta t$.

Fig. 2. Schematic representation of the mode-splitting time integration method. The large time step is indicated by the upper large curved arrows with the increment of $\Delta t$ and the small time step by the lower small curved arrows with the increment of $\Delta\tau$.

Figure 2 shows a schematic representation of the time integration of the mode-splitting technique. CReSS has two options for the small time step integration: one is explicit time integration in both the horizontal and the vertical, and the other is explicit in the horizontal and implicit in the vertical. In the latter option, $p'$ and $w$ are solved implicitly by the Crank-Nicolson scheme in the vertical. For the large time step integration, the leap-frog scheme with the Asselin time filter is used. To remove grid-scale noise, second- or fourth-order computational mixing is used.
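The leap-frog scheme with the Asselin time filter can be sketched on the simplest possible test problem, the oscillation equation $dy/dt = i\omega y$. The Python function below is an illustration under that assumption only: the function name, the filter coefficient of 0.1 and the forward-Euler start-up step are choices made here, not details taken from CReSS.

```python
def leapfrog_asselin(omega, dt, n_steps, nu=0.1):
    """Integrate dy/dt = i*omega*y from y(0) = 1 and return y after n_steps.

    nu is the Asselin filter coefficient; nu = 0 gives the plain leap-frog scheme.
    """
    y_prev = 1.0 + 0.0j                          # (filtered) value at step n - 1
    y_curr = y_prev + 1j * omega * dt * y_prev   # forward-Euler start-up step
    for _ in range(n_steps - 1):
        # Leap-frog step: centered difference over two time increments.
        y_next = y_prev + 2.0 * dt * (1j * omega * y_curr)
        # Asselin filter: nudge the middle time level toward its neighbours.
        y_prev = y_curr + nu * (y_prev - 2.0 * y_curr + y_next)
        y_curr = y_next
    return y_curr


y_filtered = leapfrog_asselin(omega=1.0, dt=0.1, n_steps=200, nu=0.1)
y_unfiltered = leapfrog_asselin(omega=1.0, dt=0.1, n_steps=200, nu=0.0)
print(abs(y_filtered), abs(y_unfiltered))
```

The filter damps the spurious computational mode of the leap-frog scheme at the cost of slightly damping the physical oscillation, so the filtered amplitude ends a little below one while the unfiltered amplitude stays close to one.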

Fig. 3. Schematic representation of the two-dimensional domain decomposition and

the communication strategy for the parallel computing.

A large three-dimensional computational domain (of the order of 100 km) is necessary for the simulation of a thunderstorm with a very high resolution (of the order of less than 1 km). For parallel computing of this type, CReSS adopts a two-dimensional domain decomposition in the horizontal (Fig. 3). Parallel processing is performed with the Message Passing Interface (MPI). Communication between the individual processing elements (PEs) is performed by exchanging data of the outermost two grid points.
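The bookkeeping behind a two-dimensional horizontal decomposition with a two-point exchange zone can be sketched in plain Python, without any MPI calls. The function names, the dictionary layout and the 4 × 2 arrangement of PEs are assumptions made for illustration and do not describe the CReSS source code.

```python
def split_axis(n_points, n_parts):
    """Split n_points indices into n_parts contiguous, nearly equal ranges."""
    base, extra = divmod(n_points, n_parts)
    ranges, start = [], 0
    for part in range(n_parts):
        size = base + (1 if part < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def decompose(nx, ny, pes_x, pes_y, halo=2):
    """Describe each PE's owned index range, halo range and neighbours."""
    subdomains = []
    for j, (y0, y1) in enumerate(split_axis(ny, pes_y)):
        for i, (x0, x1) in enumerate(split_axis(nx, pes_x)):
            rank = j * pes_x + i
            subdomains.append({
                "rank": rank,
                "owned_x": (x0, x1),
                "owned_y": (y0, y1),
                # Owned range widened by the exchange zone, clipped at the edges.
                "with_halo_x": (max(x0 - halo, 0), min(x1 + halo, nx)),
                "with_halo_y": (max(y0 - halo, 0), min(y1 + halo, ny)),
                # Neighbouring ranks; None marks a lateral domain boundary.
                "west": rank - 1 if i > 0 else None,
                "east": rank + 1 if i < pes_x - 1 else None,
                "south": rank - pes_x if j > 0 else None,
                "north": rank + pes_x if j < pes_y - 1 else None,
            })
    return subdomains


# A 67 x 67 horizontal grid shared by a hypothetical 4 x 2 arrangement of PEs.
tiles = decompose(67, 67, pes_x=4, pes_y=2)
```

In an MPI program each PE would hold only its own entry and would exchange the two outermost rows and columns with the listed neighbours after every update.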

Fig. 4. Computation time of parallel processing of a test experiment, plotted as real time against the number of processors (1 to 32) together with a linear scaling line. The model used in the test had 67 × 67 × 35 grid points and was integrated for 50 steps on a HITACHI SR2201.

The performance of parallel processing of CReSS was tested with a simulation whose grid size was 67 × 67 × 35 on a HITACHI SR2201. As the number of PEs increased, the computation time decreased almost linearly (Fig. 4). The efficiency was about 0.9 or more when the number of PEs was less than 32. With 32 PEs, the efficiency decreased significantly because the number of grid points was too small for 32 PEs, so that the communication between PEs became relatively large. The results of the test showed a sufficiently high performance of the parallel computing of CReSS.
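Parallel efficiency is commonly defined as the single-PE time divided by the product of the number of PEs and the time measured on that many PEs. The sketch below applies that definition to made-up timings; the numbers are hypothetical placeholders chosen to mimic the qualitative behaviour described above and are not the measurements behind Fig. 4.

```python
def parallel_efficiency(time_one_pe, time_n_pe, n_pe):
    """Speed-up divided by the number of PEs (1.0 means ideal scaling)."""
    return time_one_pe / (n_pe * time_n_pe)


# Hypothetical wall-clock times in seconds, keyed by number of PEs.
timings = {1: 1000.0, 2: 510.0, 4: 262.0, 8: 137.0, 16: 70.0, 32: 52.0}
efficiencies = {n: parallel_efficiency(timings[1], t, n) for n, t in timings.items()}

for n_pe, eff in efficiencies.items():
    print(f"{n_pe:2d} PEs: efficiency {eff:.2f}")
```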

2.3 Initial and Boundary Conditions

Several types of initial and boundary conditions are available as options in CReSS. For a numerical experiment, a horizontally uniform initial field provided by a sounding profile is used with an initial disturbance of a thermal bubble or random noise. The optional boundary conditions are rigid wall, periodic, zero normal-gradient, and the wave-radiation type of Orlanski (1976) [6].

CReSS also has an option to be nested within a coarse-grid model to perform a prediction experiment. In this option, the initial field is provided by interpolation of grid point values, and the boundary condition is provided by the coarse-grid model.

2.4 Physical Processes

Cloud physics is an important physical process. It is formulated by a bulk method of cold rain, which is based on Lin et al. (1983) [7], Cotton et al. (1986) [8], Murakami (1990) [9], Ikawa and Saito (1991) [3], and Murakami et al. (1994) [10]. The bulk parameterization of cold rain considers water vapor, rain, cloud, ice, snow, and graupel. The prognostic variables are the mixing ratios of water vapor $q_v$, cloud water $q_c$, rain water $q_r$, cloud ice $q_i$, snow $q_s$ and graupel $q_g$. The prognostic equations of these variables are

$$\frac{\partial q_v}{\partial t} = \mathrm{Adv.}q_v + \mathrm{Turb.}q_v + \mathrm{Src.}q_v, \qquad (14)$$

$$\frac{\partial q_x}{\partial t} = \mathrm{Adv.}q_x + \mathrm{Turb.}q_x + \mathrm{Src.}q_x + \mathrm{Fall.}q_x, \qquad (15)$$

where $q_x$ is the representative mixing ratio of $q_c$, $q_r$, $q_i$, $q_s$ and $q_g$, and "Adv.", "Turb." and "Fall." represent time changes due to advection, turbulent mixing, and fallout, respectively. All sources and sinks of the variables are included in the "Src." term. The microphysical processes implemented in the model are described in Fig. 5. Radiation of cloud is not included.

Turbulence is also an important physical process in the cloud model. The parameterizations of the subgrid-scale eddy motions in CReSS are the first-order closure of Smagorinsky (1963) [11] and the 1.5-order closure with turbulent kinetic energy (TKE). In the latter parameterization, the prognostic equation of TKE is used.

Fig. 5. Diagram of water substances and cloud microphysical processes in the bulk model. The categories shown are water vapor ($q_v$), cloud water ($q_c$), rain water ($q_r$), cloud ice ($q_i$, $N_i$), snow ($q_s$, $N_s$) and graupel ($q_g$, $N_g$).

CReSS implements the surface process formulated by a bulk method. In this process, the surface sensible heat flux $H_S$ and latent heat flux $LE$ are formulated as

$$H_S = -\rho_a C_p C_h |V_a| (T_a - T_G), \qquad (16)$$

$$LE = -\rho_a L C_h |V_a|\,\beta\,[q_a - q_{vs}(T_G)], \qquad (17)$$

where the subscript $a$ indicates the lowest layer of the atmosphere and $G$ the ground surface. The coefficient $\beta$ is the evapotranspiration efficiency and $L$ is the latent heat of evaporation. The surface temperature of the ground $T_G$ is calculated by the $n$-layer ground model. The momentum fluxes ($\tau_x$, $\tau_y$) are

$$\tau_x = \rho_a C_m |V_a| u_a, \qquad (18)$$

$$\tau_y = \rho_a C_m |V_a| v_a. \qquad (19)$$

The bulk coefficients $C_h$ and $C_m$ are formulated by the scheme of Louis et al. (1981) [12].
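The bulk formulas (16)-(19) can be evaluated directly once the coefficients are known. In the sketch below the bulk coefficients are fixed numbers and the saturation mixing ratio at the ground is passed in as an input; both are simplifying assumptions made here, whereas the model obtains $C_h$ and $C_m$ from the Louis et al. scheme. All numerical values and function names are hypothetical.

```python
import math


def sensible_heat_flux(rho_a, c_p, c_h, wind_speed, t_air, t_ground):
    """Eq. (16): positive when the ground is warmer than the air."""
    return -rho_a * c_p * c_h * wind_speed * (t_air - t_ground)


def latent_heat_flux(rho_a, latent_heat, c_h, wind_speed, beta, q_air, q_sat_ground):
    """Eq. (17): positive when the surface is moister than the air."""
    return -rho_a * latent_heat * c_h * wind_speed * beta * (q_air - q_sat_ground)


def momentum_fluxes(rho_a, c_m, wind_speed, u_a, v_a):
    """Eqs. (18)-(19): x and y components of the surface momentum flux."""
    return rho_a * c_m * wind_speed * u_a, rho_a * c_m * wind_speed * v_a


# Hypothetical near-surface state and fixed bulk coefficients.
rho_a, c_p, latent_heat = 1.2, 1004.0, 2.5e6
c_h = c_m = 1.5e-3
u_a, v_a = 4.0, 3.0
wind_speed = math.hypot(u_a, v_a)

h_s = sensible_heat_flux(rho_a, c_p, c_h, wind_speed, t_air=298.0, t_ground=300.0)
le = latent_heat_flux(rho_a, latent_heat, c_h, wind_speed, beta=0.5,
                      q_air=0.015, q_sat_ground=0.022)
tau_x, tau_y = momentum_fluxes(rho_a, c_m, wind_speed, u_a, v_a)
```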

3 Dry Model Experiments

In the development of CReSS, we tested it with respect to several types of

phenomena. In a dry atmosphere, the mountain waves and the Kelvin-Helmholtz

billows were chosen to test CReSS.

The numerical experiment of Kelvin-Helmholtz billows was performed in a two-dimensional geometry with a grid size of 20 m. The profile of the basic flow was of the hyperbolic tangent type. Streamlines of the $u$ and $w$ components (Fig. 6) show a clear cat's eye structure of the Kelvin-Helmholtz billows. This result is very similar to that of Klaassen and Peltier (1985) [13]. The model also simulated the overturning of potential temperature associated with the billows (Fig. 7). This result shows that the model works correctly with a grid size of a few tens of meters, at least in the dry experiment.

Fig. 6. Streamlines of the Kelvin-Helmholtz billows at 240 seconds from the initial time, simulated in the two-dimensional geometry.

Fig. 7. Same as Fig.6, but for potential temperature.

In the experiment of mountain waves, we used a horizontal grid size of 400 m in a three-dimensional geometry. A bell-shaped mountain with a height of 500 m and a half-width of 2000 m was placed at the center of the domain. The basic horizontal flow was 10 m s$^{-1}$ and the buoyancy frequency was 0.01 s$^{-1}$. The result (Fig. 8) shows that mountain waves propagating upward and downwind developed with time. The mountain waves pass through the downwind boundary. This result closely resembles those obtained by other models as well as that predicted theoretically.
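The parameters of the mountain wave experiment can be combined into the nondimensional numbers commonly used to characterize such flows. The sketch below computes them from the values quoted above; the function name is hypothetical, and the vertical wavelength $2\pi U/N$ is the estimate of linear theory in the hydrostatic limit, used here only as a rough guide.

```python
import math


def mountain_wave_numbers(flow_speed, buoyancy_freq, height, half_width):
    """Nondimensional mountain height and width, and a linear vertical wavelength."""
    return {
        "nondim_height": buoyancy_freq * height / flow_speed,
        "nondim_width": buoyancy_freq * half_width / flow_speed,
        "vertical_wavelength_m": 2.0 * math.pi * flow_speed / buoyancy_freq,
    }


# Values of the experiment: U = 10 m/s, N = 0.01 1/s, h = 500 m, a = 2000 m.
numbers = mountain_wave_numbers(10.0, 0.01, 500.0, 2000.0)
print(numbers)
```

With these values the nondimensional height is 0.5 and the nondimensional width is 2, so the flow is moderately nonlinear and not strongly hydrostatic.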

These results of the dry experiments show that the fluid dynamics part and the turbulence parameterization of the model worked correctly and that realistic behavior of the flow was simulated.

Fig. 8. Vertical velocity at 9000 seconds from the initial time, obtained by the mountain wave experiment.

4 Simulation of Tornado within a Supercell

In a simulation experiment of a moist atmosphere, we chose a tornado-producing

supercell observed on 24 September 1999 in the Tokai District of Japan. The

simulation was aiming at resolving the vortex of the tornado within the supercell.

Numerical simulation experiments of supercell thunderstorms, which have a horizontal scale of several tens of kilometers, have been performed with cloud models during the past 20 years (Wilhelmson and Klemp, 1978 [14]; Weisman and Klemp, 1982, 1984 [15], [16]). Klemp and Rotunno (1983) [17] then attempted to increase the horizontal resolution to simulate the fine structure of a meso-cyclone within the supercell. It was still difficult to resolve the tornado. An intense tornado occasionally occurs within a supercell thunderstorm. The supercell is highly three-dimensional and its horizontal scale is several tens of kilometers. A large domain of the order of 100 km is necessary to simulate the supercell using a cloud model. On the other hand, the tornado has a horizontal scale of a few hundred meters. The simulation of the tornado requires a fine horizontal grid spacing of the order of 100 m or less. To simulate the supercell and the associated tornado with a cloud model, a huge memory and a high-speed CPU are indispensable.

To overcome this difficulty, Wicker and Wilhelmson (1995) [18] used an adaptive grid method to simulate tornado-genesis. The grid spacing of the fine mesh was 120 m. They simulated the genesis of tornadic vorticity. Grasso and Cotton (1995) [19] also used a two-way nesting procedure in a cloud model and simulated tornadic vorticity. Both simulations used a two-way nesting technique. Nesting methods involve the complication of communication between the coarse-grid model and the fine-mesh model through the boundary. In contrast, the present research does not use any nesting method. We attempted to simulate both the supercell and the tornado on a uniform grid. This type of simulation has no complication of boundary communication. The computational domain of the present simulation was about 50 × 50 km and the grid spacing was 100 m. The integration time was about 2 hours.
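The size of such a uniform-grid run follows from simple arithmetic. The sketch below counts grid points for the 50 km domain with 100 m spacing quoted above; the number of vertical levels, the number of stored three-dimensional variables and the 8-byte word size are hypothetical placeholders, since the paper does not state them.

```python
def horizontal_points(domain_m, spacing_m):
    """Number of grid intervals along one horizontal direction."""
    return round(domain_m / spacing_m)


nx = horizontal_points(50_000.0, 100.0)
ny = horizontal_points(50_000.0, 100.0)

# Hypothetical values, not given in the paper.
n_levels = 50
n_variables = 20
bytes_per_value = 8

total_points = nx * ny * n_levels
memory_gib = total_points * n_variables * bytes_per_value / 2**30

print(f"{nx} x {ny} x {n_levels} = {total_points} grid points")
print(f"about {memory_gib:.2f} GiB for {n_variables} three-dimensional fields")
```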

The basic field was given by a sounding at Shionomisaki, Japan, at 00 UTC, 24 September 1999 (Fig. 9). The initial perturbation was given by a warm thermal bubble placed near the surface, which caused an initial convective cloud.

Fig. 9. Vertical profiles of zonal component (thick line) and meridional component (thin line) observed at Shionomisaki at 00 UTC, 24 September 1999.

One hour after the initial time, a quasi-stationary supercell was simulated by CReSS (Fig. 10). The hook-shaped precipitation area and the bounded weak echo region (BWER), which are characteristic features of a supercell, were formed in the simulation. An intense updraft occurred along the surface flanking line. At the central part of the BWER, or of the updraft, a tornadic vortex was formed 90 minutes after the initial time.

The close view of the central part of the vorticity (Fig. 11) shows closed contours. The diameter of the vortex is about 500 m and the maximum vorticity is about 0.1 s$^{-1}$. This vortex is considered to correspond to the observed tornado.

Fig. 10. Horizontal display at 600 m of the simulated supercell at 5400 seconds from the initial time. Mixing ratio of rain (gray scales, g kg$^{-1}$), vertical velocity (thick lines, m s$^{-1}$), the surface potential temperature at 15 m (thin lines, K) and horizontal velocity vectors.

The pressure perturbation (Fig. 12) also shows closed contours, which correspond to those of the vorticity. This indicates that the flow of the vortex is in cyclostrophic balance. The vertical cross section of the vortex (Fig. 13) shows that the axis of the vorticity and the associated pressure perturbation is inclined to the left-hand side and extends to a height of 2 km. At the center of the vortex, the downward extension of cloud is simulated.
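Cyclostrophic balance, $v^2/r = (1/\rho)\,\partial p/\partial r$, allows a rough consistency check between the quoted vorticity and the pressure perturbation. The sketch below assumes solid-body rotation inside the vortex core, so that the vorticity is twice the angular velocity, and an air density of 1.2 kg m$^{-3}$; both are assumptions made here for illustration, and the function name is hypothetical.

```python
def solid_body_core_estimates(vorticity, diameter_m, air_density=1.2):
    """Tangential wind at the core edge and pressure drop from edge to center.

    Assumes solid-body rotation, for which integrating the cyclostrophic
    relation across the core gives a pressure drop of 0.5 * rho * v_edge**2.
    """
    radius = 0.5 * diameter_m
    angular_velocity = 0.5 * vorticity        # vorticity = 2 * angular velocity
    tangential_wind = angular_velocity * radius
    pressure_drop = 0.5 * air_density * tangential_wind ** 2
    return tangential_wind, pressure_drop


# Values quoted in the text: vorticity about 0.1 1/s, diameter about 500 m.
v_edge, dp_core = solid_body_core_estimates(0.1, 500.0)
print(f"wind at core edge: {v_edge:.1f} m/s, core pressure drop: {dp_core:.1f} Pa")
```

Under these assumptions the core-edge wind is about 12.5 m/s and the pressure drop across the core is of the order of 1 hPa. This covers only the solid-body core and ignores the flow outside it.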

While this is a preliminary result of the simulation of the supercell and tornado, some characteristic features of the observation were simulated well. The important point of this simulation is that both the supercell and the tornado were simulated with the same grid size. The tornado was produced purely by the physical processes formulated in the model. A detailed analysis of the simulated data will provide important information on tornado-genesis within the supercell.

Fig. 11. Close view of the simulated tornado within the supercell. The contour lines are vorticity (s$^{-1}$) and the arrows are horizontal velocity. The arrow scale is shown at the bottom of the figure.

Fig. 12. Same as Fig. 11, but for pressure perturbation.

Fig. 13. Vertical cross section of the simulated tornado. Thick lines are vorticity (s$^{-1}$), dashed lines are pressure perturbation and arrows are horizontal velocity.

5 Simulation of Squall Line

A squall line is a significant mesoscale convective system. It is usually composed of an intense convective leading edge and a trailing stratiform region. An intense squall line was observed by three Doppler radars on 16 July 1998 over the China continent during the intensive field observation of GAME / HUBEX (the GEWEX Asian Monsoon Experiment / Huaihe River Basin Experiment). The squall line extended from the northwest to the southeast with a width of a few tens of kilometers and moved northeastward at a speed of 11 m s$^{-1}$. Radar observation showed that the squall line consisted of intense convective cells along the leading edge. Some of the cells reached a height of 17 km. The rear inflow was present at a height of 4 km and descended to cause intense lower-level convergence at the leading edge. After the squall line passed over the radar sites, stratiform precipitation extended behind the convective leading edge.

The design of the simulation experiment using CReSS is as follows. Both the horizontal and vertical grid sizes were 300 m within a domain of 170 km × 120 km. The cloud microphysics was of the cold rain type. The boundary condition was of the wave-radiating type. The initial condition was provided by a dual Doppler analysis and sounding data. The inhomogeneous velocity field within the storm was determined directly by the dual Doppler radar analysis, while the velocity field outside the storm and the thermodynamic field were provided by the sounding observation. The mixing ratios of rain, snow and graupel were estimated from the radar reflectivity, while the mixing ratios of cloud and ice were set to zero at the initial time. A horizontal cross section of the initial field is shown in Fig. 14.

Fig. 14. Horizontal cross section of the initial field at a height of 2.5 km at 1033 UTC, 16 July 1998. Color levels indicate the mixing ratio of rain (g kg$^{-1}$). Arrows show the horizontal velocity obtained by the dual Doppler analysis and sounding.

Fig. 15. Time series of horizontal displays (upper row) and vertical cross sections (lower row) of the simulated squall line. Color levels indicate the total mixing ratio (g kg$^{-1}$) of rain, snow and graupel. Contour lines indicate the total mixing ratio (0.1, 0.5, 1, 2 g kg$^{-1}$) of cloud ice and cloud water. Arrows are horizontal velocity.

The simulated squall line, extending from the northwest to the southeast, moved northeastward (Fig. 15). The convective leading edge of the simulated squall line was maintained by successive replacement with new convective cells and moved to the northeast. This is similar to the behavior of the observed squall line. Convective cells reached a height of about 14 km with a large production of graupel above the melting layer. The rear inflow was significant, as in the observation. A stratiform region extended with time behind the leading edge. Cloud extended to the southwest to form a cloud cluster.

The result of the simulation experiment showed that CReSS successfully

simulated the development and movement of the squall line.

6 Summary and Future Plans

We are developing the cloud resolving numerical model CReSS for numerical experiments and simulations of clouds and storms. Parallel computing is indispensable for large-scale simulations. In this paper, we described the basic formulations and important characteristics of CReSS. We also showed some results of the numerical experiments: the Kelvin-Helmholtz billows, the mountain waves, the tornado within the supercell and the squall line. These results showed that CReSS is capable of simulating thunderstorms and related phenomena.

In the future, we will extend CReSS to include detailed cloud microphysical processes that resolve the size distributions of hydrometeors. The parameterization of turbulence is another important physical process in clouds. Large eddy simulation is expected to be used in the model. We will develop CReSS to enable two-way nesting within a coarse-grid model for the simulation of real weather systems. Four-dimensional data assimilation of Doppler radar data is also our next target, because initial conditions are essential for the simulation of mesoscale storms.

CReSS is now open to the public. Any user can download the source code and documents from the web site at http://www.tokyo.rist.or.jp/CReSS Fujin (in Japanese) and use them for numerical experiments of cloud-related phenomena. CReSS has been tested on several computers: HITACHI SR2201, HITACHI SR8000, Fujitsu VPP5000, and NEC SX4. We expect that CReSS will be run on the Earth Simulator to perform large-scale parallel computing that simulates the details of clouds and storms.

Acknowledgements

This study is a part of a project led by Professor Kamiya, Aichi Gakusen University. The project is supported by the Research Organization for Information Science and Technology (RIST). The simulations and calculations of this work were performed using the HITACHI S3800 supercomputer and SR8000 computer at the Computer Center, the University of Tokyo, and the Fujitsu VPP5000 at the Computer Center, Nagoya University. The Grid Analysis and Display System (GrADS) developed at COLA, University of Maryland, was used for displaying data and drawing figures.

References

1. Klemp, J. B., and R. B. Wilhelmson, 1978: The simulation of three-dimensional convective storm dynamics. J. Atmos. Sci., 35, 1070-1096.

2. Ikawa, M., 1988: Comparison of some schemes for nonhydrostatic models with orography. J. Meteor. Soc. Japan, 66, 753-776.

3. Ikawa, M., and K. Saito, 1991: Description of a nonhydrostatic model developed at the Forecast Research Department of the MRI. Technical Report of the MRI, 28, 238pp.

4. Xue, M., K. K. Droegemeier, V. Wong, A. Shapiro and K. Brewster, 1995: Advanced Regional Prediction System, Version 4.0. Center for Analysis and Prediction of Storms, University of Oklahoma, 380pp.

5. Grell, G., J. Dudhia and D. Stauffer, 1994: A description of the fifth-generation of the Penn State / NCAR mesoscale model (MM5). NCAR Technical Note, 138pp.

6. Orlanski, I., 1976: A simple boundary condition for unbounded hyperbolic flows. J. Comput. Phys., 21, 251-269.

7. Lin, Y. L., R. D. Farley and H. D. Orville, 1983: Bulk parameterization of the snow field in a cloud model. J. Climate Appl. Meteor., 22, 1065-1092.

8. Cotton, W. R., G. J. Tripoli, R. M. Rauber and E. A. Mulvihill, 1986: Numerical simulation of the effects of varying ice crystal nucleation rates and aggregation processes on orographic snowfall. J. Climate Appl. Meteor., 25, 1658-1680.

9. Murakami, M., 1990: Numerical modeling of dynamical and microphysical evolution of an isolated convective cloud - The 19 July 1981 CCOPE cloud. J. Meteor. Soc. Japan, 68, 107-128.

10. Murakami, M., T. L. Clark and W. D. Hall, 1994: Numerical simulations of convective snow clouds over the Sea of Japan; Two-dimensional simulations of mixed layer development and convective snow cloud formation. J. Meteor. Soc. Japan, 72, 43-62.

11. Smagorinsky, J., 1963: General circulation experiments with the primitive equations. I. The basic experiment. Mon. Wea. Rev., 91, 99-164.

12. Louis, J. F., M. Tiedtke and J. F. Geleyn, 1981: A short history of the operational PBL parameterization at ECMWF. Workshop on Planetary Boundary Layer Parameterization, 25-27 Nov. 1981, 59-79.

13. Klaassen, G. P., and W. R. Peltier, 1985: The evolution of finite amplitude Kelvin-Helmholtz billows in two spatial dimensions. J. Atmos. Sci., 42, 1321-1339.

14. Wilhelmson, R. B., and J. B. Klemp, 1978: A numerical study of storm splitting that leads to long-lived storms. J. Atmos. Sci., 35, 1974-1986.

15. Weisman, M. L., and J. B. Klemp, 1982: The dependence of numerically simulated convective storms on vertical wind shear and buoyancy. Mon. Wea. Rev., 110, 504-520.

16. Weisman, M. L., and J. B. Klemp, 1984: The structure and classification of numerically simulated convective storms in directionally varying wind shears. Mon. Wea. Rev., 112, 2478-2498.

17. Klemp, J. B., and R. Rotunno, 1983: A study of the tornadic region within a supercell thunderstorm. J. Atmos. Sci., 40, 359-377.

18. Wicker, L. J., and R. B. Wilhelmson, 1995: Simulation and analysis of tornado development and decay within a three-dimensional supercell thunderstorm. J. Atmos. Sci., 52, 2675-2703.

19. Grasso, L. D., and W. R. Cotton, 1995: Numerical simulation of a tornado vortex. J. Atmos. Sci., 52, 1192-1203.


- Numerical Study of Dispersion and Nonlinearity Effects On Tsunami PropagationDocumento13 páginasNumerical Study of Dispersion and Nonlinearity Effects On Tsunami PropagationYassir ArafatAinda não há avaliações

- 1039 SdofDocumento8 páginas1039 SdofMuhammad Yasser AAinda não há avaliações

- Adaptive Simulation of Turbulent Flow Past A Full Car Model: Niclas Jansson Johan Hoffman Murtazo NazarovDocumento7 páginasAdaptive Simulation of Turbulent Flow Past A Full Car Model: Niclas Jansson Johan Hoffman Murtazo NazarovLuiz Fernando T. VargasAinda não há avaliações

- Description of The WRF-1d PBL ModelDocumento11 páginasDescription of The WRF-1d PBL ModelBalaji ManirathnamAinda não há avaliações

- A Time-Split Discontinuous Galerkin Transport Scheme For Global Atmospheric ModelDocumento10 páginasA Time-Split Discontinuous Galerkin Transport Scheme For Global Atmospheric ModelIkky AndrianiAinda não há avaliações

- ICMF2 - Akhmad Tri Prasetyo Et Al 2021Documento10 páginasICMF2 - Akhmad Tri Prasetyo Et Al 2021akhmadtyoAinda não há avaliações

- Tsunami Simulation Using Dipersive Wave Model: Alwafi Pujiraharjo and Tokuzo HOSOYAMADADocumento8 páginasTsunami Simulation Using Dipersive Wave Model: Alwafi Pujiraharjo and Tokuzo HOSOYAMADAmaswafiAinda não há avaliações

- Study of The Atmospheric Wake Turbulence of A CFD Actuator Disc ModelDocumento9 páginasStudy of The Atmospheric Wake Turbulence of A CFD Actuator Disc Modelpierre_elouanAinda não há avaliações

- Modeling of Large Deformations of Hyperelastic MaterialsDocumento4 páginasModeling of Large Deformations of Hyperelastic MaterialsSEP-PublisherAinda não há avaliações

- Modelica-Based Modeling and Simulation of Satellite On-Orbit Deployment and Attitude ControlDocumento9 páginasModelica-Based Modeling and Simulation of Satellite On-Orbit Deployment and Attitude ControlGkkAinda não há avaliações

- V European Conference On Computational Fluid Dynamics Eccomas CFD 2010 J. C. F. Pereira and A. Sequeira (Eds) Lisbon, Portugal, 14-17 June 2010Documento20 páginasV European Conference On Computational Fluid Dynamics Eccomas CFD 2010 J. C. F. Pereira and A. Sequeira (Eds) Lisbon, Portugal, 14-17 June 2010Sagnik BanikAinda não há avaliações

- Conservation of The Nonlinear Curvature Perturbation in Generic Single-Field InflationDocumento8 páginasConservation of The Nonlinear Curvature Perturbation in Generic Single-Field InflationWilliam AlgonerAinda não há avaliações

- Reducing The Number of Time Delays in Coupled Dynamical SystemsDocumento9 páginasReducing The Number of Time Delays in Coupled Dynamical SystemsJese MadridAinda não há avaliações

- The Calculation of Contact Forces Between Particles Using Spring andDocumento6 páginasThe Calculation of Contact Forces Between Particles Using Spring andAbhishek KulkarniAinda não há avaliações

- M. Herrmann - Modeling Primary Breakup: A Three-Dimensional Eulerian Level Set/vortex Sheet Method For Two-Phase Interface DynamicsDocumento12 páginasM. Herrmann - Modeling Primary Breakup: A Three-Dimensional Eulerian Level Set/vortex Sheet Method For Two-Phase Interface DynamicsPonmijAinda não há avaliações

- Spatio-Temporal Characterization With Wavelet Coherence:anexus Between Environment and PandemicDocumento9 páginasSpatio-Temporal Characterization With Wavelet Coherence:anexus Between Environment and PandemicijscAinda não há avaliações

- A Parallel Algorithm For Solving The 3d SCHR Odinger EquationDocumento19 páginasA Parallel Algorithm For Solving The 3d SCHR Odinger EquationSamAinda não há avaliações

- Draft Issue: Dynamic Simulation Otis P. ArmstrongDocumento23 páginasDraft Issue: Dynamic Simulation Otis P. Armstrongotis-a100% (1)

- CH 6Documento15 páginasCH 6Parth PatelAinda não há avaliações

- 2005 Mirza JSCE-AJHEDocumento6 páginas2005 Mirza JSCE-AJHERaza MirzaAinda não há avaliações

- Development - of - An - Integrated - Fuzzy - Logic BallisticDocumento36 páginasDevelopment - of - An - Integrated - Fuzzy - Logic BallisticAnais Pesa MozAinda não há avaliações

- Distance-Redshift Relations in An Anisotropic Cosmological ModelDocumento8 páginasDistance-Redshift Relations in An Anisotropic Cosmological ModelzrbutkAinda não há avaliações

- Domain Structures and Boundary Conditions: Numerical Weather Prediction and Data Assimilation, First EditionDocumento34 páginasDomain Structures and Boundary Conditions: Numerical Weather Prediction and Data Assimilation, First EditionDiamondChuAinda não há avaliações

- Ijms 47 (1) 89-95Documento7 páginasIjms 47 (1) 89-95Gaming news tadkaAinda não há avaliações

- Cav 2001Documento8 páginasCav 2001Daniel Rodriguez CalveteAinda não há avaliações

- Journal of Computational Physics: Jing Chen, Yifan Chen, Hao Wu, Dinghui YangDocumento22 páginasJournal of Computational Physics: Jing Chen, Yifan Chen, Hao Wu, Dinghui Yanganon_583584972Ainda não há avaliações

- SCI.2. Optical Recursion Systems For The Hasimoto Map and Optical Application Wits s2Documento11 páginasSCI.2. Optical Recursion Systems For The Hasimoto Map and Optical Application Wits s2Ahmet SAZAKAinda não há avaliações

- Some Important Issues Related To Wind Sensitive StructuresDocumento29 páginasSome Important Issues Related To Wind Sensitive StructuresThokchom SureshAinda não há avaliações

- PushOver BuildingDocumento4 páginasPushOver BuildingIlir IbraimiAinda não há avaliações

- 2011-4 PaperDocumento9 páginas2011-4 PaperMohamedMagdyAinda não há avaliações

- A Second Order Projection Scheme For The K-Epsilon Turbulence ModelDocumento7 páginasA Second Order Projection Scheme For The K-Epsilon Turbulence ModelmecharashAinda não há avaliações

- SBGF 3240Documento6 páginasSBGF 3240Nonato Colares ColaresAinda não há avaliações

- RubberbandDocumento3 páginasRubberbandMatt MacAinda não há avaliações

- Sandeep Bhatt Et Al - Tree Codes For Vortex Dynamics: Application of A Programming FrameworkDocumento11 páginasSandeep Bhatt Et Al - Tree Codes For Vortex Dynamics: Application of A Programming FrameworkVing666789Ainda não há avaliações

- Derivation of The Adjoint Drift Flux Equations ForDocumento21 páginasDerivation of The Adjoint Drift Flux Equations Forbendel_boyAinda não há avaliações

- Aiac 2021 1647419079Documento12 páginasAiac 2021 1647419079MC AAinda não há avaliações

- Shear Band Formation in Granular Materials: A Micromechanical ApproachDocumento7 páginasShear Band Formation in Granular Materials: A Micromechanical ApproachgeoanagoAinda não há avaliações

- DeAngeli-sbac Pad2003Documento8 páginasDeAngeli-sbac Pad2003Mihaela NastaseAinda não há avaliações

- 2 - AIAA - Computation of Dynamic Stall of A NACA-0012 AirfoilDocumento6 páginas2 - AIAA - Computation of Dynamic Stall of A NACA-0012 Airfoilkgb_261Ainda não há avaliações

- Stochastic Response To Earthquake Forces of A Cable Stayed Bridge 1993Documento8 páginasStochastic Response To Earthquake Forces of A Cable Stayed Bridge 1993ciscoAinda não há avaliações

- SGRDocumento18 páginasSGRMunimAinda não há avaliações

- Get The LUN ID at AIXDocumento4 páginasGet The LUN ID at AIXMq SfsAinda não há avaliações

- Wireshark EthernetDocumento7 páginasWireshark EthernetCherryAinda não há avaliações

- 50 UNIX - Linux Sysadmin TutorialsDocumento4 páginas50 UNIX - Linux Sysadmin Tutorialshammet217Ainda não há avaliações

- Ideapad 330 15intel Platform SpecificationsDocumento1 páginaIdeapad 330 15intel Platform Specificationslegatus2yahoo.grAinda não há avaliações

- Ci QBDocumento13 páginasCi QBGanesh KumarAinda não há avaliações

- Oxygen Enters The Lungs and Then Is Passed On Into Blood. The Blood CarriesDocumento9 páginasOxygen Enters The Lungs and Then Is Passed On Into Blood. The Blood CarriesPranita PotghanAinda não há avaliações

- Ap16 FRQ Chinese LanguageDocumento14 páginasAp16 FRQ Chinese LanguageSr. RZAinda não há avaliações

- Optical Wireless Communications For Beyond 5G Networks and IoT - Unit 6 - Week 3Documento1 páginaOptical Wireless Communications For Beyond 5G Networks and IoT - Unit 6 - Week 3Sarika JainAinda não há avaliações

- Math Lecture 1Documento37 páginasMath Lecture 1Mohamed A. TaherAinda não há avaliações

- Manual Cheetah XIDocumento158 páginasManual Cheetah XIart159357100% (3)

- Screenless DisplayDocumento20 páginasScreenless DisplayjasonAinda não há avaliações

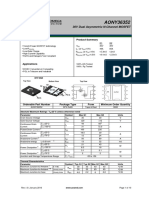

- AONY36352: 30V Dual Asymmetric N-Channel MOSFETDocumento10 páginasAONY36352: 30V Dual Asymmetric N-Channel MOSFETrobertjavi1983Ainda não há avaliações

- Control System Applied Principles of ControlDocumento23 páginasControl System Applied Principles of ControlAngelus Vincent GuilalasAinda não há avaliações

- Security SY0 501 Study GuideDocumento39 páginasSecurity SY0 501 Study GuidereAinda não há avaliações

- On Chain Finance ReportDocumento7 páginasOn Chain Finance ReportSivasankaran KannanAinda não há avaliações

- Let'S Learn Algebra in Earl'S Way The Easy Way!Documento23 páginasLet'S Learn Algebra in Earl'S Way The Easy Way!Cristinejoy OletaAinda não há avaliações

- Boundary-Scan DFT GuidelinesDocumento63 páginasBoundary-Scan DFT GuidelinesSambhav VermanAinda não há avaliações

- Updates From The Eastern Cape Department of EducationDocumento1 páginaUpdates From The Eastern Cape Department of EducationSundayTimesZAAinda não há avaliações

- DB and DT Series Mam PLC ManualDocumento20 páginasDB and DT Series Mam PLC ManualAdolf MutangaduraAinda não há avaliações

- List of Mobile Phones Supported OTG Function PDFDocumento7 páginasList of Mobile Phones Supported OTG Function PDFCarlos Manuel De Los Santos PerezAinda não há avaliações

- Worksheet 01: Software LAN Wi-Fi Attachments Virus Worm Resolves AntiDocumento7 páginasWorksheet 01: Software LAN Wi-Fi Attachments Virus Worm Resolves AntiAisha AnwarAinda não há avaliações

- ZET 8 Astrology Software: Welcome Astrological Feature ListDocumento254 páginasZET 8 Astrology Software: Welcome Astrological Feature ListLeombruno BlueAinda não há avaliações

- VFD Manual PDFDocumento60 páginasVFD Manual PDFray1coAinda não há avaliações

- Chloe Free Presentation TemplateDocumento25 páginasChloe Free Presentation TemplatePredrag StojkovićAinda não há avaliações

- Batch Process in SAP Net Weaver GatewayDocumento12 páginasBatch Process in SAP Net Weaver GatewayArun Varshney (MULAYAM)Ainda não há avaliações

- Homework 7 SolutionsDocumento2 páginasHomework 7 SolutionsDivyesh KumarAinda não há avaliações

- DualityDocumento28 páginasDualitygaascrAinda não há avaliações

- Stepping Heavenward by Prentiss, E. (Elizabeth), 1818-1878Documento181 páginasStepping Heavenward by Prentiss, E. (Elizabeth), 1818-1878Gutenberg.orgAinda não há avaliações

- Camera Trap ManualDocumento94 páginasCamera Trap ManualAndreas VetraAinda não há avaliações

- Periodic Tales: A Cultural History of the Elements, from Arsenic to ZincNo EverandPeriodic Tales: A Cultural History of the Elements, from Arsenic to ZincNota: 3.5 de 5 estrelas3.5/5 (137)

- Giza: The Tesla Connection: Acoustical Science and the Harvesting of Clean EnergyNo EverandGiza: The Tesla Connection: Acoustical Science and the Harvesting of Clean EnergyAinda não há avaliações

- Dark Matter and the Dinosaurs: The Astounding Interconnectedness of the UniverseNo EverandDark Matter and the Dinosaurs: The Astounding Interconnectedness of the UniverseNota: 3.5 de 5 estrelas3.5/5 (69)

- A Brief History of Time: From the Big Bang to Black HolesNo EverandA Brief History of Time: From the Big Bang to Black HolesNota: 4 de 5 estrelas4/5 (2193)

- Summary and Interpretation of Reality TransurfingNo EverandSummary and Interpretation of Reality TransurfingNota: 5 de 5 estrelas5/5 (5)

- Knocking on Heaven's Door: How Physics and Scientific Thinking Illuminate the Universe and the Modern WorldNo EverandKnocking on Heaven's Door: How Physics and Scientific Thinking Illuminate the Universe and the Modern WorldNota: 3.5 de 5 estrelas3.5/5 (64)

- Water to the Angels: William Mulholland, His Monumental Aqueduct, and the Rise of Los AngelesNo EverandWater to the Angels: William Mulholland, His Monumental Aqueduct, and the Rise of Los AngelesNota: 4 de 5 estrelas4/5 (21)

- A Beginner's Guide to Constructing the Universe: The Mathematical Archetypes of Nature, Art, and ScienceNo EverandA Beginner's Guide to Constructing the Universe: The Mathematical Archetypes of Nature, Art, and ScienceNota: 4 de 5 estrelas4/5 (51)

- Civilized To Death: The Price of ProgressNo EverandCivilized To Death: The Price of ProgressNota: 4.5 de 5 estrelas4.5/5 (215)

- Smokejumper: A Memoir by One of America's Most Select Airborne FirefightersNo EverandSmokejumper: A Memoir by One of America's Most Select Airborne FirefightersAinda não há avaliações

- The End of Everything: (Astrophysically Speaking)No EverandThe End of Everything: (Astrophysically Speaking)Nota: 4.5 de 5 estrelas4.5/5 (157)

- The Storm of the Century: Tragedy, Heroism, Survival, and the Epic True Story of America's Deadliest Natural DisasterNo EverandThe Storm of the Century: Tragedy, Heroism, Survival, and the Epic True Story of America's Deadliest Natural DisasterAinda não há avaliações

- Quantum Spirituality: Science, Gnostic Mysticism, and Connecting with Source ConsciousnessNo EverandQuantum Spirituality: Science, Gnostic Mysticism, and Connecting with Source ConsciousnessNota: 4 de 5 estrelas4/5 (6)

- Midnight in Chernobyl: The Story of the World's Greatest Nuclear DisasterNo EverandMidnight in Chernobyl: The Story of the World's Greatest Nuclear DisasterNota: 4.5 de 5 estrelas4.5/5 (410)

- Lost in Math: How Beauty Leads Physics AstrayNo EverandLost in Math: How Beauty Leads Physics AstrayNota: 4.5 de 5 estrelas4.5/5 (125)

- The Beginning of Infinity: Explanations That Transform the WorldNo EverandThe Beginning of Infinity: Explanations That Transform the WorldNota: 5 de 5 estrelas5/5 (60)

- Quantum Physics: What Everyone Needs to KnowNo EverandQuantum Physics: What Everyone Needs to KnowNota: 4.5 de 5 estrelas4.5/5 (49)

- A Brief History of the Earth's Climate: Everyone's Guide to the Science of Climate ChangeNo EverandA Brief History of the Earth's Climate: Everyone's Guide to the Science of Climate ChangeNota: 5 de 5 estrelas5/5 (4)

- The Power of Eight: Harnessing the Miraculous Energies of a Small Group to Heal Others, Your Life, and the WorldNo EverandThe Power of Eight: Harnessing the Miraculous Energies of a Small Group to Heal Others, Your Life, and the WorldNota: 4.5 de 5 estrelas4.5/5 (54)

- Packing for Mars: The Curious Science of Life in the VoidNo EverandPacking for Mars: The Curious Science of Life in the VoidNota: 4 de 5 estrelas4/5 (1396)