Escolar Documentos

Profissional Documentos

Cultura Documentos

HPC Definition: Parallel Computing Concepts and Programming Models

Enviado por

Jiju P NairDescrição original:

Título original

Direitos autorais

Formatos disponíveis

Compartilhar este documento

Compartilhar ou incorporar documento

Você considera este documento útil?

Este conteúdo é inapropriado?

Denunciar este documentoDireitos autorais:

Formatos disponíveis

HPC Definition: Parallel Computing Concepts and Programming Models

Enviado por

Jiju P NairDireitos autorais:

Formatos disponíveis

HPC Definition: Large volume numerical calculations Fast and efficient algorithms improve algorithms for better efficiency.

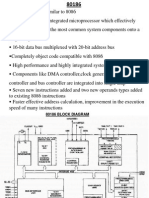

. To look at how parallel things operate. Lectures on basic level. Intel telling architecture of things. So making our program according to architecture. Highly scalable algorithms. I increase the number of nodes, time should scale. We check the scalability. Modification of computing model to take into aspect the latest developments. GPU, MIC processors from Intel (accelearators, processor arrays) Intel answer to GPU computing. Codes for complex problems, climate prediction, protein folding, structural prediction. Sequential versus Parallel In these set lectures fall into parallel. Sequential C, Java, FFT, BLAST, Bubble sort. Talk of programming: Serially minded, c = a + b, we think of programming that way. But we think other things in parallel. Main idea steps in perfect order Methodology: launch a set of task, communicate to make progress. Sorting 500 answer papers by making 5 equal piles, have them sorted by 5 people, merge them together. Communication becomes important Data Parallelism versus Functional Parallelism Team work requires communication. Data Parallel: For i = 0,99 a[i] = b[i] + c[i] multithread can be split into many sets and send to different processors Functional parallelism For i = 0,99 a[i] = b[i] + c[i]

y[i] = c[i]^2 + 2*b[i] Most problems have both data parallelism and functional parallelism Multithreading on one, and message passing on other. Different ways of parallelizing Ways of computing, not programming Oldest parallel programming in processes: arrays and vector computing vector computers. MIC or accelerated card are similar to it. Each processor has its own memory. Why it is not very popular: you had a set of arrays. IF you have a vector of 120 communicate of processor requires high bandwidth. Splitting was to be programmed. Worst problem Needed special design, people using HPC small. Special hardware not produced in large volume. So finally it was cost and scalability issues. Main paradigms shared versus distributed memory programming. Shared memory. All CPU access the same memory. If I give address to some memory then it points to same memory. Today we talk about quad core, multicore one memory that is shared or different. By multi node architecture clusters we are having both shared and distributed memory. A multiple CPU computer with shared memory is called multiprocessor. Uniform memory access or symmetric multiprocessor (SMP) Distance from CPU to memory is same. Distributed multiprocessor. Cache memory. Cache takes a copy of primary memory and keep it there. It is faster. Asymmetric multiprocessing Every CPU fetches the element it has to do and keeps it in cache. Problem of Cache Coherence

Each of the cache could have a copy here. One processor modify that all the cache should get the same. Also you need processor synchronization. Cache coherence and cache miss: Because you think memory location is same. How to overcome this: The moment variable is changed (not in programming level but below it) The moment it is changed it should invalidate in all other cache. So it says cache miss and fetch the data again. Too many cache miss will slow down Computer writes in chunks. So entire block will get invalidated. Just increasing clock speed and bus speed is not enough. Mechanisms of memory management. Distributed multiprocessing NUMA: Non uniform memory access Advantage build this with commodity processors. Interconnection networks in distributed memory architecture Shared medium: Everybody broadcast CPUs connected through some medium Ethernet, every thing broadcasts, Attach a tag to go to CPU 2 but not CPU 3. We do not used it. What is used is Switched medium: Point to point messaging. Different interconnection topologies Best way to connect. Distance form one processor to other is minimum 2 D mesh, hyper tree, fat tree. Fat tree: used in existing clusters in IIT M and use in future also. Link thickness keeps increasing as you go up. Increase bandwidth as you go up. That is very popular you can keep scaling up. Simple Parallel programming sorting numbers in a large array Divide into 5 pieces. Each part is sorted by an independent sequential algorithm and left within region. The resultant parts are merged by simply reordering among adjacent parts.

Some points on work break down 1) We need a Parallel algorithm 2) We should always do simple intuitive breakdowns. 3) Usually highly optimized sequential algorithm are not easily parallization. We have to redo 4) Breaking work often involves some pre or post processing 5) Think of cost in terms of time. Fine versus large grain parallelism and relationship to communicate. Code takes 1 hour breaking into two 35 mins, breaking again will not be half after a certain time their wont be any advantage but there will be problems of communicating and cache miss.

Você também pode gostar

- Multicore Software Development Techniques: Applications, Tips, and TricksNo EverandMulticore Software Development Techniques: Applications, Tips, and TricksNota: 2.5 de 5 estrelas2.5/5 (2)

- Serial and Parallel First 3 LectureDocumento17 páginasSerial and Parallel First 3 LectureAsif KhanAinda não há avaliações

- Aca NotesDocumento148 páginasAca NotesHanisha BavanaAinda não há avaliações

- Accelerating MATLAB with GPU Computing: A Primer with ExamplesNo EverandAccelerating MATLAB with GPU Computing: A Primer with ExamplesNota: 3 de 5 estrelas3/5 (1)

- 1 of 1 PDFDocumento7 páginas1 of 1 PDFpatasutoshAinda não há avaliações

- SAS Programming Guidelines Interview Questions You'll Most Likely Be AskedNo EverandSAS Programming Guidelines Interview Questions You'll Most Likely Be AskedAinda não há avaliações

- Types of Parallel ComputingDocumento11 páginasTypes of Parallel Computingprakashvivek990Ainda não há avaliações

- Build Your Own Distributed Compilation Cluster: A Practical WalkthroughNo EverandBuild Your Own Distributed Compilation Cluster: A Practical WalkthroughAinda não há avaliações

- Parallel and Distributed Computing AssignmentDocumento5 páginasParallel and Distributed Computing AssignmentUmerAinda não há avaliações

- Unit 7 - Parallel Processing ParadigmDocumento26 páginasUnit 7 - Parallel Processing ParadigmMUHMMAD ZAID KURESHIAinda não há avaliações

- Operating Systems Interview Questions You'll Most Likely Be AskedNo EverandOperating Systems Interview Questions You'll Most Likely Be AskedAinda não há avaliações

- Unit - IDocumento20 páginasUnit - Isas100% (1)

- PG Cloud Computing Unit IIDocumento52 páginasPG Cloud Computing Unit IIdeepthi sanjeevAinda não há avaliações

- Unit VI Parallel Programming ConceptsDocumento90 páginasUnit VI Parallel Programming ConceptsPrathameshAinda não há avaliações

- IntroductionDocumento34 páginasIntroductionjosephboyong542Ainda não há avaliações

- Introduction To: Parallel DistributedDocumento32 páginasIntroduction To: Parallel Distributedaliha ghaffarAinda não há avaliações

- (HPC) PratikDocumento8 páginas(HPC) Pratik60Abhishek KalpavrukshaAinda não há avaliações

- Parallel ComputingDocumento28 páginasParallel ComputingGica SelyAinda não há avaliações

- PD Computing Introduction. Why Use PDCDocumento31 páginasPD Computing Introduction. Why Use PDCmohsanhussain230Ainda não há avaliações

- Watercolor Organic Shapes SlidesManiaDocumento23 páginasWatercolor Organic Shapes SlidesManiaAyush kumarAinda não há avaliações

- CA Classes-251-255Documento5 páginasCA Classes-251-255SrinivasaRaoAinda não há avaliações

- Bit-Level ParallelismDocumento3 páginasBit-Level ParallelismSHAMEEK PATHAKAinda não há avaliações

- Unit 1 NotesDocumento31 páginasUnit 1 NotesRanjith SKAinda não há avaliações

- PDC - Lecture - No. 2Documento31 páginasPDC - Lecture - No. 2nauman tariqAinda não há avaliações

- Parallelism in Computer ArchitectureDocumento27 páginasParallelism in Computer ArchitectureKumarAinda não há avaliações

- Mpi CourseDocumento202 páginasMpi CourseKiarie Jimmy NjugunahAinda não há avaliações

- DistributedcompDocumento13 páginasDistributedcompdiplomaincomputerengineeringgrAinda não há avaliações

- Parallel ProcessingDocumento31 páginasParallel ProcessingSantosh SinghAinda não há avaliações

- Introduction To Parallel ComputingDocumento2 páginasIntroduction To Parallel ComputingAnilRangaAinda não há avaliações

- Unit1 RMD PDFDocumento27 páginasUnit1 RMD PDFMonikaAinda não há avaliações

- Multicore ComputersDocumento18 páginasMulticore ComputersMikias YimerAinda não há avaliações

- Computer System Architecture: Pamantasan NG CabuyaoDocumento12 páginasComputer System Architecture: Pamantasan NG CabuyaoBien MedinaAinda não há avaliações

- Multicore ComputersDocumento21 páginasMulticore Computersmikiasyimer7362Ainda não há avaliações

- M M M M: M M M M M MDocumento28 páginasM M M M: M M M M M MAishwarya Pratap SinghAinda não há avaliações

- 1.1 ParallelismDocumento29 páginas1.1 ParallelismVinay MishraAinda não há avaliações

- MSc Parallel ProcessingDocumento9 páginasMSc Parallel ProcessingZaidBAinda não há avaliações

- HPC NotesDocumento24 páginasHPC NotesShadowOPAinda não há avaliações

- Cloud ComputingDocumento27 páginasCloud ComputingmanojAinda não há avaliações

- ACA Assignment 4Documento16 páginasACA Assignment 4shresth choudharyAinda não há avaliações

- Practical File: Parallel ComputingDocumento34 páginasPractical File: Parallel ComputingGAMING WITH DEAD PANAinda não há avaliações

- Lecture Week - 1 Introduction 1 - SP-24Documento51 páginasLecture Week - 1 Introduction 1 - SP-24imhafeezniaziAinda não há avaliações

- Introduction To Parallel ComputingDocumento34 páginasIntroduction To Parallel ComputingJOna Lyne0% (1)

- Practical 1P: Parallel Computing Lab Name: Dhruv Shah Roll - No:201080901 Batch: IVDocumento3 páginasPractical 1P: Parallel Computing Lab Name: Dhruv Shah Roll - No:201080901 Batch: IVSAMINA ATTARIAinda não há avaliações

- Parallel Computing Seminar ReportDocumento35 páginasParallel Computing Seminar ReportAmeya Waghmare100% (3)

- CS0051 - Module 01Documento52 páginasCS0051 - Module 01Ron BayaniAinda não há avaliações

- COMPUTER ARCHITECTURE AND ORGANIZATIONDocumento124 páginasCOMPUTER ARCHITECTURE AND ORGANIZATIONravidownloadingAinda não há avaliações

- HPC Lectures 1 5Documento18 páginasHPC Lectures 1 5mohamed samyAinda não há avaliações

- Introduction To Parallel and Distributed ComputingDocumento29 páginasIntroduction To Parallel and Distributed ComputingBerto ZerimarAinda não há avaliações

- Dca6105 - Computer ArchitectureDocumento6 páginasDca6105 - Computer Architectureanshika mahajanAinda não há avaliações

- NTSD-ass3Documento3 páginasNTSD-ass3Md. Ziaul Haque ShiponAinda não há avaliações

- Java ConcurrencyDocumento24 páginasJava ConcurrencyKarthik BaskaranAinda não há avaliações

- 2-2-Parallel-Distributed ComputingDocumento2 páginas2-2-Parallel-Distributed ComputingJathin DarsiAinda não há avaliações

- Lecture Week - 2 General Parallelism TermsDocumento24 páginasLecture Week - 2 General Parallelism TermsimhafeezniaziAinda não há avaliações

- Distributed ComputingDocumento26 páginasDistributed ComputingShams IslammAinda não há avaliações

- Parallel Computig AssignmentDocumento15 páginasParallel Computig AssignmentAnkitmauryaAinda não há avaliações

- Parallel Computing: Charles KoelbelDocumento12 páginasParallel Computing: Charles KoelbelSilvio DresserAinda não há avaliações

- Lecture 10Documento34 páginasLecture 10MAIMONA KHALIDAinda não há avaliações

- Making WIMBoot Image (Windows 8.1 and 10)Documento5 páginasMaking WIMBoot Image (Windows 8.1 and 10)Rhey Jen Marcelo Crusem0% (1)

- Sis 5582Documento222 páginasSis 5582Luis HerreraAinda não há avaliações

- HardwareDocumento4 páginasHardwareJulio MachadoAinda não há avaliações

- Access 2013 Beginner Level 1 Adc PDFDocumento99 páginasAccess 2013 Beginner Level 1 Adc PDFsanjanAinda não há avaliações

- CSC 5301 - Paper - DB & DW SecurityDocumento17 páginasCSC 5301 - Paper - DB & DW SecurityMohamedAinda não há avaliações

- Introduction To HCIDocumento47 páginasIntroduction To HCICHaz LinaAinda não há avaliações

- Clickatell SMTPDocumento29 páginasClickatell SMTPmnsy2005Ainda não há avaliações

- A Use Case Framework For Intelligence Driven Security Operations Centers FriDocumento27 páginasA Use Case Framework For Intelligence Driven Security Operations Centers FriehaunterAinda não há avaliações

- Convergence of Technologies: A. Multiple Choice QuestionsDocumento6 páginasConvergence of Technologies: A. Multiple Choice QuestionsBeena MathewAinda não há avaliações

- System Information ReportDocumento31 páginasSystem Information Reportjhosimar mAinda não há avaliações

- SDIO Reference ManualDocumento30 páginasSDIO Reference ManualAbdul RahmanAinda não há avaliações

- Auto HFM Flyer 2017Documento8 páginasAuto HFM Flyer 2017Nikhil KumarAinda não há avaliações

- New Ms Office Book 2007Documento117 páginasNew Ms Office Book 2007Amsa VeniAinda não há avaliações

- 220AQQ3C0Documento95 páginas220AQQ3C0jan_hraskoAinda não há avaliações

- Assignment 4 SBI3013 - Adobe PhotoshopDocumento13 páginasAssignment 4 SBI3013 - Adobe Photoshopojie folorennahAinda não há avaliações

- ADL 5G Deployment ModelsDocumento26 páginasADL 5G Deployment ModelsRaphael M MupetaAinda não há avaliações

- Basic Mpls VPN LabDocumento2 páginasBasic Mpls VPN LabcarolatuAinda não há avaliações

- Rutter VDR-100 G2 User ManualDocumento62 páginasRutter VDR-100 G2 User ManualkaporaluAinda não há avaliações

- Gencomm Control KeysDocumento4 páginasGencomm Control Keyskazishah100% (1)

- Top 10 Business Intelligence Features for BuyersDocumento23 páginasTop 10 Business Intelligence Features for BuyersAhmed YaichAinda não há avaliações

- F77 - Service ManualDocumento120 páginasF77 - Service ManualStas MAinda não há avaliações

- ESP8266 Control Servo Node RED MQTT Mosquitto IoT PDFDocumento5 páginasESP8266 Control Servo Node RED MQTT Mosquitto IoT PDFwahyuAinda não há avaliações

- 8086 Third Term TopicsDocumento46 páginas8086 Third Term TopicsgandharvsikriAinda não há avaliações

- Zentyal As A Gateway - The Perfect SetupDocumento8 páginasZentyal As A Gateway - The Perfect SetupmatAinda não há avaliações

- Chapter 1 DBMSDocumento32 páginasChapter 1 DBMSAishwarya Pandey100% (1)

- User Manual E2533DB/E2533DG DIGITAL Terrestrial Series: SupportDocumento59 páginasUser Manual E2533DB/E2533DG DIGITAL Terrestrial Series: SupportGermán LászlóAinda não há avaliações

- SET-103. RFID Based Security Access Control SystemDocumento3 páginasSET-103. RFID Based Security Access Control SystemShweta dilip Jagtap.Ainda não há avaliações

- HTTP Security Headers with Nginx: Protect Your Site with HSTS, CSP and MoreDocumento8 páginasHTTP Security Headers with Nginx: Protect Your Site with HSTS, CSP and Morecreative.ayanAinda não há avaliações

- DualCell HSDPADocumento29 páginasDualCell HSDPASABER1980Ainda não há avaliações

- Chip War: The Quest to Dominate the World's Most Critical TechnologyNo EverandChip War: The Quest to Dominate the World's Most Critical TechnologyNota: 4.5 de 5 estrelas4.5/5 (227)

- CompTIA A+ Complete Review Guide: Exam Core 1 220-1001 and Exam Core 2 220-1002No EverandCompTIA A+ Complete Review Guide: Exam Core 1 220-1001 and Exam Core 2 220-1002Nota: 5 de 5 estrelas5/5 (1)

- Chip War: The Fight for the World's Most Critical TechnologyNo EverandChip War: The Fight for the World's Most Critical TechnologyNota: 4.5 de 5 estrelas4.5/5 (82)

- 8051 Microcontroller: An Applications Based IntroductionNo Everand8051 Microcontroller: An Applications Based IntroductionNota: 5 de 5 estrelas5/5 (6)

- CompTIA A+ Complete Review Guide: Core 1 Exam 220-1101 and Core 2 Exam 220-1102No EverandCompTIA A+ Complete Review Guide: Core 1 Exam 220-1101 and Core 2 Exam 220-1102Nota: 5 de 5 estrelas5/5 (2)

- Amazon Web Services (AWS) Interview Questions and AnswersNo EverandAmazon Web Services (AWS) Interview Questions and AnswersNota: 4.5 de 5 estrelas4.5/5 (3)

- iPhone X Hacks, Tips and Tricks: Discover 101 Awesome Tips and Tricks for iPhone XS, XS Max and iPhone XNo EverandiPhone X Hacks, Tips and Tricks: Discover 101 Awesome Tips and Tricks for iPhone XS, XS Max and iPhone XNota: 3 de 5 estrelas3/5 (2)

- Model-based System and Architecture Engineering with the Arcadia MethodNo EverandModel-based System and Architecture Engineering with the Arcadia MethodAinda não há avaliações

- Dancing with Qubits: How quantum computing works and how it can change the worldNo EverandDancing with Qubits: How quantum computing works and how it can change the worldNota: 5 de 5 estrelas5/5 (1)

- Creative Selection: Inside Apple's Design Process During the Golden Age of Steve JobsNo EverandCreative Selection: Inside Apple's Design Process During the Golden Age of Steve JobsNota: 4.5 de 5 estrelas4.5/5 (49)

- 2018 (40+) Best Free Apps for Kindle Fire Tablets: +Simple Step-by-Step Guide For New Kindle Fire UsersNo Everand2018 (40+) Best Free Apps for Kindle Fire Tablets: +Simple Step-by-Step Guide For New Kindle Fire UsersAinda não há avaliações

- Hacking With Linux 2020:A Complete Beginners Guide to the World of Hacking Using Linux - Explore the Methods and Tools of Ethical Hacking with LinuxNo EverandHacking With Linux 2020:A Complete Beginners Guide to the World of Hacking Using Linux - Explore the Methods and Tools of Ethical Hacking with LinuxAinda não há avaliações

- From Cell Phones to VOIP: The Evolution of Communication Technology - Technology Books | Children's Reference & NonfictionNo EverandFrom Cell Phones to VOIP: The Evolution of Communication Technology - Technology Books | Children's Reference & NonfictionAinda não há avaliações

- Electronic Dreams: How 1980s Britain Learned to Love the ComputerNo EverandElectronic Dreams: How 1980s Britain Learned to Love the ComputerNota: 5 de 5 estrelas5/5 (1)

- Cancer and EMF Radiation: How to Protect Yourself from the Silent Carcinogen of ElectropollutionNo EverandCancer and EMF Radiation: How to Protect Yourself from the Silent Carcinogen of ElectropollutionNota: 5 de 5 estrelas5/5 (2)

- The No Bull$#!£ Guide to Building Your Own PC: No Bull GuidesNo EverandThe No Bull$#!£ Guide to Building Your Own PC: No Bull GuidesAinda não há avaliações