Escolar Documentos

Profissional Documentos

Cultura Documentos

Review of Probability and Statistics

Enviado por

Yahya KhurshidTítulo original

Direitos autorais

Formatos disponíveis

Compartilhar este documento

Compartilhar ou incorporar documento

Você considera este documento útil?

Este conteúdo é inapropriado?

Denunciar este documentoDireitos autorais:

Formatos disponíveis

Review of Probability and Statistics

Enviado por

Yahya KhurshidDireitos autorais:

Formatos disponíveis

1

Review of Probability and

Statistics

2

Random Variables

X is a random variable if it represents a random

draw from some population

a discrete random variable can take on only

selected values (usually Integers)

a continuous random variable can take on any

value in a real interval

associated with each random variable is a

probability distribution

3

Random Variables Examples

the outcome of a fair coin toss a discrete

random variable with P(Head)= 0.5 and

P(Tail)= 0.5

the height of a selected student a

continuous random variable drawn from an

approximately normal distribution

4

Expected Value of X E(X)

The expected value is really just a

probability weighted average of X

E(X) is the mean of the distribution of X,

denoted by

x

Let f(x

i

) be the probability that X=x

i

, then

=

= =

n

i

i i X

x f x X E

1

) ( ) (

5

Variance of X Var(X)

The variance of X is a measure of the

dispersion or spread of the distribution

Var(X) is the expected value of the squared

deviations from the mean, so

( ) | |

2

2

) (

X X

X E X Var o = =

6

More on Variance

The square root of Var(X) is the standard

deviation of X

Var(X) can alternatively be written in terms

of a weighted sum of squared deviations,

because

( ) | | ( ) ( )

=

i X i X

x f x X E

2 2

7

Covariance Cov(X,Y)

Covariance between X and Y is a measure

of the association between two random

variables, X & Y

If positive, then both move up or down

together

If negative, then if X is high, Y is low, vice

versa

( )( ) | |

Y X XY

Y X E Y X Cov o = = ) , (

8

Correlation Between X and Y

Covariance is dependent upon the units of

X & Y [Cov(aX,bY)=abCov(X,Y)]

Correlation, Corr(X,Y), scales covariance

by the standard deviations of X & Y so that

it lies between -1 & 1

| |

2

1

) ( ) (

) , (

Y Var X Var

Y X Cov

Y X

XY

XY

= =

o o

o

9

More on Correlation & Covariance

If o

X,Y

=0 (or equivalently

X,Y

=0) then X

and Y are linearly unrelated

If

X,Y

= 1 then X and Y are said to be

perfectly positively correlated

If

X,Y

= 1 then X and Y are said to be

perfectly negatively correlated

Corr(aX,bY) = Corr(X,Y) if ab>0

Corr(aX,bY) = Corr(X,Y) if ab<0

10

Properties of Expectations

E(a)=a, Var(a)=0

E(

X

)=

X

, i.e. E(E(X))=E(X)

E(aX+b)=aE(X)+b

E(X+Y)=E(X)+E(Y)

E(X-Y)=E(X)-E(Y)

E(X-

X

)=0 or E(X-E(X))=0

E((aX)

2

)=a

2

E(X

2

)

11

More Properties

Var(X) = E(X

2

)

x

2

Var(aX+b) = a

2

Var(X)

Var(X+Y) = Var(X) +Var(Y) +2Cov(X,Y)

Var(X-Y) = Var(X) +Var(Y) - 2Cov(X,Y)

Cov(X,Y) = E(XY)-

x

y

If (and only if) X,Y independent, then

Var(X+Y)=Var(X)+Var(Y), E(XY)=E(X)E(Y)

12

The Normal Distribution

A general normal distribution, with mean

and variance o

2

is written as N(, o

2

)

It has the following probability density

function (pdf)

2

2

2

) (

2

1

) (

o

t o

=

x

e x f

13

The Standard Normal

Any random variable can be standardized by

subtracting the mean, , and dividing by the

standard deviation, o

( )

2

2

2

1

z

e z

=

t

|

X

X

X

Z

o

=

E(Z)=0, Var(Z)=1

Thus, the standard normal, N(0,1), has pdf

14

Properties of the Normal Distn

If X~N(,o

2

), then aX+b ~N(a+b,a

2

o

2

)

A linear combination of independent,

identically distributed (iid) normal random

variables will also be normally distributed

If Y

1

,Y

2

, Y

n

are iid and ~N(,o

2

), then

|

|

.

|

\

|

n

, N ~

2

o

Y

15

Cumulative Distribution Function

For a pdf, f(x), where f(x) is P(X = x), the

cumulative distribution function (cdf), F(x),

is P(X s x); P(X > x) = 1 F(x) =P(X< x)

For the standard normal, |(z), the cdf is

u(z)= P(Z<z), so

P(|Z|>a) = 2P(Z>a) = 2[1-u(a)]

P(a sZ sb) = u(b) u(a)

16

Random Samples and Sampling

For a random variable Y, repeated draws

from the same population can be labeled as

Y

1

, Y

2

, . . . , Y

n

If every combination of n sample points

has an equal chance of being selected, this

is a random sample

A random sample is a set of independent,

identically distributed (i.i.d) random

variables

17

Estimators as Random Variables

Each of our sample statistics (e.g. the

sample mean, sample variance, etc.) is a

random variable - Why?

Each time we pull a random sample, well

get different sample statistics

If we pull lots and lots of samples, well get

a distribution of sample statistics

18

Sampling Distributions

The Estimators from Samples (like Sample

Mean, Sample Variance etc) are themselves

Random Variables and their distributions

are termed as Sampling Distributions.

These include:

Chi Square Distribution

t-Distribution

F-Distribution

19

The Chi-Square Distribution

Suppose that Z

i

, i=1,,n are iid ~ N(0,1),

and X=(Z

i

2

), then

X has a chi-square distribution with n

degrees of freedom (dof), that is

X~_

2

n

If X~_

2

n

, then E(X)=n and Var(X)=2n

20

The t distribution

If a random variable, T, has a t distribution with n

degrees of freedom, then it is denoted as T~t

n

E(T)=0 (for n>1) and Var(T)=n/(n-2) (for n>2)

T is a function of Z~N(0,1) and X~_

2

n

as follows:

n

X

Z

T =

21

The F Distribution

If a random variable, F, has an F distribution with

(k

1

,k

2

) dof, then it is denoted as F~F

k1,k2

F is a function of X

1

~_

2

k1

and X

2

~_

2

k2

as follows:

|

.

|

\

|

|

.

|

\

|

=

2

2

1

1

k

X

k

X

F

22

Estimators and Estimates

Typically, we cant observe the full population, so

we must make inferences based on estimates from

a random sample

An estimator is a mathematical formula for

estimating a population parameter from sample

data

An estimate is the actual number (numerical

value) the formula produces from the sample data

23

Examples of Estimators

Suppose we want to estimate the population mean

Suppose we use the formula for E(Y), but

substitute 1/n for f(y

i

) as the probability weight

since each point has an equal chance of being

included in the sample (sample being random),

then

Can calculate the sample average for our sample:

=

=

n

i

i

Y

n

Y

1

1

24

What Makes a Good Estimator?

Unbiasedness

Efficiency

Mean Square Error (MSE)

Asymptotic properties (for large samples):

Consistency

25

Unbiasedness of Estimators

We want our estimator to be right, on average

We say an estimator, W, of a Population

Parameter, u, is unbiased if E(W)=E(u)

For our example, that means we want

Y

Y E = ) (

26

Proof: Sample Mean is Unbiased

Y Y

n

i

Y

n

i

i

n

i

i

n

n n

Y E

n

Y

n

E Y E

= = =

= |

.

|

\

|

=

=

= =

1 1

) (

1 1

) (

1

1 1

27

Example

Population

Person Age (Years)

A 40

B 42

C 44

D 50

E 65

Take a sample of size four

28

Population and Sample Statistics

Population Possible Samples of Size 4

Person Age ABCD ABCE ABDE ACDE BCDE

A 40 40 40 40 40

B 42 42 42 42 42

C 44 44 44 44 44

D 50 50 50 50 50

E 65 65 65 65 65

Mean 48.2 44 47.8 49.3 49.8 50.3

Variance 102 18.7 135 129 120 108

Std Dev 10.1 4.32 11.6 11.4 11 10.4

29

Unbiasedness

Mean of the Sample Averages =

(44+47.8+49.3+49.8+50.3)/5 = 48.2

Mean of the Sample Variances =

(18.7+135+129+120+108)/5 = 102

Mean of the Sample Std Devs =

(4.32+11.6+11.4+11+10.4)/5 = 9.73

30

Efficiency of Estimator

Want our estimator to be closer to the truth,

on average, than any other estimator

We say an estimator, W, is efficient if

Var(W)< Var( any other estimator)

Note, for our example

n n

Y

n

Var Y Var

n

i

n

i

i

2

1

2

2

1

1 1

) (

o

o = = |

.

|

\

|

=

= =

31

MSE of Estimator

What if we cant find an unbiased

estimator?

Define mean square error as E[(W-u)

2

]

Get trade off between unbiasedness and

efficiency, since MSE = variance + bias

2

For our example, it means minimizing

( ) | | ( ) ( ) ( )

2 2

Y Y

Y E Y Var Y E + =

32

Consistency of Estimator

Asymptotic property, that is, what happens

as the sample size goes to infinity?

Want distribution of W to converge to u,

i.e. plim(W)=u

For our example, it means we want

( ) > n Y P

Y

as 0 c

33

Central Limit Theorem

Asymptotic Normality implies that P(Z<z)u(z)

as n , or P(Z<z)~ u(z)

The central limit theorem states that the

standardized average of any population with mean

and variance o

2

is asymptotically ~N(0,1), or

( ) 1 , 0 ~ N

n

Y

Z

a

Y

o

=

34

Estimate of Population Variance

We have a good estimate of

Y

, would like

a good estimate of o

2

Y

Can use the sample variance given below

note division by n-1, not n, since mean is

estimated too if know can use n

( )

2

1

2

1

1

=

n

i

i

Y Y

n

S

Você também pode gostar

- Statistics Boot Camp: X F X X E DX X XF X E Important Properties of The Expectations OperatorDocumento3 páginasStatistics Boot Camp: X F X X E DX X XF X E Important Properties of The Expectations OperatorHerard NapuecasAinda não há avaliações

- F (A) P (X A) : Var (X) 0 If and Only If X Is A Constant Var (X) Var (X+Y) Var (X) + Var (Y) Var (X-Y)Documento8 páginasF (A) P (X A) : Var (X) 0 If and Only If X Is A Constant Var (X) Var (X+Y) Var (X) + Var (Y) Var (X-Y)PatriciaAinda não há avaliações

- ECONOMETRICS BASICSDocumento61 páginasECONOMETRICS BASICSmaxAinda não há avaliações

- Random Vectors:: A Random Vector Is A Column Vector Whose Elements Are Random VariablesDocumento7 páginasRandom Vectors:: A Random Vector Is A Column Vector Whose Elements Are Random VariablesPatrick MugoAinda não há avaliações

- MultivariableRegression 6Documento44 páginasMultivariableRegression 6Alada manaAinda não há avaliações

- Introductory Econometrics: Solutions of Selected Exercises From Tutorial 1Documento2 páginasIntroductory Econometrics: Solutions of Selected Exercises From Tutorial 1armailgm100% (1)

- Probability Formula SheetDocumento11 páginasProbability Formula SheetJake RoosenbloomAinda não há avaliações

- Random Variables and Probability DistributionsDocumento14 páginasRandom Variables and Probability Distributionsvelkus2013Ainda não há avaliações

- Chapter 4: Probability Distributions: 4.1 Random VariablesDocumento53 páginasChapter 4: Probability Distributions: 4.1 Random VariablesGanesh Nagal100% (1)

- Probability PresentationDocumento26 páginasProbability PresentationNada KamalAinda não há avaliações

- Activity in English III CourtDocumento3 páginasActivity in English III Courtmaria alejandra pinzon murciaAinda não há avaliações

- Chap7 Random ProcessDocumento21 páginasChap7 Random ProcessSeham RaheelAinda não há avaliações

- Samenvatting Chapter 1-3 Econometrie WatsonDocumento16 páginasSamenvatting Chapter 1-3 Econometrie WatsonTess ScholtusAinda não há avaliações

- Statistics 512 Notes I D. SmallDocumento8 páginasStatistics 512 Notes I D. SmallSandeep SinghAinda não há avaliações

- 2 Random Variable 11102020 060347pmDocumento19 páginas2 Random Variable 11102020 060347pmIrfan AslamAinda não há avaliações

- Actsc 432 Review Part 1Documento7 páginasActsc 432 Review Part 1osiccorAinda não há avaliações

- Basics of StatisticsDocumento16 páginasBasics of StatisticsSolaiman Sikder 2125149690Ainda não há avaliações

- Topic 8-Mean Square Estimation-Wiener and Kalman FilteringDocumento73 páginasTopic 8-Mean Square Estimation-Wiener and Kalman FilteringHamza MahmoodAinda não há avaliações

- Chapter 3Documento6 páginasChapter 3Frendick LegaspiAinda não há avaliações

- Multiple Linear RegressionDocumento18 páginasMultiple Linear RegressionJohn DoeAinda não há avaliações

- Pattern Basics and Related OperationsDocumento21 páginasPattern Basics and Related OperationsSri VatsaanAinda não há avaliações

- Review Key Statistical ConceptsDocumento55 páginasReview Key Statistical ConceptsMaitrayaAinda não há avaliações

- Topic 4 - Sequences of Random VariablesDocumento32 páginasTopic 4 - Sequences of Random VariablesHamza MahmoodAinda não há avaliações

- Random Variable & Probability Distribution: Third WeekDocumento51 páginasRandom Variable & Probability Distribution: Third WeekBrigitta AngelinaAinda não há avaliações

- Mathematical ExpectationDocumento25 páginasMathematical Expectationctvd93100% (1)

- ISE1204 Lecture4-1Documento23 páginasISE1204 Lecture4-1tanaka gwitiraAinda não há avaliações

- Basic Statistics For LmsDocumento23 páginasBasic Statistics For Lmshaffa0% (1)

- Business Stat & Emetrics101Documento38 páginasBusiness Stat & Emetrics101kasimAinda não há avaliações

- CH 00Documento4 páginasCH 00Wai Kit LeongAinda não há avaliações

- 2 5244801349324911431 ١٠٢٨١٤Documento62 páginas2 5244801349324911431 ١٠٢٨١٤علي الملكيAinda não há avaliações

- Lecture 2Documento15 páginasLecture 2satyabashaAinda não há avaliações

- Lesson 2: Some Elements of Probability Theory and StatisticsDocumento14 páginasLesson 2: Some Elements of Probability Theory and StatisticsfidanAinda não há avaliações

- Financial Engineering & Risk Management: Review of Basic ProbabilityDocumento46 páginasFinancial Engineering & Risk Management: Review of Basic Probabilityshanky1124Ainda não há avaliações

- 11 - Queing Theory Part 1Documento31 páginas11 - Queing Theory Part 1sairamAinda não há avaliações

- Introduction Statistics Imperial College LondonDocumento474 páginasIntroduction Statistics Imperial College Londoncmtinv50% (2)

- FECO Note 1 - Review of Statistics and Estimation TechniquesDocumento13 páginasFECO Note 1 - Review of Statistics and Estimation TechniquesChinh XuanAinda não há avaliações

- MAE 300 TextbookDocumento95 páginasMAE 300 Textbookmgerges15Ainda não há avaliações

- Class6 Prep ADocumento7 páginasClass6 Prep AMariaTintashAinda não há avaliações

- Chapter 3Documento39 páginasChapter 3api-3729261Ainda não há avaliações

- 05 Discrete PDDocumento44 páginas05 Discrete PDAyushi MauryaAinda não há avaliações

- Lect1 12Documento32 páginasLect1 12Ankit SaxenaAinda não há avaliações

- 03 - Probability Distributions and EstimationDocumento66 páginas03 - Probability Distributions and EstimationayariseifallahAinda não há avaliações

- Data Analysis - Statistics-021216 - NH (Compatibility Mode)Documento8 páginasData Analysis - Statistics-021216 - NH (Compatibility Mode)Jonathan JoeAinda não há avaliações

- Regression Model AssumptionsDocumento50 páginasRegression Model AssumptionsEthiop LyricsAinda não há avaliações

- MIT LECTURE ON DISCRETE RANDOM VARIABLESDocumento18 páginasMIT LECTURE ON DISCRETE RANDOM VARIABLESSarvraj Singh RtAinda não há avaliações

- Machine Learning and Pattern Recognition ExpectationsDocumento3 páginasMachine Learning and Pattern Recognition ExpectationszeliawillscumbergAinda não há avaliações

- Probability and Statistics: A Sample Analogues Approach: Charlie Gibbons Economics 140 University of California, BerkeleyDocumento44 páginasProbability and Statistics: A Sample Analogues Approach: Charlie Gibbons Economics 140 University of California, BerkeleyChristopher GianAinda não há avaliações

- Likelihood, Bayesian, and Decision TheoryDocumento50 páginasLikelihood, Bayesian, and Decision TheorymuralidharanAinda não há avaliações

- The Simple Regression Model (2 Variable Model) : Empirical Economics 26163 Handout 1Documento9 páginasThe Simple Regression Model (2 Variable Model) : Empirical Economics 26163 Handout 1Catalin ParvuAinda não há avaliações

- Specialist12 2ed Ch14Documento38 páginasSpecialist12 2ed Ch14samcarn69Ainda não há avaliações

- Book DownDocumento17 páginasBook DownProf. Madya Dr. Umar Yusuf MadakiAinda não há avaliações

- Random Variables: Prof. Megha SharmaDocumento35 páginasRandom Variables: Prof. Megha SharmaAnil SoniAinda não há avaliações

- Introduction To Statistics and Data Analysis - 5Documento26 páginasIntroduction To Statistics and Data Analysis - 5DanielaPulidoLunaAinda não há avaliações

- The Law of Iterated ExpectationsDocumento3 páginasThe Law of Iterated Expectationsemiliortiz1Ainda não há avaliações

- Probability DistributionsDocumento79 páginasProbability DistributionsGeleni Shalaine BelloAinda não há avaliações

- Math Supplement PDFDocumento17 páginasMath Supplement PDFRajat GargAinda não há avaliações

- Mathematics 1St First Order Linear Differential Equations 2Nd Second Order Linear Differential Equations Laplace Fourier Bessel MathematicsNo EverandMathematics 1St First Order Linear Differential Equations 2Nd Second Order Linear Differential Equations Laplace Fourier Bessel MathematicsAinda não há avaliações

- Radically Elementary Probability Theory. (AM-117), Volume 117No EverandRadically Elementary Probability Theory. (AM-117), Volume 117Nota: 4 de 5 estrelas4/5 (2)

- ProE FundamentalsDocumento1.100 páginasProE Fundamentalsapi-3833671100% (9)

- Tibial Component Positioning in Total Knee Arthroplasty: Bone Coverage and Extensor Apparatus AlignmentDocumento7 páginasTibial Component Positioning in Total Knee Arthroplasty: Bone Coverage and Extensor Apparatus AlignmentYahya KhurshidAinda não há avaliações

- Sugar Production From Cane PDFDocumento4 páginasSugar Production From Cane PDFthesapphirefalconAinda não há avaliações

- Self FinanceDocumento44 páginasSelf FinanceYahya KhurshidAinda não há avaliações

- WaqasDocumento11 páginasWaqasMuhammad WaqasAinda não há avaliações

- Departmentalization 1Documento2 páginasDepartmentalization 1Yahya KhurshidAinda não há avaliações

- Alignment of Railway Track Nptel PDFDocumento18 páginasAlignment of Railway Track Nptel PDFAshutosh MauryaAinda não há avaliações

- Template WFP-Expenditure Form 2024Documento22 páginasTemplate WFP-Expenditure Form 2024Joey Simba Jr.Ainda não há avaliações

- Ofper 1 Application For Seagoing AppointmentDocumento4 páginasOfper 1 Application For Seagoing AppointmentNarayana ReddyAinda não há avaliações

- Hydraulics Engineering Course OverviewDocumento35 páginasHydraulics Engineering Course Overviewahmad akramAinda não há avaliações

- Master SEODocumento8 páginasMaster SEOOkane MochiAinda não há avaliações

- WWW - Commonsensemedia - OrgDocumento3 páginasWWW - Commonsensemedia - Orgkbeik001Ainda não há avaliações

- Hi-Line Sportsmen Banquet Is February 23rd: A Chip Off The Ol' Puck!Documento8 páginasHi-Line Sportsmen Banquet Is February 23rd: A Chip Off The Ol' Puck!BS Central, Inc. "The Buzz"Ainda não há avaliações

- EXPERIMENT 4 FlowchartDocumento3 páginasEXPERIMENT 4 FlowchartTRISHA PACLEBAinda não há avaliações

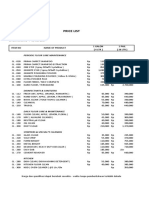

- Price List PPM TerbaruDocumento7 páginasPrice List PPM TerbaruAvip HidayatAinda não há avaliações

- Technical Specification of Heat Pumps ElectroluxDocumento9 páginasTechnical Specification of Heat Pumps ElectroluxAnonymous LDJnXeAinda não há avaliações

- ArDocumento26 páginasArSegunda ManoAinda não há avaliações

- Beauty ProductDocumento12 páginasBeauty ProductSrishti SoniAinda não há avaliações

- Excess AirDocumento10 páginasExcess AirjkaunoAinda não há avaliações

- Done - NSTP 2 SyllabusDocumento9 páginasDone - NSTP 2 SyllabusJoseph MazoAinda não há avaliações

- Paper 4 (A) (I) IGCSE Biology (Time - 30 Mins)Documento12 páginasPaper 4 (A) (I) IGCSE Biology (Time - 30 Mins)Hisham AlEnaiziAinda não há avaliações

- SEC QPP Coop TrainingDocumento62 páginasSEC QPP Coop TrainingAbdalelah BagajateAinda não há avaliações

- Inborn Errors of Metabolism in Infancy: A Guide To DiagnosisDocumento11 páginasInborn Errors of Metabolism in Infancy: A Guide To DiagnosisEdu Diaperlover São PauloAinda não há avaliações

- WindSonic GPA Manual Issue 20Documento31 páginasWindSonic GPA Manual Issue 20stuartAinda não há avaliações

- Draft SemestralWorK Aircraft2Documento7 páginasDraft SemestralWorK Aircraft2Filip SkultetyAinda não há avaliações

- PeopleSoft Security TablesDocumento8 páginasPeopleSoft Security TablesChhavibhasinAinda não há avaliações

- BenchmarkDocumento4 páginasBenchmarkKiran KumarAinda não há avaliações

- Copula and Multivariate Dependencies: Eric MarsdenDocumento48 páginasCopula and Multivariate Dependencies: Eric MarsdenJeampierr Jiménez CheroAinda não há avaliações

- Bio310 Summary 1-5Documento22 páginasBio310 Summary 1-5Syafiqah ArdillaAinda não há avaliações

- UD150L-40E Ope M501-E053GDocumento164 páginasUD150L-40E Ope M501-E053GMahmoud Mady100% (3)

- Mil STD 2154Documento44 páginasMil STD 2154Muh SubhanAinda não há avaliações

- Service Manual: Precision SeriesDocumento32 páginasService Manual: Precision SeriesMoises ShenteAinda não há avaliações

- Mutual Fund PDFDocumento22 páginasMutual Fund PDFRajAinda não há avaliações

- Meet Joe Black (1998) : A Metaphor of LifeDocumento10 páginasMeet Joe Black (1998) : A Metaphor of LifeSara OrsenoAinda não há avaliações

- N4 Electrotechnics August 2021 MemorandumDocumento8 páginasN4 Electrotechnics August 2021 MemorandumPetro Susan BarnardAinda não há avaliações