Data Mining and Its Application and Usage in Medicine: by Radhika

1

CSE

300

Data mining and its application and usage in medicine

By Radhika


Data Mining and Medicine

History

Past 20 years with relational databases

More dimensions to database queries

Earliest and most successful area of data mining

Mid-1800s: London hit by infectious disease

Two theories

Miasma theory: bad air propagated disease

Germ theory: water-borne

Advantages

Discover trends even when we don't understand the reasons

Discover irrelevant patterns that confuse rather than enlighten

Protection against unaided human inference of patterns: provides quantifiable measures and aids human judgment

Data Mining

Patterns persistent and meaningful

Knowledge Discovery in Databases (KDD)


The future of data mining

10 biggest killers in the US

Data mining = process of discovering interesting, meaningful and actionable patterns hidden in large amounts of data


Major Issues in Medical Data Mining

Heterogeneity of medical data

Volume and complexity

Physician's interpretation

Poor mathematical categorization

Canonical Form

Solution: standard vocabularies, interfaces between different sources of data integration, design of electronic patient records

Ethical, Legal and Social Issues

Data Ownership

Lawsuits

Privacy and Security of Human Data

Expected benefits

Administrative Issues


Why Data Preprocessing?

Patient records consist of clinical and lab parameters and results of particular investigations, specific to tasks

Incomplete: lacking attribute values, lacking certain attributes of interest, or containing only aggregate data

Noisy: containing errors or outliers

Inconsistent: containing discrepancies in codes or names

Temporal: chronic disease parameters

No quality data, no quality mining results!

Data warehouse needs consistent integration of quality data

Medical domain: handling incomplete, inconsistent or noisy data needs people with domain knowledge


What is Data Mining? The KDD Process

[Figure: the KDD process. Databases → Data Cleaning and Data Integration → Data Warehouse → Selection → Task-relevant Data → Data Mining → Pattern Evaluation]


From Tables and Spreadsheets to Data Cubes

A data warehouse is based on a multidimensional data model that views data in the form of a data cube

A data cube, such as sales, allows data to be modeled and viewed in multiple dimensions

Dimension tables, such as item (item_name, brand, type), or time (day, week, month, quarter, year)

Fact table contains measures (such as dollars_sold) and keys to each of the related dimension tables

W. H. Inmon: "A data warehouse is a subject-oriented, integrated, time-variant, and nonvolatile collection of data in support of management's decision-making process."


Data Warehouse vs. Heterogeneous DBMS

Data warehouse: update-driven, high performance

Information from heterogeneous sources is integrated in advance and stored in warehouses for direct query and analysis

Do not contain most current information

Query processing does not interfere with processing at local sources

Store and integrate historical information

Support complex multidimensional queries


Data Warehouse vs. Operational DBMS

OLTP (on-line transaction processing)

Major task of traditional relational DBMS

Day-to-day operations: purchasing, inventory, banking, manufacturing, payroll, registration, accounting, etc.

OLAP (on-line analytical processing)

Major task of data warehouse system

Data analysis and decision making

Distinct features (OLTP vs. OLAP):

User and system orientation: customer vs. market

Data contents: current, detailed vs. historical, consolidated

Database design: ER + application vs. star + subject

View: current, local vs. evolutionary, integrated

Access patterns: update vs. read-only but complex queries


Why Separate Data Warehouse?

High performance for both systems

DBMS tuned for OLTP: access methods, indexing, concurrency control, recovery

Warehouse tuned for OLAP: complex OLAP queries, multidimensional view, consolidation

Different functions and different data:

Missing data: decision support requires historical data which operational DBs do not typically maintain

Data consolidation: decision support requires consolidation (aggregation, summarization) of data from heterogeneous sources

Data quality: different sources typically use inconsistent data representations, codes and formats which have to be reconciled


Typical OLAP Operations

Roll up (drill-up): summarize data by climbing up a hierarchy or by dimension reduction

Drill down (roll down): reverse of roll-up; from higher-level summary to lower-level summary or detailed data, or introducing new dimensions

Slice and dice: project and select

Pivot (rotate): reorient the cube, visualization, 3D to series of 2D planes

Other operations

drill across: involving (across) more than one fact table

drill through: through the bottom level of the cube to its back-end relational tables (using SQL)
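The roll-up and slice operations listed above can be sketched on a tiny in-memory fact table. This is only an illustration: the rows, the column layout (quarter, month, item, dollars_sold) and the helper name `roll_up` are assumptions made up for the example, not part of any OLAP product.

```python
from collections import defaultdict

# Hypothetical fact rows: (quarter, month, item, dollars_sold)
FACTS = [
    ("Q1", "Jan", "tv", 100),
    ("Q1", "Feb", "tv", 150),
    ("Q1", "Jan", "phone", 80),
    ("Q2", "Apr", "tv", 120),
    ("Q2", "May", "phone", 60),
]


def roll_up(facts, key):
    """Sum dollars_sold over all rows that share the same grouping key."""
    totals = defaultdict(int)
    for row in facts:
        totals[key(row)] += row[3]
    return dict(totals)


# Detailed view: one cell per (month, item).
by_month_item = roll_up(FACTS, lambda r: (r[1], r[2]))

# Roll up: climb the time hierarchy (month -> quarter) and drop the item dimension.
by_quarter = roll_up(FACTS, lambda r: r[0])

# Slice: select a single value on the item dimension.
tv_slice = [r for r in FACTS if r[2] == "tv"]

print(by_quarter)
print(sum(r[3] for r in tv_slice))
```

Drill-down is the reverse direction: going from `by_quarter` back to `by_month_item` requires the detailed rows, which is why a warehouse keeps them.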


Multi-Tiered Architecture

[Figure: multi-tiered data warehouse architecture. Data Sources (operational DBs, other sources) → Extract, Transform, Load, Refresh → Data Storage (data warehouse, data marts, metadata, monitor & integrator) → OLAP Server (OLAP engine, serve) → Front-End Tools (analysis, query, reports, data mining)]


Steps of a KDD Process

Learning the application domain: relevant prior knowledge and goals of application

Creating a target data set: data selection

Data cleaning and preprocessing (may take 60% of effort!)

Data reduction and transformation: find useful features, dimensionality/variable reduction, invariant representation

Choosing functions of data mining: summarization, classification, regression, association, clustering

Choosing the mining algorithm(s)

Data mining: search for patterns of interest

Pattern evaluation and knowledge presentation: visualization, transformation, removing redundant patterns, etc.

Use of discovered knowledge


Common Techniques in Data Mining

Predictive Data Mining

Most important

Classification: relate one set of variables in data to response variables

Regression: estimate some continuous value

Descriptive Data Mining

Clustering: Discovering groups of similar instances

Association rule extraction

Variables/Observations

Summarization of group descriptions


Leukemia

Different types of cells look very similar

Given a number of samples (patients), can we diagnose the disease accurately?

Predict the outcome of treatment?

Recommend best treatment based on previous treatments?

Solution: data mining on micro-array data

38 training patients, 34 testing patients, ~7000 patient attributes

2 classes: Acute Lymphoblastic Leukemia (ALL) vs Acute Myeloid Leukemia (AML)


Clustering/Instance Based Learning

Uses specific instances to perform classification rather than general IF THEN rules

Nearest Neighbor classifier

Most studied algorithms for medical purposes

Clustering: partitioning a data set into several groups (clusters) such that

Homogeneity: objects belonging to the same cluster are similar to each other

Separation: objects belonging to different clusters are dissimilar to each other

Three elements

The set of objects

The set of attributes

Distance measure


Measure the Dissimilarity of Objects

Find best matching instance

Distance function

Measure the dissimilarity between a pair of data objects

Things to consider

Usually very different for interval-scaled, boolean, nominal, ordinal and ratio-scaled variables

Weights should be associated with different variables based on applications and data semantics

Quality of a clustering result depends on both the distance measure adopted and its implementation


Minkowski Distance

Minkowski distance: a generalization

If q = 2, d is Euclidean distance

If q = 1, d is Manhattan distance

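$$d(i, j) = \left( |x_{i1} - x_{j1}|^q + |x_{i2} - x_{j2}|^q + \cdots + |x_{ip} - x_{jp}|^q \right)^{1/q}, \quad q > 0$$

Example: for X_i = (1, 7) and X_j = (7, 1), each coordinate differs by 6, so d = 12 for q = 1 and d ≈ 8.48 for q = 2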
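As a quick check of the slide's example, the Minkowski distance can be sketched in a few lines. The function name `minkowski` is chosen here for illustration only; the two points are the ones from the slide.

```python
def minkowski(x, y, q):
    """Minkowski distance of order q > 0 between two equal-length vectors."""
    if q <= 0:
        raise ValueError("q must be positive")
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(abs(a - b) ** q for a, b in zip(x, y)) ** (1.0 / q)


x_i, x_j = (1, 7), (7, 1)
manhattan = minkowski(x_i, x_j, 1)  # |1 - 7| + |7 - 1|
euclidean = minkowski(x_i, x_j, 2)  # sqrt(6^2 + 6^2)
print(manhattan, round(euclidean, 2))
```

With q = 1 the two coordinate differences of 6 simply add up; with q = 2 they combine as the diagonal of a 6 by 6 square.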


Binary Variables

A contingency table for binary data (rows: object i; columns: object j)

         j = 1    j = 0    sum
i = 1    a        b        a + b
i = 0    c        d        c + d
sum      a + c    b + d    p

Simple matching coefficient

$$d(i, j) = \frac{b + c}{a + b + c + d}$$


Dissimilarity between Binary Variables

Example

           A1  A2  A3  A4  A5  A6  A7
Object 1    1   0   1   1   1   0   0
Object 2    1   1   1   0   0   0   1

Contingency table (rows: Object 1; columns: Object 2)

       1    0    sum
1      2    2    4
0      2    1    3
sum    4    3    7

$$d(O_1, O_2) = \frac{2 + 2}{2 + 2 + 2 + 1} = \frac{4}{7}$$
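The worked example above can be reproduced with a short sketch. The helper names `contingency` and `simple_matching_dissimilarity` are illustrative choices, and the two objects are copied from the slide.

```python
def contingency(obj_i, obj_j):
    """Count the four cells (a, b, c, d) of the 2x2 table for two binary vectors."""
    a = b = c = d = 0
    for u, v in zip(obj_i, obj_j):
        if u == 1 and v == 1:
            a += 1  # both 1
        elif u == 1 and v == 0:
            b += 1  # 1 in object i only
        elif u == 0 and v == 1:
            c += 1  # 1 in object j only
        else:
            d += 1  # both 0
    return a, b, c, d


def simple_matching_dissimilarity(obj_i, obj_j):
    """Fraction of attributes on which the two objects disagree: (b + c) / (a + b + c + d)."""
    a, b, c, d = contingency(obj_i, obj_j)
    return (b + c) / (a + b + c + d)


object_1 = [1, 0, 1, 1, 1, 0, 0]
object_2 = [1, 1, 1, 0, 0, 0, 1]
cells = contingency(object_1, object_2)
dissimilarity = simple_matching_dissimilarity(object_1, object_2)
print(cells, dissimilarity)
```

The objects disagree on A2, A4, A5 and A7, which is where the 4 in 4/7 comes from.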


K-means algorithm

Initialization

Arbitrarily choose k objects as the initial cluster centers (centroids)

Iteration until no change

For each object O_i

Calculate the distances between O_i and the k centroids

(Re)assign O_i to the cluster whose centroid is the closest to O_i

Update the cluster centroids based on current assignment
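The initialization and iteration steps above amount to k-means clustering, and can be sketched as follows. This is a minimal illustration under stated assumptions: the "arbitrary" initial centroids are simply the first k objects so that the run is reproducible, Euclidean distance is used, and the six 2-D points are made up for the example.

```python
import math


def kmeans(points, k, max_iter=100):
    """Plain k-means: assign each point to its nearest centroid, then recompute centroids."""
    # Assumption: take the first k objects as the initial centroids.
    centroids = [tuple(p) for p in points[:k]]
    assignment = None
    for _ in range(max_iter):
        # (Re)assign every object to the cluster with the closest centroid.
        new_assignment = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_assignment == assignment:
            break  # no object changed cluster: converged
        assignment = new_assignment
        # Update each centroid to the mean of its current members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return centroids, assignment


# Hypothetical data: two visually separate groups of three points.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centroids, assignment = kmeans(points, k=2)
print(assignment)
print(centroids)
```

Even though both initial centroids start inside the lower-left group, the second centroid is pulled toward the upper-right points after the first update, and the assignment stabilizes on the two groups.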


k-Means Clustering Method

[Figure: four scatter plots (both axes 0 to 10) showing k-means iterations: current clusters, cluster mean, objects relocated, new clusters]


Dataset

Data set from UCI repository: http://kdd.ics.uci.edu/

768 female Pima Indians evaluated for diabetes

After data cleaning: 392 data entries


Hierarchical Clustering

Groups observations based on dissimilarity

Compacts database into labels that represent the observations

Measure of similarity/Dissimilarity

Euclidean Distance

Manhattan Distance

Types of Clustering

Single Link

Average Link

Complete Link


Hierarchical Clustering: Comparison

[Figure: nested cluster diagrams for the same six points under single-link, complete-link, average-link and centroid distance]


Compare Dendrograms

[Figure: dendrograms of the six points under single-link, complete-link, average-link and centroid distance]


Which Distance Measure is Better?

Each method has both advantages and disadvantages; application-dependent

Single-link

Can find irregular-shaped clusters

Sensitive to outliers

Complete-link, Average-link, and Centroid distance

Robust to outliers

Tend to break large clusters

Prefer spherical clusters
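The four inter-cluster distances compared above differ only in how they summarize the pairwise distances between two clusters. A minimal sketch, assuming Euclidean distance and two small made-up clusters (the function names are illustrative):

```python
import math


def pairwise(cluster_a, cluster_b):
    """All Euclidean distances between a point of cluster_a and a point of cluster_b."""
    return [math.dist(p, q) for p in cluster_a for q in cluster_b]


def single_link(a, b):
    return min(pairwise(a, b))  # distance of the closest pair


def complete_link(a, b):
    return max(pairwise(a, b))  # distance of the farthest pair


def average_link(a, b):
    d = pairwise(a, b)
    return sum(d) / len(d)  # mean over all pairs


def centroid_distance(a, b):
    centroid = lambda pts: tuple(sum(col) / len(pts) for col in zip(*pts))
    return math.dist(centroid(a), centroid(b))


# Hypothetical clusters for illustration.
cluster_a = [(0, 0), (0, 2)]
cluster_b = [(3, 0), (5, 0)]
print(single_link(cluster_a, cluster_b))
print(complete_link(cluster_a, cluster_b))
print(average_link(cluster_a, cluster_b))
print(centroid_distance(cluster_a, cluster_b))
```

Single-link depends on one closest pair, which is why a single outlier lying between two groups can chain them together; the other three measures use the farthest pair or an average and are less affected.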


Dendrogram from dataset

Minimum spanning tree through the observations

The single observation that is last to join the cluster is a patient whose blood pressure is in the bottom quartile, skin thickness is in the bottom quartile and BMI is in the bottom half

However, her insulin was the largest and she is a 59-year-old diabetic


Dendrogram from dataset

Maximum dissimilarity between observations in one cluster when compared to another


Dendrogram from dataset

Average dissimilarity between observations in one cluster when compared to another


Supervised versus Unsupervised Learning

Supervised learning (classification)

Supervision: training data (observations, measurements, etc.) are accompanied by labels indicating the class of the observations

New data is classified based on training set

Unsupervised learning (clustering)

Class labels of training data are unknown

Given a set of measurements, observations, etc., need to establish existence of classes or clusters in data


Classification and Prediction

Derive models that can use patient-specific information to aid clinical decision making

A priori decision on predictors and variables to predict

No method to find predictors that are not present in the data

Numeric Response

Least Squares Regression

Categorical Response

Classification trees

Neural Networks

Support Vector Machine

Decision models

Prognosis, diagnosis and treatment planning

Embed in clinical information systems


Least Squares Regression

Find a linear function of predictor variables that minimizes the sum of squared differences from the response

Supervised learning technique

Predict insulin in our dataset from glucose and BMI
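A least squares fit with two predictors can be sketched by solving the normal equations directly. The numbers below are synthetic and were generated from y = 2 + 3·x1 − x2 purely so the result is easy to check; they are not values from the Pima data, and the helper names `solve` and `least_squares` are illustrative.

```python
def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def least_squares(X, y):
    """Fit y ~ b0 + b1*x1 + ... by solving the normal equations (X^T X) b = X^T y."""
    rows = [[1.0] + list(r) for r in X]  # prepend the intercept column
    p = len(rows[0])
    XtX = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    Xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(p)]
    return solve(XtX, Xty)


# Synthetic predictors (x1, x2) and response; NOT real patient data.
X = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 5)]
y = [3, 7, 7, 11, 12]
coefficients = least_squares(X, y)
print([round(b, 6) for b in coefficients])
```

In practice a numerical library would be used instead of hand-written elimination; the sketch only shows what "minimize the sum of squared differences" reduces to for a linear model.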


Decision Trees

Decision tree

Each internal node tests an attribute

Each branch corresponds to attribute value

Each leaf node assigns a classification

ID3 algorithm

Based on training objects with known class labels to classify testing objects

Rank attributes with information gain measure

Minimal height: least number of tests to classify an object

Used in commercial tools, e.g. Clementine

ASSISTANT

Deal with medical datasets

Incomplete data

Discretize continuous variables

Prune unreliable parts of tree

Classify data


Decision Trees


Algorithm for Decision Tree Induction

Basic algorithm (a greedy algorithm)

Attributes are categorical (if continuous-valued, they are discretized in advance)

Tree is constructed in a top-down recursive divide-and-conquer manner

At start, all training examples are at the root

Test attributes are selected on basis of a heuristic or statistical measure (e.g., information gain)

Examples are partitioned recursively based on selected attributes


Training Dataset

ID     Age      BMI       Hereditary   Vision       Risk of Condition X
P1     <=30     high      no           fair         no
P2     <=30     high      no           excellent    no
P3     >40      high      no           fair         yes
P4     31-40    medium    no           fair         yes
P5     31-40    low       yes          fair         yes
P6     31-40    low       yes          excellent    no
P7     >40      low       yes          excellent    yes
P8     <=30     medium    no           fair         no
P9     <=30     low       yes          fair         yes
P10    31-40    medium    yes          fair         yes
P11    <=30     medium    yes          excellent    yes
P12    >40      medium    no           excellent    yes
P13    >40      high      yes          fair         yes
P14    31-40    medium    no           excellent    no


Construction of A Decision Tree for Condition X

Age? [P1,…,P14] Yes: 9, No: 5
    <=30: [P1,P2,P8,P9,P11] Yes: 2, No: 3 → History? (the Hereditary attribute)
        no: [P1,P2,P8] Yes: 0, No: 3 → NO
        yes: [P9,P11] Yes: 2, No: 0 → YES
    31-40: [P4,P5,P6,P10,P14] Yes: 3, No: 2 → Vision?
        fair: [P4,P5,P10] Yes: 3, No: 0 → YES
        excellent: [P6,P14] Yes: 0, No: 2 → NO
    >40: [P3,P7,P12,P13] Yes: 4, No: 0 → YES


Entropy and Information Gain

S contains s_i tuples of class C_i for i = {1, ..., m}; s is the total number of tuples in S

Information measures info required to classify any arbitrary tuple

$$I(s_1, s_2, \ldots, s_m) = -\sum_{i=1}^{m} \frac{s_i}{s} \log_2 \frac{s_i}{s}$$

Entropy of attribute A with values {a_1, a_2, …, a_v}, where s_{ij} is the number of tuples of class C_i with A = a_j

$$E(A) = \sum_{j=1}^{v} \frac{s_{1j} + \cdots + s_{mj}}{s} \, I(s_{1j}, \ldots, s_{mj})$$

Information gained by branching on attribute A

$$\mathrm{Gain}(A) = I(s_1, s_2, \ldots, s_m) - E(A)$$


Entropy and Information Gain

Select attribute with the highest information gain (or greatest entropy reduction)

Such attribute minimizes information needed to classify samples
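The attribute selection rule can be checked on the 14-patient training set for Condition X shown earlier. The sketch below computes I, E(A) and Gain(A) for each attribute; the tuple layout and function names are illustrative, and the age group printed as "3140" in the extracted table is written as "31-40" here.

```python
from collections import Counter
from math import log2

# (age, bmi, hereditary, vision, risk) for P1..P14, copied from the training table.
ROWS = [
    ("<=30", "high", "no", "fair", "no"),
    ("<=30", "high", "no", "excellent", "no"),
    (">40", "high", "no", "fair", "yes"),
    ("31-40", "medium", "no", "fair", "yes"),
    ("31-40", "low", "yes", "fair", "yes"),
    ("31-40", "low", "yes", "excellent", "no"),
    (">40", "low", "yes", "excellent", "yes"),
    ("<=30", "medium", "no", "fair", "no"),
    ("<=30", "low", "yes", "fair", "yes"),
    ("31-40", "medium", "yes", "fair", "yes"),
    ("<=30", "medium", "yes", "excellent", "yes"),
    (">40", "medium", "no", "excellent", "yes"),
    (">40", "high", "yes", "fair", "yes"),
    ("31-40", "medium", "no", "excellent", "no"),
]
ATTRIBUTES = {"age": 0, "bmi": 1, "hereditary": 2, "vision": 3}


def info(labels):
    """I(s_1, ..., s_m): expected bits needed to classify a tuple with these class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def gain(rows, attr_index):
    """Gain(A) = I(all labels) - E(A), where E(A) weights the info of each partition."""
    labels = [r[-1] for r in rows]
    expected = 0.0
    for value in set(r[attr_index] for r in rows):
        subset = [r[-1] for r in rows if r[attr_index] == value]
        expected += len(subset) / len(rows) * info(subset)
    return info(labels) - expected


gains = {name: gain(ROWS, idx) for name, idx in ATTRIBUTES.items()}
best = max(gains, key=gains.get)
print(best, {name: round(g, 3) for name, g in gains.items()})
```

Age comes out with the highest gain, which agrees with Age being the root test in the tree constructed for Condition X.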


Rule Induction

IF conditions THEN Conclusion

E.g., CN2

Concept description:

Characterization: provides a concise and succinct summarization of a given collection of data

Comparison: provides descriptions comparing two or more collections of data

Training set, testing set

Imprecise

Predictive Accuracy

P/(P+N)


Example used in a Clinic

Hip arthroplasty: trauma surgeon predicts patient's long-term clinical status after surgery

Outcome evaluated during follow-ups for 2 years

2 modeling techniques

Naive Bayesian classifier

Decision trees

Bayesian classifier

P(outcome=good) = 0.55 (11/20 good)

Probability gets updated as more attributes are considered

P(timing=good|outcome=good) = 9/11 (0.818)

P(outcome=bad) = 9/20

P(timing=good|outcome=bad) = 5/9
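How the probability "gets updated" can be sketched with the four numbers on this slide. With a single attribute (timing) the naive Bayesian classifier reduces to one application of Bayes' theorem; the function name `posterior` is illustrative, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction

# Priors and likelihoods taken from the slide.
prior = {"good": Fraction(11, 20), "bad": Fraction(9, 20)}
likelihood_timing_good = {"good": Fraction(9, 11), "bad": Fraction(5, 9)}


def posterior(prior, likelihood):
    """P(class | evidence) = P(evidence | class) * P(class) / P(evidence)."""
    joint = {c: prior[c] * likelihood[c] for c in prior}
    evidence = sum(joint.values())  # P(evidence), summed over all classes
    return {c: joint[c] / evidence for c in joint}


post = posterior(prior, likelihood_timing_good)
print(post["good"], float(post["good"]))
```

Observing timing=good moves the probability of a good outcome from the prior 11/20 up to 9/14. With more attributes, a naive Bayesian classifier would multiply in one likelihood per attribute before normalizing, under its independence assumption.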


Nomogram


Bayesian Classification

Bayesian classifier vs. decision tree

Decision tree: predict the class label

Bayesian classifier: statistical classifier; predicts class membership probabilities

Based on Bayes' theorem; estimates posterior probability

Naive Bayesian classifier:

Simple classifier that assumes attribute independence

High speed when applied to large databases

Comparable in performance to decision trees


Bayes Theorem

Let X be a data sample whose class label is unknown

Let H_i be the hypothesis that X belongs to a particular class C_i

P(H_i) is the class prior probability that X belongs to a particular class C_i

Can be estimated by n_i/n from training data samples

n is the total number of training data samples

n_i is the number of training data samples of class C_i

Formula of Bayes' Theorem

$$P(H_i \mid X) = \frac{P(X \mid H_i)\, P(H_i)}{P(X)}$$


More classification Techniques

Neural Networks

Similar to pattern recognition properties of biological systems

Most frequently used

Multi-layer perceptrons

Input with bias, connected by weights to hidden, output

Backpropagation neural networks

Support Vector Machines

Separate database into mutually exclusive regions

Transform to another problem space

Kernel functions (dot product)

Output of new points predicted by position

Comparison with classification trees

Not possible to know which features or combination of features most influence a prediction


Multilayer Perceptrons

Non-linear transfer functions applied to weighted sums of inputs

Werbos algorithm

Random weights

Training set, Testing set


Support Vector Machines

3 steps

Support Vector creation

Maximal distance between points found

Perpendicular decision boundary

Allows some points to be misclassified

Pima Indian data with X1(glucose) X2(BMI)


What is Association Rule Mining?

Finding frequent patterns, associations, correlations, or causal structures among sets of items or objects in transaction databases, relational databases, and other information repositories

Example of Association Rules

{High LDL, Low HDL} → {Heart Failure}

PatientID    Conditions
1            High LDL, Low HDL, High BMI, Heart Failure
2            High LDL, Low HDL, Heart Failure, Diabetes
3            Diabetes
4            High LDL, Low HDL, Heart Failure
5            High BMI, High LDL, Low HDL, Heart Failure

People who have high LDL (bad cholesterol) and low HDL (good cholesterol) are at higher risk of heart failure.


Association Rule Mining

Market Basket Analysis

Same groups of items bought placed together

Healthcare

Understanding associations among patients with demands for similar treatments and services

Goal: find items for which joint probability of occurrence is high

Basket of binary-valued variables

Results form association rules, augmented with support and confidence


Association Rule Mining

Association Rule

An implication expression of the form X → Y, where X and Y are itemsets and X ∩ Y = ∅

Rule Evaluation Metrics

Support (s): fraction of transactions that contain both X and Y

$$s = P(X \cup Y) = \frac{\#\,\text{trans containing } (X \cup Y)}{\#\,\text{trans in } D}$$

Confidence (c): measures how often items in Y appear in transactions that contain X

$$c = P(Y \mid X) = \frac{\#\,\text{trans containing } (X \cup Y)}{\#\,\text{trans containing } X}$$

[Figure: Venn diagram over database D showing transactions containing X, transactions containing Y, and transactions containing both X and Y]
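Support and confidence can be illustrated on the five-patient example given earlier for the rule {High LDL, Low HDL} → {Heart Failure}. The sketch treats each patient's condition list as one transaction, with High LDL and Low HDL as separate items; the function names are illustrative.

```python
# Conditions per patient, copied from the earlier example table.
PATIENTS = [
    {"High LDL", "Low HDL", "High BMI", "Heart Failure"},
    {"High LDL", "Low HDL", "Heart Failure", "Diabetes"},
    {"Diabetes"},
    {"High LDL", "Low HDL", "Heart Failure"},
    {"High BMI", "High LDL", "Low HDL", "Heart Failure"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item of the itemset."""
    return sum(itemset <= t for t in transactions) / len(transactions)


def confidence(x, y, transactions):
    """How often Y appears in transactions that contain X: support(X ∪ Y) / support(X)."""
    return support(x | y, transactions) / support(x, transactions)


X = {"High LDL", "Low HDL"}
Y = {"Heart Failure"}
rule_support = support(X | Y, PATIENTS)
rule_confidence = confidence(X, Y, PATIENTS)
print(rule_support, rule_confidence)
```

Four of the five patients have all three conditions, and every patient with both cholesterol findings also has heart failure, so in this toy table the rule holds with support 0.8 and confidence 1.0.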


The Apriori Algorithm

Starts with most frequent 1-itemset

Include only those items that pass threshold

Use 1-itemset to generate 2-itemsets

Stop when threshold not satisfied by any itemset

L_1 = {frequent items};
for (k = 1; L_k ≠ ∅; k++) do
    Candidate generation: C_{k+1} = candidates generated from L_k;
    Candidate counting: for each transaction t in database do
        increment the count of all candidates in C_{k+1} that are contained in t
    L_{k+1} = candidates in C_{k+1} with min_sup
return ∪_k L_k;


Apriori-based Mining

Database D (Min_sup = 0.5)

TID    Items
10     a, c, d
20     b, c, e
30     a, b, c, e
40     b, e

Scan D: 1-candidates

Itemset    Sup
a          2
b          3
c          3
d          1
e          3

Freq 1-itemsets

Itemset    Sup
a          2
b          3
c          3
e          3

2-candidates: ab, ac, ae, bc, be, ce

Scan D: counting

Itemset    Sup
ab         1
ac         2
ae         1
bc         2
be         3
ce         2

Freq 2-itemsets

Itemset    Sup
ac         2
bc         2
be         3
ce         2

3-candidates: bce

Scan D: Freq 3-itemsets

Itemset    Sup
bce        2
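The level-wise search traced above can be sketched in Python and run on the same four-transaction database. This is a minimal illustration, not an optimized implementation: candidates are generated by joining frequent k-itemsets and pruned when any k-subset is infrequent, and the function name `apriori` and its return format (itemset → support count) are choices made for the example.

```python
from itertools import combinations


def apriori(transactions, min_sup):
    """Return {frozenset(itemset): support count} for all frequent itemsets."""
    n = len(transactions)

    def count(candidate):
        return sum(candidate <= t for t in transactions)

    # L_1: frequent single items.
    items = {i for t in transactions for i in t}
    level = {frozenset([i]): count(frozenset([i])) for i in items}
    level = {c: s for c, s in level.items() if s / n >= min_sup}
    frequent = dict(level)

    k = 1
    while level:
        prev = set(level)
        # Candidate generation: join pairs of frequent k-itemsets into (k+1)-itemsets.
        candidates = {a | b for a in prev for b in prev if len(a | b) == k + 1}
        # Prune: every k-subset of a candidate must itself be frequent.
        candidates = {
            c for c in candidates
            if all(frozenset(s) in prev for s in combinations(c, k))
        }
        # Candidate counting: one scan of the database per level.
        level = {c: count(c) for c in candidates}
        level = {c: s for c, s in level.items() if s / n >= min_sup}
        frequent.update(level)
        k += 1
    return frequent


# Database D from the slide (TIDs 10, 20, 30, 40).
D = [{"a", "c", "d"}, {"b", "c", "e"}, {"a", "b", "c", "e"}, {"b", "e"}]
frequent = apriori(D, min_sup=0.5)
for itemset, sup in sorted(frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print("".join(sorted(itemset)), sup)
```

Because of the pruning step, candidates such as abc and ace are discarded without a database scan (ab and ae are not frequent), so bce is the only 3-candidate that is actually counted, as in the trace.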


Principal Component Analysis

Principal Components

In cases of a large number of variables, it is highly possible that some subsets of the variables are very correlated with each other. Reduce variables but retain variability in dataset

Linear combinations of variables in the database

Variance of each PC maximized

Display as much spread of the original data

PCs orthogonal to each other

Minimize the overlap in the variables

Each component normalized: sum of squares is unity

Easier for mathematical analysis

Number of PCs < number of variables

Associations found

Small number of PCs explain large amount of variance

Example: 768 female Pima Indians evaluated for diabetes

Number of times pregnant, two-hour oral glucose tolerance test (OGTT) plasma glucose, diastolic blood pressure, triceps skin fold thickness, two-hour serum insulin, BMI, diabetes pedigree function, age, diabetes onset within last 5 years


PCA Example


National Cancer Institute

CancerNet http://www.nci.nih.gov

CancerNet for Patients and the Public

CancerNet for Health Professionals

CancerNet for Basic Researchers

CancerLit


Conclusion

Medical records of about a billion people are electronically available

Data mining in medicine is distinct from other fields due to the nature of the data: heterogeneous, with ethical, legal and social constraints

The most commonly used technique is classification and prediction, with different techniques applied for different cases

Association rules describe the data in the database

Medical data mining can be the most rewarding despite the difficulty


Thank you !!!

Você também pode gostar

- Python Machine Learning for Beginners: Unsupervised Learning, Clustering, and Dimensionality Reduction. Part 1No EverandPython Machine Learning for Beginners: Unsupervised Learning, Clustering, and Dimensionality Reduction. Part 1Ainda não há avaliações

- Data Mining and Its Application and Usage in MedicineDocumento63 páginasData Mining and Its Application and Usage in MedicineSubhashini RAinda não há avaliações

- UNIT-1 Introduction To Data MiningDocumento29 páginasUNIT-1 Introduction To Data MiningVedhaVyas MahasivaAinda não há avaliações

- PROFICIENCY Data MiningDocumento6 páginasPROFICIENCY Data MiningAyushi JAINAinda não há avaliações

- Tutor Test and Home Assignment Questions For deDocumento4 páginasTutor Test and Home Assignment Questions For deachaparala4499Ainda não há avaliações

- Data Analytics 2marks PDFDocumento13 páginasData Analytics 2marks PDFshobana100% (1)

- Data Mining: Concepts and Techniques: April 30, 2012Documento64 páginasData Mining: Concepts and Techniques: April 30, 2012Ramana VankudotuAinda não há avaliações

- Dwdmsem 6 QBDocumento13 páginasDwdmsem 6 QBSuresh KumarAinda não há avaliações

- ASS Ignments: Program: BSC It Semester-ViDocumento14 páginasASS Ignments: Program: BSC It Semester-ViAmanya AllanAinda não há avaliações

- Glossary Terms-And-DefinitionsDocumento16 páginasGlossary Terms-And-DefinitionsGabriel SerranoAinda não há avaliações

- Data Mining University AnswerDocumento10 páginasData Mining University Answeroozed12Ainda não há avaliações

- Data Mining 2-5Documento4 páginasData Mining 2-5nirman kumarAinda não há avaliações

- Data Mining Syllabus and QuestionDocumento6 páginasData Mining Syllabus and QuestionComfortablyNumbAinda não há avaliações

- 1 s2.0 S1877050915012326 MainDocumento9 páginas1 s2.0 S1877050915012326 MainIntan Novela ernasAinda não há avaliações

- Rule Extraction in Diagnosis of Vertebral Column DiseaseDocumento5 páginasRule Extraction in Diagnosis of Vertebral Column DiseaseEditor IJRITCCAinda não há avaliações

- Chapter 3: Data MiningDocumento20 páginasChapter 3: Data MiningshreyaAinda não há avaliações

- MIS 385/MBA 664 Systems Implementation With DBMS/ Database ManagementDocumento39 páginasMIS 385/MBA 664 Systems Implementation With DBMS/ Database ManagementRudie BuzzAinda não há avaliações

- Course 6 Week 1 Glossary DA Terms and DefinitionsDocumento18 páginasCourse 6 Week 1 Glossary DA Terms and DefinitionsBadreddine BencharraAinda não há avaliações

- Solutions To DM I MID (A)Documento19 páginasSolutions To DM I MID (A)jyothibellaryv100% (1)

- Glossary: Data AnalyticsDocumento15 páginasGlossary: Data AnalyticsMuhammad SohailAinda não há avaliações

- Contact Me To Get Fully Solved Smu Assignments/Project/Synopsis/Exam Guide PaperDocumento7 páginasContact Me To Get Fully Solved Smu Assignments/Project/Synopsis/Exam Guide PaperMrinal KalitaAinda não há avaliações

- Data Warehousing and Data MiningDocumento18 páginasData Warehousing and Data Mininglskannan47Ainda não há avaliações

- Data Mining: An Overview From A Database PerspectiveDocumento30 páginasData Mining: An Overview From A Database PerspectiveAshish SakpalAinda não há avaliações

- Data Mining Primitives, Languages and System ArchitectureDocumento64 páginasData Mining Primitives, Languages and System Architecturesureshkumar001Ainda não há avaliações

- Module2 Ids 240201 162026Documento11 páginasModule2 Ids 240201 162026sachinsachitha1321Ainda não há avaliações

- Data Science AssignmentDocumento9 páginasData Science AssignmentAnuja SuryawanshiAinda não há avaliações

- Enhancement of Qualities of Clusters by Eliminating Outlier For Data Mining Application in EducationDocumento27 páginasEnhancement of Qualities of Clusters by Eliminating Outlier For Data Mining Application in Educationdiptipatil20Ainda não há avaliações

- Down 3Documento129 páginasDown 3pavunkumarAinda não há avaliações

- Data Mining P5Documento32 páginasData Mining P5Andi PernandaAinda não há avaliações

- Data Mining 2 MarksDocumento17 páginasData Mining 2 MarksSuganya Periasamy100% (1)

- CH 2Documento37 páginasCH 2gauravkhunt110Ainda não há avaliações

- Dev Answer KeyDocumento17 páginasDev Answer Keyjayapriya kce100% (1)

- Data Warehousing/Mining Comp 150 DW Chapter 5: Concept Description: Characterization and ComparisonDocumento59 páginasData Warehousing/Mining Comp 150 DW Chapter 5: Concept Description: Characterization and ComparisonkpchakAinda não há avaliações

- Duck Data Umpire by Cubical Kits: Sarathchand P.V. B.E (Cse), M.Tech (CS), (PHD) Professor and Research ScholarDocumento4 páginasDuck Data Umpire by Cubical Kits: Sarathchand P.V. B.E (Cse), M.Tech (CS), (PHD) Professor and Research ScholarRakeshconclaveAinda não há avaliações

- Satyabhama BigdataDocumento128 páginasSatyabhama BigdataVijaya Kumar VadladiAinda não há avaliações

- FODSDocumento6 páginasFODSJayanth HegdeAinda não há avaliações

- DM 1 PDFDocumento67 páginasDM 1 PDFRahul PawarAinda não há avaliações

- BA Unit 1 Question BankDocumento8 páginasBA Unit 1 Question Banku25sivakumarAinda não há avaliações

- Datamining - Primitives - Languages - System ArchDocumento50 páginasDatamining - Primitives - Languages - System ArchDrArun Kumar ChoudharyAinda não há avaliações

- Dbms Unit 3Documento40 páginasDbms Unit 3Sekar KsrAinda não há avaliações

- Unit 5Documento31 páginasUnit 5minichelAinda não há avaliações

- Syllabus:: 1.1 Data MiningDocumento30 páginasSyllabus:: 1.1 Data MiningMallikarjun BatchanaboinaAinda não há avaliações

- Entity Attribute Value Style Modeling ApproachDocumento30 páginasEntity Attribute Value Style Modeling ApproachjdefreitesAinda não há avaliações

- Data Mining QAsDocumento6 páginasData Mining QAsAjit KumarAinda não há avaliações

- Data PreprocessingDocumento28 páginasData PreprocessingRahul SharmaAinda não há avaliações

- Great Compiled Notes Data Mining V1Documento92 páginasGreat Compiled Notes Data Mining V1MALLIKARJUN YAinda não há avaliações

- Vardha DSDocumento121 páginasVardha DSVipul GuptaAinda não há avaliações

- Chapter 5: Concept Description: Characterization and ComparisonDocumento58 páginasChapter 5: Concept Description: Characterization and ComparisonHarjas BakshiAinda não há avaliações

- 1y EyZZjRcyvhMmWY1XMYQ - Course 5 Week 1 Glossary - DA Terms and DefinitionsDocumento14 páginas1y EyZZjRcyvhMmWY1XMYQ - Course 5 Week 1 Glossary - DA Terms and Definitionskopibiasa kopibiasaAinda não há avaliações

- Lab 1: Preprocessing Using PythonDocumento5 páginasLab 1: Preprocessing Using PythonPF 21 Disha GidwaniAinda não há avaliações

- Data Cleansing Using RDocumento10 páginasData Cleansing Using RDaniel N Sherine Foo0% (1)

- Data MiningDocumento40 páginasData Miningkangmo AbelAinda não há avaliações

- Data Mining Primitives, Languages and System ArchitectureDocumento64 páginasData Mining Primitives, Languages and System ArchitectureSarath KumarAinda não há avaliações

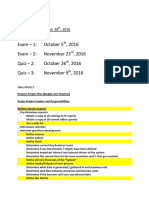

- Exam - 1: October 5, 2016 Exam - 2: November 23, 2016 Quiz - 2: October 26, 2016 Quiz - 3: November 9, 2016Documento14 páginasExam - 1: October 5, 2016 Exam - 2: November 23, 2016 Quiz - 2: October 26, 2016 Quiz - 3: November 9, 2016Sarath LakkarajuAinda não há avaliações

- Dataminig ch1 30006Documento4 páginasDataminig ch1 30006Rehman AliAinda não há avaliações

- Basic RDBMS ConceptsDocumento62 páginasBasic RDBMS ConceptsDrKingshuk SrivastavaAinda não há avaliações

- Data Warehouse NotesDocumento9 páginasData Warehouse NotesFaheem ShaukatAinda não há avaliações

- ML Unit 2Documento41 páginasML Unit 2abhijit kateAinda não há avaliações

- Data Science and Big Data Analytics: Discovering, Analyzing, Visualizing and Presenting DataNo EverandData Science and Big Data Analytics: Discovering, Analyzing, Visualizing and Presenting DataEMC Education ServicesAinda não há avaliações

- UserDocumento4 páginasUserAli MonAinda não há avaliações

- Credit Fraud Detection in The Banking SectorDocumento6 páginasCredit Fraud Detection in The Banking SectorAli MonAinda não há avaliações

- Maths PaperDocumento13 páginasMaths PaperAli MonAinda não há avaliações

- Maths PaperDocumento13 páginasMaths PaperAli MonAinda não há avaliações

- VLT FC 301 - 302 AddendumDocumento30 páginasVLT FC 301 - 302 AddendumRoneiAinda não há avaliações

- Whitepaper: Doc ID MK-PUB-2021-001-EN Classification PUBLIC RELEASEDocumento18 páginasWhitepaper: Doc ID MK-PUB-2021-001-EN Classification PUBLIC RELEASEJuvy MuringAinda não há avaliações

- En LG-C333 SVC Eng 120824 PDFDocumento181 páginasEn LG-C333 SVC Eng 120824 PDFSergio MesquitaAinda não há avaliações

- Skybox Security Best Practices Migrating Next-Gen Firewalls EN PDFDocumento3 páginasSkybox Security Best Practices Migrating Next-Gen Firewalls EN PDFsysdhanabalAinda não há avaliações

- Machine Platform Crowd Andrew MC PDFDocumento351 páginasMachine Platform Crowd Andrew MC PDFShreyas Satardekar100% (3)

- Programmable Zone Sensor: Installation, Operation, and MaintenanceDocumento32 páginasProgrammable Zone Sensor: Installation, Operation, and MaintenanceEdison EspinalAinda não há avaliações

- Part 4Documento23 páginasPart 4AkoKhalediAinda não há avaliações

- Creators2 Lesson7 3rdeditionDocumento27 páginasCreators2 Lesson7 3rdeditionLace CabatoAinda não há avaliações

- cqm13392 7jDocumento8 páginascqm13392 7jsunilbholAinda não há avaliações

- CNC MachinesDocumento9 páginasCNC MachinesMohamed El-WakilAinda não há avaliações

- Cabsat PDFDocumento43 páginasCabsat PDFBhARaT KaThAyATAinda não há avaliações

- ODIN + Remote Flashing - S21 G996BDocumento14 páginasODIN + Remote Flashing - S21 G996BMark CruzAinda não há avaliações

- How Robots and AI Are Creating The 21stDocumento3 páginasHow Robots and AI Are Creating The 21stHung DoAinda não há avaliações

- Basic Statistics Mcqs For Pcs ExamsDocumento4 páginasBasic Statistics Mcqs For Pcs ExamsSirajRahmdil100% (1)

- RevCorporate Governance Risk and Internal ControlsDocumento87 páginasRevCorporate Governance Risk and Internal ControlsKrisha Mabel TabijeAinda não há avaliações

- Test - Management (Robbins & Coulter) - Chapter 18 - Quizlet4Documento6 páginasTest - Management (Robbins & Coulter) - Chapter 18 - Quizlet4Muhammad HaroonAinda não há avaliações

- Vocabulary Learning About Operating SystemsDocumento2 páginasVocabulary Learning About Operating SystemsErnst DgAinda não há avaliações

- Logistics & SCM SyllabusDocumento1 páginaLogistics & SCM SyllabusVignesh KhannaAinda não há avaliações

- Chapter 24 MiscellaneousSystemsDocumento17 páginasChapter 24 MiscellaneousSystemsMark Evan SalutinAinda não há avaliações

- IEEE Xplore Abstract - Automatic Recommendation of API Methods From Feature RequestsDocumento2 páginasIEEE Xplore Abstract - Automatic Recommendation of API Methods From Feature RequestsKshitija SahaniAinda não há avaliações

- A6V10444410 - Multi-Sensor Fire Detector - enDocumento8 páginasA6V10444410 - Multi-Sensor Fire Detector - enRoman DebkovAinda não há avaliações

- 90 Blue Plus ProgrammingDocumento2 páginas90 Blue Plus ProgrammingDaniel TaradaciucAinda não há avaliações

- Dean of Industry Interface JDDocumento3 páginasDean of Industry Interface JDKrushnasamy SuramaniyanAinda não há avaliações

- Beckhoff Main Catalog 2021 Volume2Documento800 páginasBeckhoff Main Catalog 2021 Volume2ipmcmtyAinda não há avaliações

- Three Matlab Implementations of The Lowest-Order Raviart-Thomas Mfem With A Posteriori Error ControlDocumento29 páginasThree Matlab Implementations of The Lowest-Order Raviart-Thomas Mfem With A Posteriori Error ControlPankaj SahlotAinda não há avaliações

- ZW3D CAD Tips How To Design A A Popular QQ DollDocumento10 páginasZW3D CAD Tips How To Design A A Popular QQ DollAbu Mush'ab Putra HaltimAinda não há avaliações

- Heat IncroperaDocumento43 páginasHeat IncroperaAnonymous rEpAAK0iAinda não há avaliações

- Chapter 1 - Perspective DrawingDocumento23 páginasChapter 1 - Perspective DrawingChe Nora Che HassanAinda não há avaliações

- An Approximate Analysis Procedure For Piled Raft FoundationsDocumento21 páginasAn Approximate Analysis Procedure For Piled Raft FoundationsNicky198100% (1)

- CSEC Study Guide - December 7, 2010Documento10 páginasCSEC Study Guide - December 7, 2010Anonymous Azxx3Kp9Ainda não há avaliações